Curious where Bitcoin's price is heading? This article takes a closer look

Published January 14, 2018 by xiaoshan.xiang


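Cryptocurrencies, especially Bitcoin, have recently been a hot topic on social media and search engines. With a smart, innovative strategy, their high volatility can translate into large potential profits, and suddenly everyone seems to be talking about them. However, because they lack indices, cryptocurrencies are harder to predict than traditional financial instruments. This article shows how to predict cryptocurrency prices, using Bitcoin as the example, to give some insight into Bitcoin's future trend.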


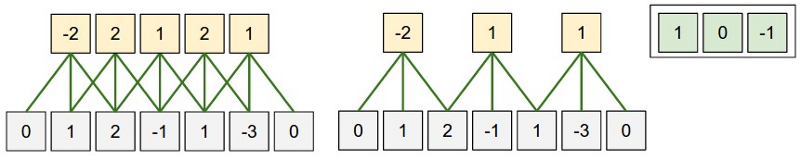

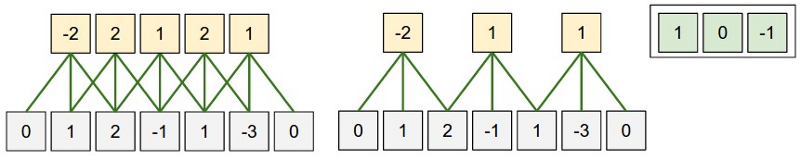


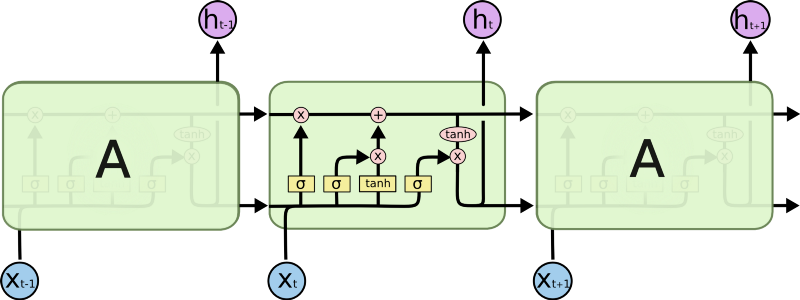

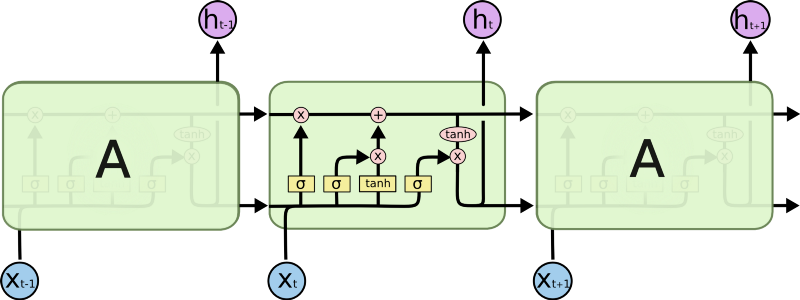


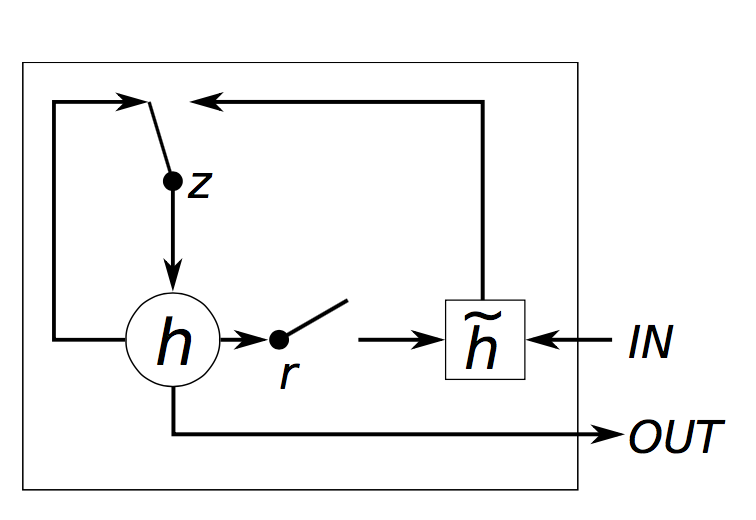

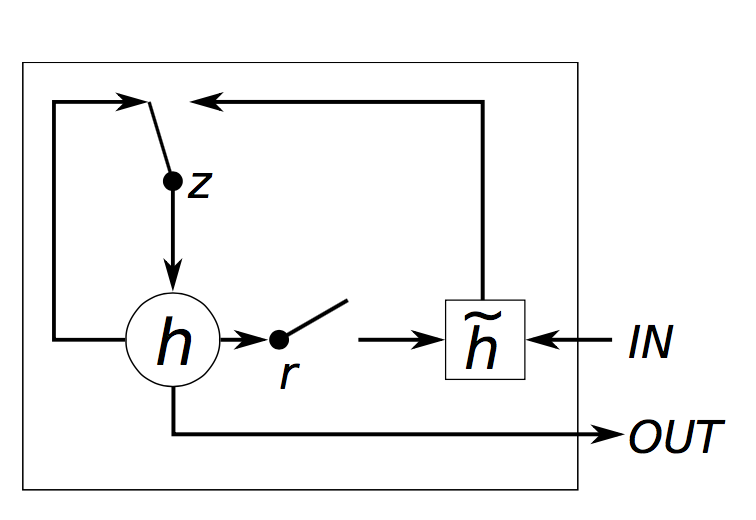


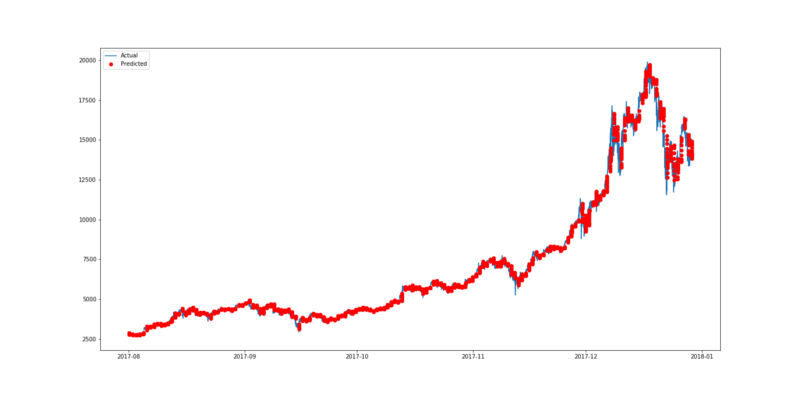

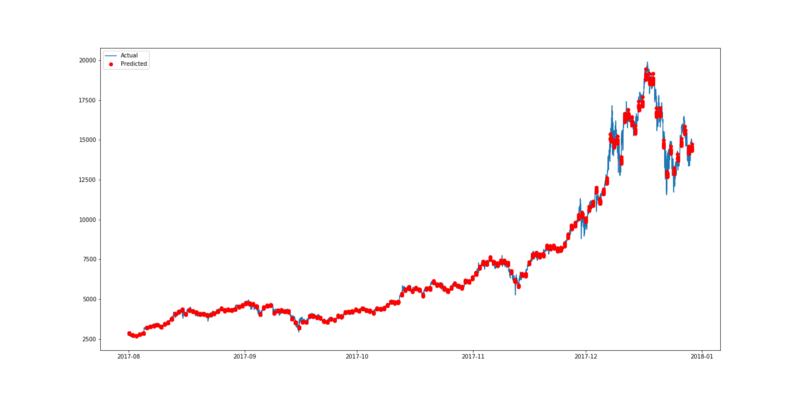

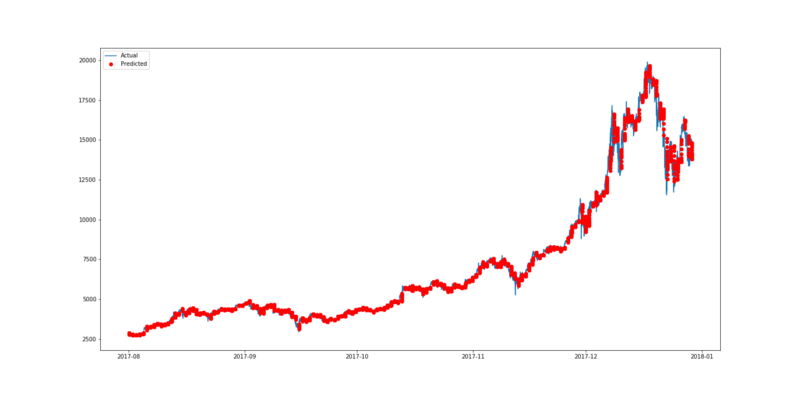


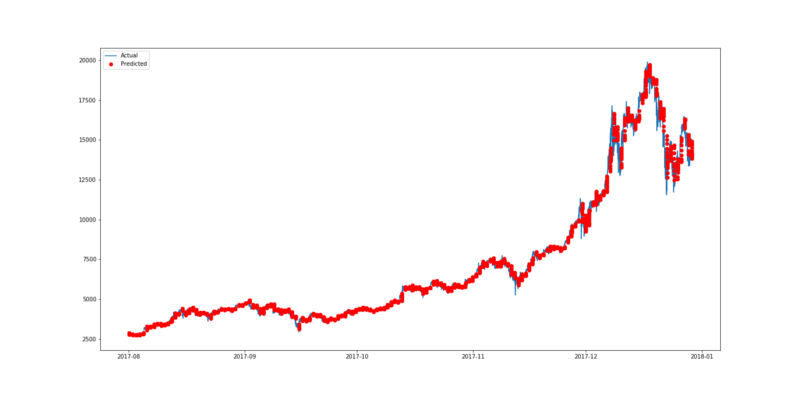


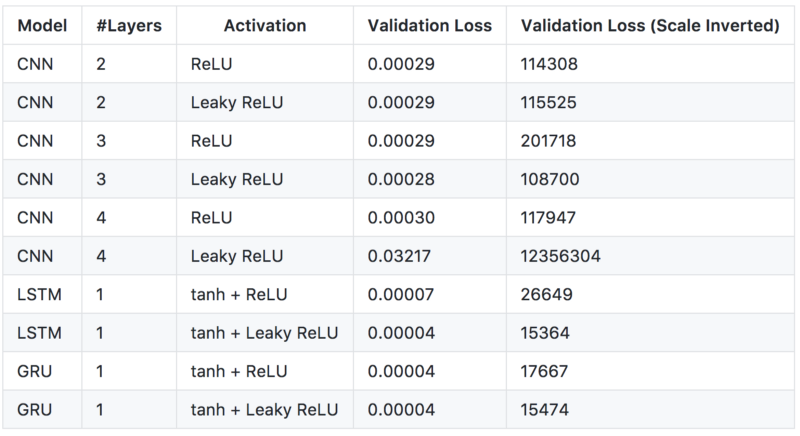

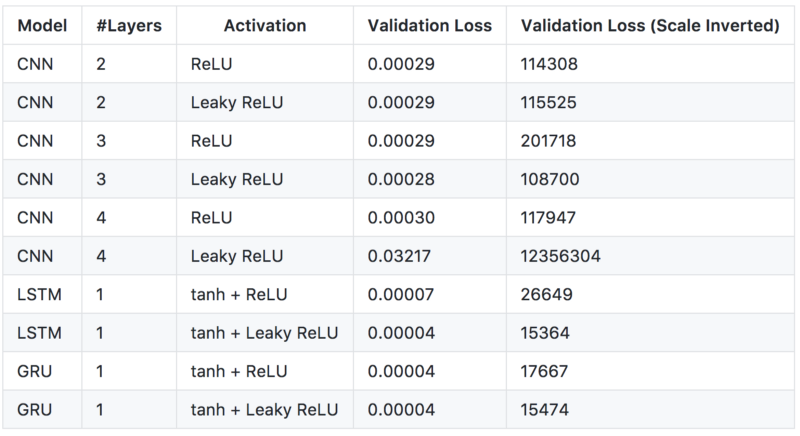


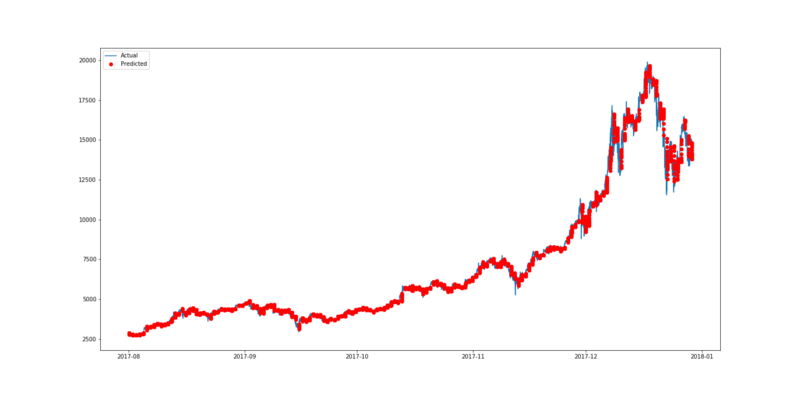


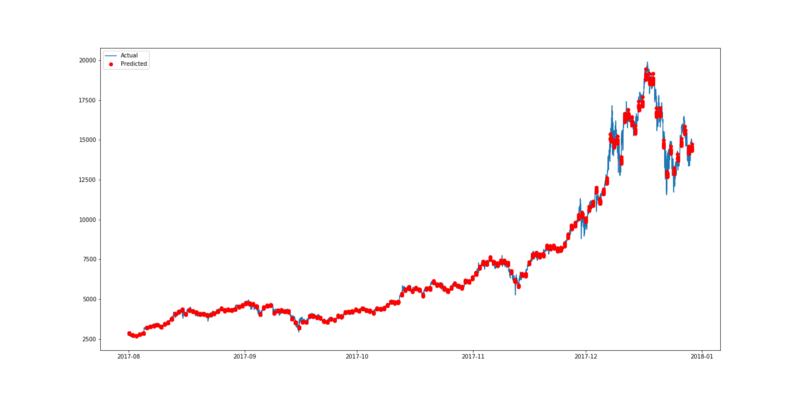


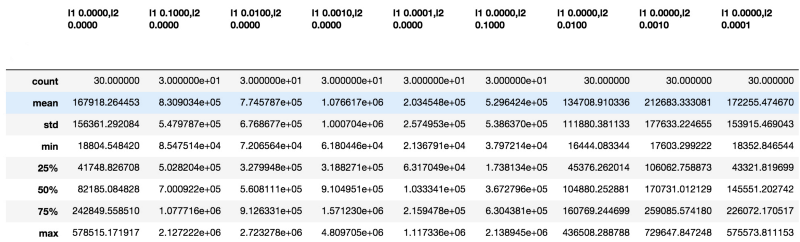


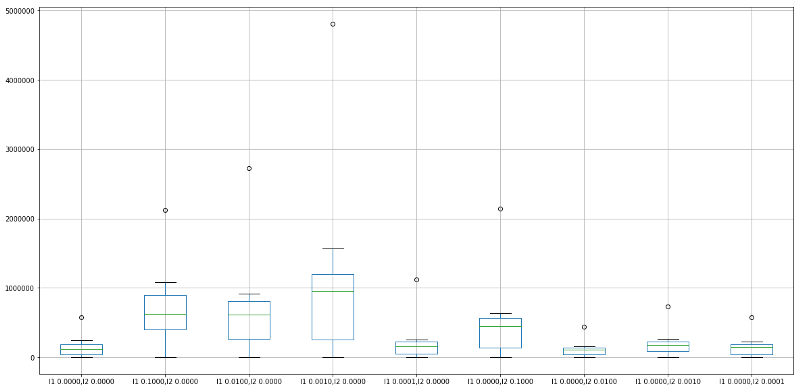

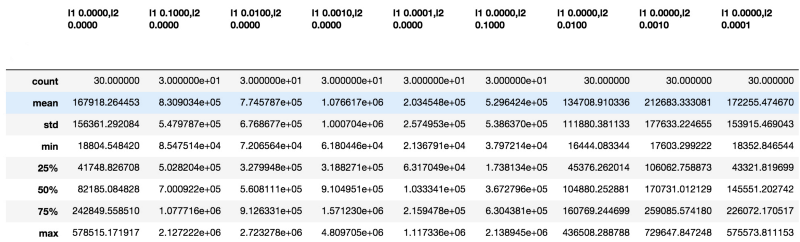

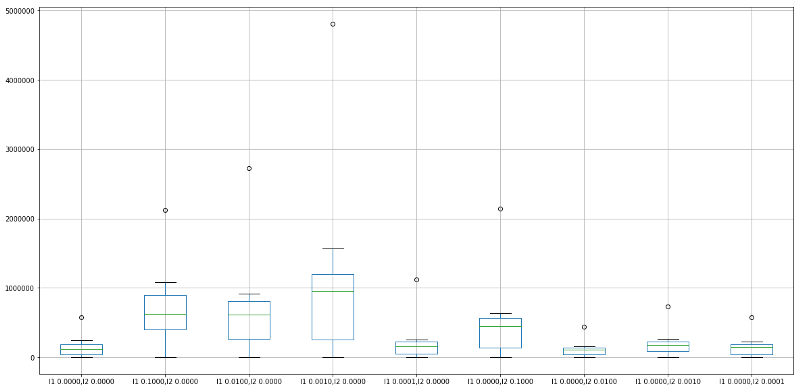


Getting Started

The code was run with the following environment and libraries:

- Python 2.7

- Tensorflow=1.2.0

- Keras=2.1.1

- Pandas=0.20.3

- Numpy=1.13.3

- h5py=2.7.0

- sklearn=0.19.1

Data Collection

The data can be collected from Kaggle or Poloniex. For consistency, the columns of the data collected from Poloniex are renamed to match Kaggle's.

Kaggle: https://www.kaggle.com/mczielinski/bitcoin-historical-data

Poloniex: https://poloniex.com/

```python
import json

import pandas as pd

try:
    from urllib2 import urlopen  # Python 2.7, as listed above
except ImportError:
    from urllib.request import urlopen  # Python 3

# connect to poloniex's API: USDT_BTC candles with period=300 (5 minutes)
url = 'https://poloniex.com/public?command=returnChartData&currencyPair=USDT_BTC&start=1356998100&end=9999999999&period=300'

# parse json returned from the API to Pandas DF
openUrl = urlopen(url)
r = openUrl.read()
openUrl.close()
d = json.loads(r.decode())
df = pd.DataFrame(d)

# rename Poloniex's columns to match the Kaggle dataset
original_columns = [u'close', u'date', u'high', u'low', u'open']
new_columns = ['Close', 'Timestamp', 'High', 'Low', 'Open']
df = df.loc[:, original_columns]
df.columns = new_columns
df.to_csv('data/bitcoin2015to2017.csv', index=None)  # the data/ directory must already exist
```

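Data Preparation

The collected data must be parsed before it can be fed to the model. This article uses the PastSampler class from the article linked below, which splits the data into a list of inputs and a list of labels. The input size (N) is 256 and the output size (K) is 16. Note that the Poloniex data is sampled every 5 minutes, so each input spans 1280 minutes and each output covers 80 minutes.

Article: https://nicholastsmith.wordpress.com/2017/11/13/cryptocurrency-price-prediction-using-deep-learning-in-tensorflow/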
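The window arithmetic above can be sketched in a few lines of plain Python. This is a simplified illustration, not the PastSampler code itself: the helper `split_windows` is a hypothetical name, it works on a flat list instead of a 3-D array, and it mirrors only the non-overlapping mode (`sliding_window=False`) that the code below uses.

```python
BAR_MINUTES = 5  # Poloniex candles requested with period=300 seconds
N, K = 256, 16   # past (input) and future (output) samples per window


def split_windows(series, n, k):
    """Cut series into consecutive, non-overlapping blocks of n + k points.

    Returns (inputs, targets): the first n points of each block and the last k.
    A trailing partial block is dropped.
    """
    m = n + k
    inputs, targets = [], []
    for start in range(0, len(series) - m + 1, m):
        block = series[start:start + m]
        inputs.append(block[:n])
        targets.append(block[n:])
    return inputs, targets


print(N * BAR_MINUTES, K * BAR_MINUTES)  # input span and output span in minutes: 1280 80

xs, ys = split_windows(list(range(10)), 3, 1)
print(xs)  # [[0, 1, 2], [4, 5, 6]]
print(ys)  # [[3], [7]]
```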

```python
import numpy as np
import pandas as pd


class PastSampler:
    '''
    Forms training samples for predicting future values from past values
    '''

    def __init__(self, N, K, sliding_window=True):
        '''
        Predict K future samples using N previous samples
        '''
        self.K = K
        self.N = N
        self.sliding_window = sliding_window

    def transform(self, A):
        M = self.N + self.K  # Number of samples per row (sample + target)
        # indexes
        if self.sliding_window:
            I = np.arange(M) + np.arange(A.shape[0] - M + 1).reshape(-1, 1)
        else:
            if A.shape[0] % M == 0:
                I = np.arange(M) + np.arange(0, A.shape[0], M).reshape(-1, 1)
            else:
                I = np.arange(M) + np.arange(0, A.shape[0] - M, M).reshape(-1, 1)
        B = A[I].reshape(-1, M * A.shape[1], A.shape[2])
        ci = self.N * A.shape[1]  # Number of features per sample
        return B[:, :ci], B[:, ci:]  # Sample matrix, Target matrix


# data file path
dfp = 'data/bitcoin2015to2017.csv'

# Columns of price data to use
columns = ['Close']
df = pd.read_csv(dfp)
time_stamps = df['Timestamp']
df = df.loc[:, columns]
original_df = pd.read_csv(dfp).loc[:, columns]
```

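After creating the PastSampler class, I applied it to the collected data. Because the raw prices range from 0 to about 10,000, the data has to be scaled so that the neural network can learn from it more easily.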

```python
import h5py
from sklearn.preprocessing import MinMaxScaler

file_name = 'bitcoin2015to2017_close.h5'

scaler = MinMaxScaler()
# normalization
for c in columns:
    df[c] = scaler.fit_transform(df[c].values.reshape(-1, 1))

# Features are input sample dimensions(channels)
A = np.array(df)[:, None, :]
original_A = np.array(original_df)[:, None, :]
time_stamps = np.array(time_stamps)[:, None, None]

# Make samples of temporal sequences of pricing data (channel)
NPS, NFS = 256, 16  # Number of past and future samples
ps = PastSampler(NPS, NFS, sliding_window=False)
B, Y = ps.transform(A)
input_times, output_times = ps.transform(time_stamps)
original_B, original_Y = ps.transform(original_A)

with h5py.File(file_name, 'w') as f:
    f.create_dataset("inputs", data=B)
    f.create_dataset('outputs', data=Y)
    f.create_dataset("input_times", data=input_times)
    f.create_dataset('output_times', data=output_times)
    f.create_dataset("original_datas", data=np.array(original_df))
    f.create_dataset('original_inputs', data=original_B)
    f.create_dataset('original_outputs', data=original_Y)
```
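For reference, the min-max scaling done by MinMaxScaler (with its default [0, 1] range) comes down to the formula below, and the same minimum and maximum are needed later to map predictions back to prices. This is a plain-Python sketch with hypothetical helper names. The example prices are made-up values, not data from the article.

```python
def minmax_fit(values):
    # Like MinMaxScaler.fit on one column: remember the data's min and max
    return min(values), max(values)


def minmax_transform(values, lo, hi):
    # x_scaled = (x - min) / (max - min); assumes hi > lo
    return [(v - lo) / (hi - lo) for v in values]


def minmax_inverse(scaled, lo, hi):
    # Undo the mapping: x = x_scaled * (max - min) + min
    return [s * (hi - lo) + lo for s in scaled]


prices = [1000.0, 5500.0, 10000.0]  # illustrative values only
lo, hi = minmax_fit(prices)
scaled = minmax_transform(prices, lo, hi)
print(scaled)                          # [0.0, 0.5, 1.0]
print(minmax_inverse(scaled, lo, hi))  # [1000.0, 5500.0, 10000.0]
```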

Building the Models

CNN

A 1D CNN captures the locality of the data with a kernel that slides over the input, as illustrated below.

CNN illustration (from: http://cs231n.github.io/convolutional-networks/)
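To make the sliding-kernel idea concrete, here is a minimal single-channel 1D convolution in plain Python with "valid" padding and a stride. It is an illustrative sketch, not Keras's implementation: like Keras's Conv1D it does not flip the kernel (strictly a cross-correlation), and it leaves out bias, multiple filters and activation.

```python
def conv1d_valid(x, kernel, stride=1):
    """Slide `kernel` over `x` in steps of `stride`, without padding ('valid')."""
    k = len(kernel)
    out = []
    for start in range(0, len(x) - k + 1, stride):
        window = x[start:start + k]
        # Each output value depends only on a local window of k inputs
        out.append(sum(w * v for w, v in zip(kernel, window)))
    return out


series = [1, 2, 3, 4, 5, 6, 7]
print(conv1d_valid(series, [1, -1]))             # [-1, -1, -1, -1, -1, -1]
print(conv1d_valid(series, [1, 1, 1], stride=2))  # [6, 12, 18]
```

With a kernel of size 3 and stride 2, the 7 inputs shrink to 3 outputs, which is exactly the size bookkeeping the CNN layers below need.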

```python
import os

import h5py
import tensorflow as tf
from keras.backend.tensorflow_backend import set_session
from keras.callbacks import CSVLogger, ModelCheckpoint
from keras.layers import Conv1D, Dropout, LeakyReLU
from keras.models import Sequential

# Make the program use only one GPU
os.environ['CUDA_DEVICE_ORDER'] = 'PCI_BUS_ID'
os.environ['CUDA_VISIBLE_DEVICES'] = '1'
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'
config = tf.ConfigProto()
config.gpu_options.allow_growth = True
set_session(tf.Session(config=config))

with h5py.File('bitcoin2015to2017_close.h5', 'r') as hf:
    datas = hf['inputs'].value
    labels = hf['outputs'].value

output_file_name = 'bitcoin2015to2017_close_CNN_2_relu'
step_size = datas.shape[1]
batch_size = 8
nb_features = datas.shape[2]
epochs = 100

# split training validation
training_size = int(0.8 * datas.shape[0])
training_datas = datas[:training_size, :]
training_labels = labels[:training_size, :]
validation_datas = datas[training_size:, :]
validation_labels = labels[training_size:, :]

# build model
# 2 layers
model = Sequential()
model.add(Conv1D(activation='relu', input_shape=(step_size, nb_features), strides=3, filters=8, kernel_size=20))
model.add(Dropout(0.5))
model.add(Conv1D(strides=4, filters=nb_features, kernel_size=16))

'''
Alternative stacks: use one of these instead of the 2-layer block above

# 3 Layers
model.add(Conv1D(activation='relu', input_shape=(step_size, nb_features), strides=3, filters=8, kernel_size=8))
#model.add(LeakyReLU())
model.add(Dropout(0.5))
model.add(Conv1D(activation='relu', strides=2, filters=8, kernel_size=8))
#model.add(LeakyReLU())
model.add(Dropout(0.5))
model.add(Conv1D(strides=2, filters=nb_features, kernel_size=8))

# 4 layers
model.add(Conv1D(activation='relu', input_shape=(step_size, nb_features), strides=2, filters=8, kernel_size=2))
#model.add(LeakyReLU())
model.add(Dropout(0.5))
model.add(Conv1D(activation='relu', strides=2, filters=8, kernel_size=2))
#model.add(LeakyReLU())
model.add(Dropout(0.5))
model.add(Conv1D(activation='relu', strides=2, filters=8, kernel_size=2))
#model.add(LeakyReLU())
model.add(Dropout(0.5))
model.add(Conv1D(strides=2, filters=nb_features, kernel_size=2))
'''

model.compile(loss='mse', optimizer='adam')
# Log losses to CSV and save the weights after every epoch (the weights/ directory must exist)
model.fit(training_datas, training_labels, verbose=1, batch_size=batch_size,
          validation_data=(validation_datas, validation_labels), epochs=epochs,
          callbacks=[CSVLogger(output_file_name + '.csv', append=True),
                     ModelCheckpoint('weights/' + output_file_name + '-{epoch:02d}-{val_loss:.5f}.hdf5',
                                     monitor='val_loss', verbose=1, mode='min')])
```

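The first model I built was a CNN. The code below selects GPU number "1" (I have four; set it to whichever GPU you like). Since TensorFlow doesn't seem to run well across multiple GPUs, it is sensible to restrict it to a single GPU. If you don't have a GPU, don't worry: just ignore these lines.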

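```python
os.environ['CUDA_DEVICE_ORDER'] = 'PCI_BUS_ID'  # number GPUs by PCI bus order
os.environ['CUDA_VISIBLE_DEVICES'] = '1'        # expose only GPU 1 to TensorFlow
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'        # hide TensorFlow's INFO and WARNING logs
```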

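The code for building the CNN is straightforward. The dropout layer helps prevent overfitting. The loss function is mean squared error (MSE), and the optimizer is Adam.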

```python
model = Sequential()
model.add(Conv1D(activation='relu', input_shape=(step_size, nb_features), strides=3, filters=8, kernel_size=20))
model.add(Dropout(0.5))
model.add(Conv1D(strides=4, filters=nb_features, kernel_size=16))
model.compile(loss='mse', optimizer='adam')
```

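The only thing to watch is the size of each layer's input and output. With Keras's default "valid" padding, the output length of a 1D convolutional layer is:

$$\text{output time steps} = \left\lfloor \frac{\text{input time steps} - \text{kernel size}}{\text{stride}} \right\rfloor + 1$$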
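As a sanity check, the formula can be applied layer by layer to the three CNN configurations in the script above. The helper names are hypothetical; the (kernel_size, strides) pairs are copied from the code, and the input length is N = 256. Each stack should end at 16 time steps so that the output lines up with the 16-step labels.

```python
def conv_output_steps(steps, kernel_size, strides):
    # 'valid' padding: floor((steps - kernel_size) / strides) + 1
    return (steps - kernel_size) // strides + 1


def stack_output_steps(steps, layers):
    """Apply the formula through a list of (kernel_size, strides) layers."""
    for kernel_size, strides in layers:
        steps = conv_output_steps(steps, kernel_size, strides)
    return steps


# (kernel_size, strides) pairs from the 2-, 3- and 4-layer CNNs above
configs = {
    '2 layers': [(20, 3), (16, 4)],
    '3 layers': [(8, 3), (8, 2), (8, 2)],
    '4 layers': [(2, 2), (2, 2), (2, 2), (2, 2)],
}
for name in sorted(configs):
    print(name, stack_output_steps(256, configs[name]))  # each prints 16
```

For the 2-layer model, for instance, 256 steps become floor(236 / 3) + 1 = 79 after the first layer and floor(63 / 4) + 1 = 16 after the second.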

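At the end of the file I added two callbacks, CSVLogger and ModelCheckpoint. The former records the training and validation progress, while the latter saves the model's weights after every epoch.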

```python
model.fit(training_datas, training_labels, verbose=1, batch_size=batch_size,
          validation_data=(validation_datas, validation_labels), epochs=epochs,
          callbacks=[CSVLogger(output_file_name + '.csv', append=True),
                     ModelCheckpoint('weights/' + output_file_name + '-{epoch:02d}-{val_loss:.5f}.hdf5',
                                     monitor='val_loss', verbose=1, mode='min')])
```

LSTM

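A long short-term memory (LSTM) network is a variant of the recurrent neural network (RNN). It was invented to address the vanishing gradient problem of vanilla RNNs, and it is said to be able to remember inputs over longer spans of time steps.

LSTM illustration (source: http://colah.github.io/posts/2015-08-Understanding-LSTMs/)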
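The gating that lets an LSTM carry information across many time steps can be sketched for a single unit with scalar weights. This follows the standard LSTM equations (as in the colah post linked above) and is only an illustration: `lstm_step` and its parameter names are hypothetical, while Keras's LSTM layer works on vectors and learns these weights.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One time step of a single-unit LSTM with scalar weights stored in dict p."""
    f = sigmoid(p['wf'] * x + p['uf'] * h_prev + p['bf'])    # forget gate
    i = sigmoid(p['wi'] * x + p['ui'] * h_prev + p['bi'])    # input gate
    o = sigmoid(p['wo'] * x + p['uo'] * h_prev + p['bo'])    # output gate
    g = math.tanh(p['wc'] * x + p['uc'] * h_prev + p['bc'])  # candidate value
    c = f * c_prev + i * g  # additive update: old memory is scaled by f, not squashed
    h = o * math.tanh(c)    # hidden state / output
    return h, c


weights = {k: 0.5 for k in ('wf', 'uf', 'wi', 'ui', 'wo', 'uo', 'wc', 'uc')}
biases = {k: 0.0 for k in ('bf', 'bi', 'bo', 'bc')}
params = dict(weights, **biases)

h, c = 0.0, 0.0
for x in [0.1, 0.2, 0.3]:
    h, c = lstm_step(x, h, c, params)
print(h, c)

# With the forget gate held open and the input gate shut, the cell keeps its value
keep = dict(params, bf=10.0, bi=-10.0)
_, c_kept = lstm_step(0.1, 0.0, 1.0, keep)
print(c_kept)  # close to 1.0
```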

```python
import os

import h5py
import tensorflow as tf
from keras.backend.tensorflow_backend import set_session
from keras.callbacks import CSVLogger, ModelCheckpoint
from keras.layers import LSTM, Dense, Dropout, LeakyReLU
from keras.models import Sequential

os.environ['CUDA_DEVICE_ORDER'] = 'PCI_BUS_ID'
os.environ['CUDA_VISIBLE_DEVICES'] = '1'
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'
config = tf.ConfigProto()
config.gpu_options.allow_growth = True
set_session(tf.Session(config=config))

with h5py.File('bitcoin2015to2017_close.h5', 'r') as hf:
    datas = hf['inputs'].value
    labels = hf['outputs'].value

step_size = datas.shape[1]
units = 50
batch_size = 8
nb_features = datas.shape[2]
epochs = 100
output_size = 16
output_file_name = 'bitcoin2015to2017_close_LSTM_1_tanh_leaky_'

# split training validation (labels become 2-D to match the Dense output)
training_size = int(0.8 * datas.shape[0])
training_datas = datas[:training_size, :]
training_labels = labels[:training_size, :, 0]
validation_datas = datas[training_size:, :]
validation_labels = labels[training_size:, :, 0]

# build model
model = Sequential()
model.add(LSTM(units=units, activation='tanh', input_shape=(step_size, nb_features), return_sequences=False))
model.add(Dropout(0.8))
model.add(Dense(output_size))
model.add(LeakyReLU())
model.compile(loss='mse', optimizer='adam')
model.fit(training_datas, training_labels, batch_size=batch_size,
          validation_data=(validation_datas, validation_labels), epochs=epochs,
          callbacks=[CSVLogger(output_file_name + '.csv', append=True),
                     ModelCheckpoint('weights/' + output_file_name + '-{epoch:02d}-{val_loss:.5f}.hdf5',
                                     monitor='val_loss', verbose=1, mode='min')])
```

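An LSTM is easier to implement than a CNN, because you don't have to worry about the relationship between kernel size, stride, input size and output size. Just make sure the input and output dimensions are defined correctly in the network.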

```python
model = Sequential()
model.add(LSTM(units=units, activation='tanh', input_shape=(step_size, nb_features), return_sequences=False))
model.add(Dropout(0.8))
model.add(Dense(output_size))
model.add(LeakyReLU())
model.compile(loss='mse', optimizer='adam')
```
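Concretely, the dimension bookkeeping for the LSTM looks like this. The sketch uses dummy NumPy arrays (the sample count of 4 is arbitrary) to show why the LSTM and GRU scripts slice the labels with `[:, :, 0]`: `Dense(output_size)` produces one vector of 16 values per sample, whereas PastSampler stores the targets with a trailing feature axis.

```python
import numpy as np

n_samples, step_size, nb_features, output_size = 4, 256, 1, 16  # n_samples is arbitrary

datas = np.zeros((n_samples, step_size, nb_features))  # LSTM input: (samples, time steps, features)
labels = np.zeros((n_samples, output_size, 1))         # targets as produced by PastSampler

# Dense(output_size) outputs (samples, 16), so drop the trailing feature axis
labels_2d = labels[:, :, 0]
print(datas.shape, labels_2d.shape)  # (4, 256, 1) (4, 16)
```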

GRU

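The gated recurrent unit (GRU) is another RNN variant. Its structure is simpler than the LSTM's: it has only two gates, a reset gate and an update gate, and no separate memory cell. GRU is said to perform on par with LSTM while being more efficient. (For the problem in this article, an LSTM epoch took about 45 seconds, while a GRU epoch took under 40 seconds.)

GRU illustration (source: http://www.jackdermody.net/brightwire/article/GRU_Recurrent_Neural_Networks)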
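One way to see why a GRU tends to be cheaper is to count weights. The sketch below assumes the classic formulation used by Keras 2.x-era layers, where each gate block has an input kernel, a recurrent kernel and one bias vector (LSTM has four such blocks, GRU three). Newer Keras GRU layers with `reset_after=True` carry an extra bias per block, so their counts differ slightly. `rnn_params` is a hypothetical helper, and the sizes (50 units, 1 input feature) come from the scripts in this article. Actual speed also depends on the hardware and implementation.

```python
def rnn_params(units, input_dim, n_blocks):
    """Trainable weights of a gated recurrent layer: per block, an input kernel,
    a recurrent kernel and a bias vector."""
    return n_blocks * (units * (input_dim + units) + units)


units, nb_features = 50, 1
lstm_params = rnn_params(units, nb_features, n_blocks=4)  # input, forget, output gates + cell candidate
gru_params = rnn_params(units, nb_features, n_blocks=3)   # update, reset gates + candidate
print(lstm_params, gru_params)  # 10400 7800
```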

```python
import os

import h5py
import tensorflow as tf
from keras.backend.tensorflow_backend import set_session
from keras.callbacks import CSVLogger, ModelCheckpoint
from keras.layers import GRU, Activation, Dense, Dropout
from keras.models import Sequential

os.environ['CUDA_DEVICE_ORDER'] = 'PCI_BUS_ID'
os.environ['CUDA_VISIBLE_DEVICES'] = '1'
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'
config = tf.ConfigProto()
config.gpu_options.allow_growth = True
set_session(tf.Session(config=config))

with h5py.File('bitcoin2015to2017_close.h5', 'r') as hf:
    datas = hf['inputs'].value
    labels = hf['outputs'].value

output_file_name = 'bitcoin2015to2017_close_GRU_1_tanh_relu_'
step_size = datas.shape[1]
units = 50
batch_size = 8
nb_features = datas.shape[2]
epochs = 100
output_size = 16

# split training validation (labels become 2-D to match the Dense output)
training_size = int(0.8 * datas.shape[0])
training_datas = datas[:training_size, :]
training_labels = labels[:training_size, :, 0]
validation_datas = datas[training_size:, :]
validation_labels = labels[training_size:, :, 0]

# build model
model = Sequential()
model.add(GRU(units=units, input_shape=(step_size, nb_features), return_sequences=False))
model.add(Activation('tanh'))
model.add(Dropout(0.2))
model.add(Dense(output_size))
model.add(Activation('relu'))
model.compile(loss='mse', optimizer='adam')
model.fit(training_datas, training_labels, batch_size=batch_size,
          validation_data=(validation_datas, validation_labels), epochs=epochs,
          callbacks=[CSVLogger(output_file_name + '.csv', append=True),
                     ModelCheckpoint('weights/' + output_file_name + '-{epoch:02d}-{val_loss:.5f}.hdf5',
                                     monitor='val_loss', verbose=1, mode='min')])
```

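Alternatively, you can turn the LSTM script into a GRU model by replacing the second line of its model-building code

```python
model.add(LSTM(units=units, activation='tanh', input_shape=(step_size, nb_features), return_sequences=False))
```

with the following (and importing GRU from keras.layers):

```python
model.add(GRU(units=units, activation='tanh', input_shape=(step_size, nb_features), return_sequences=False))
```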

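Plotting the Results

Since the result plots of the three models look similar, I'll only show the CNN version. First, we need to rebuild the model and load the trained weights into it.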

```python
import os

import h5py
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
from keras.layers import Conv1D, Dropout, LeakyReLU
from keras.models import Sequential
from sklearn.preprocessing import MinMaxScaler

os.environ['CUDA_DEVICE_ORDER'] = 'PCI_BUS_ID'
os.environ['CUDA_VISIBLE_DEVICES'] = '0'
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

with h5py.File('bitcoin2015to2017_close.h5', 'r') as hf:
    datas = hf['inputs'].value
    labels = hf['outputs'].value
    input_times = hf['input_times'].value
    output_times = hf['output_times'].value
    original_inputs = hf['original_inputs'].value
    original_outputs = hf['original_outputs'].value
    original_datas = hf['original_datas'].value

scaler = MinMaxScaler()

# split training validation
training_size = int(0.8 * datas.shape[0])
training_datas = datas[:training_size, :, :]
training_labels = labels[:training_size, :, :]
validation_datas = datas[training_size:, :, :]
validation_labels = labels[training_size:, :, :]
validation_original_outputs = original_outputs[training_size:, :, :]
validation_original_inputs = original_inputs[training_size:, :, :]
validation_input_times = input_times[training_size:, :, :]
validation_output_times = output_times[training_size:, :, :]

# each window's 256 inputs followed by its 16 targets: a continuous series
ground_true = np.append(validation_original_inputs, validation_original_outputs, axis=1)
ground_true_times = np.append(validation_input_times, validation_output_times, axis=1)

step_size = datas.shape[1]
batch_size = 8
nb_features = datas.shape[2]

model = Sequential()
# 2 layers
model.add(Conv1D(activation='relu', input_shape=(step_size, nb_features), strides=3, filters=8, kernel_size=20))
# model.add(LeakyReLU())
# Dropout has no weights and is inactive in predict(), so its rate does not affect loading
model.add(Dropout(0.25))
model.add(Conv1D(strides=4, filters=nb_features, kernel_size=16))
model.load_weights('weights/bitcoin2015to2017_close_CNN_2_relu-44-0.00030.hdf5')
model.compile(loss='mse', optimizer='adam')
```
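The weight file loaded above follows the naming template passed to ModelCheckpoint in the training script: Keras fills the `{epoch:02d}` and `{val_loss:.5f}` placeholders with Python's `str.format`. The plain-Python sketch below reproduces that file name and parses the validation loss back out of it. `val_loss_from_name` is a hypothetical helper, for example for picking the checkpoint with the lowest validation loss from a directory listing.

```python
output_file_name = 'bitcoin2015to2017_close_CNN_2_relu'
template = 'weights/' + output_file_name + '-{epoch:02d}-{val_loss:.5f}.hdf5'

# The same template, filled in by hand for epoch 44 with val_loss 0.0003
path = template.format(epoch=44, val_loss=0.0003)
print(path)  # weights/bitcoin2015to2017_close_CNN_2_relu-44-0.00030.hdf5


def val_loss_from_name(name):
    """Recover the val_loss encoded between the last '-' and the '.hdf5' suffix."""
    stem = name[:-len('.hdf5')]
    return float(stem.rsplit('-', 1)[1])


print(val_loss_from_name(path))  # 0.0003
```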

Next, we need to invert the scaling of the predictions, because the MinMaxScaler used earlier mapped the data to the range [0, 1].

```python
predicted = model.predict(validation_datas)
predicted_inverted = []

# refit the scaler on the original (unscaled) prices, then undo the scaling
for i in range(original_datas.shape[1]):
    scaler.fit(original_datas[:, i].reshape(-1, 1))
    predicted_inverted.append(scaler.inverse_transform(predicted[:, :, i]))
print(np.array(predicted_inverted).shape)

# get only the close data
ground_true = ground_true[:, :, 0].reshape(-1)
ground_true_times = ground_true_times.reshape(-1)
ground_true_times = pd.to_datetime(ground_true_times, unit='s')

# since we are appending in the first dimension
predicted_inverted = np.array(predicted_inverted)[0, :, :].reshape(-1)
print(np.array(predicted_inverted).shape)
validation_output_times = pd.to_datetime(validation_output_times.reshape(-1), unit='s')
```

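Now two dataframes are created: one for the ground truth (actual prices) and one for the predicted Bitcoin prices. For visualization, the plot only shows data from August through December 2017.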

```python
ground_true_df = pd.DataFrame()
ground_true_df['times'] = ground_true_times
ground_true_df['value'] = ground_true

prediction_df = pd.DataFrame()
prediction_df['times'] = validation_output_times
prediction_df['value'] = predicted_inverted

# keep August-December 2017 only
prediction_df = prediction_df.loc[(prediction_df["times"].dt.year == 2017) & (prediction_df["times"].dt.month > 7), :]
ground_true_df = ground_true_df.loc[(ground_true_df["times"].dt.year == 2017) & (ground_true_df["times"].dt.month > 7), :]
```
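The month filter above keeps rows whose year is 2017 and whose month is greater than 7, that is, August through December 2017 only (any 2018 rows are excluded as well). Here is a Python 3 sketch of the same test on raw Unix timestamps, using only the standard library. `after_july_2017` is a hypothetical helper, and the timestamps are read as UTC, which is how `pd.to_datetime(..., unit='s')` interprets them.

```python
from datetime import datetime, timezone


def after_july_2017(ts):
    """Mirror of (times.dt.year == 2017) & (times.dt.month > 7) for one Unix timestamp."""
    d = datetime.fromtimestamp(ts, tz=timezone.utc)
    return d.year == 2017 and d.month > 7


# 2017-07-31 23:59:59, 2017-08-01 00:00:00 and 2018-01-01 00:00:00 (UTC)
timestamps = [1501545599, 1501545600, 1514764800]
print([after_july_2017(t) for t in timestamps])  # [False, True, False]
```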

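Plot the figure with pyplot. Because the predictions come in blocks of 16 points (80 minutes) separated by gaps, the result is easier to read when the predicted points are not joined by lines. The predictions are drawn as red dots, as specified by "ro" in the third line. In the figure below, the blue line shows the ground truth (actual data) and the red dots show the predicted Bitcoin prices.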

```python
plt.figure(figsize=(20, 10))
plt.plot(ground_true_df.times, ground_true_df.value, label='Actual')
plt.plot(prediction_df.times, prediction_df.value, 'ro', label='Predicted')
plt.legend(loc='upper left')
plt.show()
```

Plot of the best Bitcoin price prediction result
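Why dots rather than a line: with non-overlapping windows, `ground_true` concatenates each window's 256 inputs and 16 targets, so it forms a continuous series, while predictions exist only for the last 16 of every 272 points. The plain-Python sketch below (the helper name is hypothetical) lists which positions of that series receive a prediction.

```python
N, K = 256, 16
BAR_MINUTES = 5
M = N + K  # points per non-overlapping window


def predicted_positions(n_windows):
    """Indices of the concatenated ground-truth series that have a prediction."""
    return [w * M + N + j for w in range(n_windows) for j in range(K)]


pos = predicted_positions(2)
print(pos[:3], pos[15], pos[16])  # [256, 257, 258] 271 528
# Each window: 16 predicted points (80 min), then 256 points (1280 min) without predictions
print(K * BAR_MINUTES, N * BAR_MINUTES)  # 80 1280
```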

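As the plot shows, the predictions track the actual Bitcoin price closely. To choose the best model, I tested several network configurations; they are listed below.

Prediction results of the different models

Each row of the table above is the model with the best validation loss over 100 training epochs. From these results, LeakyReLU seems to consistently produce a lower loss than plain ReLU. However, the 4-layer CNN with Leaky ReLU as the activation function produces a large validation loss, possibly because the model was deployed incorrectly; it may need to be re-validated. The CNN models train very quickly (2 seconds/epoch), but they perform somewhat worse than LSTM and GRU. The best model appears to be the LSTM with tanh and Leaky ReLU as activation functions, although the 3-layer CNN seems better at capturing local temporal dependencies in the data.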

LSTM with tanh and Leaky ReLU as activation functions

3-layer CNN with Leaky ReLU as activation function

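Although the prediction looks good, overfitting is still a concern. When the LSTM is trained with LeakyReLU, there is a gap between the training and validation losses (5.97E-06 vs 3.92E-05), so regularization should be applied to reduce the variance.

Regularization

To find the best regularization strategy, I ran experiments with several L1 and L2 values. First, we define a new function that fits an LSTM to the data. Here I use a bias regularizer, which regularizes the bias vector, as the example.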

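```python
from keras.layers import Activation, CuDNNLSTM, Dense, Dropout, LeakyReLU
from keras.models import Sequential

# Continues from the plotting script: the Dense output is 2-D, so use 2-D labels,
# and reuse the sizes from the LSTM script
training_labels = labels[:training_size, :, 0]
units = 50
output_size = 16
epochs = 30  # each training run in the experiment lasts 30 epochs


def fit_lstm(reg):
    model = Sequential()
    # CuDNNLSTM runs only on a GPU (TensorFlow backend)
    model.add(CuDNNLSTM(units=units, bias_regularizer=reg, input_shape=(step_size, nb_features), return_sequences=False))
    model.add(Activation('tanh'))
    model.add(Dropout(0.2))
    model.add(Dense(output_size))
    model.add(LeakyReLU())
    model.compile(loss='mse', optimizer='adam')
    model.fit(training_datas, training_labels, batch_size=batch_size, epochs=epochs, verbose=0)
    return model
```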
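For intuition about what `bias_regularizer` adds: Keras's L1/L2 regularizers add a penalty of l1·Σ|b| + l2·Σb² over the regularized weights (here the LSTM's bias vector) to the training loss. The plain-Python sketch below computes that term for a toy bias vector; the numbers are arbitrary and `l1l2_penalty` is a hypothetical helper. A larger coefficient pushes the optimizer harder toward small biases.

```python
def l1l2_penalty(weights, l1=0.0, l2=0.0):
    """Regularization term added to the loss: l1 * sum(|w|) + l2 * sum(w**2)."""
    return l1 * sum(abs(w) for w in weights) + l2 * sum(w * w for w in weights)


bias = [0.5, -1.0, 2.0]  # toy bias vector
print(l1l2_penalty(bias, l1=0.01))  # about 0.035  (0.01 * 3.5)
print(l1l2_penalty(bias, l2=0.01))  # about 0.0525 (0.01 * 5.25)
```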

One experiment trains the model 30 times, for 30 epochs each time.

```python
import numpy as np
import pandas as pd
from keras import regularizers
from sklearn.metrics import mean_squared_error


def experiment(validation_datas, validation_labels, original_datas, ground_true, ground_true_times,
               validation_original_outputs, validation_output_times, nb_repeat, reg):
    # ground_true, ground_true_times and validation_output_times are accepted but not used
    error_scores = list()
    for i in range(nb_repeat):
        model = fit_lstm(reg)
        predicted = model.predict(validation_datas)
        predicted_inverted = []
        # undo the min-max scaling of the Close column
        scaler.fit(original_datas[:, 0].reshape(-1, 1))
        predicted_inverted.append(scaler.inverse_transform(predicted))
        # since we are appending in the first dimension
        predicted_inverted = np.array(predicted_inverted)[0, :, :].reshape(-1)
        error_scores.append(mean_squared_error(validation_original_outputs[:, :, 0].reshape(-1), predicted_inverted))
    return error_scores


regs = [regularizers.l1(0), regularizers.l1(0.1), regularizers.l1(0.01), regularizers.l1(0.001), regularizers.l1(0.0001),
        regularizers.l2(0.1), regularizers.l2(0.01), regularizers.l2(0.001), regularizers.l2(0.0001)]
nb_repeat = 30
results = pd.DataFrame()

for reg in regs:
    name = ('l1 %.4f,l2 %.4f' % (reg.l1, reg.l2))
    print("Training " + str(name))
    results[name] = experiment(validation_datas, validation_labels, original_datas, ground_true, ground_true_times,
                               validation_original_outputs, validation_output_times, nb_repeat, reg)

results.describe().to_csv('result/lstm_bias_reg.csv')  # the result/ directory must exist
results.describe()
```

If you are using a Jupyter notebook, you can see the table below directly in the output.

Results of running the bias regularizers
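`results.describe()` summarizes each column's 30 MSE scores with the count, mean, standard deviation, minimum, quartiles and maximum. A rough standard-library equivalent for one column is sketched below (Python 3.8+ for `statistics.quantiles`). `describe_scores` is a hypothetical helper, and the scores in the example are placeholders, not results from the experiment.

```python
import statistics


def describe_scores(scores):
    """Summary similar to one column of pandas DataFrame.describe()."""
    # method='inclusive' interpolates linearly, like pandas' default quantiles
    q1, q2, q3 = statistics.quantiles(scores, n=4, method='inclusive')
    return {
        'count': len(scores),
        'mean': statistics.mean(scores),
        'std': statistics.stdev(scores),  # sample standard deviation (ddof=1), as in pandas
        'min': min(scores),
        '25%': q1,
        '50%': q2,
        '75%': q3,
        'max': max(scores),
    }


print(describe_scores([1.0, 2.0, 3.0, 4.0, 5.0]))
```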

To compare them visually, we can draw a box plot of each regularizer's 30 scores:

```python
results.boxplot()
plt.show()
```

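The comparison suggests that an L2 regularizer with coefficient 0.01 on the bias vector gives the best result.

Finding the best combination of all the regularizers (activation, bias, kernel and recurrent matrix) would require testing them one by one, which does not seem practical on my current hardware. I'll leave it for future work.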

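Conclusion

What you have learned:

- How to collect real-time Bitcoin data.

- How to prepare data for training and testing.

- How to predict Bitcoin prices with deep learning.

- How to visualize the prediction results.

- How to apply regularization to a model.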

Follow ATYUN's official WeChat account.

For business cooperation and content submissions, contact: bd@atyun.com
