Optimizing PyTorch Model Performance: Improving the Efficiency and Accuracy of AI Applications

Published June 20, 2023 by Alex

Introduction

训练深度学习模型,尤其是大型模型,可能是一项昂贵的支出。管理这些成本的主要方法之一是性能优化。性能优化是一个迭代过程,在这个过程中,我们不断地寻找机会来提高应用程序的性能,然后利用这些机会。在本文,我们强调了使用合适的工具进行这种分析的重要性。工具的选择可能取决于许多因素,包括训练加速器的类型(例如,GPU、HPU或其他)和训练框架。

这篇文章的重点是在GPU上使用PyTorch进行训练。更具体地说,我们将重点关注PyTorch内置的性能分析器PyTorch Profiler,以及查看其结果的一种方式,即PyTorch Profiler TensorBoard插件。

这篇文章并不能取代官方PyTorch文档中关于PyTorch Profiler或使用TensorBoard插件分析分析器结果的说明。在这的目的是展示这些工具在日常开发中的使用方法。

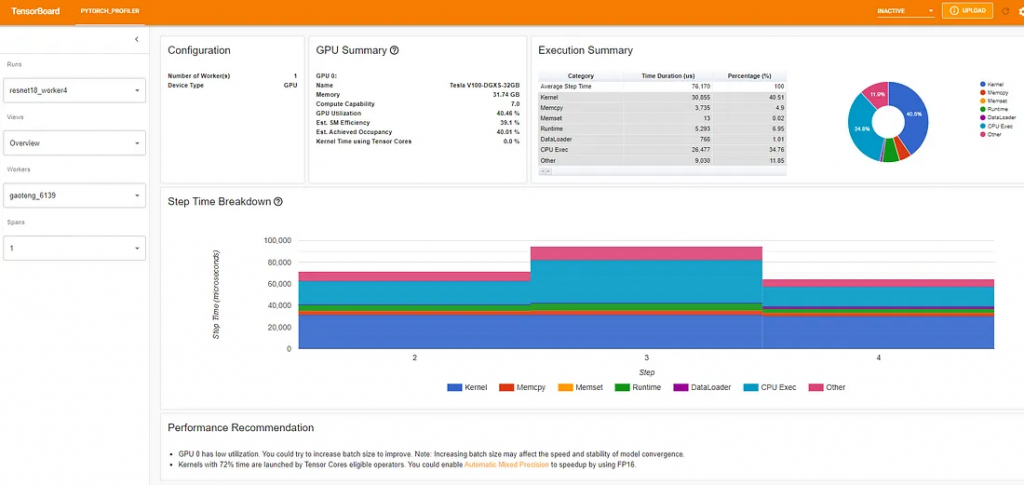

下面教程介绍了一个分类模型(基于Resnet架构),该模型是在流行的Cifar10数据集上训练的。它继续演示如何使用PyTorch Profiler和TensorBoard插件来识别和修复数据加载器中的瓶颈。输入数据管道中的性能瓶颈并不罕见,关于教程,最令人惊讶的是最终(优化后)结果,并将其粘贴在下面:

如果你仔细观察,你会发现优化后的GPU利用率是40.46%。现在没有办法掩饰这一点:这个结果是令人沮丧的。正如开头所说,GPU是我们训练机器中最昂贵的资源,我们的目标应该是最大化其利用率。40.46%的利用率通常代表着加速培训和节约成本的重要机会。当然,我们可以做得更好!在这篇文章中,我们将尝试做得更好。我们将从尝试重现官方教程中呈现的结果开始,看看我们是否可以使用相同的工具来进一步提高训练性能。

玩具示例

下面的代码块包含TensorBoard-plugin教程定义的训练循环,有两个小修改:

教程中使用的GPU是Tesla V100-DGXS-32GB。在本文中,我们尝试使用包含Tesla V100-SXM2-16GB GPU的Amazon EC2 p3.2xlarge实例来重现并改进教程中的性能结果。虽然它们共享相同的架构,但您可以在这里了解到两种GPU之间的一些差异。我们使用AWS PyTorch 2.0 Docker图像运行训练脚本。训练脚本的性能结果显示在TensorBoard查看器的概述页面中,如下图所示:

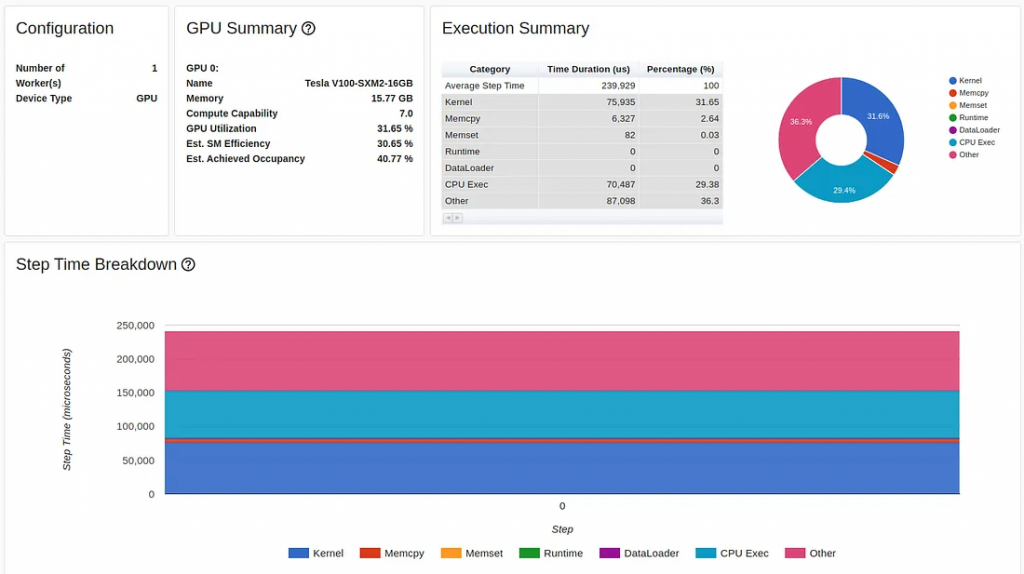

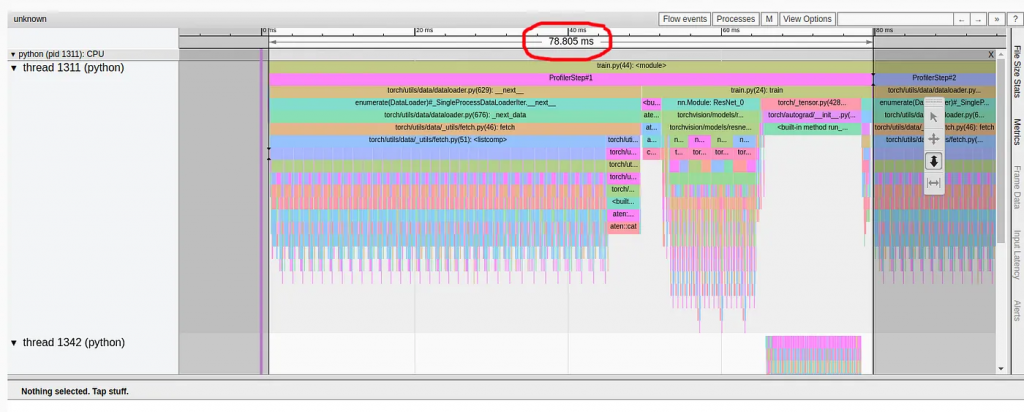

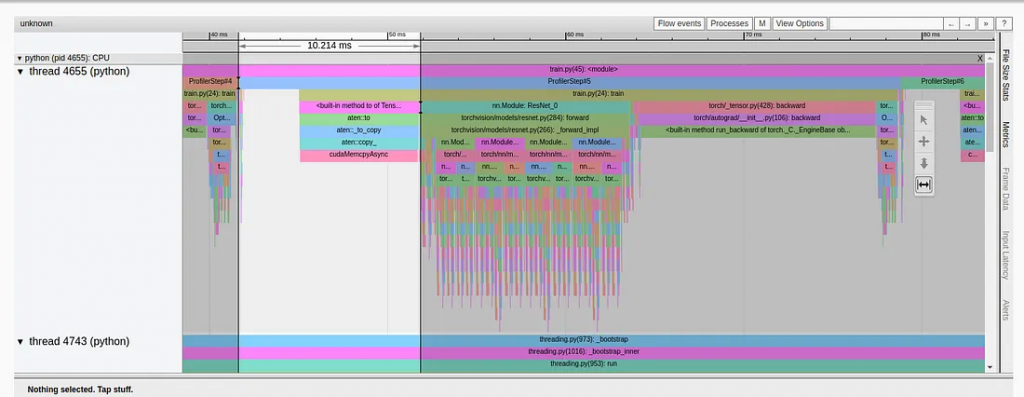

首先,我们注意到与教程相反,在我们的实验中,torch-tb-profiler版本0.4.1的概览页面将三个分析步骤合并为一步。因此,平均整体步骤时间为80毫秒,而不是240毫秒。这可以在Trace选项卡中清楚地看到(Trace选项卡通常提供更准确的报告),其中每个步骤需要大约80毫秒。

请注意,我们的起点是31.65%的GPU利用率和80毫秒的步长时间,这与教程中分别为23.54%和132毫秒的起点有所不同。这可能是训练环境差异的结果,包括GPU类型和PyTorch版本。我们还注意到,虽然教程基线结果清楚地将性能问题诊断为DataLoader的瓶颈,但我们的结果却没有。我们经常发现数据加载瓶颈会伪装成概览选项卡中高比例的“CPU Exec”或“Other”。

优化1:多进程数据加载

我们从应用本教程中描述的多进程数据加载开始。由于Amazon EC2 p3.2xlarge实例有8个vCPU,我们将DataLoader worker的数量设置为8以获得最佳性能:

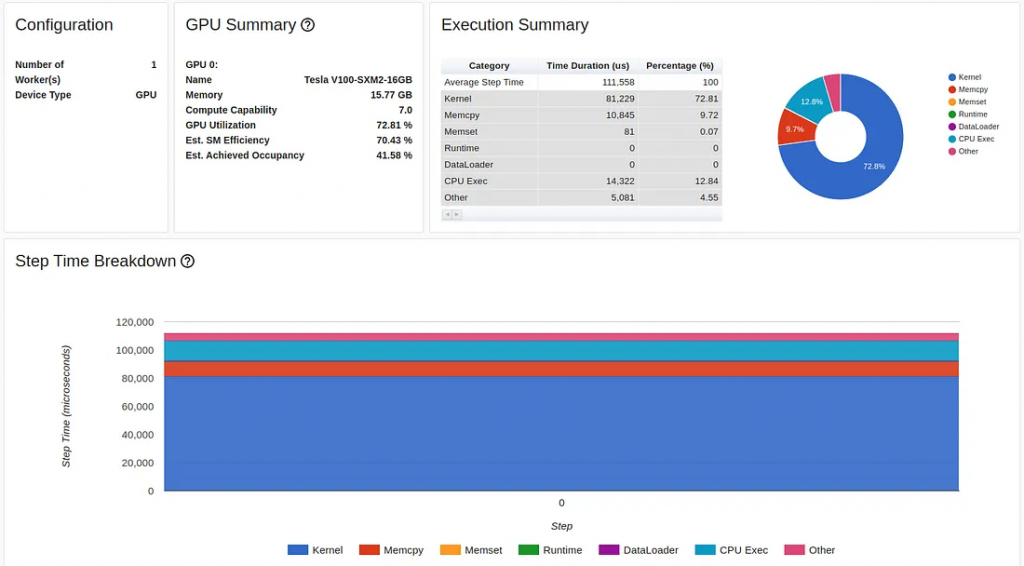

优化的结果如下所示:

对单行代码的更改使GPU利用率提高了200%以上(从31.65%增加到72.81%),并且将训练步长时间减半以上(从80毫秒减少到37毫秒)。

这是本教程中的优化过程结束的地方。尽管我们的GPU利用率(72.81%)比教程中的结果(40.46%)要高得多,但毫不怀疑,你会发现这些结果仍然很不令人满意。

优化2:内存固定

如果我们分析上一个实验的Trace视图,可以看到大量的时间(37毫秒中的10毫秒)仍然用于将训练数据加载到GPU上。

为了解决这个问题,我们将应用另一个pytorch推荐的优化来简化数据输入流,内存固定。使用固定内存可以提高主机到GPU数据复制的速度,更重要的是,我们可以让它们异步。这意味着我们可以在GPU中准备下一个训练批次,同时在当前批次上运行训练步骤。

此优化需要修改两行代码。首先,我们将DataLoader的pin_memory标志设置为True。

然后我们将主机到设备的内存传输(在train函数中)修改为非阻塞:

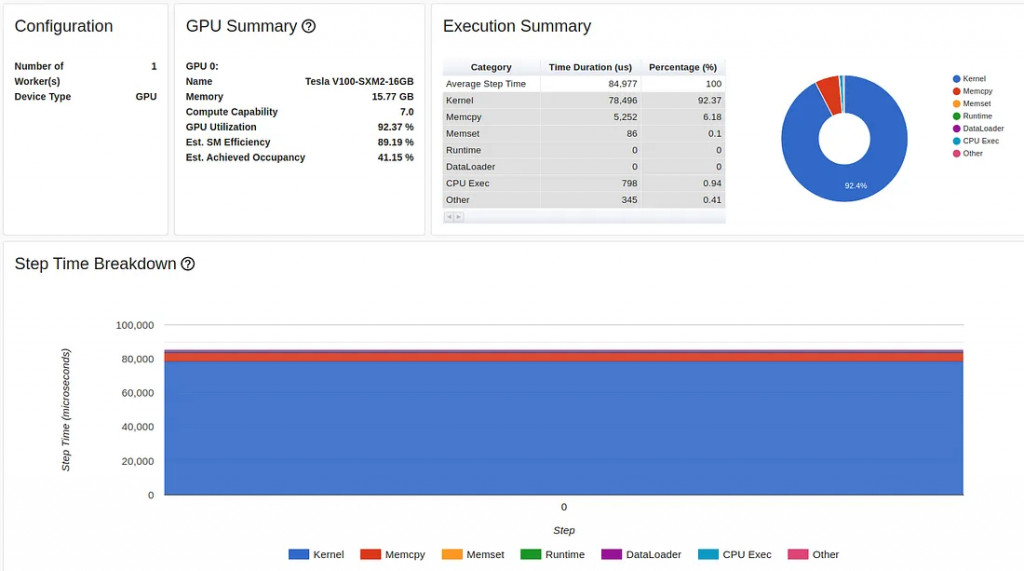

内存固定优化的结果如下:

我们的GPU利用率现在达到了可观的92.37%,我们的步长也进一步减少了。但我们仍然可以做得更好。请注意,尽管进行了优化,但性能报告仍然表明我们花费了大量时间将数据复制到GPU中。我们将在下面的第4步回到这一点。

优化3:增加批量大小

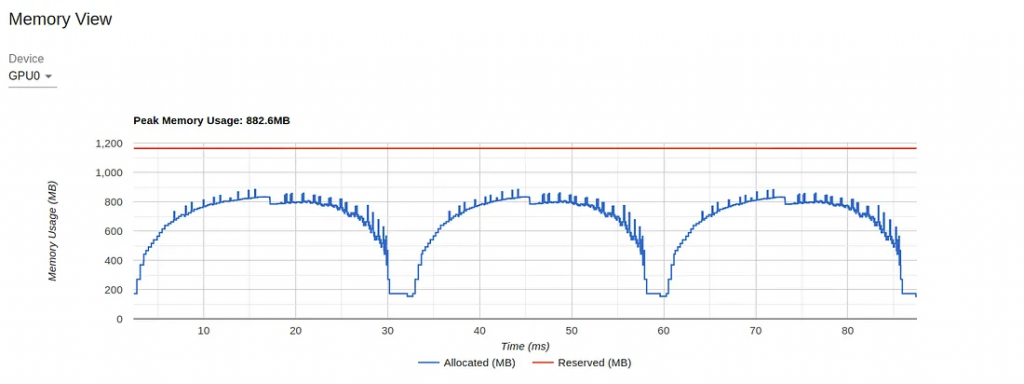

对于我们的下一个优化,我们将注意力放在上一个实验的内存视图上:

图表显示,在16 GB的GPU内存中,我们的利用率峰值不到1 GB。这是资源利用不足的一个极端例子,通常(尽管不总是)表明有提高性能的机会。控制内存利用率的一种方法是增加批处理大小。在下图中,我们显示了将批大小增加到512(内存利用率增加到11.3 GB)时的性能结果。

虽然GPU利用率指标没有太大变化,但我们的训练速度已经大大提高,从每秒1200个样本(批量大小为32的46毫秒)到每秒1584个样本(批量大小为512的324毫秒)。

注意:与我们之前的优化相反,增加批量大小可能会对训练应用程序的行为产生影响。不同的模型对批量大小的变化表现出不同程度的敏感性。有些可能只需要对优化器设置进行一些调整。对于其他人来说,调整到大的批量大小可能更加困难,甚至不可能。

由于这一变化,从CPU复制到GPU的数据量减少了4倍,红色部分问题几乎消失了:

我们现在GPU利用率达到97.51%的新高,训练速度为每秒1670个样本,看看我们还能做些什么。

优化5:将渐变设置为None

在这个阶段,我们似乎充分利用了GPU,但这并不意味着我们不能更有效地利用它。据说减少GPU内存操作的一个流行优化是在每个训练步骤中将模型参数梯度设置为None而不是零。实现这个优化所需要做的就是设置优化器的optimizer.zero_grad调用的set_to_none设置为True:

在我们的例子中,这种优化并没有以任何有意义的方式提高我们的性能。

优化6:自动混合精度

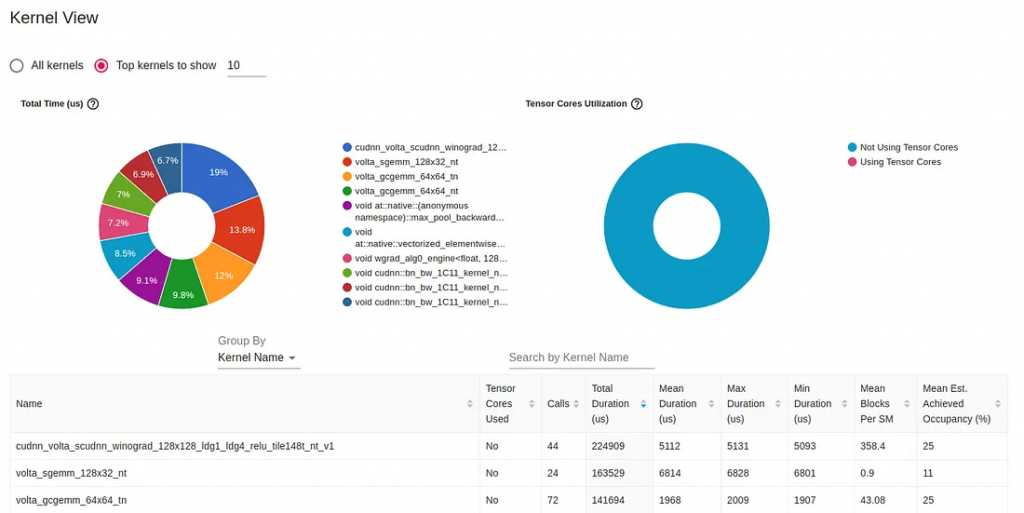

GPU内核视图显示了GPU内核处于活动状态的时间,可以作为提高GPU利用率的有用资源:

在这个报告中最明显的细节之一是缺乏使用GPU Tensor Cores。在相对较新的GPU架构上,Tensor Cores是用于矩阵乘法的专用处理单元,可以显著提高AI应用程序的性能。它们的缺乏使用可能代表着优化的重要机会。

由于Tensor Cores是专门为混合精度计算而设计的,因此增加其利用率的一种直接方法是修改我们的模型以使用自动混合精度(AMP)。在AMP模式下,模型的部分自动转换为低精度的16位浮点数,并在GPU TensorCores上运行。

重要的是,请注意,AMP的完整实现可能需要梯度缩放,我们的演示中没有包括梯度缩放。在使用混合精度训练之前,请务必查看有关它的文档。

下面的代码块演示了对启用AMP所需的训练步骤的修改。

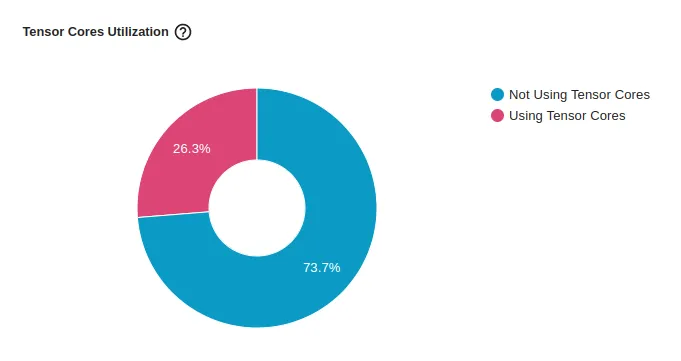

对Tensor Core利用率的影响如下图所示。尽管仅用一行代码,利用率就从0%跃升到26.3%,但它仍显示有进一步改进的机会。

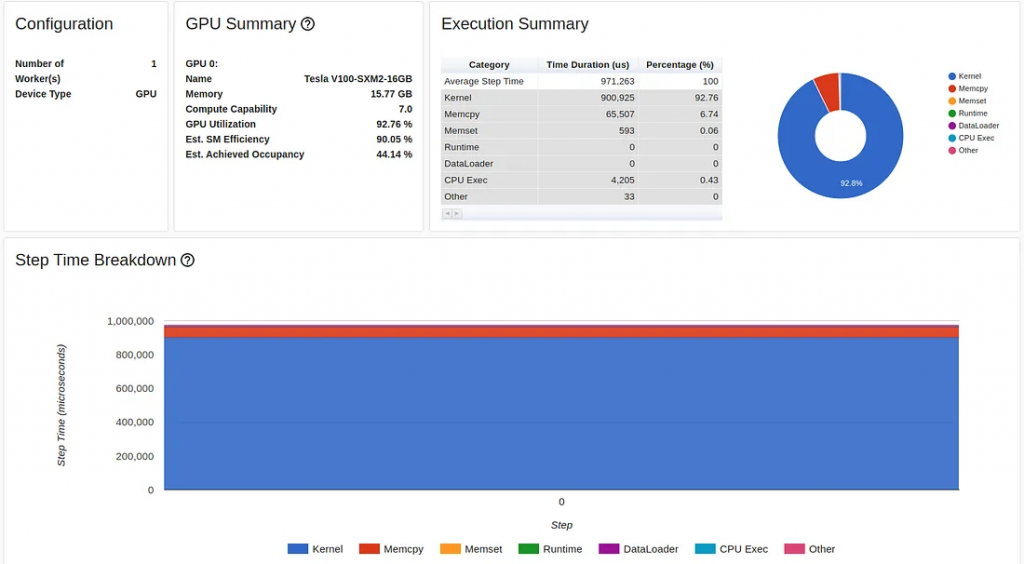

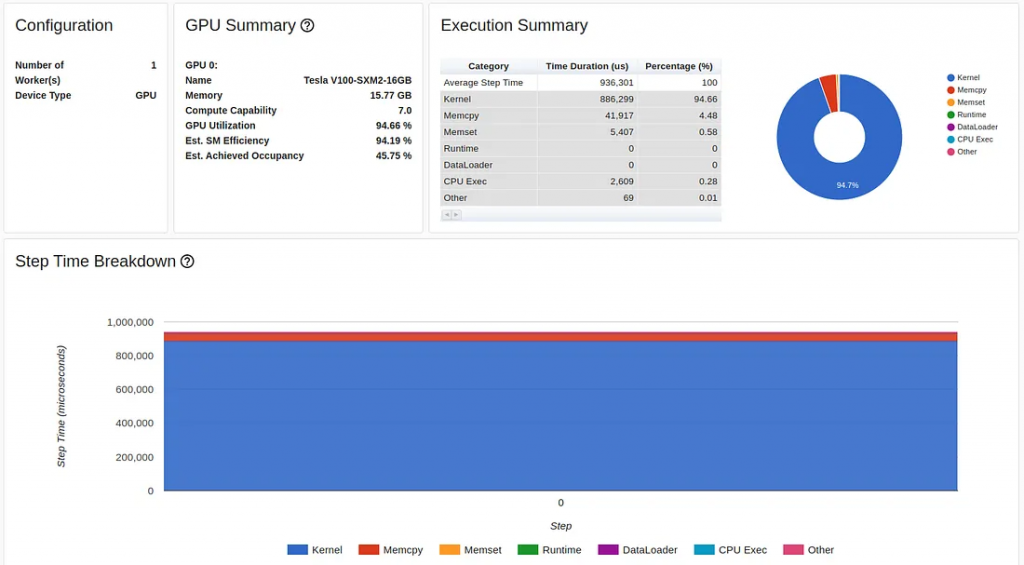

除了增加Tensor Core利用率之外,使用AMP还降低了GPU内存利用率,从而释放出更多空间来增加批处理大小。下图捕获了AMP优化和批大小设置为1024后的训练性能结果:

虽然GPU利用率略有下降,但我们的主要吞吐量指标进一步增加了近50%,从每秒1670个样本增加到每秒2477个样本。

注意:降低模型部分的精度可能会对其收敛产生有意义的影响。在增加批量大小的情况下,使用混合精度的影响将因模型而异。在某些情况下,AMP 几乎不费吹灰之力就能工作。其他时候,您可能需要更努力地调整自动缩放器。还有些时候,您可能需要明确的设置模型不同部分的精度类型(例如,手动混合精度)。

优化7:在图形模式下训练

我们将应用的最终优化是模型编译。与每个PyTorch"急切"执行模式相反,编译API将您的模型转换为中间计算图,然后以最适合底层训练加速器的方式编译为低级计算内核。

下面的代码块演示了应用模型编译所需的更改:

模型编译优化的结果如下所示:

模型编译进一步将我们的吞吐量提高到每秒3268个样本,而之前的实验是每秒2477个样本,性能提高了32%。

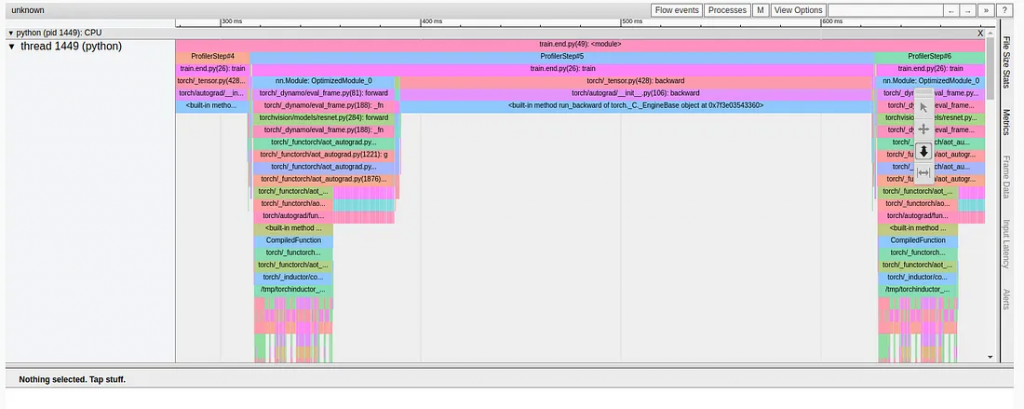

图编译改变训练步骤的方式在TensorBoard插件的不同视图中非常明显。例如,内核视图表明使用了新的(融合的)GPU内核,跟踪视图(如下所示)显示了与我们之前看到的完全不同的模式。

中期业绩

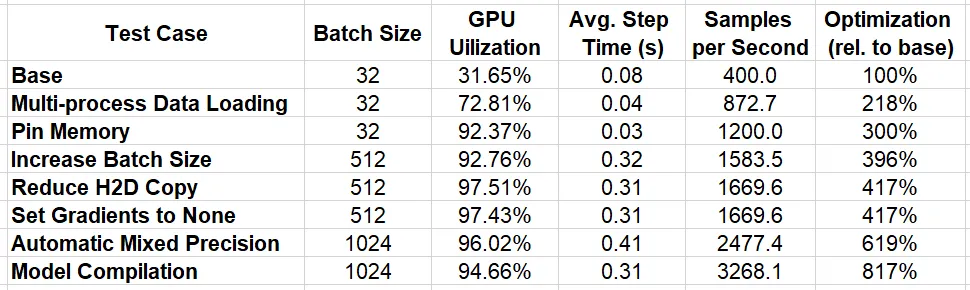

在下表中,我们总结了我们应用的连续优化的结果。

通过使用PyTorch Profiler和TensorBoard插件应用我们的迭代分析和优化方法,我们能够将性能提高817%。

我们的工作完成了吗?绝对不是!我们实现的每个优化都揭示了性能改进的新潜在机会。这些机会以释放资源的形式呈现(例如,移动到混合精度的方式使我们能够增加批处理大小)或以新发现的性能瓶颈的形式呈现(例如,我们最终优化的方式揭示了主机到设备数据传输中的瓶颈)。此外,还有许多其他众所周知的优化形式,我们没有在这篇文章中尝试。最后,新的库优化(例如,我们在步骤7中演示的模型编译特性)一直在发布,进一步实现了我们的性能改进目标。正如我们在介绍中强调的那样,为了充分利用这些机会,性能优化必须是开发工作流程中迭代且一致的一部分。

总结

在这篇文章中,我们展示了在玩具分类模型上进行性能优化的巨大潜力。尽管你可以使用其他的性能分析工具,它们各有优缺点,但我们选择了PyTorch Profiler和TensorBoard插件,因为它们易于集成。

成功优化的路径将根据训练项目的细节而有很大的不同,包括模型架构和训练环境。在实践中,实现您的目标可能比我们在这里提供的示例更困难。我们所描述的一些技术可能对您的性能影响不大,甚至可能使性能变差。我们鼓励您根据项目的具体细节开发自己的工具和技术,以达到优化目标。

来源:https://towardsdatascience.com/pytorch-model-performance-analysis-and-optimization-10c3c5822869

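Training deep learning models, especially large ones, can be a costly expenditure. One of the main ways to manage these costs is performance optimization. Performance optimization is an iterative process in which we continually look for opportunities to improve the performance of our application and then act on them. In this post we emphasize the importance of having appropriate tools for this analysis. The choice of tools is likely to depend on a number of factors, including the type of training accelerator (for example GPU, HPU, or other) and the training framework.

Figure: Performance optimization flow

The focus of this post is training in PyTorch on a GPU. More specifically, we focus on PyTorch's built-in performance analyzer, PyTorch Profiler, and on one of the ways to view its results, the PyTorch Profiler TensorBoard plugin.

This post is not meant to replace the official PyTorch documentation on PyTorch Profiler or on using the TensorBoard plugin to analyze profiler results. Our aim is to show how these tools might be used in day-to-day development.

The official TensorBoard-plugin tutorial introduces a classification model (based on the ResNet architecture) that is trained on the popular CIFAR10 dataset. It goes on to demonstrate how PyTorch Profiler and the TensorBoard plugin can be used to identify and fix a bottleneck in the data loader. Performance bottlenecks in the input data pipeline are not uncommon. What is most surprising about the tutorial is its final (post-optimization) result, reproduced below:

Figure: Performance following optimization (from the PyTorch website)

If you look closely, you will see that the GPU utilization after optimization is 40.46%. There is no way to sugarcoat this: the result is disappointing. The GPU is the most expensive resource in our training machine, and our goal should be to maximize its utilization. A utilization of 40.46% usually represents a significant opportunity to accelerate training and save cost. Surely we can do better! In this post we will try to. We start by attempting to reproduce the results presented in the official tutorial, and then see whether the same tools can be used to improve training performance further.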

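Toy Example

The code block below contains the training loop defined by the TensorBoard-plugin tutorial, with two minor modifications:

1. We use a fake dataset with the same properties and behavior as the CIFAR10 dataset used in the tutorial. The original post links to the motivation for this change.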

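2. We initialize torch.profiler.schedule with an increased warmup value (warmup=4 in the code below) and with the repeat flag set to 1. We found that a slight increase in the number of warmup steps improves the stability of the profiling results.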

    import numpy as np
    import torch
    import torch.nn
    import torch.optim
    import torch.profiler
    import torch.utils.data
    import torchvision.datasets
    import torchvision.models
    import torchvision.transforms as T
    from torchvision.datasets.vision import VisionDataset
    from PIL import Image

    class FakeCIFAR(VisionDataset):
        def __init__(self, transform):
            super().__init__(root=None, transform=transform)
            self.data = np.random.randint(low=0, high=256, size=(10000, 32, 32, 3), dtype=np.uint8)
            self.targets = np.random.randint(low=0, high=10, size=(10000), dtype=np.uint8).tolist()

        def __getitem__(self, index):
            img, target = self.data[index], self.targets[index]
            img = Image.fromarray(img)
            if self.transform is not None:
                img = self.transform(img)
            return img, target

        def __len__(self) -> int:
            return len(self.data)

    transform = T.Compose(
        [T.Resize(224),
         T.ToTensor(),
         T.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])
    train_set = FakeCIFAR(transform=transform)
    train_loader = torch.utils.data.DataLoader(train_set, batch_size=32, shuffle=True)

    device = torch.device("cuda:0")
    model = torchvision.models.resnet18(weights='IMAGENET1K_V1').cuda(device)
    criterion = torch.nn.CrossEntropyLoss().cuda(device)
    optimizer = torch.optim.SGD(model.parameters(), lr=0.001, momentum=0.9)
    model.train()

    # train step
    def train(data):
        inputs, labels = data[0].to(device=device), data[1].to(device=device)
        outputs = model(inputs)
        loss = criterion(outputs, labels)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

    # training loop wrapped with profiler object
    with torch.profiler.profile(
        schedule=torch.profiler.schedule(wait=1, warmup=4, active=3, repeat=1),
        on_trace_ready=torch.profiler.tensorboard_trace_handler('./log/resnet18'),
        record_shapes=True,
        profile_memory=True,
        with_stack=True
    ) as prof:
        for step, batch_data in enumerate(train_loader):
            if step >= (1 + 4 + 3) * 1:
                break
            train(batch_data)
            prof.step()  # Need to call this at the end of each step

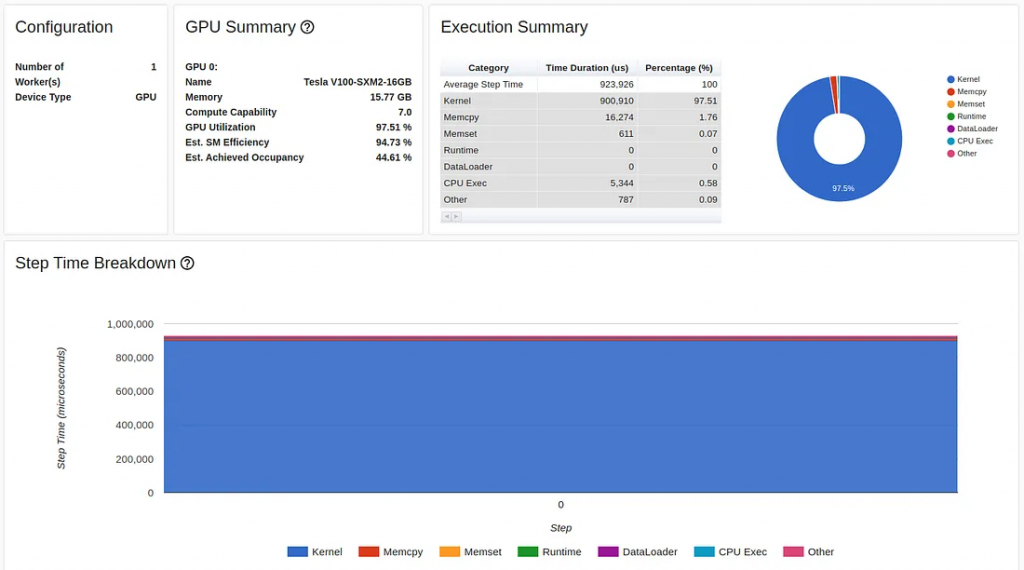
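To make the profiler schedule more concrete, the sketch below models how the steps of the training loop fall into the wait, warmup and active phases for the values used in the script (wait=1, warmup=4, active=3, repeat=1). The function profiler_phase is a hypothetical helper written for illustration. It is a simplified model of the phases, not the implementation inside torch.profiler.schedule.

```python
def profiler_phase(step, wait=1, warmup=4, active=3, repeat=1):
    """Simplified model of the schedule phases (illustrative, not the library code)."""
    cycle = wait + warmup + active
    # After `repeat` full cycles nothing more is recorded.
    if repeat > 0 and step >= cycle * repeat:
        return "done"
    pos = step % cycle
    if pos < wait:
        return "wait"      # profiler idle
    if pos < wait + warmup:
        return "warmup"    # profiler running, results discarded
    return "active"        # steps that end up in the trace

phases = [profiler_phase(s) for s in range(9)]
for s, phase in enumerate(phases):
    print(s, phase)
```

Under this model, steps 5 to 7 are the three recorded steps, and step 8 is the point at which the training loop breaks.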

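The GPU used in the tutorial is a Tesla V100-DGXS-32GB. In this post we attempt to reproduce, and improve on, the tutorial's performance results using an Amazon EC2 p3.2xlarge instance, which contains a Tesla V100-SXM2-16GB GPU. Although the two GPUs share the same architecture, there are some differences between them, which the original post links to. We ran the training script using an AWS PyTorch 2.0 Docker image. The performance results of the training script, as displayed on the Overview page of the TensorBoard viewer, are shown in the figure below:

Figure: Baseline performance results as shown in the TensorBoard Profiler Overview tab

We first note that, contrary to the tutorial, the Overview page in our experiment (torch-tb-profiler version 0.4.1) merged the three profiling steps into one. The average overall step time is therefore 80 milliseconds, not the 240 milliseconds reported. This can be seen clearly in the Trace tab (which usually provides a more accurate report), where each step takes roughly 80 milliseconds.

Figure: Baseline performance results as shown in the TensorBoard Profiler Trace View tab

Note that our starting point of 31.65% GPU utilization and a step time of 80 milliseconds differs from the starting point in the tutorial, which was 23.54% and 132 milliseconds respectively. This is likely a result of differences in the training environment, including the GPU type and the PyTorch version. We also note that while the tutorial's baseline results clearly diagnose the performance issue as a bottleneck in the DataLoader, our results do not. We have often found that data-loading bottlenecks disguise themselves as a high percentage of "CPU Exec" or "Other" in the Overview tab.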

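Optimization 1: Multi-process Data Loading

We begin by applying multi-process data loading as described in the tutorial. Since the Amazon EC2 p3.2xlarge instance has 8 vCPUs, we set the number of DataLoader workers to 8 for maximum performance: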

    train_loader = torch.utils.data.DataLoader(train_set, batch_size=32,
                                               shuffle=True, num_workers=8)

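The results of this optimization are shown below:

Figure: Results of multi-process data loading in the TensorBoard Profiler Overview tab

This change to a single line of code more than doubled GPU utilization (from 31.65% to 72.81%, about 2.3 times) and more than halved the training step time (from 80 milliseconds down to 37 milliseconds).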
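The two Overview numbers can be combined into a rough estimate of how long the GPU is actually busy in each step. The sketch below assumes that the reported utilization is approximately GPU-busy time divided by step time, which is our reading of the metric rather than something stated in this post. The helper gpu_busy_ms is hypothetical.

```python
def gpu_busy_ms(utilization_pct, step_ms):
    """Estimated GPU-busy time per step, assuming utilization = busy time / step time."""
    return utilization_pct / 100.0 * step_ms

baseline_busy = gpu_busy_ms(31.65, 80)    # single-process data loading
multiproc_busy = gpu_busy_ms(72.81, 37)   # num_workers=8
utilization_ratio = 72.81 / 31.65

print(f"baseline GPU-busy time:     {baseline_busy:.1f} ms of 80 ms")
print(f"multi-process GPU-busy time: {multiproc_busy:.1f} ms of 37 ms")
print(f"utilization ratio:           {utilization_ratio:.2f}x")
```

On these numbers the GPU does roughly the same amount of work per step (about 25 ms versus about 27 ms). Under the stated assumption, the improvement comes almost entirely from removing time in which the GPU sat idle waiting for data.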

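This is where the optimization process in the tutorial ends. Although our GPU utilization (72.81%) is considerably higher than the result in the tutorial (40.46%), you will no doubt find these results still quite unsatisfactory.

Optimization 2: Memory Pinning

If we analyze the Trace view of the last experiment, we can see that a significant amount of time (10 of the 37 milliseconds) is still spent loading the training data onto the GPU.

Figure: Results of multi-process data loading in the Trace View tab

To address this, we apply another PyTorch-recommended optimization for streamlining the data input flow: memory pinning. Using pinned memory can increase the speed of host-to-GPU data copies and, more importantly, allows us to make them asynchronous. This means that we can prepare the next training batch on the GPU while the training step runs on the current batch.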
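As a back-of-the-envelope illustration of why asynchronous copies matter, the sketch below compares a step in which the copy blocks compute with an idealized step in which the copy is fully hidden behind compute. It uses the 37 ms step and 10 ms copy time quoted above. Full overlap is an assumption made for illustration, and the variable and function names are hypothetical.

```python
BATCH_SIZE = 32
step_ms = 37.0   # step time after Optimization 1 (from the Trace view)
copy_ms = 10.0   # portion of the step spent copying data to the GPU

compute_ms = step_ms - copy_ms
serial_ms = compute_ms + copy_ms           # copy blocks the training step
overlapped_ms = max(compute_ms, copy_ms)   # idealized: copy fully hidden behind compute

def samples_per_second(batch_size, ms_per_step):
    return batch_size / (ms_per_step / 1000.0)

serial_rate = samples_per_second(BATCH_SIZE, serial_ms)
overlapped_rate = samples_per_second(BATCH_SIZE, overlapped_ms)

print(f"serial:     {serial_ms:.0f} ms/step, {serial_rate:.0f} samples/s")
print(f"overlapped: {overlapped_ms:.0f} ms/step, {overlapped_rate:.0f} samples/s")
```

In this idealized case the step shrinks from 37 ms to 27 ms, or roughly 1185 samples per second at batch size 32, which is close to the 1200 samples per second the post reports later for this batch size. Real gains depend on how much of the copy can actually overlap with compute.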

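This optimization requires changes to two lines of code. First, we set the pin_memory flag of the DataLoader to True.

    train_loader = torch.utils.data.DataLoader(train_set, batch_size=32,
                                               shuffle=True, num_workers=8, pin_memory=True)

Then we change the host-to-device memory transfer (in the train function) to be non-blocking:

    inputs, labels = data[0].to(device=device, non_blocking=True), \
                     data[1].to(device=device, non_blocking=True)

The results of the memory pinning optimization are shown below:

Figure: Results of memory pinning in the TensorBoard Profiler Overview tab

Our GPU utilization now stands at a respectable 92.37%, and our step time has decreased further. But we can still do better. Note that despite this optimization, the performance report continues to indicate that we are spending a lot of time copying data to the GPU. We return to this in Optimization 4 below.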

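Optimization 3: Increase Batch Size

For our next optimization, we turn our attention to the Memory View of the last experiment:

Figure: Memory View in TensorBoard Profiler

The chart shows that out of 16 GB of GPU memory, our utilization peaks at less than 1 GB. This is an extreme example of resource under-utilization, which often (though not always) indicates an opportunity to boost performance. One way to control memory utilization is to increase the batch size. The figure below shows the performance results when we increase the batch size to 512 (and memory utilization rises to 11.3 GB).

Figure: Results of increasing the batch size in the TensorBoard Profiler Overview tab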

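Although the GPU utilization metric did not change much, our training speed increased considerably, from 1200 samples per second at batch size 32 to 1584 samples per second at batch size 512 (324 milliseconds per step).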
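Because the step time grows with the batch size, step times at different batch sizes are not directly comparable. Throughput in samples per second is the more useful metric. The sketch below shows the conversion in both directions. The helper names are hypothetical and the inputs are the figures quoted in this post.

```python
def samples_per_second(batch_size, step_ms):
    """Throughput implied by a batch size and a step time."""
    return batch_size / (step_ms / 1000.0)

def step_ms_for(batch_size, throughput):
    """Step time (ms) implied by a batch size and a throughput."""
    return batch_size / throughput * 1000.0

throughput_512 = samples_per_second(512, 324)   # batch size 512 at 324 ms per step
implied_step_32 = step_ms_for(32, 1200)         # 1200 samples/s at batch size 32

print(f"batch 512 @ 324 ms -> {throughput_512:.0f} samples/s")
print(f"batch 32 @ 1200 samples/s -> {implied_step_32:.1f} ms per step")
```

A 324 ms step at batch size 512 works out to roughly 1580 samples per second, in line with the reported 1584, and 1200 samples per second at batch size 32 corresponds to a step of about 26.7 ms.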

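Note: Unlike our previous optimizations, increasing the batch size may affect the behavior of your training application. Different models exhibit different levels of sensitivity to a change in batch size. Some may need nothing more than some tuning of the optimizer settings. For others, adjusting to a large batch size may be more difficult or even impossible.

Optimization 4: Reduce Host-to-Device Copy

You probably noticed the red section in the pie chart of the previous results, which represents host-to-device data copy. The most direct way to address this kind of bottleneck is to see whether we can reduce the amount of data in each batch. Notice that, in the case of our image input, we convert the data type from an 8-bit unsigned integer to a 32-bit float and apply normalization before performing the data copy. In the code block below, we propose a change to the input data flow in which we delay the data type conversion and normalization until the data is on the GPU: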

    # maintain the image input as an 8-bit uint8 tensor
    transform = T.Compose(
        [T.Resize(224),
         T.PILToTensor()
         ])
    train_set = FakeCIFAR(transform=transform)
    # batch size 512, as set in Optimization 3
    train_loader = torch.utils.data.DataLoader(train_set, batch_size=512, shuffle=True,
                                               num_workers=8, pin_memory=True)

    device = torch.device("cuda:0")
    model = torchvision.models.resnet18(weights='IMAGENET1K_V1').cuda(device)
    criterion = torch.nn.CrossEntropyLoss().cuda(device)
    optimizer = torch.optim.SGD(model.parameters(), lr=0.001, momentum=0.9)
    model.train()

    # train step
    def train(data):
        inputs, labels = data[0].to(device=device, non_blocking=True), \
                         data[1].to(device=device, non_blocking=True)
        # convert to float32 and normalize
        inputs = (inputs.to(torch.float32) / 255. - 0.5) / 0.5
        outputs = model(inputs)
        loss = criterion(outputs, labels)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

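As a result of this change, the amount of data copied from the CPU to the GPU is reduced by a factor of 4, and the red section all but disappears:

Figure: Results of reducing the CPU-to-GPU copy in the TensorBoard Profiler Overview tab

We now stand at a new high of 97.51% GPU utilization and a training speed of 1670 samples per second. Let's see what else we can do.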
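The factor of 4 follows directly from the element sizes: a uint8 value occupies 1 byte and a float32 value 4 bytes. The sketch below works out the per-batch transfer size, assuming a batch of 512 images of shape 3 x 224 x 224 (the shape produced by the Resize(224) transform on the fake dataset). The constant and function names are hypothetical.

```python
BATCH_SIZE = 512
CHANNELS, HEIGHT, WIDTH = 3, 224, 224

def batch_bytes(bytes_per_element):
    """Bytes copied to the GPU for one batch of images."""
    return BATCH_SIZE * CHANNELS * HEIGHT * WIDTH * bytes_per_element

float32_bytes = batch_bytes(4)   # convert and normalize on the CPU, then copy
uint8_bytes = batch_bytes(1)     # copy raw uint8, convert on the GPU

print(f"float32 batch: {float32_bytes / 2**20:.1f} MiB")
print(f"uint8 batch:   {uint8_bytes / 2**20:.1f} MiB")
print(f"reduction:     {float32_bytes // uint8_bytes}x")
```

Under these assumptions each batch shrinks from 294 MiB to 73.5 MiB on the host-to-device path. The labels are negligible by comparison.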

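Optimization 5: Set Gradients to None

At this stage we appear to be fully utilizing the GPU, but that does not mean we cannot utilize it more effectively. One popular optimization that is said to reduce memory operations on the GPU is to set the model parameters' gradients to None rather than zero at each training step. All that is needed to implement this optimization is to set set_to_none to True in the call to optimizer.zero_grad:

    optimizer.zero_grad(set_to_none=True)

In our case this optimization did not improve our performance in any meaningful way.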
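The difference between the two modes can be seen on a tiny stand-in model, sketched below. The linear layer and random inputs are hypothetical and run on the CPU. To the best of our knowledge, PyTorch 2.0 and later already default set_to_none to True, which could be one reason no effect was observed here, but treat that as an assumption to check against the documentation for your version.

```python
import torch

# Tiny stand-in model (hypothetical); the post uses ResNet-18.
model = torch.nn.Linear(4, 2)
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

# Populate gradients, then zero them in place.
model(torch.randn(8, 4)).sum().backward()
optimizer.zero_grad(set_to_none=False)
grads_zeroed = all(
    p.grad is not None and bool(torch.all(p.grad == 0))
    for p in model.parameters()
)

# Populate gradients again, then release them instead of zeroing.
model(torch.randn(8, 4)).sum().backward()
optimizer.zero_grad(set_to_none=True)
grads_are_none = all(p.grad is None for p in model.parameters())

print("zeroed in place:", grads_zeroed)
print("set to None:    ", grads_are_none)
```

With set_to_none=True the gradient tensors are dropped rather than overwritten with zeros, so the next backward pass allocates fresh gradients instead of accumulating into existing ones.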

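Optimization 6: Automatic Mixed Precision

The GPU Kernel View displays the amount of time that the GPU kernels were active and can be a helpful resource for improving GPU utilization:

Figure: Kernel View in TensorBoard Profiler

One of the most glaring details in this report is the lack of use of the GPU Tensor Cores. Available on relatively newer GPU architectures, Tensor Cores are dedicated processing units for matrix multiplication that can boost AI application performance significantly. Their lack of use may represent a major opportunity for optimization.

Since Tensor Cores are specifically designed for mixed-precision computing, one straightforward way to increase their utilization is to modify our model to use Automatic Mixed Precision (AMP). In AMP mode, portions of the model are automatically cast to lower-precision 16-bit floats and run on the GPU Tensor Cores.

Importantly, note that a full implementation of AMP may require gradient scaling, which we do not include in our demonstration. Be sure to review the documentation on mixed-precision training before adopting it.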
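To see why gradient scaling can be needed, the sketch below uses Python's struct module to round values to IEEE half precision. A very small gradient underflows to zero in float16, while the same gradient multiplied by a loss scale survives and can be unscaled afterwards in higher precision. The gradient magnitude and the scale of 2**16 are hypothetical values chosen for illustration, and to_fp16 is a helper written for this example.

```python
import struct

def to_fp16(x: float) -> float:
    """Round a Python float to IEEE half precision and back."""
    return struct.unpack("e", struct.pack("e", x))[0]

tiny_grad = 1e-8     # hypothetical gradient magnitude
scale = 65536.0      # hypothetical loss scale (2**16)

unscaled = to_fp16(tiny_grad)          # too small for float16: becomes 0.0
scaled = to_fp16(tiny_grad * scale)    # representable in float16
recovered = scaled / scale             # unscale in higher precision

print("float16 without scaling:", unscaled)
print("recovered after scaling:", recovered)
```

Without scaling the gradient is lost entirely. With scaling it is recovered to within float16 rounding error.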

The code block below demonstrates the modification to the training step required to enable AMP.

    def train(data):
        inputs, labels = data[0].to(device=device, non_blocking=True), \
                         data[1].to(device=device, non_blocking=True)
        inputs = (inputs.to(torch.float32) / 255. - 0.5) / 0.5
        with torch.autocast(device_type='cuda', dtype=torch.float16):
            outputs = model(inputs)
            loss = criterion(outputs, labels)
        # Note - torch.cuda.amp.GradScaler() may be required
        optimizer.zero_grad(set_to_none=True)
        loss.backward()
        optimizer.step()
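For reference, here is a minimal sketch of what the training step might look like with gradient scaling added through torch.cuda.amp.GradScaler. It is not the code used for the measurements in this post. The tiny model and random batch are hypothetical stand-ins, and the CPU fallback (autocast and scaler disabled) is included only so that the sketch also runs on a machine without a GPU.

```python
import torch

use_cuda = torch.cuda.is_available()
device = torch.device("cuda:0" if use_cuda else "cpu")

# Tiny stand-in model (hypothetical); the post uses ResNet-18.
model = torch.nn.Sequential(
    torch.nn.Flatten(),
    torch.nn.Linear(3 * 8 * 8, 10),
).to(device)
criterion = torch.nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.001, momentum=0.9)

# With enabled=False (no GPU) the scaler passes everything through unchanged.
scaler = torch.cuda.amp.GradScaler(enabled=use_cuda)

def train(data):
    inputs = data[0].to(device=device, non_blocking=True)
    labels = data[1].to(device=device, non_blocking=True)
    inputs = (inputs.to(torch.float32) / 255. - 0.5) / 0.5
    with torch.autocast(device_type=device.type,
                        dtype=torch.float16 if use_cuda else torch.bfloat16,
                        enabled=use_cuda):
        outputs = model(inputs)
        loss = criterion(outputs, labels)
    optimizer.zero_grad(set_to_none=True)
    scaler.scale(loss).backward()   # backward pass on the scaled loss
    scaler.step(optimizer)          # unscales gradients, skips the step on inf/NaN
    scaler.update()                 # adjusts the scale factor for the next step
    return loss.item()

# Random uint8 "images" and integer labels, standing in for a DataLoader batch.
batch = (torch.randint(0, 256, (4, 3, 8, 8), dtype=torch.uint8),
         torch.randint(0, 10, (4,)))
loss_value = train(batch)
print(f"loss: {loss_value:.4f}")
```

Whether scaling is needed, and how the scaler should be tuned, depends on the model. The mixed-precision documentation remains the authoritative guide.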

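The impact on Tensor Core utilization is shown in the figure below. With just one additional line of code, utilization jumps from 0% to 26.3%, although the report still indicates room for further improvement.

Figure: Tensor Core utilization with AMP optimization, from the Kernel View in TensorBoard Profiler

In addition to increasing Tensor Core utilization, using AMP lowers GPU memory utilization, freeing up more space to increase the batch size. The figure below captures the training performance results following the AMP optimization and with the batch size set to 1024:

Figure: Results of AMP optimization in the TensorBoard Profiler Overview tab

Although GPU utilization decreased slightly, our primary throughput metric increased further by nearly 50%, from 1670 samples per second to 2477.

Note: Lowering the precision of portions of your model may have a meaningful effect on its convergence. As in the case of increasing the batch size, the impact of mixed precision will vary from model to model. In some cases AMP works with little to no effort. Other times you may need to work harder to tune the gradient scaler. Still other times, you may need to explicitly set the precision types of different portions of the model (that is, manual mixed precision).

Optimization 7: Train in Graph Mode

The final optimization we apply is model compilation. In contrast to the default PyTorch eager-execution mode, in which each operation is run "eagerly", the compile API converts your model into an intermediate computation graph, which it then compiles into low-level compute kernels in a manner that is optimal for the underlying training accelerator.

The code block below demonstrates the change required to apply model compilation: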

    model = torchvision.models.resnet18(weights='IMAGENET1K_V1').cuda(device)
    model = torch.compile(model)

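The results of the model compilation optimization are shown below:

Figure: Results of graph compilation in the TensorBoard Profiler Overview tab

Model compilation further increases our throughput to 3268 samples per second, compared with 2477 in the previous experiment, a 32% boost in performance.

The way graph compilation changes the training step is very evident in the different views of the TensorBoard plugin. The Kernel View, for example, indicates the use of new (fused) GPU kernels, and the Trace View (shown below) shows a pattern quite different from what we saw previously.

Figure: Results of graph compilation in the TensorBoard Profiler Trace View tab

Interim Results

The table below summarizes the results of the successive optimizations we applied.

Figure: Summary of performance results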

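By applying our iterative analyze-and-optimize approach with PyTorch Profiler and the TensorBoard plugin, we raised throughput to 817% of the baseline, that is, roughly 8.17 times (from about 400 samples per second, at batch size 32 and 80 milliseconds per step, to 3268 samples per second).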
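The overall figure can be reproduced from the throughput numbers quoted in this post. In the sketch below the baseline is derived from the 80 ms step at batch size 32, and the remaining values are the reported samples-per-second figures. The stage labels are ours, and stages for which no throughput was quoted are omitted.

```python
# Baseline throughput derived from the reported step time.
baseline = 32 / 0.080   # 400 samples/s

# Reported throughput after each stage (samples per second).
reported = [
    ("pinned memory, batch size 32", 1200),
    ("batch size 512", 1584),
    ("uint8 host-to-device copy", 1670),
    ("AMP, batch size 1024", 2477),
    ("torch.compile", 3268),
]

previous = baseline
for label, throughput in reported:
    step_gain = throughput / previous
    total_gain = throughput / baseline
    print(f"{label:30s} {throughput:5d}  x{step_gain:.2f} vs previous  x{total_gain:.2f} vs baseline")
    previous = throughput

overall_speedup = reported[-1][1] / baseline
```

The final ratio is 3268 / 400 = 8.17, which is where the 817% figure comes from.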

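Is our work done? Absolutely not! Every optimization we implement uncovers new potential opportunities for performance improvement. These opportunities present themselves in the form of freed-up resources (for example, the move to mixed precision enabled us to increase the batch size) or in the form of newly uncovered performance bottlenecks (for example, our final optimization exposed a bottleneck in host-to-device data transfer). Furthermore, there are many other well-known forms of optimization that we did not attempt in this post. And lastly, new library optimizations (such as the model compilation feature demonstrated in Optimization 7) are released all the time, further enabling our performance improvement goals. As we emphasized in the introduction, to take full advantage of such opportunities, performance optimization must be an iterative and consistent part of the development workflow.

Summary

In this post we have demonstrated the significant potential of performance optimization on a toy classification model. Although there are other performance analysis tools you could use, each with its own pros and cons, we chose PyTorch Profiler and the TensorBoard plugin because of their ease of integration.

The path to successful optimization will vary greatly with the details of the training project, including the model architecture and the training environment. In practice, reaching your goals may be more difficult than in the example presented here. Some of the techniques we described may have little impact on your performance, or may even make it worse. We encourage you to develop your own tools and techniques for reaching your optimization goals, based on the specific details of your project.

Source: https://towardsdatascience.com/pytorch-model-performance-analysis-and-optimization-10c3c5822869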
