使用CNN特征嵌入进行图像相似度计算

2023年07月20日 由 Alex 发表

211164

0

介绍

几个月前,我正在检查市场领先的数据标签软件的功能。除了核心功能(如机器辅助标记、异常值评估和数据编目)之外,一个有趣的功能引起了我的注意——输入感兴趣的图像并从存档数据中返回类似图像的能力,以帮助构建更大的训练数据集。

支持此特性的底层功能是图像相似性,可以在各种用例中找到,从反向图像搜索到类似产品推荐,再到图像聚类。虽然我知道这个概念,并通过日常用户体验参与其中,但我从来没有花时间去挖掘支撑它的逻辑。这让我想到……我可以构建一个Python类,为简单的应用程序提供通用的图像相似功能吗?

本文将介绍这一部分是如何坐的。

图像相似技术概述

近年来,人们开发了几种不同的图像相似性评估方法,但总的来说,它们包括两个主要组成部分:

1):特征提取

2):相似性度量

特征提取

虽然有几种传统的特征提取方法(例如SIFT, HOG)可以识别图像间比较的特定关键特征,但它们往往侧重于手工工程,因此不需要自己学习特征,而是需要大量的知识来设计预处理和特征学习。

然而,近年来,人们越来越关注使用CNN,因为它们具有层次性质,这意味着它们能够自动确定学习或分类。结果是,与传统方法(专注于较低级别的特征)不同,CNN 能够对更抽象的表示进行建模。

从所进行的研究来看,如果定义“相似性”的标准没有很好地定义(即找到相同主题的不同类型-例如鲜花,家具等),那么CNN的更抽象的表示就会很好地工作。

该方法旨在提取由神经网络创建的图像特征嵌入,并比较这些图像以评估两幅图像的相似性。当为特定任务训练神经网络时,图像像素通常由完全连接层转换为特定的特征嵌入,然后将其传递给分类器(取决于神经网络架构)进行推理。虽然这些特征嵌入通常是黑盒,但它们捕获了足够的图像信息,可以有效地对图像进行分类。因此,其逻辑是,通过比较CNN最后一层输出的嵌入,可以推断出原始图像本身的相似程度。

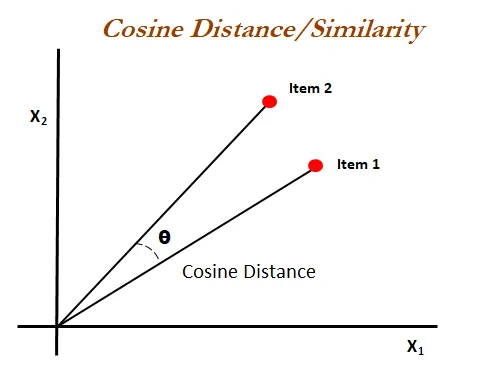

相似性度量

向量相似度的计算方法有很多种,其中使用最广泛的是余弦相似度。余弦相似度度量在多维空间中投影的两个向量之间夹角的余弦值。具体来说,它衡量的是矢量在方向或方向上的相似性,而忽略了矢量在大小或尺度上的差异。

然而,如果提供的特征向量被归一化,使其由0-1之间的值组成,那么向量的大小将不再被考虑,余弦相似度就成为衡量向量相似性的有效方法。

从CNN中提取嵌入

开发该功能的第一步是确定一个足够通用的解决方案,并且在一系列图像主题中都能很好地工作,而不需要每次都对CNN进行微调。为此,我决定利用Pytorch的torchvision模块,该模块附带了许多CNN架构和常见的图像转换。模型本身已经在不同的数据集上进行了预训练——例如ImageNet。

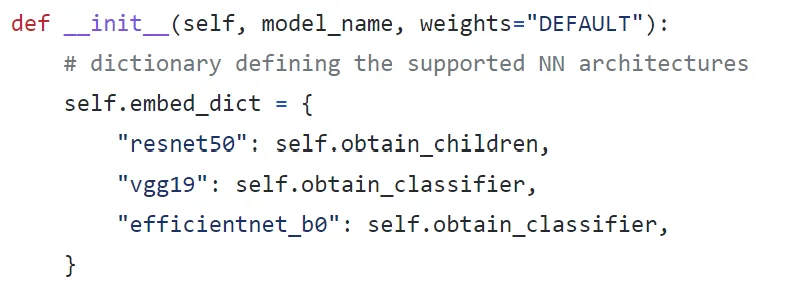

接下来的任务是开发一种合适的方法来从所利用的神经网络中提取特征向量。根据模型体系结构的不同,提取方法也会有所不同。因此,我开发了类,最初支持三种最常见的预训练神经网络架构(ResNet50, VGG19和EfficientNet):

我确定了删除最终分类层并输出最终层嵌入的适当方法。对于ResNet来说,这只是删除最后一层(“子层”)的情况,而对于VGG19和EfficientNet,你必须指定架构的“分类器”组件,并从中删除输出层:

def assign_layer(self):

model_embed = self.embed_dict[self.architecture]()

return model_embed

def obtain_children(self):

model_embed = nn.Sequential(*list(self.model.children())[:-1])

return model_embed

def obtain_classifier(self):

self.model.classifier = self.model.classifier[:-1]

return self.model

# Above is saved to class using _init_ : "self.embed = self.assign_layer()"

图像预处理

接下来是确定最合适的图像预处理,以匹配所选择的神经网络。值得庆幸的是,火炬视野已经包含了默认转换,我试图在可能的情况下利用它:

def assign_transform(self, weights):

weights_dict = {

"resnet50": models.ResNet50_Weights,

"vgg19": models.VGG19_Weights,

"efficientnet_b0": models.EfficientNet_B0_Weights,

}

# try load preprocess from torchvision else assign default

try:

w = weights_dict[self.architecture]

weights = getattr(w, weights)

preprocess = weights.transforms()

except Exception:

preprocess = transforms.Compose(

[

transforms.Resize(224),

transforms.ToTensor(),

transforms.Normalize(

mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]

),

]

)

return preprocess

# Saved to class using _init_ : "self.transform = self.assign_transform(weights)"

嵌入

把这些放在一起,我们可以有效地嵌入图像,并以最适合我们用例的格式存储向量(在我的例子下,我将它们存储为一个类变量,可以在以后保存为文本文件)。

def embed_image(self, img):

# load and preprocess image

img = Image.open(img)

img_trans = self.transform(img)

# store computational graph on GPU if available

if self.device == "cuda:0":

img_trans = img_trans.cuda()

img_trans = img_trans.unsqueeze(0)

return self.embed(img_trans)

确定相似性

在获得两个或多个图像的特征嵌入后,下一步是比较两个向量。为此,我选择了Pytorch的余弦相似性实现,因为它能够使用张量和GPU支持。

值得注意的是,我设计的功能是这样的,我首先嵌入一个图像数据集,我存储在一个类变量(' self.dataset ')中,然后比较目标图像和这个嵌入数据集-如下所示:

def similar_images(self, target_file, n=None):

"""

Function for comparing target image to embedded image dataset

Parameters:

-----------

target_file: str specifying the path of target image to compare

with the saved feature embedding dataset

n: int specifying the top n most similar images to return

"""

target_vector = self.embed_image(target_file)

# initiate computation of consine similarity

cosine = nn.CosineSimilarity(dim=1)

# iteratively store similarity of stored images to target image

sim_dict = {}

for k, v in self.dataset.items():

sim = cosine(v, target_vector)[0].item()

sim_dict[k] = sim

# sort based on decreasing similarity

items = sim_dict.items()

sim_dict = {k: v for k, v in sorted(items, key=lambda i: i[1], reverse=True)}

# cut to defined top n similar images

if n is not None:

sim_dict = dict(list(sim_dict.items())[: int(n)])

self.output_images(sim_dict, target_file)

return sim_dict

聚类图像

我后来添加的一个额外功能是对嵌入的图像进行聚类。为此,我们可以使用Pytorch实现KMeans聚类:

def cluster_dataset(self, nclusters, dist="euclidean", display=False):

vecs = torch.stack(list(self.dataset.values())).squeeze()

imgs = list(self.dataset.keys())

np.random.seed(100)

cluster_ids_x, cluster_centers = kmeans(

X=vecs, num_clusters=nclusters, distance=dist, device=self.device

)

# assign clusters to images

self.image_clusters = dict(zip(imgs, cluster_ids_x.tolist()))

# store cluster centres

cluster_num = list(range(0, len(cluster_centers)))

self.cluster_centers = dict(zip(cluster_num, cluster_centers.tolist()))

if display:

self.display_clusters()

return

相似性搜索

现在我已经概述了包功能的主要组件,我们可以深入研究获得的一些初步结果。

数据

在此之前,要介绍一下我为演示设计的数据集。

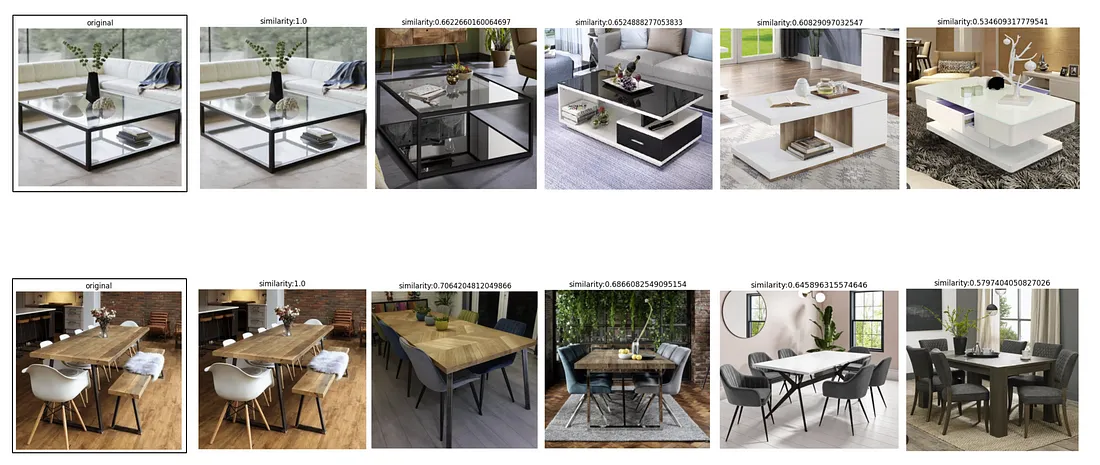

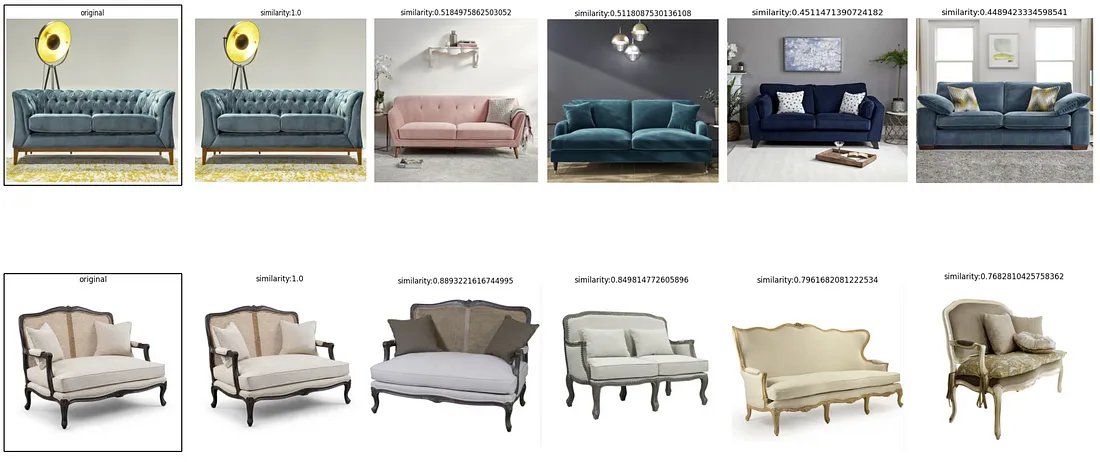

数据包括30张家具的图片,分为3类:桌子、沙发和架子(每种有10张图片)。然而,为了增加一些复杂性,我决定将每个类别分成两个子主题。下图显示了这一点:

桌子图像

沙发图像

架子图像

结果

使用我在Python类中内置的功能,可以通过一行代码来执行相似性搜索。用户只需要提供“目标”图像的文件路径(即我想要比较嵌入图像数据集的图像),并指定返回的前“n”最相似的图像:

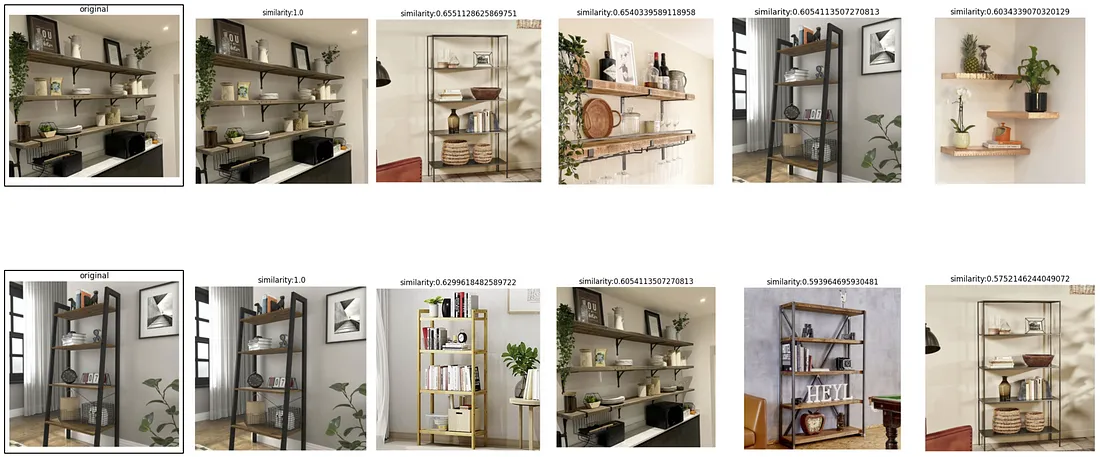

ImgSim.similar_images("C:/Users/jdoe/Projects/Image_Similarity/data/all_img/table_1.jpg", n=5)下面展示了几个例子,我从类别中的每个子主题中选择了第一张图像,并要求前5张最相似的图像。将指定的目标图像标记为“原始”,前5张图像按照相似度递减的顺序排列:

两个桌子主题示例

两个沙发主题示例

两个架子主题的实力

上面显示了一个开箱即用的CNN模型的惊人的好结果,没有针对这个特定的用例(识别家具)进行训练。

1. 桌子:该模型正确识别最相似的图像,有效地区分餐桌和咖啡桌

2. 沙发:该模型正确识别最相似的图像,有效区分沙发在某些上下文中的特征与沙发是图像中唯一物体的图像

3. 架子:这个类别的结果不那么友好。希望能够区分壁挂式货架和独立式货架,然而,输出的结果有一些重叠

图像聚类

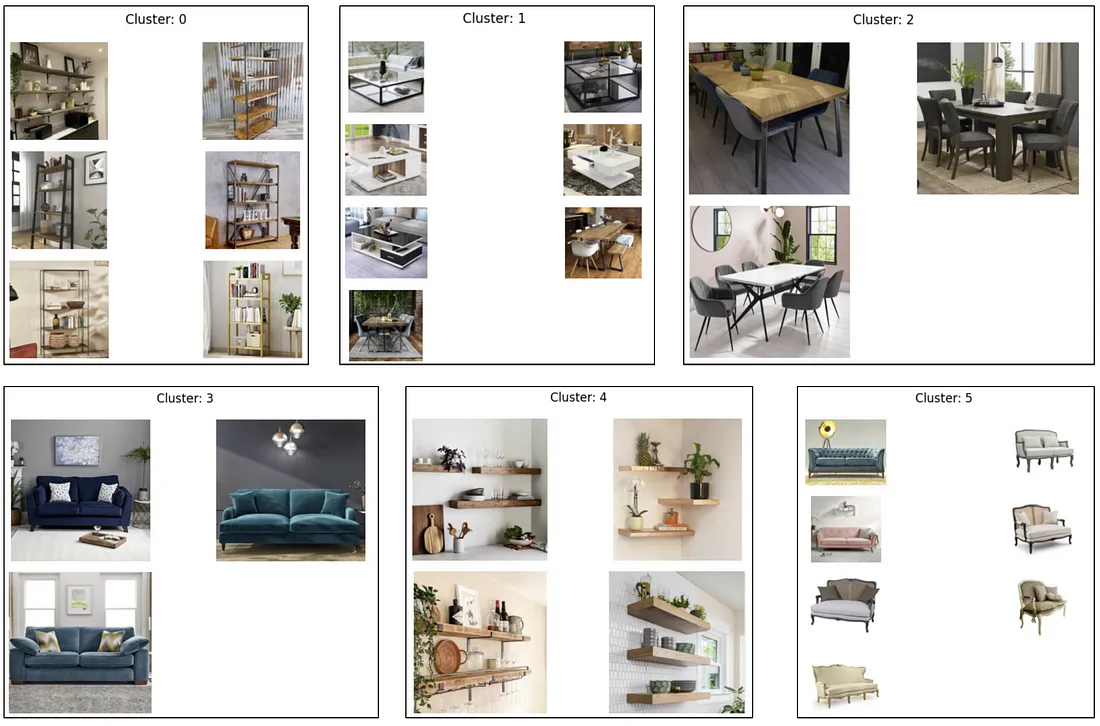

最后,我想测试类中包含的聚类功能的结果。考虑到我的数据集可以大致分为6个桶(3个类别,每个类别有2个子主题),我开始查看当我将KMeans 聚类数量设置为6时的结果。

虽然无监督聚类的结果并不糟糕,但它们确实有很大的改进空间。从上面你可以看到,至少这个模型已经能够区分三个主要的家具组,桌子、沙发和架子的图像,都属于不同的集群。

然而,考虑到在这三个类别中,每一个类别都有两个不同的子主题(例如,咖啡与餐桌,独立式与壁挂式货架,有背景的沙发与没有背景的沙发),希望这6个类别能够清晰地形成,但遗憾的是事实并非如此。

总结

总的来说,利用预训练神经网络获得的结果是有希望的。虽然仍然有一些改进可以做,考虑到使用的ResNet权重来自已经在ImageNet数据集上预训练的模型,它已经适应得很好了。

改善这些结果可能需要对CNN进行特定领域数据的定制训练——在这个例子中是不同家具的图像。对ResNet模型(或其他支持的CNN)进行微调,以完成图像分类等任务,将使模型更加适合于识别代表我们类的关键特征的特定特征——例如,独立式架子的腿与壁挂式架子的腿。

然而,考虑到这个项目的灵感是利用图像相似性来识别存档数据以支持构建训练数据集,我相信所开发的功能提供了一个合适的起点。

来源:https://medium.com/@f.a.reid/image-similarity-using-feature-embeddings-357dc01514f8

欢迎关注ATYUN官方公众号

商务合作及内容投稿请联系邮箱:bd@atyun.com

热门企业

热门职位

写评论取消

回复取消