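Comparing LLMs with MLflow

In large language model operations (LLMOps), comparing models is just as important as it is in machine learning operations (MLOps), but how to go about it can be less clear. In "traditional" machine learning, it is often enough to compare models on a set of well-defined numerical metrics, and the model with the better score wins. With LLMs this is often not the case, even though many benchmarks exist that capture and quantify various aspects of model performance.

Choosing the best LLM can involve model characteristics that are subjective, or at least hard to quantify. Some practitioners call this giving the model a "vibe check." Models' responses may differ in tone or level of detail, or in how correct they are across different areas of expertise.

One fairly simple requirement of any LLMOps platform, then, is the ability to directly compare the outputs of different models on the same prompts. This is one of the things MLflow makes possible for LLMOps.

In this post, we will walk through using one of MLflow's core LLMOps features, the mlflow.evaluate() function, to compare several small (<1B parameter) open source text generation models. We use small models so that the comparison is easy to try out without worrying much about provisioning enough cloud resources to run the examples. Be aware, though, that the outputs of these small models are not always very coherent or relevant.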

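The models

We will compare some of the most-downloaded text generation models on Hugging Face with fewer than one billion parameters: gpt2-large (774M parameters), bloom-560m (560M parameters), and distilgpt2 (82M parameters).

Defining the models

First, we install the required Python packages (in this case, into a fresh virtual environment).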

pip install transformers accelerate torch mlflow xformers

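Then we set up each model as a transformers pipeline and wrap it in a pyfunc-compatible model wrapper. This step is necessary because mlflow.evaluate, the main MLflow LLMOps tool we will be using, expects its first argument to be a pyfunc model (in this post, a URI pointing to a logged one). At this stage we also give the pyfunc model some generation configuration that controls the number of new tokens to generate, whether to use sampling, the sampling parameters, and so on. When setting up the model, we can also pass in some examples for few-shot prompting.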

import mlflow
import pandas as pd
from transformers import (
    AutoTokenizer,
    AutoModelForCausalLM,
    pipeline,
    GenerationConfig,
)


class PyfuncTransformer(mlflow.pyfunc.PythonModel):
    """PyfuncTransformer is a class that extends the mlflow.pyfunc.PythonModel class
    and is used to create a custom MLflow model for text generation using Transformers.
    """

    def __init__(self, model_name, gen_config_dict=None, examples=""):
        """
        Initializes a new instance of the PyfuncTransformer class.

        Args:
            model_name (str): The name of the pre-trained Transformer model to use.
            gen_config_dict (dict): A dictionary of generation configuration parameters.
            examples: examples for multi-shot prompting, prepended to the input.
        """
        self.model_name = model_name
        self.gen_config_dict = (
            gen_config_dict if gen_config_dict is not None else {}
        )
        self.examples = examples
        super().__init__()

    def load_context(self, context):
        """
        Loads the model and tokenizer using the specified model_name.

        Args:
            context: The MLflow context.
        """
        tokenizer = AutoTokenizer.from_pretrained(self.model_name)
        model = AutoModelForCausalLM.from_pretrained(
            self.model_name,
            # device_map="auto"
            # make the device CPU
            device_map="cpu",
        )

        # Create a custom GenerationConfig
        gcfg = GenerationConfig.from_model_config(model.config)
        for key, value in self.gen_config_dict.items():
            if hasattr(gcfg, key):
                setattr(gcfg, key, value)

        # Apply the GenerationConfig to the model's config
        model.config.update(gcfg.to_dict())

        self.model = pipeline(
            "text-generation",
            model=model,
            tokenizer=tokenizer,
            return_full_text=False,
        )

    def predict(self, context, model_input):
        """
        Generates text based on the provided model_input using the loaded model.

        Args:
            context: The MLflow context.
            model_input: The input used for generating the text.

        Returns:
            list: A list of generated texts.
        """
        if isinstance(model_input, pd.DataFrame):
            model_input = model_input.values.flatten().tolist()
        elif not isinstance(model_input, list):
            model_input = [model_input]

        generated_text = []
        for input_text in model_input:
            output = self.model(
                self.examples + input_text, return_full_text=False
            )
            generated_text.append(
                output[0]["generated_text"],
            )

        return generated_text
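
One detail of load_context is worth a closer look: the loop only copies keys that already exist as attributes on the generation config, so a misspelled key is dropped without any error. The sketch below reproduces that logic in isolation. StandInConfig is a hypothetical stand-in for the real GenerationConfig, and apply_overrides is an illustrative helper that is not part of the code above; unlike the original loop, it also reports which keys were skipped.

```python
class StandInConfig:
    """Hypothetical stand-in for transformers.GenerationConfig (illustration only)."""

    def __init__(self):
        self.max_new_tokens = 20
        self.do_sample = False


def apply_overrides(config, overrides):
    """Copy keys the config already has; return the keys that were skipped."""
    skipped = []
    for key, value in overrides.items():
        if hasattr(config, key):
            setattr(config, key, value)
        else:
            skipped.append(key)
    return skipped


cfg = StandInConfig()
# The second key is deliberately misspelled.
skipped = apply_overrides(cfg, {"max_new_tokens": 10, "max_new_tokns": 5})
print(cfg.max_new_tokens, skipped)
```

Returning the skipped keys is one way to surface typos in a generation configuration before they quietly change what is being compared.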

Now we can instantiate the models:

gcfg = {
    "max_length": 180,
    "max_new_tokens": 10,
    "do_sample": False,
}

example = (
    "Q: Are elephants larger than mice?\nA: Yes.\n\n"
    "Q: Are mice carnivorous?\nA: No, mice are typically omnivores.\n\n"
    "Q: What is the average lifespan of an elephant?\nA: The average lifespan of an elephant in the wild is about 60 to 70 years.\n\n"
    "Q: Is Mount Everest the highest mountain in the world?\nA: Yes.\n\n"
    "Q: Which city is known as the 'City of Love'?\nA: Paris is often referred to as the 'City of Love'.\n\n"
    "Q: What is the capital of Australia?\nA: The capital of Australia is Canberra.\n\n"
    "Q: Who wrote the novel '1984'?\nA: The novel '1984' was written by George Orwell.\n\n"
)

bloom560 = PyfuncTransformer(
    "bigscience/bloom-560m",
    gen_config_dict=gcfg,
    examples=example,
)

gpt2large = PyfuncTransformer(
    "gpt2-large",
    gen_config_dict=gcfg,
    examples=example,
)

distilgpt2 = PyfuncTransformer(
    "distilgpt2",
    gen_config_dict=gcfg,
    examples=example,
)

Logging the models in MLflow

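Next, we log the models in MLflow. Model logging in MLflow is essentially version control for machine learning models: it supports reproducibility by recording the model along with details of its environment. Model logging also lets us track (and compare) model versions, so we can go back to older models and see what effect changes to a model had.

To log the models defined above, we will take the following steps: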

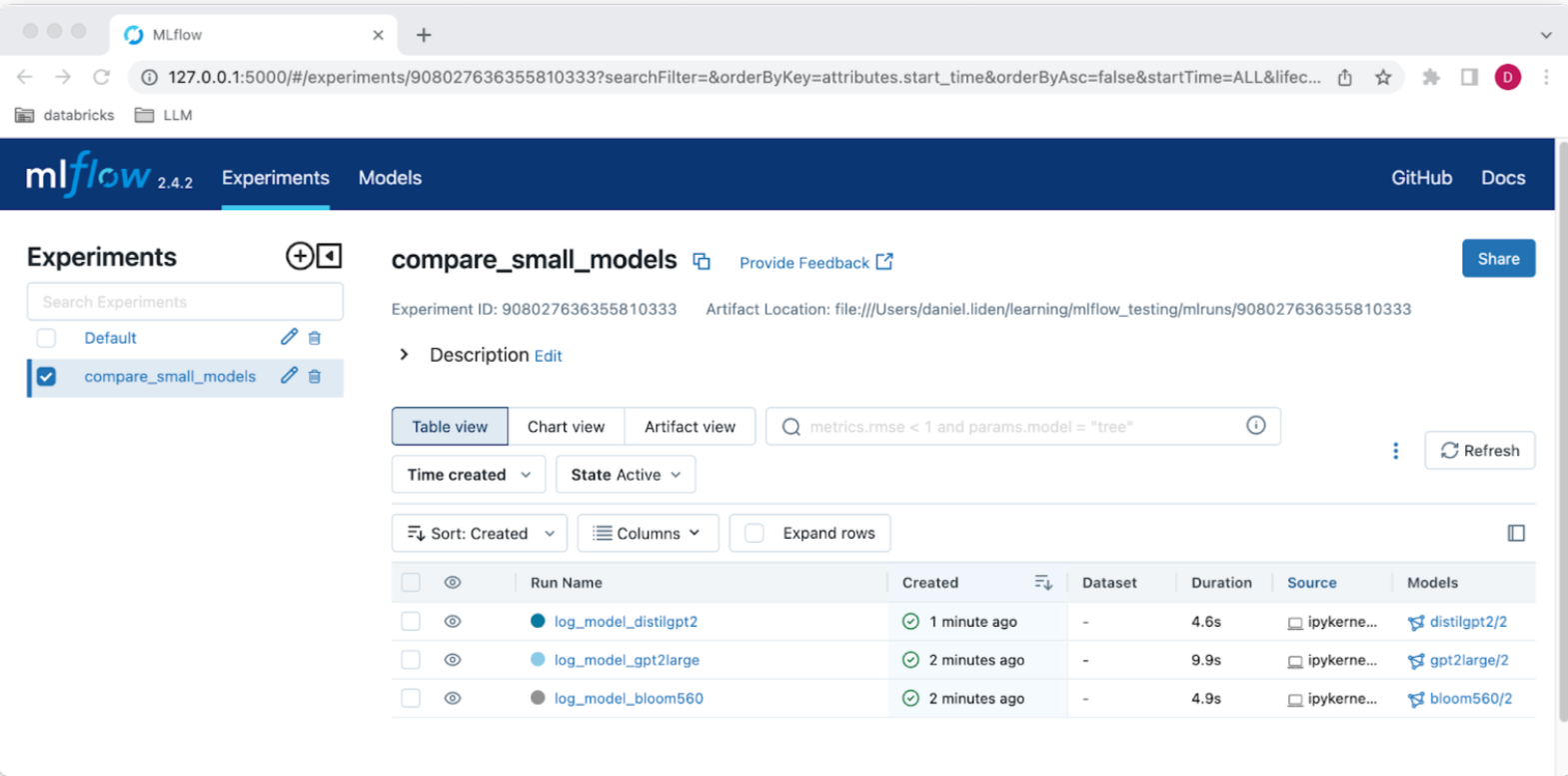

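1. Set up an MLflow experiment. Experiments are useful for organizing related runs. Since we are directly comparing a set of models here, it makes sense to keep them all in the same experiment.

2. Log each model in a separate MLflow run. Make sure to record the run ID and artifact path, because we will need them later to compare the models.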

mlflow.set_experiment(experiment_name="compare_small_models")

run_ids = []
artifact_paths = []
model_names = ["bloom560", "gpt2large", "distilgpt2"]

for model, name in zip([bloom560, gpt2large, distilgpt2], model_names):
    with mlflow.start_run(run_name=f"log_model_{name}"):
        pyfunc_model = model
        artifact_path = f"models/{name}"
        mlflow.pyfunc.log_model(
            artifact_path=artifact_path,
            python_model=pyfunc_model,
            input_example="Q: What color is the sky?\nA:",
        )
        run_ids.append(mlflow.active_run().info.run_id)
        artifact_paths.append(artifact_path)

The code above creates an experiment named compare_small_models and logs each model in its own MLflow run. At this point you can inspect the models in the MLflow UI, which you can launch with the mlflow ui command.
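
The run IDs and artifact paths collected during logging matter because together they identify each logged model. The evaluation step in this post combines them into a URI of the form runs:/<run_id>/<artifact_path>. Here is a minimal sketch of that string construction; runs_uri is an illustrative helper name, and the run ID is a made-up placeholder (real IDs are generated by MLflow).

```python
def runs_uri(run_id, artifact_path):
    """Build a runs:/ model URI from a run ID and an artifact path."""
    return f"runs:/{run_id}/{artifact_path}"


# Placeholder run ID for illustration only.
example_run_id = "0123456789abcdef"
print(runs_uri(example_run_id, "models/bloom560"))
```

Keeping run_ids and artifact_paths in parallel lists, as the logging loop does, means the i-th entry of each list always refers to the same model.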

Comparing the LLMs with MLflow

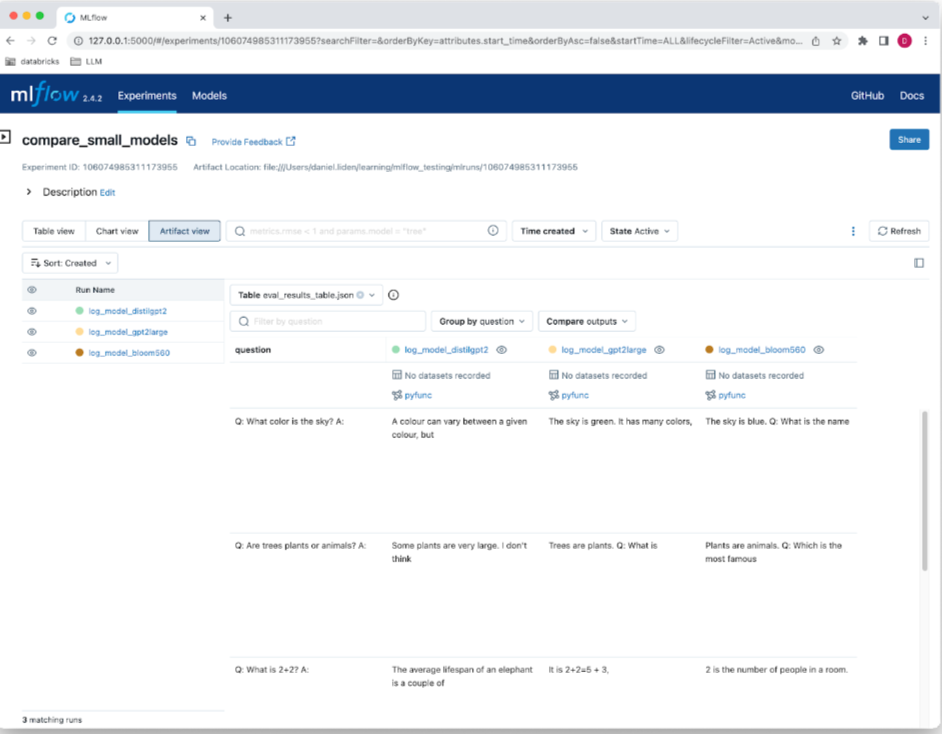

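With the models logged in MLflow, we can move on to comparing them. First we need an evaluation dataset made up of the inputs we want to compare the models on. We will define a Pandas DataFrame containing these inputs, which are the prompts we will send to each language model.

It is worth pausing to consider what we actually want to compare. Do we care most about which model (or models) answers correctly? Or are we more interested in general coherence, perhaps to see which model offers the best starting point for fine-tuning or domain adaptation? We will put together an evaluation dataset of questions from a few different domains, with the aim of seeing which model returns the most coherent answers.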

eval_df = pd.DataFrame(
    {
        "question": [
            "Q: What color is the sky?\nA:",
            "Q: Are trees plants or animals?\nA:",
            "Q: What is 2+2?\nA:",
            "Q: Who is Darth Vader?\nA:",
            "Q: What is your favorite color?\nA:",
        ]
    }
)

print(eval_df)
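
Recall that the wrapper prepends the few-shot examples to every question before generation, so the model never sees a question on its own. The sketch below shows what the assembled prompt looks like for one question. It uses a shortened copy of the examples to keep the output readable, and the variable names few_shot, question, and prompt are chosen here for illustration.

```python
# Shortened few-shot block (the post uses a longer one).
few_shot = (
    "Q: Are elephants larger than mice?\nA: Yes.\n\n"
    "Q: Are mice carnivorous?\nA: No, mice are typically omnivores.\n\n"
)
question = "Q: What color is the sky?\nA:"

# Same concatenation as `self.examples + input_text` in predict().
prompt = few_shot + question
print(prompt)
```

Because every question ends with "A:", the model is nudged to continue with an answer in the same style as the examples.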

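Now that we have logged our models and set up our dataset, it's time to evaluate! We will loop over the models once more. This time, we reopen the run in which each model was logged and evaluate the model in that same run.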

for i in range(3):
    with mlflow.start_run(
        run_id=run_ids[i]
    ):  # reopen the run with the stored run ID
        evaluation_results = mlflow.evaluate(
            model=f"runs:/{run_ids[i]}/{artifact_paths[i]}",
            model_type="text",
            data=eval_df,
        )

Now we can compare the outputs of the different models directly. We can load the results as a Pandas DataFrame with mlflow.load_table('eval_results_table.json'), or view them in the UI by navigating to the artifacts view.

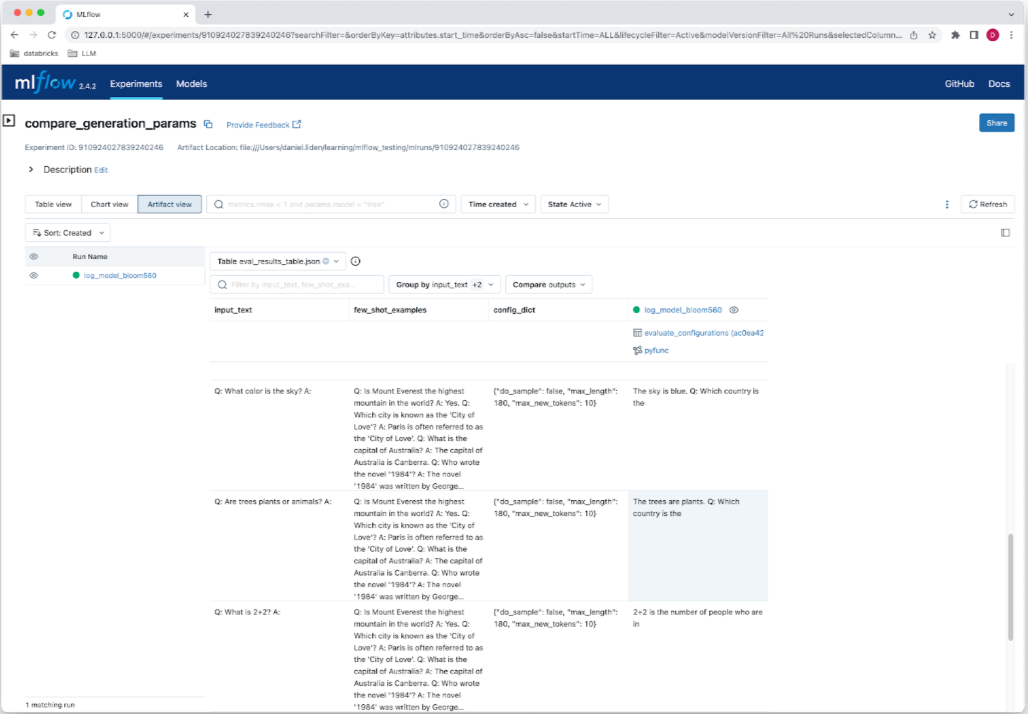
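
If you prefer to line the outputs up outside the UI, one option is to group them by prompt so that each prompt maps to one output per model. The sketch below is plain Python and does not call MLflow. The (model, prompt, output) row layout is an assumption made for illustration rather than the schema of the evaluation table, side_by_side is a hypothetical helper, and the outputs are dummy placeholder strings, not real generations.

```python
from collections import defaultdict

# Placeholder rows: (model name, prompt, output). Outputs are dummy strings.
rows = [
    ("bloom560", "Q: What is 2+2?\nA:", "<bloom560 output>"),
    ("gpt2large", "Q: What is 2+2?\nA:", "<gpt2large output>"),
    ("distilgpt2", "Q: What is 2+2?\nA:", "<distilgpt2 output>"),
]


def side_by_side(rows):
    """Group outputs by prompt so each prompt maps to {model: output}."""
    table = defaultdict(dict)
    for model, prompt, output in rows:
        table[prompt][model] = output
    return dict(table)


for prompt, outputs in side_by_side(rows).items():
    print(repr(prompt))
    for model, output in outputs.items():
        print(f"  {model}: {output}")
```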

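Logging generation parameters with mlflow.evaluate()

There are many different ways to use MLflow for evaluation in LLMOps. We can modify the approach above to accept generation configuration parameters at inference time, so that we can compare the same inputs under many different generation configurations and keep track of those configurations in the evaluation table. All that needs to change is the structure of the pyfunc model's input. Instead of accepting only a prompt, the modified model accepts a prompt, a set of examples (so that we can try different few-shot examples), and a set of generation configuration parameters.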

import json


class PyfuncTransformerWithParams(mlflow.pyfunc.PythonModel):
    """PyfuncTransformerWithParams is a class that extends the mlflow.pyfunc.PythonModel
    class and is used to create a custom MLflow model for text generation using
    Transformers. Few-shot examples and generation parameters are supplied per input row.
    """

    def __init__(self, model_name):
        """
        Initializes a new instance of the PyfuncTransformerWithParams class.

        Args:
            model_name (str): The name of the pre-trained Transformer model to use.
        """
        self.model_name = model_name
        super().__init__()

    def load_context(self, context):
        """
        Loads the model and tokenizer using the specified model_name.

        Args:
            context: The MLflow context.
        """
        tokenizer = AutoTokenizer.from_pretrained(self.model_name)
        model = AutoModelForCausalLM.from_pretrained(
            self.model_name, device_map="auto"
        )

        self.model = pipeline(
            "text-generation",
            model=model,
            tokenizer=tokenizer,
            return_full_text=False,
        )

    def predict(self, context, model_input):
        """
        Generates text based on the provided model_input using the loaded model.

        Args:
            context: The MLflow context.
            model_input: The input used for generating the text. Each record holds
                "input_text", "few_shot_examples", and "config_dict" (a JSON string).

        Returns:
            list: A list of generated texts.
        """
        if isinstance(model_input, pd.DataFrame):
            model_input = model_input.to_dict(orient="records")
        elif not isinstance(model_input, list):
            model_input = [model_input]

        generated_text = []
        for record in model_input:
            input_text = record["input_text"]
            few_shot_examples = record["few_shot_examples"]
            config_dict = record["config_dict"]

            # Update the GenerationConfig attributes with the provided config_dict
            gcfg = GenerationConfig.from_model_config(self.model.model.config)
            for key, value in json.loads(config_dict).items():
                if hasattr(gcfg, key):
                    setattr(gcfg, key, value)

            output = self.model(
                few_shot_examples + input_text,
                generation_config=gcfg,
                return_full_text=False,
            )
            generated_text.append(output[0]["generated_text"])

        return generated_text
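
Each record that predict receives now carries three fields, and the generation parameters travel as a JSON string that is decoded with json.loads. The sketch below shows one such record and the round trip, without loading any model. The specific values are illustrative; storing the configuration as a string is presumably done so that it fits in a single DataFrame cell, which is an interpretation rather than something the code states.

```python
import json

record = {
    "input_text": "Q: What color is the sky?\nA:",
    "few_shot_examples": "Q: Are elephants larger than mice?\nA: Yes.\n\n",
    # Serialized to a string so the whole config sits in one cell.
    "config_dict": json.dumps({"do_sample": False, "max_new_tokens": 10}),
}

# Mirrors what predict() does for each row.
prompt = record["few_shot_examples"] + record["input_text"]
overrides = json.loads(record["config_dict"])

print(type(record["config_dict"]).__name__, overrides)
```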

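Next, in a new experiment, we will consider a single model with a range of different generation parameters and examples. We will use the following data: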

few_shot_examples_1 = (
    "Q: Are elephants larger than mice?\nA: Yes.\n\n"
    "Q: Are mice carnivorous?\nA: No, mice are typically omnivores.\n\n"
    "Q: What is the average lifespan of an elephant?\nA: The average lifespan of an elephant in the wild is about 60 to 70 years.\n\n"
)

few_shot_examples_2 = (
    "Q: Is Mount Everest the highest mountain in the world?\nA: Yes.\n\n"
    "Q: Which city is known as the 'City of Love'?\nA: Paris is often referred to as the 'City of Love'.\n\n"
    "Q: What is the capital of Australia?\nA: The capital of Australia is Canberra.\n\n"
    "Q: Who wrote the novel '1984'?\nA: The novel '1984' was written by George Orwell.\n\n"
)

config_dict1 = {
    "do_sample": True,
    "top_k": 10,
    "max_length": 180,
    "max_new_tokens": 10,
}

config_dict2 = {"do_sample": False, "max_length": 180, "max_new_tokens": 10}

few_shot_examples = [few_shot_examples_1, few_shot_examples_2]
config_dicts = [config_dict1, config_dict2]

questions = [
    "Q: What color is the sky?\nA:",
    "Q: Are trees plants or animals?\nA:",
    "Q: What is 2+2?\nA:",
    "Q: Who is Darth Vader?\nA:",
    "Q: What is your favorite color?\nA:",
]

# Each question appears once per few-shot set; the i-th few-shot set is
# paired with the i-th generation config.
data = {
    "input_text": questions * len(few_shot_examples),
    "few_shot_examples": [
        example for example in few_shot_examples for _ in range(len(questions))
    ],
    "config_dict": [
        json.dumps(config)
        for config in config_dicts
        for _ in range(len(questions))
    ],
}

eval_df = pd.DataFrame(data)
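
Note how the rows are laid out: the first few-shot set is always paired with the first configuration and the second with the second, giving 10 rows rather than every combination. The sketch below reproduces that pairing with short stand-in strings and contrasts it with a full cross product built with itertools.product, which is an alternative not used in this post. The names paired and crossed are chosen here for illustration.

```python
from itertools import product

examples = ["examples_1", "examples_2"]  # stand-ins for the few-shot strings
configs = ["config_1", "config_2"]       # stand-ins for the config dicts
questions = ["q1", "q2", "q3", "q4", "q5"]

# Pairing used above: the i-th example set goes with the i-th config.
paired = [(q, e, c) for e, c in zip(examples, configs) for q in questions]

# Alternative: every example set combined with every config.
crossed = [(q, e, c) for e, c in product(examples, configs) for q in questions]

print(len(paired), len(crossed))
```

With the pairing, a difference between the two groups of rows could come from either the examples or the configuration; the cross product separates the two effects at the cost of twice as many generations.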

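We can then evaluate our model on this dataset with the same process as before: define the model, log the model, and run mlflow.evaluate. Because the dataset contains more model inputs this time, we will see more fields in the evaluation results.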

# Define the pyfunc model
bloom560_with_params = PyfuncTransformerWithParams(
    "bigscience/bloom-560m",
)

mlflow.set_experiment(experiment_name="compare_generation_params")

model_name = "bloom560"

with mlflow.start_run(run_name=f"log_model_{model_name}"):
    # Define an input example. Values are wrapped in lists so that pandas
    # builds a one-row DataFrame.
    input_example = pd.DataFrame(
        {
            "input_text": ["Q: What color is the sky?\nA:"],
            # 'example' is the few-shot string defined earlier in this post
            "few_shot_examples": [example],
            # JSON string with no overrides, since predict() calls json.loads on it
            "config_dict": [json.dumps({})],
        }
    )

    # Define the artifact_path
    artifact_path = f"models/{model_name}"

    # Log the data
    eval_data = mlflow.data.from_pandas(eval_df, name="evaluate_configurations")

    # Log the model
    mod = mlflow.pyfunc.log_model(
        artifact_path=artifact_path,
        python_model=bloom560_with_params,
        input_example=input_example,
    )

    # Define the model_uri
    model_uri = f"runs:/{mlflow.active_run().info.run_id}/{artifact_path}"

    # Evaluate the model
    mlflow.evaluate(model=model_uri, model_type="text", data=eval_data)

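You will notice that we made one more change this time: we saved the evaluation data as an MLflow dataset with eval_data = mlflow.data.from_pandas(eval_df, name="evaluate_configurations") and then referenced that dataset in the evaluate() call, explicitly associating the dataset with the evaluation. The dataset information can later be retrieved from the run if needed, so we do not lose track of the data used in the evaluation.

Conclusion

This post walked through the basics of comparing LLM outputs with mlflow.evaluate(..., model_type="text"). mlflow.evaluate provides a powerful framework for comparing LLMs and LLM configurations and for tracking these experiments over time. It helps make prompt engineering and LLM selection more methodical and more empirically grounded.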