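Automated Hyperparameter Optimization with Optuna: Unlocking Efficient Tuning

Introduction

Hyperparameter optimization is an essential part of developing machine learning models. It is the process of finding the set of hyperparameters that gives a model the best performance and generalization. Choosing good hyperparameters is hard: it usually means trying many combinations and measuring the effect of each on model performance, which is slow and computationally expensive. Fortunately, tools such as Optuna have emerged as powerful ways to simplify and automate the process. In this article we look at why hyperparameter optimization matters, what Optuna does, its key features, and how to apply it to make model development more efficient and effective.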

Optuna: efficient, automated hyperparameter optimization that unlocks a model's full potential.

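Why Hyperparameter Optimization Matters

Hyperparameters are settings of a machine learning model that are fixed before training rather than learned from the data. They shape the model's behavior, performance, and generalization. The right choice can turn an underperforming model into an excellent one. Common hyperparameters include the learning rate, batch size, number of layers in a neural network, and regularization strength.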
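To make the distinction concrete, here is a minimal sketch using scikit-learn's LogisticRegression. The dataset sizes and the value C=0.5 are arbitrary choices for illustration, not recommendations.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Small synthetic dataset (sizes chosen only for illustration)
X, y = make_classification(n_samples=200, n_features=5, random_state=0)

# C (inverse regularization strength) is a hyperparameter: we pick it before training
model = LogisticRegression(C=0.5, max_iter=1000)
model.fit(X, y)

# coef_ and intercept_ are parameters: they are learned from the data during fit()
print("hyperparameter C:", model.C)
print("learned coefficient matrix shape:", model.coef_.shape)
```

Changing C and refitting yields different learned coefficients, which is exactly the effect a hyperparameter search explores.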

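Tuning hyperparameters by hand is tedious and time-consuming. It usually involves trial and error plus a deep understanding of the model and the data. Automated tools such as Optuna offer a more efficient and systematic approach.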

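Introducing Optuna

Optuna is an open-source Python library for hyperparameter optimization. Developed by the Japanese company Preferred Networks, it searches for the best hyperparameters automatically by optimizing a user-defined objective function. It is designed to be user-friendly and to work with many machine learning frameworks, such as scikit-learn, PyTorch, TensorFlow, and XGBoost.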

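Key Features of Optuna

Bayesian optimization: Optuna explores the hyperparameter space efficiently with Bayesian optimization techniques; its default sampler is the Tree-structured Parzen Estimator (TPE). It models the relationship between hyperparameters and the objective value and uses that model to decide which configuration to try next. This typically converges faster and finds better results than random or grid search.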

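Extensibility: Optuna lets users define custom search spaces, optimization algorithms, and pruning strategies. This flexibility makes it suitable for a wide range of machine learning tasks and models.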

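Parallelization: Optuna supports parallel execution, so several configurations can be evaluated at the same time. This can greatly reduce the wall-clock time of a hyperparameter search.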

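Integration: Optuna works with popular machine learning libraries, including scikit-learn, PyTorch, and TensorFlow, so hyperparameter optimization can be added to an existing workflow with little friction.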

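How Optuna Works

Optuna operates in three steps:

Define the search space: The user specifies the range and type of each hyperparameter to optimize, such as integer, floating-point, or categorical values. This defines the space Optuna will explore.

Define the objective function: The objective function is the core of the optimization. It evaluates model performance for a given set of hyperparameters. Optuna minimizes or maximizes this function, depending on the optimization goal.

Optimize: Optuna uses Bayesian optimization to sample hyperparameter configurations. It evaluates each configuration and updates its probabilistic model, concentrating the search on promising regions. The process continues until the trial or time budget you set is exhausted.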

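Benefits of Using Optuna

Efficiency: Optuna significantly reduces the time and resources needed for hyperparameter optimization. Its search strategy and parallelization help it converge faster.

Improved performance: By exploring the hyperparameter space systematically, Optuna raises the chance of finding a strong configuration, which in turn improves model performance.

Automation: Optuna automates the tedious work of hyperparameter tuning, so data scientists and machine learning engineers can focus on model development and problem solving instead of manual adjustment.

Reproducibility: Because the search is defined in code rather than done by hand, a tuning run can be rerun and shared easily, which improves the reproducibility of machine learning experiments.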

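Code

Below is a complete Python example that uses Optuna to tune the hyperparameters of two machine learning models, a random forest and XGBoost. We optimize both models on synthetic data and compare their results. We also generate plots to visualize the optimization process.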

!pip install optuna

import optuna

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from sklearn.datasets import make_classification

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestClassifier

from xgboost import XGBClassifier

from sklearn.metrics import accuracy_score

# Generate synthetic data

X, y = make_classification(n_samples=1000, n_features=20, random_state=42)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Define objective functions for Random Forest and XGBoost

def rf_objective(trial):
    # Search space: number of trees and (log-scaled) maximum tree depth
    n_estimators = trial.suggest_int('n_estimators', 10, 100)
    max_depth = trial.suggest_int('max_depth', 2, 32, log=True)
    model = RandomForestClassifier(n_estimators=n_estimators, max_depth=max_depth, random_state=42)
    model.fit(X_train, y_train)
    y_pred = model.predict(X_test)
    # Objective value: accuracy on the held-out split
    return accuracy_score(y_test, y_pred)

def xgb_objective(trial):
    # Search space: number of boosting rounds, tree depth, and learning rate
    n_estimators = trial.suggest_int('n_estimators', 10, 100)
    max_depth = trial.suggest_int('max_depth', 2, 10)
    # suggest_float(..., log=True) replaces the deprecated suggest_loguniform
    learning_rate = trial.suggest_float('learning_rate', 1e-5, 1, log=True)
    model = XGBClassifier(n_estimators=n_estimators, max_depth=max_depth, learning_rate=learning_rate, random_state=42)
    model.fit(X_train, y_train)
    y_pred = model.predict(X_test)
    # Objective value: accuracy on the held-out split
    return accuracy_score(y_test, y_pred)

# Optimize hyperparameters for Random Forest

rf_study = optuna.create_study(direction='maximize')

rf_study.optimize(rf_objective, n_trials=50)

# Optimize hyperparameters for XGBoost

xgb_study = optuna.create_study(direction='maximize')

xgb_study.optimize(xgb_objective, n_trials=50)

# Plot the optimization results

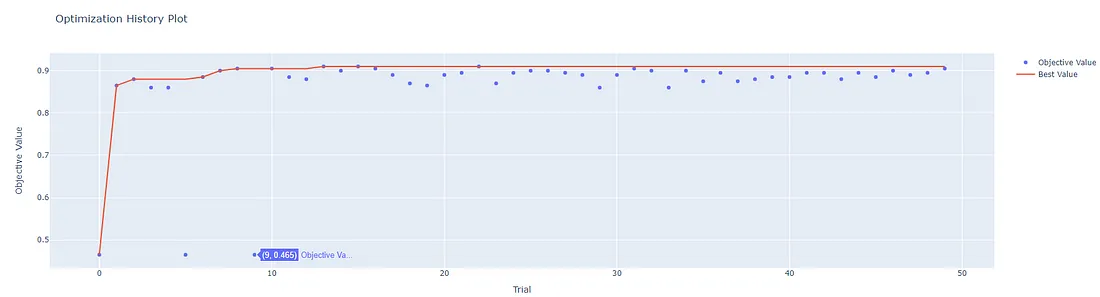

optuna.visualization.plot_optimization_history(rf_study).show()

optuna.visualization.plot_optimization_history(xgb_study).show()

# Get the best hyperparameters for each model

best_rf_params = rf_study.best_params

best_xgb_params = xgb_study.best_params

# Train models with the best hyperparameters

best_rf_model = RandomForestClassifier(n_estimators=best_rf_params['n_estimators'],
                                       max_depth=best_rf_params['max_depth'],
                                       random_state=42)

best_rf_model.fit(X_train, y_train)

best_xgb_model = XGBClassifier(n_estimators=best_xgb_params['n_estimators'],
                               max_depth=best_xgb_params['max_depth'],
                               learning_rate=best_xgb_params['learning_rate'],
                               random_state=42)

best_xgb_model.fit(X_train, y_train)

# Evaluate and compare models

rf_accuracy = best_rf_model.score(X_test, y_test)

xgb_accuracy = best_xgb_model.score(X_test, y_test)

print("Random Forest Model Accuracy:", rf_accuracy)

print("XGBoost Model Accuracy:", xgb_accuracy)

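In this code:

1. We generate synthetic data and split it into training and test sets.

2. We define an objective function for the random forest and one for XGBoost so that Optuna can optimize them. The hyperparameters being tuned are the number of estimators and the maximum depth for both models, plus the learning rate for XGBoost.

3. We create a separate Optuna study for each model and optimize its objective function.

4. We visualize the optimization history with the optuna.visualization.plot_optimization_history function.

5. We read the best hyperparameters for both models from the Optuna studies.

6. We train the models with the best hyperparameters.

7. We evaluate the models on the test data, then print and compare their accuracies.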

[I 2023-10-25 22:07:14,345] A new study created in memory with name: no-name-25c2cdcb-5698-46b8-926c-9a6106d9f65a

[I 2023-10-25 22:07:15,664] Trial 0 finished with value: 0.87 and parameters: {'n_estimators': 55, 'max_depth': 4}. Best is trial 0 with value: 0.87.

[I 2023-10-25 22:07:17,344] Trial 1 finished with value: 0.9 and parameters: {'n_estimators': 61, 'max_depth': 7}. Best is trial 1 with value: 0.9.

[I 2023-10-25 22:07:18,499] Trial 2 finished with value: 0.89 and parameters: {'n_estimators': 62, 'max_depth': 8}. Best is trial 1 with value: 0.9.

[I 2023-10-25 22:07:20,011] Trial 3 finished with value: 0.905 and parameters: {'n_estimators': 92, 'max_depth': 19}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:07:20,496] Trial 4 finished with value: 0.865 and parameters: {'n_estimators': 54, 'max_depth': 3}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:07:20,643] Trial 5 finished with value: 0.84 and parameters: {'n_estimators': 13, 'max_depth': 2}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:07:21,134] Trial 6 finished with value: 0.86 and parameters: {'n_estimators': 49, 'max_depth': 2}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:07:21,722] Trial 7 finished with value: 0.86 and parameters: {'n_estimators': 49, 'max_depth': 2}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:07:22,904] Trial 8 finished with value: 0.855 and parameters: {'n_estimators': 65, 'max_depth': 2}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:07:24,268] Trial 9 finished with value: 0.9 and parameters: {'n_estimators': 76, 'max_depth': 26}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:07:26,291] Trial 10 finished with value: 0.9 and parameters: {'n_estimators': 100, 'max_depth': 28}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:07:28,099] Trial 11 finished with value: 0.895 and parameters: {'n_estimators': 99, 'max_depth': 12}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:07:32,032] Trial 12 finished with value: 0.905 and parameters: {'n_estimators': 81, 'max_depth': 16}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:07:33,852] Trial 13 finished with value: 0.895 and parameters: {'n_estimators': 82, 'max_depth': 15}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:07:35,212] Trial 14 finished with value: 0.895 and parameters: {'n_estimators': 85, 'max_depth': 17}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:07:36,981] Trial 15 finished with value: 0.9 and parameters: {'n_estimators': 91, 'max_depth': 32}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:07:37,653] Trial 16 finished with value: 0.87 and parameters: {'n_estimators': 34, 'max_depth': 20}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:07:38,900] Trial 17 finished with value: 0.895 and parameters: {'n_estimators': 71, 'max_depth': 11}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:07:40,528] Trial 18 finished with value: 0.905 and parameters: {'n_estimators': 89, 'max_depth': 21}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:07:41,763] Trial 19 finished with value: 0.895 and parameters: {'n_estimators': 75, 'max_depth': 12}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:07:42,860] Trial 20 finished with value: 0.875 and parameters: {'n_estimators': 40, 'max_depth': 22}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:07:45,823] Trial 21 finished with value: 0.9 and parameters: {'n_estimators': 84, 'max_depth': 21}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:07:47,671] Trial 22 finished with value: 0.905 and parameters: {'n_estimators': 92, 'max_depth': 16}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:07:48,348] Trial 23 finished with value: 0.9 and parameters: {'n_estimators': 91, 'max_depth': 24}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:07:49,109] Trial 24 finished with value: 0.905 and parameters: {'n_estimators': 80, 'max_depth': 31}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:07:49,877] Trial 25 finished with value: 0.9 and parameters: {'n_estimators': 93, 'max_depth': 18}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:07:50,307] Trial 26 finished with value: 0.89 and parameters: {'n_estimators': 69, 'max_depth': 9}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:07:50,929] Trial 27 finished with value: 0.905 and parameters: {'n_estimators': 87, 'max_depth': 14}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:07:51,582] Trial 28 finished with value: 0.9 and parameters: {'n_estimators': 100, 'max_depth': 23}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:07:51,968] Trial 29 finished with value: 0.885 and parameters: {'n_estimators': 77, 'max_depth': 6}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:07:52,103] Trial 30 finished with value: 0.875 and parameters: {'n_estimators': 15, 'max_depth': 19}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:07:53,071] Trial 31 finished with value: 0.9 and parameters: {'n_estimators': 94, 'max_depth': 15}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:07:53,780] Trial 32 finished with value: 0.905 and parameters: {'n_estimators': 89, 'max_depth': 16}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:07:54,477] Trial 33 finished with value: 0.9 and parameters: {'n_estimators': 95, 'max_depth': 27}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:07:55,627] Trial 34 finished with value: 0.9 and parameters: {'n_estimators': 84, 'max_depth': 19}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:07:56,204] Trial 35 finished with value: 0.9 and parameters: {'n_estimators': 70, 'max_depth': 13}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:07:56,635] Trial 36 finished with value: 0.885 and parameters: {'n_estimators': 62, 'max_depth': 10}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:07:57,930] Trial 37 finished with value: 0.9 and parameters: {'n_estimators': 79, 'max_depth': 16}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:07:58,961] Trial 38 finished with value: 0.89 and parameters: {'n_estimators': 56, 'max_depth': 7}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:08:00,117] Trial 39 finished with value: 0.9 and parameters: {'n_estimators': 97, 'max_depth': 13}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:08:00,770] Trial 40 finished with value: 0.905 and parameters: {'n_estimators': 89, 'max_depth': 24}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:08:01,350] Trial 41 finished with value: 0.905 and parameters: {'n_estimators': 81, 'max_depth': 31}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:08:01,908] Trial 42 finished with value: 0.905 and parameters: {'n_estimators': 73, 'max_depth': 30}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:08:02,484] Trial 43 finished with value: 0.9 and parameters: {'n_estimators': 79, 'max_depth': 26}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:08:02,917] Trial 44 finished with value: 0.895 and parameters: {'n_estimators': 65, 'max_depth': 20}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:08:03,564] Trial 45 finished with value: 0.905 and parameters: {'n_estimators': 88, 'max_depth': 17}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:08:04,431] Trial 46 finished with value: 0.9 and parameters: {'n_estimators': 96, 'max_depth': 27}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:08:04,974] Trial 47 finished with value: 0.9 and parameters: {'n_estimators': 82, 'max_depth': 22}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:08:05,421] Trial 48 finished with value: 0.89 and parameters: {'n_estimators': 57, 'max_depth': 18}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:08:06,060] Trial 49 finished with value: 0.9 and parameters: {'n_estimators': 86, 'max_depth': 14}. Best is trial 3 with value: 0.905.

[I 2023-10-25 22:08:06,069] A new study created in memory with name: no-name-f6cbfaac-9671-442f-b2a7-aceb5cbc7ee8

Random Forest Model Accuracy: 0.905

XGBoost Model Accuracy: 0.91

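Make sure the required libraries (scikit-learn, XGBoost, Optuna) are installed before running this code. The example covers hyperparameter optimization, visualization, and model comparison for a random forest and XGBoost, and you can adapt it to other models and datasets as needed.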

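Conclusion

Hyperparameter optimization is a crucial step in machine learning model development. Done by hand, it is challenging, time-consuming, and computationally expensive. With its Bayesian optimization and user-friendly interface, Optuna offers an elegant solution to this problem. By automating the hyperparameter search, it makes model development more efficient and effective. As machine learning continues to evolve, tools like Optuna will become increasingly important for speeding up the development and deployment of state-of-the-art models.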