Deep Deterministic Policy Gradient (DDPG): Bridging the Gap Between Continuous Action Spaces and Reinforcement Learning

Introduction

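Reinforcement learning (RL) has made significant progress in recent years. Much of that progress comes from algorithms that let agents learn from and adapt to their environments.

A key challenge in RL is handling continuous action spaces, which arise in many real-world applications such as controlling joint torques or steering angles. Value-based algorithms such as Q-learning and its deep variant DQN choose actions by maximizing over all possible actions. When the action set is small and discrete, this is a simple lookup. When actions are continuous, it becomes an optimization problem of its own at every step.

Deep Deterministic Policy Gradient (DDPG) addresses this challenge by letting RL agents act efficiently in continuous action spaces. This article covers DDPG's basic principles, properties, and applications to explain its importance in reinforcement learning.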

Foundations of DDPG

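DDPG is an actor-critic algorithm that combines the strengths of policy-based and value-based methods. It was introduced by Timothy P. Lillicrap et al. in the 2016 paper "Continuous control with deep reinforcement learning".

DDPG extends the DQN architecture, which is known for its success with high-dimensional state spaces. Its key innovation is adapting DQN to continuous action spaces. Instead of maximizing the Q-function over all actions, DDPG learns a separate network that outputs the action directly.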
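The difference between DQN and DDPG is easiest to see in the critic's regression target. The equations below follow the DDPG paper's notation:

- $\theta^Q$ and $\theta^\mu$ are the critic and actor parameters.
- Primes mark the target networks.
- $N$ is the minibatch size.
- $d_i \in \{0, 1\}$ is a terminal flag. The paper's pseudocode omits this mask; adding it is common practice.

The $\max_{a'}$ in the DQN target is a lookup over a finite action set, but an inner optimization problem when actions are continuous. DDPG therefore replaces it with the target actor's output and trains the actor with the deterministic policy gradient.

$$
\begin{aligned}
y_i^{\mathrm{DQN}} &= r_i + \gamma (1 - d_i) \max_{a'} Q'(s_{i+1}, a') \\
y_i^{\mathrm{DDPG}} &= r_i + \gamma (1 - d_i)\, Q'\big(s_{i+1}, \mu'(s_{i+1} \mid \theta^{\mu'}) \mid \theta^{Q'}\big) \\
L(\theta^Q) &= \frac{1}{N} \sum_{i} \big(y_i - Q(s_i, a_i \mid \theta^Q)\big)^2 \\
\nabla_{\theta^\mu} J &\approx \frac{1}{N} \sum_{i} \nabla_a Q(s, a \mid \theta^Q)\big|_{s = s_i,\, a = \mu(s_i)} \, \nabla_{\theta^\mu} \mu(s \mid \theta^\mu)\big|_{s = s_i}
\end{aligned}
$$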

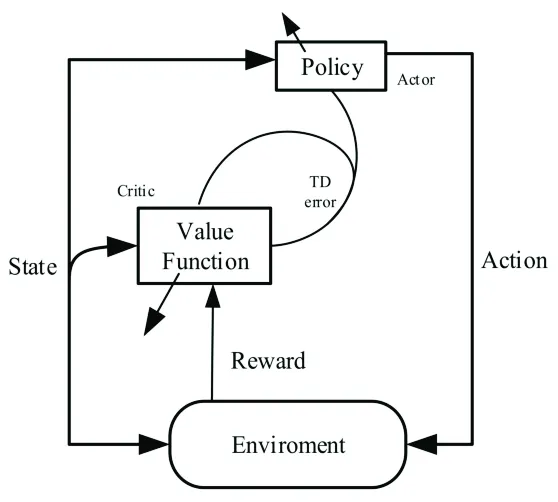

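1. Actor-critic architecture: The DDPG agent uses two neural networks, an actor and a critic.
   - The actor network maps the current state to an action.
   - The critic network evaluates that action by estimating the action-value function Q(s, a). It is trained from observed transitions, much as in DQN.
   - The actor is trained to maximize expected return by shifting its output in the direction that increases the critic's Q-value.

   Exploration is not provided by this pair of networks; it comes from noise added to the actor's actions (see item 3).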

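2. Target networks: DDPG borrows the idea of target networks from DQN to stabilize training. It keeps a target actor and a target critic that slowly track the main networks through soft updates, θ' ← τθ + (1 - τ)θ', with τ ≪ 1 (the paper uses τ = 0.001). The critic's regression targets are computed with these slowly changing networks, so the targets do not shift with every gradient step. This mitigates the divergence that can otherwise occur during training.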

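3. Ornstein-Uhlenbeck noise: The learned policy is deterministic, so it does not explore on its own. To encourage exploration, DDPG adds noise from an Ornstein-Uhlenbeck (OU) process to the actor's actions during training.

   The OU process is a mean-reverting stochastic process whose successive samples are temporally correlated. The authors chose it for efficient exploration in physical control problems with inertia. The noise helps the agent try actions beyond its current policy instead of settling prematurely on a poor one.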
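For item 1, here is a toy NumPy sketch of how the critic drives the actor through a deterministic policy gradient step. It is an illustration under stated assumptions, not the article's implementation:

- The critic is fixed and known: Q(s, a) = -(a - 2s)^2, so the best action is a = 2s.
- The actor is a hypothetical linear policy mu(s) = w * s.

The actor never sees the optimum directly. It only follows dQ/da multiplied by dmu/dw, which is the chain rule at the core of DDPG's actor update.

```python
import numpy as np

def critic_grad_wrt_action(states, actions):
    """dQ/da for the hypothetical fixed critic Q(s, a) = -(a - 2s)^2."""
    return -2.0 * (actions - 2.0 * states)

def train_linear_actor(steps=500, lr=0.1, seed=0):
    rng = np.random.default_rng(seed)
    w = 0.0  # actor parameter: mu(s) = w * s
    for _ in range(steps):
        states = rng.uniform(-1.0, 1.0, size=64)  # a minibatch of states
        actions = w * states                       # deterministic actions from the current actor
        # Deterministic policy gradient: mean of dQ/da * dmu/dw, where dmu/dw = s
        grad_w = np.mean(critic_grad_wrt_action(states, actions) * states)
        w += lr * grad_w                           # gradient ascent on Q(s, mu(s))
    return w

w = train_linear_actor()
print(round(w, 3))  # prints 2.0, the coefficient of the critic's best action a = 2s
```

In DDPG proper, the critic is itself a network trained on replayed transitions, and the gradient dQ/da is obtained by automatic differentiation rather than by hand.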
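For item 2, here is a minimal NumPy sketch of the soft target update. It assumes the parameters are stored as a list of arrays. The default `tau=0.001` is the paper's value; the function name `soft_update` is our own.

```python
import numpy as np

def soft_update(target_params, online_params, tau=0.001):
    """Return theta' <- tau * theta + (1 - tau) * theta' for every parameter array."""
    return [tau * o + (1.0 - tau) * t for t, o in zip(target_params, online_params)]

# Illustration: the online weights are held fixed at one, the target starts at zero
online = [np.ones((2, 2)), np.zeros(2)]
target = [np.zeros((2, 2)), np.zeros(2)]
for _ in range(1000):
    target = soft_update(target, online)
# After k updates toward a fixed online network, the remaining gap is (1 - tau)^k
print(round(float(target[0][0, 0]), 4))  # prints 0.6323
```

With tau = 0.001, the target closes only about 63% of the gap after 1000 updates. This slow tracking is what keeps the critic's targets from moving with every gradient step.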
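For item 3, here is a small sketch of an OU noise generator. It uses the common Euler discretization:

x_{t+1} = x_t + theta * (mu - x_t) * dt + sigma * sqrt(dt) * eps_t

The defaults theta = 0.15 and sigma = 0.2 are the values reported in the DDPG paper. The class name and the autocorrelation check are illustrative. Comparing the lag-1 autocorrelation with that of white Gaussian noise shows what "temporally correlated" means in practice.

```python
import numpy as np

class OrnsteinUhlenbeckNoise:
    def __init__(self, size, mu=0.0, theta=0.15, sigma=0.2, dt=1.0, seed=None):
        self.mu, self.theta, self.sigma, self.dt = mu, theta, sigma, dt
        self.rng = np.random.default_rng(seed)
        self.x = np.full(size, mu, dtype=float)

    def reset(self):
        # Restart the process at its mean, e.g. at the start of each episode
        self.x[:] = self.mu

    def sample(self):
        # Mean-reverting drift plus a Gaussian kick
        dx = self.theta * (self.mu - self.x) * self.dt
        dx += self.sigma * np.sqrt(self.dt) * self.rng.standard_normal(self.x.shape)
        self.x = self.x + dx
        return self.x.copy()

def lag1_autocorr(x):
    return float(np.corrcoef(x[:-1], x[1:])[0, 1])

ou = OrnsteinUhlenbeckNoise(size=1, seed=0)
ou_samples = np.array([ou.sample()[0] for _ in range(10_000)])
white = np.random.default_rng(0).normal(0.0, 0.2, size=10_000)
print(lag1_autocorr(ou_samples))  # roughly 0.85, i.e. 1 - theta * dt
print(lag1_autocorr(white))       # roughly 0
```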

Properties of DDPG

DDPG has several notable properties that make it a strong choice for a range of reinforcement learning applications:

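1. Handling continuous action spaces: DDPG's most notable property is its ability to handle continuous action spaces. Value-based methods such as DQN struggle when actions are not drawn from a small, finite set, because they must maximize over all actions. DDPG uses a deterministic policy that maps a state directly to a real-valued action vector, so producing a continuous action takes a single forward pass through the actor.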

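2. Sample efficiency: Compared with on-policy methods such as vanilla policy gradient, DDPG is relatively sample-efficient. Because it is off-policy, it can store past transitions in a replay buffer and reuse them many times, which speeds up learning. This is especially valuable in real-world settings where collecting data is expensive or time-consuming.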

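3. Stable training: Target networks help stabilize DDPG's training. By keeping the critic's targets from changing too quickly, they reduce oscillation and the risk of divergence, so training converges more reliably than it would without them. In practice, DDPG nevertheless remains sensitive to hyperparameter choices.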

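4. Balancing exploration and exploitation: Adding OU noise to the actions during training helps balance exploration and exploitation. The agent keeps exploring the action space while relying more and more on the policy it has learned. At evaluation time, the noise is usually switched off and the deterministic actor is used as is.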
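For item 1, the article's code example gives the actor a `tanh` output layer, whose values lie in [-1, 1]. To cover an arbitrary action box [low, high], that output can be rescaled affinely. The sketch below assumes the Pendulum-v1 torque range [-2, 2] and uses made-up pre-activation values for illustration.

```python
import numpy as np

def scale_action(squashed, low, high):
    """Affinely map a tanh output in [-1, 1] to the box [low, high]."""
    return low + (squashed + 1.0) * 0.5 * (high - low)

low, high = np.array([-2.0]), np.array([2.0])  # e.g. Pendulum-v1 torque bounds
raw = np.array([[-10.0], [0.0], [0.5]])         # hypothetical actor pre-activations
actions = scale_action(np.tanh(raw), low, high)
print(actions.ravel())  # every value lies inside [-2, 2]; tanh(0) = 0 maps to the midpoint 0
```

For asymmetric bounds such as [0, 1] (a throttle, for instance), the same function maps -1 to 0 and 1 to 1. For symmetric bounds, it reduces to multiplying by `high`.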
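For item 2, off-policy reuse rests on the experience replay buffer. Transitions collected by older versions of the policy are stored and repeatedly resampled uniformly at random. In the minimal sketch below, the class and method names are our own, and the capacity and batch size are arbitrary.

```python
import random
from collections import deque

import numpy as np

class ReplayBuffer:
    def __init__(self, capacity, seed=None):
        self.buffer = deque(maxlen=capacity)  # the oldest transitions are evicted first
        self.rng = random.Random(seed)

    def add(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Uniform sampling breaks the correlation between consecutive time steps
        batch = self.rng.sample(self.buffer, batch_size)
        states, actions, rewards, next_states, dones = map(np.array, zip(*batch))
        return (states, actions, rewards.reshape(-1, 1), next_states,
                dones.reshape(-1, 1).astype(np.float32))

    def __len__(self):
        return len(self.buffer)

buf = ReplayBuffer(capacity=100, seed=0)
for t in range(250):  # more transitions than the buffer can hold
    buf.add(np.array([t, t]), np.array([0.1 * t]), -float(t), np.array([t + 1, t + 1]), False)
s, a, r, s2, d = buf.sample(32)
print(len(buf), s.shape, r.shape)  # prints 100 (32, 2) (32, 1)
```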
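For item 3, the target networks enter training through the critic's regression target:

y = r + gamma * (1 - done) * Q'(s', mu'(s'))

Below is a NumPy sketch of that computation. The `target_actor` and `target_critic` functions are hypothetical stand-ins for the target networks. The sketch also shows a common shape pitfall: if rewards have shape (N,) while the critic returns (N, 1), broadcasting silently produces an (N, N) matrix instead of N targets.

```python
import numpy as np

def td_targets(rewards, dones, next_states, target_actor, target_critic, gamma=0.99):
    """Critic targets y = r + gamma * (1 - done) * Q'(s', mu'(s')), with shape (N, 1)."""
    rewards = np.asarray(rewards, dtype=np.float32).reshape(-1, 1)
    dones = np.asarray(dones, dtype=np.float32).reshape(-1, 1)
    next_q = target_critic(next_states, target_actor(next_states))  # shape (N, 1)
    return rewards + gamma * (1.0 - dones) * next_q

# Hypothetical stand-ins for the target actor and target critic
def target_actor(states):
    return np.tanh(states[:, :1])

def target_critic(states, actions):
    return -(states[:, :1] ** 2) - 0.1 * actions ** 2

next_states = np.array([[0.0, 1.0], [1.0, 0.0], [2.0, 0.5]], dtype=np.float32)
y = td_targets([-1.0, -2.0, -3.0], [0, 0, 1], next_states, target_actor, target_critic)
print(y.shape)  # (3, 1); the last transition is terminal, so its target is just r = -3

# Pitfall: rewards of shape (N,) plus critic values of shape (N, 1) broadcast to (N, N)
bad = np.array([-1.0, -2.0, -3.0]) + 0.99 * target_critic(next_states, target_actor(next_states))
print(bad.shape)  # (3, 3)
```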
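For item 4, one way to realize "explore first, exploit later" is to shrink the exploration noise over training and to act without noise at evaluation time. The linear decay schedule below is an assumption for illustration: the original DDPG used a fixed OU process, and the function names and schedule parameters here are hypothetical. The `noise` argument stands in for any noise sample, OU included.

```python
import numpy as np

def noise_scale(step, start=1.0, end=0.1, decay_steps=10_000):
    """Hypothetical schedule: shrink the noise scale linearly from `start` to `end`."""
    frac = min(step / decay_steps, 1.0)
    return end + (1.0 - frac) * (start - end)

def exploratory_action(policy_action, noise, step, low, high, explore=True):
    """Noisy, clipped action during training; the plain deterministic action otherwise."""
    action = (policy_action + noise_scale(step) * noise) if explore else policy_action
    return np.clip(action, low, high)

low, high = np.array([-2.0]), np.array([2.0])  # e.g. Pendulum-v1 torque bounds
mu_s = np.array([1.5])  # hypothetical actor output for some state
eps = np.array([1.0])   # one hypothetical noise sample
a_early = exploratory_action(mu_s, eps, step=0, low=low, high=high)       # 1.5 + 1.0, clipped to 2.0
a_late = exploratory_action(mu_s, eps, step=20_000, low=low, high=high)   # 1.5 + 0.1 * 1.0
a_eval = exploratory_action(mu_s, eps, step=0, low=low, high=high, explore=False)  # 1.5
print(a_early, a_late, a_eval)
```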

Applications of DDPG

DDPG has been applied in many fields, including robotics, autonomous control, finance, and games. Notable use cases include:

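1. Robotics: DDPG has been used to train robotic agents to perform tasks with continuous, high-dimensional action spaces, such as robotic arm control, locomotion, and manipulation.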

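2. Autonomous vehicles: In autonomous driving and drone control, DDPG has been used to navigate vehicles in continuous, dynamic environments, making decisions such as steering, throttle, and braking.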

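3. Finance: DDPG has shown promise for optimizing trading strategies for financial assets by dynamically adjusting portfolio allocations to market conditions.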

4. Games: DDPG has been used to train agents for video games and simulated environments in which precise, continuous control is essential.

Code Example

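A complete DDPG implementation with data collection and plotting needs several components:
- an environment;
- neural networks for the actor and the critic;
- the DDPG training algorithm;
- code to record and visualize results.

Below is a simplified Python example that uses a Gym environment (Pendulum-v1), TensorFlow for the networks, and Matplotlib for plotting. It is only a basic example; real applications may need tuned hyperparameters and more advanced techniques to get better results.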

import numpy as np

import tensorflow as tf

import gym

import matplotlib.pyplot as plt

from collections import deque # Import the 'deque' data structure

import random # Import the 'random' module for sampling

# Create the environment (you can use any Gym environment)

env = gym.make('Pendulum-v1')

state_dim = env.observation_space.shape[0]

action_dim = env.action_space.shape[0]

# Actor and Critic networks using TensorFlow

def build_actor_network():

actor = tf.keras.Sequential([

tf.keras.layers.Input(shape=(state_dim,)),

tf.keras.layers.Dense(400, activation='relu'),

tf.keras.layers.Dense(300, activation='relu'),

tf.keras.layers.Dense(action_dim, activation='tanh')

])

return actor

def build_critic_network():

critic = tf.keras.Sequential([

tf.keras.layers.Input(shape=(state_dim + action_dim,)),

tf.keras.layers.Dense(400, activation='relu'),

tf.keras.layers.Dense(300, activation='relu'),

tf.keras.layers.Dense(1)

])

return critic

# DDPG Agent

class DDPGAgent:

def __init__(self, buffer_size=10000, gamma=0.99): # Define and set the gamma parameter

self.gamma = gamma # Store the gamma value

self.actor = build_actor_network()

self.critic = build_critic_network()

self.target_actor = build_actor_network()

self.target_critic = build_critic_network()

# Experience replay buffer

self.buffer = deque(maxlen=buffer_size)

# Define target networks with the same weights as the online networks

self.target_actor.set_weights(self.actor.get_weights())

self.target_critic.set_weights(self.critic.get_weights())

# Define optimizer for actor and critic

self.actor_optimizer = tf.keras.optimizers.Adam(learning_rate=0.001)

self.critic_optimizer = tf.keras.optimizers.Adam(learning_rate=0.002)

def add_to_buffer(self, state, action, reward, next_state, done):

self.buffer.append((state, action, reward, next_state, done))

def sample_from_buffer(self, batch_size):

batch = random.sample(self.buffer, batch_size)

states, actions, rewards, next_states, dones = zip(*batch)

return np.array(states), np.array(actions), np.array(rewards), np.array(next_states), np.array(dones)

def actor_loss(self, actor, states, actions):

actor_actions = actor(states)

return -tf.math.reduce_mean(self.target_critic(tf.concat([states, actor_actions], axis=-1)))

def critic_loss(self, critic, target_critic, states, actions, rewards, next_states, dones, gamma):

target_actions = self.target_actor(next_states)

target_Q = target_critic(tf.concat([next_states, target_actions], axis=-1))

target_Q = rewards + (1 - dones) * gamma * target_Q

predicted_Q = critic(tf.concat([states, actions], axis=-1))

return tf.keras.losses.MeanSquaredError()(target_Q, predicted_Q)

def update_target_networks(self, tau):

for target, online in zip([self.target_actor, self.target_critic], [self.actor, self.critic]):

target_weights = target.get_weights()

online_weights = online.get_weights()

new_weights = []

for tw, ow in zip(target_weights, online_weights):

new_weights.append(tw * (1 - tau) + ow * tau)

target.set_weights(new_weights)

def select_action(self, state):

# Convert the state to a NumPy array

state = np.array(state)

# Add batch dimension to the state

state = np.expand_dims(state, axis=0)

# Use the actor network to predict the action

action = self.actor(state)

# Clip the action to the environment's action space range (if needed)

action = np.clip(action, env.action_space.low, env.action_space.high)

return action[0]

def train(self, batch):

states, actions, rewards, next_states, dones = batch

with tf.GradientTape() as tape:

actor_loss = self.actor_loss(self.actor, states, actions)

actor_gradients = tape.gradient(actor_loss, self.actor.trainable_variables)

self.actor_optimizer.apply_gradients(zip(actor_gradients, self.actor.trainable_variables))

with tf.GradientTape() as tape:

critic_loss = self.critic_loss(self.critic, self.target_critic, states, actions, rewards, next_states, dones, self.gamma) # Use self.gamma

critic_gradients = tape.gradient(critic_loss, self.critic.trainable_variables)

self.critic_optimizer.apply_gradients(zip(critic_gradients, self.critic.trainable_variables))

# Update target networks

self.update_target_networks(tau=0.001) # Corrected usage without 'self' as an argument

return actor_loss, critic_loss

# Create the DDPG agent

agent = DDPGAgent()

# Define training parameters

num_episodes = 1000

max_steps = 500

batch_size = 64

# Data for plotting

episode_rewards = []

# Main training loop

for episode in range(num_episodes):

state = env.reset()

episode_reward = 0

for step in range(max_steps):

action = agent.select_action(state)

next_state, reward, done, _ = env.step(action)

agent.add_to_buffer(state, action, reward, next_state, done)

if len(agent.buffer) > batch_size:

batch = agent.sample_from_buffer(batch_size)

agent.train(batch)

state = next_state

episode_reward += reward

if done:

break

episode_rewards.append(episode_reward)

if episode % 10 == 0:

print(f"Episode {episode}, Reward: {episode_reward}")

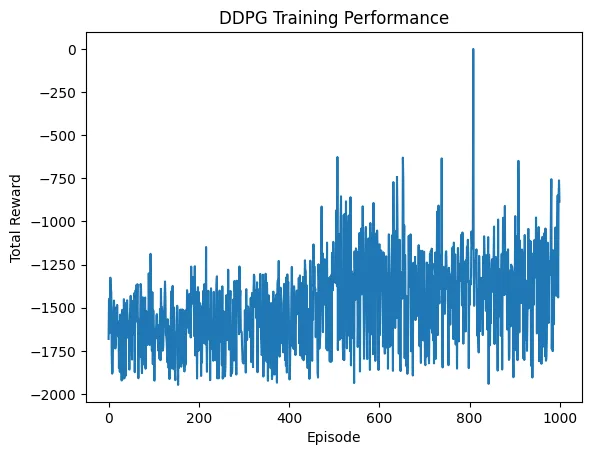

# Plot the rewards

plt.plot(episode_rewards)

plt.xlabel('Episode')

plt.ylabel('Total Reward')

plt.title('DDPG Training Performance')

plt.show()

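Note that this is a simplified DDPG implementation for educational purposes. In practice, you will need to fine-tune the hyperparameters and the exploration noise. Components such as the target network updates, experience replay, and noise injection also need refining to achieve good convergence and performance.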

```text
Episode 0, Reward: -1680.8948963876296
Episode 10, Reward: -1679.5995310661028
Episode 20, Reward: -1606.5684823667889
Episode 30, Reward: -1512.7027887078905
Episode 40, Reward: -1676.5041254870414
Episode 50, Reward: -1487.7886571655226
Episode 60, Reward: -1398.5321690200287
Episode 70, Reward: -1551.9420807262784
Episode 80, Reward: -1486.3573806257755
Episode 90, Reward: -1554.8263679376812
Episode 100, Reward: -1626.2031086536663
Episode 110, Reward: -1748.090455073805
Episode 120, Reward: -1748.9325973864356
Episode 130, Reward: -1815.397089952616
Episode 140, Reward: -1851.6618096208608
Episode 150, Reward: -1640.4318136353127
Episode 160, Reward: -1770.584144941453
Episode 170, Reward: -1833.3219935587158
Episode 180, Reward: -1623.6034643226374
Episode 190, Reward: -1665.2530466311787
Episode 200, Reward: -1408.9315897707427
Episode 210, Reward: -1402.3905855302553
Episode 220, Reward: -1492.498696778396
Episode 230, Reward: -1575.0888791882496
Episode 240, Reward: -1318.1519733134166
Episode 250, Reward: -1690.9066406844872
Episode 260, Reward: -1514.6773317792013
Episode 270, Reward: -1402.8461496077618
Episode 280, Reward: -1465.1098395045676
Episode 290, Reward: -1260.8688433687248
Episode 300, Reward: -1796.0143575950494
Episode 310, Reward: -1531.2343184101258
Episode 320, Reward: -1844.936063494665
Episode 330, Reward: -1874.1536186912906
Episode 340, Reward: -1768.260784763511
Episode 350, Reward: -1812.1616207847326
Episode 360, Reward: -1473.1086455036793
Episode 370, Reward: -1475.9218817489402
Episode 380, Reward: -1684.7238211820204
Episode 390, Reward: -1560.571142399997
Episode 400, Reward: -1653.463864644562
Episode 410, Reward: -1739.1047749465001
Episode 420, Reward: -1538.6847651826101
Episode 430, Reward: -1797.7695370907268
Episode 440, Reward: -1753.385184699908
Episode 450, Reward: -1411.2439738408768
Episode 460, Reward: -1529.6975201567782
Episode 470, Reward: -1529.3672711616964
Episode 480, Reward: -1270.6924999352966
Episode 490, Reward: -1811.122971166864
Episode 500, Reward: -1352.2794767410176
Episode 510, Reward: -1074.376306592394
Episode 520, Reward: -1799.215113417089
Episode 530, Reward: -1114.8987398325082
Episode 540, Reward: -1674.867892414308
Episode 550, Reward: -1417.7894890126722
Episode 560, Reward: -1040.5575650055653
Episode 570, Reward: -1031.9366290130758
Episode 580, Reward: -1009.1584381585435
Episode 590, Reward: -1068.7452936074503
Episode 600, Reward: -1132.6695536883617
Episode 610, Reward: -1649.3662247818313
Episode 620, Reward: -1419.6648128394509
Episode 630, Reward: -1866.03017186529
Episode 640, Reward: -972.867763976454
Episode 650, Reward: -1379.1625350345296
Episode 660, Reward: -1431.522727369365
Episode 670, Reward: -1752.5052691979815
Episode 680, Reward: -1571.8786387127752
Episode 690, Reward: -1169.199621666014
Episode 700, Reward: -1415.4519449991785
Episode 710, Reward: -1822.466464204076
Episode 720, Reward: -1318.4644312000457
Episode 730, Reward: -1414.7454637691387
Episode 740, Reward: -1846.2784374568773
Episode 750, Reward: -1390.9115099028634
Episode 760, Reward: -1498.9023940292273
Episode 770, Reward: -1598.1958680718178
Episode 780, Reward: -1312.1821681827178
Episode 790, Reward: -1373.6441153027827
Episode 800, Reward: -1483.8361337740548
Episode 810, Reward: -1489.298107055303
Episode 820, Reward: -1759.191355938306
Episode 830, Reward: -1202.6665991836596
Episode 840, Reward: -1391.037023586251
Episode 850, Reward: -1217.818059883296
Episode 860, Reward: -1296.4888469664088
Episode 870, Reward: -1332.3981382154534
Episode 880, Reward: -1176.7749026769732
Episode 890, Reward: -1282.8680852206767
Episode 900, Reward: -1342.5245204993857
Episode 910, Reward: -1643.2737877348507
Episode 920, Reward: -1278.685316991944
Episode 930, Reward: -1043.1786265699413
Episode 940, Reward: -1305.3037606182481
Episode 950, Reward: -1202.8848555969596
Episode 960, Reward: -1607.5294672752339
Episode 970, Reward: -1793.96667258336
Episode 980, Reward: -951.5458544987462
Episode 990, Reward: -1035.3949288302485
```

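This log lists the total reward the agent collected in every tenth training episode. The rewards fluctuate over time, showing that the agent's performance varies during training. Some observations from the data: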

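1. Reward progression: Every reward in the log is negative, ranging from about -950 to about -1875. In Pendulum-v1 the per-step reward is a cost that is at most 0, so values closer to 0 are better. The trend neither improves nor worsens consistently, which suggests the agent's performance is sensitive to several factors.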

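2. Exploration versus exploitation: In reinforcement learning, an agent trades off exploration (trying new behavior) against exploitation (using the best-known behavior). The variation in rewards may indicate that the agent is still trying out different ways of solving the task.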

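3. Learning dynamics: The fluctuations suggest that the learning process may be unstable. Part of the variance also comes from the environment itself: Pendulum-v1 starts each episode from a random angle and angular velocity, so episode returns vary even under a fixed policy.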

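4. Training progress: The reward trend shows whether the agent is converging toward a good policy, and a sustained improvement over time would be a positive sign of learning. Several later episodes reach about -950 to -1070 (for example, episodes 560 to 590 and 980 to 990). However, they are interleaved with episodes near -1800, so no clear convergence is visible yet.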

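5. Challenges and opportunities: Sudden drops or spikes in reward may point to specific situations the agent handles particularly badly or well. Analyzing those episodes can give insight into what works and what does not.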

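6. Hyperparameter tuning: Improving the agent's performance may require tuning hyperparameters and adjusting the algorithm. The reward log helps measure the effect of such changes.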
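Single-episode returns are noisy, so a trend is easier to judge from a moving average. The sketch below uses rewards copied from the log above, rounded to two decimals, for episodes 0 to 90 and 900 to 990. The window size is an arbitrary choice, and the helper name `moving_average` is our own.

```python
import numpy as np

def moving_average(values, window=10):
    """Mean of each run of `window` consecutive values; output length is len(values) - window + 1."""
    values = np.asarray(values, dtype=float)
    return np.convolve(values, np.ones(window) / window, mode='valid')

# Logged rewards (rounded) for episodes 0, 10, ..., 90 and 900, 910, ..., 990
early = [-1680.89, -1679.60, -1606.57, -1512.70, -1676.50,
         -1487.79, -1398.53, -1551.94, -1486.36, -1554.83]
late = [-1342.52, -1643.27, -1278.69, -1043.18, -1305.30,
        -1202.88, -1607.53, -1793.97, -951.55, -1035.39]
early_avg = moving_average(early, window=10)
late_avg = moving_average(late, window=10)
print(early_avg, late_avg)

# With the full training history from the code example, e.g.:
# plt.plot(range(49, len(episode_rewards)), moving_average(episode_rewards, window=50))
```

On these two samples the averages come out at about -1564 and -1320. That difference is modest compared with the spread of individual episodes (about -950 to about -1875). Before concluding that the agent is improving, it would help to average over every episode rather than every tenth.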

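Further analysis and context are needed before drawing firm conclusions or making informed decisions about the agent's performance and training process. Depending on the problem and application, other metrics and evaluation methods may be needed to judge how well the agent has learned. Examples include averaging over many episodes or evaluating the policy without exploration noise.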

Conclusion

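In summary, Deep Deterministic Policy Gradient (DDPG) marked significant progress in reinforcement learning. By bridging the gap between RL and continuous action spaces, it opened up new possibilities in robotics, autonomous control, finance, and games. Its ability to handle continuous actions, its sample efficiency, and the stabilizing effect of its target networks make it a valuable tool for researchers and practitioners. As the field evolves, DDPG and the ideas it introduced are likely to remain influential in building intelligent agents for real-world tasks.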