Semantic Search with PostgreSQL and OpenAI Embeddings

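Implementing semantic search over an enterprise database can be challenging and take significant effort. But does it have to be that way? In this article, I'll show how to use PostgreSQL and OpenAI Embeddings to implement semantic search over your data.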

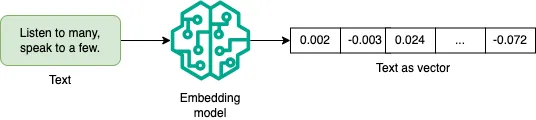

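At a very high level, vector databases combined with LLMs enable semantic search over the data you already have (stored in databases, documents, and so on). Thanks to the paper "Efficient Estimation of Word Representations in Vector Space" (also known as the "Word2Vec paper"), co-authored by the legendary Jeff Dean, we know how to represent words as real-valued vectors. Word embeddings are dense vector representations of words in a vector space, where words with similar meanings lie closer to each other. Embeddings capture semantic relationships between words, and there is more than one technique for creating them.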
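"Closer" is usually measured with cosine similarity: the dot product of two vectors divided by the product of their lengths. The sketch below uses hypothetical, hand-picked 3-dimensional vectors (real models learn hundreds or thousands of dimensions from data) just to show that vectors for related words score higher than vectors for unrelated ones.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: dot(a, b) / (|a| * |b|)."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


# Hypothetical toy "embeddings"; the numbers are made up for illustration only.
toy_embeddings = {
    "car": [0.90, 0.80, 0.10],
    "automobile": [0.85, 0.75, 0.20],
    "banana": [0.10, 0.20, 0.95],
}

for word in ("automobile", "banana"):
    score = cosine_similarity(toy_embeddings["car"], toy_embeddings[word])
    print(f"car vs {word}: {score:.3f}")
```

With these toy values, "automobile" scores far higher against "car" than "banana" does, which is the property semantic search relies on.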

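Let's get hands-on with OpenAI's `text-embedding-ada-002` model! The choice of distance function usually doesn't matter much: OpenAI embeddings are normalized to length 1, so cosine similarity and Euclidean distance produce the same ranking. OpenAI recommends cosine similarity. If you'd rather not use OpenAI embeddings and prefer running a different model locally instead of making API calls, consider one of the SentenceTransformers pretrained models. Choose your model wisely.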
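Why the distance function rarely matters for unit-length vectors: for $\|a\| = \|b\| = 1$, $\|a - b\|^2 = \|a\|^2 + \|b\|^2 - 2\,a \cdot b = 2 - 2\cos(a, b)$, so sorting by Euclidean distance (ascending) gives the same order as sorting by cosine similarity (descending). The sketch below checks this on random unit vectors; the vectors are random test data, not real embeddings.

```python
import math
import random


def normalize(v: list[float]) -> list[float]:
    """Scale a vector to unit length (OpenAI embeddings already come normalized)."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def euclidean(a: list[float], b: list[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


random.seed(0)
dim = 8
query = normalize([random.gauss(0, 1) for _ in range(dim)])
docs = [normalize([random.gauss(0, 1) for _ in range(dim)]) for _ in range(20)]

# For unit vectors, cosine similarity reduces to the dot product.
by_cosine = sorted(range(len(docs)), key=lambda i: dot(query, docs[i]), reverse=True)
by_euclid = sorted(range(len(docs)), key=lambda i: euclidean(query, docs[i]))
print(by_cosine == by_euclid)

# The identity behind it: |a - b|^2 == 2 - 2 * cos(a, b) for unit vectors.
for d in docs:
    assert math.isclose(euclidean(query, d) ** 2, 2 - 2 * dot(query, d), abs_tol=1e-9)
```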

```python
import os

import openai

# This code targets the legacy openai<1.0 SDK: openai.Embedding and
# openai.embeddings_utils were removed in openai 1.0.
from openai.embeddings_utils import cosine_similarity

openai.api_key = os.getenv("OPENAI_API_KEY")


def get_embedding(text: str) -> list:
    """Return the embedding vector of `text` computed by text-embedding-ada-002."""
    response = openai.Embedding.create(
        input=text,
        model="text-embedding-ada-002",
    )
    return response["data"][0]["embedding"]


good_ride = "good ride"
good_ride_embedding = get_embedding(good_ride)
print(good_ride_embedding)
# [0.0010935445316135883, -0.01159335020929575, 0.014949149452149868, -0.029251709580421448, -0.022591838613152504, 0.006514389533549547, -0.014793967828154564, -0.048364896327257156, -0.006336577236652374, -0.027027441188693047, ...]

print(len(good_ride_embedding))
# 1536
```

Now that we understand what an embedding is, let's use it to rank some reviews.

```python
good_ride_review_1 = "I really enjoyed the trip! The ride was incredibly smooth, the pick-up location was convenient, and the drop-off point was right in front of the coffee shop."
good_ride_review_1_embedding = get_embedding(good_ride_review_1)
print(cosine_similarity(good_ride_review_1_embedding, good_ride_embedding))
# 0.8300454513797334

good_ride_review_2 = "The drive was exceptionally comfortable. I felt secure throughout the journey and greatly appreciated the on-board entertainment, which allowed me to have some fun while the car was in motion."
good_ride_review_2_embedding = get_embedding(good_ride_review_2)
print(cosine_similarity(good_ride_review_2_embedding, good_ride_embedding))
# 0.821774476808789

bad_ride_review = "A sudden hard brake at the intersection really caught me off guard and stressed me out. I wasn't prepared for it. Additionally, I noticed some trash left in the cabin from a previous rider."
bad_ride_review_embedding = get_embedding(bad_ride_review)
print(cosine_similarity(bad_ride_review_embedding, good_ride_embedding))
# 0.7950041130579355
```

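The absolute differences may look small (about 0.83 and 0.82 for the positive reviews versus 0.80 for the negative one), but imagine a ranking function over thousands of reviews. There, we could use these scores to surface only the most positive reviews at the top.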
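A minimal sketch of such a ranking function, assuming the review embeddings have already been computed (for example with `get_embedding` above). `top_k_reviews` is a hypothetical helper, and the 3-dimensional vectors are made-up stand-ins so the example runs without an API key.

```python
import heapq
import math


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def top_k_reviews(query_embedding, reviews, k=2):
    """Return the k (score, text) pairs most similar to the query, best first.

    `reviews` is a list of (text, embedding) pairs. heapq.nlargest keeps only
    k candidates at a time, which helps when there are thousands of reviews.
    """
    scored = ((cosine_similarity(query_embedding, emb), text) for text, emb in reviews)
    return heapq.nlargest(k, scored, key=lambda pair: pair[0])


# Hypothetical toy embeddings standing in for real 1536-dimensional ones.
query = [0.9, 0.1, 0.0]  # plays the role of "good ride"
reviews = [
    ("smooth ride, convenient pick-up", [0.8, 0.2, 0.1]),
    ("comfortable drive, fun entertainment", [0.7, 0.3, 0.1]),
    ("hard braking and a dirty cabin", [0.1, 0.2, 0.9]),
]

top = top_k_reviews(query, reviews, k=2)
for score, text in top:
    print(f"{score:.3f}  {text}")
```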

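Once words or documents are converted into embeddings, they can be stored in a database. However, storing them does not by itself turn the database into a vector database. Only when the database supports fast operations on vectors can we properly call it a vector database.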
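To make the distinction concrete, here is a sketch using Python's built-in `sqlite3` purely as an illustration (it is not part of the original setup). SQLite can store embeddings, here serialized as JSON text, but it has no vector type, distance operator, or vector index, so every query has to fetch all rows and score them in application code. The toy vectors are hypothetical.

```python
import json
import math
import sqlite3


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


# In-memory database that merely *stores* embeddings as JSON strings.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE reviews (text TEXT, embedding TEXT)")
conn.executemany(
    "INSERT INTO reviews (text, embedding) VALUES (?, ?)",
    [
        ("smooth ride", json.dumps([0.8, 0.2, 0.1])),
        ("dirty cabin", json.dumps([0.1, 0.2, 0.9])),
    ],
)


def search(query_embedding, limit=1):
    """Full scan: fetch every row and score it in Python (O(rows x dimensions) per query)."""
    rows = conn.execute("SELECT text, embedding FROM reviews").fetchall()
    scored = [(cosine_similarity(query_embedding, json.loads(emb)), text) for text, emb in rows]
    scored.sort(reverse=True)
    return [text for _, text in scored[:limit]]


print(search([0.9, 0.1, 0.0]))
```

This works for a handful of rows, but the cost grows linearly with the table size; a vector database moves the distance computation into the engine and can use specialized indexes instead.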

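There are plenty of commercial and open-source vector databases, and the topic is widely discussed. I'll demonstrate what a vector database can do using pgvector, an open-source extension that adds vector similarity search to PostgreSQL, one of the most popular databases.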

Let's run a PostgreSQL container with pgvector:

```shell
docker pull ankane/pgvector
docker run --env "POSTGRES_PASSWORD=postgres" --name "postgres-with-pgvector" --publish 5432:5432 --detach ankane/pgvector
```

Let's start pgcli to connect to the database (`pgcli postgres://postgres:postgres@localhost:5432`), create a table, insert the embeddings we computed above, and then select similar items:

```sql
-- Enable the pgvector extension.
CREATE EXTENSION vector;

-- Create a vector column with 1536 dimensions.
-- The `text-embedding-ada-002` model produces 1536-dimensional embeddings.
CREATE TABLE reviews (text TEXT, embedding vector(1536));

-- Insert the three reviews from above. I truncated the embeddings for your convenience.
INSERT INTO reviews (text, embedding) VALUES ('I really enjoyed the trip! The ride was incredibly smooth, the pick-up location was convenient, and the drop-off point was right in front of the coffee shop.', '[-0.00533589581027627, -0.01026702206581831, 0.021472081542015076, -0.04132508486509323, ...');
INSERT INTO reviews (text, embedding) VALUES ('The drive was exceptionally comfortable. I felt secure throughout the journey and greatly appreciated the on-board entertainment, which allowed me to have some fun while the car was in motion.', '[0.0001858668401837349, -0.004922827705740929, 0.012813017703592777, -0.041855424642562866, ...');
INSERT INTO reviews (text, embedding) VALUES ('A sudden hard brake at the intersection really caught me off guard and stressed me out. I was not prepared for it. Additionally, I noticed some trash left in the cabin from a previous rider.', '[0.00191772251855582, -0.004589076619595289, 0.004269456025213003, -0.0225954819470644, ...');

-- Sanity check.
SELECT count(1) FROM reviews;
-- +-------+
-- | count |
-- |-------|
-- | 3     |
-- +-------+
```
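Pasting 1536 numbers into SQL by hand is impractical. The sketch below shows a hypothetical helper, `to_pgvector_literal`, that serializes a Python list into pgvector's text input format (`'[x1,x2,...]'`), plus a parameterized `INSERT` statement. The `%s` placeholder style assumes a psycopg-style driver, which the original setup does not use; the explicit `::vector` cast makes the text-to-vector conversion unambiguous.

```python
def to_pgvector_literal(embedding: list[float]) -> str:
    """Serialize floats into pgvector's text input format, e.g. '[0.1,-0.2,0.3]'."""
    # repr() gives the shortest string that round-trips to the same float.
    return "[" + ",".join(repr(float(x)) for x in embedding) + "]"


# Parameterized INSERT; %s placeholders assume a psycopg-style driver.
INSERT_SQL = "INSERT INTO reviews (text, embedding) VALUES (%s, %s::vector)"

literal = to_pgvector_literal([0.0010935445316135883, -0.01159335020929575, 0.5])
print(literal)
```

With a real connection, the parameters for each row would be `(review_text, to_pgvector_literal(get_embedding(review_text)))`. Letting the driver bind values this way also avoids manually escaping apostrophes in review text.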

We're now ready to search for similar documents. Again, I shortened the embedding of "good ride", because printing all 1536 dimensions would be excessive.

```sql
-- The embedding used here is for "good ride".
-- `<->` is pgvector's Euclidean (L2) distance operator. Because OpenAI embeddings
-- are unit-length, it ranks rows the same way as cosine distance (`<=>`).
SELECT substring(text, 0, 80) FROM reviews ORDER BY embedding <-> '[0.0010935445316135883, -0.01159335020929575, 0.014949149452149868, -0.029251709580421448, ...';
-- +--------------------------------------------------------------------------+
-- | substring                                                                |
-- |--------------------------------------------------------------------------|
-- | I really enjoyed the trip! The ride was incredibly smooth, the pick-u... |
-- | The drive was exceptionally comfortable. I felt secure throughout the... |
-- | A sudden hard brake at the intersection really caught me off guard an... |
-- +--------------------------------------------------------------------------+
-- SELECT 3
-- Time: 0.024s
```

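That's it! As you can see, we computed embeddings for several documents, stored them in the database, and ran a vector similarity search. The potential applications are broad, from enterprise search to features in medical record systems that identify patients with similar symptoms. Moreover, this approach is not limited to text: similarity can also be computed for other kinds of data, such as audio, video, and images.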