Introducing Llama Packs: A Hub of Prepackaged Modules

Published November 24, 2023 by alex


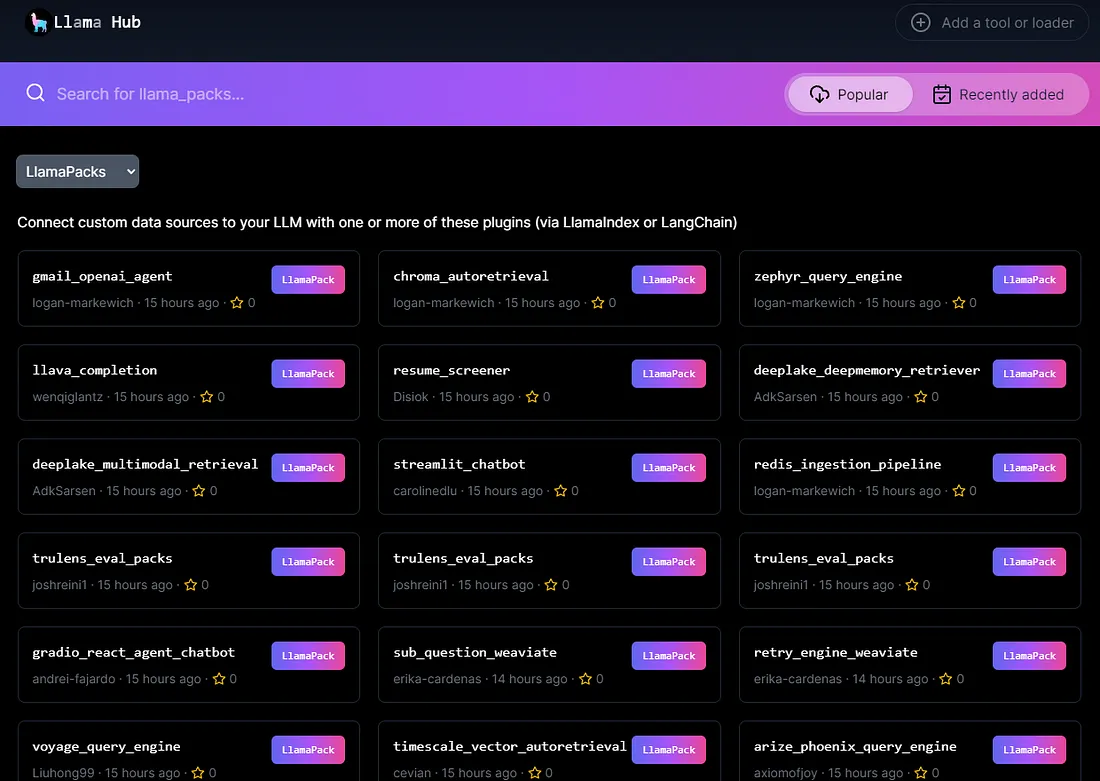

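Llama Packs are a community-driven hub of prepackaged modules that you can use to kickstart your large language model (LLM) application. You can import them for a wide range of use cases, from building a Streamlit app, to setting up advanced retrieval over Weaviate, to a resume parser that performs structured data extraction. Just as importantly, you can inspect these modules and customize them to your liking.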

Overview

Llama Packs can be described in two ways:

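- On one hand, they are prepackaged modules that can be initialized with parameters and run out of the box to achieve a given use case (whether that is a full RAG (retrieval-augmented generation) pipeline, an application template, or something else). You can also import their submodules (e.g. LLMs, query engines) and use them directly.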

- On the other hand, Llama Packs are also templates that you can inspect, modify, and use.

They can be downloaded in a single line, either through the llama_index Python library or through the command-line interface (CLI):

CLI:

    llamaindex-cli download-llamapack <pack_name> --download-dir <pack_directory>

Python

    from llama_index.llama_pack import download_llama_pack

    # download the pack and install its dependencies
    VoyageQueryEnginePack = download_llama_pack(
        "<pack_name>", "<pack_directory>"
    )

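Llama Packs can span different levels of abstraction: some are complete prepackaged templates (full Streamlit / Gradio apps), while others combine a few smaller modules (e.g. our SubQuestionQueryEngine with Weaviate).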
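To make the overview concrete, here is a minimal sketch of the shape a pack takes: an object that is initialized with parameters, exposes its submodules, and can be run end to end. The names SketchPack and KeywordSearchPack are hypothetical stand-ins written for this illustration; they are not classes from llama_index, and the keyword matching is only a placeholder for a real pipeline.

```python
from abc import ABC, abstractmethod
from typing import Any, Dict, List


class SketchPack(ABC):
    """Hypothetical stand-in for a pack base class (not the llama_index class)."""

    @abstractmethod
    def get_modules(self) -> Dict[str, Any]:
        """Expose the pack's submodules for inspection or direct use."""

    @abstractmethod
    def run(self, *args: Any, **kwargs: Any) -> Any:
        """Run the pack end to end."""


class KeywordSearchPack(SketchPack):
    """Toy pack: initialized with parameters, usable out of the box."""

    def __init__(self, documents: List[str]) -> None:
        self.documents = documents

    def get_modules(self) -> Dict[str, Any]:
        return {"documents": self.documents}

    def run(self, query: str, **kwargs: Any) -> List[str]:
        # Return every document that contains the query, case-insensitively.
        needle = query.lower()
        return [doc for doc in self.documents if needle in doc.lower()]


pack = KeywordSearchPack(["Llamas live in the Andes.", "Packs are templates."])
print(pack.run("llamas"))          # use the pack as a black box
print(sorted(pack.get_modules()))  # or look at what it is built from
```

The same two entry points, get_modules() and run(), appear in the real pack used in the walkthrough that follows.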

Example Walkthrough

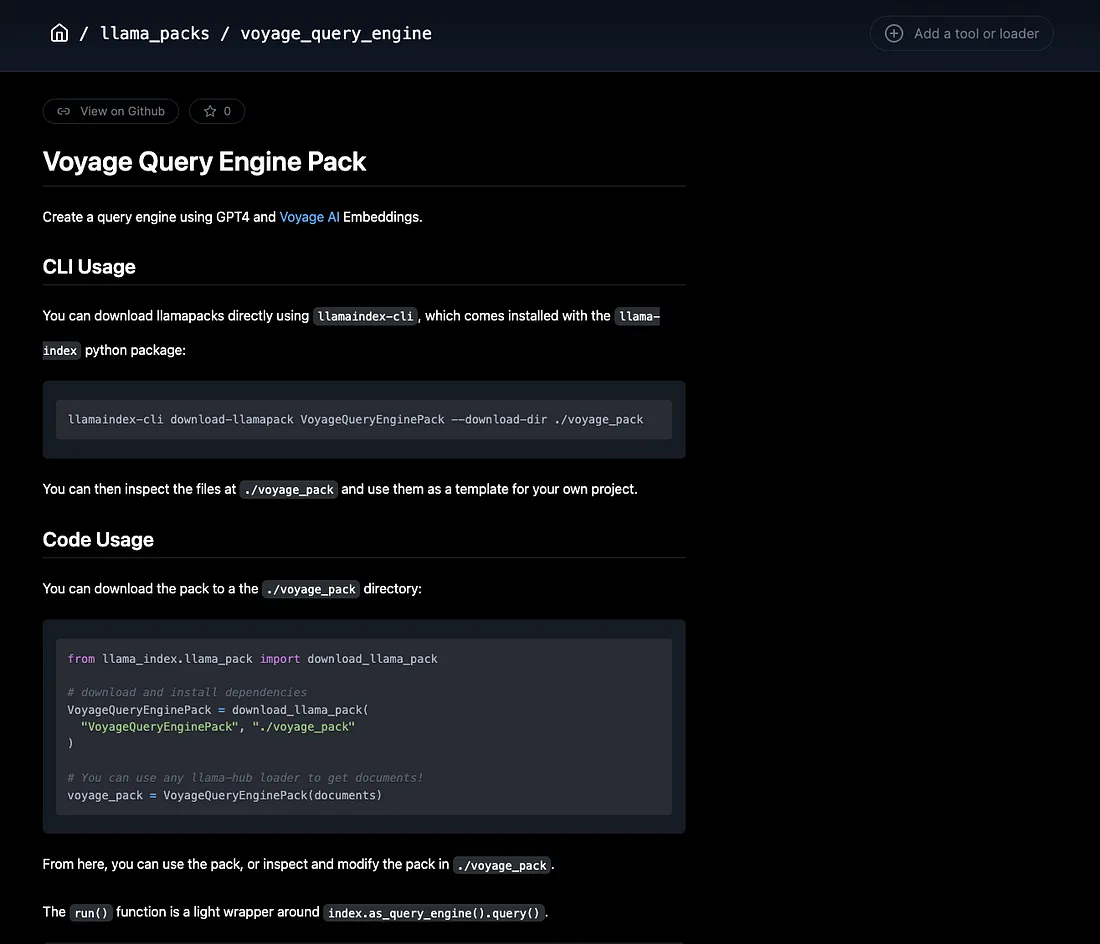

The best way to show what Llama Packs offer is with an example. We will walk through a simple Llama Pack that gives the user a RAG pipeline set up with Voyage AI embeddings.

First, we download the pack and initialize it over a set of documents:

    from llama_index.llama_pack import download_llama_pack

    # download pack
    VoyageQueryEnginePack = download_llama_pack("VoyageQueryEnginePack", "./voyage_pack")

    # initialize pack (assume documents is defined)
    voyage_pack = VoyageQueryEnginePack(documents)

Every Llama Pack implements a get_modules() function that lets you inspect and use its modules.

    modules = voyage_pack.get_modules()

    # display() is available in notebooks; use print(modules) elsewhere
    display(modules)

    # get LLM, vector index
    llm = modules["llm"]
    vector_index = modules["index"]
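Since get_modules() returns a plain dictionary, it can be summarized with ordinary Python outside a notebook too. The helper below is a small sketch for doing that; summarize_modules and FakeLLM are hypothetical names introduced here, and the stand-in objects only mimic the "llm" and "index" keys used above.

```python
from typing import Any, Dict


def summarize_modules(modules: Dict[str, Any]) -> Dict[str, str]:
    """Map each module name to the class name of the object behind it."""
    return {name: type(module).__name__ for name, module in modules.items()}


class FakeLLM:
    """Hypothetical placeholder for whatever LLM object a pack exposes."""


# Stand-in for the dictionary a pack's get_modules() would return.
example_modules = {"llm": FakeLLM(), "index": ["doc-1", "doc-2"]}
summary = summarize_modules(example_modules)
print(summary)
```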

Llama Packs can be run out of the box. Calling run executes the RAG pipeline and returns a response. In this setup you do not need to worry about the internals.

    # this will run the full pack
    response = voyage_pack.run("What did the author do growing up?", similarity_top_k=2)

    print(str(response))

The author spent his time outside of school mainly writing and programming. He wrote short stories and attempted to write programs on an IBM 1401. Later, he started programming on a TRS-80, creating simple games and a word processor. He also painted still lives while studying at the Accademia.
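The similarity_top_k=2 argument in the call above is not handled by run itself: in the pack source, run forwards its keyword arguments to as_query_engine. The sketch below imitates that forwarding with toy classes so it can run without any API keys. ToyIndex, ToyQueryEngine, and ToyPack are hypothetical, and the word-overlap scoring is an assumption made for the illustration, not how a vector index ranks results.

```python
from typing import Any, List


class ToyIndex:
    """Hypothetical stand-in for a vector index; scores by shared words."""

    def __init__(self, documents: List[str]) -> None:
        self.documents = documents

    def as_query_engine(self, similarity_top_k: int = 1) -> "ToyQueryEngine":
        return ToyQueryEngine(self.documents, similarity_top_k)


class ToyQueryEngine:
    def __init__(self, documents: List[str], similarity_top_k: int) -> None:
        self.documents = documents
        self.similarity_top_k = similarity_top_k

    def query(self, query_str: str) -> List[str]:
        query_words = set(query_str.lower().split())

        def overlap(doc: str) -> int:
            # Number of distinct words the document shares with the query.
            return len(query_words & set(doc.lower().split()))

        ranked = sorted(self.documents, key=overlap, reverse=True)
        return ranked[: self.similarity_top_k]


class ToyPack:
    def __init__(self, documents: List[str]) -> None:
        self.index = ToyIndex(documents)

    def run(self, query_str: str, **kwargs: Any) -> Any:
        # Keyword arguments are forwarded unchanged to the query engine.
        query_engine = self.index.as_query_engine(**kwargs)
        return query_engine.query(query_str)


toy_pack = ToyPack(["the author wrote stories", "the author painted", "unrelated text"])
top_two = toy_pack.run("what did the author write", similarity_top_k=2)
top_one = toy_pack.run("what did the author write")
print(top_two)
print(top_one)
```

Forwarding **kwargs this way keeps the pack's run signature small while still letting callers reach the query engine's options.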

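The second important point is that you have full access to the Llama Pack's code. This lets you customize the pack, strip out code, or simply use it as a reference for building your own application. Let's look at the downloaded pack in voyage_pack/base.py and swap the OpenAI LLM for Anthropic: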

    from llama_index.llms import Anthropic
    ...

    class VoyageQueryEnginePack(BaseLlamaPack):
        def __init__(self, documents: List[Document]) -> None:
            # swapped in for the default OpenAI LLM
            llm = Anthropic()
            embed_model = VoyageEmbedding(
                model_name="voyage-01", voyage_api_key=os.environ["VOYAGE_API_KEY"]
            )
            service_context = ServiceContext.from_defaults(llm=llm, embed_model=embed_model)
            self.llm = llm
            self.index = VectorStoreIndex.from_documents(
                documents, service_context=service_context
            )

        def get_modules(self) -> Dict[str, Any]:
            """Get modules."""
            return {"llm": self.llm, "index": self.index}

        def run(self, query_str: str, **kwargs: Any) -> Any:
            """Run the pipeline."""
            query_engine = self.index.as_query_engine(**kwargs)
            return query_engine.query(query_str)

You can then re-import the module directly and run it again:

    from voyage_pack.base import VoyageQueryEnginePack

    voyage_pack = VoyageQueryEnginePack(documents)

    response = voyage_pack.run("What did the author do during his time in RISD?")

    print(str(response))
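Because the downloaded file is yours to edit, one possible further customization is to make the LLM a constructor argument, so the model can be swapped without touching the file again. This is a sketch of that idea only, not what the downloaded pack does: ConfigurablePack, CompletionModel, EchoLLM, and ShoutLLM are hypothetical names, and the toy models stand in for real LLM clients.

```python
from typing import Any, Dict, Optional, Protocol


class CompletionModel(Protocol):
    """Anything with a complete() method can serve as the pack's model."""

    def complete(self, prompt: str) -> str: ...


class EchoLLM:
    """Toy default model: returns the prompt with a prefix."""

    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


class ShoutLLM:
    """Toy alternative model: returns the prompt in upper case."""

    def complete(self, prompt: str) -> str:
        return prompt.upper()


class ConfigurablePack:
    def __init__(self, llm: Optional[CompletionModel] = None) -> None:
        # Fall back to a default model when the caller does not supply one.
        self.llm = llm if llm is not None else EchoLLM()

    def get_modules(self) -> Dict[str, Any]:
        return {"llm": self.llm}

    def run(self, query_str: str, **kwargs: Any) -> str:
        return self.llm.complete(query_str)


default_answer = ConfigurablePack().run("hi")
swapped_answer = ConfigurablePack(llm=ShoutLLM()).run("hi")
print(default_answer)
print(swapped_answer)
```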

Source: https://medium.com/llamaindex-blog/introducing-llama-packs-e14f453b913a
