OpenAI String Tokenisation Explained

Published 4 December 2023 by alex

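Introduction

OpenAI's large language models operate by converting text into tokens.

The models learn the statistical relationships between these tokens and excel at predicting the next token in a sequence.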
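To make "statistical relationships between tokens" concrete, here is a deliberately tiny sketch. It is not how an LLM works internally: it swaps the neural network for a plain bigram count and uses whole words as tokens, and the corpus and the `predict_next` helper are made up for illustration.

```python
from collections import Counter, defaultdict

# Toy corpus already split into "tokens" (whole words here, for readability).
corpus = "the cat sat on the mat and the cat slept".split()

# Count how often each token is followed by each other token.
follow_counts = defaultdict(Counter)
for current, nxt in zip(corpus, corpus[1:]):
    follow_counts[current][nxt] += 1

def predict_next(token):
    """Return the most frequent follower of `token` in the toy corpus."""
    return follow_counts[token].most_common(1)[0][0]

print(predict_next("the"))  # "cat" follows "the" twice, "mat" only once
```

A real model conditions on far more than the previous token, but the principle of predicting the next token from observed patterns is the same.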

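Tokenisation

A string can range from a single character, to a word, to many words. With large-context-window LLMs, an entire document can be submitted.

Tiktoken is an open-source tokeniser developed by OpenAI. It converts common character sequences (sentences) into tokens, and can convert tokens back into sentences.

You can experiment with Tiktoken through a web UI or programmatically, as shown later in this article.

The tiktoken web UI, shown below, gives a basic feel for how a text string is tokenised and for the total token count of the passage.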

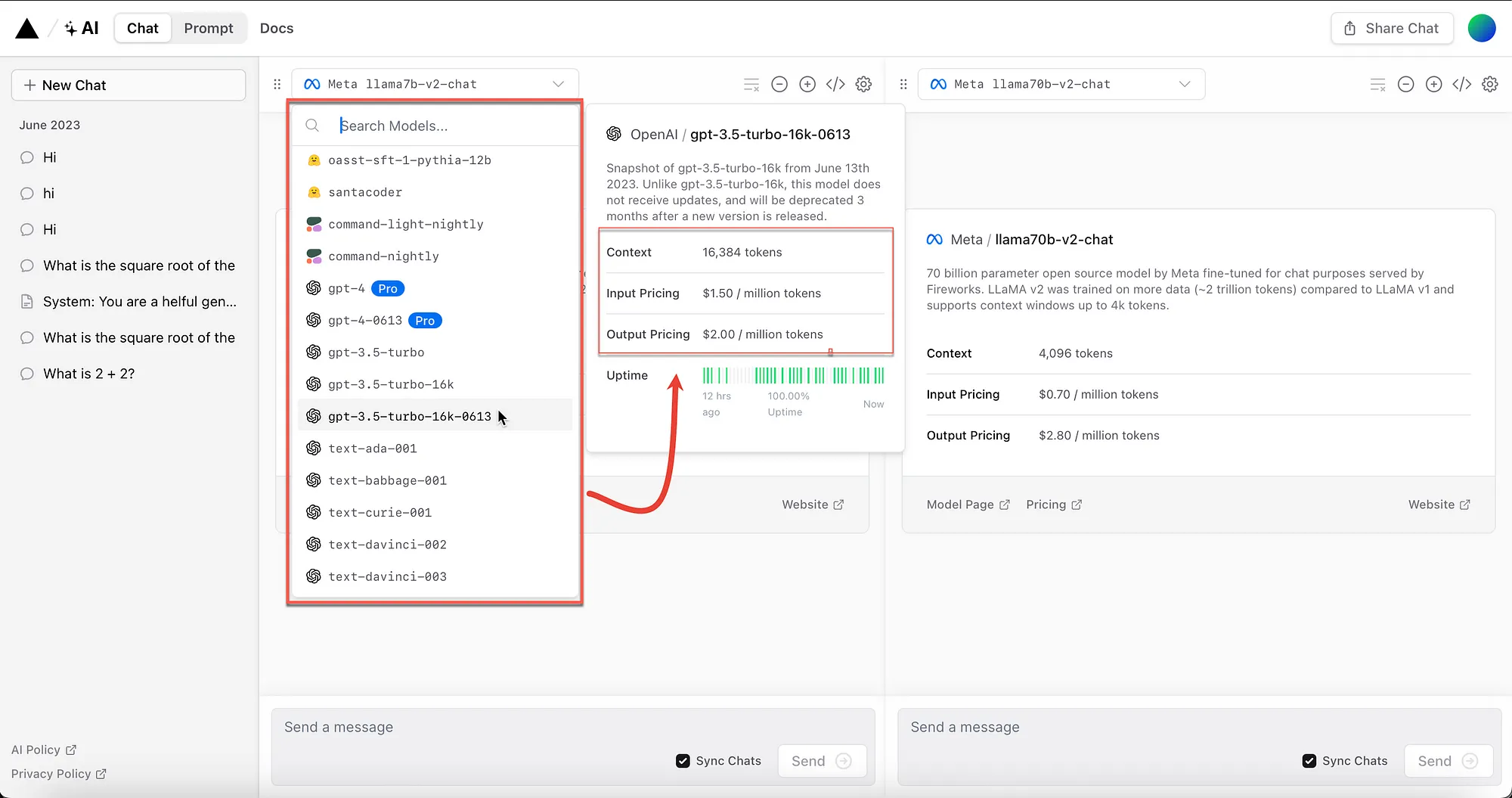

Consider the image below: the Vercel playground has a very good GUI that shows the input and output pricing for each model.

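String Tokenisation

- Tokenisation is reversible and lossless, so tokens can be converted back into the original text.

- Tokenisation works on arbitrary text.

- Tokenisation compresses the text: the token sequence is shorter than the byte sequence of the original text. In practice, each token corresponds to about 4 bytes on average.

- Tokenisation lets the model see common subwords. For example, ing is a common subword in English, so BPE encodings will often split encoding into tokens such as encod and ing. Because the model sees the ing token again and again in different contexts, it generalises better and gains a better grasp of grammar.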
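The four properties above can be seen in a toy tokeniser written in plain Python. This is only a sketch under stated assumptions: the vocabulary is invented, and it uses greedy longest-match with a single-byte fallback rather than the BPE merge procedure that tiktoken actually implements.

```python
# Hypothetical toy vocabulary: all 256 single bytes plus a few longer pieces.
PIECES = [b"encod", b"ing", b" token", b"is", b" the"]
VOCAB = [bytes([i]) for i in range(256)] + PIECES  # token id = index in VOCAB
PIECE_TO_ID = {piece: idx for idx, piece in enumerate(VOCAB)}
MAX_LEN = max(len(piece) for piece in VOCAB)

def encode(text):
    """Greedy longest-match over the UTF-8 bytes of `text`."""
    data = text.encode("utf-8")
    ids, pos = [], 0
    while pos < len(data):
        # Try the longest candidate first; a single byte always matches.
        for length in range(min(MAX_LEN, len(data) - pos), 0, -1):
            piece = data[pos:pos + length]
            if piece in PIECE_TO_ID:
                ids.append(PIECE_TO_ID[piece])
                pos += length
                break
    return ids

def decode(ids):
    """Concatenate the token bytes, then decode once as UTF-8."""
    return b"".join(VOCAB[i] for i in ids).decode("utf-8")

text = "encoding tokenising café"
ids = encode(text)

print(decode(ids) == text)                  # reversible and lossless: True
print(len(ids), len(text.encode("utf-8")))  # fewer tokens than bytes: 11 25
print(ids.count(PIECE_TO_ID[b"ing"]))       # the "ing" subword is reused: 2
```

The byte fallback is what lets the toy handle arbitrary text such as the accented character, at the cost of one token per byte for anything outside the vocabulary.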

Tokenisation & Models

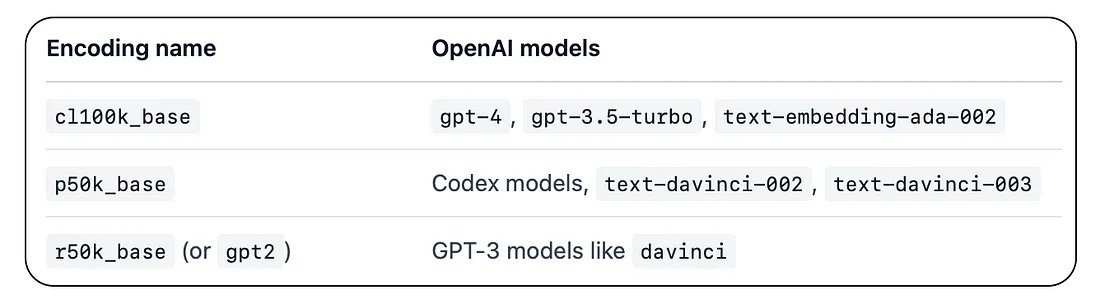

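Consider the table below: the tokenisation process is not the same for every model; different models use different encodings.

Newer models such as GPT-3.5 and GPT-4 use a different tokeniser from the older GPT-3 and Codex models, and therefore produce different tokens for the same input text.

Different encodings are tied to different models, so when converting text into tokens you need to keep in mind which model will be used.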
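The model-to-encoding relationship can be pictured as a simple lookup. The sketch below is a hand-written subset for illustration only; `encoding_name_for` is a hypothetical helper, and in real code `tiktoken.encoding_for_model()` performs this lookup for you.

```python
# Encoding names for a few model families, as listed in the tiktoken docs.
ENCODING_BY_MODEL_PREFIX = {
    "gpt-4": "cl100k_base",
    "gpt-3.5-turbo": "cl100k_base",
    "text-davinci-003": "p50k_base",
    "text-davinci-002": "p50k_base",
    "code-davinci-002": "p50k_base",  # Codex
    "davinci": "r50k_base",           # GPT-3
}

def encoding_name_for(model):
    """Hypothetical helper: choose the encoding by longest matching prefix."""
    matches = [p for p in ENCODING_BY_MODEL_PREFIX if model.startswith(p)]
    if not matches:
        raise KeyError(f"no encoding known for model {model!r}")
    return ENCODING_BY_MODEL_PREFIX[max(matches, key=len)]

for model in ["gpt-4-0613", "gpt-3.5-turbo", "text-davinci-003", "davinci"]:
    print(model, "->", encoding_name_for(model))
```

Prefix matching is used so that dated snapshots such as gpt-4-0613 resolve to the same encoding as their base model.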

Consider the following image, in which encoding names are linked to specific OpenAI models.

Tiktoken Python Code

You will need to install tiktoken and openai, and have an OpenAI API key.

pip install tiktoken
pip install openai

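import os

import openai
import tiktoken

openai.api_key = "Your api key goes here"

# Load an encoding by name...
encoding = tiktoken.get_encoding("cl100k_base")
# ...or let tiktoken pick the encoding that belongs to a given model.
encoding = tiktoken.encoding_for_model("gpt-3.5-turbo")

# Turn text into a list of token integers.
encoding.encode("How long is the great wall of China?")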

Below is the tokenised version of the input sentence:

[4438, 1317, 374, 279, 2294, 7147, 315, 5734, 30]

And a small routine to count the number of tokens:

def num_tokens_from_string(string: str, encoding_name: str) -> int:
    """Returns the number of tokens in a text string."""
    encoding = tiktoken.get_encoding(encoding_name)
    num_tokens = len(encoding.encode(string))
    return num_tokens

num_tokens_from_string("How long is the great wall of China?", "cl100k_base")

The output:

9
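One common use of such a count is checking that a prompt, plus the completion you intend to request, fits in a model's context window. The sketch below is a minimal illustration: `fits_in_context` is a hypothetical helper and the window size is an assumed figure, so check the documentation of the model you actually use.

```python
def fits_in_context(prompt_tokens, max_completion_tokens, context_window):
    """True if the prompt plus the requested completion fit in the window."""
    return prompt_tokens + max_completion_tokens <= context_window

# Assumed window size, for illustration only.
ASSUMED_CONTEXT_WINDOW = 4096

print(fits_in_context(9, 30, ASSUMED_CONTEXT_WINDOW))     # True
print(fits_in_context(4090, 30, ASSUMED_CONTEXT_WINDOW))  # False
```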

As mentioned earlier, the tokens can be converted back into a regular string.

# # # # # # # # # # # # # # # # # # # # # # # # #
# Turn tokens into text with encoding.decode() #
# # # # # # # # # # # # # # # # # # # # # # # # #
encoding.decode([4438, 1317, 374, 279, 2294, 7147, 315, 5734, 30])

The output:

How long is the great wall of China?

The bytes behind each individual token:

[encoding.decode_single_token_bytes(token) for token in [4438, 1317, 374, 279, 2294, 7147, 315, 5734, 30]]

The output:

[b'How',
 b' long',
 b' is',
 b' the',
 b' great',
 b' wall',
 b' of',
 b' China',
 b'?']
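The per-token results above are bytes rather than strings for a reason: a token boundary does not have to fall on a character boundary. The sketch below shows the effect in plain Python, with a hand-made split standing in for a real tokeniser (the split point is an assumption chosen for illustration).

```python
# "é" occupies two bytes in UTF-8; imagine a token boundary between them.
raw = "café".encode("utf-8")   # b'caf\xc3\xa9'
pieces = [raw[:4], raw[4:]]    # b'caf\xc3' and b'\xa9'

# Decoding each piece on its own is lossy: the broken character is replaced.
lossy = [piece.decode("utf-8", errors="replace") for piece in pieces]

# Joining the bytes first and decoding once recovers the original text.
joined = b"".join(pieces).decode("utf-8")

print(lossy)
print(joined)
```

Working with bytes per token and decoding only the full sequence avoids this loss.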

This function compares different encodings on the same string…

# # # # # # # # # # # # # # # # # # # # # # # # #
# Comparing Encodings #
# # # # # # # # # # # # # # # # # # # # # # # # #
def compare_encodings(example_string: str) -> None:
    """Prints a comparison of three string encodings."""
    # print the example string
    print(f'\nExample string: "{example_string}"')
    # for each encoding, print the # of tokens, the token integers, and the token bytes
    for encoding_name in ["r50k_base", "p50k_base", "cl100k_base"]:
        encoding = tiktoken.get_encoding(encoding_name)
        token_integers = encoding.encode(example_string)
        num_tokens = len(token_integers)
        token_bytes = [encoding.decode_single_token_bytes(token) for token in token_integers]
        print()
        print(f"{encoding_name}: {num_tokens} tokens")
        print(f"token integers: {token_integers}")
        print(f"token bytes: {token_bytes}")

compare_encodings("How long is the great wall of China?")

The output:

Example string: "How long is the great wall of China?"

r50k_base: 9 tokens
token integers: [2437, 890, 318, 262, 1049, 3355, 286, 2807, 30]
token bytes: [b'How', b' long', b' is', b' the', b' great', b' wall', b' of', b' China', b'?']

p50k_base: 9 tokens
token integers: [2437, 890, 318, 262, 1049, 3355, 286, 2807, 30]
token bytes: [b'How', b' long', b' is', b' the', b' great', b' wall', b' of', b' China', b'?']

cl100k_base: 9 tokens
token integers: [4438, 1317, 374, 279, 2294, 7147, 315, 5734, 30]
token bytes: [b'How', b' long', b' is', b' the', b' great', b' wall', b' of', b' China', b'?']

# # # # # # # # # # # # # # # # # # # # # # # # # #
# Counting tokens for chat completions API calls #
# # # # # # # # # # # # # # # # # # # # # # # # # #
def num_tokens_from_messages(messages, model="gpt-3.5-turbo-0613"):
    """Return the number of tokens used by a list of messages."""
    try:
        encoding = tiktoken.encoding_for_model(model)
    except KeyError:
        print("Warning: model not found. Using cl100k_base encoding.")
        encoding = tiktoken.get_encoding("cl100k_base")
    if model in {
        "gpt-3.5-turbo-0613",
        "gpt-3.5-turbo-16k-0613",
        "gpt-4-0314",
        "gpt-4-32k-0314",
        "gpt-4-0613",
        "gpt-4-32k-0613",
    }:
        tokens_per_message = 3
        tokens_per_name = 1
    elif model == "gpt-3.5-turbo-0301":
        tokens_per_message = 4  # every message follows <|start|>{role/name}\n{content}<|end|>\n
        tokens_per_name = -1  # if there's a name, the role is omitted
    elif "gpt-3.5-turbo" in model:
        print("Warning: gpt-3.5-turbo may update over time. Returning num tokens assuming gpt-3.5-turbo-0613.")
        return num_tokens_from_messages(messages, model="gpt-3.5-turbo-0613")
    elif "gpt-4" in model:
        print("Warning: gpt-4 may update over time. Returning num tokens assuming gpt-4-0613.")
        return num_tokens_from_messages(messages, model="gpt-4-0613")
    else:
        raise NotImplementedError(
            f"""num_tokens_from_messages() is not implemented for model {model}. See https://github.com/openai/openai-python/blob/main/chatml.md for information on how messages are converted to tokens."""
        )
    num_tokens = 0
    for message in messages:
        num_tokens += tokens_per_message
        for key, value in message.items():
            num_tokens += len(encoding.encode(value))
            if key == "name":
                num_tokens += tokens_per_name
    num_tokens += 3  # every reply is primed with <|start|>assistant<|message|>
    return num_tokens

Below is an example in which a conversation is submitted to six GPT models…

from openai import OpenAI

# The client reads the key from the OPENAI_API_KEY environment variable.
os.environ["OPENAI_API_KEY"] = "your api key goes here"
client = OpenAI()

# let's verify the function above matches the OpenAI API response
example_messages = [
    {
        "role": "system",
        "content": "You are a helpful, pattern-following assistant that translates corporate jargon into plain English.",
    },
    {
        "role": "system",
        "name": "example_user",
        "content": "New synergies will help drive top-line growth.",
    },
    {
        "role": "system",
        "name": "example_assistant",
        "content": "Things working well together will increase revenue.",
    },
    {
        "role": "system",
        "name": "example_user",
        "content": "Let's circle back when we have more bandwidth to touch base on opportunities for increased leverage.",
    },
    {
        "role": "system",
        "name": "example_assistant",
        "content": "Let's talk later when we're less busy about how to do better.",
    },
    {
        "role": "user",
        "content": "This late pivot means we don't have time to boil the ocean for the client deliverable.",
    },
]

for model in [
    "gpt-3.5-turbo-0301",
    "gpt-3.5-turbo-0613",
    "gpt-3.5-turbo",
    "gpt-4-0314",
    "gpt-4-0613",
    "gpt-4",
]:
    print(model)
    # example token count from the function defined above
    print(f"{num_tokens_from_messages(example_messages, model)} prompt tokens counted by num_tokens_from_messages().")
    # example token count from the OpenAI API
    response = client.chat.completions.create(
        model=model,
        messages=example_messages,
        temperature=1,
        max_tokens=30,
        top_p=1,
        frequency_penalty=0,
        presence_penalty=0,
    )
    print("Number of Prompt Tokens:", response.usage.prompt_tokens)
    print("Number of completion Tokens:", response.usage.completion_tokens)
    print("Number of Total Tokens:", response.usage.total_tokens)

The output:

gpt-3.5-turbo-0301
127 prompt tokens counted by num_tokens_from_messages().
Number of Prompt Tokens: 127
Number of completion Tokens: 23
Number of Total Tokens: 150

gpt-3.5-turbo-0613
129 prompt tokens counted by num_tokens_from_messages().
Number of Prompt Tokens: 129
Number of completion Tokens: 20
Number of Total Tokens: 149

gpt-3.5-turbo
Warning: gpt-3.5-turbo may update over time. Returning num tokens assuming gpt-3.5-turbo-0613.
129 prompt tokens counted by num_tokens_from_messages().
Number of Prompt Tokens: 129
Number of completion Tokens: 21
Number of Total Tokens: 150

gpt-4-0314
129 prompt tokens counted by num_tokens_from_messages().
Number of Prompt Tokens: 129
Number of completion Tokens: 17
Number of Total Tokens: 146

gpt-4-0613
129 prompt tokens counted by num_tokens_from_messages().
Number of Prompt Tokens: 129
Number of completion Tokens: 18
Number of Total Tokens: 147

gpt-4
Warning: gpt-4 may update over time. Returning num tokens assuming gpt-4-0613.
129 prompt tokens counted by num_tokens_from_messages().
Number of Prompt Tokens: 129
Number of completion Tokens: 19
Number of Total Tokens: 148
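The 127 versus 129 difference in the run above comes entirely from the fixed per-message framing, not from the text, since all six models use the same encoding. The sketch below isolates that framing cost; `framing_overhead` is a hypothetical helper that mirrors the constants in num_tokens_from_messages() and ignores the encoded content.

```python
def framing_overhead(messages, tokens_per_message, tokens_per_name, reply_priming=3):
    """Count only the fixed framing tokens, ignoring the encoded text itself."""
    overhead = reply_priming
    for message in messages:
        overhead += tokens_per_message
        if "name" in message:
            overhead += tokens_per_name
    return overhead

# Same shape as example_messages: six messages, four of which carry a "name".
shape = [
    {"role": "system"},
    {"role": "system", "name": "example_user"},
    {"role": "system", "name": "example_assistant"},
    {"role": "system", "name": "example_user"},
    {"role": "system", "name": "example_assistant"},
    {"role": "user"},
]

overhead_0613 = framing_overhead(shape, tokens_per_message=3, tokens_per_name=1)
overhead_0301 = framing_overhead(shape, tokens_per_message=4, tokens_per_name=-1)

print(overhead_0613)                  # 3 + 6*3 + 4*1 = 25
print(overhead_0301)                  # 3 + 6*4 - 4*1 = 23
print(overhead_0613 - overhead_0301)  # 2, matching 129 - 127
```

Subtracting the overhead from either total leaves 104 tokens for the roles, names and contents themselves, which is the same in both cases.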

Source: https://cobusgreyling.medium.com/openai-string-tokenisation-explained-31a7b06203c0
