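Automating Knowledge Graph Construction with ChatGPT

Introduction

In this post, we walk through how to build a knowledge graph from raw text data using OpenAI's gpt-3.5-turbo. Large language models (LLMs) have outperformed traditional methods on text generation and question answering tasks. Retrieval-augmented generation (RAG) improves their performance further by giving them access to up-to-date and domain-specific knowledge. Our goal in this post is to use an LLM as an information extraction tool that turns raw text into facts that can be queried easily to gain useful insights. First, though, we need to define a few key concepts.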

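What is a knowledge graph?

A knowledge graph is a semantic network that represents real-world entities and the relationships between them. These entities typically correspond to people, organizations, objects, events, and concepts. A knowledge graph consists of triples with the following structure:

head → relation → tail

or, in Semantic Web terminology:

subject → predicate → object

The network representation lets us extract and analyze the complex relationships that exist between these entities.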
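To make the triple structure concrete, here is a minimal sketch in plain Python. The product names and facts are invented for illustration, and the helper names `tails_of` and `heads_with` are hypothetical; a real knowledge graph would usually live in a graph library or a graph database.

```python
from collections import namedtuple

# A triple links a head entity to a tail entity through a named relation.
Triple = namedtuple("Triple", ["head", "relation", "tail"])

# Toy facts, invented for illustration only.
triples = [
    Triple("Desk Lamp", "hasColor", "White"),
    Triple("Wall Sticker", "hasColor", "White"),
    Triple("Desk Lamp", "madeOfMaterial", "Aluminium"),
]

def tails_of(head, relation):
    """Return every tail connected to `head` through `relation`."""
    return [t.tail for t in triples if t.head == head and t.relation == relation]

def heads_with(relation, tail):
    """Return every head connected to `tail` through `relation`."""
    return [t.head for t in triples if t.relation == relation and t.tail == tail]

print(tails_of("Desk Lamp", "hasColor"))  # ['White']
print(heads_with("hasColor", "White"))    # ['Desk Lamp', 'Wall Sticker']
```

Even in this tiny form, the second query shows what the network view buys us: two otherwise unrelated products become connected through a shared tail entity.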

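A knowledge graph is often accompanied by a definition of its concepts, relations, and their properties: an ontology. An ontology is a formal specification that defines the concepts of the target domain and the relations between them, and thereby gives the network its semantics.

Search engines and other automated agents on the web use ontologies to understand what the content of a given web page means, so that they can index it and display it correctly.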

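Use case description

For this use case, we will use OpenAI's gpt-3.5-turbo to create a knowledge graph from the product descriptions in an Amazon products dataset.

Implementation

We will implement the solution in Python. First, we need to install and import the required libraries.

Importing libraries and reading the data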

!pip install pandas openai sentence-transformers networkx matplotlib

import json
import logging

import matplotlib.pyplot as plt
import networkx as nx
import pandas as pd
from networkx import connected_components
from openai import OpenAI
from sentence_transformers import SentenceTransformer, util

现在,我们将把亚马逊产品数据集读取为一个pandas数据框架。

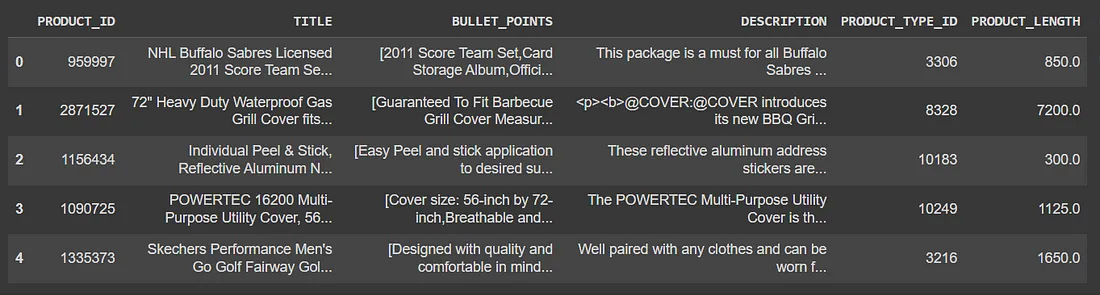

data = pd.read_csv("amazon_products.csv")我们可以在下图中看到数据集的内容。该数据集包含以下列:“PRODUCT_ID”、“TITLE”、“BULLET_POINTS”、“DESCRIPTION”、“PRODUCT_TYPE_ID”和“PRODUCT_LENGTH”。我们将把“TITLE”、“BULLET_POINTS”和“DESCRIPTION”列组合成一列“text”,这将代表我们将提示 ChatGPT 从中提取实体和关系的产品的规格。

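# Fill missing values first so that one empty field does not turn the whole text into NaN.
data['text'] = data[['TITLE', 'BULLET_POINTS', 'DESCRIPTION']].fillna('').agg('\n'.join, axis=1)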

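Information extraction

We will instruct ChatGPT to extract entities and relations from a given product specification and return the result as an array of JSON objects. Each JSON object must contain the following keys: "head", "head_type", "relation", "tail", and "tail_type".

The "head" key must contain the text of the extracted entity, whose type must be one of the types listed in the user prompt. The "head_type" key must contain the type of the extracted head entity, which again must be one of the types from that list. The "relation" key must contain the type of the relation between the "head" and the "tail". The "tail" key must contain the text of the extracted entity that is the object of the triple, and the "tail_type" key must contain the type of the tail entity.

We will prompt ChatGPT for entity-relation extraction using the entity types and relation types listed below. We map these entities and relations to the corresponding entities and relations of the Schema.org ontology. The keys of each mapping are the entity and relation types given to ChatGPT, and the values are the URLs of the objects and properties in Schema.org.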

# ENTITY TYPES:
entity_types = {
    "product": "https://schema.org/Product",
    "rating": "https://schema.org/AggregateRating",
    "price": "https://schema.org/Offer",
    "characteristic": "https://schema.org/PropertyValue",
    "material": "https://schema.org/Text",
    "manufacturer": "https://schema.org/Organization",
    "brand": "https://schema.org/Brand",
    "measurement": "https://schema.org/QuantitativeValue",
    "organization": "https://schema.org/Organization",
    "color": "https://schema.org/Text",
}

# RELATION TYPES:
relation_types = {
    "hasCharacteristic": "https://schema.org/additionalProperty",
    "hasColor": "https://schema.org/color",
    "hasBrand": "https://schema.org/brand",
    "isProducedBy": "https://schema.org/manufacturer",
    "hasMeasurement": "https://schema.org/hasMeasurement",
    "isSimilarTo": "https://schema.org/isSimilarTo",
    "madeOfMaterial": "https://schema.org/material",
    "hasPrice": "https://schema.org/offers",
    "hasRating": "https://schema.org/aggregateRating",
    "relatedTo": "https://schema.org/isRelatedTo"
}
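As an illustration of how these mappings could be applied once triples have been extracted, the sketch below swaps the short type names of a triple for their Schema.org URLs and rejects triples that use a type outside the lists. The function `to_schema_org` is a hypothetical helper, not part of the original pipeline, and the dictionaries are cut down to a few entries so the snippet runs on its own.

```python
# Subset of the mappings above, repeated so the sketch is self-contained.
entity_types = {
    "product": "https://schema.org/Product",
    "color": "https://schema.org/Text",
}
relation_types = {
    "hasColor": "https://schema.org/color",
}

def to_schema_org(triple):
    """Return the triple with its types replaced by Schema.org URLs.

    Returns None when a type is missing from the mappings, which can happen
    if the model invents a type that was not in the prompt.
    """
    try:
        return {
            "head": triple["head"],
            "head_type": entity_types[triple["head_type"]],
            "relation": relation_types[triple["relation"]],
            "tail": triple["tail"],
            "tail_type": entity_types[triple["tail_type"]],
        }
    except KeyError:
        return None

valid = {"head": "Desk Lamp", "head_type": "product",
         "relation": "hasColor", "tail": "White", "tail_type": "color"}
invalid = dict(valid, relation="hasFlavor")  # a relation that is not in the list

print(to_schema_org(valid)["relation"])  # https://schema.org/color
print(to_schema_org(invalid))            # None
```

Filtering on the mapping keys is a cheap way to keep the graph consistent with the ontology, at the cost of discarding facts that the model labeled with an unexpected type.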

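To perform information extraction with ChatGPT, we create an OpenAI client and use the chat completions API to generate, for each raw product specification, a JSON array with one object per identified relation. The default model is gpt-3.5-turbo, since its performance is good enough for this simple demonstration.

client = OpenAI(api_key="<YOUR_API_KEY>")

def extract_information(text, model="gpt-3.5-turbo"):
    # Ask the model for the triples found in one product specification.
    completion = client.chat.completions.create(
        model=model,
        temperature=0,  # keep the output as deterministic as possible
        messages=[
            {
                "role": "system",
                "content": system_prompt
            },
            {
                "role": "user",
                "content": user_prompt.format(
                    entity_types=entity_types,
                    relation_types=relation_types,
                    specification=text
                )
            }
        ]
    )
    # The reply is a string that should contain a JSON array of triples.
    return completion.choices[0].message.content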

The system_prompt variable contains the instructions that guide ChatGPT to extract entities and relations from the raw text and to return the result as an array of JSON objects, each with the keys "head", "head_type", "relation", "tail", and "tail_type".

system_prompt = """You are an expert agent specialized in analyzing product specifications in an online retail store.
Your task is to identify the entities and relations requested with the user prompt, from a given product specification.
You must generate the output in a JSON containing a list with JSON objects having the following keys: "head", "head_type", "relation", "tail", and "tail_type".
The "head" key must contain the text of the extracted entity with one of the types from the provided list in the user prompt, the "head_type"
key must contain the type of the extracted head entity which must be one of the types from the provided user list,
the "relation" key must contain the type of relation between the "head" and the "tail", the "tail" key must represent the text of an
extracted entity which is the tail of the relation, and the "tail_type" key must contain the type of the tail entity. Attempt to extract as
many entities and relations as you can.
"""

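The user_prompt variable contains a single example of the expected output for one specification from the dataset, and prompts ChatGPT to extract entities and relations from the provided specification in the same way. This is an example of one-shot learning with ChatGPT.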
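One detail of the template that follows is easy to miss: it is filled in with `str.format`, so every literal brace of the JSON example is doubled (`{{` and `}}`), while single braces mark the placeholders. The short sketch below illustrates that behavior on a made-up miniature template; the names `template` and `prompt` and the sample values are assumptions for illustration only.

```python
# A miniature template in the same style as user_prompt.
template = """Use the following entity types:
{entity_types}

# Output example
[{{"head": "Desk Lamp", "relation": "hasColor", "tail": "White"}}]

# Specification
{specification}
"""

prompt = template.format(
    entity_types=["product", "color"],
    # Braces inside a substituted value are inserted as-is, not re-interpreted.
    specification="Black {matte} phone case",
)
print(prompt)
```

After formatting, the doubled braces collapse into single ones, so the model sees ordinary JSON in the example. Leaving a brace undoubled in the template would instead make `str.format` raise an error or treat the JSON key as a placeholder.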

user_prompt = """Based on the following example, extract entities and relations from the provided text.

Use the following entity types:

# ENTITY TYPES:
{entity_types}

Use the following relation types:
{relation_types}

--> Beginning of example

# Specification
"YUVORA 3D Brick Wall Stickers | PE Foam Fancy Wallpaper for Walls,
Waterproof & Self Adhesive, White Color 3D Latest Unique Design Wallpaper for Home (70*70 CMT) -40 Tiles
[Made of soft PE foam,Anti Children's Collision,take care of your family.Waterproof, moist-proof and sound insulated. Easy clean and maintenance with wet cloth,economic wall covering material.,Self adhesive peel and stick wallpaper,Easy paste And removement .Easy To cut DIY the shape according to your room area,The embossed 3d wall sticker offers stunning visual impact. the tiles are light, water proof, anti-collision, they can be installed in minutes over a clean and sleek surface without any mess or specialized tools, and never crack with time.,Peel and stick 3d wallpaper is also an economic wall covering material, they will remain on your walls for as long as you wish them to be. The tiles can also be easily installed directly over existing panels or smooth surface.,Usage range: Featured walls,Kitchen,bedroom,living room, dinning room,TV walls,sofa background,office wall decoration,etc. Don't use in shower and rugged wall surface]
Provide high quality foam 3D wall panels self adhesive peel and stick wallpaper, made of soft PE foam,children's collision, waterproof, moist-proof and sound insulated,easy cleaning and maintenance with wet cloth,economic wall covering material, the material of 3D foam wallpaper is SAFE, easy to paste and remove . Easy to cut DIY the shape according to your decor area. Offers best quality products. This wallpaper we are is a real wallpaper with factory done self adhesive backing. You would be glad that you it. Product features High-density foaming technology Total Three production processes Can be use of up to 10 years Surface Treatment: 3D Deep Embossing Damask Pattern."

################

# Output
[
    {{
        "head": "YUVORA 3D Brick Wall Stickers",
        "head_type": "product",
        "relation": "isProducedBy",
        "tail": "YUVORA",
        "tail_type": "manufacturer"
    }},
    {{
        "head": "YUVORA 3D Brick Wall Stickers",
        "head_type": "product",
        "relation": "hasCharacteristic",
        "tail": "Waterproof",
        "tail_type": "characteristic"
    }},
    {{
        "head": "YUVORA 3D Brick Wall Stickers",
        "head_type": "product",
        "relation": "hasCharacteristic",
        "tail": "Self Adhesive",
        "tail_type": "characteristic"
    }},
    {{
        "head": "YUVORA 3D Brick Wall Stickers",
        "head_type": "product",
        "relation": "hasColor",
        "tail": "White",
        "tail_type": "color"
    }},
    {{
        "head": "YUVORA 3D Brick Wall Stickers",
        "head_type": "product",
        "relation": "hasMeasurement",
        "tail": "70*70 CMT",
        "tail_type": "measurement"
    }},
    {{
        "head": "YUVORA 3D Brick Wall Stickers",
        "head_type": "product",
        "relation": "hasMeasurement",
        "tail": "40 tiles",
        "tail_type": "measurement"
    }}
]

--> End of example

For the following specification, extract entities and relations as in the provided example.

# Specification
{specification}

################

# Output
"""

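Now we call the extract_information function for each specification in the dataset and collect all extracted triples in a list, which represents our knowledge graph. For this demonstration, we generate the knowledge graph from a subset of only 100 product specifications.

kg = []
for content in data['text'].values[:100]:
    try:
        extracted_relations = extract_information(content)
        extracted_relations = json.loads(extracted_relations)
        kg.extend(extracted_relations)
    except Exception as e:
        # Log and skip specifications whose reply cannot be fetched or parsed.
        logging.error(e)

kg_relations = pd.DataFrame(kg)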
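The loop above relies on the reply being a bare JSON array, which a language model does not guarantee. As a hedged sketch of a more defensive parsing step, the hypothetical helper `parse_triples` below strips an optional Markdown code fence around the reply and keeps only objects that carry all five expected keys. It is an illustrative addition, not part of the original pipeline.

```python
import json
import re

REQUIRED_KEYS = {"head", "head_type", "relation", "tail", "tail_type"}
FENCE = "`" * 3  # a Markdown code fence marker

def parse_triples(raw):
    """Parse a model reply into a list of well-formed triple dicts."""
    # Remove an optional code fence (with or without a "json" tag) around the reply.
    pattern = r"^" + FENCE + r"(?:json)?\s*|\s*" + FENCE + r"$"
    cleaned = re.sub(pattern, "", raw.strip())
    try:
        parsed = json.loads(cleaned)
    except json.JSONDecodeError:
        return []
    if not isinstance(parsed, list):
        return []
    # Drop anything that is not an object with every required key.
    return [item for item in parsed
            if isinstance(item, dict) and REQUIRED_KEYS.issubset(item)]

reply = FENCE + """json
[
  {"head": "Desk Lamp", "head_type": "product", "relation": "hasColor",
   "tail": "White", "tail_type": "color"},
  {"head": "Desk Lamp"}
]
""" + FENCE

print(len(parse_triples(reply)))  # 1
print(parse_triples("Sorry, I cannot help with that."))  # []
```

Used in place of the plain `json.loads` call, a helper like this would keep one malformed object from discarding the remaining triples of the same reply.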

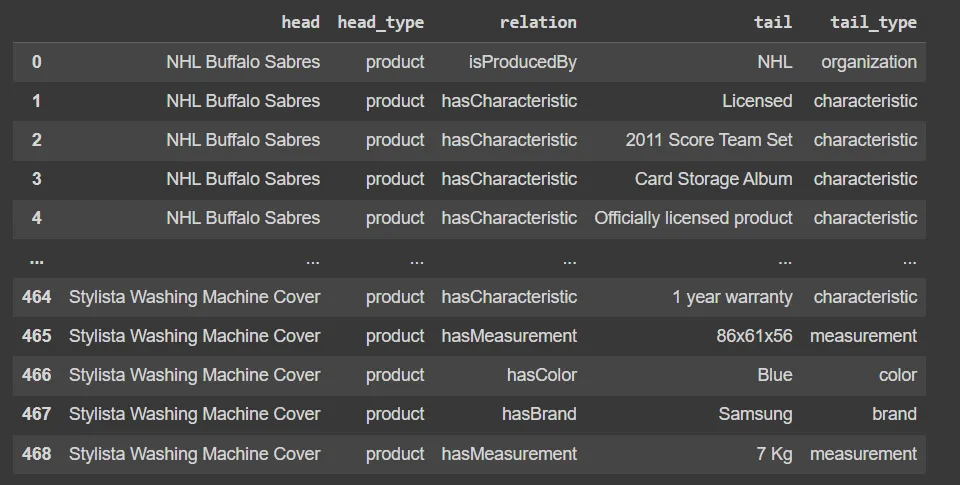

信息提取的结果显示在下面的图表中。

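Entity resolution

Entity resolution (ER) is the process of disambiguating entities that refer to the same real-world concept. In this case, we will attempt a basic entity resolution on the head and tail entities in the dataset. The reason for doing so is to obtain a more precise representation of the facts present in the text.

We will perform entity resolution with natural language processing (NLP) techniques. More specifically, we will use the sentence-transformers library to create an embedding for each head entity and compute the cosine similarity between head entities.

We will use the "all-MiniLM-L6-v2" sentence transformer to create the embeddings, because it is a fast and relatively accurate model that suits this use case. For each pair of head entities, if the similarity is greater than 0.95, we consider the two to be the same entity and normalize their text values to be identical. The same reasoning applies to the tail entities.

This process gives us the following result: if we have two entities, one with the value "Microsoft" and the other with the value "Microsoft Inc.", the two are merged into one.

We load the embedding model and use it to compute the similarity between the first and the second head entity as follows.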

heads = kg_relations['head'].values
embedding_model = SentenceTransformer('all-MiniLM-L6-v2')
embeddings = embedding_model.encode(heads)
similarity = util.cos_sim(embeddings[0], embeddings[1])
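The snippet above compares only one pair of entities. One possible way to extend it to the whole list, sketched here under stated assumptions, is to link every pair whose cosine similarity exceeds the threshold and give each resulting group a single canonical name. The function `resolve` is hypothetical, the merge is transitive (a choice the article does not specify), and hand-made 2-D vectors stand in for real sentence embeddings so that the example runs without downloading a model.

```python
import math

def cosine(u, v):
    """Cosine similarity between two vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm

def resolve(names, vectors, threshold=0.95):
    """Map each name to the first-seen name of its similarity group."""
    parent = list(range(len(names)))  # union-find forest over name indices

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for i in range(len(names)):
        for j in range(i + 1, len(names)):
            if cosine(vectors[i], vectors[j]) > threshold:
                ri, rj = find(i), find(j)
                # Attach the larger root to the smaller one so that the
                # earliest name in each group stays the canonical one.
                parent[max(ri, rj)] = min(ri, rj)

    return {name: names[find(i)] for i, name in enumerate(names)}

# Toy vectors, NOT real embeddings: the first two point in nearly the same direction.
names = ["Microsoft", "Microsoft Inc.", "Apple"]
vectors = [[1.0, 0.0], [0.99, 0.05], [0.0, 1.0]]

mapping = resolve(names, vectors)
print(mapping)
# {'Microsoft': 'Microsoft', 'Microsoft Inc.': 'Microsoft', 'Apple': 'Apple'}
```

With real data, the `vectors` argument would be the output of `embedding_model.encode`, and the resulting mapping could be applied to the head and tail columns before the graph is built. The pairwise loop is quadratic in the number of entities, which is acceptable for a 100-product demo but would need a smarter neighbor search at scale.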

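To visualize the extracted knowledge graph after entity resolution, we use the networkx Python library. First, we create an empty graph and add each extracted relation to it.

G = nx.Graph()
for _, row in kg_relations.iterrows():
    # Each triple becomes an edge from head to tail, labeled with the relation.
    G.add_edge(row['head'], row['tail'], label=row['relation'])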

To draw the graph, we can use the following code:

pos = nx.spring_layout(G, seed=47, k=0.9)
labels = nx.get_edge_attributes(G, 'label')
plt.figure(figsize=(15, 15))
nx.draw(G, pos, with_labels=True, font_size=10, node_size=700, node_color='lightblue', edge_color='gray', alpha=0.6)
nx.draw_networkx_edge_labels(G, pos, edge_labels=labels, font_size=8, label_pos=0.3, verticalalignment='baseline')
plt.title('Product Knowledge Graph')
plt.show()

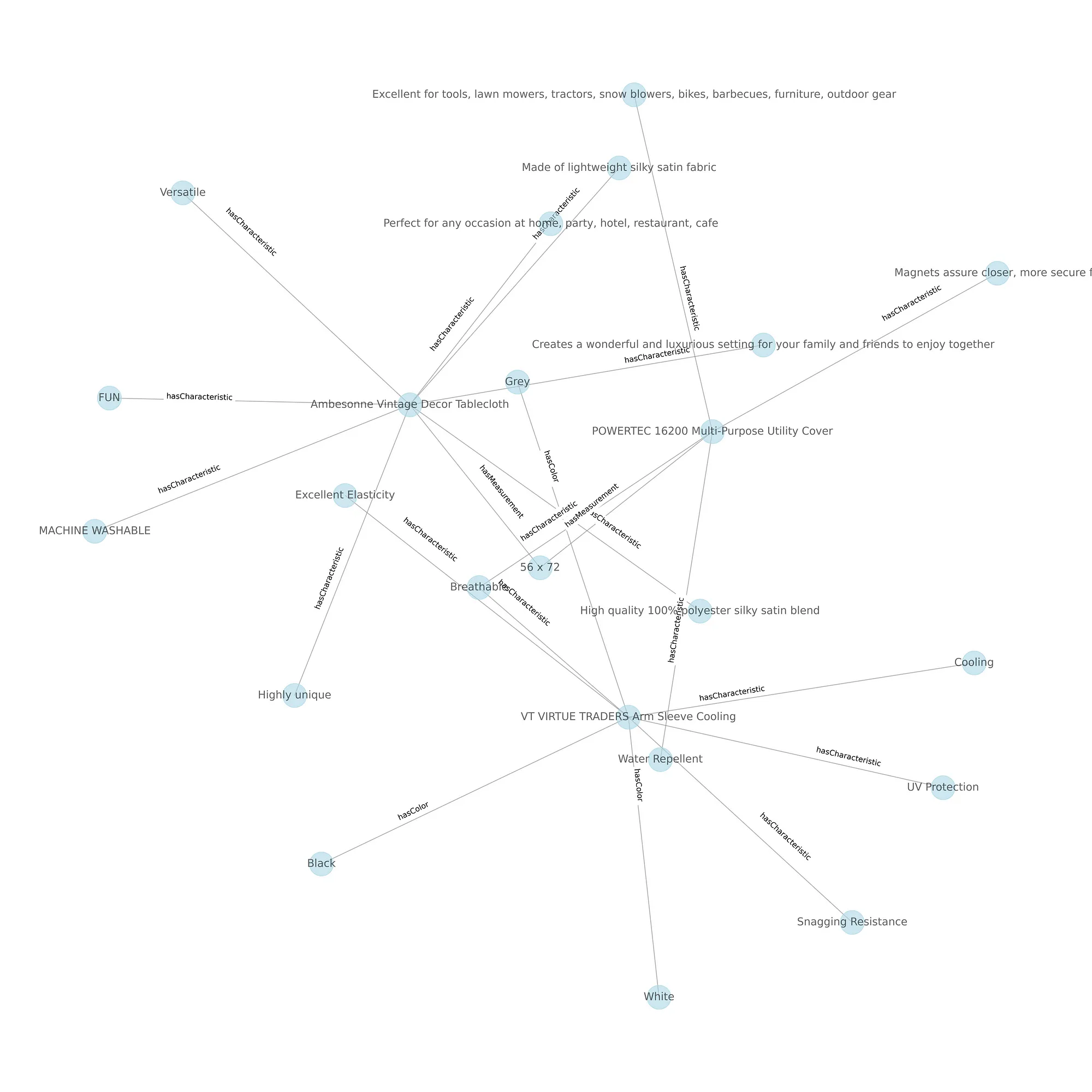

下图显示了从生成的知识图谱中提取出来的子图。

我们可以看到,通过这种方式,我们能够基于它们共享的特性连接多个不同的产品。这对于学习产品间的共同属性、规范化产品规格、使用像Schema.org这样的通用架构来描述网络上的资源,甚至根据产品规格做出产品推荐都是有用的。
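As a small illustration of how shared characteristics can be read off the triples, the sketch below groups head entities by the (relation, tail) pair they point to and keeps the pairs shared by at least two products. The triples and the function `shared_attributes` are made up for this example; in the article's pipeline, the input would be the rows of `kg_relations`.

```python
from collections import defaultdict

# Hypothetical triples in the same shape as the rows of kg_relations.
triples = [
    {"head": "Wall Sticker", "relation": "hasCharacteristic", "tail": "Waterproof"},
    {"head": "Phone Case", "relation": "hasCharacteristic", "tail": "Waterproof"},
    {"head": "Phone Case", "relation": "hasColor", "tail": "Black"},
    {"head": "Desk Lamp", "relation": "hasColor", "tail": "Black"},
    {"head": "Desk Lamp", "relation": "hasColor", "tail": "White"},
]

def shared_attributes(triples):
    """Group heads by (relation, tail) and keep attributes shared by two or more heads."""
    groups = defaultdict(set)
    for t in triples:
        groups[(t["relation"], t["tail"])].add(t["head"])
    return {attr: sorted(heads) for attr, heads in groups.items() if len(heads) > 1}

shared = shared_attributes(triples)
for (relation, tail), heads in shared.items():
    print(relation, tail, heads)
# hasCharacteristic Waterproof ['Phone Case', 'Wall Sticker']
# hasColor Black ['Desk Lamp', 'Phone Case']
```

A grouping like this is one simple starting point for a recommendation rule such as "customers looking at a waterproof product may also be interested in other waterproof products".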

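Conclusion

Most companies have large amounts of unused, unstructured data sitting in their data lakes. Building a knowledge graph to extract insights from this data helps unlock the information trapped in unprocessed, unstructured text corpora, and that information can then be used to make better-informed decisions.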