Accelerating Vector Search Applications with OpenVINO

In this article, we use OpenAI's CLIP for text-to-image and image-to-image search, and compare the speedups of the PyTorch model, the FP16 OpenVINO format, and the INT8 OpenVINO format.

OpenAI's CLIP

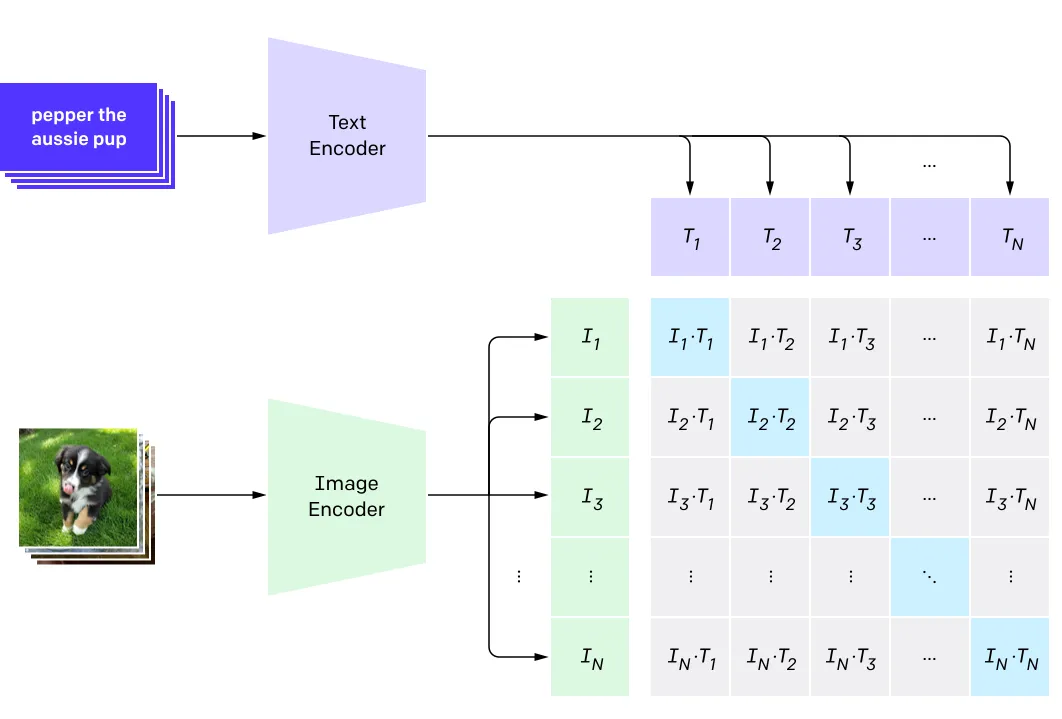

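CLIP is a neural network that can process both images and text.

CLIP is a multimodal model, which means it understands both text and image inputs. It also means it can embed these inputs into a shared multimodal space, where images and texts sit at semantically meaningful positions regardless of their type.

The figure below visualizes the pre-training process.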
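To make the idea of a shared embedding space concrete, here is a minimal NumPy sketch that ranks images for each text query by cosine similarity. The 4-dimensional vectors and their labels are made up for illustration (the real `openai/clip-vit-base-patch16` embeddings have 512 dimensions), and the helper `cosine_similarity` is hypothetical, not part of CLIP's API.

```python
import numpy as np

def cosine_similarity(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Cosine similarity between every row of `a` and every row of `b`."""
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    return a @ b.T

# Hypothetical toy embeddings living in the same (shared) space.
text_embeddings = np.array([
    [0.9, 0.1, 0.0, 0.0],   # "a photo of a dog"
    [0.0, 0.0, 0.8, 0.2],   # "a photo of a car"
])
image_embeddings = np.array([
    [0.8, 0.2, 0.1, 0.0],   # dog image
    [0.1, 0.0, 0.9, 0.3],   # car image
])

scores = cosine_similarity(text_embeddings, image_embeddings)  # shape (2 texts, 2 images)
best_image_per_text = scores.argmax(axis=1)
print(best_image_per_text)
```

Because text and images share one space, the same similarity function serves text-to-image and image-to-image search; only the query vector changes.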

Intel OpenVINO

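The OpenVINO toolkit is a free toolkit that makes it easier to optimize deep learning models from popular frameworks and deploy them with an inference engine on Intel hardware. We will use the OpenVINO CLIP model in FP16 and INT8 formats.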

This article uses OpenVINO to accelerate a LanceDB embedding pipeline.

Implementation

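In this section, we implement and compare the Hugging Face and OpenVINO versions of the CLIP model on the Conceptual Captions dataset.

We start by loading the Conceptual Captions dataset from Hugging Face.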

# https://huggingface.co/datasets/conceptual_captions
import pandas as pd
from datasets import load_dataset

image_data = load_dataset(
    "conceptual_captions", split="train",
)

From this large collection, we take the first 100 images as a sample.

# take the first 100 images
image_data_df = pd.DataFrame(image_data[:100])

Helper functions to validate image URLs and to fetch images and captions from them:

from io import BytesIO

import numpy as np
import requests
from PIL import Image

def check_valid_URLs(image_URL):
    """
    Not all the URLs are valid. This function returns True if the URL is valid, False otherwise.
    """
    try:
        response = requests.get(image_URL, timeout=20)
        Image.open(BytesIO(response.content))
        return True
    except Exception:
        return False

def get_image(image_URL):
    response = requests.get(image_URL, timeout=20)
    image = Image.open(BytesIO(response.content)).convert("RGB")
    return image

def get_image_caption(image_ID):
    return image_data[image_ID]["caption"]

# Transform dataframe
image_data_df["is_valid"] = image_data_df["image_url"].apply(check_valid_URLs)

# remove all the invalid URLs (copy to avoid modifying a view)
image_data_df = image_data_df[image_data_df["is_valid"]].copy()

# download the images; the later steps read them from the "image" column
image_data_df["image"] = image_data_df["image_url"].apply(get_image)

image_data_df.head()

With the dataset ready, we can run CLIP with Hugging Face and with OpenVINO and compare their speed.

PyTorch CLIP with Hugging Face

We start with CLIP from Hugging Face and measure the time required to extract embeddings and to search with the LanceDB vector database.

import torch
from transformers import CLIPModel, CLIPProcessor, CLIPTokenizer

def get_model_info(model_ID, device):
    """
    Loading CLIP from HuggingFace
    """
    # Load the model and move it to the device
    model = CLIPModel.from_pretrained(model_ID).to(device)
    # Get the processor
    processor = CLIPProcessor.from_pretrained(model_ID)
    # Get the tokenizer
    tokenizer = CLIPTokenizer.from_pretrained(model_ID)
    # Return model, processor & tokenizer
    return model, processor, tokenizer

# Set the device
device = "cuda" if torch.cuda.is_available() else "cpu"
model_ID = "openai/clip-vit-base-patch16"
model, processor, tokenizer = get_model_info(model_ID, device)

Next, we write helper functions to extract text and image embeddings.

def get_single_text_embedding(text):
    # Get single text embeddings
    inputs = tokenizer(text, return_tensors="pt").to(device)
    text_embeddings = model.get_text_features(**inputs)
    # convert the embeddings to numpy array
    embedding_as_np = text_embeddings.cpu().detach().numpy()
    return embedding_as_np

def get_all_text_embeddings(df, text_col):
    # Get all the text embeddings
    df["text_embeddings"] = df[str(text_col)].apply(get_single_text_embedding)
    return df

def get_single_image_embedding(my_image):
    # Get single image embeddings
    image = processor(
        text=None,
        images=my_image,
        return_tensors="pt"
    )["pixel_values"].to(device)
    embedding = model.get_image_features(image)
    # convert the embeddings to numpy array
    embedding_as_np = embedding.cpu().detach().numpy()
    return embedding_as_np

def get_all_images_embedding(df, img_column):
    # Get all image embeddings
    df["img_embeddings"] = df[str(img_column)].apply(get_single_image_embedding)
    return df
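One detail worth knowing: `get_text_features` and `get_image_features` return projected embeddings of shape `(1, 512)` that are not L2-normalized, and as far as we know LanceDB ranks results by L2 distance unless another metric is requested. The sketch below uses random vectors in place of real CLIP outputs and a hypothetical helper `to_unit_vector` to show why normalizing first can help: for unit vectors, squared L2 distance equals `2 - 2 * cosine`, so both metrics give the same ranking. The article's pipeline stores the raw embeddings; this is an optional variant, not what the timings were measured with.

```python
import numpy as np

def to_unit_vector(embedding: np.ndarray) -> np.ndarray:
    """Flatten a (1, D) embedding to shape (D,) and scale it to unit L2 norm."""
    vec = np.asarray(embedding, dtype=np.float32).reshape(-1)
    return vec / np.linalg.norm(vec)

# Random stand-ins for CLIP outputs of shape (1, 512).
rng = np.random.default_rng(0)
query = to_unit_vector(rng.normal(size=(1, 512)))
candidates = np.stack([to_unit_vector(rng.normal(size=(1, 512))) for _ in range(5)])

cosine = candidates @ query                              # higher is more similar
squared_l2 = np.sum((candidates - query) ** 2, axis=1)  # lower is more similar

# For unit vectors ||a - b||^2 == 2 - 2 * cos(a, b), so the best match is the same.
print(np.allclose(squared_l2, 2 - 2 * cosine, atol=1e-5))
print(np.argmax(cosine) == np.argmin(squared_l2))
```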

Connect to LanceDB to store the extracted embeddings and run searches:

import lancedb

db = lancedb.connect("./.lancedb")

Extract embeddings for the valid images (83 in our run) with the Hugging Face CLIP model and record how long the extraction takes.

import time

# extract embeddings using Hugging Face
start_time = time.time()
image_data_df = get_all_images_embedding(image_data_df, "image")
print(f"Time Taken to Extracted Embeddings of {len(image_data_df)} Images(in seconds): ", time.time()-start_time)

Result:

Time Taken to Extracted Embeddings of 83 Images(in seconds): 65.51
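The timings in this article use `time.time()`. For measuring elapsed intervals, Python's `time.perf_counter()` is a monotonic, high-resolution clock and is generally the better choice. Below is a minimal sketch of a reusable timer; the `timed` context manager and the `time.sleep` stand-in for the embedding step are illustrative assumptions, not part of the original pipeline.

```python
import time
from contextlib import contextmanager

@contextmanager
def timed(label: str, results: dict):
    """Store the elapsed wall-clock seconds of the enclosed block in results[label]."""
    start = time.perf_counter()
    try:
        yield
    finally:
        results[label] = time.perf_counter() - start

timings = {}
with timed("pt_embeddings", timings):
    time.sleep(0.05)  # stand-in for get_all_images_embedding(image_data_df, "image")
print(f"pt_embeddings took {timings['pt_embeddings']:.2f} s")
```

Collecting every measurement in one dictionary also makes it easy to compare the PyTorch, FP16, and INT8 runs side by side at the end.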

Ingest the images and their embeddings into LanceDB for querying:

def create_and_ingest(image_data_df, table_name):
    """
    Create and Ingest Extracted Embeddings using Hugging Face
    """
    image_url = image_data_df.image_url.tolist()
    image_embeddings = [arr.astype(np.float32).tolist() for arr in image_data_df.img_embeddings.tolist()]
    data = []
    for i in range(len(image_url)):
        temp = {}
        # each embedding has shape (1, 512); keep its single row
        temp['vector'] = image_embeddings[i][0]
        temp['image'] = image_url[i]
        data.append(temp)
    # Create a Table
    tbl = db.create_table(name=table_name, data=data, mode="overwrite")
    return tbl

# create and ingest embeddings for pt model
pt_tbl = create_and_ingest(image_data_df, "pt_table")

Querying the stored embeddings

Time taken to extract embeddings for all images and query the stored embeddings:

# Embed each image and use it as the query
pt_img_results = {}
start_time = time.time()
for i in range(len(image_data_df)):
    img_query = image_data_df.iloc[i].image
    query_embedding = get_single_image_embedding(img_query).tolist()
    # query with the image embedding
    result = pt_tbl.search(query_embedding[0]).limit(4).to_list()
    pt_img_results[str(i)] = result
print(f"Time taken to Extract Img embeddings and searching for {len(image_data_df)} images is ", time.time()-start_time)

Result:

Time taken to Extract Img embeddings and searching for 83 images is 72.34
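Since the tables are created without a vector index, it is reasonable to think of each query as an exhaustive nearest-neighbour scan. The NumPy sketch below models that with squared L2 distance on random stand-in vectors; it is a conceptual model, not LanceDB's implementation, and `exact_top_k` is a hypothetical helper. It also shows a property of this benchmark: because every query image is itself stored in the table, its own vector comes back as the top hit at distance 0.

```python
import numpy as np

def exact_top_k(table_vectors: np.ndarray, query: np.ndarray, k: int = 4):
    """Return (indices, squared L2 distances) of the k stored vectors nearest to `query`."""
    distances = np.sum((table_vectors - query) ** 2, axis=1)  # scan every row
    order = np.argsort(distances)[:k]
    return order, distances[order]

rng = np.random.default_rng(42)
vectors = rng.normal(size=(83, 512)).astype(np.float32)  # one row per stored image
idx, dist = exact_top_k(vectors, vectors[7], k=4)        # like .limit(4)
print(idx[0], dist[0])  # the query image is its own nearest neighbour
```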

CLIP model in FP16 OpenVINO format

Now we switch to the CLIP model in FP16 OpenVINO format and record the time needed to extract embeddings and ingest them into the LanceDB vector database.

!pip install -q --extra-index-url https://download.pytorch.org/whl/cpu gradio "openvino>=2023.2.0" "transformers[torch]>=4.30"

Prepare example inputs for converting the model to OpenVINO format, then save the FP16 CLIP OpenVINO model:

# prepare example inputs for conversion
text = image_data_df.iloc[10].caption
image = image_data_df.iloc[10].image
inputs = processor(text=[text], images=[image], return_tensors="pt", padding=True)

import openvino as ov

# convert the model and save it in OpenVINO IR format
# (save_model compresses weights to FP16 by default)
model.config.torchscript = True
ov_model = ov.convert_model(model, example_input=dict(inputs))
ov.save_model(ov_model, 'clip-vit-base-patch16.xml')

Compile the CLIP OpenVINO model:

import numpy as np
from openvino.runtime import Core

"""
Compiling CLIP in OpenVINO FP16 format
"""

# create OpenVINO core object instance
core = Core()

# compile model for loading on device
compiled_model = core.compile_model(ov_model, device_name="AUTO", config={"PERFORMANCE_HINT": "CUMULATIVE_THROUGHPUT"})

# obtain output tensor for getting predictions
image_embeds = compiled_model.output(0)
logits_per_image_out = compiled_model.output(0)

image_url = image_data_df.image_url.tolist()

def extract_openvino_embeddings(image_data_df):
    """
    Extracting Image Embeddings using OpenVINO
    """
    # use a local list so repeated calls do not accumulate results
    image_embeddings = []
    for i in range(len(image_data_df)):
        image = image_data_df.iloc[i].image
        inputs = processor(images=[image], return_tensors="np", padding=True)
        image_embeddings.append(compiled_model(dict(inputs))["image_embeds"][0])
    return image_embeddings

Extract embeddings for the same images with the CLIP FP16 OpenVINO model and record the extraction time.

import time

# time taken to extract embeddings using CLIP OpenVINO format
start_time = time.time()
image_embeddings = extract_openvino_embeddings(image_data_df)
print(f"Time Taken to Extract Embeddings of {len(image_data_df)} Images(in seconds): ", time.time()-start_time)

Result:

Time Taken to Extract Embeddings of 83 Images(in seconds): 55.12

Ingest the images and their embeddings into LanceDB for querying:

def create_and_ingest_openvino(image_url, image_embeddings, table_name):
    """
    Create and Ingest Extracted Embeddings using an OpenVINO model (FP16 or INT8)
    """
    data = []
    for i in range(len(image_url)):
        temp = {}
        # store a plain float32 list, matching create_and_ingest above
        temp['vector'] = np.asarray(image_embeddings[i], dtype=np.float32).tolist()
        temp['image'] = image_url[i]
        data.append(temp)
    # Create a Table
    tbl = db.create_table(name=table_name, data=data, mode="overwrite")
    return tbl

# create and ingest embeddings for OpenVINO fp16 model
ov_tbl = create_and_ingest_openvino(image_url, image_embeddings, "ov_tbl")

Querying the stored embeddings

Time taken to extract embeddings for all images and query the stored embeddings:

# Embed each image with the FP16 model and use it as the query
ov_img_results = {}
start_time = time.time()
for i in range(len(image_data_df)):
    image = image_data_df.iloc[i].image
    inputs = processor(images=[image], return_tensors="np", padding=True)
    query_embedding = compiled_model(dict(inputs))["image_embeds"][0]
    # query with the image embedding
    result = ov_tbl.search(query_embedding).limit(4).to_list()
    ov_img_results[str(i)] = result
print(f"Time taken to Extract Img embeddings and searching for {len(image_data_df)} images is ", time.time()-start_time)

Result:

Time taken to Extract Img embeddings and searching for 83 images is 62.54

NNCF INT8 quantization

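We apply 8-bit post-training quantization with NNCF (Neural Network Compression Framework) and run inference on the quantized model with the OpenVINO toolkit.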
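As a rough intuition for what 8-bit quantization does, the sketch below maps a float tensor to int8 codes with a single symmetric scale and back again. This is a simplified per-tensor scheme chosen for illustration; NNCF calibrates ranges on the calibration data prepared below and may use per-channel or asymmetric parameters, so its actual choices can differ. The function names are hypothetical.

```python
import numpy as np

def quantize_int8_symmetric(x: np.ndarray):
    """Per-tensor symmetric quantization: x is approximated by scale * q, q in [-127, 127]."""
    scale = float(np.max(np.abs(x))) / 127.0
    q = np.clip(np.round(x / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    """Map int8 codes back to approximate float values."""
    return q.astype(np.float32) * scale

weights = np.array([0.5, -1.27, 0.01, 1.0], dtype=np.float32)
q, scale = quantize_int8_symmetric(weights)
print(q)  # int8 codes, one byte per value instead of four
# Rounding error is at most half a quantization step.
print(np.abs(dequantize(q, scale) - weights).max() <= scale / 2 + 1e-6)
```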

%pip install -q datasets

%pip install -q "nncf>=2.7.0"

import os
from transformers import CLIPProcessor, CLIPModel

fp16_model_path = 'clip-vit-base-patch16.xml'

# load the PyTorch model (for its config) and the processor
model = CLIPModel.from_pretrained("openai/clip-vit-base-patch16")
max_length = model.config.text_config.max_position_embeddings
processor = CLIPProcessor.from_pretrained("openai/clip-vit-base-patch16")

Helper functions for converting the model to INT8 format with NNCF:

import requests
from io import BytesIO
import numpy as np
from PIL import Image
from requests.packages.urllib3.exceptions import InsecureRequestWarning

requests.packages.urllib3.disable_warnings(InsecureRequestWarning)

def check_text_data(data):
    """
    Check if the given data is text-based.
    """
    if isinstance(data, str):
        return True
    if isinstance(data, list):
        return all(isinstance(x, str) for x in data)
    return False

def get_pil_from_url(url):
    """
    Downloads and converts an image from a URL to a PIL Image object.
    """
    response = requests.get(url, verify=False, timeout=20)
    image = Image.open(BytesIO(response.content))
    return image.convert("RGB")

def collate_fn(example, image_column="image_url", text_column="caption"):
    """
    Preprocesses an example by loading and transforming image and text data.
    Checks if the text data in the example is valid by calling the `check_text_data` function.
    Downloads the image specified by the URL in the image_column by calling the `get_pil_from_url` function.
    If there is any error during the download process, returns None.
    Returns the preprocessed inputs with transformed image and text data.
    """
    assert len(example) == 1
    example = example[0]
    if not check_text_data(example[text_column]):
        raise ValueError("Text data is not valid")
    url = example[image_column]
    try:
        image = get_pil_from_url(url)
        w, h = image.size  # PIL reports (width, height)
        if h == 1 or w == 1:
            return None
    except Exception:
        return None
    # prepare inputs for the processor
    inputs = processor(text=example[text_column], images=[image], return_tensors="pt", padding=True)
    if inputs['input_ids'].shape[1] > max_length:
        return None
    return inputs

import torch
from datasets import load_dataset
from tqdm.notebook import tqdm

def prepare_calibration_data(dataloader, init_steps):
    """
    This function prepares calibration data from a dataloader for a specified number of initialization steps.
    It iterates over the dataloader, fetching batches and storing the relevant data.
    """
    data = []
    print(f"Fetching {init_steps} samples for the initialization...")
    counter = 0
    for batch in tqdm(dataloader):
        if counter == init_steps:
            break
        # collate_fn returns None for unusable samples; skip them
        if batch:
            counter += 1
            with torch.no_grad():
                data.append(
                    {
                        "pixel_values": batch["pixel_values"].to("cpu"),
                        "input_ids": batch["input_ids"].to("cpu"),
                        "attention_mask": batch["attention_mask"].to("cpu")
                    }
                )
    return data

def prepare_dataset(opt_init_steps=300, max_train_samples=1000):
    """
    Prepares a vision-text dataset for quantization.
    """
    dataset = load_dataset("conceptual_captions", streaming=True)
    train_dataset = dataset["train"].shuffle(seed=42, buffer_size=max_train_samples)
    dataloader = torch.utils.data.DataLoader(train_dataset, collate_fn=collate_fn, batch_size=1)
    calibration_data = prepare_calibration_data(dataloader, opt_init_steps)
    return calibration_data
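`prepare_dataset` streams Conceptual Captions and calls `shuffle(seed=42, buffer_size=max_train_samples)`. A streaming dataset cannot be shuffled as a whole, so the Hugging Face `datasets` library fills a buffer of `buffer_size` examples and samples randomly from it, replacing each selected example with the next one from the stream. The pure-Python sketch below illustrates only that buffer idea; `buffered_shuffle` is a hypothetical helper, not the library's code (which additionally shuffles the order of the dataset's shards).

```python
import random
from typing import Iterable, Iterator, TypeVar

T = TypeVar("T")

def buffered_shuffle(stream: Iterable[T], buffer_size: int, seed: int) -> Iterator[T]:
    """Approximately shuffle a stream while holding at most `buffer_size` items in memory."""
    if buffer_size < 1:
        raise ValueError("buffer_size must be at least 1")
    rng = random.Random(seed)
    buffer: list = []
    for item in stream:
        if len(buffer) < buffer_size:
            buffer.append(item)  # still filling the buffer
            continue
        # Emit a random buffered item and put the new item in its slot.
        i = rng.randrange(buffer_size)
        yield buffer[i]
        buffer[i] = item
    # Stream exhausted: drain what is left in random order.
    rng.shuffle(buffer)
    yield from buffer

shuffled = list(buffered_shuffle(range(10), buffer_size=3, seed=42))
print(shuffled)
```

A small buffer keeps memory bounded but means early outputs can only come from early items, which is why a larger `buffer_size` gives a more thorough shuffle of the calibration samples.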

Initialize NNCF and save the quantized model:

import logging
import nncf
from openvino.runtime import Core, serialize

core = Core()

# Initialize NNCF
nncf.set_log_level(logging.ERROR)

int8_model_path = 'clip-vit-base-patch16_int8.xml'
calibration_data = prepare_dataset()
ov_model = core.read_model(fp16_model_path)

if len(calibration_data) == 0:
    raise RuntimeError(
        'Calibration dataset is empty. Please check internet connection and try to download images manually.'
    )

# Quantize CLIP fp16 model using NNCF
calibration_dataset = nncf.Dataset(calibration_data)
quantized_model = nncf.quantize(
    model=ov_model,
    calibration_dataset=calibration_dataset,
    model_type=nncf.ModelType.TRANSFORMER,
)

# Saving Quantized model
serialize(quantized_model, int8_model_path)

Compile the INT8 model and define a helper function for feature extraction:

import numpy as np
from openvino.runtime import Core

# create OpenVINO core object instance
core = Core()

# compile model for loading on device
compiled_model = core.compile_model(quantized_model, device_name="AUTO", config={"PERFORMANCE_HINT": "CUMULATIVE_THROUGHPUT"})

# obtain output tensor for getting predictions
image_embeds = compiled_model.output(0)
logits_per_image_out = compiled_model.output(0)

image_url = image_data_df.image_url.tolist()

def extract_quantized_openvino_embeddings(image_data_df, compiled_model):
    """
    Extract embeddings of Images using CLIP Quantized model
    """
    # use a local list so repeated calls do not accumulate results
    image_embeddings = []
    for i in range(len(image_data_df)):
        image = image_data_df.iloc[i].image
        inputs = processor(images=[image], return_tensors="np", padding=True)
        image_embeddings.append(compiled_model(dict(inputs))["image_embeds"][0])
    return image_embeddings

import time

# time taken to extract embeddings using the CLIP INT8 OpenVINO model
start_time = time.time()
image_embeddings = extract_quantized_openvino_embeddings(image_data_df, compiled_model)
print(f"Time Taken to Extracted Embeddings of {len(image_data_df)} Images(in seconds): ", time.time()-start_time)

Result:

Time Taken to Extracted Embeddings of 83 Images(in seconds): 44.40

Ingest the embeddings into LanceDB:

# create and ingest embeddings for OpenVINO int8 model format
qov_tbl = create_and_ingest_openvino(image_url, image_embeddings, "qov_tbl")

Time taken to extract embeddings for all images and query the stored embeddings:

# Embed each image with the INT8 model and use it as the query
# (separate results dict so the FP16 results are not overwritten)
qov_img_results = {}
start_time = time.time()
for i in range(len(image_data_df)):
    image = image_data_df.iloc[i].image
    inputs = processor(images=[image], return_tensors="np", padding=True)
    query_embedding = compiled_model(dict(inputs))["image_embeds"][0]
    # query with the image embedding
    result = qov_tbl.search(query_embedding).limit(4).to_list()
    qov_img_results[str(i)] = result
print(f"Time taken to Extract Img embeddings and searching using Quantized OpenVINO Model for {len(image_data_df)} images is ", time.time()-start_time)

Result:

Time taken to Extract Img embeddings and searching using Quantized Openvino Model for 83 images is 49.49

We now have timing results for every CLIP model format: PyTorch via Hugging Face, FP16 OpenVINO, and INT8 OpenVINO.
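The relative speedups follow directly from the reported timings. This short sketch divides the PyTorch baseline by each format's time; the numbers are copied from the results above and apply only to this 83-image CPU run.

```python
# Timings in seconds for 83 images on CPU, copied from the results above.
reported = {
    "PyTorch (Hugging Face)": {"embed": 65.51, "embed_and_search": 72.34},
    "OpenVINO FP16": {"embed": 55.12, "embed_and_search": 62.54},
    "OpenVINO INT8": {"embed": 44.40, "embed_and_search": 49.49},
}

baseline = reported["PyTorch (Hugging Face)"]

# Speedup = baseline time / format time (values above 1 mean faster than PyTorch).
speedups = {
    name: {task: baseline[task] / seconds for task, seconds in tasks.items()}
    for name, tasks in reported.items()
}

for name, tasks in speedups.items():
    print(f"{name:24s} embed x{tasks['embed']:.2f}   embed+search x{tasks['embed_and_search']:.2f}")
```

By these figures, FP16 OpenVINO is about 1.19x faster than PyTorch for embedding extraction and INT8 about 1.48x; for extraction plus search, the factors are about 1.16x and 1.46x.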

Conclusion

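All of the results above were measured on CPU and compare the PyTorch model with the OpenVINO model formats (FP16/INT8). In our run, extracting embeddings for 83 images took 65.51 s with PyTorch, 55.12 s with FP16 OpenVINO, and 44.40 s with INT8 OpenVINO; extraction plus search took 72.34 s, 62.54 s, and 49.49 s, respectively.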