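Build a RAG Chatbot to Answer Questions About Python Libraries

Access the whole Python universe with Fleet Context

Just recently, Fleet AI launched Fleet Context, a command-line interface (CLI) and an open-source corpus for the Python ecosystem that contains more than 4 million high-quality embeddings of the top 1000+ Python libraries. It currently offers embeddings for the top 1,225 Python libraries, with more libraries and versions added every day.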

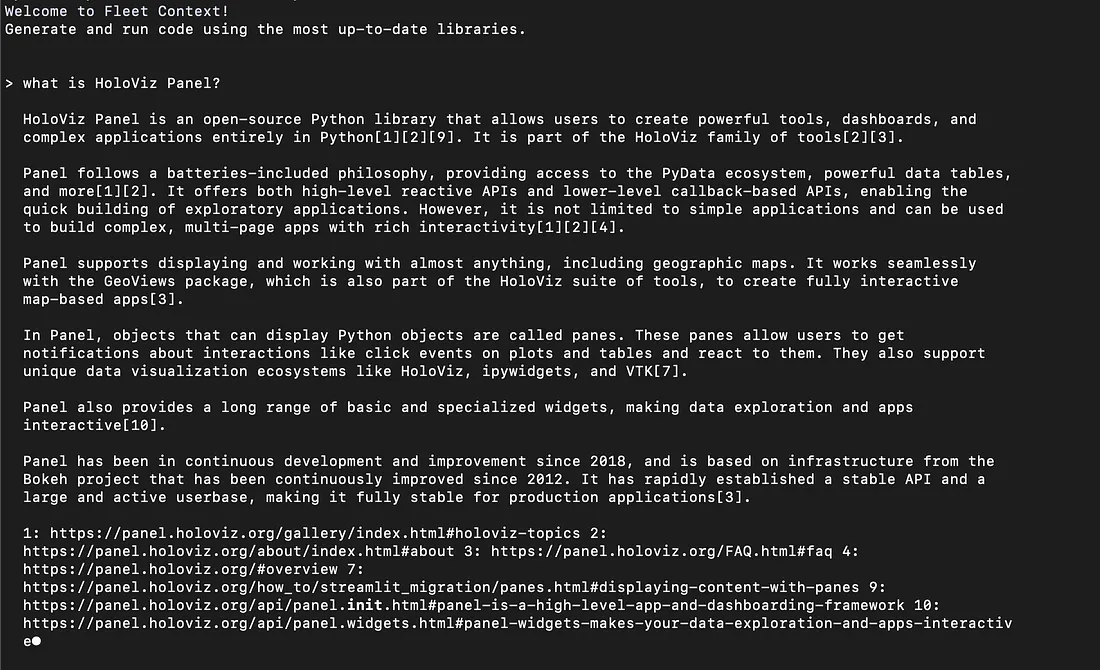

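How can we use Fleet Context to ask questions about these 1000+ Python packages? After installing the package with pip install fleet-context, we can run Fleet Context either from the command-line interface or from a Python console:

Command-line interface

Once the OpenAI environment variable is defined with export OPENAI_API_KEY=xxx, we can run context on the command line and start asking questions about Python libraries. For example, here I asked "What is HoloViz Panel?".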

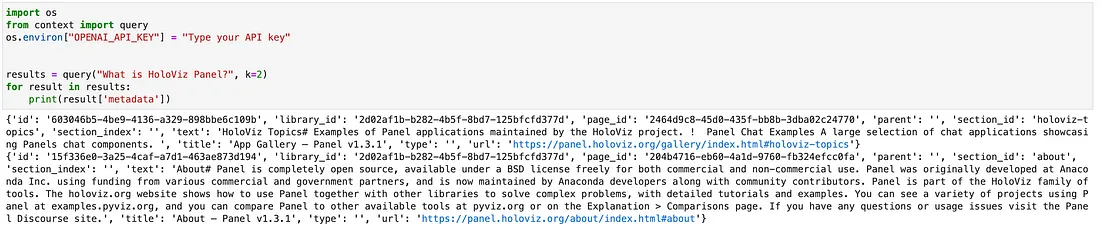
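Both the CLI and the OpenAI client used later in this article read the key from the OPENAI_API_KEY environment variable, so checking for it before launching anything can save a confusing error. The helper below is a minimal sketch; the name require_api_key is made up for illustration and is not part of Fleet Context or the OpenAI library.

```python
import os


def require_api_key(env=None, name="OPENAI_API_KEY"):
    """Return the API key from the environment, or raise a clear error.

    Hypothetical helper for illustration; not a Fleet Context function.
    """
    # Fall back to the real process environment when no mapping is passed in.
    env = os.environ if env is None else env
    key = env.get(name, "").strip()
    if not key:
        raise RuntimeError(f"{name} is not set; run `export {name}=...` first")
    return key
```

Calling require_api_key() at the top of a script fails early with a readable message instead of failing on the first OpenAI request.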

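Python console

We can use the query method from the context library to query embeddings directly from the hosted vector database that Fleet Context provides. When we ask "What is HoloViz Panel?", it returns a set number (k=2) of text chunks related to the Panel documentation.

Note that the returned results include a lot of metadata, such as library_id, page_id, parent, section_id, title, text, and type, which we can use to filter and process the results.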
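To make the shape of the results concrete, here is a small sketch that builds one summary line per returned chunk. It assumes each result is a dictionary with a metadata entry holding the fields listed above, which is the same layout the chatbot code later in this article relies on. The sample_results list is made-up placeholder data so the snippet runs offline; with network access you would pass the output of query("What is HoloViz Panel?", k=2) instead.

```python
def summarize_results(results, width=80):
    """Build one line per chunk: rank, library, title and a short text preview."""
    lines = []
    for rank, result in enumerate(results, start=1):
        meta = result["metadata"]
        # Collapse whitespace and newlines so each preview fits on one line.
        preview = " ".join(meta.get("text", "").split())[:width]
        lines.append(f"{rank}. [{meta.get('library_id', '?')}] {meta.get('title', '')}: {preview}")
    return lines


# Placeholder data that only mimics the assumed result layout.
sample_results = [
    {"metadata": {"library_id": "panel", "title": "Panel", "type": "section",
                  "text": "Panel is an open-source Python library\nfor building dashboards."}},
    {"metadata": {"library_id": "panel", "title": "Getting started", "type": "section",
                  "text": "Install Panel with pip or conda."}},
]

for line in summarize_results(sample_results):
    print(line)
```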

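Build a Panel chatbot to ask questions about Python libraries

Let's build a Panel chatbot UI for Fleet Context in the following three steps:

Import packages

Before we start, let's make sure the required packages are installed, and then import them: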

from context import query

from openai import AsyncOpenAI

import panel as pn

pn.extension()

Define the system prompt

Full credit goes to the Fleet Context team: we took this system prompt from their code and adjusted it slightly:

# taken from fleet context

SYSTEM_PROMPT = """

You are an expert in Python libraries. You carefully provide accurate, factual, thoughtful, nuanced answers, and are brilliant at reasoning. If you think there might not be a correct answer, you say so.

Each token you produce is another opportunity to use computation, therefore you always spend a few sentences explaining background context, assumptions, and step-by-step thinking BEFORE you try to answer a question.

Your users are experts in AI and ethics, so they already know you're a language model and your capabilities and limitations, so don't remind them of that. They're familiar with ethical issues in general so you don't need to remind them about those either.

Your users are also in a CLI environment. You are capable of writing and running code. DO NOT write hypothetical code. ALWAYS write real code that will execute and run end-to-end.

Instructions:

- Be objective, direct. Include literal information from the context, don't add any conclusion or subjective information.

- When writing code, ALWAYS have some sort of output (like a print statement). If you're writing a function, call it at the end. Do not generate the output, because the user can run it themselves.

- ALWAYS cite your sources. Context will be given to you after the text ### Context source_url ### with source_url being the url to the file. For example, ### Context https://example.com/docs/api.html#files ### will have a source_url of https://example.com/docs/api.html#files.

- When you cite your source, please cite it as [num] with `num` starting at 1 and incrementing with each source cited (1, 2, 3, ...). At the bottom, have a newline-separated `num: source_url` at the end of the response. ALWAYS add a new line between sources or else the user won't be able to read it. DO NOT convert links into markdown, EVER! If you do that, the user will not be able to click on the links.

For example:

**Context 1**: https://example.com/docs/api.html#pdfs

I'm a big fan of PDFs.

**Context 2**: https://example.com/docs/api.html#csvs

I'm a big fan of CSVs.

### Prompt ###

What is this person a big fan of?

### Response ###

This person is a big fan of PDFs[1] and CSVs[2].

1: https://example.com/docs/api.html#pdfs

2: https://example.com/docs/api.html#csvs

"""

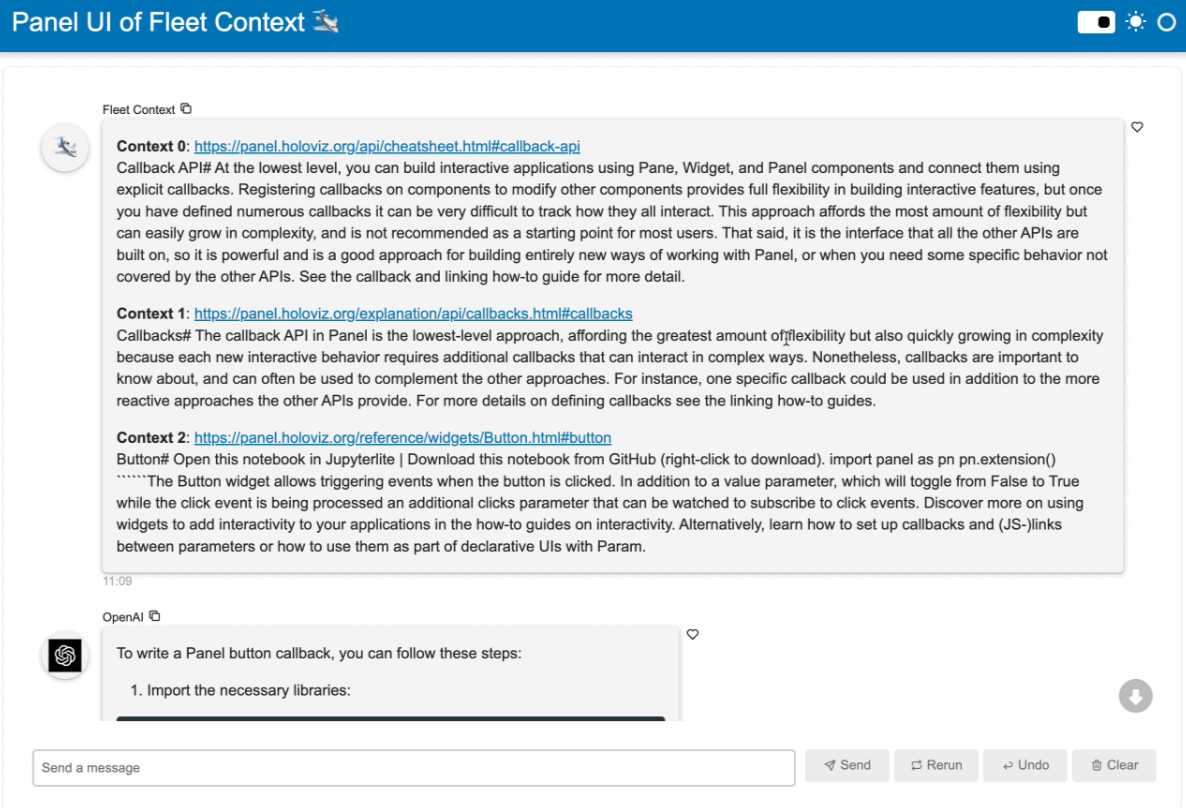
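Because the prompt asks for a fixed citation layout ([num] markers in the text and num: source_url lines at the end), a reply can be checked mechanically. The following is a rough sketch of such a check and is not part of Fleet Context; parse_citations is a hypothetical helper, and the regular expressions assume the model follows the format exactly.

```python
import re


def parse_citations(reply):
    """Return (cited_numbers, sources) found in a model reply.

    cited_numbers: set of ints used as [num] markers in the text.
    sources: dict mapping num -> source_url taken from `num: url` lines.
    """
    cited = {int(n) for n in re.findall(r"\[(\d+)\]", reply)}
    sources = {}
    for line in reply.splitlines():
        match = re.fullmatch(r"\s*(\d+):\s*(https?://\S+)\s*", line)
        if match:
            sources[int(match.group(1))] = match.group(2)
    return cited, sources


# The example response from the system prompt above.
reply = (
    "This person is a big fan of PDFs[1] and CSVs[2].\n\n"
    "1: https://example.com/docs/api.html#pdfs\n\n"
    "2: https://example.com/docs/api.html#csvs\n"
)
cited, sources = parse_citations(reply)
# Markers that appear in the text but have no matching source line.
missing = sorted(cited - set(sources))
print("cited:", sorted(cited), "missing sources:", missing)
```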

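Define the chat interface

The key component for defining a Panel chat interface is pn.chat.ChatInterface. In particular, its callback defines how the chatbot responds; here that callback is the answer function.

In this function, we:

- initialize the message list with the system prompt

- use Fleet Context's query method to retrieve the k=3 text chunks most relevant to the question

- format the retrieved text chunks, their URLs, and the user message into the message format OpenAI expects

- pass the message history to an OpenAI model

- stream the response from OpenAI asynchronously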
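The formatting step in the list above can be looked at in isolation. The sketch below builds the context string and a chat-completions style message list from retrieved chunks. format_context, build_messages, and the sample chunk are illustrative assumptions; the actual callback lets Panel's chat history supply the user messages rather than assembling them by hand.

```python
def format_context(chunks):
    """Join retrieved chunks into the '**Context N**: url' layout the prompt expects."""
    parts = []
    for i, chunk in enumerate(chunks, start=1):
        meta = chunk["metadata"]
        parts.append(f"**Context {i}**: {meta['url']}\n{meta['text']}")
    return "\n\n".join(parts)


def build_messages(system_prompt, chunks, question):
    """Return a list of role/content dicts: system prompt, question, then context."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": question},
        # The retrieved context is sent under the "user" role as well.
        {"role": "user", "content": format_context(chunks)},
    ]


# Made-up chunk that mirrors the metadata keys used in this article.
chunks = [{"metadata": {"url": "https://example.com/docs/api.html#pdfs",
                        "text": "I'm a big fan of PDFs."}}]
messages = build_messages(
    "You are an expert in Python libraries.", chunks, "What is this person a big fan of?"
)
for m in messages:
    print(m["role"], "->", m["content"][:40])
```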

```python
# Assumption: MODEL is not defined in the original snippet; this name is a
# placeholder, so use any chat model your OpenAI account can access.
MODEL = "gpt-4o-mini"

# AsyncOpenAI reads the OPENAI_API_KEY environment variable by default.
client = AsyncOpenAI()


async def answer(contents, user, instance):
    # start with system prompt
    messages = [{"role": "system", "content": SYSTEM_PROMPT}]

    # add context to the user input
    context = ""
    fleet_responses = query(contents, k=3)
    # number contexts from 1 to match the citation format in the system prompt
    for i, response in enumerate(fleet_responses, start=1):
        context += f"\n\n**Context {i}**: {response['metadata']['url']}\n{response['metadata']['text']}"
    instance.send(context, avatar="🛩️", user="Fleet Context", respond=False)

    # get history of messages (skipping the intro message)
    # and serialize fleet context messages as "user" role
    messages.extend(
        instance.serialize(role_names={"user": ["user", "Fleet Context"]})[1:]
    )

    openai_response = await client.chat.completions.create(
        model=MODEL, messages=messages, temperature=0.2, stream=True
    )

    # stream the reply, yielding the accumulated text so the UI updates in place
    message = ""
    async for chunk in openai_response:
        token = chunk.choices[0].delta.content
        if token:
            message += token
            yield message


intro_message = pn.chat.ChatMessage("Ask me anything about Python libraries!", user="System")
chat_interface = pn.chat.ChatInterface(intro_message, callback=answer, callback_user="OpenAI")
```

Format everything in a template

Finally, we put everything into a template and run panel serve app.py on the command line to get the final app:

template = pn.template.FastListTemplate(main=[chat_interface], title="Panel UI of Fleet Context 🛩️")

template.servable()

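Now you should have a working AI chatbot that can answer questions about Python libraries. If you want to add more complex RAG (retrieval-augmented generation) features, LlamaIndex already integrates with Fleet Context.

Conclusion

In this article, we used Fleet Context and Panel to create a RAG chatbot with access to the Python universe. We can ask any question about the top 1000+ Python packages and get answers together with the supporting text retrieved from the packages' documentation.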