Exploring the Intricacies of Generative Language Models: A Focus on GLaM

Published December 19, 2023 by alex


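Introduction

In this article, GLaM stands for "Generative Language Model". It belongs to the broader class of machine learning models designed to understand, interpret, and generate human language. These models are trained on very large datasets of text drawn from many sources, which lets them learn language patterns, context, and usage. Generative language models are a significant development in artificial intelligence, particularly in natural language processing (NLP). This article covers the fundamentals of GLaM, its technical foundations, applications, challenges, and potential future developments.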

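Technical Foundations

- Training and architecture: GLaM is typically trained on large amounts of text data using deep learning. It uses neural network architectures such as Transformers, which have proven highly effective at modeling context and sequence in language.

- Algorithms and techniques: It relies on advanced algorithms to process and generate language. These may include attention mechanisms, which help the model focus on the relevant parts of the text, and transfer learning, which lets the model apply knowledge gained on one task to another.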
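To make the idea of an attention mechanism more concrete, here is a minimal sketch of scaled dot-product attention, the form commonly used in Transformers. It is written in plain Python for readability; the function names (`softmax`, `attention`) and the toy token vectors are assumptions chosen for illustration, not code from any particular GLaM implementation.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    shift = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # The output is the weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy token vectors (made-up numbers) attending to each other.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row shows how strongly one token attends to every token in the sequence. The weights in a row sum to 1, and tokens whose vectors are more similar to the query receive larger weights, which is the sense in which attention lets a model "focus" on relevant parts of the text.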

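Applications

- Natural language understanding and generation: GLaM is good at grasping context and producing coherent, context-appropriate responses. This makes it useful in applications such as chatbots, virtual assistants, and customer service automation.

- Content creation: It can help produce written content such as articles, reports, and even creative writing.

- Language translation: Because it captures the nuances of language, GLaM can preserve the subtleties and context of the original text when translating.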

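Challenges

- Ethical considerations: As with any AI technology, there are concerns about bias, privacy, and misuse. Ensuring that GLaM is fair and unbiased is a major challenge.

- Computational resources: Training and running advanced models like GLaM requires substantial computational resources, which can be a barrier to widespread adoption.

- Limits of understanding: GLaM is powerful but not infallible. It can produce incorrect or nonsensical responses, especially in complex or ambiguous situations.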

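Future Developments

- Improved efficiency: Ongoing research aims to make models like GLaM more efficient, reduce their environmental impact, and make them accessible to more users.

- Deeper understanding: Future versions of GLaM may better capture subtle aspects of human communication, such as emotion and sarcasm.

- Broader applications: As the technology matures, we can expect GLaM to find uses in more fields, including education, healthcare, and entertainment.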

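Code

Building a complete GLaM (Generative Language Model) from scratch in Python, with data visualization, is a complex task that involves several steps. To give you an overview, I will outline a basic framework and provide some example code. Keep in mind, however, that developing a full-fledged language model like GLaM is usually beyond the scope of a single script or small project, and typically requires substantial computational resources.

Step Overview

- Create a synthetic dataset: generate or simulate a dataset that stands in for natural language.

- Preprocess the data: prepare the text data for training.

- Build the model: design the neural network architecture (a small LSTM in this example).

- Train the model: feed the data into the model for training.

- Evaluate and plot the results: assess the model's performance and visualize the results.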

import random

import string

import numpy as np

import matplotlib.pyplot as plt

from keras.models import Sequential

from keras.layers import Embedding, LSTM, Dense

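# Note: this legacy import assumes a Keras 2.x-style install; newer Keras releases may not expose keras.preprocessing.text.
from keras.preprocessing.text import Tokenizer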

from keras.preprocessing.sequence import pad_sequences

# Function to generate synthetic text

def generate_synthetic_text(length=1000):
    # Build a string of random letters, digits, spaces, and sentence punctuation
    return ''.join(random.choice(string.ascii_letters + " " + string.digits + ".?!") for _ in range(length))

# Generate a synthetic dataset

synthetic_dataset = [generate_synthetic_text(100) for _ in range(100)]

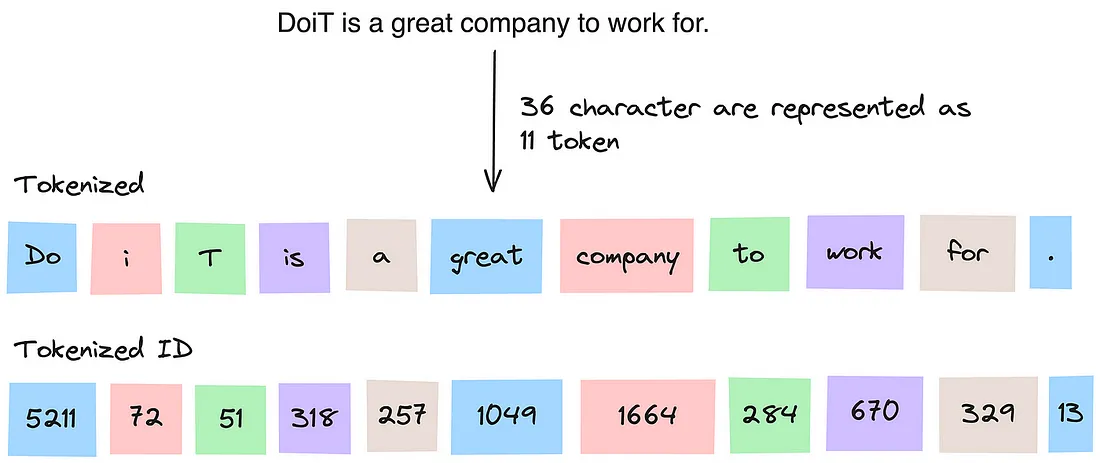

# Preprocess the data

tokenizer = Tokenizer()

tokenizer.fit_on_texts(synthetic_dataset)

sequences = tokenizer.texts_to_sequences(synthetic_dataset)

# Pad sequences for uniform length

max_sequence_length = max([len(seq) for seq in sequences])

padded_sequences = pad_sequences(sequences, maxlen=max_sequence_length)

# Create dummy binary labels

labels = np.random.randint(0, 2, size=(len(padded_sequences),))

# Define a simple LSTM model

model = Sequential()

model.add(Embedding(input_dim=len(tokenizer.word_index) + 1, output_dim=100))

model.add(LSTM(128))

model.add(Dense(1, activation='sigmoid'))

# Compile the model

model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])

# Train the model

history = model.fit(padded_sequences, labels, epochs=10, batch_size=32, validation_split=0.2)

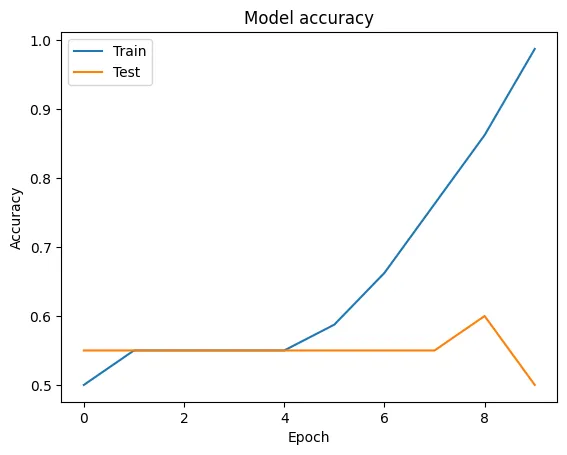

# Plotting training & validation accuracy values

plt.plot(history.history['accuracy'])

plt.plot(history.history['val_accuracy'])

plt.title('Model accuracy')

plt.ylabel('Accuracy')

plt.xlabel('Epoch')

plt.legend(['Train', 'Validation'], loc='upper left')

plt.show()

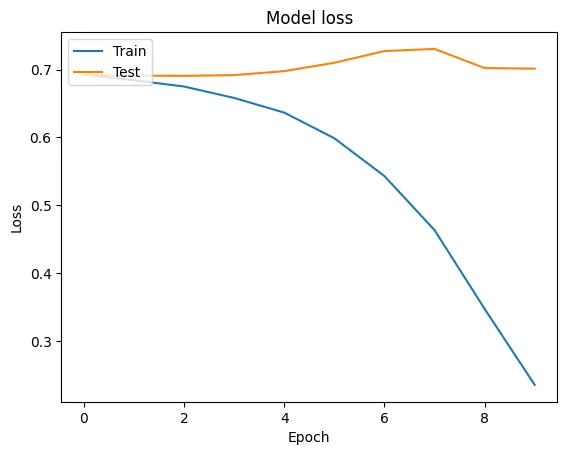

# Plotting training & validation loss values

plt.plot(history.history['loss'])

plt.plot(history.history['val_loss'])

plt.title('Model loss')

plt.ylabel('Loss')

plt.xlabel('Epoch')

plt.legend(['Train', 'Validation'], loc='upper left')

plt.show()

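- Actually implementing a model like GLaM is far more complex than this and involves extensive fine-tuning, very large datasets, and substantial computational resources.

- The code above is highly simplified and serves only as an illustration.

- Real language models also need to handle many other aspects, such as understanding context and attention mechanisms.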
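One gap worth spelling out: the script above trains a binary classifier on random labels, whereas a generative model is usually trained to predict the next token from the preceding context. The sketch below shows, under simple assumptions, how text could be turned into (context, next character) training pairs. The helper name `make_next_char_pairs`, the `context_size` parameter, and the sample string are hypothetical and chosen only for illustration.

```python
def make_next_char_pairs(text, context_size=4):
    """Slide a window over the text: each context predicts the next character."""
    vocab = sorted(set(text))
    char_to_id = {ch: i for i, ch in enumerate(vocab)}
    ids = [char_to_id[ch] for ch in text]
    pairs = []
    for i in range(len(ids) - context_size):
        context = ids[i:i + context_size]   # input: context_size character ids
        target = ids[i + context_size]      # label: id of the character that follows
        pairs.append((context, target))
    return pairs, vocab

pairs, vocab = make_next_char_pairs("hello world", context_size=4)
print(len(vocab), "distinct characters,", len(pairs), "training pairs")

# Decode the first pair back into characters to inspect it.
context, target = pairs[0]
print("".join(vocab[i] for i in context), "->", vocab[target])
```

With pairs like these, one plausible adaptation of the model above would be to replace the final `Dense(1, activation='sigmoid')` layer with a `Dense(len(vocab), activation='softmax')` layer and train with `sparse_categorical_crossentropy`, so that the network outputs a probability for each possible next character. That is a suggestion under the stated assumptions, not the method used in the original script.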

Training output of the LSTM classifier (`model.fit`):

Epoch 1/10

3/3 [==============================] - 3s 302ms/step - loss: 0.6926 - accuracy: 0.5000 - val_loss: 0.6922 - val_accuracy: 0.5500

Epoch 2/10

3/3 [==============================] - 0s 42ms/step - loss: 0.6842 - accuracy: 0.5500 - val_loss: 0.6910 - val_accuracy: 0.5500

Epoch 3/10

3/3 [==============================] - 0s 44ms/step - loss: 0.6749 - accuracy: 0.5500 - val_loss: 0.6907 - val_accuracy: 0.5500

Epoch 4/10

3/3 [==============================] - 0s 38ms/step - loss: 0.6581 - accuracy: 0.5500 - val_loss: 0.6918 - val_accuracy: 0.5500

Epoch 5/10

3/3 [==============================] - 0s 35ms/step - loss: 0.6365 - accuracy: 0.5500 - val_loss: 0.6976 - val_accuracy: 0.5500

Epoch 6/10

3/3 [==============================] - 0s 34ms/step - loss: 0.5987 - accuracy: 0.5875 - val_loss: 0.7099 - val_accuracy: 0.5500

Epoch 7/10

3/3 [==============================] - 0s 44ms/step - loss: 0.5429 - accuracy: 0.6625 - val_loss: 0.7272 - val_accuracy: 0.5500

Epoch 8/10

3/3 [==============================] - 0s 36ms/step - loss: 0.4632 - accuracy: 0.7625 - val_loss: 0.7304 - val_accuracy: 0.5500

Epoch 9/10

3/3 [==============================] - 0s 34ms/step - loss: 0.3471 - accuracy: 0.8625 - val_loss: 0.7022 - val_accuracy: 0.6000

Epoch 10/10

3/3 [==============================] - 0s 35ms/step - loss: 0.2349 - accuracy: 0.9875 - val_loss: 0.7012 - val_accuracy: 0.5000
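One way to read the training log is against a chance-level baseline. Because the labels in the script are drawn at random, there is no real signal to learn, so validation accuracy would be expected to stay near 50% even as training accuracy climbs, which suggests the model is memorizing the training set. The sketch below is an illustration and not part of the original script: it rebuilds a comparable 80/20 split of 100 random labels with Python's `random` module (the seed and variable names are assumptions) and computes the accuracy of always predicting the majority class.

```python
import random

random.seed(0)  # fixed seed so the sketch is repeatable (an arbitrary choice)

# Random binary labels, mirroring the 100 samples and validation_split=0.2 used above.
labels = [random.randint(0, 1) for _ in range(100)]
train_labels, val_labels = labels[:80], labels[80:]

# Majority-class baseline: always predict the most common training label.
majority = 1 if sum(train_labels) * 2 >= len(train_labels) else 0
baseline_acc = sum(1 for y in val_labels if y == majority) / len(val_labels)

print(f"majority class: {majority}, validation baseline accuracy: {baseline_acc:.2f}")
```

If a trained model's validation accuracy is not clearly above a baseline like this one, the rising training accuracy alone should not be taken as evidence that it has learned anything that generalizes.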

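Conclusion

GLaM represents an important milestone on the path toward more sophisticated artificial intelligence. Its ability to understand and generate human language has far-reaching implications across many fields. Challenges remain, particularly around ethics and resources, but GLaM's potential to change how we interact with machines and process information is considerable. As the technology develops, it will be fascinating to see how GLaM continues to shape the future landscape of artificial intelligence and machine learning.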

Source: https://medium.com/the-modern-scientist/exploring-the-intricacies-of-generative-language-models-a-focus-on-glam-2da1a71e8d68
