The RAG Value Chain: Retrieval Strategies for Augmenting Large Language Models with Information

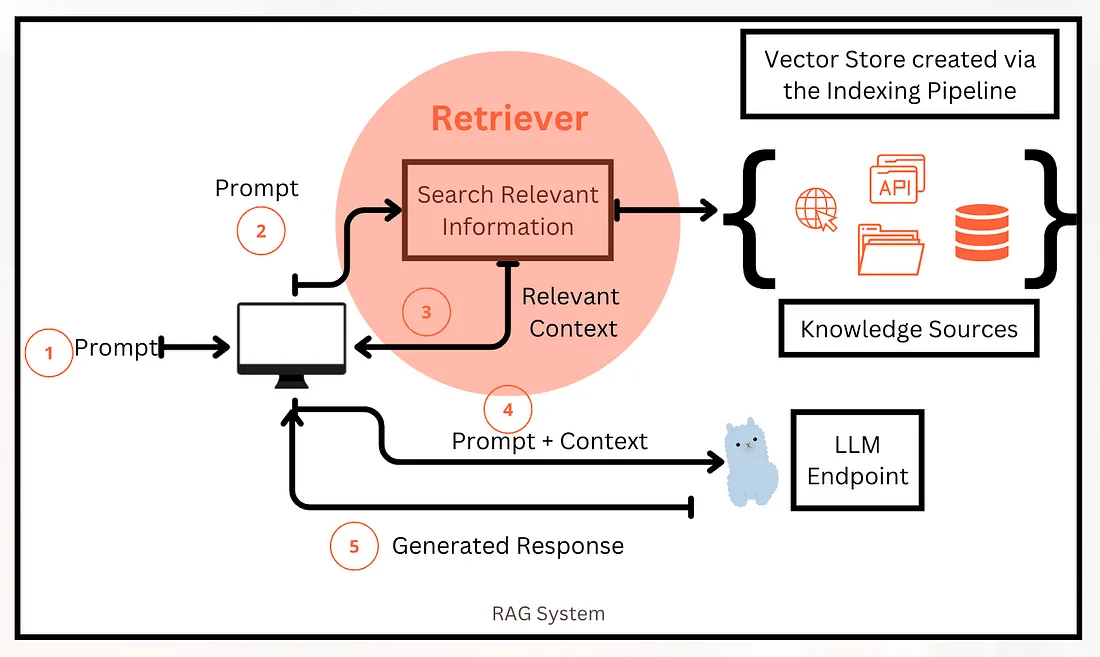

The most critical step in the entire RAG value chain is searching for and retrieving relevant information (referred to as documents).

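When a user enters a query or prompt, this system (the retriever) is responsible for accurately fetching the right pieces of information needed to answer it.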

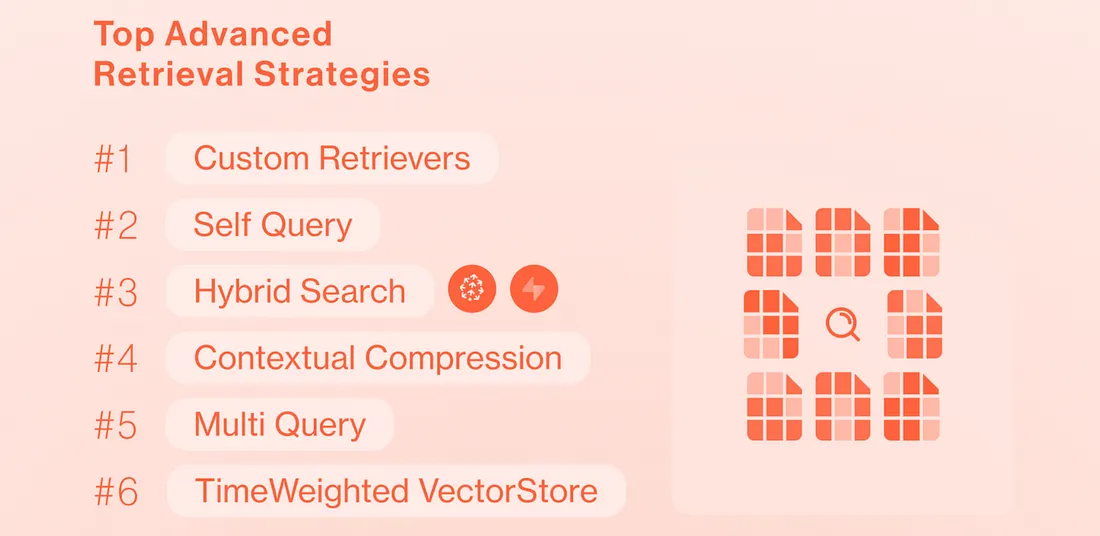

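According to LangChain's State of AI 2023 survey, the most commonly used retrieval strategies include self-query, contextual compression, multi-query, and time-weighted retrieval.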

Popular retrieval methods

Similarity search

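The similarity search capability of a vector database forms the backbone of the retriever. Similarity is computed from the distance between the embedding vector of the input and the embedding vectors of the documents.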
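The following minimal pure-Python sketch makes the distance computation concrete. It assumes cosine similarity as the measure; vector stores may instead use Euclidean distance or inner product. Toy three-dimensional vectors stand in for real embeddings, and the document ids and values are made up for illustration.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def similarity_search(query_vec: list[float], doc_vecs: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the ids of the k documents whose embeddings are most similar to the query."""
    scored = [(cosine_similarity(query_vec, vec), doc_id) for doc_id, vec in doc_vecs.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [doc_id for _, doc_id in scored[:k]]


# Toy "embeddings"; real embedding models produce hundreds of dimensions.
doc_vecs = {
    "llm_os": [0.9, 0.1, 0.0],
    "tokenizer": [0.1, 0.9, 0.0],
    "scaling_laws": [0.7, 0.0, 0.3],
}
query_vec = [1.0, 0.0, 0.0]
print(similarity_search(query_vec, doc_vecs))  # → ['llm_os', 'scaling_laws']
```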

Maximal marginal relevance (MMR)

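MMR addresses the problem of redundancy in retrieval. Instead of ranking by relevance alone, MMR judges each document by the new information it contributes given the results already selected. In this way it tries to reduce redundancy in the results while keeping them relevant to the query.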
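The sketch below shows the usual greedy MMR loop. It assumes cosine similarity and toy 2-D vectors. At each step it picks the candidate d that maximizes λ·sim(d, q) − (1 − λ)·max sim(d, s), where the max runs over the documents s already selected. The parameter name `lambda_mult` mirrors the argument of LangChain's `max_marginal_relevance_search`; the ids and vectors are hypothetical.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def mmr(query_vec: list[float], candidates: dict[str, list[float]], k: int = 2, lambda_mult: float = 0.5) -> list[str]:
    """Greedy maximal marginal relevance over a dict of id -> vector."""
    selected: list[str] = []
    remaining = dict(candidates)
    while remaining and len(selected) < k:
        best_id, best_score = None, -math.inf
        for doc_id, vec in remaining.items():
            relevance = cosine(query_vec, vec)
            # Redundancy: similarity to the closest document already selected.
            redundancy = max((cosine(vec, candidates[s]) for s in selected), default=0.0)
            score = lambda_mult * relevance - (1 - lambda_mult) * redundancy
            if score > best_score:
                best_id, best_score = doc_id, score
        selected.append(best_id)
        del remaining[best_id]
    return selected


candidates = {
    "a": [1.0, 0.0],
    "a_dup": [0.99, 0.01],  # near-duplicate of "a"
    "b": [0.6, 0.8],
}
query = [1.0, 0.1]
print(mmr(query, candidates, k=2))  # → ['a_dup', 'b']
```

With `lambda_mult=1.0` the redundancy term vanishes and the result reduces to plain similarity ranking (`['a_dup', 'a']` here). At 0.5, the near-duplicate `a` is passed over in favour of the less similar but novel `b`.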

Multi-query retrieval

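Multi-query retrieval uses a language model to automate prompt tuning. It generates several differently phrased queries from the user input, retrieves relevant documents for each, and combines the results. This overcomes the limitations of a single phrasing and yields a more comprehensive result set. The approach aims to improve retrieval performance by considering multiple perspectives on the same question.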
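The flow can be sketched without an LLM by making two substitutions. A hard-coded function replaces the query-generation step, and a toy word-overlap retriever replaces the vector store. All function names here are hypothetical. LangChain's `MultiQueryRetriever` performs the same retrieve-per-variant and unique-union steps with a real LLM and retriever.

```python
def generate_query_variants(query: str) -> list[str]:
    """Hypothetical stand-in for the LLM step: the original query plus hand-written rewrites."""
    return [
        query,
        "llm as the kernel of an operating system",
        "language models compared to an os",
    ]


def keyword_retriever(query: str, corpus: dict[str, str], k: int = 1) -> list[str]:
    """Toy retriever: ranks documents by the number of words they share with the query."""
    words = set(query.lower().split())
    ranked = sorted(corpus, key=lambda doc_id: -len(words & set(corpus[doc_id].lower().split())))
    return ranked[:k]


def multi_query_retrieve(query: str, corpus: dict[str, str]) -> list[str]:
    """Run every query variant and return the unique union of results, in first-seen order."""
    union: list[str] = []
    for variant in generate_query_variants(query):
        for doc_id in keyword_retriever(variant, corpus):
            if doc_id not in union:
                union.append(doc_id)
    return union


corpus = {
    "d1": "the llm is the kernel process of a new operating system",
    "d2": "language models can be compared to an os that manages memory",
    "d3": "tokenization splits text into tokens",
}
query = "What did Andrej say about LLM operating system?"
print(keyword_retriever(query, corpus))     # single query → ['d1']
print(multi_query_retrieve(query, corpus))  # all variants → ['d1', 'd2']
```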

Contextual compression

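Sometimes the relevant information is buried in a long document full of extraneous content. Contextual compression helps by compressing each retrieved document down to only the parts that are relevant to the query.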
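The sketch below illustrates the idea with a simple keyword filter standing in for the compressor. It keeps only the sentences of a document that share a content word with the query. The stopword list and function name are hypothetical. The `LLMChainExtractor` used in the example later in this article instead asks an LLM to extract the relevant passages.

```python
import re

# Hypothetical, deliberately tiny stopword list for this example.
STOPWORDS = {"what", "did", "say", "about", "the", "a", "an", "of", "is", "to"}


def compress(document: str, query: str) -> str:
    """Keep only the sentences that share at least one content word with the query."""
    query_terms = {w for w in re.findall(r"[a-z]+", query.lower()) if w not in STOPWORDS}
    sentences = re.split(r"(?<=[.!?])\s+", document.strip())
    kept = [s for s in sentences if query_terms & set(re.findall(r"[a-z]+", s.lower()))]
    return " ".join(kept)


doc = (
    "The talk opens with a short history of neural networks. "
    "Andrej describes the LLM as the kernel of an emerging operating system. "
    "He closes with a few remarks on security."
)
print(compress(doc, "What did Andrej say about LLM operating system?"))
# → Andrej describes the LLM as the kernel of an emerging operating system.
```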

Multi-vector retrieval

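Sometimes it makes sense to store multiple vectors per document: for example, one for a chapter, one for its summary, and one for each of a few quotations. Retrieval becomes more effective because the query can match any of these different kinds of embedded information.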
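The following sketch shows one way to search several vectors per document. It assumes toy 2-D vectors and cosine similarity. It also assumes a document's score is the best score among its vectors, which is one reasonable aggregation and not necessarily what any particular library does. Document ids and vector kinds are hypothetical.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


# Several embeddings per document; every entry remembers the document it belongs to.
vector_index = [
    ("chapter_1", "full_text", [0.2, 0.9]),
    ("chapter_1", "summary", [0.8, 0.3]),
    ("chapter_2", "full_text", [0.6, 0.6]),
]


def multi_vector_search(query_vec: list[float], index: list[tuple], k: int = 2) -> list[str]:
    """Score each document by its best-matching vector, then rank the documents."""
    best: dict[str, float] = {}
    for doc_id, _kind, vec in index:
        best[doc_id] = max(cosine(query_vec, vec), best.get(doc_id, -1.0))
    return sorted(best, key=best.get, reverse=True)[:k]


print(multi_vector_search([1.0, 0.0], vector_index))  # → ['chapter_1', 'chapter_2']
```

Here `chapter_1` ranks first only because of its summary vector. If only the full-text vectors are indexed, `chapter_2` comes out on top.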

Parent document retrieval

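Splitting documents for retrieval poses a dilemma. Small chunks capture meaning better in their embeddings, but if they are too short, the context is lost. Parent document retrieval strikes a middle ground by indexing small chunks. At retrieval time it finds the matching small chunks, then uses their parent IDs to fetch the larger documents they came from.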
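The sketch below shows the parent-document pattern, with word-based child chunks and a word-overlap search standing in for embeddings. The small chunks are searched, and each match's `parent_id` is used to return the full source document. All names here are hypothetical.

```python
def split_into_children(parents: dict[str, str], child_size: int = 5) -> list[dict]:
    """Split each parent into small word chunks that keep a pointer to their parent id."""
    children = []
    for parent_id, text in parents.items():
        words = text.split()
        for start in range(0, len(words), child_size):
            children.append({"parent_id": parent_id, "text": " ".join(words[start:start + child_size])})
    return children


def search_children(query: str, children: list[dict], k: int = 1) -> list[dict]:
    """Toy search over the small chunks: rank by words shared with the query."""
    words = set(query.lower().split())
    return sorted(children, key=lambda c: -len(words & set(c["text"].lower().split())))[:k]


def parent_document_retrieve(query: str, parents: dict[str, str], children: list[dict], k: int = 1) -> list[str]:
    """Match on small chunks, then return the deduplicated full parent documents."""
    parent_ids: list[str] = []
    for child in search_children(query, children, k):
        if child["parent_id"] not in parent_ids:
            parent_ids.append(child["parent_id"])
    return [parents[pid] for pid in parent_ids]


parents = {
    "intro": "the talk gives a busy person an introduction to large language models and how they are trained",
    "os": "andrej frames the llm as the kernel of a new operating system that coordinates tools and memory",
}
children = split_into_children(parents)
query = "llm operating system"
print(search_children(query, children)[0]["text"])         # the small chunk that matched
print(parent_document_retrieve(query, parents, children))  # the full parent it came from
```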

Self-query

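A self-querying retriever is a system that can, in effect, query itself. When you ask a question in natural language, a query-constructing step (an LLM) converts it into a structured query. The retriever then uses that structured query to search its stored information. So it does not merely compare your question with the documents' content. It also filters on specific details in the documents' metadata that your question mentions, making the search more efficient and accurate.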
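The sketch below shows the two halves of a self-query. A hypothetical `StructuredQuery` type holds the structured query, and a hard-coded `construct_query` function stands in for the LLM that would normally produce it. The metadata filter narrows the candidates, then the semantic part ranks what remains. The documents and metadata fields are made up.

```python
from dataclasses import dataclass, field


@dataclass
class StructuredQuery:
    """Hypothetical structured form of a natural-language question."""
    query: str  # semantic part, compared against document content
    metadata_filter: dict = field(default_factory=dict)  # exact-match constraints on metadata


def construct_query(question: str) -> StructuredQuery:
    """Stand-in for the query-constructing LLM; the output is hard-coded for this example."""
    return StructuredQuery(query="operating system", metadata_filter={"speaker": "Andrej", "year": 2023})


def self_query_search(question: str, docs: list[dict]) -> list[str]:
    sq = construct_query(question)
    terms = set(sq.query.lower().split())
    # 1) Structured part: keep only documents whose metadata satisfies every filter.
    matches = [d for d in docs if all(d["metadata"].get(k) == v for k, v in sq.metadata_filter.items())]
    # 2) Semantic part: rank the survivors by word overlap with the query text.
    matches.sort(key=lambda d: -len(terms & set(d["content"].lower().split())))
    return [d["content"] for d in matches]


docs = [
    {"content": "the llm as an operating system", "metadata": {"speaker": "Andrej", "year": 2023}},
    {"content": "an operating system kernel tutorial", "metadata": {"speaker": "Guest", "year": 2023}},
    {"content": "training tokenizers", "metadata": {"speaker": "Andrej", "year": 2022}},
]
print(self_query_search("What did Andrej say in 2023 about operating systems?", docs))
# → ['the llm as an operating system']
```

The second document matches the query text well but is excluded by the metadata filter, which is the behaviour that distinguishes self-query from plain similarity search.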

Time-weighted retrieval

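This method supplements semantic similarity search with a time decay. As a result, documents that are fresher or accessed more frequently receive more weight than older ones.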
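LangChain's documentation for `TimeWeightedVectorStoreRetriever` describes the score as `semantic_similarity + (1.0 - decay_rate) ** hours_passed`, where `hours_passed` counts from the document's last access. Measuring from last access is what lets frequently used documents stay "fresh". The sketch below applies that formula. The similarity values are made up, and the `decay_rate` value is illustrative.

```python
def time_weighted_score(similarity: float, hours_since_access: float, decay_rate: float = 0.01) -> float:
    """Semantic similarity plus a recency bonus that decays exponentially with hours since last access."""
    return similarity + (1.0 - decay_rate) ** hours_since_access


# (similarity to the query, hours since last accessed); values are made up.
docs = {
    "old_but_similar": (0.80, 24 * 30),
    "fresh_and_similar": (0.75, 1),
}
ranked = sorted(docs, key=lambda d: time_weighted_score(*docs[d]), reverse=True)
print(ranked)  # → ['fresh_and_similar', 'old_but_similar']
```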

Ensemble techniques

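As the term suggests, several retrieval methods can be combined. There are many ways to implement an ensemble, and the specific use case determines how the retriever is structured.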

Example: Similarity search with LangChain

- Load the text file with TextLoader
- Split the text into chunks with RecursiveCharacterTextSplitter
- Create embeddings with all-MiniLM-L6-v2
- Store the embeddings in Chroma
- Retrieve chunks with similarity_search

```python
# Import paths follow older LangChain releases; recent versions move most of
# these integrations to the langchain_community package.
from langchain.document_loaders import TextLoader
from langchain.embeddings.sentence_transformer import SentenceTransformerEmbeddings
from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain.vectorstores import Chroma

# load the document
loader = TextLoader('../Data/AK_BusyPersonIntroLLM.txt')
documents = loader.load()

# split it into chunks of about 1000 characters with 200 characters of overlap
text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)
docs = text_splitter.split_documents(documents)

# create the open-source embedding function
embedding_function = SentenceTransformerEmbeddings(model_name="all-MiniLM-L6-v2")

# load it into Chroma
db = Chroma.from_documents(docs, embedding_function)

# query it
query = "What did Andrej say about LLM operating system?"

# distance-based search (returns the 4 closest chunks by default)
results = db.similarity_search(query)

# print the best match
print(results[0].page_content)
```

Example: Similarity search by vector

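- Load our text file with TextLoader
- Split the text into chunks with RecursiveCharacterTextSplitter
- Create embedding vectors with all-MiniLM-L6-v2
- Store the embedding vectors in Chroma
- Convert the input query into an embedding vector
- Retrieve chunks with similarity_search_by_vector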

```python
from langchain.document_loaders import TextLoader
from langchain.embeddings.sentence_transformer import SentenceTransformerEmbeddings
from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain.vectorstores import Chroma

# load the document
loader = TextLoader('../Data/AK_BusyPersonIntroLLM.txt')
documents = loader.load()

# split it into chunks
text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)
docs = text_splitter.split_documents(documents)

# create the open-source embedding function
embedding_function = SentenceTransformerEmbeddings(model_name="all-MiniLM-L6-v2")

# load it into Chroma
db = Chroma.from_documents(docs, embedding_function)

# query it
query = "What did Andrej say about LLM operating system?"

# convert the query to an embedding vector
query_vector = embedding_function.embed_query(query)

# distance-based search using the precomputed vector (not the raw query string)
results = db.similarity_search_by_vector(query_vector)

# print the best match
print(results[0].page_content)
```

Example: Maximal marginal relevance

- Load our text file with TextLoader
- Split the text into chunks with RecursiveCharacterTextSplitter
- Create embeddings with OpenAI Embeddings
- Store the embeddings in Qdrant
- Retrieve and rank the chunks with max_marginal_relevance_search

```python
import os

from langchain.document_loaders import TextLoader
from langchain.embeddings.openai import OpenAIEmbeddings
from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain.vectorstores import Qdrant

# read the OpenAI API key from the environment
openai_api_key = os.environ["OPENAI_API_KEY"]

# load the document
loader = TextLoader('../Data/AK_BusyPersonIntroLLM.txt')
documents = loader.load()

# split it into chunks
text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)
docs = text_splitter.split_documents(documents)

# create the OpenAI embedding function
embedding_function = OpenAIEmbeddings(openai_api_key=openai_api_key)

# load it into an in-memory Qdrant collection
db = Qdrant.from_documents(docs, embedding_function, location=":memory:",
                           collection_name="my_documents")

# query it
query = "What did Andrej say about LLM operating system?"

# MMR search: fetch the 10 most similar chunks, then select 2 that are relevant but diverse
results = db.max_marginal_relevance_search(query, k=2, fetch_k=10)

# print results
for i, doc in enumerate(results, start=1):
    print(f"{i}.", doc.page_content, "\n")
```

Example: Multi-query retrieval

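- Load the text file with TextLoader
- Split the text into chunks with RecursiveCharacterTextSplitter
- Create embeddings with OpenAI Embeddings
- Store the embeddings in Qdrant
- Set the LLM to ChatOpenAI (gpt-3.5)
- Set up logging to see the query variants generated by the LLM
- Use MultiQueryRetriever and its get_relevant_documents method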

```python
import logging
import os

from langchain.chat_models import ChatOpenAI
from langchain.document_loaders import TextLoader
from langchain.embeddings.openai import OpenAIEmbeddings
from langchain.retrievers.multi_query import MultiQueryRetriever
from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain.vectorstores import Qdrant

# read the OpenAI API key from the environment
openai_api_key = os.environ["OPENAI_API_KEY"]

# load the document
loader = TextLoader('../Data/AK_BusyPersonIntroLLM.txt')
documents = loader.load()

# split it into chunks
text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)
docs = text_splitter.split_documents(documents)

# create the OpenAI embedding function
embedding_function = OpenAIEmbeddings(openai_api_key=openai_api_key)

# load it into an in-memory Qdrant collection
db = Qdrant.from_documents(docs, embedding_function, location=":memory:",
                           collection_name="my_documents")

# query it
query = "What did Andrej say about LLM operating system?"

# set the LLM that generates the query variants (temperature 0 for repeatable output)
llm = ChatOpenAI(temperature=0, openai_api_key=openai_api_key)

# multi-query retrieval: each LLM-generated variant is run against the vector store
retriever_from_llm = MultiQueryRetriever.from_llm(retriever=db.as_retriever(), llm=llm)

# set up logging to see the generated queries
logging.basicConfig()
logging.getLogger("langchain.retrievers.multi_query").setLevel(logging.INFO)

# retrieve the unique union of documents across all query variants
unique_docs = retriever_from_llm.get_relevant_documents(query=query)

# print results
for i, doc in enumerate(unique_docs, start=1):
    print(f"{i}.", doc.page_content, "\n")
```

Example: Contextual compression

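- Load the text file with TextLoader
- Split the text into chunks with RecursiveCharacterTextSplitter
- Create embedding vectors with OpenAI Embeddings
- Use FAISS as the vector store behind the retriever
- Configure the LLM (large language model) as ChatOpenAI (gpt-3.5)
- Use LLMChainExtractor as the compressor
- Use ContextualCompressionRetriever and its get_relevant_documents method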

```python
import os

from langchain.chat_models import ChatOpenAI
from langchain.document_loaders import TextLoader
from langchain.embeddings.openai import OpenAIEmbeddings
from langchain.retrievers import ContextualCompressionRetriever
from langchain.retrievers.document_compressors import LLMChainExtractor
from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain.vectorstores import FAISS

# read the OpenAI API key from the environment
openai_api_key = os.environ["OPENAI_API_KEY"]

# load and split text
loader = TextLoader('../Data/AK_BusyPersonIntroLLM.txt')
documents = loader.load()
text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)
docs = text_splitter.split_documents(documents)

# save as vector embeddings in FAISS and expose the store as a retriever
retriever = FAISS.from_documents(
    docs, OpenAIEmbeddings(openai_api_key=openai_api_key)
).as_retriever()

# use an LLM-based compressor that extracts only the query-relevant parts of each chunk
llm = ChatOpenAI(temperature=0, openai_api_key=openai_api_key)
compressor = LLMChainExtractor.from_llm(llm)
compression_retriever = ContextualCompressionRetriever(
    base_compressor=compressor,
    base_retriever=retriever,
)

query = "What did Andrej say about LLM operating system?"

# retrieve docs, then compress them
compressed_docs = compression_retriever.get_relevant_documents(query)

# print the compressed docs
for i, doc in enumerate(compressed_docs, start=1):
    print(f"{i}.", doc.page_content, "\n")
```