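GoogLeNet: Transforming Deep Learning and Computer Vision

Introduction

In the fast-moving fields of deep learning and computer vision, few innovations have had as much impact as GoogLeNet. Introduced in 2014, this deep convolutional neural network architecture marked a shift in how neural networks are structured and optimized. After winning the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) that same year, GoogLeNet set a new benchmark for image classification. This article looks at GoogLeNet's innovations, its architecture, and its lasting influence on artificial intelligence.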

Where the Innovation Began: The GoogLeNet Architecture

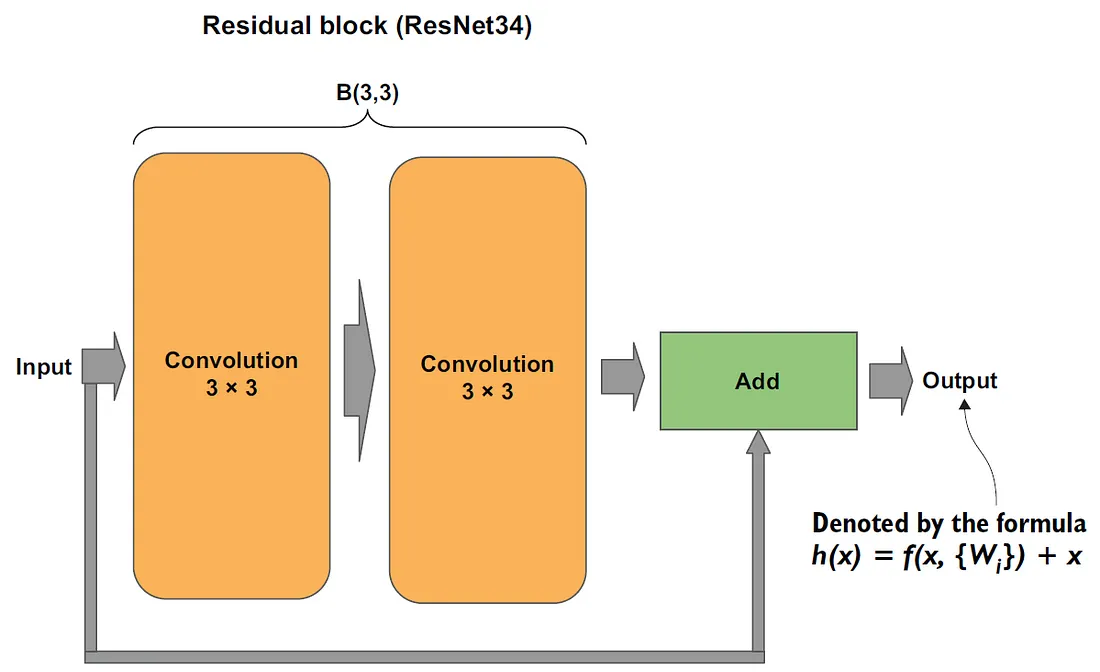

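GoogLeNet's core innovation is the Inception module, a new concept at the time. Instead of committing each layer to a single filter size, the module applies convolutions of several sizes (1x1, 3x3, 5x5) and a pooling operation in parallel and concatenates their outputs, so the network can learn during training which filter types matter most at each stage. This lets GoogLeNet learn richer features at multiple scales, something its predecessors could not do. Inception modules also substantially increase the depth and width of the network, allowing it to capture a wider range of features from the input image.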

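Another notable feature of the GoogLeNet architecture is its depth. With 22 layers, it is much deeper than earlier models such as AlexNet, which has only 8. Despite this complexity, GoogLeNet remains computationally efficient. Given the limited hardware of the time, that efficiency was essential for training deeper networks.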
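
One reason the design stays cheap is the 1x1 convolution placed in front of the larger filters, the same pattern used by the `inception_module` function in the code section of this article. The arithmetic below is a minimal sketch of that saving. The channel counts (192 in, 16 after reduction, 32 out) and the helper name `conv_params` are assumptions chosen for illustration, not figures taken from this article.

```python
def conv_params(k, c_in, c_out):
    """Weights plus biases of a k x k convolution with c_in inputs and c_out filters."""
    return k * k * c_in * c_out + c_out

# Illustrative (assumed) channel counts.
c_in, c_reduce, c_out = 192, 16, 32

# A 5x5 convolution applied directly to all input channels.
direct = conv_params(5, c_in, c_out)

# A 1x1 convolution that shrinks the channels first, followed by the 5x5.
reduced = conv_params(1, c_in, c_reduce) + conv_params(5, c_reduce, c_out)

print(f"5x5 applied directly:    {direct:,} parameters")
print(f"1x1 reduction, then 5x5: {reduced:,} parameters")
print(f"saving:                  {direct / reduced:.1f}x")
```

With these assumed sizes the reduced branch needs roughly a tenth of the parameters, and the number of multiply-accumulate operations per pixel shrinks by about the same factor.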

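Technical Breakthroughs and Features

GoogLeNet combines several technical ideas that contribute to its strong performance. 1x1 convolutions reduce the number of channels before the more expensive 3x3 and 5x5 convolutions, auxiliary classifiers attached to intermediate layers help gradients reach the early part of the network during training, and average pooling before the final classifier takes the place of large fully connected layers. The network was also trained with image distortions as a form of data augmentation, which improves robustness and helps it generalize from the training data. Two techniques often credited to GoogLeNet actually arrived with its successors: batch normalization, which speeds up convergence and was proposed as a remedy for internal covariate shift, came with the follow-up BN-Inception (Inception v2) model, and RMSprop, an optimizer that adapts the learning rate during training, was used to train Inception v3. The original GoogLeNet was trained with stochastic gradient descent with momentum.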
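
To make the idea of image distortion concrete, here is a minimal NumPy sketch of a random crop, a random horizontal flip, and a brightness shift. It is not the augmentation pipeline used to train GoogLeNet. The function name `random_distort`, the 0.8 to 1.2 brightness range, and the 256-pixel source size are assumptions made for this example.

```python
import numpy as np

def random_distort(image, crop_size, rng):
    """Return a randomly cropped, flipped, and brightness-scaled copy of `image`.

    `image` is a float array of shape (H, W, C) with values in [0, 1].
    """
    h, w, _ = image.shape
    # Pick the top-left corner of a crop_size x crop_size window.
    top = rng.integers(0, h - crop_size + 1)
    left = rng.integers(0, w - crop_size + 1)
    out = image[top:top + crop_size, left:left + crop_size, :]
    # Mirror the crop horizontally half of the time.
    if rng.random() < 0.5:
        out = out[:, ::-1, :]
    # Scale brightness by an assumed factor in [0.8, 1.2], then clip to [0, 1].
    scale = rng.uniform(0.8, 1.2)
    return np.clip(out * scale, 0.0, 1.0)

rng = np.random.default_rng(0)
image = rng.random((256, 256, 3))   # stand-in for a real photo
augmented = random_distort(image, 224, rng)
print(augmented.shape)
```

Each call yields a different 224x224 view of the same source image, so the network rarely sees exactly the same pixels twice during training.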

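Impact and Legacy

The introduction of GoogLeNet had a profound effect on deep learning. It challenged prevailing assumptions about neural network design, particularly about the trade-off between depth and efficiency. Its success spurred further research into deep neural networks and led to more advanced architectures such as Inception v3 and v4.

GoogLeNet's Inception module became a foundational concept in neural network design. It inspired a wave of research focused on optimizing network architecture, which produced more sophisticated and efficient models capable of tasks well beyond image classification, such as object detection and semantic segmentation.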

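Code

Building a complete GoogLeNet implementation in Python, together with a synthetic dataset and plots, involves several steps. We will use TensorFlow with its Keras API, a popular deep learning stack. Let's break the task into parts:

- Implement the GoogLeNet (Inception v1) architecture

- Create a synthetic dataset

- Train the model

- Plot the results

First we define the GoogLeNet architecture. Because the full network is fairly complex, I will provide a simplified version for illustration.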

    import tensorflow as tf
    from tensorflow.keras.layers import Conv2D, MaxPooling2D, Concatenate, Flatten, Dense, Input, AveragePooling2D
    from tensorflow.keras.models import Model
    import numpy as np
    import matplotlib.pyplot as plt


    def inception_module(x, filters):
        # Each branch of the module
        branch1 = Conv2D(filters[0], (1, 1), padding='same', activation='relu')(x)

        branch2 = Conv2D(filters[1], (1, 1), padding='same', activation='relu')(x)
        branch2 = Conv2D(filters[2], (3, 3), padding='same', activation='relu')(branch2)

        branch3 = Conv2D(filters[3], (1, 1), padding='same', activation='relu')(x)
        branch3 = Conv2D(filters[4], (5, 5), padding='same', activation='relu')(branch3)

        branch4 = MaxPooling2D((3, 3), strides=(1, 1), padding='same')(x)
        branch4 = Conv2D(filters[5], (1, 1), padding='same', activation='relu')(branch4)

        # Concatenate all branches along the channel axis
        x = Concatenate()([branch1, branch2, branch3, branch4])
        return x


    def googlenet():
        input_img = Input(shape=(224, 224, 3))

        # Simplified GoogLeNet architecture
        # Add Inception blocks, MaxPooling, and Fully Connected layers as required

        # Example inception module application
        x = inception_module(input_img, [64, 96, 128, 16, 32, 32])

        # Output layer
        x = Flatten()(x)
        output = Dense(10, activation='softmax')(x)  # 10 classes

        model = Model(inputs=input_img, outputs=output)
        return model


    googlenet_model = googlenet()
    googlenet_model.summary()

    # Generating synthetic data: 1000 samples with 10 classes
    x_train = np.random.random((1000, 224, 224, 3))
    y_train = np.random.randint(0, 10, 1000)

    # Convert labels to categorical
    y_train = tf.keras.utils.to_categorical(y_train, 10)

    googlenet_model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

    history = googlenet_model.fit(x_train, y_train, batch_size=32, epochs=10, validation_split=0.2)

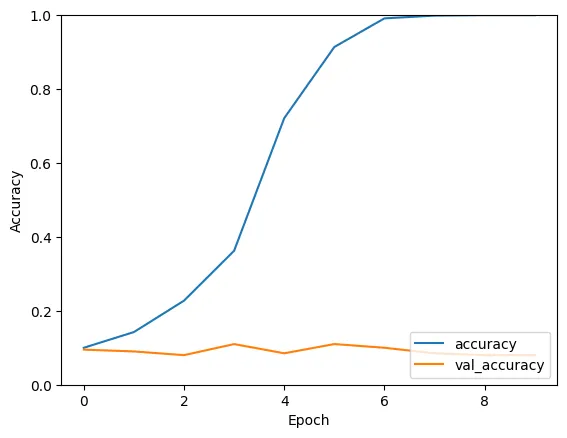

    # Plotting training and validation accuracy
    plt.plot(history.history['accuracy'], label='accuracy')
    plt.plot(history.history['val_accuracy'], label='val_accuracy')
    plt.xlabel('Epoch')
    plt.ylabel('Accuracy')
    plt.ylim([0, 1])
    plt.legend(loc='lower right')
    plt.show()

Running the script prints the model summary, followed by the training log:

    Model: "model"
    __________________________________________________________________________________________________
     Layer (type)                    Output Shape             Param #     Connected to
    ==================================================================================================
     input_1 (InputLayer)            [(None, 224, 224, 3)]    0           []
     conv2d_17 (Conv2D)              (None, 224, 224, 96)     384         ['input_1[0][0]']
     conv2d_19 (Conv2D)              (None, 224, 224, 16)     64          ['input_1[0][0]']
     max_pooling2d_7 (MaxPooling2D)  (None, 224, 224, 3)      0           ['input_1[0][0]']
     conv2d_16 (Conv2D)              (None, 224, 224, 64)     256         ['input_1[0][0]']
     conv2d_18 (Conv2D)              (None, 224, 224, 128)    110720      ['conv2d_17[0][0]']
     conv2d_20 (Conv2D)              (None, 224, 224, 32)     12832       ['conv2d_19[0][0]']
     conv2d_21 (Conv2D)              (None, 224, 224, 32)     128         ['max_pooling2d_7[0][0]']
     concatenate (Concatenate)       (None, 224, 224, 256)    0           ['conv2d_16[0][0]',
                                                                           'conv2d_18[0][0]',
                                                                           'conv2d_20[0][0]',
                                                                           'conv2d_21[0][0]']
     flatten_3 (Flatten)             (None, 12845056)         0           ['concatenate[0][0]']
     dense_7 (Dense)                 (None, 10)               128450570   ['flatten_3[0][0]']
    ==================================================================================================
    Total params: 128574954 (490.47 MB)
    Trainable params: 128574954 (490.47 MB)
    Non-trainable params: 0 (0.00 Byte)
    __________________________________________________________________________________________________

    Epoch 1/10
    25/25 [==============================] - 897s 35s/step - loss: 722.5287 - accuracy: 0.1000 - val_loss: 347.2373 - val_accuracy: 0.0950
    Epoch 2/10
    25/25 [==============================] - 863s 35s/step - loss: 222.1337 - accuracy: 0.1425 - val_loss: 106.1518 - val_accuracy: 0.0900
    Epoch 3/10
    25/25 [==============================] - 856s 34s/step - loss: 90.9722 - accuracy: 0.2275 - val_loss: 99.2874 - val_accuracy: 0.0800
    Epoch 4/10
    25/25 [==============================] - 853s 34s/step - loss: 39.8998 - accuracy: 0.3625 - val_loss: 69.6106 - val_accuracy: 0.1100
    Epoch 5/10
    25/25 [==============================] - 852s 34s/step - loss: 8.4958 - accuracy: 0.7212 - val_loss: 34.6203 - val_accuracy: 0.0850
    Epoch 6/10
    25/25 [==============================] - 859s 34s/step - loss: 1.0001 - accuracy: 0.9137 - val_loss: 24.5636 - val_accuracy: 0.1100
    Epoch 7/10
    25/25 [==============================] - 848s 34s/step - loss: 0.0874 - accuracy: 0.9912 - val_loss: 10.0715 - val_accuracy: 0.1000
    Epoch 8/10
    25/25 [==============================] - 857s 34s/step - loss: 0.0031 - accuracy: 0.9987 - val_loss: 12.0442 - val_accuracy: 0.0850
    Epoch 9/10
    25/25 [==============================] - 847s 34s/step - loss: 8.2126e-05 - accuracy: 1.0000 - val_loss: 10.0731 - val_accuracy: 0.0800
    Epoch 10/10
    25/25 [==============================] - 847s 34s/step - loss: 3.4480e-07 - accuracy: 1.0000 - val_loss: 10.0821 - val_accuracy: 0.0800

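This code gives you a basic framework. Note that the real GoogLeNet architecture is more complex and includes multiple Inception modules and other layers; this example has been heavily simplified for illustration. Also keep in mind that training on synthetic data does not produce meaningful learning. The log above shows this: training accuracy climbs to 100% while validation accuracy stays near 10%, the chance level for 10 classes, so the model is only memorizing random labels. For real-world applications, use a proper dataset such as ImageNet.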
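
The model summary printed earlier also shows where the simplification costs the most: almost all of the 128,574,954 parameters sit in the final Dense layer, because `Flatten` hands it every one of the 224 x 224 x 256 activations. The arithmetic below is a small sketch of how the classifier would shrink if the feature map were first averaged over its spatial dimensions, for example with Keras's `GlobalAveragePooling2D` layer. The original GoogLeNet takes a similar approach by average-pooling before its classifier. The helper name `dense_params` is an assumption for this example.

```python
def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out

# Shape of the concatenated Inception output in the summary, and the class count.
height, width, channels, classes = 224, 224, 256, 10

# Flatten keeps every spatial position as a separate input feature.
flatten_head = dense_params(height * width * channels, classes)

# Global average pooling keeps one value per channel.
gap_head = dense_params(channels, classes)

print(f"Dense after Flatten:                {flatten_head:,} parameters")
print(f"Dense after global average pooling: {gap_head:,} parameters")
```

The first figure matches the `dense_7` row of the summary. Swapping the head this way is only one possible change; it would also alter the training behavior shown in the log, so treat it as a direction to explore rather than a drop-in fix.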

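Conclusion

GoogLeNet is a milestone in the history of deep learning and computer vision. Its architecture and the Inception module concept reshaped neural network design and paved the way for more advanced and efficient models. GoogLeNet's legacy goes beyond its technical achievements: it reflects the rapid progress of artificial intelligence and how much room the field still has to grow. As the field continues to develop, the principles GoogLeNet introduced are likely to keep shaping future generations of neural networks.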