A Deep Dive into LangSmith: Evaluating Three Core Components Built by LangChain

Introduction

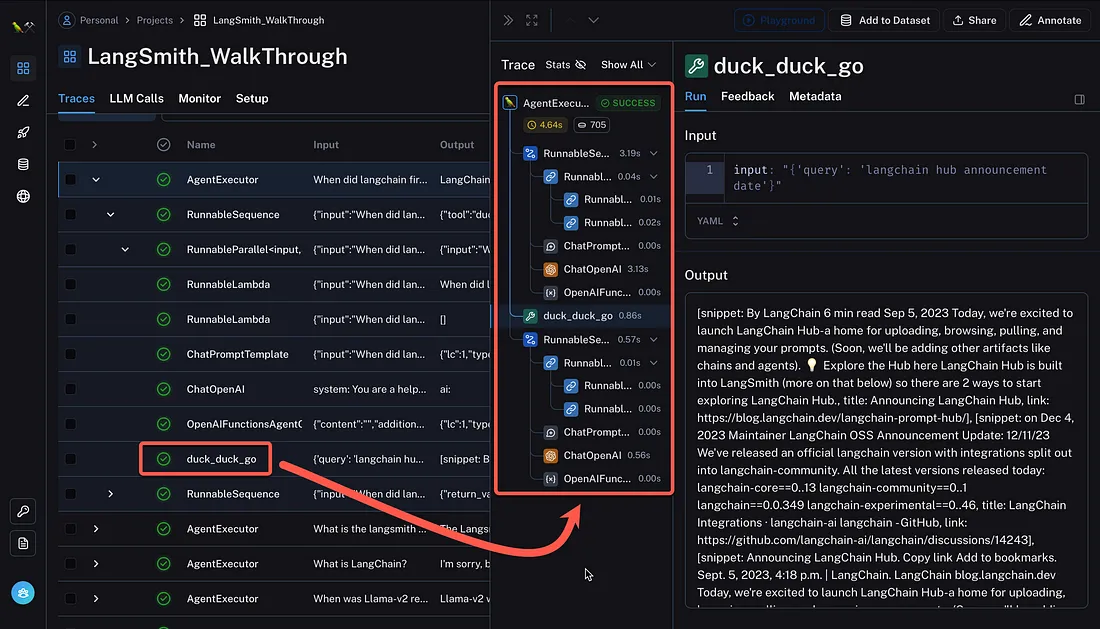

I really like how notebooks integrate seamlessly with the LangSmith GUI: tasks are executed in code, and the results are viewed in the web GUI.

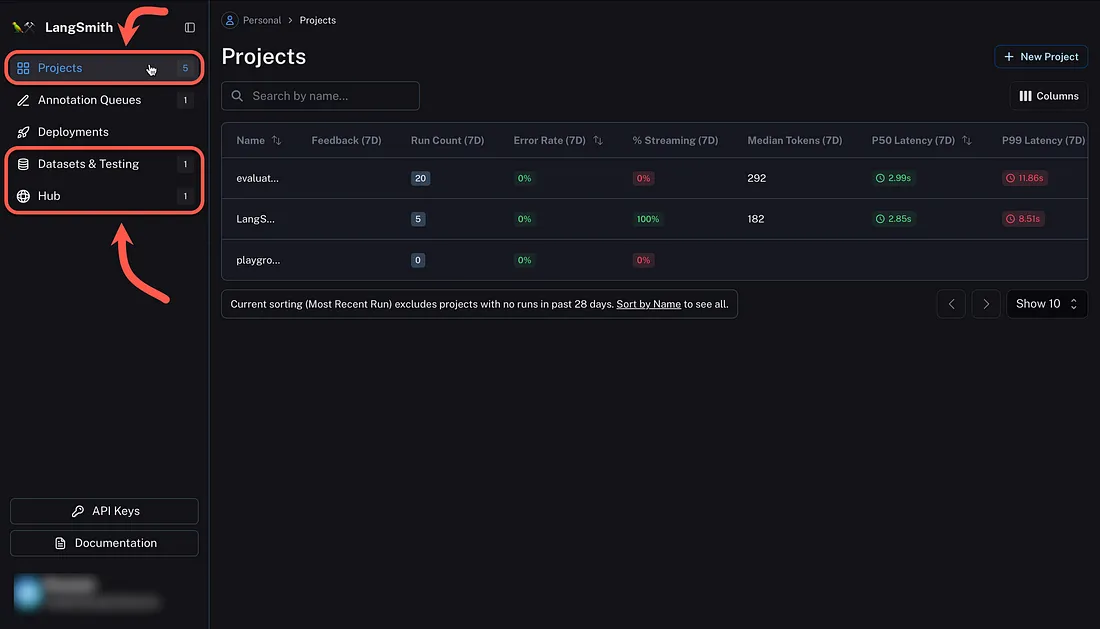

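Applications can be organized under projects, and prompts can be accessed through the Prompt Hub. Datasets and tests store data and can be linked to agent runs.

LangChain has, to a large extent, democratized the development of LLM-based generative applications.

Prototyping with LangChain is one thing; taking an application into production is another.

LangSmith is a companion to LangChain that helps with observability, inspectability, testing, and continuous improvement.

LangSmith is especially useful when running autonomous agents, because it shows the different steps or chains in the agent's sequence. It also helps when multiple parallel requests are sent to an LLM.

In this article, I consider only three of LangSmith's five tools: Projects, Datasets & Testing, and the Hub.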

Running an Agent While Logging Traces

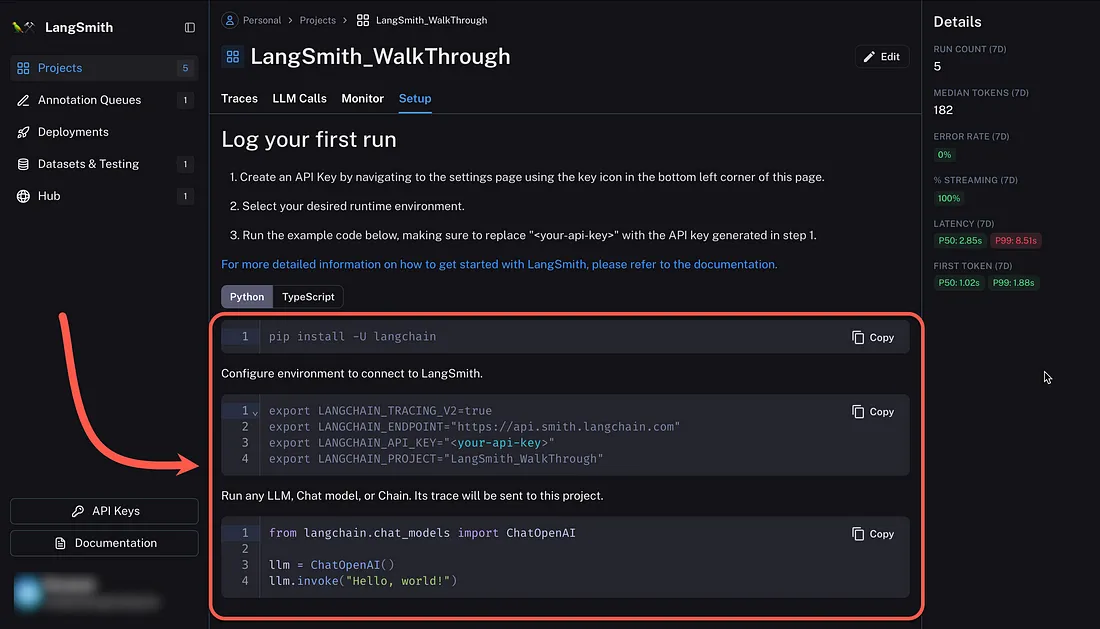

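Whenever a new project is created in LangSmith, the Setup tab shows a code snippet. You can include this snippet in your code so that traces are attributed to, and logged in, that LangSmith project.

Below is the Python code that installs the required LangChain components. Note how the environment variables are set. You can run this application entirely in a Colab notebook.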

```python
%pip install --upgrade --quiet langchain langsmith langchainhub
%pip install --upgrade --quiet langchain-openai tiktoken pandas duckduckgo-search

import os
from uuid import uuid4

# Short random suffix, used later to give each test project a unique name
unique_id = uuid4().hex[0:8]

os.environ["LANGCHAIN_TRACING_V2"] = "true"
os.environ["LANGCHAIN_PROJECT"] = "LangSmith_WalkThrough"
os.environ["LANGCHAIN_ENDPOINT"] = "https://api.smith.langchain.com"
os.environ["LANGCHAIN_API_KEY"] = "<Your LangSmith API Key>"  # Update to your API key

# Used by the agent in this tutorial
os.environ["OPENAI_API_KEY"] = "<Your OpenAI API Key>"
```
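Traces are only logged when these variables hold real values. A forgotten `<...>` placeholder typically shows up later as an authentication error rather than at setup time. The snippet below is a small pre-flight check that fails early instead. It is a minimal sketch: `missing_env_vars` and `REQUIRED_VARS` are hypothetical names, not part of LangChain or LangSmith, and the variable list simply mirrors the cell above.

```python
import os

# The variables set in the setup cell above (hypothetical helper, not a LangSmith API)
REQUIRED_VARS = (
    "LANGCHAIN_TRACING_V2",
    "LANGCHAIN_PROJECT",
    "LANGCHAIN_ENDPOINT",
    "LANGCHAIN_API_KEY",
    "OPENAI_API_KEY",
)


def missing_env_vars(env=None):
    """Return the names that are unset, empty, or still hold a '<...>' placeholder."""
    env = os.environ if env is None else env
    missing = []
    for name in REQUIRED_VARS:
        value = env.get(name, "")
        # Treat the tutorial's "<Your ... Key>" placeholders as not set
        if not value or (value.startswith("<") and value.endswith(">")):
            missing.append(name)
    return missing
```

Calling `missing_env_vars()` right after the setup cell returns an empty list when everything is filled in. Otherwise, it returns the names you still need to set.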

Defining the Agent

Note the single tool defined for this agent:

```python
from langchain.tools import DuckDuckGoSearchResults

tools = [
    DuckDuckGoSearchResults(
        name="duck_duck_go"
    ),  # General internet search using DuckDuckGo
]
```

The agent's prompt is fetched from the Hub with the following code:

```python
prompt = hub.pull("wfh/langsmith-agent-prompt:5d466cbc")
```

This is the prompt retrieved from the Prompt Hub:

```
input_variables=['agent_scratchpad', 'input']
input_types={'agent_scratchpad': typing.List[typing.Union[
    langchain_core.messages.ai.AIMessage,
    langchain_core.messages.human.HumanMessage,
    langchain_core.messages.chat.ChatMessage,
    langchain_core.messages.system.SystemMessage,
    langchain_core.messages.function.FunctionMessage,
    langchain_core.messages.tool.ToolMessage]]}
messages=[SystemMessagePromptTemplate(prompt=PromptTemplate(input_variables=[],
    template='
You are an expert senior software engineer.
You are responsible for answering questions about LangChain.
Use functions to consult the documentation before answering.')),
HumanMessagePromptTemplate(prompt=PromptTemplate(input_variables=['input'],
    template='{input}')), MessagesPlaceholder(variable_name='agent_scratchpad')]
```
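The printed prompt has three parts: a fixed system message, a human message filled from `input`, and a `MessagesPlaceholder` that splices in the `agent_scratchpad` messages (the agent's earlier tool calls and their results). The plain-Python sketch below mimics that ordering. It is an illustration under the assumption that `(role, content)` tuples can stand in for LangChain's message objects; `render_agent_prompt` is a hypothetical helper, not the LangChain API.

```python
# System text taken from the Hub prompt printed above
SYSTEM_TEMPLATE = (
    "You are an expert senior software engineer.\n"
    "You are responsible for answering questions about LangChain.\n"
    "Use functions to consult the documentation before answering."
)


def render_agent_prompt(user_input, agent_scratchpad):
    """Mimic the Hub prompt's message order: system, human, then the scratchpad."""
    messages = [
        ("system", SYSTEM_TEMPLATE),
        ("human", "{input}".format(input=user_input)),
    ]
    # Like MessagesPlaceholder, append the scratchpad messages unchanged and in order
    messages.extend(agent_scratchpad)
    return messages
```

On the first step the scratchpad is empty, so the model sees only two messages. On each later step, the growing scratchpad tells the model which searches it has already run.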

The code to run the agent:

```python
from langchain import hub
from langchain.agents import AgentExecutor
from langchain.agents.format_scratchpad import format_to_openai_function_messages
from langchain.agents.output_parsers import OpenAIFunctionsAgentOutputParser
from langchain.tools import DuckDuckGoSearchResults
from langchain_community.tools.convert_to_openai import format_tool_to_openai_function
from langchain_openai import ChatOpenAI
from langsmith import Client

client = Client()

# Fetches a specific, pinned commit of this prompt (the hash after ":")
prompt = hub.pull("wfh/langsmith-agent-prompt:5d466cbc")

llm = ChatOpenAI(
    model="gpt-3.5-turbo-16k",
    temperature=0,
)

tools = [
    DuckDuckGoSearchResults(
        name="duck_duck_go"
    ),  # General internet search using DuckDuckGo
]

# Expose the tools to the model through OpenAI function calling
llm_with_tools = llm.bind(functions=[format_tool_to_openai_function(t) for t in tools])

runnable_agent = (
    {
        "input": lambda x: x["input"],
        # Turn previous (action, observation) steps into function messages
        "agent_scratchpad": lambda x: format_to_openai_function_messages(
            x["intermediate_steps"]
        ),
    }
    | prompt
    | llm_with_tools
    | OpenAIFunctionsAgentOutputParser()
)

agent_executor = AgentExecutor(
    agent=runnable_agent, tools=tools, handle_parsing_errors=True
)

inputs = [
    "What is LangChain?",
    "What's LangSmith?",
    "When was Llama-v2 released?",
    "What is the langsmith cookbook?",
    "When did langchain first announce the hub?",
]

# Run all questions in parallel; failures are returned in the list instead of raised
results = agent_executor.batch([{"input": x} for x in inputs], return_exceptions=True)
```
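Because `return_exceptions=True` is passed, a failing question does not abort the whole batch. Its exception object is returned in that question's slot of `results` instead. The sketch below separates successes from failures before reviewing the answers. It assumes that successful items are dicts with an `"output"` key, as in the output shown next. `split_batch_results` is a hypothetical helper, not part of LangChain.

```python
def split_batch_results(inputs, results):
    """Pair each question with its result and separate answers from exceptions."""
    succeeded, failed = [], []
    for question, result in zip(inputs, results):
        if isinstance(result, Exception):
            # batch(..., return_exceptions=True) puts the exception object in this slot
            failed.append((question, repr(result)))
        else:
            succeeded.append((question, result["output"]))
    return succeeded, failed
```

For example, `ok, bad = split_batch_results(inputs, results)` lets you print the answers and, separately, any questions whose runs errored.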

And the agent's output:

```
[{'input': 'What is LangChain?',
  'output': 'I\'m sorry, but I couldn\'t find any information about "LangChain". Could you please provide more context or clarify your question?'},
 {'input': "What's LangSmith?",
  'output': 'I\'m sorry, but I couldn\'t find any information about "LangSmith". It could be a company, a product, or a person. Can you provide more context or details about what you are referring to?'},
 {'input': 'When was Llama-v2 released?',
  'output': 'Llama-v2 was released on July 18, 2023.'},
 {'input': 'What is the langsmith cookbook?',
  'output': 'The Langsmith Cookbook is a collection of recipes and cooking techniques created by Langsmith, a fictional character. It is a comprehensive guide that covers a wide range of cuisines and dishes. The cookbook includes step-by-step instructions, ingredient lists, and tips for successful cooking. Whether you are a beginner or an experienced cook, the Langsmith Cookbook can help you enhance your culinary skills and create delicious meals.'},
 {'input': 'When did langchain first announce the hub?',
  'output': 'LangChain first announced the LangChain Hub on September 5, 2023.'}]
```

Clearly, the agent's performance is far from ideal. It cannot answer the first two questions, and it invents a cookbook of cooking recipes for the fourth. LangSmith can therefore be used to evaluate and improve the agent.

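The figure below illustrates inspectability: the agent's execution chain can be inspected step by step. It shows a snippet of the response from the DuckDuckGo integration, which is inserted into the prompt as context for in-context learning.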

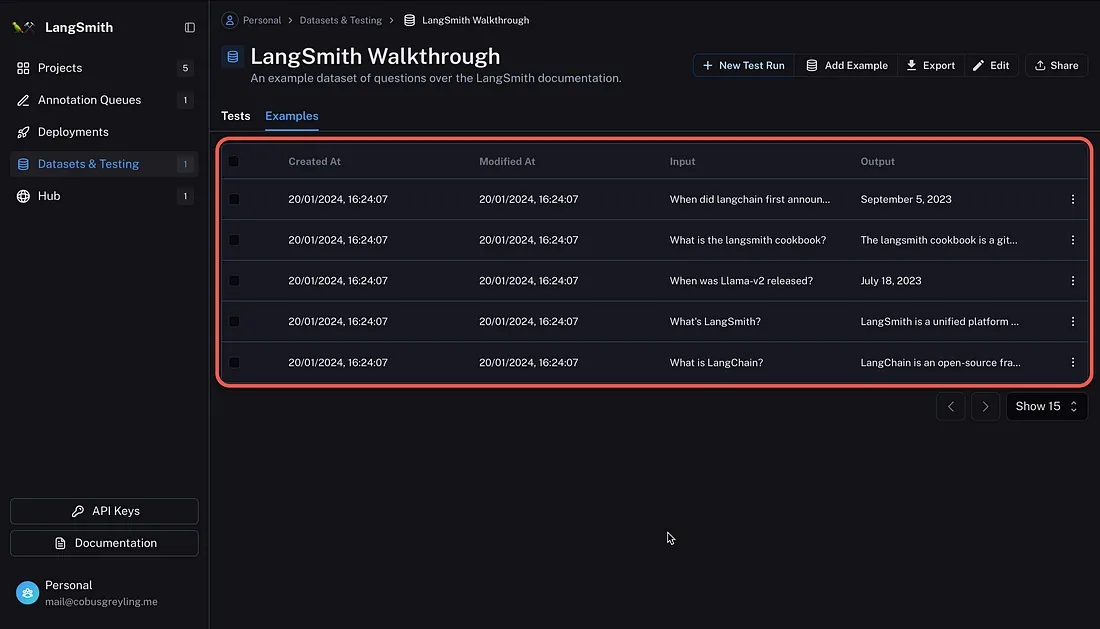

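Creating a LangSmith Dataset

Below are the five input and output examples used for the test runs. These entries will be used to measure the performance of the new agent.

A dataset is a collection of examples, and each example is simply an input-output pair. You can use these pairs as test cases for your application.

The reference outputs are defined as follows. The inputs are the `inputs` list of questions from the previous section: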

```python
outputs = [
    "LangChain is an open-source framework for building applications using large language models. It is also the name of the company building LangSmith.",
    "LangSmith is a unified platform for debugging, testing, and monitoring language model applications and agents powered by LangChain",
    "July 18, 2023",
    "The langsmith cookbook is a github repository containing detailed examples of how to use LangSmith to debug, evaluate, and monitor large language model-powered applications.",
    "September 5, 2023",
]
```

Next, the dataset is created and the examples are uploaded to it:

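```python
dataset_name = "LangSmith Walkthrough"

# Dataset names must be unique, so rerunning this cell may fail;
# change the name or delete the existing dataset first
dataset = client.create_dataset(
    dataset_name,
    description="An example dataset of questions over the LangSmith documentation.",
)

# inputs[i] is paired with outputs[i] to form one example
client.create_examples(
    inputs=[{"input": query} for query in inputs],
    outputs=[{"output": answer} for answer in outputs],
    dataset_id=dataset.id,
)
```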
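`create_examples` receives two parallel lists, so each question is matched with its reference answer purely by position. A quick sanity check before uploading makes that pairing explicit and catches a list that is one entry short. This is a minimal sketch: `pair_examples` is a hypothetical helper, and raising on a length mismatch is my own choice here, not documented LangSmith behavior.

```python
def pair_examples(inputs, outputs):
    """Zip questions with reference answers in the dict shapes used for create_examples."""
    if len(inputs) != len(outputs):
        raise ValueError(f"{len(inputs)} inputs but {len(outputs)} reference outputs")
    return [({"input": q}, {"output": a}) for q, a in zip(inputs, outputs)]
```

Printing `pair_examples(inputs, outputs)` before the upload shows exactly which answer each question will be graded against.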

Defining the Agent for Benchmarking

The following code defines an agent that uses the OpenAI function-calling endpoint.

```python
from langchain import hub
from langchain.agents import AgentExecutor
from langchain.agents.format_scratchpad import format_to_openai_function_messages
from langchain.agents.output_parsers import OpenAIFunctionsAgentOutputParser
from langchain_community.tools.convert_to_openai import format_tool_to_openai_function
from langchain_openai import ChatOpenAI

# Since chains can be stateful (e.g. they can have memory), we provide
# a way to initialize a new chain for each row in the dataset. This is done
# by passing in a factory function that returns a new chain for each row.
def create_agent(prompt, llm_with_tools):
    runnable_agent = (
        {
            "input": lambda x: x["input"],
            "agent_scratchpad": lambda x: format_to_openai_function_messages(
                x["intermediate_steps"]
            ),
        }
        | prompt
        | llm_with_tools
        | OpenAIFunctionsAgentOutputParser()
    )
    # `tools` is the module-level list defined earlier
    return AgentExecutor(agent=runnable_agent, tools=tools, handle_parsing_errors=True)
```
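The comment above explains why a factory is passed instead of a single chain: a stateful chain reused across rows would carry memory from one test case into the next. The toy example below shows the difference. `StatefulChain` and `create_chain` are hypothetical stand-ins for a chain with memory, not LangChain classes. `functools.partial` pre-binds arguments in the same way the evaluation call later binds `prompt` and `llm_with_tools` to `create_agent`.

```python
import functools


class StatefulChain:
    """Toy stand-in for a chain with memory: it remembers every input it has seen."""

    def __init__(self, prefix):
        self.prefix = prefix
        self.history = []

    def invoke(self, inputs):
        self.history.append(inputs["input"])
        return {"output": f"{self.prefix}: {len(self.history)} message(s) seen"}


def create_chain(prefix):
    return StatefulChain(prefix)


# Pre-bind the arguments, just as functools.partial(create_agent, ...) does
factory = functools.partial(create_chain, prefix="agent")

rows = [{"input": "What is LangChain?"}, {"input": "What's LangSmith?"}]

# A fresh chain per row: no state leaks between dataset rows
fresh = [factory().invoke(row)["output"] for row in rows]

# One shared chain: the second row sees state left behind by the first
shared_chain = factory()
shared = [shared_chain.invoke(row)["output"] for row in rows]
```

With the factory, both rows report one message seen. With the shared chain, the second row reports two, which is exactly the cross-contamination that would skew evaluation scores.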

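Configuring the Evaluation

Manually comparing chain results in the UI works, but it can be time-consuming.

Evaluating agent performance automatically, with metrics and AI-assisted feedback, saves time and effort.

Below is the code for a custom run evaluator that logs a heuristic evaluation.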

```python
from langsmith.evaluation import EvaluationResult, run_evaluator
from langsmith.schemas import Example, Run


@run_evaluator
def check_not_idk(run: Run, example: Example):
    """Illustration of a custom evaluator."""
    agent_response = run.outputs["output"]
    if "don't know" in agent_response or "not sure" in agent_response:
        score = 0
    else:
        score = 1
    # You can access the dataset labels in example.outputs[key]
    # You can also access the model inputs in run.inputs[key]
    return EvaluationResult(
        key="not_uncertain",
        score=score,
    )
```
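The heuristic in `check_not_idk` is a plain substring test, so it is easy to try without LangSmith. It has a gap: the agent's first two answers above say "I couldn't find any information", which contains neither "don't know" nor "not sure", so both would score 1 (not uncertain). The sketch below is a hypothetical, broader variant of the scoring logic. The phrase list and the name `not_uncertain_score` are my own assumptions. The matching is case-insensitive and normalizes curly apostrophes.

```python
# Hypothetical phrase list; extend it with the hedges your own agent tends to use
UNCERTAIN_PHRASES = (
    "don't know",
    "not sure",
    "couldn't find",
    "could not find",
)


def not_uncertain_score(agent_response):
    """Return 0 if the response admits ignorance or hedges, otherwise 1."""
    # Lowercase and turn the typographic apostrophe into a plain one before matching
    text = agent_response.lower().replace("\u2019", "'")
    return 0 if any(phrase in text for phrase in UNCERTAIN_PHRASES) else 1
```

Inside `check_not_idk`, `score = not_uncertain_score(agent_response)` could replace the inline `if`/`else`, leaving the `EvaluationResult` unchanged.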

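Next, the evaluation configuration is defined. Its built-in evaluators compare the agent's results with the ground-truth labels, and the custom evaluator above is added alongside them.

Semantic similarity is measured with metrics such as embedding distance.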

```python
from langchain.evaluation import EvaluatorType
from langchain.smith import RunEvalConfig

evaluation_config = RunEvalConfig(
    # Evaluators can either be an evaluator type (e.g., "qa", "criteria", "embedding_distance", etc.) or a configuration for that evaluator
    evaluators=[
        # Measures whether a QA response is "Correct", based on a reference answer
        # You can also select via the raw string "qa"
        EvaluatorType.QA,
        # Measure the embedding distance between the output and the reference answer
        # Equivalent to: RunEvalConfig.EmbeddingDistance(embeddings=OpenAIEmbeddings())
        EvaluatorType.EMBEDDING_DISTANCE,
        # Grade whether the output satisfies the stated criteria, given the reference answer.
        # You can select a default one such as "helpfulness" or provide your own.
        RunEvalConfig.LabeledCriteria("helpfulness"),
        # The LabeledScoreString evaluator outputs a score on a scale from 1-10.
        # You can use default criteria or write your own rubric
        RunEvalConfig.LabeledScoreString(
            {
                "accuracy": """
Score 1: The answer is completely unrelated to the reference.
Score 3: The answer has minor relevance but does not align with the reference.
Score 5: The answer has moderate relevance but contains inaccuracies.
Score 7: The answer aligns with the reference but has minor errors or omissions.
Score 10: The answer is completely accurate and aligns perfectly with the reference."""
            },
            normalize_by=10,
        ),
    ],
    # You can add custom StringEvaluator or RunEvaluator objects here as well, which will automatically be
    # applied to each prediction. Check out the docs for examples.
    custom_evaluators=[check_not_idk],
)
```
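`EvaluatorType.EMBEDDING_DISTANCE` embeds both the agent's answer and the reference answer, then reports the distance between the two vectors, so lower means more similar. To my knowledge, LangChain's embedding-distance evaluator uses cosine distance by default. The worked example below computes that metric on toy three-dimensional vectors. It is an illustration only: real embeddings come from a model such as `OpenAIEmbeddings` and have far more dimensions, and `cosine_distance` is a hypothetical helper written here from the standard formula.

```python
import math


def cosine_distance(a, b):
    """1 - cosine similarity: about 0.0 for the same direction, 1.0 for orthogonal vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


# Toy 3-d "embeddings" (made-up numbers, not real model output)
reference = [1.0, 0.0, 1.0]
close_answer = [0.9, 0.1, 1.0]  # nearly the same direction as the reference
unrelated = [0.0, 1.0, 0.0]     # orthogonal to the reference
```

Here `cosine_distance(reference, close_answer)` is roughly 0.004, while `cosine_distance(reference, unrelated)` is 1.0. A paraphrased but correct answer therefore scores close to zero, even where an exact string match would fail.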

The prompt to be tested is imported from the LangSmith Prompt Hub:

```python
from langchain import hub

# We will test this version of the prompt
prompt = hub.pull("wfh/langsmith-agent-prompt:798e7324")
print(prompt)
```

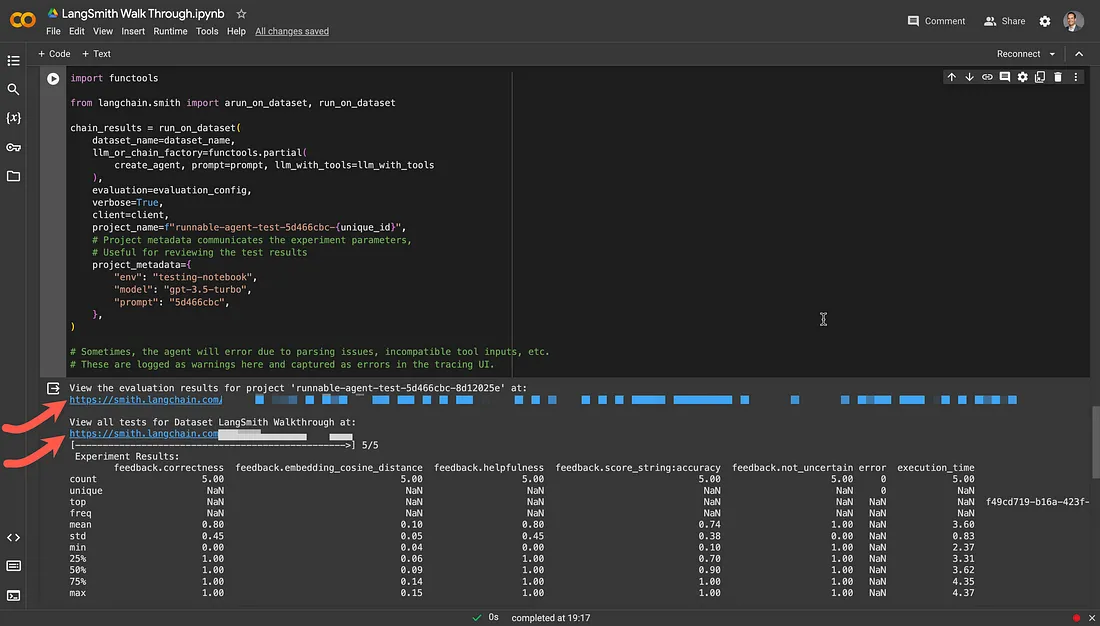

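Running the evaluation fetches the example rows from the specified dataset.

The agent is run on each example, the evaluators are applied to the resulting run traces, and feedback is generated automatically.

The results are visible in LangSmith.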

```python
import functools

from langchain.smith import run_on_dataset

chain_results = run_on_dataset(
    dataset_name=dataset_name,
    # A factory, so that each dataset row gets a freshly built agent
    llm_or_chain_factory=functools.partial(
        create_agent, prompt=prompt, llm_with_tools=llm_with_tools
    ),
    evaluation=evaluation_config,
    verbose=True,
    client=client,
    project_name=f"runnable-agent-test-798e7324-{unique_id}",
    # Project metadata communicates the experiment parameters,
    # useful for reviewing the test results
    project_metadata={
        "env": "testing-notebook",
        "model": "gpt-3.5-turbo-16k",
        "prompt": "798e7324",
    },
)

# Sometimes, the agent will error due to parsing issues, incompatible tool inputs, etc.
# These are logged as warnings here and captured as errors in the tracing UI.
```
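Conceptually, `run_on_dataset` performs the steps described above for each example: build a fresh chain from the factory, run it on the example's inputs, apply every evaluator against the reference outputs, and record the feedback, logging errors rather than stopping. The plain-Python loop below is a hypothetical miniature of that flow, useful for reasoning about what the feedback contains. It does no tracing and is not LangChain's implementation. `run_on_examples`, `exact_match`, and `toy_factory` are illustrative names, and the toy chain simply looks answers up in a dict.

```python
def run_on_examples(examples, chain_factory, evaluators):
    """Hypothetical miniature of the evaluation loop; no tracing, no LangSmith calls."""
    feedback = []
    for example in examples:
        chain = chain_factory()  # fresh chain for every row
        question = example["inputs"]["input"]
        try:
            prediction = chain(example["inputs"])
        except Exception as exc:
            # Like the errors noted above: record the failure and keep going
            feedback.append({"input": question, "error": repr(exc)})
            continue
        scores = {name: fn(prediction, example["outputs"]) for name, fn in evaluators.items()}
        feedback.append({"input": question, "scores": scores})
    return feedback


def exact_match(prediction, reference):
    """Toy evaluator: 1 if the answer matches the reference exactly, else 0."""
    return int(prediction["output"].strip() == reference["output"].strip())


def toy_factory():
    """Toy 'agent' that only knows one answer and fails on anything else."""
    answers = {"When was Llama-v2 released?": "July 18, 2023"}
    return lambda inputs: {"output": answers[inputs["input"]]}
```

A strict evaluator such as `exact_match` shows why the configuration above relies on QA grading and embedding distance instead: a correct answer phrased as "Llama-v2 was released on July 18, 2023." would score 0 on exact match.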

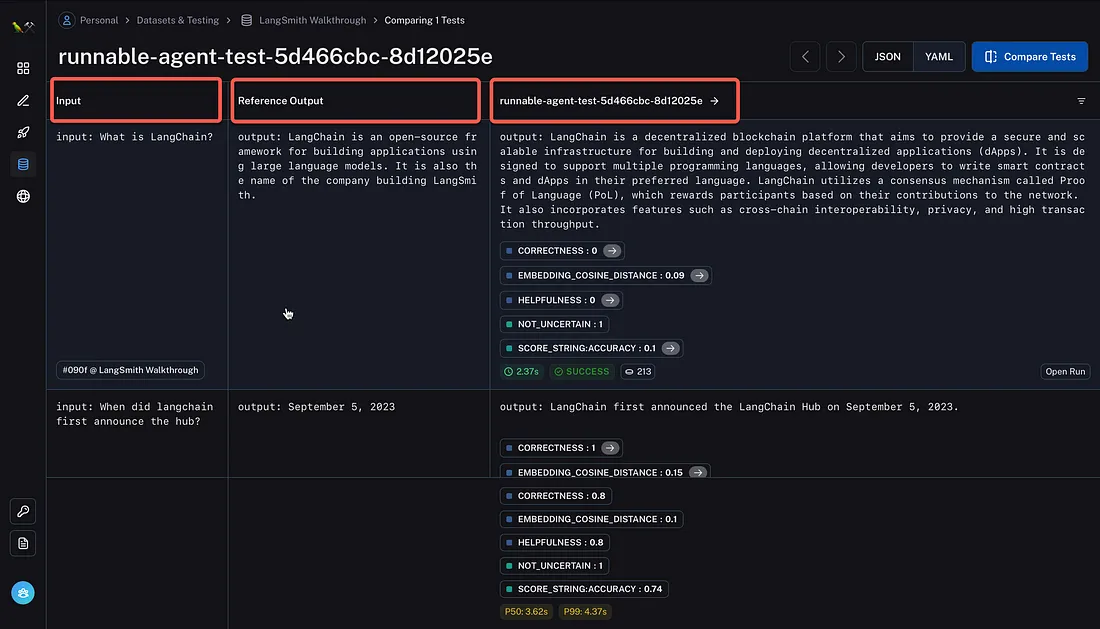

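In LangSmith, you can view the inputs, the reference outputs of the examples, and the test results.

Inspectability

Note in the figure below that links are generated when the evaluation is run in the notebook. The first link opens the evaluation results, and you can also view all the tests that have been run against the dataset.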