A Comparative Analysis of Common Optimization Algorithms in Machine Learning

Published March 1, 2024 by alex


Introduction

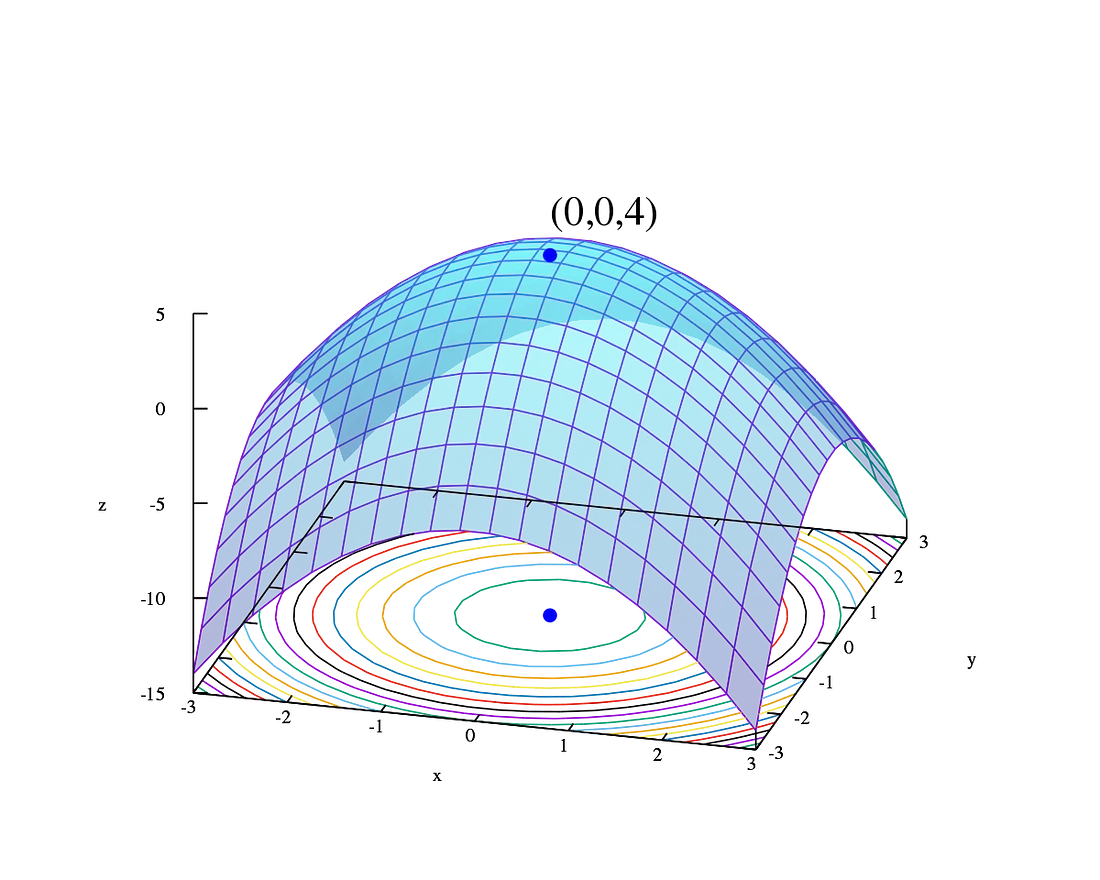

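Optimization algorithms are central to machine learning and deep learning: they minimize the loss function, which in turn improves the model's predictions. Each algorithm takes its own approach to finding a minimum on a complex loss surface. This article surveys some of the most common ones, namely Adadelta, Adagrad, Adam, AdamW, SparseAdam, Adamax, ASGD, LBFGS, NAdam, RAdam, RMSprop, Rprop, and SGD, and covers their mechanisms, strengths, and typical applications.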

Background

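PyTorch's `torch.optim` package, which the code later in this article relies on, already supports most commonly used methods, and its interface is general enough that more sophisticated ones can be integrated easily in the future.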
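To make the "general interface" point concrete, here is a minimal sketch, assuming the interface in question is PyTorch's `torch.optim`. The helper `fit`, the toy data, and the learning rates are hypothetical choices made for illustration; only the `zero_grad()` / `backward()` / `step()` pattern is the part that carries over between optimizers.

```python
import torch
import torch.nn as nn
import torch.optim as optim

torch.manual_seed(0)

# Toy data (illustrative only): y = 3x plus a little noise.
x = torch.linspace(0, 1, 64).unsqueeze(1)
y = 3.0 * x + 0.05 * torch.randn_like(x)


def fit(optimizer_cls, steps=200, **optimizer_kwargs):
    """Train a tiny linear model with any torch.optim optimizer class."""
    model = nn.Linear(1, 1)
    optimizer = optimizer_cls(model.parameters(), **optimizer_kwargs)
    criterion = nn.MSELoss()
    losses = []
    for _ in range(steps):
        optimizer.zero_grad()          # clear gradients from the previous step
        loss = criterion(model(x), y)  # forward pass
        loss.backward()                # compute gradients
        optimizer.step()               # apply the optimizer's update rule
        losses.append(loss.item())
    return losses


# The training loop is identical; only the optimizer class changes.
sgd_losses = fit(optim.SGD, lr=0.1)
adam_losses = fit(optim.Adam, lr=0.1)
print(f"SGD:  {sgd_losses[0]:.4f} -> {sgd_losses[-1]:.4f}")
print(f"Adam: {adam_losses[0]:.4f} -> {adam_losses[-1]:.4f}")
```

Because every optimizer is constructed from an iterable of parameters and driven through the same three calls, swapping one for another is usually a one-line change.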

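- Stochastic Gradient Descent (SGD): SGD is one of the most basic yet effective optimization algorithms. It updates the model parameters in the direction opposite to the gradient of the objective function with respect to those parameters. The learning rate sets the size of each step toward the minimum. SGD is simple and efficient on large datasets, but it can converge slowly and may oscillate around the minimum.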

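- Momentum and Nesterov Accelerated Gradient (NAG): Momentum and NAG were introduced to overcome the oscillation and slow convergence of SGD. Both build on the idea of momentum: a fraction of the previous update vector is added to the current update. NAG additionally evaluates the gradient at the look-ahead position that the momentum step would reach. This accelerates SGD along the relevant directions and dampens oscillations, making it faster and more stable than plain SGD.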

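- Adagrad: Adagrad adapts the learning rate per parameter, which removes the limitation of a single global learning rate shared by all parameters. It makes smaller updates to parameters associated with frequently occurring features and larger updates to parameters associated with infrequent features. This adaptive learning rate makes Adagrad particularly well suited to sparse data.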

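- Adadelta: Adadelta is an extension of Adagrad that aims to reduce its aggressive, monotonically decreasing learning rate. Instead of accumulating all past squared gradients, Adadelta restricts the window of accumulated past gradients to a fixed size, which makes it more robust to changes in the learning dynamics.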

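- RMSprop: RMSprop modifies the way Adagrad accumulates past gradients by introducing a decay factor that gives more weight to recent gradients. This makes it better suited to online and non-stationary problems. It is similar to Adadelta but implemented differently.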

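- Adam (Adaptive Moment Estimation): Adam combines the advantages of Adagrad and RMSprop, adjusting the learning rate of each parameter based on estimates of the first and second moments of the gradients. It is widely adopted because it works well in practice, especially in deep learning applications.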

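- AdamW: AdamW is a variant of Adam that decouples weight decay from the optimization step. This change improves performance and training stability, especially in deep learning models where weight decay is used as a form of regularization.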

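- SparseAdam: SparseAdam is a variant of Adam designed to handle sparse gradients more efficiently. It adapts the Adam algorithm to update model parameters only when necessary, that is, only for the entries that actually receive a gradient. This makes it particularly suitable for natural language processing (NLP) and other applications that work with sparse data.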

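- Adamax: Adamax is a variant of Adam based on the infinity norm. It is more robust to gradient noise and can be more stable than Adam in some situations, but it is used less often.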

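- ASGD (Averaged Stochastic Gradient Descent): ASGD averages the parameter values over time, which gives smoother convergence toward the end of training. It is especially useful for tasks with noisy or fluctuating gradients.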

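- LBFGS (Limited-memory Broyden–Fletcher–Goldfarb–Shanno): LBFGS is an optimization algorithm from the family of quasi-Newton methods. It approximates the Broyden–Fletcher–Goldfarb–Shanno (BFGS) algorithm using a limited amount of memory. Thanks to this memory efficiency, it is well suited to small and medium-sized optimization problems.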

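- NAdam (Nesterov-accelerated Adaptive Moment Estimation): NAdam combines Nesterov accelerated gradient with Adam, bringing the look-ahead property of Nesterov momentum into the Adam framework. This combination often improves performance and convergence speed.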

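- RAdam (Rectified Adam): RAdam introduces a rectification term into the Adam optimizer that dynamically adjusts the adaptive learning rate, addressing some issues related to convergence speed and generalization performance. It tends to give more stable and consistent optimization results.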

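- Rprop (Resilient Backpropagation): Rprop uses only the sign of the gradient to adjust the update for each parameter and ignores the gradient's magnitude. This makes it very effective on problems where gradient magnitudes vary widely, but it is not well suited to mini-batch learning or deep learning applications.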
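The update rules described in the list above can be sketched in a few lines of NumPy. This is a simplified illustration under stated assumptions, not the implementation used by any particular library: the quadratic test function, the starting point, the hyperparameter values, and all function names are hypothetical choices, and only five of the optimizers are shown.

```python
import numpy as np

# Hypothetical test problem: an elongated quadratic bowl
# f(w) = 0.5 * sum(a * w**2), whose gradient is a * w.
a = np.array([1.0, 25.0])
w0 = np.array([4.0, 4.0])
initial_loss = 0.5 * np.sum(a * w0**2)


def grad(w):
    return a * w


def sgd_step(w, g, state, lr=0.02):
    # Plain gradient step: move against the gradient.
    return w - lr * g


def momentum_step(w, g, state, lr=0.02, mu=0.9):
    # Keep a running velocity that carries a fraction of the previous update.
    state["v"] = mu * state.get("v", 0.0) + g
    return w - lr * state["v"]


def adagrad_step(w, g, state, lr=0.5, eps=1e-10):
    # Accumulate all past squared gradients, so the step size only shrinks.
    state["sum_sq"] = state.get("sum_sq", 0.0) + g**2
    return w - lr * g / (np.sqrt(state["sum_sq"]) + eps)


def rmsprop_step(w, g, state, lr=0.05, alpha=0.9, eps=1e-8):
    # Replace the running sum with an exponentially decaying average.
    state["avg_sq"] = alpha * state.get("avg_sq", 0.0) + (1 - alpha) * g**2
    return w - lr * g / (np.sqrt(state["avg_sq"]) + eps)


def adam_step(w, g, state, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
    # Track first and second moment estimates, with bias correction.
    t = state["t"] = state.get("t", 0) + 1
    state["m"] = beta1 * state.get("m", 0.0) + (1 - beta1) * g
    state["v"] = beta2 * state.get("v", 0.0) + (1 - beta2) * g**2
    m_hat = state["m"] / (1 - beta1**t)
    v_hat = state["v"] / (1 - beta2**t)
    return w - lr * m_hat / (np.sqrt(v_hat) + eps)


def run(step_fn, steps=200):
    """Apply one update rule repeatedly and return the final loss."""
    w = w0.copy()
    state = {}
    for _ in range(steps):
        w = step_fn(w, grad(w), state)
    return 0.5 * np.sum(a * w**2)


rules = {
    "SGD": sgd_step,
    "Momentum": momentum_step,
    "Adagrad": adagrad_step,
    "RMSprop": rmsprop_step,
    "Adam": adam_step,
}
results = {name: run(fn) for name, fn in rules.items()}
for name, loss in results.items():
    print(f"{name:9s} final loss: {loss:.6f}")
```

Note how the adaptive rules (Adagrad, RMSprop, Adam) divide each coordinate's step by a statistic of that coordinate's own gradient history, so the steep and shallow directions of the bowl are treated on a similar scale, whereas SGD and momentum apply one learning rate to both.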

Code

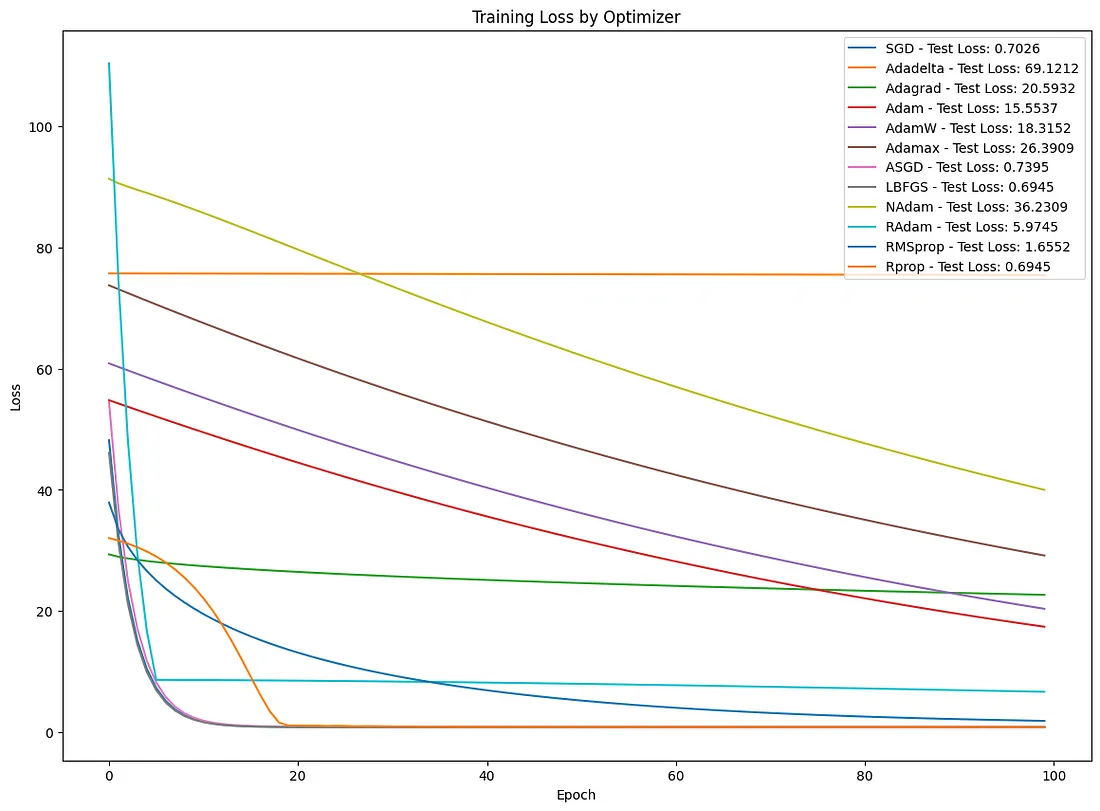

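Building a complete Python example that demonstrates these optimizers on a synthetic dataset takes a few steps. We use a simple regression problem in which the task is to predict a target variable from a single feature. The example creates a synthetic dataset, defines a simple neural network model in PyTorch, trains that model with each optimizer, and plots the training loss to compare their performance.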

```python
import torch
import torch.nn as nn
import torch.optim as optim
import numpy as np
import matplotlib.pyplot as plt
from sklearn.model_selection import train_test_split
from sklearn.metrics import mean_squared_error

# Generate synthetic data
np.random.seed(42)
X = np.random.rand(1000, 1) * 5  # Features
y = 2.7 * X + np.random.randn(1000, 1) * 0.9  # Target variable with noise

# Convert to torch tensors
X = torch.from_numpy(X).float()
y = torch.from_numpy(y).float()

# Split the dataset into training and testing sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)


class LinearRegressionModel(nn.Module):
    def __init__(self):
        super().__init__()
        self.linear = nn.Linear(1, 1)  # One input feature and one output

    def forward(self, x):
        return self.linear(x)


def train_model(optimizer_name, learning_rate=0.01, epochs=100):
    # Seed here so every optimizer starts from the same initial weights
    torch.manual_seed(42)
    model = LinearRegressionModel()
    criterion = nn.MSELoss()

    # Select optimizer
    optimizers = {
        "SGD": optim.SGD(model.parameters(), lr=learning_rate),
        "Adadelta": optim.Adadelta(model.parameters(), lr=learning_rate),
        "Adagrad": optim.Adagrad(model.parameters(), lr=learning_rate),
        "Adam": optim.Adam(model.parameters(), lr=learning_rate),
        "AdamW": optim.AdamW(model.parameters(), lr=learning_rate),
        "Adamax": optim.Adamax(model.parameters(), lr=learning_rate),
        "ASGD": optim.ASGD(model.parameters(), lr=learning_rate),
        "NAdam": optim.NAdam(model.parameters(), lr=learning_rate),
        "RAdam": optim.RAdam(model.parameters(), lr=learning_rate),
        "RMSprop": optim.RMSprop(model.parameters(), lr=learning_rate),
        "Rprop": optim.Rprop(model.parameters(), lr=learning_rate),
    }
    if optimizer_name == "LBFGS":
        optimizer = optim.LBFGS(model.parameters(), lr=learning_rate, max_iter=20, history_size=100)
    else:
        optimizer = optimizers[optimizer_name]

    train_losses = []
    for epoch in range(epochs):
        # LBFGS re-evaluates the loss several times per step, so it needs a closure
        def closure():
            if torch.is_grad_enabled():
                optimizer.zero_grad()
            outputs = model(X_train)
            loss = criterion(outputs, y_train)
            if loss.requires_grad:
                loss.backward()
            return loss

        # Special handling for LBFGS
        if optimizer_name == "LBFGS":
            optimizer.step(closure)
            # Record the loss after the step, without tracking gradients
            with torch.no_grad():
                train_losses.append(closure().item())
        else:
            # Forward pass
            y_pred = model(X_train)
            loss = criterion(y_pred, y_train)
            train_losses.append(loss.item())

            # Backward pass and optimize
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()

    # Test the model
    model.eval()
    with torch.no_grad():
        y_pred = model(X_test)
    test_loss = mean_squared_error(y_test.numpy(), y_pred.numpy())
    return train_losses, test_loss


optimizer_names = ["SGD", "Adadelta", "Adagrad", "Adam", "AdamW", "Adamax", "ASGD", "LBFGS", "NAdam", "RAdam", "RMSprop", "Rprop"]

plt.figure(figsize=(14, 10))
for optimizer_name in optimizer_names:
    train_losses, test_loss = train_model(optimizer_name, learning_rate=0.01, epochs=100)
    plt.plot(train_losses, label=f"{optimizer_name} - Test Loss: {test_loss:.4f}")

plt.xlabel("Epoch")
plt.ylabel("Loss")
plt.title("Training Loss by Optimizer")
plt.legend()
plt.show()
```

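This example gives a basic comparison of how the optimizers behave on a simple synthetic dataset. On more complex models and datasets, the differences between optimizers can be more pronounced, and the choice of optimizer can have a significant effect on model performance.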
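SparseAdam appears in the list of optimizers at the top of this article but not in the comparison script. A likely reason is that PyTorch's `optim.SparseAdam` rejects dense gradients, so it cannot be dropped into a loop built around a dense `nn.Linear` layer. The sketch below shows one setting where it does apply, an embedding layer created with `sparse=True`. The toy objective, the sizes, and the chosen indices are hypothetical and serve only to illustrate the idea.

```python
import torch
import torch.nn as nn
import torch.optim as optim

torch.manual_seed(0)

# sparse=True makes the embedding layer produce sparse gradients,
# which is the kind of gradient SparseAdam expects.
embedding = nn.Embedding(num_embeddings=1000, embedding_dim=8, sparse=True)
optimizer = optim.SparseAdam(list(embedding.parameters()), lr=0.01)

# Hypothetical toy objective: shrink the embeddings of a few looked-up rows.
indices = torch.tensor([1, 5, 42])
before = embedding.weight.detach().clone()

for _ in range(50):
    optimizer.zero_grad()
    loss = embedding(indices).pow(2).sum()
    loss.backward()   # embedding.weight.grad is a sparse tensor here
    optimizer.step()  # only rows present in the sparse gradient are updated

after = embedding.weight.detach()
changed_rows = (before != after).any(dim=1).nonzero().flatten().tolist()
print("Rows updated:", changed_rows)
```

Only the rows that were actually looked up change. The remaining rows of the embedding table are left untouched, which is what makes this variant attractive for large vocabularies.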

Conclusion

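In short, every optimizer has its strengths and weaknesses, and the choice of optimizer can strongly affect the performance of a machine learning model. The right choice depends on the specific problem, the nature of the data, and the model architecture. Understanding the underlying mechanisms and characteristics of these optimizers is essential for applying them effectively to a range of machine learning problems.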

Source: https://medium.com/ai-mind-labs/exploring-the-landscape-a-comparative-analysis-of-common-optimization-algorithms-in-machine-c00d48a28f7d
