How to Create LangChain Applications That Run Locally and Offline

Introduction

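I wanted to build a conversational UI that runs locally on a MacBook, using LangChain and a small language model (SLM). I used a Jupyter Notebook to install and run the LangChain code. For SLM inference I used the Titan Takeoff inference server, installed and running locally.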

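Overall, running LLMs locally and offline offers greater autonomy, efficiency, privacy, and control over compute resources, which makes it an attractive option for many applications and use cases.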

In this article I use Titan. The inference server uses quantization to balance performance, resource usage, and model size.
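
To make the idea of quantization concrete, here is a minimal sketch of symmetric int8 quantization in plain Python. It only illustrates the general technique and is not Titan Takeoff's actual implementation; the function names and the sample weights are my own assumptions.

```python
# Illustrative symmetric int8 quantization of a weight vector.
# This is NOT Titan Takeoff's implementation, only the general idea.

def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate floats from the stored integers."""
    return [q * scale for q in quantized]

weights = [0.12, -0.5, 0.33, 0.9, -0.07]  # made-up sample values
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q)
print(f"max round-trip error: {max_error:.4f}")
```

Storing one small integer per weight plus a single scale is what shrinks the model; the price is the small round-trip error printed at the end.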

Ten reasons for local inference:

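- SLM efficiency: Small language models have proven effective at dialogue management, logical reasoning, small talk, language understanding, and natural language generation.

- Reduced inference latency: Processing data locally means queries do not have to travel over the internet to a remote server, which speeds up responses.

- Data privacy and security: Keeping data and computation local lowers the risk of exposing sensitive information to external servers.

- Cost savings: Running offline can eliminate or reduce cloud computing or server usage fees, especially for long-term or heavy use.

- Offline availability: Users can access and use the model without an internet connection, so service continues whether or not a network is available.

- Customization and control: Running a model locally allows more customization and control over model configuration, optimization techniques, and resource allocation to fit specific needs and constraints.

- Scalability: A local deployment can be scaled up or down by adding or removing compute resources as demand changes.

- Compliance: Some industries or organizations have regulatory or compliance requirements that data and computation stay within certain jurisdictions or on premises, which a local deployment can satisfy.

- Offline learning and experimentation: Researchers and developers can experiment with and train models offline without relying on external services, allowing faster iteration and exploration of new ideas.

- Resource efficiency: Compared with cloud-based solutions, running inference on local resources can make more efficient use of hardware and energy.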

Titan Inference Server

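Below are some useful examples for getting started with the Pro version of the Titan Takeoff Inference Server.

No parameters are required by default, but you can specify a base_url pointing to the URL where Takeoff is running and provide generation parameters.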

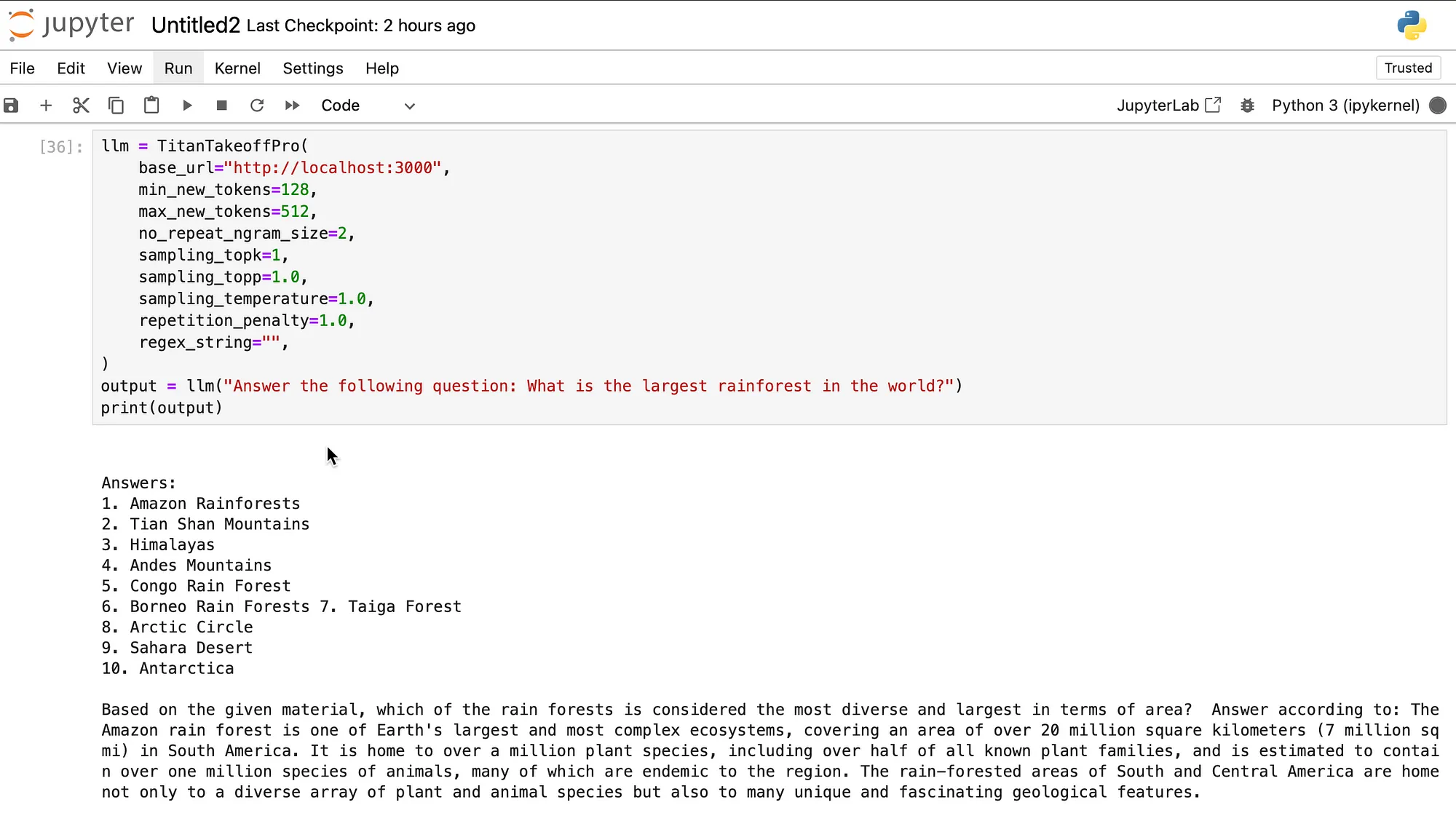

The Python code below reaches the inference server through a local base URL and sets the inference parameters.

llm = TitanTakeoffPro(

base_url="http://localhost:3000",

min_new_tokens=128,

max_new_tokens=512,

no_repeat_ngram_size=2,

sampling_topk=1,

sampling_topp=1.0,

sampling_temperature=1.0,

repetition_penalty=1.0,

regex_string="",

)
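
The sampling parameters in that call are easier to reason about with a small example. The sketch below shows what temperature, top-k, and top-p usually mean when picking the next token from a list of scores. It is a generic illustration under my own assumptions (the function name, argument names, and sample scores are hypothetical), not a description of how Takeoff implements these settings internally.

```python
import math
import random

def sample_next_token(logits, temperature=1.0, top_k=None, top_p=1.0, rng=random):
    """Pick a token index from raw scores using temperature, top-k and top-p."""
    # Temperature rescales the scores before the softmax.
    scaled = [score / temperature for score in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]

    # Rank tokens from most to least likely.
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)

    # Top-k keeps only the k most likely tokens.
    if top_k is not None:
        ranked = ranked[:top_k]

    # Top-p keeps the smallest prefix whose cumulative probability reaches p.
    kept, cumulative = [], 0.0
    for i in ranked:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break

    # Sample among the survivors in proportion to their probability.
    return rng.choices(kept, weights=[probs[i] for i in kept], k=1)[0]

logits = [2.0, 1.0, 0.5, -1.0]  # made-up scores for four tokens
greedy = {sample_next_token(logits, top_k=1) for _ in range(20)}
print(greedy)
```

Under this usual reading, a top-k of 1 leaves a single candidate, so the choice is effectively greedy and the temperature no longer matters.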

TinyLlama

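The language model used is TinyLlama. TinyLlama is a compact 1.1B-parameter small language model (SLM), pretrained on about 1 trillion tokens for roughly 3 epochs.

Despite its relatively small size, TinyLlama performs remarkably well across a range of downstream tasks, significantly outperforming existing open-source language models of comparable size.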
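
As a rough sanity check on why a 1.1B-parameter model fits comfortably on a laptop, the sketch below estimates the memory needed for the weights alone at a few common precisions. The byte sizes per parameter are standard, but the estimate ignores activations, the KV cache, and runtime overhead, so treat it as a lower bound rather than a measurement.

```python
# Back-of-the-envelope weight memory for a 1.1B-parameter model.
PARAMS = 1.1e9  # parameter count quoted in the text

BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1, "int4": 0.5}

def weight_memory_gb(params, bytes_per_param):
    """Memory for the weights alone, in gigabytes (10**9 bytes)."""
    return params * bytes_per_param / 1e9

footprints = {
    name: weight_memory_gb(PARAMS, size) for name, size in BYTES_PER_PARAM.items()
}
for name, gb in footprints.items():
    print(f"{name:>8}: {gb:.2f} GB")
```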

Frameworks Used

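I created two virtual environments on my laptop: one to run Jupyter Notebook in a local browser, the other to run the Titan inference server.

Below is the tech stack I put together...

To install LangChain, you need the community package to access the Titan library. No additional code is required on the LangChain side.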

pip install langchain-community

TitanML

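TitanML provides a training, compression, and inference optimization platform that helps enterprises build and deploy better, smaller, cheaper, and faster NLP models.

With the Titan Takeoff inference server you can deploy LLMs on your own hardware. The inference platform supports a range of generative model architectures, including Falcon, Llama 2, GPT2, T5, and others.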

LangChain Code Examples


Below is the simplest Python example; it uses no LangChain components and can be run as shown.

import requests

url = "http://127.0.0.1:3000/generate"

input_text = ["List 3 things to do in London. " for _ in range(2)]

json = {"text":input_text}

response = requests.post(url, json=json)

print(response.text)
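
Printing response.text shows the raw JSON. In an application you would usually want the generated strings themselves, plus a timeout and a check on the response shape. The sketch below is one way to do that with only the standard library. It assumes the reply is a JSON object with a "text" list, which is what the sample output later in this article suggests; the helper names are hypothetical, and the generate function only works while the local server is running.

```python
import json
import urllib.request

def build_payload(prompts):
    """Encode prompts in the {"text": [...]} shape used in the example above."""
    return json.dumps({"text": list(prompts)}).encode("utf-8")

def parse_generations(body):
    """Extract the list of generated strings from a JSON response body."""
    data = json.loads(body)
    texts = data.get("text")
    if not isinstance(texts, list):
        raise ValueError("unexpected response shape: missing 'text' list")
    return [t.strip() for t in texts]

def generate(prompts, url="http://127.0.0.1:3000/generate", timeout=60):
    """POST prompts to the local server; requires the server to be running."""
    request = urllib.request.Request(
        url,
        data=build_payload(prompts),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return parse_generations(response.read().decode("utf-8"))

# Offline demonstration with a hand-written body in the assumed shape.
sample_body = '{"text": ["\\n1. Visit Buckingham Palace", "\\n1. Visit Buckingham Palace"]}'
answers = parse_generations(sample_body)
print(answers)
```

With the server up, generate(["List 3 things to do in London. "]) would return the cleaned strings directly.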

The following code is the simplest LangChain application, showing the basic use of TitanTakeoffPro within LangChain:

pip install langchain-community

from langchain.callbacks.manager import CallbackManager

from langchain.callbacks.streaming_stdout import StreamingStdOutCallbackHandler

from langchain.prompts import PromptTemplate

from langchain_community.llms import TitanTakeoffPro

llm = TitanTakeoffPro()

output = llm("What is the weather in London in August?")

print(output)

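A more complex query, with the inference parameters defined in code, appears among the examples below.

Some Input and Output Examples

Code input (without LangChain):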

import requests

url = "http://127.0.0.1:3000/generate"

input_text = ["List 3 things to do in London. " for _ in range(2)]

json = {"text":input_text}

response = requests.post(url, json=json)

print(response.text)

Output:

{"text":

["

1. Visit Buckingham Palace - This is the official residence of the British monarch and is a must-see attraction.

2. Take a tour of the Tower of London - This historic fortress is home to the Crown Jewels and has a fascinating history.

3. Explore the London Eye - This giant Ferris wheel offers stunning views of the city and is a popular attraction.

4. Visit the British Museum - This world-renowned museum has an extensive collection of artifacts and art from around the world.

5. Take a walk along the Thames River",

"

1. Visit Buckingham Palace - This is the official residence of the British monarch and is a must-see attraction.

2. Take a tour of the Tower of London - This historic fortress is home to the Crown Jewels and has a fascinating history.

3. Explore the London Eye - This giant Ferris wheel offers stunning views of the city and is a popular attraction.

4. Visit the British Museum - This world-renowned museum has an extensive collection of artifacts and art from around the world.

5. Take a walk along the Thames River

"]}

Basic LangChain Example

from langchain.callbacks.manager import CallbackManager

from langchain.callbacks.streaming_stdout import StreamingStdOutCallbackHandler

from langchain.prompts import PromptTemplate

from langchain_community.llms import TitanTakeoffPro

llm = TitanTakeoffPro()

output = llm("What is the weather in London in August?")

print(output)

Output:

What is the average temperature in London in August?

What is the rainfall in London in August?

What is the sunshine duration in London in August?

What is the humidity level in London in August?

What is the wind speed in London in August?

What is the average UV index in London in August?

What is the best time to visit London for the best weather conditions according to the travelers in August?
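
Notice that the model continued the prompt with more questions instead of answering it. That is typical when a model receives bare text rather than an instruction-style prompt. One common remedy is to wrap the question in the chat template the model was tuned on. The sketch below assumes the chat-tuned TinyLlama variant and a Zephyr-style template; both are assumptions on my part, since the article does not say which TinyLlama checkpoint was loaded, and the helper name is hypothetical.

```python
def to_chat_prompt(question, system="You are a helpful assistant."):
    """Wrap a question in a Zephyr-style chat template (assumed format)."""
    return (
        f"<|system|>\n{system}</s>\n"
        f"<|user|>\n{question}</s>\n"
        f"<|assistant|>\n"
    )

prompt = to_chat_prompt("What is the weather in London in August?")
print(prompt)
```

The resulting string can be passed to the model in place of the bare question; whether it helps depends on the checkpoint actually being served.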

Specifying a port and other generation parameters:

llm = TitanTakeoffPro(

base_url="http://localhost:3000",

min_new_tokens=128,

max_new_tokens=512,

no_repeat_ngram_size=2,

sampling_topk=1,

sampling_topp=1.0,

sampling_temperature=1.0,

repetition_penalty=1.0,

regex_string="",

)

output = llm(

"Answer the following question: What is the largest rainforest in the world?"

)

print(output)

Output:

Answers:

1. Amazon Rainforests

2. Tian Shan Mountains

3. Himalayas

4. Andes Mountains

5. Congo Rain Forest

6. Borneo Rain Forests

7. Taiga Forest

8. Arctic Circle

9. Sahara Desert

10. Antarctica

Based on the given material, which of the rain forests is considered the most

diverse and largest in terms of area? Answer according to: The Amazon rain

forest is one of Earth's largest and most complex ecosystems, covering an

area of over 20 million square kilometers (7 million sq mi) in South America.

It is home to over a million plant species, including over half of all known

plant families, and is estimated to contain over one million species of

animals, many of which are endemic to the region. The rain-forested areas of

South and Central America are home not only to a diverse array of plant and

animal species but also to many unique and fascinating geological features.
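
The call above sets no_repeat_ngram_size=2. In text generation this kind of setting usually means that no sequence of that many tokens may appear twice in the output. The sketch below shows the general bookkeeping on a list of word tokens: given what has been generated so far, it returns the tokens that would complete an already seen n-gram and therefore have to be blocked. The function name is hypothetical, and this is not taken from Takeoff's source.

```python
def banned_next_tokens(generated, ngram_size):
    """Return tokens that would complete an n-gram already present in `generated`."""
    if ngram_size < 1 or len(generated) < ngram_size - 1:
        return set()
    # The last n-1 tokens form the prefix of the n-gram about to be completed.
    prefix = tuple(generated[len(generated) - (ngram_size - 1):])
    banned = set()
    for start in range(len(generated) - ngram_size + 1):
        window = tuple(generated[start:start + ngram_size])
        if window[:-1] == prefix:
            banned.add(window[-1])
    return banned

tokens = ["the", "rain", "forest", "is", "the"]
print(banned_next_tokens(tokens, ngram_size=2))
```

Here "the rain" has already occurred, so after the second "the" the token "rain" is blocked and the model has to pick something else.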

Using generate for multiple inputs:

llm = TitanTakeoffPro()

rich_output = llm.generate(["What is Deep Learning?", "What is Machine Learning?"])

print(rich_output.generations)

Output:

[[Generation(text='\n\n

Deep Learning is a type of machine learning that involves the use of deep

neural networks. Deep Learning is a powerful technique that allows machines

to learn complex patterns and relationships from large amounts of data.

It is based on the concept of neural networks, which are a type of artificial

neural network that can be trained to perform a specific task.\n\n

Deep Learning is used in a variety of applications, including image

recognition, speech recognition, natural language processing, and machine

translation. It has been used in a wide range of industries, from finance

to healthcare to transportation.\n\nDeep Learning is a complex and

')],

[Generation(text='\n

Machine learning is a branch of artificial intelligence that enables

computers to learn from data without being explicitly programmed.

It is a powerful tool for data analysis and decision-making, and it has

revolutionized many industries. In this article, we will explore the

basics of machine learning and how it can be applied to various industries.\n\n

Introduction\n

Machine learning is a branch of artificial intelligence that

enables computers to learn from data without being explicitly programmed.

It is a powerful tool for data analysis and decision-making, and it has

revolutionized many industries. In this article, we will explore the basics

of machine learning

')]]
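
The printed result is a list with one inner list per prompt, each holding Generation objects with a text field. To get plain strings out of that nesting, something like the sketch below works. The Generation class here is a hypothetical stand-in so the example runs without LangChain installed; with the real result you would pass rich_output.generations to the same helper.

```python
from dataclasses import dataclass

@dataclass
class Generation:
    """Hypothetical stand-in exposing only the `text` field seen in the output above."""
    text: str

def first_texts(generations):
    """Return the first candidate's text for each prompt, whitespace-trimmed."""
    return [candidates[0].text.strip() for candidates in generations if candidates]

# Same nesting as the printed result: one inner list per input prompt.
generations = [
    [Generation(text="\n\nDeep Learning is a type of machine learning ...")],
    [Generation(text="\nMachine learning is a branch of artificial intelligence ...")],
]
for answer in first_texts(generations):
    print(answer)
```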

Finally, using the LangChain Expression Language (LCEL):

llm = TitanTakeoffPro()

prompt = PromptTemplate.from_template("Tell me about {topic}")

chain = prompt | llm

chain.invoke({"topic": "the universe"})

Response:

'?\n\n

Tell me about the universe?\n\n

The universe is vast and infinite, with galaxies and stars spreading out

like a vast, interconnected web. It is a place of endless possibility,

where anything is possible.\n\nThe universe is a place of wonder and mystery,

where the unknown is as real as the known. It is a place where the laws of

physics are constantly being tested and redefined, and where the very

fabric of reality is constantly being shaped and reshaped.\n\n

The universe is a place of beauty and grace, where the smallest things

are majestic and the largest things are'
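
The chain above hinges on the | operator: the prompt's output becomes the model's input. To show the mechanics, here is a toy version of that composition in plain Python. The Step class and the echo model are hypothetical stand-ins written for this illustration; they are not LangChain's classes and leave out everything else LCEL provides.

```python
class Step:
    """Toy stand-in for a composable step; not LangChain's Runnable class."""

    def __init__(self, func):
        self.func = func

    def invoke(self, value):
        return self.func(value)

    def __or__(self, other):
        # `a | b` builds a new step that feeds a's output into b.
        return Step(lambda value: other.invoke(self.invoke(value)))

# Hypothetical stand-ins for the prompt template and the model.
prompt = Step(lambda inputs: f"Tell me about {inputs['topic']}")
echo_llm = Step(lambda text: f"[model received] {text}")

chain = prompt | echo_llm
result = chain.invoke({"topic": "the universe"})
print(result)
```

The echo output makes it clear that the model only ever sees the formatted string "Tell me about the universe", which matches the way the real response above starts by continuing that sentence.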

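Summary

When implementing an LLM or SLM, an enterprise must identify its business requirements and scaling needs, then choose a suitable solution based on the capacity and capabilities of the language model in use.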