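LangGraph: Building a Multi-Agent LLM Framework

Artificial intelligence (AI) is rapidly changing how we live and work, and software engineering is changing along with it. Software development plays a pivotal role in shaping our future, especially as the era of AI and automation arrives.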

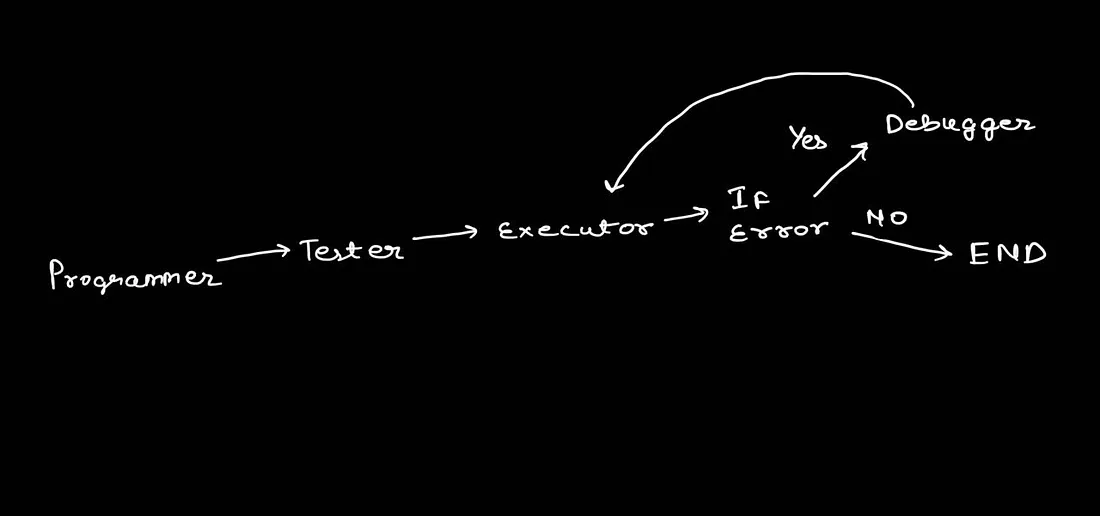

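LLM-based agents are increasingly used to automate many of the tasks that software development depends on, from writing code to testing and debugging. In a multi-agent framework these agents can run autonomously, which reduces the need for extensive human intervention. This article walks through building a multi-agent coder with LangGraph, exploring its capabilities and how it combines agent-based and chain-based LLM solutions.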

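Introduction to LangGraph

LangGraph extends the LangChain ecosystem by making it possible to build sophisticated agent runtimes that use large language models for reasoning and decision-making inside a cyclic graph structure.

Key components of LangGraph:

- State graph: LangGraph's main graph type is the StateGraph. It is parameterized by a state object that is passed to every node. Each node returns an operation that updates that state, either by setting specific attributes or by adding to existing ones.

- Nodes: A node represents an agent component responsible for one specific task in the application. Each node reads the state object and returns an update to it, according to its role in the agent architecture.

- Edges: Edges connect the nodes and define how information and control flow between the components of the application. They let nodes communicate and coordinate to deliver the desired behavior.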
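
To make these three components concrete, here is a minimal plain-Python sketch of the idea. It is not LangGraph's implementation: the `run` helper, the `draft` and `review` nodes, and the `END` sentinel are hypothetical names invented for this illustration. It only shows how nodes return partial state updates and how edges, including a conditional one, pick the next node in a loop.

```python
from typing import Any, Callable, Dict

State = Dict[str, Any]
END = "__end__"  # hypothetical sentinel for this sketch, not imported from LangGraph


def draft(state: State) -> State:
    # A node returns only the keys it wants to update.
    return {"attempts": state["attempts"] + 1}


def review(state: State) -> State:
    # This toy reviewer approves from the second attempt onward.
    return {"approved": state["attempts"] >= 2}


nodes: Dict[str, Callable[[State], State]] = {"draft": draft, "review": review}

# Each edge maps a node to a function that names the next node.
edges: Dict[str, Callable[[State], str]] = {
    "draft": lambda state: "review",                                # fixed edge
    "review": lambda state: END if state["approved"] else "draft",  # conditional edge
}


def run(entry: str, state: State, max_steps: int = 25) -> State:
    current = entry
    for _ in range(max_steps):
        state = {**state, **nodes[current](state)}  # merge the partial update
        current = edges[current](state)
        if current == END:
            return state
    raise RuntimeError("step limit reached without hitting END")


final_state = run("draft", {"attempts": 0, "approved": False})
print(final_state)  # {'attempts': 2, 'approved': True}
```

The cycle between `draft` and `review` mirrors the kind of loop a cyclic agent graph allows: the run keeps going around until the conditional edge decides it is done.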

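Implementation with LangGraph

We start by defining the architecture flow and the individual agents. Each agent is specialized for one task and assigned a role.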

Agent node: "Programmer"

Task: write the program that meets the given requirements

    from langchain.chains.openai_functions import create_structured_output_runnable
    from langchain_core.prompts import ChatPromptTemplate
    # Assumption: BaseModel and Field come from the pydantic v1 shim in langchain_core
    from langchain_core.pydantic_v1 import BaseModel, Field

    # `llm` is assumed to be a chat model with function-calling support, created elsewhere

    class Code(BaseModel):
        """Python code generated for the requirement"""

        code: str = Field(
            description="Detailed optimized error-free Python code on the provided requirements"
        )

    code_gen_prompt = ChatPromptTemplate.from_template(
        '''**Role**: You are an expert Python software programmer. You need to develop Python code.
    **Task**: As a programmer, you are required to complete the function. Use a Chain-of-Thought approach to break
    down the problem, create pseudocode, and then write the code in Python language. Ensure that your code is
    efficient, readable, and well-commented.
    **Instructions**:
    1. **Understand and Clarify**: Make sure you understand the task.
    2. **Algorithm/Method Selection**: Decide on the most efficient way.
    3. **Pseudocode Creation**: Write down the steps you will follow in pseudocode.
    4. **Code Generation**: Translate your pseudocode into executable Python code.
    *REQUIREMENT*
    {requirement}'''
    )

    # Chain that returns a Code object instead of free text
    coder = create_structured_output_runnable(Code, llm, code_gen_prompt)

Agent node: "Tester"

Task: generate test-case inputs and their expected outputs

    from typing import List

    from langchain.chains.openai_functions import create_structured_output_runnable
    from langchain_core.prompts import ChatPromptTemplate
    # Assumption: BaseModel and Field come from the pydantic v1 shim in langchain_core
    from langchain_core.pydantic_v1 import BaseModel, Field

    class Tester(BaseModel):
        """Test-case inputs and expected outputs for the provided code"""

        Input: List[List] = Field(
            description="Input for Test cases to evaluate the provided code"
        )
        Output: List[List] = Field(
            description="Expected Output for Test cases to evaluate the provided code"
        )

    test_gen_prompt = ChatPromptTemplate.from_template(
        '''**Role**: As a tester, your task is to create basic and simple test cases based on the provided Requirement and Python Code.
    These test cases should cover basic and edge scenarios to ensure the code's robustness, reliability, and scalability.
    **1. Basic Test Cases**:
    - **Objective**: Basic, small-scale test cases to validate basic functioning
    **2. Edge Test Cases**:
    - **Objective**: To evaluate the function's behavior under extreme or unusual conditions.
    **Instructions**:
    - Implement a comprehensive set of test cases based on the requirements.
    - Pay special attention to edge cases, as they often reveal hidden bugs.
    - Only generate basic and edge cases that are small.
    - Avoid generating large-scale and medium-scale test cases. Focus only on small, basic test cases.
    *REQUIREMENT*
    {requirement}
    **Code**
    {code}
    '''
    )

    # The schema passed here must be the Tester class defined above
    tester_agent = create_structured_output_runnable(Tester, llm, test_gen_prompt)
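
The Tester returns two parallel lists, one of inputs and one of expected outputs. In this article the Executor agent asks the LLM to write the actual test harness, so the sketch below is only an illustration of how such paired lists could be checked by hand. The `run_cases` helper and the `add` function are hypothetical, and the sketch assumes each inner input list holds positional arguments and each inner output list wraps a single expected return value.

```python
from typing import Any, Callable, List


def run_cases(
    func: Callable[..., Any],
    inputs: List[List[Any]],
    outputs: List[List[Any]],
) -> List[str]:
    """Return one message per failing case; an empty list means all passed."""
    failures = []
    for args, expected in zip(inputs, outputs):
        try:
            actual = func(*args)
        except Exception as exc:
            failures.append(f"{args}: raised {exc!r}")
            continue
        # Assumption: the expected value is wrapped in a one-element list.
        if [actual] != expected:
            failures.append(f"{args}: expected {expected}, got {[actual]}")
    return failures


def add(a, b):  # toy function under test
    return a + b


# The last expected output is deliberately wrong to show a failure report.
failures = run_cases(add, inputs=[[1, 2], [0, 0], [-1, 1]], outputs=[[3], [0], [1]])
print(failures)  # ['[-1, 1]: expected [1], got [0]']
```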

Agent node: "Executor"

Task: run the code against the provided test cases in a Python environment

    class ExecutableCode(BaseModel):
        """Python code with test-case assertions added"""

        code: str = Field(
            description="Detailed optimized error-free Python code with test cases assertion"
        )

    python_execution_gen = ChatPromptTemplate.from_template(
        """You have to add a testing layer to the *Python Code* that can help to execute the code. You need to pass only the Provided Input as argument and validate that the Given Expected Output is matched.
    *Instruction*:
    - Make sure to return the error if the assertion fails
    - Generate code that can be executed
    Python Code to execute:
    *Python Code*:{code}
    Input and Output For Code:
    *Input*:{input}
    *Expected Output*:{output}"""
    )

    # Chain that wraps the code and test cases into one executable script
    execution = create_structured_output_runnable(ExecutableCode, llm, python_execution_gen)

Agent node: "Debugger"

Task: use the LLM's knowledge to debug the code whenever an error occurs, then send the result back to the "Executor" agent.

    class RefineCode(BaseModel):
        """Refined Python code that resolves the reported error"""

        code: str = Field(
            description="Optimized and Refined Python code to resolve the error"
        )

    python_refine_gen = ChatPromptTemplate.from_template(
        """You are an expert in Python debugging. You have to analyze the given Code and Error and generate code that handles the error.
    *Instructions*:
    - Make sure to generate error-free code
    - The generated code must be able to handle the error
    *Code*: {code}
    *Error*: {error}
    """
    )

    # Chain that takes the failing code plus the error and returns a fixed version
    refine_code = create_structured_output_runnable(RefineCode, llm, python_refine_gen)

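Conditional edge: "Decision_To_End"

Task: This is a conditional edge that follows the "Executor" node. If the Executor recorded an error, it routes to the "Debugger" to resolve it; otherwise it ends the run.

Graph state and graph node definitions

In LangGraph, the state object keeps track of the graph's state.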

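    from typing import Any, Dict, Optional, TypedDict

    class AgentCoder(TypedDict):
        requirement: str       # Requirement (problem statement) being solved
        code: str              # Latest version of the code
        tests: Dict[str, Any]  # Generated test cases, stored under 'input' and 'output'
        errors: Optional[str]  # Error from the last execution, if any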

Define the function that each agent node runs

    def programmer(state):
        print('Entering in Programmer')
        requirement = state['requirement']
        code_ = coder.invoke({'requirement': requirement})
        return {'code': code_.code}

    def debugger(state):
        print('Entering in Debugger')
        errors = state['errors']
        code = state['code']
        refine_code_ = refine_code.invoke({'code': code, 'error': errors})
        # Clear the error so the Executor re-checks the refined code
        return {'code': refine_code_.code, 'errors': None}

    def executer(state):
        print('Entering in Executer')
        tests = state['tests']
        input_ = tests['input']
        output_ = tests['output']
        code = state['code']
        executable_code = execution.invoke({'code': code, 'input': input_, 'output': output_})
        # print(f"Executable Code - {executable_code.code}")
        error = None
        try:
            # Run in a fresh namespace so functions defined by the generated code can see each other.
            # Caution: exec runs LLM-generated code with no sandboxing.
            exec(executable_code.code, {'__name__': '__main__'})
            print('Code Execution Successful')
        except Exception as e:
            print('Found Error While Running')
            error = f"Execution Error : {e}"
        return {'code': executable_code.code, 'errors': error}

    def tester(state):
        print('Entering in Tester')
        requirement = state['requirement']
        code = state['code']
        tests = tester_agent.invoke({'requirement': requirement, 'code': code})
        return {'tests': {'input': tests.Input, 'output': tests.Output}}

    def decide_to_end(state):
        print('Entering in Decide to End')
        # Route to the debugger when the last execution recorded an error
        if state['errors']:
            return 'debugger'
        else:
            return 'end'
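
The Executor step boils down to "run a script and turn any exception into an error string". A minimal sketch of that pattern, with no LLM involved, might look like the following. The `run_snippet` helper and the two sample scripts are hypothetical. Unlike the Executor above, this sketch also prefixes the exception type, because a bare `assert` that fails carries no message of its own.

```python
from typing import Optional


def run_snippet(source: str) -> Optional[str]:
    """Execute source in a fresh namespace; return an error string, or None on success."""
    namespace = {"__name__": "__main__"}
    try:
        exec(source, namespace)  # caution: runs arbitrary code with no sandboxing
    except Exception as exc:
        return f"Execution Error : {type(exc).__name__}: {exc}"
    return None


passing = "def square(x):\n    return x * x\nassert square(3) == 9\n"
failing = "def square(x):\n    return x + x\nassert square(3) == 9, 'square(3) should be 9'\n"

print(run_snippet(passing))  # None
print(run_snippet(failing))  # Execution Error : AssertionError: square(3) should be 9
```

A `None` result corresponds to the 'end' branch of the routing function, and any non-empty string corresponds to the 'debugger' branch.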

Wire the nodes together with edges, and add the conditional edge "Decision_To_End"

    from langgraph.graph import END, StateGraph

    workflow = StateGraph(AgentCoder)

    # Define the nodes
    workflow.add_node("programmer", programmer)
    workflow.add_node("debugger", debugger)
    workflow.add_node("executer", executer)
    workflow.add_node("tester", tester)

    # Build the graph
    workflow.set_entry_point("programmer")
    workflow.add_edge("programmer", "tester")
    workflow.add_edge("tester", "executer")
    workflow.add_edge("debugger", "executer")

    # After the Executor, either finish or loop back through the Debugger
    workflow.add_conditional_edges(
        "executer",
        decide_to_end,
        {
            "end": END,
            "debugger": "debugger",
        },
    )

    # Compile
    app = workflow.compile()

For this run, I took a LeetCode problem and passed its problem statement to the workflow, which generated both the code and the test cases.

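    config = {"recursion_limit": 50}  # cap on graph steps, which bounds the debug/execute loop
    # `requirement` is assumed to be a string holding the problem statement
    inputs = {"requirement": requirement}
    running_dict = {}

    # Top-level `async for` needs an async context such as a notebook
    async for event in app.astream(inputs, config=config):
        for k, v in event.items():
            running_dict[k] = v  # keep the latest output of each node
            if k != "__end__":
                print(v)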
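
To see what `running_dict` ends up holding, the loop can be replayed over hand-written events. This sketch assumes, as the loop above implies, that each streamed event is a dict keyed by node name. The event contents here are invented for illustration and are not real output from the workflow.

```python
# Hypothetical events, shaped like {node_name: output_of_that_node}
events = [
    {"programmer": {"code": "<generated code>"}},
    {"tester": {"tests": {"input": [[1, 2]], "output": [[3]]}}},
    {"executer": {"code": "<code with tests>", "errors": "Execution Error : boom"}},
    {"debugger": {"code": "<refined code>", "errors": None}},
    {"executer": {"code": "<refined code with tests>", "errors": None}},
]

running_dict = {}
for event in events:
    for node_name, update in event.items():
        running_dict[node_name] = update  # a later visit overwrites an earlier one

print(sorted(running_dict))                # ['debugger', 'executer', 'programmer', 'tester']
print(running_dict["executer"]["errors"])  # None
```

Because the Executor runs twice in this trace, only its second, error-free output survives in `running_dict`.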

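Conclusion

LLM-based agents are a rapidly evolving field, and they still need extensive checks before they are ready for production. What is shown here is a prototype built on a multi-agent flow.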