[Guide] Fine-Tuning and Optimizing Google Gemma 2B with LoRA

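On February 21, 2024, Google introduced Gemma, a new family of lightweight open models. Gemma comes in 2B and 7B parameter sizes, and Google reports strong benchmark performance for models of this size. Gemma builds on the research and technology behind Google's Gemini models, and its name comes from the Latin word for "gemstone". Alongside the model weights, Google also released tools intended to support developer creativity, foster collaboration, and encourage responsible use of Gemma models.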

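These models were trained on a large text corpus of up to 6 trillion tokens drawn from varied sources, including web documents, code, and mathematical text. This broad training mix exposes the models to many language styles, programming syntaxes, and mathematical concepts, which helps them handle a wide range of tasks.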

This article covers two main topics:

1. Loading and running the Gemma 2B model in 4-bit precision.

2. Fine-tuning the model on instruction data.

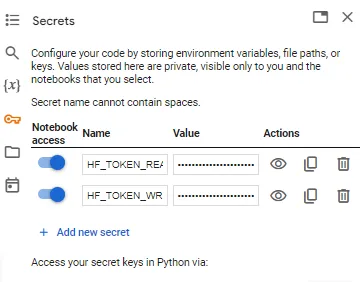

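To access the Gemma 2B model from Hugging Face in Google Colab, you need to provide an access token. First, add your Hugging Face token to the Colab Secrets under the name "HF_TOKEN_READ", which is the name the code below reads. Then expose it as the HF_TOKEN environment variable: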

import os
from google.colab import userdata

os.environ["HF_TOKEN"] = userdata.get('HF_TOKEN_READ')
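The snippet above only works inside Colab. As a minimal sketch, assuming you may also run the notebook elsewhere, the helper below tries the Colab secret first and falls back to an existing environment variable. The function name resolve_hf_token is hypothetical and not part of any library.

```python
import os


def resolve_hf_token(secret_name="HF_TOKEN_READ", env_name="HF_TOKEN"):
    """Return a Hugging Face token from Colab Secrets or the environment."""
    try:
        # google.colab is only importable inside a Colab runtime.
        from google.colab import userdata
        token = userdata.get(secret_name)
    except Exception:
        # Outside Colab, or if the secret cannot be read, use the environment.
        token = os.environ.get(env_name)
    if not token:
        raise RuntimeError(
            f"No token found: set the {secret_name} secret or the {env_name} variable."
        )
    # Store it where the rest of the notebook expects to find it.
    os.environ[env_name] = token
    return token
```

Calling resolve_hf_token() once at the top of the notebook leaves the later cells, which read os.environ['HF_TOKEN'], unchanged.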

To make sure everything runs smoothly, install the required packages:

!pip3 install -q -U bitsandbytes==0.42.0
!pip3 install -q -U peft==0.8.2
!pip3 install -q -U trl==0.7.10
!pip3 install -q -U accelerate==0.27.1
!pip3 install -q -U datasets==2.17.0
!pip3 install -q -U transformers==4.38.0
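Because the versions are pinned, it can help to confirm what actually got installed before moving on. The following is a small sketch using only the standard library; the PINNED dictionary simply repeats the versions from the install commands above, and check_versions is a hypothetical helper name.

```python
from importlib import metadata

# Versions copied from the pip commands above.
PINNED = {
    "bitsandbytes": "0.42.0",
    "peft": "0.8.2",
    "trl": "0.7.10",
    "accelerate": "0.27.1",
    "datasets": "2.17.0",
    "transformers": "4.38.0",
}


def check_versions(pinned):
    """Map each package to (installed_version_or_None, matches_pin)."""
    report = {}
    for name, wanted in pinned.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None  # package is not installed at all
        report[name] = (installed, installed == wanted)
    return report


for package, (installed, ok) in check_versions(PINNED).items():
    print(f"{package}: installed={installed} matches_pin={ok}")
```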

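Assuming you have access to the model artifacts on the Hugging Face Hub, you can start by downloading the model and tokenizer. You also need a BitsAndBytesConfig, which is used for weight-only quantization.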

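import torch
from transformers import AutoTokenizer, AutoModelForCausalLM, BitsAndBytesConfig

model_id = "google/gemma-2b"

# Weight-only 4-bit quantization (NF4), with bfloat16 used for computation.
bnb_config = BitsAndBytesConfig(
    load_in_4bit=True,
    bnb_4bit_quant_type="nf4",
    bnb_4bit_compute_dtype=torch.bfloat16
)

tokenizer = AutoTokenizer.from_pretrained(model_id, token=os.environ['HF_TOKEN'])
# Load the quantized model onto GPU 0; the generation code that follows needs this object.
model = AutoModelForCausalLM.from_pretrained(model_id, quantization_config=bnb_config, device_map={"": 0}, token=os.environ['HF_TOKEN'])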
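To see why 4-bit loading matters on a small GPU, here is a rough back-of-the-envelope sketch of the memory needed for the weights alone at different precisions. The parameter count of 2.5 billion is an approximation assumed for illustration, and the estimate ignores quantization constants, layers that stay unquantized, activations, and the KV cache.

```python
def weight_memory_gib(num_params, bits_per_param):
    """Approximate memory for the weights alone, in GiB."""
    return num_params * bits_per_param / 8 / 1024**3


# Assumption: a rough parameter count for Gemma 2B, for illustration only.
APPROX_PARAMS = 2.5e9

for label, bits in [("fp32", 32), ("bf16", 16), ("4-bit", 4)]:
    print(f"{label}: ~{weight_memory_gib(APPROX_PARAMS, bits):.2f} GiB")
```

Under these assumptions, the 4-bit weights take about a quarter of the bf16 footprint, which is what makes fine-tuning on a free Colab GPU plausible.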

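Before starting the fine-tuning process, it is worth running a quick test of the model's capabilities. To do so, we give the model the beginning of a well-known quote and look at how it completes it.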

text = "Quote: Imagination is more"
device = "cuda:0"
inputs = tokenizer(text, return_tensors="pt").to(device)

outputs = model.generate(**inputs, max_new_tokens=20)
print(tokenizer.decode(outputs[0], skip_special_tokens=True))

Output:

Quote: Imagination is more important than knowledge. Knowledge is limited. Imagination encircles the world.

-Albert Einstein

I

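Google also released instruction-tuned versions of the 7B and 2B models. These instruction-tuned models use a chat template that must be followed in conversational use. The template looks like this:

<start_of_turn>user
How does the brain work?<end_of_turn>
<start_of_turn>model

Let's try it: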
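Writing the template by hand is easy to get wrong, as a single misspelled control token changes what the model sees. As a minimal sketch, the hypothetical helper below wraps a single user message in the format shown above, ending at the model turn exactly as the article's prompts do.

```python
def build_gemma_prompt(user_message):
    """Wrap one user message in the Gemma chat format shown above."""
    return (
        "<start_of_turn>user\n"
        f"{user_message}<end_of_turn>\n"
        "<start_of_turn>model"
    )


print(build_gemma_prompt("How does the brain work?"))
```

The transformers library also offers tokenizer.apply_chat_template, which builds the prompt from the template shipped with the tokenizer; the hand-written version here is only meant to make the structure visible.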

import torch
from transformers import AutoTokenizer, AutoModelForCausalLM, BitsAndBytesConfig

model_id = "google/gemma-2b-it"

# Same weight-only 4-bit quantization settings as before.
bnb_config = BitsAndBytesConfig(
    load_in_4bit=True,
    bnb_4bit_quant_type="nf4",
    bnb_4bit_compute_dtype=torch.bfloat16
)

tokenizer = AutoTokenizer.from_pretrained(model_id, token=os.environ['HF_TOKEN'])
# Load the instruction-tuned model onto GPU 0.
model = AutoModelForCausalLM.from_pretrained(model_id, quantization_config=bnb_config, device_map={"": 0}, token=os.environ['HF_TOKEN'])

# Prompt written in the Gemma chat format.
text = """<start_of_turn>user
Explain 'AMSI init bypass' and its purpose.<end_of_turn>
<start_of_turn>model"""
device = "cuda:0"
inputs = tokenizer(text, return_tensors="pt").to(device)

outputs = model.generate(**inputs, max_new_tokens=50)
print(tokenizer.decode(outputs[0], skip_special_tokens=True))

user

Explain 'AMSI init bypass' and its purpose.

modelSure, here's a detailed explanation of the AMSI init bypass feature and its purpose:

**AMSI init bypass:**

AMSI (Advanced Microcontroller Startup Interface) init bypass is a technique used in microcontroller initialization where the initialization process is

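The output reads fluently, but it misses the security meaning of the term: in the Windows context, AMSI refers to the Antimalware Scan Interface. Let's try to improve on it. We will use the ahmed000000000/cybersec dataset, which contains instruction-response pairs from the cybersecurity domain.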

from datasets import load_dataset

data = load_dataset("ahmed000000000/cybersec")

Now let's set up the LoRA configuration.

from peft import LoraConfig

lora_config = LoraConfig(
    r=8,
    target_modules=["q_proj", "o_proj", "k_proj", "v_proj", "gate_proj", "up_proj", "down_proj"],
    task_type="CAUSAL_LM",
)
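To get a feel for how small the trainable part is, the sketch below counts the parameters LoRA adds: each adapted linear layer with input size d_in and output size d_out gains two matrices of shapes r x d_in and d_out x r. The layer shapes and layer count used here are assumptions about the Gemma 2B architecture made for illustration; check them against the loaded model's config before relying on the number.

```python
def lora_params(in_features, out_features, r):
    """Parameters added by one LoRA adapter: A is (r x in), B is (out x r)."""
    return r * (in_features + out_features)


# Assumed Gemma 2B dimensions (illustrative; verify against model.config).
HIDDEN, INTERMEDIATE, KV_DIM, LAYERS = 2048, 16384, 256, 18

# (in_features, out_features) for every module listed in target_modules.
SHAPES = {
    "q_proj": (HIDDEN, HIDDEN),
    "k_proj": (HIDDEN, KV_DIM),
    "v_proj": (HIDDEN, KV_DIM),
    "o_proj": (HIDDEN, HIDDEN),
    "gate_proj": (HIDDEN, INTERMEDIATE),
    "up_proj": (HIDDEN, INTERMEDIATE),
    "down_proj": (INTERMEDIATE, HIDDEN),
}


def total_lora_params(shapes, r, layers):
    """Sum the adapter parameters over all target modules and layers."""
    per_layer = sum(lora_params(i, o, r) for i, o in shapes.values())
    return per_layer * layers


print(f"LoRA trainable parameters: {total_lora_params(SHAPES, r=8, layers=LAYERS):,}")
```

Under these assumptions the adapters amount to well under one percent of the base model's parameters, which is why the optimizer state stays small.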

Next, let's create a custom function that formats the data into the Gemma instruction template.

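def formatting_func(example):
    # Build one training string in the Gemma chat format.
    # Note: [0] picks the first entry of each column, so if a batch of rows
    # is passed in, only the first row of that batch is used.
    text = f"<start_of_turn>user\n{example['INSTRUCTION'][0]}<end_of_turn> <start_of_turn>model\n{example['RESPONSE'][0]}<end_of_turn>"
    return [text]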
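The function above indexes each column with [0], which suggests it receives a batch (a dict of lists) and formats only the first row. If the installed trl version does call formatting_func on batches, a variant that formats every row might look like the sketch below. This is not the author's code: format_batch is a hypothetical name, and the column names INSTRUCTION and RESPONSE are taken from the function above. The epoch count of about 46 reported in the training log of this article would be consistent with only a handful of formatted rows being used.

```python
def format_batch(examples):
    """Format every row of a batch (dict of lists) into the Gemma chat format."""
    texts = []
    for instruction, response in zip(examples["INSTRUCTION"], examples["RESPONSE"]):
        texts.append(
            f"<start_of_turn>user\n{instruction}<end_of_turn> "
            f"<start_of_turn>model\n{response}<end_of_turn>"
        )
    return texts


# Tiny made-up batch, only to show the shape of the input and output.
sample = {
    "INSTRUCTION": ["What is phishing?", "Define malware."],
    "RESPONSE": ["A social engineering attack.", "Malicious software."],
}
for line in format_batch(sample):
    print(repr(line))
```

Passing a function like this as formatting_func would change how many samples are trained on, so the loss and epoch figures would no longer match the ones reported in this article.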

Initialize the SFTTrainer:

import transformers
from trl import SFTTrainer

trainer = SFTTrainer(
    model=model,
    train_dataset=data["train"],
    args=transformers.TrainingArguments(
        per_device_train_batch_size=1,
        gradient_accumulation_steps=4,
        warmup_steps=2,
        max_steps=150,
        learning_rate=2e-4,
        fp16=True,
        logging_steps=1,
        output_dir="outputs",
        optim="paged_adamw_8bit"
    ),
    peft_config=lora_config,
    formatting_func=formatting_func,
)

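- model: the pretrained language model you want to fine-tune (here, Gemma).

- train_dataset: the training dataset used for fine-tuning.

- args: the training arguments, such as batch size, learning rate, and optimizer settings. per_device_train_batch_size sets the batch size per GPU; gradient_accumulation_steps sets how many batches are accumulated before each gradient update; warmup_steps sets the number of learning-rate warmup steps; max_steps sets the maximum number of training steps; learning_rate sets the initial learning rate; fp16 enables mixed-precision training to improve speed and memory efficiency; logging_steps sets how often training metrics are logged; output_dir is the directory where training outputs are saved; optim selects the optimizer, here paged 8-bit AdamW.

- peft_config: the configuration for LoRA (Low-Rank Adaptation) fine-tuning, which reduces the computational cost of fine-tuning large language models.

- formatting_func: a custom function that formats the training data into the Gemma instruction template so that it matches the input format the model expects.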
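Two of these settings interact in ways that are easy to overlook: the batch size per optimizer step is the per-device batch size times the accumulation steps, and the learning rate is not constant. The sketch below works through both with the values used above. It assumes a single GPU and the linear schedule with warmup that TrainingArguments uses by default; the helper names are hypothetical.

```python
def effective_batch_size(per_device_batch, grad_accum_steps, num_devices=1):
    """Number of sequences contributing to each optimizer step."""
    return per_device_batch * grad_accum_steps * num_devices


def linear_lr(step, peak_lr, warmup_steps, max_steps):
    """Linear warmup to peak_lr, then linear decay to zero at max_steps."""
    if step < warmup_steps:
        return peak_lr * step / max(1, warmup_steps)
    remaining = max(0, max_steps - step)
    return peak_lr * remaining / max(1, max_steps - warmup_steps)


batch = effective_batch_size(per_device_batch=1, grad_accum_steps=4)
print("sequences per optimizer step:", batch)
print("sequences seen in 150 steps:", batch * 150)

for step in (0, 1, 2, 76, 150):
    print(f"step {step}: lr = {linear_lr(step, 2e-4, warmup_steps=2, max_steps=150):.2e}")
```

With only two warmup steps the peak rate is reached almost immediately, so most of the run is spent on the decaying part of the schedule.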

Start training:

trainer.train()

TrainOutput(global_step=150, training_loss=0.5255898060897987, metrics={'train_runtime': 121.7084, 'train_samples_per_second': 4.93, 'train_steps_per_second': 1.232, 'total_flos': 1065426810224640.0, 'train_loss': 0.5255898060897987, 'epoch': 46.15})

Finally, the moment of truth! Let's test our model again with the same prompt as before.

text = """<start_of_turn>user
Explain 'AMSI init bypass' and its purpose.<end_of_turn>
<start_of_turn>model"""
device = "cuda:0"
inputs = tokenizer(text, return_tensors="pt").to(device)

outputs = model.generate(**inputs, max_new_tokens=50)
print(tokenizer.decode(outputs[0], skip_special_tokens=True))

user

Explain 'AMSI init bypass' and its purpose.

model

AMSI init bypass is a security feature in Windows that allows the System Management Interface (AMSI) to be initialized even when it is not necessary. This feature is designed to provide extra protection against certain types of exploits, by ensuring that the AM

text = """<start_of_turn>user
Explain 'APT groups and operations' and its purpose.<end_of_turn>
<start_of_turn>model"""
device = "cuda:0"
inputs = tokenizer(text, return_tensors="pt").to(device)

outputs = model.generate(**inputs, max_new_tokens=50)
print(tokenizer.decode(outputs[0], skip_special_tokens=True))

user

Explain 'APT groups and operations' and its purpose.

model

APT groups and operations refer to the organization and grouping of security patches and updates by release notes or patch management tools. This feature enables organizations to organize and manage patches and updates in a structured manner, making it easier to identify important security updates and apply
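The decoded outputs above echo the prompt ("user", the question, "model") before the answer. As a small sketch, the hypothetical helper below strips that echo from a decoded string so that only the reply remains. It assumes the decoded text has the shape shown in the outputs above, with the control tokens already removed.

```python
def extract_reply(decoded, question):
    """Return the text that follows the echoed prompt in a decoded generation."""
    marker = "model"
    # Skip past the echoed question so a "model" inside it is not matched.
    start = decoded.find(question)
    start = start + len(question) if start != -1 else 0
    idx = decoded.find(marker, start)
    if idx == -1:
        return decoded.strip()  # no prompt echo found; return the text as-is
    return decoded[idx + len(marker):].strip()


decoded = "user\nExplain 'APT groups and operations' and its purpose.\nmodel\nAPT groups and operations refer to"
print(extract_reply(decoded, "Explain 'APT groups and operations' and its purpose."))
```

A more robust alternative is to slice the generated token ids past the prompt length before decoding, for example outputs[0][inputs["input_ids"].shape[1]:], which avoids string matching altogether.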

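Conclusion

In summary, with the help of LoRA, we successfully fine-tuned the Gemma 2B instruction-tuned model on a custom dataset. The code in this article covers the whole training process and can be adapted to other datasets.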