Four Data Cleaning Techniques to Improve Large Language Model (LLM) Performance

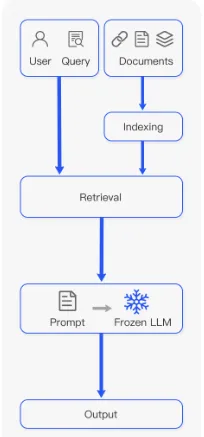

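Retrieval-augmented generation (RAG) has gained popularity for its potential to improve an LLM's understanding, supply it with context, and help prevent hallucinations. The RAG process involves several steps, from ingesting documents in chunks, to extracting context, to prompting the LLM with that context. While RAG can significantly improve predictions, it can occasionally lead to incorrect results. How documents are ingested plays a crucial role in this process. For example, if our "context documents" contain typos or characters that are unusual for an LLM, such as emojis, they can confuse the LLM's understanding of the provided context.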
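To make the ingestion step concrete, here is a minimal sketch of splitting a document into overlapping chunks and picking the chunk that best matches a question. The function names (`chunk_text`, `best_chunk`), the chunk size, and the word-overlap scoring are illustrative assumptions, not part of any particular RAG framework; production systems typically rank chunks with embeddings instead.

```python
import re


def chunk_text(text: str, chunk_size: int = 8, overlap: int = 2) -> list[str]:
    """Split text into chunks of `chunk_size` words that overlap by `overlap` words."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


def best_chunk(question: str, chunks: list[str]) -> str:
    """Return the chunk that shares the most distinct words with the question."""
    q_words = set(re.findall(r"\w+", question.lower()))

    def score(chunk: str) -> int:
        return len(q_words & set(re.findall(r"\w+", chunk.lower())))

    return max(chunks, key=score)


document = (
    "RAG retrieves context before prompting the model. "
    "Clean text makes retrieval more reliable. "
    "Typos and emojis can confuse the model."
)
question = "Why do typos confuse the model?"

chunks = chunk_text(document)
context = best_chunk(question, chunks)
prompt = f"Context: {context}\n\nQuestion: {question}"
print(prompt)
```

Because the scoring relies on exact word matches, a typo in either the document or the question lowers the score of the right chunk, which is one reason cleaning before ingestion matters.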

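In this post, we demonstrate how to use four common natural language processing (NLP) techniques to clean text before it is ingested and converted into chunks for further processing by the LLM. We also illustrate how these techniques can significantly improve the model's response to a prompt.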

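Why is it important to clean documents?

Cleaning text before feeding it into any kind of machine learning algorithm is standard practice. Whether you are using supervised or unsupervised algorithms, or building context for a generative AI (GAI) model, getting your text into good shape helps to:

- Ensure accuracy: By eliminating mistakes and making everything consistent, you are less likely to confuse the model or end up with hallucinations.

- Improve quality: Cleaner data ensures the model works with reliable, consistent information, which helps it infer from accurate data.

- Facilitate analysis: Clean data is easy to interpret and analyze. For example, a model trained on plain text may struggle to understand tabular data.

By cleaning our data, especially unstructured data, we give the model reliable and relevant context. This improves generation, reduces the likelihood of hallucinations, and improves GAI speed and performance, since large volumes of information lead to longer wait times.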

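How do we achieve data cleaning?

To help you build your data cleaning toolbox, we'll explore four NLP techniques and how they help the model.

Step 1: Data cleaning and noise reduction

We start by removing symbols or characters that carry no meaning, such as HTML tags (in the case of scraping), XML parsing artifacts, JSON, emojis, and hashtags. Unnecessary characters often confuse the model and increase the number of context tokens, which in turn increases the computational cost.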
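As a rough illustration of this kind of noise removal, the sketch below strips HTML tags, hashtags, and emojis from scraped text using only the standard library. The helper name `strip_noise` and the emoji ranges are assumptions made for this example; the ranges cover common emoji and pictographs but not every symbol.

```python
import html
import re

TAG_RE = re.compile(r"<[^>]+>")    # HTML/XML tags such as <p> or <br/>
HASHTAG_RE = re.compile(r"#\w+")   # hashtags such as #NLP
# Assumption: these ranges cover common emoji and pictographs, not all of them.
EMOJI_RE = re.compile("[\U0001F300-\U0001FAFF\u2600-\u27BF\uFE0F]")
WHITESPACE_RE = re.compile(r"\s+")


def strip_noise(raw: str) -> str:
    """Remove tags, hashtags, and emojis, then collapse whitespace."""
    text = TAG_RE.sub(" ", raw)
    text = html.unescape(text)         # turn entities like &amp; into &
    text = HASHTAG_RE.sub(" ", text)
    text = EMOJI_RE.sub(" ", text)
    return WHITESPACE_RE.sub(" ", text).strip()


raw = "<p>Great &amp; simple guide! 😊 #NLP</p><br/>Clean text helps 🧹"
cleaned = strip_noise(raw)
print(cleaned)  # Great & simple guide! Clean text helps
print(len(raw), "->", len(cleaned), "characters")
```

This sketch drops hashtags entirely. If the hashtag word carries meaning for your use case, removing only the `#` symbol is a reasonable alternative.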

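We recognize there is no one-size-fits-all solution, so we adapt our approach to different problems and text types using common cleaning techniques:

- Tokenization: Split the text into individual words or tokens.

- Noise removal: Eliminate unwanted symbols, emojis, hashtags, and Unicode characters.

- Normalization: Convert the text to lowercase for consistency.

- Stop word removal: Discard common or repeated words that add no meaning, such as "a", "in", "of", and "the".

- Lemmatization or stemming: Reduce words to their base or root form.

With the concepts clear, let's apply these common techniques in Python to simplify the text the model receives. The code snippet below, like all other code in this post, was generated with the help of ChatGPT.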

import re
import nltk
from nltk.tokenize import word_tokenize
from nltk.corpus import stopwords
from nltk.stem import WordNetLemmatizer

# Download the NLTK resources used below (only needed once;
# punkt_tab is the tokenizer data used by newer NLTK releases)
for resource in ("punkt", "punkt_tab", "stopwords", "wordnet"):
    nltk.download(resource, quiet=True)

# Sample text with emojis, hashtags, and other characters
text = "I love coding! 😊 #PythonProgramming is fun! 🐍✨ Let's clean some text 🧹"

# Tokenization
tokens = word_tokenize(text)

# Remove noise (punctuation, emojis, and other non-word characters)
cleaned_tokens = [re.sub(r"[^\w\s]", "", token) for token in tokens]

# Normalization (convert to lowercase)
cleaned_tokens = [token.lower() for token in cleaned_tokens]

# Remove stop words and the empty strings left behind by noise removal
stop_words = set(stopwords.words("english"))
cleaned_tokens = [token for token in cleaned_tokens if token and token not in stop_words]

# Lemmatization
lemmatizer = WordNetLemmatizer()
cleaned_tokens = [lemmatizer.lemmatize(token) for token in cleaned_tokens]

print(cleaned_tokens)

# Output reported for this sample (may vary slightly with the NLTK version):
# ['love', 'coding', 'pythonprogramming', 'fun', 'clean', 'text']

This process removes irrelevant characters and leaves clean, meaningful text that our model can understand: ['love', 'coding', 'pythonprogramming', 'fun', 'clean', 'text'].

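Step 2: Text standardization and normalization

Next, we should always prioritize consistency and coherence across the text. This is crucial for accurate retrieval and generation. In the Python example below, we scan the input text for spelling mistakes and other inconsistencies that could lead to inaccuracies and degraded performance.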

import re

# Sample text with spelling errors (the misspellings are illustrative)
text_with_errors = """But it's not everyting about more language refinment.
Other important aspect is ensuring accurte retrievel by corecting product name spellings.
Additionally, refning descriptions enhnces the coherance of the contnt."""

# Function to correct spelling errors
def correct_spelling_errors(text):
    # Dictionary of common spelling mistakes and their corrections
    spelling_corrections = {
        "everyting": "everything",
        "refinment": "refinement",
        "accurte": "accurate",
        "retrievel": "retrieval",
        "corecting": "correcting",
        "refning": "refining",
        "enhnces": "enhances",
        "coherance": "coherence",
        "contnt": "content",
    }
    # Replace each misspelled word, matched as a whole word, with its correction
    for mistake, correction in spelling_corrections.items():
        text = re.sub(r"\b" + re.escape(mistake) + r"\b", correction, text)
    return text

# Correct spelling errors in the sample text
cleaned_text = correct_spelling_errors(text_with_errors)
print(cleaned_text)

# Output:
# But it's not everything about more language refinement.
# Other important aspect is ensuring accurate retrieval by correcting product name spellings.
# Additionally, refining descriptions enhances the coherence of the content.

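With a coherent and consistent text representation, our model can now generate accurate, contextually relevant responses. This process also helps semantic search retrieve the most suitable context chunks, especially in the context of RAG.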
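A fixed dictionary of mistakes only catches the typos you anticipated. One possible extension, sketched here with the standard library's `difflib`, replaces each word with its closest entry in a known vocabulary when the two are similar enough. The vocabulary, the `fuzzy_correct` helper, and the 0.8 cutoff are assumptions chosen for illustration; in practice the vocabulary might come from a product catalog or glossary.

```python
import difflib
import re

# Assumption: a small domain vocabulary used as the source of truth.
VOCABULARY = ["accurate", "retrieval", "content", "coherence", "refining"]


def fuzzy_correct(text: str, vocabulary: list[str], cutoff: float = 0.8) -> str:
    """Replace each word with its closest vocabulary entry if it is similar enough."""

    def fix(match: re.Match) -> str:
        word = match.group(0)
        candidates = difflib.get_close_matches(
            word.lower(), vocabulary, n=1, cutoff=cutoff
        )
        # Keep the original word when nothing in the vocabulary is close
        return candidates[0] if candidates else word

    return re.sub(r"[A-Za-z]+", fix, text)


corrected = fuzzy_correct("ensuring accurte retrievel of the contnt", VOCABULARY)
print(corrected)  # ensuring accurate retrieval of the content
```

A lower cutoff corrects more aggressively but risks rewriting valid words, so it is worth checking the result on a sample of real documents before applying it at scale.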

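Step 3: Metadata handling

Metadata collection, such as identifying important keywords and entities, lets us easily identify elements in the text that we can use to improve semantic search results, especially in enterprise applications such as content recommendation systems. This process gives the model additional context, which is often needed to improve RAG performance. Let's apply this step in another Python example.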

import spacy
import json

# Load the English language model
# (install it first with: python -m spacy download en_core_web_sm)
nlp = spacy.load("en_core_web_sm")

# Sample text with metadata candidates
text = """In a blog post titled 'The Top 10 Tech Trends of 2024,'
John Doe discusses the rise of artificial intelligence and machine learning
in various industries. The article mentions companies like Google and Microsoft
as pioneers in AI research. Additionally, it highlights emerging technologies
such as natural language processing and computer vision."""

# Process the text with spaCy
doc = nlp(text)

# Extract named entities and their labels
meta_data = [{"text": ent.text, "label": ent.label_} for ent in doc.ents]

# Convert the metadata to JSON format
meta_data_json = json.dumps(meta_data)
print(meta_data_json)

# Output reported for this example, reformatted for readability
# (the entities found depend on the spaCy model version):
# [
#   {"text": "2024", "label": "DATE"},
#   {"text": "John Doe", "label": "PERSON"},
#   {"text": "Google", "label": "ORG"},
#   {"text": "Microsoft", "label": "ORG"},
#   {"text": "AI", "label": "ORG"},
#   {"text": "natural language processing", "label": "ORG"},
#   {"text": "computer vision", "label": "ORG"}
# ]

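This code highlights how spaCy's entity recognition identifies dates, people, organizations, and other important entities in the text. This helps RAG applications better understand the context and the relationships between words.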
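One way such metadata could be used at retrieval time is to store the entities alongside each chunk and filter on them before running semantic search. The sketch below assumes a hypothetical `Chunk` structure and `filter_by_entity` helper; neither comes from spaCy or from a specific vector store, and the entity pairs are typed in by hand to keep the example self-contained.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Chunk:
    text: str
    # Entities stored as (text, label) pairs, e.g. ("Google", "ORG")
    entities: list[tuple[str, str]] = field(default_factory=list)


def filter_by_entity(
    chunks: list[Chunk], name: str, label: Optional[str] = None
) -> list[Chunk]:
    """Keep chunks mentioning the named entity, optionally with a given label."""
    name = name.lower()
    return [
        chunk
        for chunk in chunks
        if any(
            ent_text.lower() == name and (label is None or ent_label == label)
            for ent_text, ent_label in chunk.entities
        )
    ]


chunks = [
    Chunk(
        "Google and Microsoft are pioneers in AI research.",
        [("Google", "ORG"), ("Microsoft", "ORG")],
    ),
    Chunk(
        "John Doe discusses the rise of machine learning.",
        [("John Doe", "PERSON")],
    ),
]

for chunk in filter_by_entity(chunks, "google", label="ORG"):
    print(chunk.text)
```

Filtering on metadata first narrows the candidate set, so the later similarity search runs over fewer and more relevant chunks.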

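Step 4: Contextual information handling

When working with LLMs, you often deal with multiple languages or manage large numbers of documents covering a variety of topics, which can be difficult for your model to understand. Let's look at two techniques that can help the model understand the data better.

The first is language translation. Using the googletrans library, an unofficial Python client for Google Translate, the code below translates the original text "Hello, how are you?" from English to Spanish.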

from googletrans import Translator

# Original text
text = "Hello, how are you?"

# Translate text from English to Spanish
# (synchronous call as in googletrans 3.x; newer releases may require await)
translator = Translator()
translated_text = translator.translate(text, src="en", dest="es").text

print("Original Text:", text)
print("Translated Text:", translated_text)

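The second is topic modeling, which includes techniques such as data clustering. It is like organizing a messy room into neat categories: it helps your model identify the topics of a document and sort through large amounts of information quickly. Latent Dirichlet allocation (LDA) is one of the most widely used techniques for automating topic modeling. It is a statistical model that helps find hidden topics in text by looking closely at word patterns.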
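For readers who want the statistical picture, the standard LDA generative model can be written as follows. The symbols are conventional notation rather than anything defined in this post: K topics, D documents, Dirichlet priors α and β, a topic mixture θ_d for document d, a word distribution φ_k for topic k, and a topic assignment z_{d,n} for the n-th word w_{d,n} of document d.

```latex
\begin{align*}
\phi_k   &\sim \mathrm{Dirichlet}(\beta),  & k &= 1, \dots, K \\
\theta_d &\sim \mathrm{Dirichlet}(\alpha), & d &= 1, \dots, D \\
z_{d,n}  &\sim \mathrm{Categorical}(\theta_d) \\
w_{d,n}  &\sim \mathrm{Categorical}(\phi_{z_{d,n}})
\end{align*}
```

Fitting LDA means inferring θ and φ from the observed words. In scikit-learn's LatentDirichletAllocation, K corresponds to `n_components`, while α and β correspond to `doc_topic_prior` and `topic_word_prior`.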

In the following example, we use sklearn to process a set of documents and identify key topics.

from sklearn.feature_extraction.text import CountVectorizer
from sklearn.decomposition import LatentDirichletAllocation

# Sample documents
documents = [
    "Machine learning is a subset of artificial intelligence.",
    "Natural language processing involves analyzing and understanding human languages.",
    "Deep learning algorithms mimic the structure and function of the human brain.",
    "Sentiment analysis aims to determine the emotional tone of a text.",
]

# Convert text into numerical feature vectors
vectorizer = CountVectorizer(stop_words="english")
X = vectorizer.fit_transform(documents)

# Apply Latent Dirichlet Allocation (LDA) for topic modeling
lda = LatentDirichletAllocation(n_components=2, random_state=42)
lda.fit(X)

# Display the five highest-weighted words for each topic
# (get_feature_names_out() replaces get_feature_names(), removed in scikit-learn 1.2)
feature_names = vectorizer.get_feature_names_out()
for topic_idx, topic in enumerate(lda.components_):
    print("Topic %d:" % (topic_idx + 1))
    print(" ".join(feature_names[i] for i in topic.argsort()[:-5 - 1:-1]))

# Output reported for this example (exact terms may vary with the scikit-learn version):
# Topic 1:
# learning machine subset artificial intelligence
# Topic 2:
# processing natural language involves analyzing understanding

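Demo: Cleaning GAI text input

Let's put it all together with an example. For this demo, we used ChatGPT to generate a conversation between two technologists. We'll apply basic cleaning techniques to the conversation to show how these methods lead to reliable, consistent results.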

synthetic_text = """

Sarah (S): Technology Enthusiast

Mark (M): AI Expert

S: Hey Mark! How's it going? Heard about the latest advancements in Generative AI (GA)?

M: Hey Sarah! Yes, I've been diving deep into the realm of GA lately. It's fascinating how it's shaping the future of technology!

S: Absolutely! I mean, GA has been making waves across various industries. What do you think is driving its significance?

M: Well, GA, especially Retrieval Augmented Generative (RAG), is revolutionizing content generation. It's not just about regurgitating information anymore; it's about creating contextually relevant and engaging content.

S: Right! And with Machine Learning (ML) becoming more sophisticated, the possibilities seem endless.

M: Exactly! With advancements in ML algorithms like GPT (Generative Pre-trained Transformer), we're seeing unprecedented levels of creativity in AI-generated content.

S: But what about concerns regarding bias and ethics in GA?

M: Ah, the age-old question! While it's true that GA can inadvertently perpetuate biases present in the training data, there are techniques like Adversarial Training (AT) that aim to mitigate such issues.

S: Interesting! So, where do you see GA headed in the next few years?

M: Well, I believe we'll witness a surge in applications leveraging GA for personalized experiences. From virtual assistants to content creation tools, GA will become ubiquitous in our daily lives.

S: That's exciting! Imagine AI-powered virtual companions tailored to our preferences.

M: Indeed! And with advancements in Natural Language Processing (NLP) and computer vision, these virtual companions will be more intuitive and lifelike than ever before.

S: I can't wait to see what the future holds!

M: Agreed! It's an exciting time to be in the field of AI.

S: Absolutely! Thanks for sharing your insights, Mark.

M: Anytime, Sarah. Let's keep pushing the boundaries of Generative AI together!

S: Definitely! Catch you later, Mark!

M: Take care, Sarah!

"""

Step 1: Basic cleaning

First, remove emojis, hashtags, and Unicode characters from the conversation.

# Reuses the imports from the earlier cleaning example
# (re, word_tokenize, stopwords, WordNetLemmatizer)

# Tokenization
tokens = word_tokenize(synthetic_text)

# Remove noise
cleaned_tokens = [re.sub(r"[^\w\s]", "", token) for token in tokens]

# Normalization (convert to lowercase)
cleaned_tokens = [token.lower() for token in cleaned_tokens]

# Remove stop words and the empty strings left behind by noise removal
stop_words = set(stopwords.words("english"))
cleaned_tokens = [token for token in cleaned_tokens if token and token not in stop_words]

# Lemmatization
lemmatizer = WordNetLemmatizer()
cleaned_tokens = [lemmatizer.lemmatize(token) for token in cleaned_tokens]

print(cleaned_tokens)

# Join the tokens back into one string to pass to the model as cleaned context
new_content_string = " ".join(cleaned_tokens)

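Step 2: Prepare the prompt

Next, we craft a prompt asking the model to respond as a friendly customer service agent, based on the information gathered from our synthetic conversation.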

MESSAGE_SYSTEM_CONTENT = """You are a customer service agent that helps
a customer with answering questions. Please answer the question based on the
provided context below.
Make sure not to make any changes to the context if possible,
when preparing answers so as to provide accurate responses. If the answer
cannot be found in context, just politely say that you do not know,
do not try to make up an answer."""

Step 3: Prepare the interaction

Let's prepare our interaction with the model. In this example, we use GPT-4.

from openai import OpenAI

# The client reads the API key from the OPENAI_API_KEY environment variable
client = OpenAI()

def response_test(question: str, context: str, model: str = "gpt-4"):
    response = client.chat.completions.create(
        model=model,
        messages=[
            {
                "role": "system",
                "content": MESSAGE_SYSTEM_CONTENT,
            },
            {"role": "user", "content": question},
            {"role": "assistant", "content": context},
        ],
    )
    return response.choices[0].message.content
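The function above passes the retrieved context as an assistant message. A common alternative, sketched below, places the context and the question together in a single user turn. The `build_messages` helper and the "Context:" / "Question:" layout are assumptions for illustration, not the approach used in this demo, and the sketch only builds the message list without calling any API.

```python
def build_messages(system_prompt: str, question: str, context: str) -> list[dict]:
    """Assemble chat messages with the context and question in one user turn."""
    user_content = f"Context:\n{context}\n\nQuestion: {question}"
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_content},
    ]


messages = build_messages(
    "Answer only from the provided context.",
    "What is RAG?",
    "RAG retrieves relevant chunks before prompting the model.",
)
for message in messages:
    print(message["role"], "->", message["content"][:40])
```

Either layout can work. Whichever you choose, keep it identical across the before and after runs so that any difference in the answers comes from the cleaning step alone.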

Step 4: Prepare the question

Finally, let's ask the model a question and compare the results before and after cleaning.

question1 = (
    "What are some specific techniques in Adversarial Training (AT) "
    "that can help mitigate biases in Generative AI models?"
)

Before cleaning, our model produces this response:

response = response_test(question1, synthetic_text)
print(response)

# Output:
# I'm sorry, but the context provided doesn't contain specific techniques in Adversarial Training (AT) that can help mitigate biases in Generative AI models.

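After cleaning, the model generates the following response. With the improved understanding that basic cleaning provides, the model can give a more complete answer.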

response = response_test(question1, new_content_string)
print(response)

# Output:
# The context mentions Adversarial Training (AT) as a technique that can
# help mitigate biases in Generative AI models. However, it does not provide
# any specific techniques within Adversarial Training itself.

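RAG models offer several advantages, including making AI-generated results more reliable and consistent by providing relevant context. This contextualization significantly improves the accuracy of AI-generated content.

To get the most out of RAG models, robust data cleaning techniques are essential during document ingestion. These techniques address discrepancies, imprecise terminology, and other potential errors in text data, which significantly improves the quality of the input. When operating on cleaner, more reliable data, RAG models deliver more accurate and meaningful results, giving AI use cases better decision-making and problem-solving capabilities across domains.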