RAG on Complex PDFs Using LlamaParse, LangChain, and Groq

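Retrieval-augmented generation (RAG) is a newer approach that uses large language models (LLMs) to automatically search, synthesize, extract, and plan over knowledge from unstructured data sources. Over the past year it has drawn a lot of attention for its ability to enrich LLM applications with contextual information. The RAG data stack consists of several key parts: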

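- Loading data: First, data is pulled from sources such as text documents, websites, or databases. It may arrive raw or already preprocessed.

- Processing data: The data goes through preprocessing steps that clean and structure it for further analysis. This can include tasks such as tokenization, stemming, and stop-word removal.

- Embedding data: Each piece of data is converted into a numerical representation called an embedding. The embedding captures the semantic information in the data, which makes the data easier for the LLM to understand and process.

- Vector database: Embeddings are stored in a vector database, which supports efficient retrieval by similarity metrics. The database gives fast access to relevant data points during generation.

- Retrieval and prompting: During generation, the LLM can retrieve relevant data points from the vector database based on the context of the current input. This retrieval mechanism helps the LLM produce more accurate and contextually relevant output.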

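In short, RAG strengthens LLMs by letting them draw on external knowledge sources systematically and efficiently. This enables more capable context-aware applications in areas such as natural language understanding, information retrieval, and decision-making.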
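The stages listed above can be sketched end to end in a few lines. This is a toy illustration only: `embed`, `ToyVectorStore`, and `build_prompt` are hypothetical names, the "embedding" is a plain bag-of-words count rather than a learned model, and the sample sentences are made up.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy embedding: a bag-of-words count vector (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class ToyVectorStore:
    """In-memory list of (embedding, text) pairs searched by brute force."""

    def __init__(self) -> None:
        self.rows: list[tuple[Counter, str]] = []

    def add(self, texts: list[str]) -> None:
        self.rows.extend((embed(t), t) for t in texts)

    def search(self, query: str, k: int = 2) -> list[str]:
        q = embed(query)
        ranked = sorted(self.rows, key=lambda row: cosine(q, row[0]), reverse=True)
        return [text for _, text in ranked[:k]]

def build_prompt(question: str, context: list[str]) -> str:
    """Retrieval-and-prompting step: place the retrieved chunks in front of the question."""
    return "Context:\n" + "\n".join(context) + f"\n\nQuestion: {question}"

store = ToyVectorStore()
store.add([
    "Revenue grew during the first quarter.",
    "The office cafeteria serves lunch at noon.",
    "Operating expenses rose in the first quarter.",
])
question = "What happened to revenue in the first quarter?"
top = store.search(question, k=2)
print(build_prompt(question, top))
```

A real pipeline replaces `embed` with an embedding model and the list scan with a vector database, but the load, embed, store, retrieve, and prompt steps keep the same shape.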

Building a production-grade RAG system remains a complex and subtle problem. Some of the challenges are:

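- Results are not accurate enough: the application fails to produce satisfactory results for long-tail tasks and queries.

- Too many parameters to tune: it is unclear which of the parameters spanning data parsing, ingestion, and retrieval need adjusting.

- PDFs are a particular problem: I have many complex documents with messy formatting. How do I represent them so that an LLM can understand them?

- Data synchronization is a challenge: production data is updated regularly, and continually syncing new data brings its own set of challenges.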

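To address these problems, on February 20, 2024, LlamaIndex launched LlamaCloud and LlamaParse, a new generation of managed parsing, ingestion, and retrieval services designed to bring production-grade context augmentation to LLM and RAG applications.

The main motivation behind LlamaCloud is to let you focus on writing business logic rather than on data wrangling, to handle large volumes of production data, and to improve response quality right away. It has two components: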

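- LlamaParse: proprietary parsing for complex documents with embedded objects such as tables and figures. LlamaParse integrates directly with LlamaIndex ingestion and retrieval, so you can build retrieval over complex, semi-structured documents. It promises to answer complex questions that were simply not answerable before.

- Managed ingestion and retrieval API: an API that lets you easily load, process, and store data for your RAG application and consume it from any language. It is backed by the data sources in LlamaHub (including LlamaParse) and by data storage integrations.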

What is LlamaParse?

LlamaParse is a proprietary parsing service that does a remarkably good job of parsing PDFs with complex tables into well-structured Markdown.

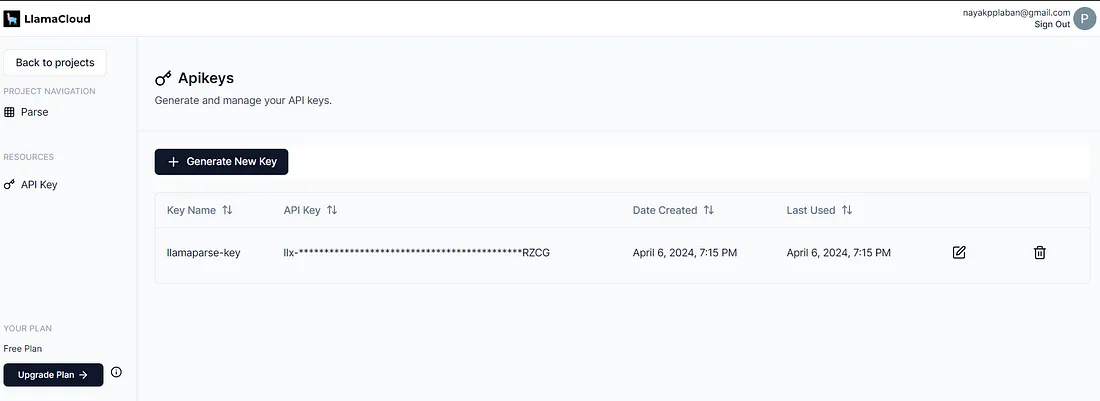

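The service is in public preview: anyone can use it, subject to a usage limit of 1,000 pages per day, with 7,000 pages free each week. Beyond that, it costs $0.003 per page ($3 per 1,000 pages). It runs as a standalone service and also plugs into the managed ingestion and retrieval API.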

from llama_parse import LlamaParse

parser = LlamaParse(
    api_key="llx-...",  # can also be set in your env as LLAMA_CLOUD_API_KEY
    result_type="markdown",  # "markdown" and "text" are available
    verbose=True
)
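The preview pricing quoted above can be turned into a rough weekly estimate. This is only a sketch: `llamaparse_weekly_cost_usd` is a hypothetical helper, it assumes the free allowance resets weekly and that every page beyond it is billed at $3 per 1,000 pages, and it ignores the daily page limit.

```python
def llamaparse_weekly_cost_usd(pages_this_week: int,
                               free_pages_per_week: int = 7000,
                               usd_per_1000_pages: float = 3.0) -> float:
    """Estimated charge for one week under the preview pricing quoted in the text."""
    if pages_this_week < 0:
        raise ValueError("pages_this_week must be non-negative")
    # Only pages beyond the weekly free allowance are billed.
    billable = max(0, pages_this_week - free_pages_per_week)
    return billable * usd_per_1000_pages / 1000

print(llamaparse_weekly_cost_usd(5000))    # 0.0, within the free allowance
print(llamaparse_weekly_cost_usd(10000))   # 9.0, 3,000 billable pages at $3 per 1,000
```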

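At present LlamaParse mainly supports PDFs with tables, but the team is also improving support for figures and, as a next step, extending coverage to the most common document types: .docx, .pptx, and .html.

What is Groq?

Founded in 2016 and headquartered in Mountain View, California, Groq is an AI solutions startup focused on ultra-low-latency AI inference. The company has made notable advances in AI compute performance and is a significant player in the field. Groq has registered its name as a trademark and has assembled a global team committed to democratizing AI.

Groq's Language Processing Unit (LPU) is designed to substantially raise AI compute performance, particularly for large language models (LLMs). The main goal of the Groq LPU system is a real-time, low-latency experience with strong inference performance. One of Groq's achievements is exceeding 300 tokens per second per user on Meta AI's Llama-2 70B model, a major step forward for the industry.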
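To put the 300 tokens per second figure in perspective, the arithmetic below converts throughput into waiting time for a response. It is a back-of-the-envelope sketch: `generation_seconds` is a hypothetical helper, it assumes a steady rate and ignores the time to the first token, and the 30 tokens per second comparison value is an arbitrary illustration, not a measurement.

```python
def generation_seconds(num_tokens: int, tokens_per_second: float = 300.0) -> float:
    """Time needed to stream num_tokens at a steady per-user throughput."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second

print(generation_seconds(600))                          # 2.0 seconds at 300 tokens/s
print(generation_seconds(600, tokens_per_second=30.0))  # 20.0 seconds at a hypothetical 30 tokens/s
```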

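The ultra-low latency of the Groq LPU system stands out, and it is critical for AI applications. The system is tailored to sequential, compute-intensive GenAI language processing and outperforms conventional GPU solutions on it, which lets it handle tasks such as natural language generation and understanding efficiently.

At the core of the Groq LPU system is the first-generation GroqChip, which uses a tensor streaming architecture optimized for speed, efficiency, accuracy, and cost-effectiveness. The chip set a new record for foundational LLM speed, measured in tokens per second per user, surpassing existing solutions on the market. Groq plans to deploy one million AI inference chips within two years, an ambitious target that shows its commitment to AI acceleration.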

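What is the LPU Inference Engine?

The LPU Inference Engine (LPU stands for Language Processing Unit™) is a new type of end-to-end processing unit system designed to deliver the fastest inference for compute-intensive applications such as AI language applications (LLMs).

Why is it faster than a GPU?

The LPU is designed to overcome the two bottlenecks of LLMs: compute density and memory bandwidth. For LLMs, an LPU has greater compute capacity than a GPU or CPU. This reduces the time spent computing each word, so sequences of text can be generated faster. In addition, because external memory bottlenecks are eliminated, the LPU Inference Engine can deliver orders of magnitude better performance on LLMs than GPUs.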

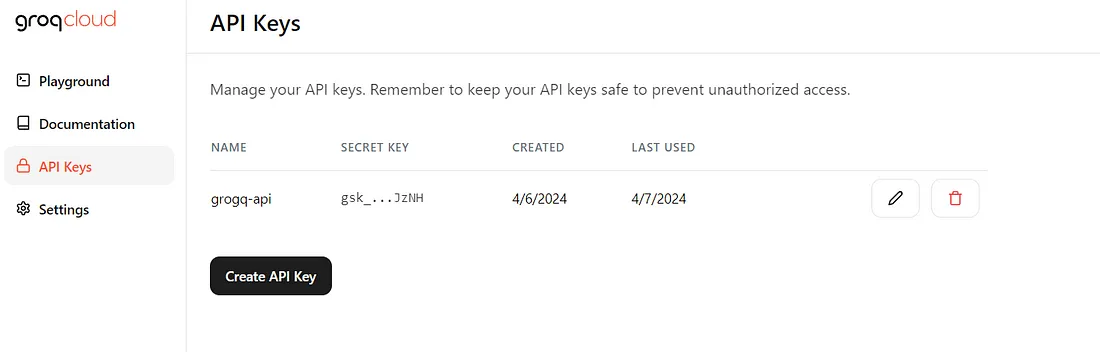

Getting started with Groq

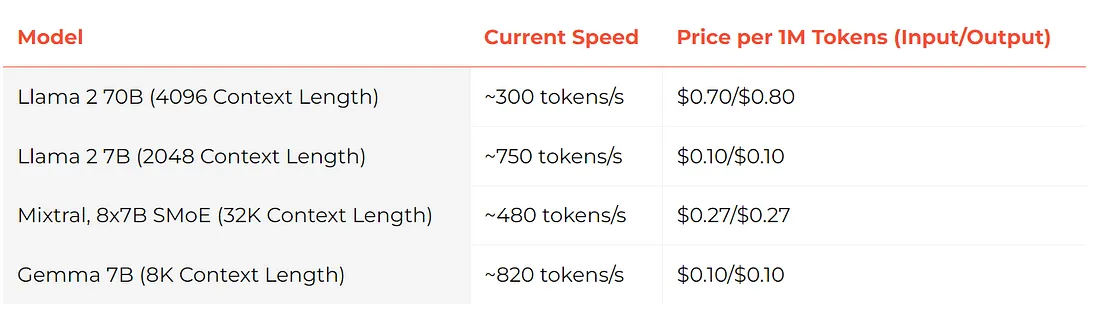

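Groq currently offers free-to-use API endpoints for large language models running on the Groq LPU (Language Processing Unit).

Groq guarantees that its price per million tokens will beat the prices published by other providers for equivalent models. Other models, such as Mistral and CodeLlama, are available on request for specific customer needs.

To get started, visit this page and click Login. The page looks like this: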

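What is LangChain?

LangChain is an open-source framework designed to simplify building applications with large language models (LLMs). It provides a standard interface for chains, numerous integrations with other tools, and end-to-end chains for common applications.

Key LangChain concepts:

- Components: modular building blocks for assembling powerful applications. They include LLM wrappers, prompt templates, and indexes for retrieving relevant information.

- Chains: chains let us combine multiple components to solve a specific task. They make complex applications more modular and easier to debug and maintain, and so easier to implement.

- Agents: agents let an LLM interact with its environment, for example by calling an external API to perform a specific action.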
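The idea of components combined into a chain can be illustrated without any framework. The sketch below is not LangChain's API: `make_prompt_step`, `fake_llm`, `strip_wrapper`, and `chain` are hypothetical names, and the "LLM" simply echoes its prompt so the example runs offline.

```python
from typing import Callable

Step = Callable[[str], str]

def make_prompt_step(template: str) -> Step:
    """Component 1: a prompt template with a single {question} slot."""
    return lambda question: template.format(question=question)

def fake_llm(prompt: str) -> str:
    """Component 2: a stand-in for an LLM wrapper; it only echoes the prompt."""
    return f"ANSWER<{prompt}>"

def strip_wrapper(text: str) -> str:
    """Component 3: an output parser that removes the fake model's wrapper."""
    return text.removeprefix("ANSWER<").removesuffix(">")

def chain(*steps: Step) -> Step:
    """Compose steps left to right so the output of one feeds the next."""
    def run(value: str) -> str:
        for step in steps:
            value = step(value)
        return value
    return run

qa_chain = chain(make_prompt_step("Q: {question}"), fake_llm, strip_wrapper)
print(qa_chain("What is RAG?"))  # Q: What is RAG?
```

Because each step has the same string-in, string-out shape, any one of them can be swapped or tested in isolation, which is the modularity benefit described above.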

Code implementation

The code was run in Google Colab (CPU).

Install the required dependencies

%%writefile requirements.txt
langchain
langchain-community
llama-parse
fastembed
chromadb
python-dotenv
langchain-groq
chainlit
unstructured[md]

!pip install -r requirements.txt

Set the environment variables

from google.colab import userdata

llamaparse_api_key = userdata.get('LLAMA_CLOUD_API_KEY')
groq_api_key = userdata.get("GROQ_API_KEY")

Import the required dependencies

##### LLAMAPARSE #####
from llama_parse import LlamaParse

from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain_community.embeddings.fastembed import FastEmbedEmbeddings
from langchain_community.vectorstores import Chroma
from langchain_community.document_loaders import DirectoryLoader
from langchain_community.document_loaders import UnstructuredMarkdownLoader
from langchain.prompts import PromptTemplate
from langchain.chains import RetrievalQA
#
from groq import Groq
from langchain_groq import ChatGroq
#
import joblib
import os
import nest_asyncio  # noqa: E402

# Allow nested event loops so async calls work inside the notebook
nest_asyncio.apply()

LlamaParse parameters

* api_key: str = Field(default="", description="The API key for the LlamaParse API.")
* base_url: str = Field(default=DEFAULT_BASE_URL, description="The base URL of the Llama Parsing API.")
* result_type: ResultType = Field(default=ResultType.TXT, description="The result type for the parser.")
* num_workers: int = Field(default=4, gt=0, lt=10, description="The number of workers to use sending API requests for parsing.")
* check_interval: int = Field(default=1, description="The interval in seconds to check if the parsing is done.")
* max_timeout: int = Field(default=2000, description="The maximum timeout in seconds to wait for the parsing to finish.")
* verbose: bool = Field(default=True, description="Whether to print the progress of the parsing.")
* language: Language = Field(default=Language.ENGLISH, description="The language of the text to parse.")
* parsing_instruction: Optional[str] = Field(default="", description="The parsing instruction for the parser.")
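The bounds in the field list above (for example `gt=0, lt=10` on `num_workers`) mean that out-of-range values are rejected when the parser is constructed. The sketch below mimics that behaviour with a standard-library dataclass. `ParseSettings` is a hypothetical stand-in, not the library's class, and it covers only a few of the fields.

```python
from dataclasses import dataclass

@dataclass
class ParseSettings:
    """Hypothetical stand-in that mirrors a few of the fields listed above."""
    api_key: str = ""
    result_type: str = "text"
    num_workers: int = 4
    check_interval: int = 1
    max_timeout: int = 2000
    verbose: bool = True

    def __post_init__(self) -> None:
        # Mirrors the gt=0 and lt=10 bounds on num_workers.
        if not 0 < self.num_workers < 10:
            raise ValueError("num_workers must be between 1 and 9")
        # The text names "markdown" and "text" as the available result types.
        if self.result_type not in {"text", "markdown"}:
            raise ValueError("result_type must be 'text' or 'markdown'")

settings = ParseSettings(result_type="markdown", max_timeout=5000)
print(settings.num_workers, settings.max_timeout)  # 4 5000

try:
    ParseSettings(num_workers=10)
except ValueError as err:
    print(err)  # num_workers must be between 1 and 9
```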

Helper function to load and parse the input data

!mkdir -p data
#
def load_or_parse_data():
    data_file = "./data/parsed_data.pkl"

    if os.path.exists(data_file):
        # Load the parsed data from the file
        parsed_data = joblib.load(data_file)
    else:
        # Perform the parsing step and store the result in llama_parse_documents
        parsingInstructionUber10k = """The provided document is a quarterly report filed by Uber Technologies,
        Inc. with the Securities and Exchange Commission (SEC).
        This form provides detailed financial information about the company's performance for a specific quarter.
        It includes unaudited financial statements, management discussion and analysis, and other relevant disclosures required by the SEC.
        It contains many tables.
        Try to be precise while answering the questions"""
        parser = LlamaParse(api_key=llamaparse_api_key,
                            result_type="markdown",
                            parsing_instruction=parsingInstructionUber10k,
                            max_timeout=5000,)
        llama_parse_documents = parser.load_data("./data/uber_10q_march_2022 (1).pdf")

        # Save the parsed data to a file
        print("Saving the parse results in .pkl format ..........")
        joblib.dump(llama_parse_documents, data_file)

        # Set the parsed data to the variable
        parsed_data = llama_parse_documents

    return parsed_data
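The helper above follows a load-or-compute caching pattern: parse once, save the result, and reuse it on later runs so that the metered parsing call is not repeated. A generic sketch of the same pattern using only the standard library is shown below. `load_or_compute` and `expensive_parse` are hypothetical names, and `pickle` stands in for `joblib`.

```python
import os
import pickle
import tempfile
from typing import Any, Callable

def load_or_compute(cache_path: str, compute: Callable[[], Any]) -> Any:
    """Return the cached object if cache_path exists, otherwise compute and cache it."""
    if os.path.exists(cache_path):
        with open(cache_path, "rb") as f:
            return pickle.load(f)
    result = compute()
    with open(cache_path, "wb") as f:
        pickle.dump(result, f)
    return result

calls = []

def expensive_parse() -> list[str]:
    """Stand-in for the slow, metered parsing call."""
    calls.append(1)
    return ["# Parsed page 1", "# Parsed page 2"]

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "parsed_data.pkl")
    first = load_or_compute(path, expensive_parse)   # computes and writes the cache
    second = load_or_compute(path, expensive_parse)  # reads the cache

print(first == second, len(calls))  # True 1
```

One consequence of this design is that a stale cache file is reused silently, so the `.pkl` file has to be deleted whenever the source PDF or the parsing instruction changes.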

Helper function to load the chunks into the vector store.

# Create vector database
def create_vector_database():
    """
    Creates a vector database using document loaders and embeddings.

    This function loads the parsed Markdown output,
    splits the loaded documents into chunks, transforms them into embeddings using FastEmbedEmbeddings,
    and finally persists the embeddings into a Chroma vector database.
    """
    # Call the function to either load or parse the data
    llama_parse_documents = load_or_parse_data()
    print(llama_parse_documents[0].text[:300])

    # Write mode ('w') so that rerunning the cell does not duplicate the content
    with open('data/output.md', 'w') as f:
        for doc in llama_parse_documents:
            f.write(doc.text + '\n')

    markdown_path = "/content/data/output.md"
    loader = UnstructuredMarkdownLoader(markdown_path)
    #loader = DirectoryLoader('data/', glob="**/*.md", show_progress=True)
    documents = loader.load()

    # Split loaded documents into chunks
    text_splitter = RecursiveCharacterTextSplitter(chunk_size=2000, chunk_overlap=100)
    docs = text_splitter.split_documents(documents)

    #len(docs)
    print(f"length of documents loaded: {len(documents)}")
    print(f"total number of document chunks generated :{len(docs)}")
    #docs[0]

    # Initialize Embeddings
    embed_model = FastEmbedEmbeddings(model_name="BAAI/bge-base-en-v1.5")

    # Create and persist a Chroma vector database from the chunked documents
    vs = Chroma.from_documents(
        documents=docs,
        embedding=embed_model,
        persist_directory="chroma_db_llamaparse1",  # Persist the collection to this local directory
        collection_name="rag"
    )

    #query it
    #query = "what is the agenda of Financial Statements for 2022 ?"
    #found_doc = vs.similarity_search(query, k=3)
    #print(found_doc[0].page_content[:100])
    #print(vs.get())

    print('Vector DB created successfully !')
    return vs, embed_model
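The `chunk_size` and `chunk_overlap` arguments control how much text each chunk holds and how much neighbouring chunks share. The sketch below shows the effect with a deliberately simplified fixed-window splitter. `split_with_overlap` is a hypothetical helper, and unlike `RecursiveCharacterTextSplitter` it does not try to break on paragraph or sentence separators.

```python
def split_with_overlap(text: str, chunk_size: int, chunk_overlap: int) -> list[str]:
    """Fixed-window splitter: each chunk starts chunk_size - chunk_overlap characters after the previous one."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once a chunk has reached the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks

text = "abcdefghijklmnopqrstuvwxyz"
chunks = split_with_overlap(text, chunk_size=10, chunk_overlap=3)
print(chunks)  # ['abcdefghij', 'hijklmnopq', 'opqrstuvwx', 'vwxyz']
```

The overlap means a table row or sentence that straddles a boundary still appears whole in at least one chunk, at the cost of storing some text twice.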

Process the data and create the vector store

vs, embed_model = create_vector_database()

Instantiate the LLM

chat_model = ChatGroq(temperature=0,
                      model_name="mixtral-8x7b-32768",
                      api_key=userdata.get("GROQ_API_KEY"),)

Instantiate the vector store

vectorstore = Chroma(embedding_function=embed_model,
                     persist_directory="chroma_db_llamaparse1",
                     collection_name="rag")
#
retriever = vectorstore.as_retriever(search_kwargs={'k': 3})

Create a custom prompt template

custom_prompt_template = """Use the following pieces of information to answer the user's question.
If you don't know the answer, just say that you don't know, don't try to make up an answer.

Context: {context}
Question: {question}

Only return the helpful answer below and nothing else.
Helpful answer:
"""

Helper function to format the prompt

def set_custom_prompt():
    """
    Prompt template for QA retrieval for each vectorstore
    """
    prompt = PromptTemplate(template=custom_prompt_template,
                            input_variables=['context', 'question'])
    return prompt
#
prompt = set_custom_prompt()
prompt

########################### RESPONSE ###########################

PromptTemplate(input_variables=['context', 'question'], template="Use the following pieces of information to answer the user's question.\nIf you don't know the answer, just say that you don't know, don't try to make up an answer.\n\nContext: {context}\nQuestion: {question}\n\nOnly return the helpful answer below and nothing else.\nHelpful answer:\n")

Instantiate the retrieval QA chain

qa = RetrievalQA.from_chain_type(llm=chat_model,
                                 chain_type="stuff",
                                 retriever=retriever,
                                 return_source_documents=True,
                                 chain_type_kwargs={"prompt": prompt})
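With `chain_type="stuff"`, the retrieved chunks are concatenated into a single string and placed in the `{context}` slot of the prompt. The sketch below reproduces that step with plain string formatting. `stuff_prompt` is a hypothetical helper, the two chunks are made up, and the blank-line separator is an assumption about the chain's default.

```python
TEMPLATE = (
    "Use the following pieces of information to answer the user's question.\n"
    "If you don't know the answer, just say that you don't know, don't try to make up an answer.\n\n"
    "Context: {context}\n"
    "Question: {question}\n\n"
    "Only return the helpful answer below and nothing else.\n"
    "Helpful answer:\n"
)

def stuff_prompt(chunks: list[str], question: str, separator: str = "\n\n") -> str:
    """Join every retrieved chunk into one context string and fill the template."""
    return TEMPLATE.format(context=separator.join(chunks), question=question)

retrieved = ["Total assets: 100", "Total liabilities: 60"]  # made-up chunks for illustration
prompt_text = stuff_prompt(retrieved, "What are the total assets?")
print(prompt_text)
```

Since all `k` chunks go into one prompt, `k` multiplied by the chunk size has to fit within the model's context window along with the question and the instructions.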

Invoke the retrieval QA chain

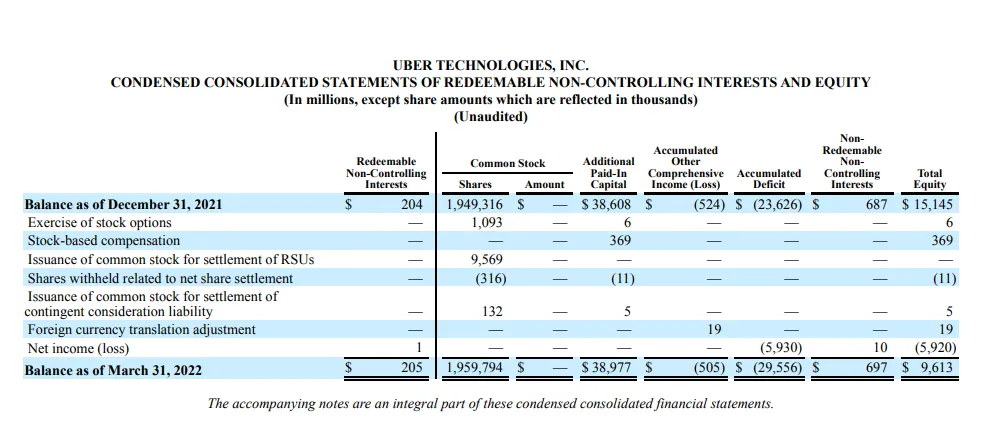

response = qa.invoke({"query": "what is the Balance of UBER TECHNOLOGIES, INC.as of December 31, 2021?"})

Synthesized response

response['result']

########################### RESPONSE ###########################

Based on the provided balance sheet of Uber Technologies, Inc. as of December 31, 2021, the total assets are $38,774 million, total liabilities are $23,425 million, and total equity is $9,613 million.

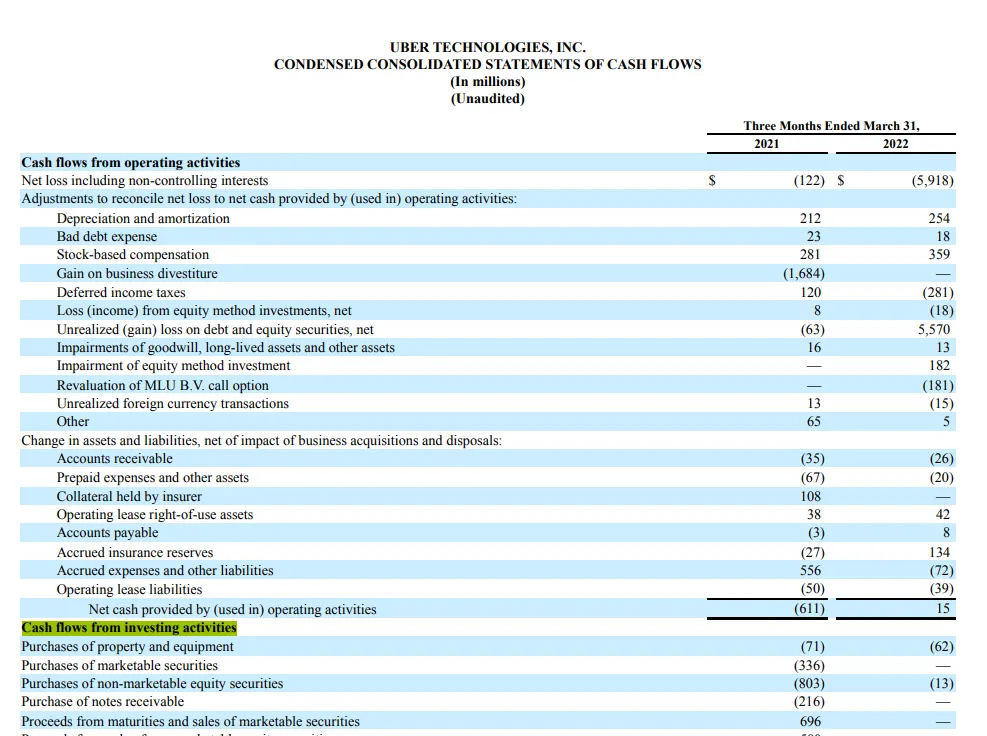
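Because the chain was built with `return_source_documents=True`, the response also carries the retrieved chunks under the `source_documents` key, which is useful for checking where an answer came from. The sketch below prints a short preview of each chunk. `summarize_sources` is a hypothetical helper, and the response object is a stand-in with made-up content so the example runs without the chain.

```python
from types import SimpleNamespace

def summarize_sources(response: dict, preview_chars: int = 80) -> list[str]:
    """Return one preview line per retrieved chunk in a RetrievalQA-style response."""
    lines = []
    for i, doc in enumerate(response.get("source_documents", []), start=1):
        source = doc.metadata.get("source", "unknown")
        preview = doc.page_content[:preview_chars].replace("\n", " ")
        lines.append(f"[{i}] {source}: {preview}")
    return lines

# Stand-in response with made-up content; a real one comes from qa.invoke(...).
fake_response = {
    "result": "example answer",
    "source_documents": [
        SimpleNamespace(page_content="Total assets\n100", metadata={"source": "data/output.md"}),
        SimpleNamespace(page_content="Total liabilities\n60", metadata={}),
    ],
}
for line in summarize_sources(fake_response):
    print(line)
```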

Question 2

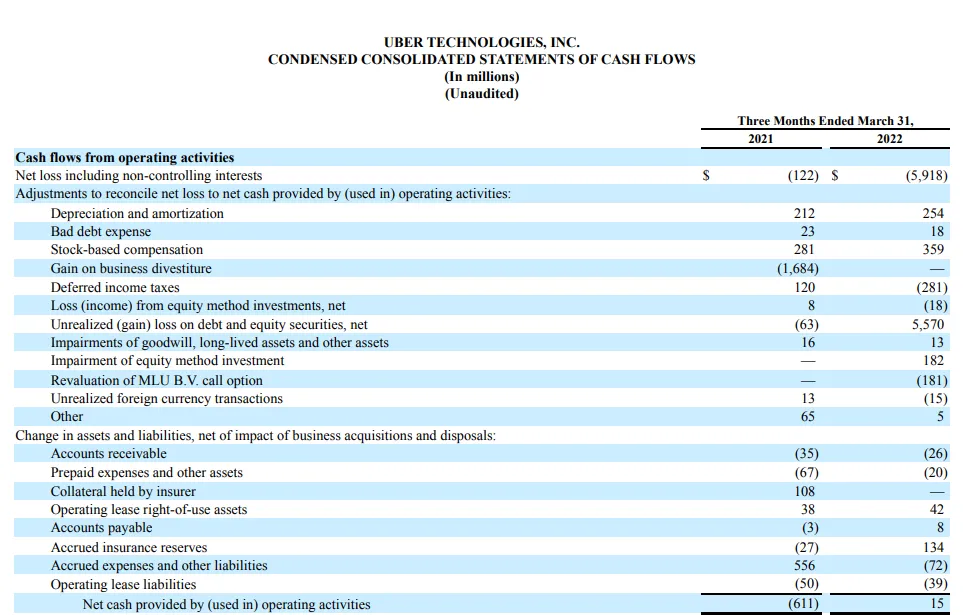

response = qa.invoke({"query": "What is the Cash flows from operating activities associated with bad expense specified in the document ?"})

response['result']

######################## RESPONSE ###############################

The Cash flows from operating activities associated with bad debt expense is 23 for the year 2021 and 18 for the year 2022.

Question 3

response = qa.invoke({"query": "what is Loss (income) from equity method investments, net ?"})

response["result"]

############################### RESPONSE ##############################

The loss from equity method investments, net, is calculated as the sum of the impairment of equity method investment and the revaluation of MLU B.V. call option, which amounted to $182 million and $181 million, respectively. This results in a total loss from equity method investments, net, of $363 million. This loss is included in the net loss attributable to Uber Technologies, Inc. of $5.9 billion.

Question 4

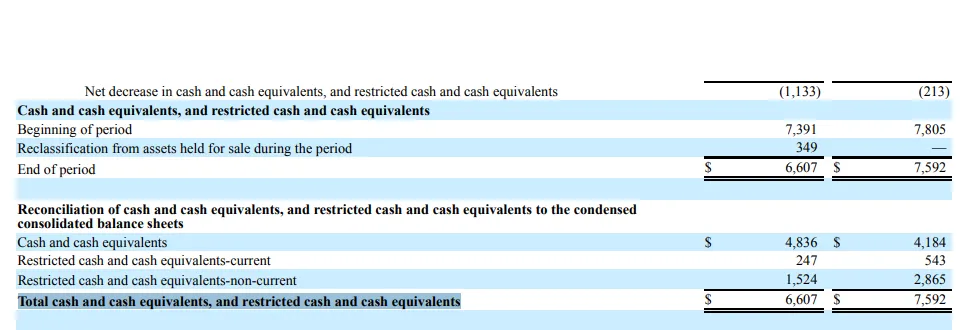

response = qa.invoke({"query": "What is the Total cash and cash equivalents, and restricted cash and cash equivalents for reconciliation ?"})

response['result']

######################## RESPONSE #####################################

The total cash and cash equivalents, and restricted cash and cash equivalents for reconciliation is $6,607 million. This amount is obtained by adding the cash and cash equivalents of $4,836 million and the restricted cash and cash equivalents - current of $247 million, and the restricted cash and cash equivalents - non-current of $1,524 million.
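Answers that quote a total and its components can be checked with simple arithmetic. The snippet below re-adds the three figures quoted in the response above (in millions of dollars). It only checks that the numbers in the answer are internally consistent, not that they match the filing.

```python
# Figures quoted in the model's answer, in millions of US dollars.
cash_and_equivalents = 4_836
restricted_current = 247
restricted_non_current = 1_524
reported_total = 6_607

computed_total = cash_and_equivalents + restricted_current + restricted_non_current
print(computed_total, computed_total == reported_total)  # 6607 True
```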

Question 5

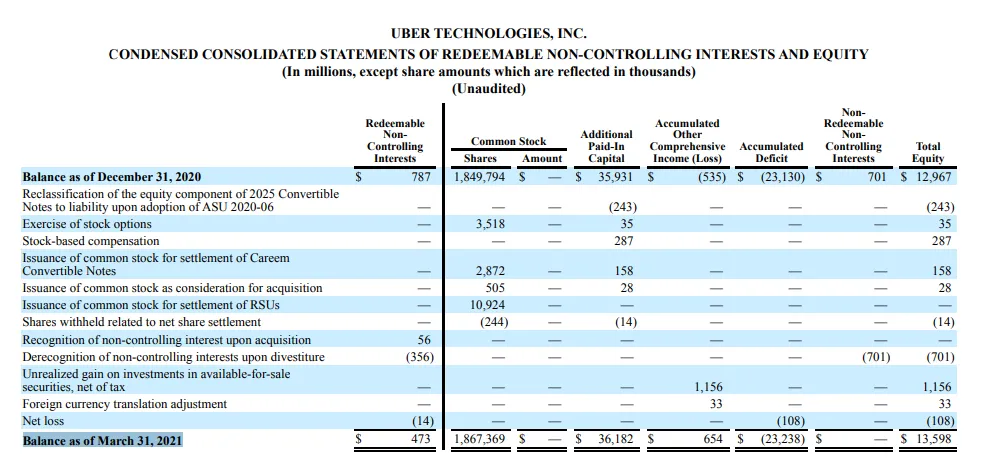

response = qa.invoke({"query":"Based on the CONDENSED CONSOLIDATED STATEMENTS OF REDEEMABLE NON-CONTROLLING INTERESTS AND EQUITY what is the Balance as of March 31, 2021?"})

print(response['result'])

############# RESPONSE ##################

The balance as of March 31, 2021 was $473 for Redeemable Non-Controlling Interests, 1,867,369 shares for Common Stock, $— for Additional Paid-In Capital, $36,182 for Other Comprehensive Income (Loss), $654 for Non-Controlling Interests, and $654 for Total Equity.

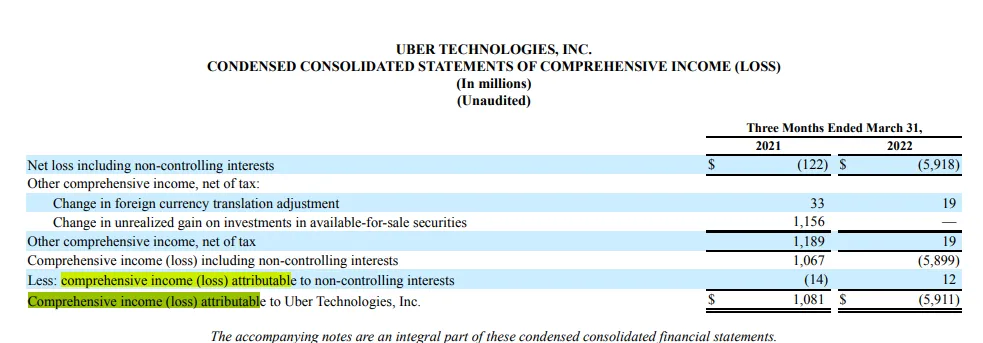

Question 6

response = qa.invoke({"query":"Based on the condensed consolidated statements of comprehensive Income(loss) what is the Comprehensive income (loss) attributable to Uber Technologies, Inc.for the three months ended March 31, 2022"})

response['result']

######################### RESPONSE ####################################

The Comprehensive income (loss) attributable to Uber Technologies, Inc. for the three months ended March 31, 2022 was $(5,911) million. This information can be found on the Uber Technologies, Inc. - Condensed Consolidated Statements of Comprehensive Income (Loss) provided in the quarterly report.

Question 7

response = qa.invoke({"query":"Based on the condensed consolidated statements of comprehensive Income(loss) what is the Comprehensive income (loss) attributable to Uber Technologies?"})

response['result']

##################### RESPONSE #################################

The Comprehensive income (loss) attributable to Uber Technologies, Inc. for the three months ended March 31, 2021 is $1,081 million, and for the three months ended March 31, 2022 is -$5,911 million.

Question 8

response = qa.invoke({"query":"Based on the condensed consolidated statements of comprehensive Income(loss) what is the Net loss including non-controlling interests"})

response['result']

################ RESPONSE #######################################

The Net loss including non-controlling interests is $(122) million for the three months ended March 31, 2021 and $(5,918) million for the three months ended March 31, 2022.

Question 9

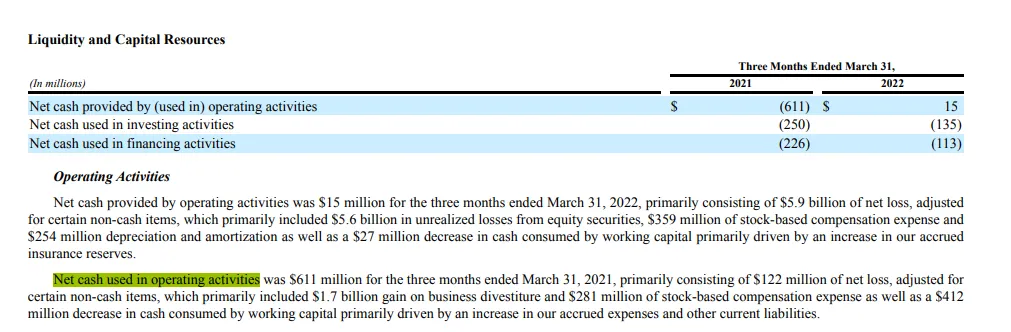

response = qa.invoke({"query":"what is the Net cash used in operating activities for Mrach 31,2021? "})

response['result']

############## RESPONSE ###############################

Net cash used in operating activities for March 31, 2021 was $611 million.

Question 10

query = "Based on the CONDENSED CONSOLIDATED STATEMENTS OF CASH FLOWS What is the value of Purchases of property and equipment ?"

response = qa.invoke({"query":query})

response['result']

####################### RESPONSE ######################################

The value of purchases of property and equipment for the three months ended March 31, 2021 and 2022 can be found in the 'Cash flows from investing activities' section of the condensed consolidated statements of cash flows.

For the three months ended March 31, 2021: $71 million

For the three months ended March 31, 2022: $62 million

Question 11

query = "Based on the CONDENSED CONSOLIDATED STATEMENTS OF CASH FLOWS what is the Purchases of property and equipment for the year 2022?"

response = qa.invoke({"query":query})

response['result']

########### RESPONSE #####################################

The purchases of property and equipment for the year 2022 based on the CONDENSED CONSOLIDATED STATEMENTS OF CASH FLOWS is -62.

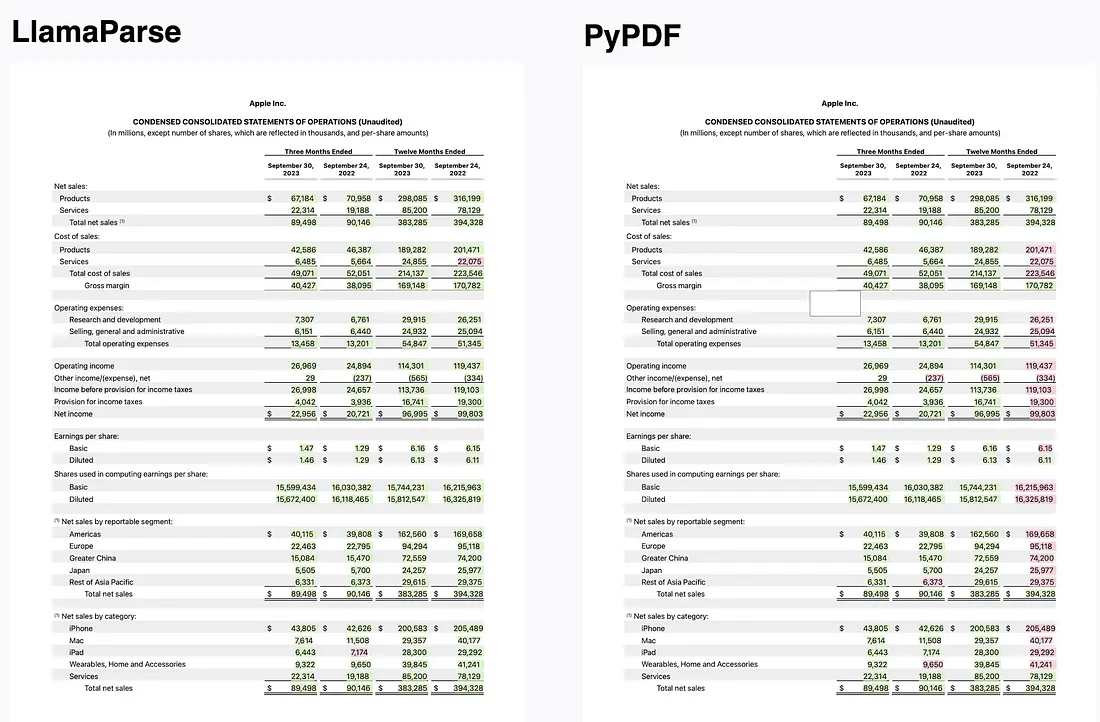

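From the implementation above we can see that LlamaParse does relatively well at parsing complex PDF documents, although more experiments with different table structures are still needed. Below is a comparison of LlamaParse and PyPDF.

Conclusion

From the implementation above we can conclude that LlamaParse does an excellent job of parsing PDFs with complex tables into well-structured Markdown. This representation plugs directly into the advanced Markdown parsing and recursive retrieval algorithms available in open-source libraries. The end result is that we can build RAG over complex documents and answer questions about both tabular and unstructured data.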