How to Fix a Common Mistake in LSTM Time Series Forecasting

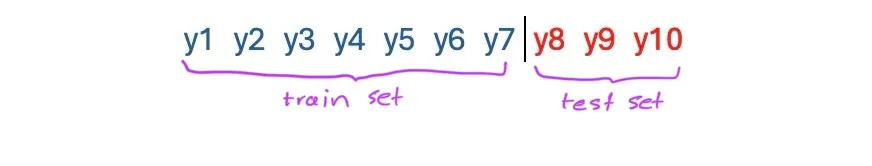

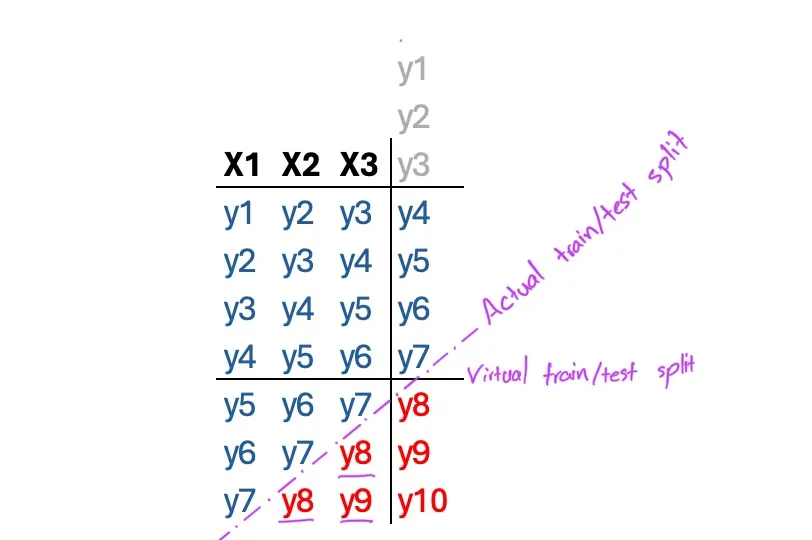

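When using LSTMs for time series forecasting, people tend to fall into a common trap. To explain it, we need to review how regressors and forecasters work. This is how a forecasting algorithm treats a time series: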

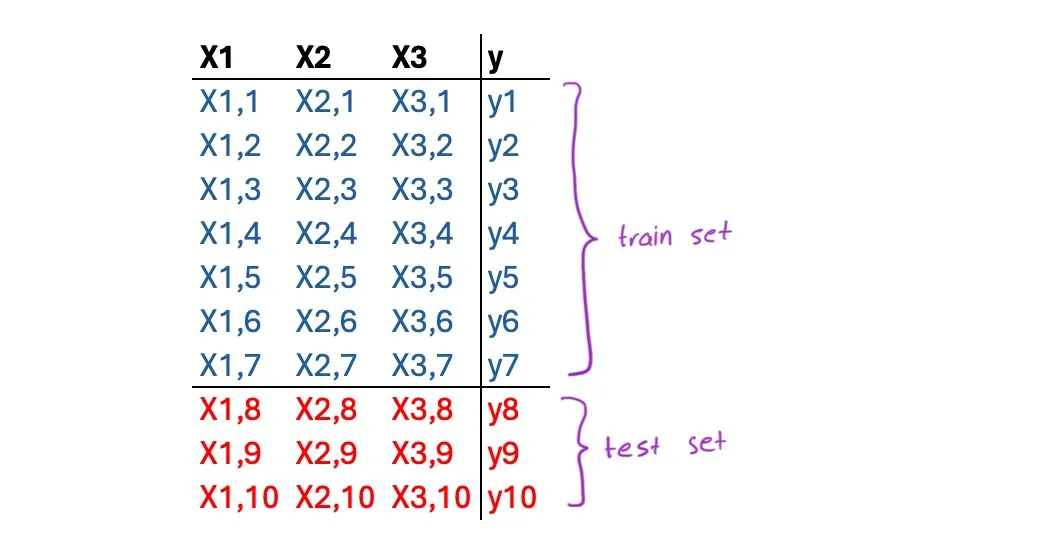

A regression problem, meanwhile, looks like this:

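Because an LSTM is a regressor, we need to turn the time series into a regression problem. There are several ways to do this, but in this section we will discuss the window method and the multi-step method, how they work, and especially how to avoid a common mistake when using them.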
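To make the difference between the two interfaces concrete, here is a minimal sketch. Both `naive_forecaster` and `fit_linear_regressor` are hypothetical stand-ins invented for illustration and are not part of the article's LSTM code. The first takes a raw history and returns future values; the second only understands rows of features paired with targets, so the series has to be rearranged before it can be used.

```python
import numpy as np

def naive_forecaster(history, horizon):
    """Forecaster-style interface: history in, the next `horizon` values out."""
    # Hypothetical stand-in model: repeat the last observed value.
    return np.repeat(history[-1], horizon)

def fit_linear_regressor(X, y):
    """Regressor-style interface: learn a map from feature rows to targets."""
    X1 = np.column_stack([X, np.ones(len(X))])          # add an intercept column
    coef, *_ = np.linalg.lstsq(X1, y, rcond=None)       # ordinary least squares
    return lambda X_new: np.column_stack([X_new, np.ones(len(X_new))]) @ coef

series = np.arange(10, dtype=float)                     # toy series: 0, 1, ..., 9

# The forecaster works directly on the raw series.
print(naive_forecaster(series, horizon=3))

# The regressor needs explicit (feature, target) rows: here, one lagged value per row.
X = series[:-1].reshape(-1, 1)
y = series[1:]
predict = fit_linear_regressor(X, y)
print(predict(np.array([[9.0]])))                       # prediction for the step after 9
```

The rearrangement step in the second half is the part the rest of this section is about.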

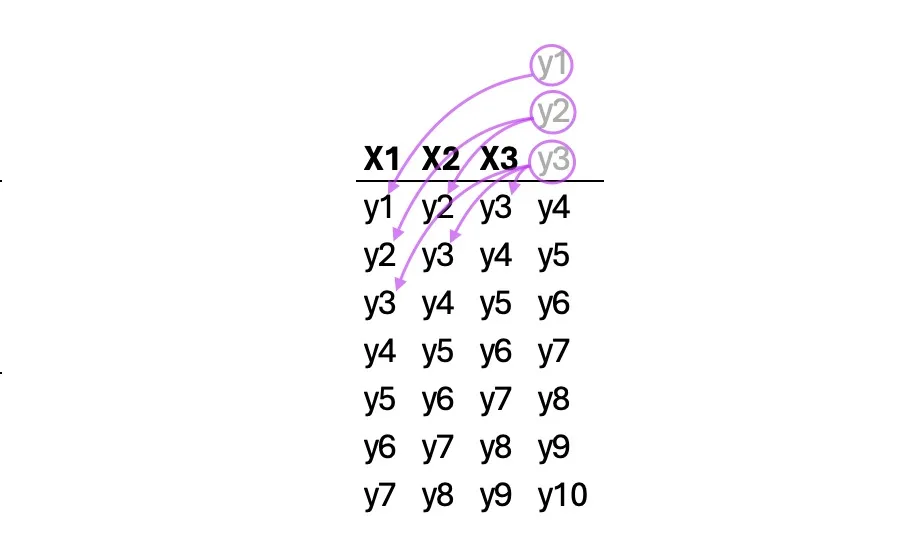

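In the window method, each time step of the series is paired with its preceding values, which act as pseudo-features called the window. Here we have a window of size 3: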

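The following function creates a window-method dataset from a single time series. The user chooses the number of previous values (usually called the look-back). The resulting dataset has diagonal repetition, and the number of samples depends on the look-back value: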

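    import numpy as np

    def window(sequences, look_back):
        """Pair every value with the look_back values that precede it."""
        X, y = [], []
        # One sample for each position that has look_back predecessors
        for i in range(len(sequences) - look_back):
            X.append(sequences[i:i + look_back])   # inputs: look_back consecutive values
            y.append(sequences[i + look_back])     # target: the value right after them
        return np.array(X), np.array(y)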

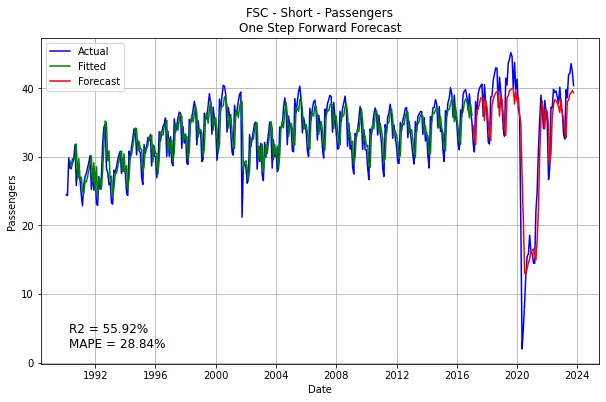

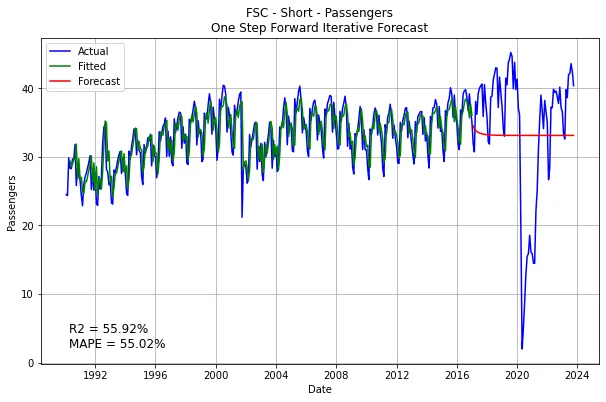
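To see the diagonal repetition on something small, here is a minimal sketch on a toy series. The helper `make_windows` and the series `toy` are hypothetical names introduced only for this illustration; the helper follows the same windowing idea, with one sample for every value that has `look_back` predecessors.

```python
import numpy as np

def make_windows(series, look_back):
    """Pair each value with the `look_back` values that precede it."""
    X = [series[i:i + look_back] for i in range(len(series) - look_back)]
    y = series[look_back:]
    return np.array(X), np.array(y)

toy = np.arange(1, 9)                  # stand-in series: 1, 2, ..., 8
X, y = make_windows(toy, look_back=3)

print(X)                 # each row is the previous row shifted by one step
print(y)                 # the value that follows each window
print(X.shape, y.shape)  # (5, 3) (5,)
```

Reading down the columns of `X` shows the same values reappearing along the diagonals, and a larger look-back leaves fewer rows.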

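Now let's examine the results. Once the model is trained, it is evaluated on the test set. Many resources and tutorials compile their results in a similar way. However, as explained later, this approach is not trustworthy. For now, let's see what the code and results look like: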

    import numpy as np
    import pandas as pd
    import matplotlib.pyplot as plt
    from sklearn.metrics import mean_absolute_percentage_error, r2_score
    from tensorflow.keras.models import Sequential
    from tensorflow.keras.layers import LSTM, Dense

    # ts_data is assumed to be a 1-D NumPy array holding the monthly series
    look_back = 3
    X, y = window(ts_data, look_back)
    X = X.reshape(X.shape[0], look_back, 1)   # LSTM expects (samples, time steps, features)

    # Train-test split
    train_ratio = 0.8
    train_size = int(train_ratio * len(ts_data))
    X_train, X_test = X[:train_size-look_back], X[train_size-look_back:]
    y_train, y_test = y[:train_size-look_back], y[train_size-look_back:]

    # Create and train LSTM model
    model = Sequential()
    model.add(LSTM(units=72, activation='tanh', input_shape=(look_back, 1)))
    model.add(Dense(1))
    model.compile(loss='mean_squared_error', optimizer='Adam', metrics=['mape'])
    model.fit(x=X_train, y=y_train, epochs=500, batch_size=18, verbose=2)

    # Make predictions
    forecasts = model.predict(X_test)
    lstm_fits = model.predict(X_train)

    # Calculate metrics
    mape = mean_absolute_percentage_error(y_test, forecasts)
    r2 = r2_score(y_train, lstm_fits)

    # Initialize dates (month-end frequency; recent pandas versions prefer 'ME')
    date_range = pd.date_range(start='1990-01-01', end='2023-09-30', freq='M')

    # Pad the fits with NaN so they line up with the original time series
    fits = np.full(train_size, np.nan)
    fits[look_back:] = lstm_fits.ravel()

    # Plot actual, fits, and forecasts
    plt.figure(figsize=(10, 6))
    plt.plot(date_range, ts_data, label='Actual', color='blue')
    plt.plot(date_range[:train_size], fits, label='Fitted', color='green')
    plt.plot(date_range[train_size:], forecasts, label='Forecast', color='red')
    plt.title('FSC - Short - Passengers\nOne Step Forward Forecast')
    plt.xlabel('Date')
    plt.ylabel('Passengers')
    plt.legend()
    plt.text(0.05, 0.05, f'R2 = {r2*100:.2f}%\nMAPE = {mape*100:.2f}%', transform=plt.gca().transAxes, fontsize=12)
    plt.grid(True)
    plt.show()

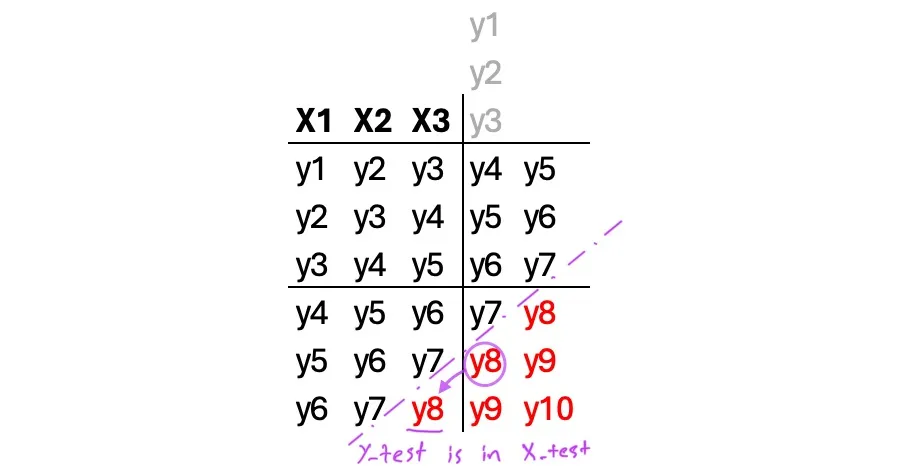

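Problem: The results look good. But a look at a sample test set reveals a peculiar flaw:

For example, by the time y9 is generated, y8 has already been used as a model input. Although it is not used for training, feeding future values into the model is odd given that we are predicting future time steps.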
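The leak can be made visible with a few lines of NumPy. This is a minimal sketch on a toy series whose values equal their own time index, so any input that is at least `train_size` must come from the test period. The sizes used here (`look_back = 3`, `train_size = 7`) are hypothetical and chosen only to keep the printout small.

```python
import numpy as np

series = np.arange(10)            # stand-in series; each value equals its time index
look_back, train_size = 3, 7      # hypothetical toy settings

# Window the series: row i holds series[i : i + look_back].
X = np.array([series[i:i + look_back] for i in range(len(series) - look_back)])

# Test windows are the ones whose targets are series[train_size:].
X_test = X[train_size - look_back:]

# Count how many inputs in each test window come from the test period itself.
leaked = (X_test >= train_size).sum(axis=1)

print(X_test)   # rows: [4 5 6], [5 6 7], [6 7 8]
print(leaked)   # [0 1 2]
```

Only the first test window is built purely from training data. Every later window contains actual test values that a real forecast would not have.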

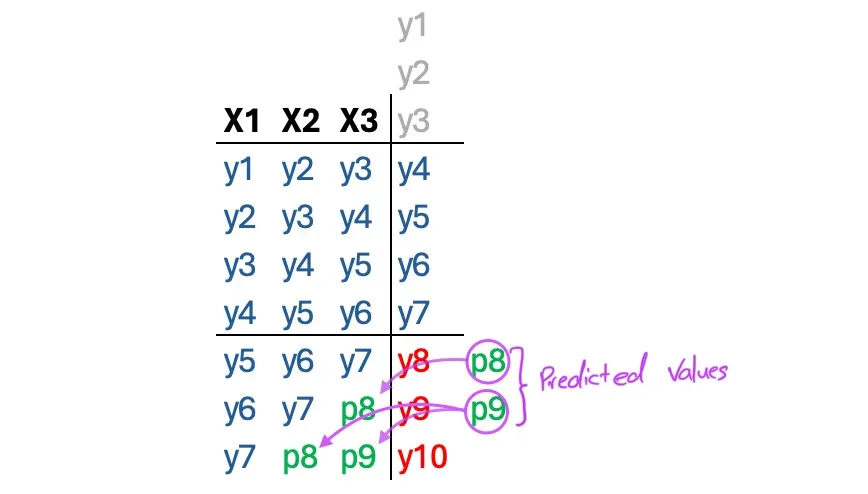

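Solution: An iterative test set, in which input values are replaced with the predictions from the previous instance, solves this problem. In this arrangement the model builds on its own predictions, just like a traditional forecasting algorithm:

The following loop does exactly that: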

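    # Iterative prediction and substitution
    X_test = X_test.astype(float)   # avoid truncating predictions if the series is integer-valued
    for i in range(len(X_test)):
        # Predict one step from the current window
        pred = model.predict(X_test[i].reshape(1, look_back, 1), verbose=0)[0, 0]
        forecasts[i] = pred
        if i != len(X_test) - 1:
            # Next window: shift the current one left by one step ...
            X_test[i+1, :-1] = X_test[i, 1:]
            # ... and put the new prediction in the last slot
            X_test[i+1, look_back-1] = pred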
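The same feed-back idea can be sketched without Keras, which makes the mechanism easier to test in isolation. Everything named below is hypothetical: `recursive_forecast` is an illustrative helper, and `predict_one` is a toy stand-in for a trained model that simply continues the last observed difference.

```python
import numpy as np

def recursive_forecast(predict_one, last_window, horizon):
    """Forecast `horizon` steps, feeding each prediction back in as an input."""
    window = np.asarray(last_window, dtype=float).copy()
    out = np.empty(horizon)
    for step in range(horizon):
        out[step] = predict_one(window)
        # Drop the oldest value and append the prediction just made.
        window = np.append(window[1:], out[step])
    return out

def predict_one(window):
    """Toy stand-in for a trained model: continue the last observed difference."""
    return window[-1] + (window[-1] - window[-2])

forecast = recursive_forecast(predict_one, last_window=[1.0, 2.0, 3.0], horizon=4)
print(forecast)   # [4. 5. 6. 7.]
```

Under the assumption that the model is a Keras network with input shape `(look_back, 1)`, `predict_one` could instead wrap something like `model.predict(window.reshape(1, -1, 1), verbose=0)[0, 0]`. No actual test value enters the inputs after the first window.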

The results are less impressive, but at least they are honest:

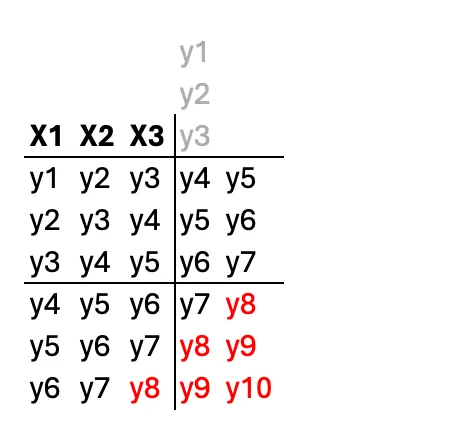

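The multi-step method is similar to the window method but has more target steps. Here is an example with two steps ahead:

For this method, in fact, the user must choose both n_steps_in and n_steps_out. The following code converts a simple time series into a dataset ready for multi-step LSTM training: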

    # Split a univariate sequence into multi-step samples
    def split_sequences(sequences, n_steps_in, n_steps_out):
        X, y = list(), list()
        for i in range(len(sequences)):
            # Find the end of this pattern
            end_ix = i + n_steps_in
            out_end_ix = end_ix + n_steps_out
            # Stop once the pattern would run past the end of the sequence
            if out_end_ix > len(sequences):
                break
            # Gather input and output parts of the pattern
            seq_x, seq_y = sequences[i:end_ix], sequences[end_ix:out_end_ix]
            X.append(seq_x)
            y.append(seq_y)
        return np.array(X), np.array(y)
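A toy run shows what the multi-step dataset looks like. This is a minimal sketch: `make_multistep` is a hypothetical, compact helper that follows the same idea (inputs of length `n_in` followed by targets of length `n_out`), applied to a stand-in series with three input steps and two output steps.

```python
import numpy as np

def make_multistep(series, n_in, n_out):
    """Slice a series into (n_in inputs, n_out targets) samples."""
    n_samples = len(series) - n_in - n_out + 1
    X = np.array([series[i:i + n_in] for i in range(n_samples)])
    y = np.array([series[i + n_in:i + n_in + n_out] for i in range(n_samples)])
    return X, y

toy = np.arange(1, 9)                       # stand-in series: 1, 2, ..., 8
X, y = make_multistep(toy, n_in=3, n_out=2)

print(X)                 # first row: [1 2 3]
print(y)                 # first row: [4 5]
print(X.shape, y.shape)  # (4, 3) (4, 2)
```

Note that the targets now overlap as well: the value 5 appears in the first row of `y` as the second step and in the second row as the first step.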

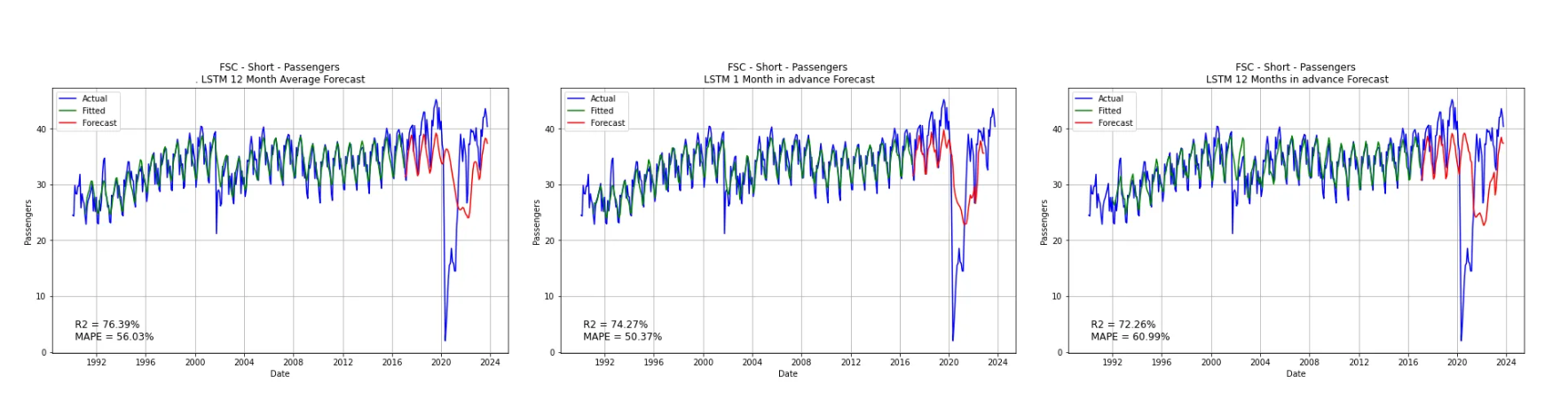

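Now not only the features but also the targets have diagonal repetition, which means that to compare them with the time series we must either average them or pick one of the predictions. The code below produces results for the first, the last, and the averaged predictions, followed by their plots. To be clear, the first prediction here means the one-month-ahead forecast, and the last prediction means the 12-month-ahead forecast.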

    n_steps_in = 12
    n_steps_out = 12
    X, y = split_sequences(ts_data, n_steps_in, n_steps_out)
    X = X.reshape(X.shape[0], X.shape[1], 1)   # (samples, time steps, features)
    # y stays 2-D, (samples, n_steps_out), matching the Dense output below

    # Train-test split
    train_ratio = 0.8
    train_size = int(train_ratio * len(ts_data))
    X_train, X_test = X[:train_size-n_steps_in-n_steps_out+1], X[train_size-n_steps_in-n_steps_out+1:]
    y_train = y[:train_size-n_steps_in-n_steps_out+1]
    y_test = ts_data[train_size:]

    # Create and train LSTM model
    model = Sequential()
    model.add(LSTM(units=72, activation='tanh', input_shape=(n_steps_in, 1)))
    model.add(Dense(units=n_steps_out))
    model.compile(loss='mean_squared_error', optimizer='Adam', metrics=['mape'])
    model.fit(x=X_train, y=y_train, epochs=500, batch_size=18, verbose=2)

    # Make predictions
    lstm_predictions = model.predict(X_test)
    lstm_fitted = model.predict(X_train)
    # Averaged: mean of all predictions that target the same time step (anti-diagonals)
    forecasts = [np.diag(np.fliplr(lstm_predictions), i).mean() for i in range(0, -lstm_predictions.shape[0], -1)]
    fits = [np.diag(np.fliplr(lstm_fitted), i).mean() for i in range(lstm_fitted.shape[1]+n_steps_in - 1, -lstm_fitted.shape[0], -1)]
    # First step: 1 month in advance
    forecasts1 = lstm_predictions[n_steps_out-1:,0]
    fits1 = model.predict(X)[:train_size-n_steps_in,0]
    # Last step: 12 months in advance
    forecasts12 = lstm_predictions[:,n_steps_out-1]
    fits12 = lstm_fitted[:,n_steps_out-1]

    # Metrics
    av_mape = mean_absolute_percentage_error(y_test, forecasts)
    av_r2 = r2_score(ts_data[n_steps_in:train_size], fits[n_steps_in:])
    one_mape = mean_absolute_percentage_error(y_test[:-n_steps_out+1], forecasts1)
    one_r2 = r2_score(ts_data[n_steps_in:train_size], fits1)
    twelve_mape = mean_absolute_percentage_error(y_test, forecasts12)
    twelve_r2 = r2_score(ts_data[n_steps_in+n_steps_out-1:train_size], fits12)

    date_range = pd.date_range(start='1990-01-01', end='2023-09-30', freq='M')

    # Plot actual, fits, and forecasts: averaged predictions
    plt.figure(figsize=(10, 6))
    plt.plot(date_range, ts_data, label='Actual', color='blue')
    plt.plot(date_range[:train_size], fits, label='Fitted', color='green')
    plt.plot(date_range[train_size:], forecasts, label='Forecast', color='red')
    plt.title('FSC - Short - Passengers\nLSTM 12 Month Average Forecast')
    plt.xlabel('Date')
    plt.ylabel('Passengers')
    plt.legend()
    plt.text(0.05, 0.05, f'R2 = {av_r2*100:.2f}%\nMAPE = {av_mape*100:.2f}%', transform=plt.gca().transAxes, fontsize=12)
    plt.grid(True)
    plt.show()

    # Plot actual, fits, and forecasts: 1 month in advance
    plt.figure(figsize=(10, 6))
    plt.plot(date_range, ts_data, label='Actual', color='blue')
    plt.plot(date_range[n_steps_in:train_size], fits1, label='Fitted', color='green')
    plt.plot(date_range[train_size:-n_steps_out+1], forecasts1, label='Forecast', color='red')
    plt.title('FSC - Short - Passengers\nLSTM 1 Month in advance Forecast')
    plt.xlabel('Date')
    plt.ylabel('Passengers')
    plt.legend()
    plt.text(0.05, 0.05, f'R2 = {one_r2*100:.2f}%\nMAPE = {one_mape*100:.2f}%', transform=plt.gca().transAxes, fontsize=12)
    plt.grid(True)
    plt.show()

    # Plot actual, fits, and forecasts: 12 months in advance
    plt.figure(figsize=(10, 6))
    plt.plot(date_range, ts_data, label='Actual', color='blue')
    plt.plot(date_range[n_steps_in+n_steps_out-1:train_size], fits12, label='Fitted', color='green')
    plt.plot(date_range[train_size:], forecasts12, label='Forecast', color='red')
    plt.title('FSC - Short - Passengers\nLSTM 12 Months in advance Forecast')
    plt.xlabel('Date')
    plt.ylabel('Passengers')
    plt.legend()
    plt.text(0.05, 0.05, f'R2 = {twelve_r2*100:.2f}%\nMAPE = {twelve_mape*100:.2f}%', transform=plt.gca().transAxes, fontsize=12)
    plt.grid(True)
    plt.show()
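The `np.diag(np.fliplr(...), k)` expressions used for the averaged forecasts and fits are compact but hard to read, so here is a minimal sketch of what they collect. The toy matrix `P` is a hypothetical stand-in for a prediction matrix: entry `[r, s]` stores the time index it refers to (`r + s`), so every entry aimed at the same time step carries the same number.

```python
import numpy as np

n_rows, n_out = 4, 3   # hypothetical toy sizes: 4 samples, 3 output steps

# Entry [r, s] is "sample r, step s", which targets time index r + s.
P = np.add.outer(np.arange(n_rows), np.arange(n_out))

# After a left-right flip, entries with equal r + s lie on ordinary diagonals.
flipped = np.fliplr(P)

# Offsets n_out-1 down to -(n_rows-1) walk from the earliest to the latest time step.
groups = [np.diag(flipped, k) for k in range(n_out - 1, -n_rows, -1)]
for g in groups:
    print(g)           # every group holds a single repeated time index

averaged = [float(g.mean()) for g in groups]
print(averaged)        # [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
```

With real predictions each group would hold several different estimates of the same month, and the mean collapses them into one value. As far as I can tell, offsets beyond `n_out - 1` select empty diagonals whose mean is NaN, which is how the `fits` line ends up padded at the start of the series.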

Problem: The same issue as in the window method remains here:

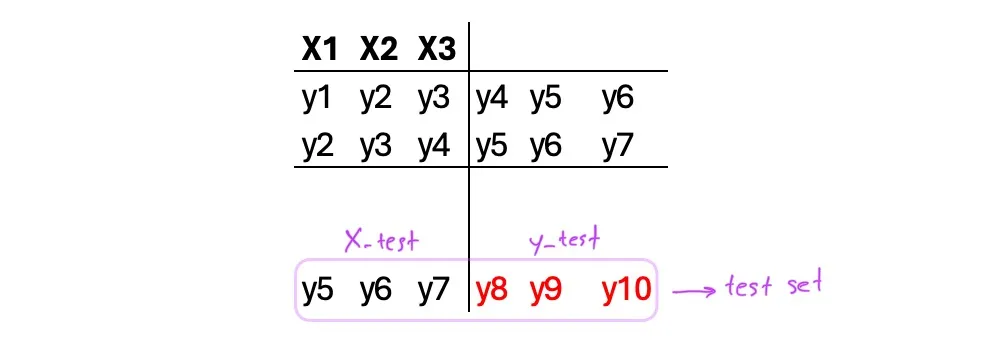

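Solution: We could take an approach similar to the one used for the window method. But we can also go the other way and choose n_steps_out equal to test_size. That way, the test set shrinks to a single sample:

The following function does exactly that. It takes the time series, the training size, and the number of samples. I named it comparable because this version can actually be compared with other forecasting algorithms: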

    def split_sequences_comparable(sequences, n_samples, train_size):
        # Steps
        n_steps_out = len(sequences) - train_size
        n_steps_in = train_size - n_steps_out - n_samples + 1
        # End sets
        X_test = sequences[n_samples + n_steps_out - 1:train_size]
        X_forecast = sequences[-n_steps_in:]
        X, y = list(), list()
        for i in range(n_samples):
            # Find the end of this pattern
            end_ix = i + n_steps_in
            out_end_ix = end_ix + n_steps_out
            # Gather input and output parts of the pattern
            seq_x, seq_y = sequences[i:end_ix], sequences[end_ix:out_end_ix]
            X.append(seq_x)
            y.append(seq_y)
        return np.array(X), np.array(y), np.array(X_test), np.array(X_forecast), n_steps_in, n_steps_out
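The index arithmetic in this split is easy to get wrong, so here is a minimal sketch that checks it on a toy series. The sizes (`len(series) = 20`, `train_size = 14`, `n_samples = 3`) are hypothetical, and the series values equal their own time index so the slices can be read off directly.

```python
import numpy as np

series = np.arange(20)               # stand-in series; each value equals its time index
train_size, n_samples = 14, 3        # hypothetical toy settings

n_steps_out = len(series) - train_size                  # one output per test step
n_steps_in = train_size - n_steps_out - n_samples + 1   # longest input that still fits

# The last training sample starts at index n_samples - 1.
last_start = n_samples - 1
last_target = series[last_start + n_steps_in:last_start + n_steps_in + n_steps_out]

# The single test input is the final n_steps_in values of the training period.
X_test = series[n_samples + n_steps_out - 1:train_size]

print(n_steps_out, n_steps_in)   # 6 6
print(last_target)               # [ 8  9 10 11 12 13]
print(X_test)                    # [ 8  9 10 11 12 13]
```

The last training target ends at index `train_size - 1`, so no training sample reaches into the test period, and the one test input is built only from training data.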

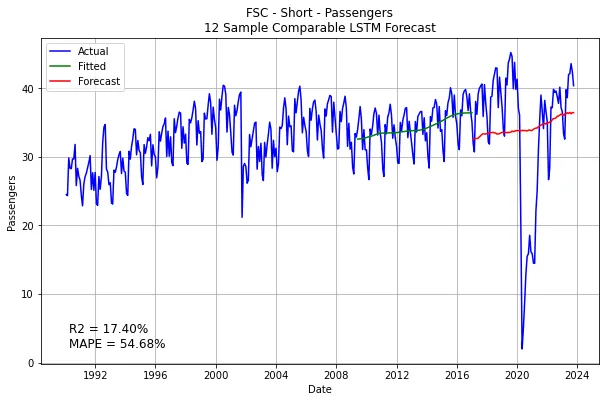

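For this function, since the number of output steps is already fixed, I decided to let the user choose the number of samples and the training size, and then compute the largest possible number of input steps. Here is the execution code and its results: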

    n_samples = 12
    train_size = 321
    X, y, X_test, X_forecast, n_steps_in, n_steps_out = split_sequences_comparable(ts_data, n_samples, train_size)
    y_test = ts_data[train_size:]

    # Reshaping
    X = X.reshape(X.shape[0], X.shape[1], 1)
    X_test = X_test.reshape(1, n_steps_in, 1)   # a single test sample
    y = y.reshape(y.shape[0], y.shape[1])
    # y_test stays 1-D so it lines up with the flattened predictions below

    # Create and train LSTM model
    model = Sequential()
    model.add(LSTM(units=154, activation='tanh', input_shape=(n_steps_in, 1)))
    model.add(Dense(units=n_steps_out))
    model.compile(loss='mean_squared_error', optimizer='Adam', metrics=['mape'])
    model.fit(x=X, y=y, epochs=500, batch_size=18, verbose=2)

    # Make predictions
    lstm_predictions = model.predict(X_test)
    predictions = lstm_predictions.reshape(lstm_predictions.shape[1])
    lstm_fitted = model.predict(X)
    # Average all fitted values that target the same time step (anti-diagonals)
    fits = [np.diag(np.fliplr(lstm_fitted), i).mean() for i in range(lstm_fitted.shape[1]+n_steps_in - 1, -lstm_fitted.shape[0], -1)]

    # Metrics
    mape = mean_absolute_percentage_error(y_test, predictions)
    r2 = r2_score(ts_data[n_steps_in:train_size], fits[n_steps_in:])

    # Plot actual, fits, and forecasts (date_range as defined earlier)
    plt.figure(figsize=(10, 6))
    plt.plot(date_range, ts_data, label='Actual', color='blue')
    plt.plot(date_range[:train_size], fits, label='Fitted', color='green')
    plt.plot(date_range[train_size:], predictions, label='Forecast', color='red')
    plt.title('FSC - Short - Passengers\n12 Sample Comparable LSTM Forecast')
    plt.xlabel('Date')
    plt.ylabel('Passengers')
    plt.legend()
    plt.text(0.05, 0.05, f'R2 = {r2*100:.2f}%\nMAPE = {mape*100:.2f}%', transform=plt.gca().transAxes, fontsize=12)
    plt.grid(True)
    plt.show()

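This is the most trustworthy result we have obtained so far. However, a new method I have devised gives even better results. That method (the loop method) will be discussed later in this series.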