Fine-Tuning Large Language Models (LLMs): The Role of Feature Stores

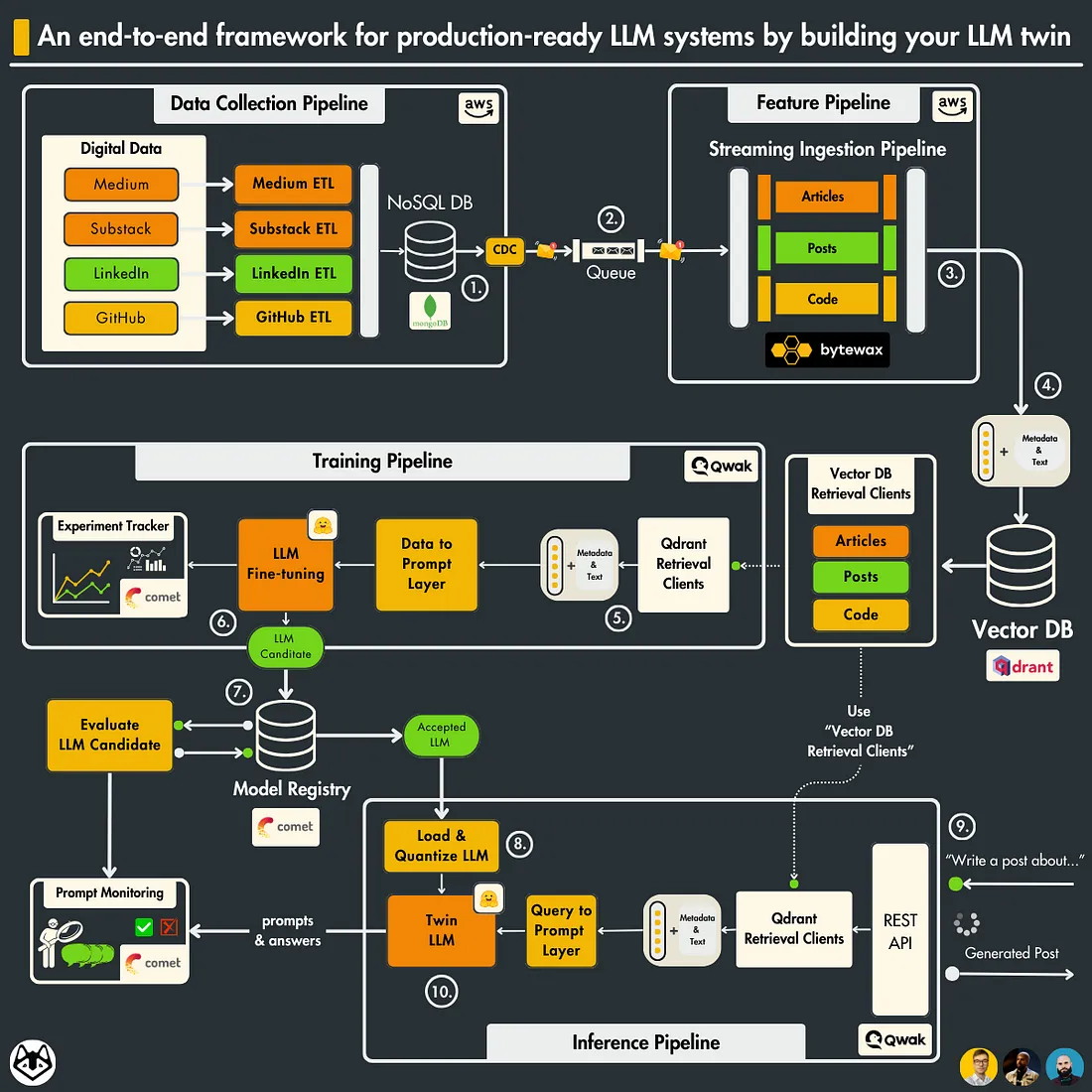

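What is your LLM Twin? It is an AI character that writes like you by incorporating your style, personality, and voice into an LLM.

The architecture of the LLM Twin is split into 4 Python microservices:

- The data collection pipeline: crawls your digital data from various social media platforms. It cleans, normalizes, and loads the data into a NoSQL DB through a series of ETL pipelines, and sends database changes to a queue using the CDC pattern. (deployed on AWS)

- The feature pipeline: consumes messages from the queue through a Bytewax streaming pipeline. Every message is cleaned, chunked, embedded (using Superlinked), and loaded into a Qdrant vector DB in real time. (deployed on AWS)

- The training pipeline: creates a custom dataset based on your digital data, fine-tunes an LLM using QLoRA, and uses Comet ML's experiment tracker to monitor the experiments. The best model is evaluated and saved to Comet's model registry. (deployed on Qwak)

- The inference pipeline: loads and quantizes the fine-tuned LLM from Comet's model registry, deploys it as a REST API, enhances the prompts using RAG, and generates content using your LLM Twin. The LLM is monitored through Comet's prompt monitoring dashboard. (deployed on Qwak)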

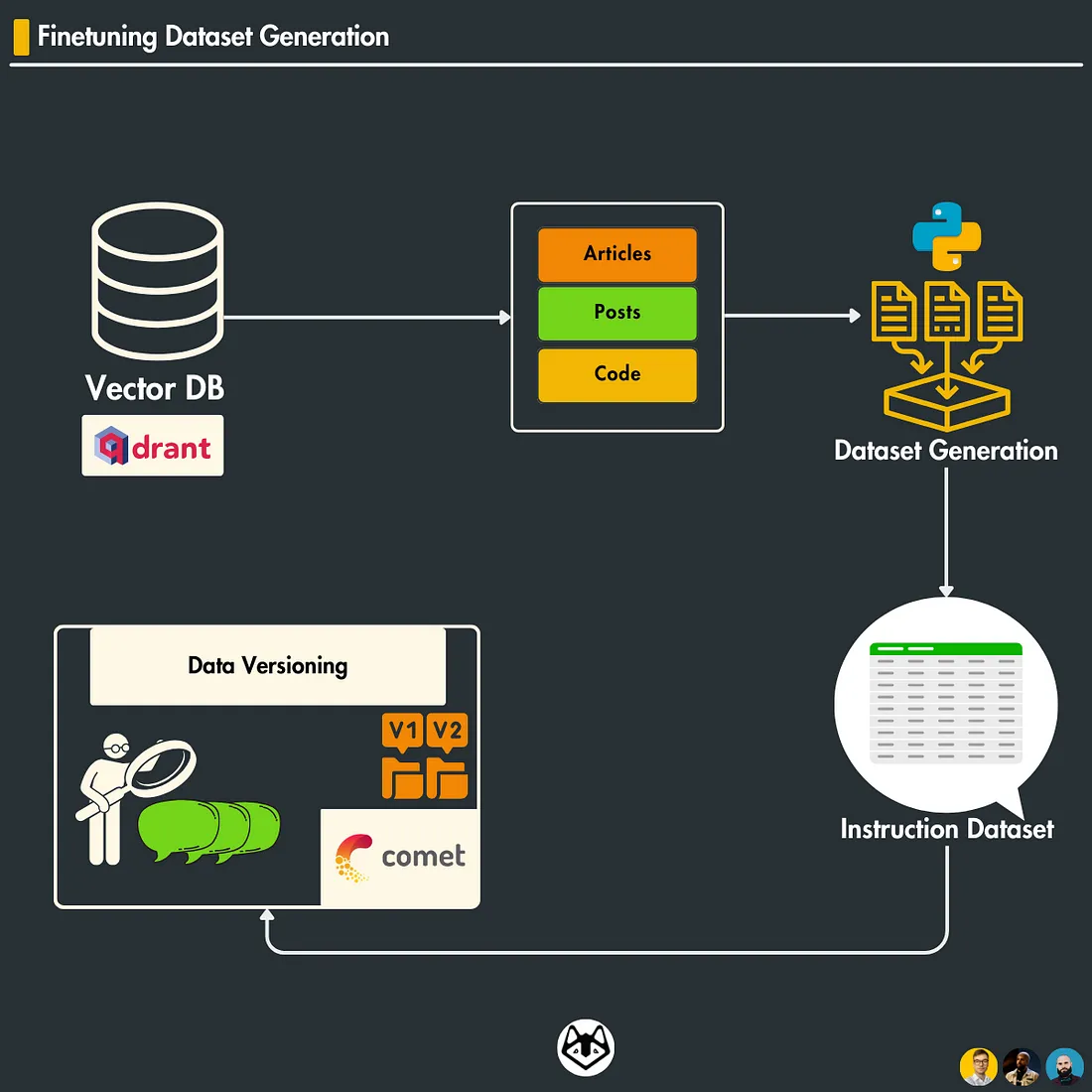

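Preparing the fine-tuning dataset

Large language models (LLMs) have changed the way we interact with machines. These powerful models have a remarkable understanding of human language, which lets them translate text, write many kinds of creative content, and answer your questions in an informative way.

Why does data matter?

Let's explore why a carefully prepared, high-quality dataset is essential for successful LLM fine-tuning:

- Specificity is key: LLMs like Mistral are trained on massive amounts of general text data. This gives them a broad understanding of language, but it does not always align with the specific task you want the model to perform. A carefully curated dataset helps the model understand the nuances of your domain, your vocabulary, and the types of output you expect.

- Contextual learning: A high-quality dataset provides rich context that the LLM can use to learn patterns and relationships between words within your domain. This context lets the model generate more relevant and accurate responses for your specific application.

- Avoiding bias: Unbalanced or poorly curated datasets can introduce bias into an LLM, hurting its performance and leading to unfair or undesirable results. A well-prepared dataset helps mitigate these risks.

Today, we will learn how to generate a custom dataset for our specific task: content generation.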

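Understanding the data types

Our data consists of three main types: posts, articles, and code. Each type serves a different purpose and is structured to meet specific needs:

- Posts: Typically shorter and more dynamic, posts are usually user-generated content from social platforms or forums. They are characterized by varied formats and informal language, capturing real-time user interactions and opinions.

- Articles: These are more structured and content-rich, usually sourced from news outlets or blogs. Articles provide in-depth analysis or reporting and are formatted with headings, subheadings, and multiple paragraphs, offering comprehensive information on a specific topic.

- Code: Sourced from repositories such as GitHub, this data type contains scripts and programming snippets, which are crucial for an LLM to learn and understand technical language.

All of these data types require careful handling during insertion to preserve their integrity and ensure they are stored correctly in MongoDB for further processing and analysis. This includes managing formatting issues and ensuring data consistency across the database.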

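System design and data flow

In a real application, data is not inserted into MongoDB manually; it flows through a well-structured data collection pipeline.

- Data collection: Initially, data is collected automatically from various sources, such as social media platforms, news feeds, and other digital channels. This automated process ensures a continuous flow of fresh data into the pipeline.

The data then passes through a real-time feature pipeline that:

- uses Change Data Capture (CDC)

- ingests data from a RabbitMQ queue

- uses the Bytewax streaming engine to process data streams for LLM fine-tuning and RAG

- loads the fine-tuning and RAG data into a Qdrant vector DB

The importance of having a feature store such as Qdrant in a production environment:

- Data cleaning: After collection, the data goes through a cleaning process that removes inconsistencies, fixes formatting issues, and prepares it for storage. This step is essential to ensure that the data stored in MongoDB is of high quality and ready for analysis.

- The role of Qdrant: In the broader system architecture, Qdrant acts as the feature store. After data is stored in MongoDB, it is processed further to extract relevant features, which are stored in Qdrant. This makes the data readily accessible to machine learning models and other analytical tasks, improving the efficiency of the system.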

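Testing this module

- Manual insertion for testing: In this lesson, we insert data into MongoDB manually to simulate how cleaned data would be stored. This step helps us understand and test the dataset creation process in a controlled environment.

- Manual retrieval from Qdrant: When testing or developing features, data can be retrieved manually from Qdrant. This step involves querying the feature store for specific feature vectors so that they can be used in tasks such as fine-tuning.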

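Inserting data into MongoDB

Before we get to the logic that generates the fine-tuning dataset for the LLM Twin, we need data in MongoDB.

Make sure your local setup is ready to run the full code.

Downloading the dataset

Use the download_dataset function from the provided script to fetch the data files from Google Drive. The function checks whether the data directory exists and, if it is empty, downloads the necessary JSON files. Each file represents a different type of content (articles, posts, and repositories), which is essential for diversified fine-tuning.

After downloading the data, use the following functions to insert the content into MongoDB:

- Inserting posts: The insert_posts function reads post data from a JSON file and stores it in the database using the PostDocument model. It handles exceptions gracefully, ensuring that every post is attempted, and logs the number of posts inserted successfully.

- Inserting articles: Similarly, the insert_articles function processes article data. It reads the file, fixes JSON formatting issues, and saves the data using the ArticleDocument model.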
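The "attempt every record, log failures, count successes" behaviour described for insert_posts can be sketched as follows. This is only an illustration under stated assumptions: the function name insert_posts_sketch, the save callable (a stand-in for something like saving a PostDocument), and the assumption that the JSON file holds a list of objects are all hypothetical and not taken from the course code.

```python
import json
import logging
from typing import Callable

logger = logging.getLogger(__name__)


def insert_posts_sketch(raw_json: str, save: Callable[[dict], None]) -> int:
    """Try to store every post and return how many were saved successfully."""
    posts = json.loads(raw_json)  # assumption: the file contains a JSON list
    inserted = 0
    for post in posts:
        try:
            save(post)  # hypothetical stand-in for the real document model
            inserted += 1
        except Exception:
            # Log and continue so one bad record does not stop the whole load.
            logger.exception("Failed to insert post: %r", post)
    logger.info("Inserted %d of %d posts", inserted, len(posts))
    return inserted
```

Passing the storage step in as a callable keeps the sketch runnable without a MongoDB instance; in a real setup the callable would wrap the document model's save operation.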

环境变量

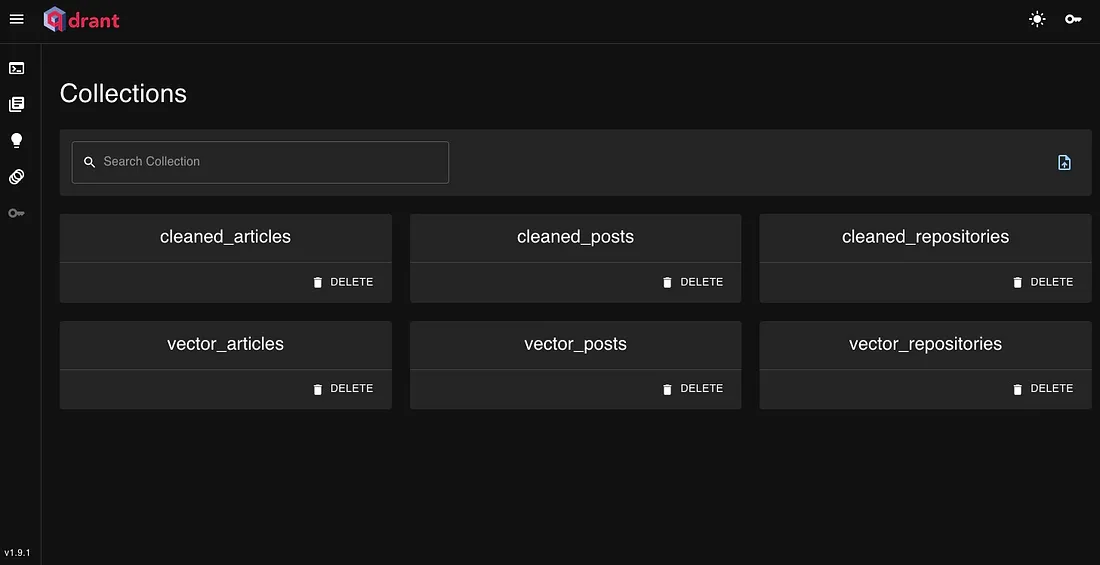

要为 Qdrant 配置环境,请设置以下变量:

Docker 变量

- QDRANT_HOST:运行 Qdrant 服务器的主机名或 IP 地址。

- QDRANT_PORT:Qdrant 的监听端口,Docker 设置通常为 6333。

Qdrant 云变量

- qdrant_cloud_url: 访问 Qdrant 云服务的 URL。

- QDRANT_APIKEY:用于验证 Qdrant 云的 API 密钥。

此外,你还可以通过配置文件中的设置来控制连接模式(云或 Docker)。更多详情请查看 db/qdrant.py

USE_QDRANT_CLOUD: True # Set to False to use Docker setup

If the data pipeline ran successfully, you should see 3 collections in Qdrant Cloud: cleaned_articles, cleaned_posts, and cleaned_repositories.
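The switch between the Cloud and Docker connection modes could be resolved along these lines. This is a minimal sketch, not the project's settings module: the helper name qdrant_client_kwargs, the "localhost" default, and reading the values from a plain dictionary are assumptions made for illustration. The returned keys (url, api_key, host, port) match the keyword arguments that QdrantClient is called with later in this lesson.

```python
def qdrant_client_kwargs(env: dict) -> dict:
    """Return keyword arguments for QdrantClient based on the connection mode."""
    # Treat common truthy spellings as "use Qdrant Cloud".
    use_cloud = str(env.get("USE_QDRANT_CLOUD", "False")).lower() in ("1", "true", "yes")
    if use_cloud:
        # Cloud mode needs both the cluster URL and the API key.
        return {"url": env["QDRANT_CLOUD_URL"], "api_key": env["QDRANT_APIKEY"]}
    # Docker mode: the host default is an assumption; 6333 is the usual port.
    return {
        "host": env.get("QDRANT_HOST", "localhost"),
        "port": int(env.get("QDRANT_PORT", 6333)),
    }
```

Calling qdrant_client_kwargs(dict(os.environ)) and unpacking the result into the client constructor keeps the mode decision in one place.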

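Retrieving the cleaned data

Once we have the cleaned dataset, the next step is to put it to good use. Qdrant serves as a feature store that holds data prepared for machine learning applications. In our case, this data is used for the fine-tuning task.

The importance of feature stores

A feature store plays a vital role in machine learning workflows by:

- Centralizing data management: It manages feature data centrally, making it accessible and reusable across multiple machine learning models and projects.

- Ensuring consistency: Consistent feature computation ensures that the same preprocessing steps are applied during both training and prediction, reducing errors.

- Improving efficiency: Storing precomputed features greatly speeds up experimentation, allowing different models to be tested quickly.

- Scaling easily: As a project grows, a feature store can manage the scaling of data operations efficiently, supporting larger datasets and more complex feature engineering tasks.

By using a feature store like Qdrant, teams can improve the reproducibility and scalability of their machine learning projects.

We will use the client from the db/qdrant module: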

    from qdrant_client import QdrantClient
    from qdrant_client.http.exceptions import UnexpectedResponse
    from qdrant_client.http.models import Batch, Distance, VectorParams

    import logger_utils
    from settings import settings

    logger = logger_utils.get_logger(__name__)


    class QdrantDatabaseConnector:
        _instance: QdrantClient = None

        def __init__(self):
            if self._instance is None:
                try:
                    # Connect to Qdrant Cloud or to a self-hosted (Docker) instance.
                    if settings.USE_QDRANT_CLOUD:
                        self._instance = QdrantClient(
                            url=settings.QDRANT_CLOUD_URL,
                            api_key=settings.QDRANT_APIKEY,
                        )
                    else:
                        self._instance = QdrantClient(
                            host=settings.QDRANT_DATABASE_HOST,
                            port=settings.QDRANT_DATABASE_PORT,
                        )
                except UnexpectedResponse:
                    logger.exception(
                        "Couldn't connect to the database.",
                        host=settings.QDRANT_DATABASE_HOST,
                        port=settings.QDRANT_DATABASE_PORT,
                    )
                    raise

        def get_collection(self, collection_name: str):
            return self._instance.get_collection(collection_name=collection_name)

        def create_non_vector_collection(self, collection_name: str):
            # An empty vectors_config creates a payload-only collection.
            self._instance.create_collection(collection_name=collection_name, vectors_config={})

        def create_vector_collection(self, collection_name: str):
            self._instance.create_collection(
                collection_name=collection_name,
                vectors_config=VectorParams(size=settings.EMBEDDING_SIZE, distance=Distance.COSINE),
            )

        def write_data(self, collection_name: str, points: Batch):
            try:
                self._instance.upsert(collection_name=collection_name, points=points)
            except Exception:
                logger.exception("An error occurred while inserting data.")
                raise

        def scroll(self, collection_name: str, limit: int):
            # Returns a (points, next_page_offset) tuple.
            return self._instance.scroll(collection_name=collection_name, limit=limit)

        def close(self):
            if self._instance:
                self._instance.close()
                logger.info("Connection to database has been closed.")


    connection = QdrantDatabaseConnector()

To fetch data from the Qdrant database easily, you can use the Python function fetch_all_cleaned_content. It shows how to retrieve a list of cleaned content from a given collection:

    from db.qdrant import connection as client


    def fetch_all_cleaned_content(self, collection_name: str) -> list:
        all_cleaned_contents = []

        # scroll() returns (points, next_page_offset); only the points are used here.
        scroll_response = client.scroll(collection_name=collection_name, limit=10000)
        points = scroll_response[0]

        for point in points:
            cleaned_content = point.payload["cleaned_content"]
            if cleaned_content:
                all_cleaned_contents.append(cleaned_content)

        return all_cleaned_contents

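- Initialize the scroll: Start by scrolling through the collection with the scroll method, which can retrieve large amounts of data. Specify the collection name and set a limit to keep the data volume manageable.

- Access the points: Retrieve the points from the initial scroll response. Each point contains a payload that holds the actual data.

- Extract the data: Loop over the points and extract the cleaned content from each payload. The content is checked for presence before it is added to the result list.

- Return the results: The function returns a list containing all the cleaned content extracted from the database, which keeps the whole process simple and easy to manage.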
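The steps above read a single page of up to 10,000 points. For larger collections, one option is to keep scrolling until the returned offset is empty. The sketch below assumes a client whose scroll call accepts collection_name, limit, offset, and with_payload and returns a (points, next_offset) pair, as the Qdrant Python client does. The names fetch_all_pages and FakeClient are hypothetical, and the fake client exists only so the example runs without a Qdrant server.

```python
from types import SimpleNamespace


def fetch_all_pages(client, collection_name: str, page_size: int = 256) -> list:
    """Collect every non-empty cleaned_content value, one page at a time."""
    contents = []
    offset = None  # None asks for the first page
    while True:
        points, offset = client.scroll(
            collection_name=collection_name,
            limit=page_size,
            offset=offset,
            with_payload=True,
        )
        for point in points:
            text = (point.payload or {}).get("cleaned_content")
            if text:
                contents.append(text)
        if offset is None:  # no further pages
            break
    return contents


class FakeClient:
    """In-memory stand-in so the sketch runs without a Qdrant server."""

    def __init__(self, texts):
        self._points = [SimpleNamespace(payload={"cleaned_content": t}) for t in texts]

    def scroll(self, collection_name, limit, offset=None, with_payload=True):
        start = offset or 0
        end = start + limit
        next_offset = end if end < len(self._points) else None
        return self._points[start:end], next_offset
```

Paging keeps memory use per request bounded and avoids silently dropping points beyond a fixed limit.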

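Generating the fine-tuning dataset

- Challenge: Manually creating a dataset for fine-tuning a language model such as Mistral-7B is time-consuming and error-prone.

- Solution: Instruction datasets. An instruction dataset is an effective way to guide a language model toward a specific task, such as news classification.

- Approach: Although instruction datasets can be built manually or derived from existing sources, given time and budget constraints we will use a powerful LLM such as OpenAI's GPT 3.5-turbo.

Crafting instructions from Qdrant data

Let's analyze a few sample data points from Qdrant to show how instructions can be derived for the instruction dataset:

Data points: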

{

"author_id": "2",

"cleaned_content": "Do you want to learn to build hands-on LLM systems using good LLMOps practices? A new Medium series is coming up for the Hands-on LLMs course\n.\nBy finishing the Hands-On LLMs free course, you will learn how to use the 3-pipeline architecture & LLMOps good practices to design, build, and deploy a real-time financial advisor powered by LLMs & vector DBs.\nWe will primarily focus on the engineering & MLOps aspects.\nThus, by the end of this series, you will know how to build & deploy a real ML system, not some isolated code in Notebooks.\nThere are 3 components you will learn to build during the course:\n- a real-time streaming pipeline\n- a fine-tuning pipeline\n- an inference pipeline\n.\nWe have already released the code and video lessons of the Hands-on LLM course.\nBut we are excited to announce an 8-lesson Medium series that will dive deep into the code and explain everything step-by-step.\nWe have already released the first lesson of the series \nThe LLMs kit: Build a production-ready real-time financial advisor system using streaming pipelines, RAG, and LLMOps: \n[URL]\n In Lesson 1, you will learn how to design a financial assistant using the 3-pipeline architecture (also known as the FTI architecture), powered by:\n- LLMs\n- vector DBs\n- a streaming engine\n- LLMOps\n.\n The rest of the articles will be released by the end of January 2024.\nFollow us on Medium's Decoding ML publication to get notified when we publish the other lessons: \n[URL]\nhashtag\n#\nmachinelearning\nhashtag\n#\nmlops\nhashtag\n#\ndatascience",

"platform": "linkedin",

"type": "posts"

},

{

"author_id": "2",

"cleaned_content": "RAG systems are far from perfect This free course teaches you how to improve your RAG system.\nI recently finished the Advanced Retrieval for AI with Chroma free course from\nDeepLearning.AI\nIf you are into RAG, I find it among the most valuable learning sources.\nThe course already assumes you know what RAG is.\nIts primary focus is to show you all the current issues of RAG and why it is far from perfect.\nAfterward, it shows you the latest SoTA techniques to improve your RAG system, such as:\n- query expansion\n- cross-encoder re-ranking\n- embedding adaptors\nI am not affiliated with\nDeepLearning.AI\n(I wouldn't mind though).\nThis is a great course you should take if you are into RAG systems.\nThe good news is that it is free and takes only 1 hour.\nCheck it out \n Advanced Retrieval for AI with Chroma:\n[URL]\nhashtag\n#\nmachinelearning\nhashtag\n#\nmlops\nhashtag\n#\ndatascience\n.\n Follow me for daily lessons about ML engineering and MLOps.[URL]",

"image": null,

"platform": "linkedin",

"type": "posts"

}

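Process:

Deriving instructions: We can use these insights to craft instructions for GPT 3.5-turbo:

- Instruction 1: "Write a LinkedIn post promoting a new educational course on building LLM systems, with a focus on LLMOps. Use relevant hashtags and a tone that is both informative and engaging."

- Instruction 2: "Write a LinkedIn post explaining the benefits of using LLMs and vector databases in real-time financial advisory applications. Emphasize the importance of LLMOps for successful deployment."

Generating the dataset with GPT 3.5-turbo

- Feeding the input: We provide GPT 3.5-turbo with the content of the Qdrant data points above, along with other similar data points.

- LLM output: GPT 3.5-turbo then generates an instruction for each piece of LinkedIn-style text.

Result: This process yields a dataset of instruction-output pairs for fine-tuning Mistral-7B on content generation.

Practical example

In this section, we demonstrate the approach above in practice using a batch of content extracted from various posts.

The example simulates the process of creating a training dataset for an LLM using the format we outlined.

Imagine that we want to go from this ↓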

{

"author_id": "2",

"cleaned_content": "Do you want to learn to build hands-on LLM systems using good LLMOps practices? A new Medium series is coming up for the Hands-on LLMs course\n.\nBy finishing the Hands-On LLMs free course, you will learn how to use the 3-pipeline architecture & LLMOps good practices to design, build, and deploy a real-time financial advisor powered by LLMs & vector DBs.\nWe will primarily focus on the engineering & MLOps aspects.\nThus, by the end of this series, you will know how to build & deploy a real ML system, not some isolated code in Notebooks.\nThere are 3 components you will learn to build during the course:\n- a real-time streaming pipeline\n- a fine-tuning pipeline\n- an inference pipeline\n.\nWe have already released the code and video lessons of the Hands-on LLM course.\nBut we are excited to announce an 8-lesson Medium series that will dive deep into the code and explain everything step-by-step.\nWe have already released the first lesson of the series \nThe LLMs kit: Build a production-ready real-time financial advisor system using streaming pipelines, RAG, and LLMOps: \n[URL]\n In Lesson 1, you will learn how to design a financial assistant using the 3-pipeline architecture (also known as the FTI architecture), powered by:\n- LLMs\n- vector DBs\n- a streaming engine\n- LLMOps\n.\n The rest of the articles will be released by the end of January 2024.\nFollow us on Medium's Decoding ML publication to get notified when we publish the other lessons: \n[URL]\nhashtag\n#\nmachinelearning\nhashtag\n#\nmlops\nhashtag\n#\ndatascience",

},

{

"author_id": "2",

"cleaned_content": "RAG systems are far from perfect This free course teaches you how to improve your RAG system.\nI recently finished the Advanced Retrieval for AI with Chroma free course from\nDeepLearning.AI\nIf you are into RAG, I find it among the most valuable learning sources.\nThe course already assumes you know what RAG is.\nIts primary focus is to show you all the current issues of RAG and why it is far from perfect.\nAfterward, it shows you the latest SoTA techniques to improve your RAG system, such as:\n- query expansion\n- cross-encoder re-ranking\n- embedding adaptors\nI am not affiliated with\nDeepLearning.AI\n(I wouldn't mind though).\nThis is a great course you should take if you are into RAG systems.\nThe good news is that it is free and takes only 1 hour.\nCheck it out \n Advanced Retrieval for AI with Chroma:\n[URL]\nhashtag\n#\nmachinelearning\nhashtag\n#\nmlops\nhashtag\n#\ndatascience\n.\n Follow me for daily lessons about ML engineering and MLOps.[URL]",

}

to this ↓

[

{

"instruction": "Share the announcement of the upcoming Medium series on building hands-on LLM systems using good LLMOps practices, focusing on the 3-pipeline architecture and real-time financial advisor development. Follow the Decoding ML publication on Medium for notifications on future lessons.",

"content": "Do you want to learn to build hands-on LLM systems using good LLMOps practices? A new Medium series is coming up for the Hands-on LLMs course\n.\nBy finishing the Hands-On LLMs free course, you will learn how to use the 3-pipeline architecture & LLMOps good practices to design, build, and deploy a real-time financial advisor powered by LLMs & vector DBs.\nWe will primarily focus on the engineering & MLOps aspects.\nThus, by the end of this series, you will know how to build & deploy a real ML system, not some isolated code in Notebooks.\nThere are 3 components you will learn to build during the course:\n- a real-time streaming pipeline\n- a fine-tuning pipeline\n- an inference pipeline\n.\nWe have already released the code and video lessons of the Hands-on LLM course.\nBut we are excited to announce an 8-lesson Medium series that will dive deep into the code and explain everything step-by-step.\nWe have already released the first lesson of the series \nThe LLMs kit: Build a production-ready real-time financial advisor system using streaming pipelines, RAG, and LLMOps: \n[URL]\n In Lesson 1, you will learn how to design a financial assistant using the 3-pipeline architecture (also known as the FTI architecture), powered by:\n- LLMs\n- vector DBs\n- a streaming engine\n- LLMOps\n.\n The rest of the articles will be released by the end of January 2024.\nFollow us on Medium's Decoding ML publication to get notified when we publish the other lessons: \n[URL]\nhashtag\n#\nmachinelearning\nhashtag\n#\nmlops\nhashtag\n#\ndatascience"

},

{

"instruction": "Promote the free course 'Advanced Retrieval for AI with Chroma' from DeepLearning.AI that aims to improve RAG systems and takes only 1 hour to complete. Share the course link and encourage followers to check it out for the latest techniques in query expansion, cross-encoder re-ranking, and embedding adaptors.",

"content": "RAG systems are far from perfect This free course teaches you how to improve your RAG system.\nI recently finished the Advanced Retrieval for AI with Chroma free course from\nDeepLearning.AI\nIf you are into RAG, I find it among the most valuable learning sources.\nThe course already assumes you know what RAG is.\nIts primary focus is to show you all the current issues of RAG and why it is far from perfect.\nAfterward, it shows you the latest SoTA techniques to improve your RAG system, such as:\n- query expansion\n- cross-encoder re-ranking\n- embedding adaptors\nI am not affiliated with\nDeepLearning.AI\n(I wouldn't mind though).\nThis is a great course you should take if you are into RAG systems.\nThe good news is that it is free and takes only 1 hour.\nCheck it out \n Advanced Retrieval for AI with Chroma:\n[URL]\nhashtag\n#\nmachinelearning\nhashtag\n#\nmlops\nhashtag\n#\ndatascience\n.\n Follow me for daily lessons about ML engineering and MLOps.[URL]"

}

]

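We use the LLM to generate a specific instruction for each data point, so that the fine-tuned model absorbs expertise in content creation.

Step 1: Set up the data points

First, let's define a sample set of data points. They represent different pieces of content for which we want to generate LinkedIn post instructions.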

{

"author_id": "2",

"cleaned_content": "Do you want to learn to build hands-on LLM systems using good LLMOps practices? A new Medium series is coming up for the Hands-on LLMs course\n.\nBy finishing the Hands-On LLMs free course, you will learn how to use the 3-pipeline architecture & LLMOps good practices to design, build, and deploy a real-time financial advisor powered by LLMs & vector DBs.\nWe will primarily focus on the engineering & MLOps aspects.\nThus, by the end of this series, you will know how to build & deploy a real ML system, not some isolated code in Notebooks.\nThere are 3 components you will learn to build during the course:\n- a real-time streaming pipeline\n- a fine-tuning pipeline\n- an inference pipeline\n.\nWe have already released the code and video lessons of the Hands-on LLM course.\nBut we are excited to announce an 8-lesson Medium series that will dive deep into the code and explain everything step-by-step.\nWe have already released the first lesson of the series \nThe LLMs kit: Build a production-ready real-time financial advisor system using streaming pipelines, RAG, and LLMOps: \n[URL]\n In Lesson 1, you will learn how to design a financial assistant using the 3-pipeline architecture (also known as the FTI architecture), powered by:\n- LLMs\n- vector DBs\n- a streaming engine\n- LLMOps\n.\n The rest of the articles will be released by the end of January 2024.\nFollow us on Medium's Decoding ML publication to get notified when we publish the other lessons: \n[URL]\nhashtag\n#\nmachinelearning\nhashtag\n#\nmlops\nhashtag\n#\ndatascience",

"platform": "linkedin",

"type": "posts"

},

{

"author_id": "2",

"cleaned_content": "RAG systems are far from perfect This free course teaches you how to improve your RAG system.\nI recently finished the Advanced Retrieval for AI with Chroma free course from\nDeepLearning.AI\nIf you are into RAG, I find it among the most valuable learning sources.\nThe course already assumes you know what RAG is.\nIts primary focus is to show you all the current issues of RAG and why it is far from perfect.\nAfterward, it shows you the latest SoTA techniques to improve your RAG system, such as:\n- query expansion\n- cross-encoder re-ranking\n- embedding adaptors\nI am not affiliated with\nDeepLearning.AI\n(I wouldn't mind though).\nThis is a great course you should take if you are into RAG systems.\nThe good news is that it is free and takes only 1 hour.\nCheck it out \n Advanced Retrieval for AI with Chroma:\n[URL]\nhashtag\n#\nmachinelearning\nhashtag\n#\nmlops\nhashtag\n#\ndatascience\n.\n Follow me for daily lessons about ML engineering and MLOps.[URL]",

"image": null,

"platform": "linkedin",

"type": "posts"

}

Step 2: Use the DataFormatter class

We will use the DataFormatter class to format these data points into a structured prompt for the LLM. Here is how the class prepares the content:

    data_type = "posts"

    USER_PROMPT = (
        f"I will give you batches of contents of {data_type}. Please generate me exactly 1 instruction for each of them. The {data_type} text "
        f"for which you have to generate the instructions is under Content number x lines. Please structure the answer in json format,"
        f"ready to be loaded by json.loads(), a list of objects only with fields called instruction and content. For the content field, copy the number of the content only!."
        f"Please do not add any extra characters and make sure it is a list with objects in valid json format!\n"
    )


    class DataFormatter:
        @classmethod
        def format_data(cls, data_points: list, is_example: bool, start_index: int) -> str:
            # Prefix each data point with its global index so the LLM can refer to it.
            text = ""
            for index, data_point in enumerate(data_points):
                if not is_example:
                    text += f"Content number {start_index + index}\n"
                text += str(data_point) + "\n"
            return text

        @classmethod
        def format_batch(cls, context_msg: str, data_points: list, start_index: int) -> str:
            delimiter_msg = context_msg
            delimiter_msg += cls.format_data(data_points, False, start_index)
            return delimiter_msg

        @classmethod
        def format_prompt(cls, inference_posts: list, start_index: int):
            # Build the full prompt: general instructions, expected count, then the batch.
            initial_prompt = USER_PROMPT
            initial_prompt += f"You must generate exactly a list of {len(inference_posts)} json objects, using the contents provided under CONTENTS FOR GENERATION\n"
            initial_prompt += cls.format_batch(
                "\nCONTENTS FOR GENERATION: \n", inference_posts, start_index
            )
            return initial_prompt

Output of the format_prompt function:

I will give you batches of contents of posts. Please generate me exactly 1 instruction for each of them. The posts text for which you have to generate the instructions is under Content number x lines. Please structure the answer in json format,ready to be loaded by json.loads(), a list of objects only with fields called instruction and content. For the content field, copy the number of the content only!.Please do not add any extra characters and make sure it is a list with objects in valid json format!

You must generate exactly a list of 1 json objects, using the contents provided under CONTENTS FOR GENERATION

CONTENTS FOR GENERATION:

Content number 0

Do you want to learn to build hands-on LLM systems using good LLMOps practices? A new Medium series is coming up for the Hands-on LLMs course

.

By finishing the Hands-On LLMs free course, you will learn how to use the 3-pipeline architecture & LLMOps good practices to design, build, and deploy a real-time financial advisor powered by LLMs & vector DBs.

We will primarily focus on the engineering & MLOps aspects.

Thus, by the end of this series, you will know how to build & deploy a real ML system, not some isolated code in Notebooks.

There are 3 components you will learn to build during the course:

- a real-time streaming pipeline

- a fine-tuning pipeline

- an inference pipeline

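Step 3: Automate fine-tuning data generation with the DatasetGenerator class

To automate the generation of fine-tuning data, we designed the DatasetGenerator class. It is meant to streamline the process from fetching the data to logging the training data to Comet ML.

The DatasetGenerator class is initialized with three components: a file handler that manages file I/O, an API communicator that interacts with the LLM, and a data formatter that prepares the data: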

    class DatasetGenerator:
        def __init__(self, file_handler, api_communicator, data_formatter):
            self.file_handler = file_handler
            self.api_communicator = api_communicator
            self.data_formatter = data_formatter

Generating the fine-tuning data

The generate_training_data method of the DatasetGenerator class handles the full lifecycle of data generation:

    def generate_training_data(self, collection_name: str, batch_size: int = 1):
        all_contents = self.fetch_all_cleaned_content(collection_name)
        response = []

        for i in range(0, len(all_contents), batch_size):
            batch = all_contents[i : i + batch_size]
            initial_prompt = self.data_formatter.format_prompt(batch, i)
            response += self.api_communicator.send_prompt(initial_prompt)

            # Replace the content number returned by the LLM with the original text.
            # The upper bound is clamped so a shorter final batch does not overrun the list.
            for j in range(i, min(i + batch_size, len(all_contents))):
                response[j]["content"] = all_contents[j]

        self.push_to_comet(response, collection_name)
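The loop above assumes that the LLM returns exactly one valid JSON object per content item. A more defensive variant might parse and check each reply before attaching the original text. The sketch below is an assumption-laden illustration, not the course implementation: build_pairs is a hypothetical name, send_prompt is assumed to return the raw JSON string from the LLM, and the prompt is reduced to the "Content number" lines.

```python
import json
from typing import Callable


def build_pairs(contents: list, send_prompt: Callable[[str], str], batch_size: int = 2) -> list:
    """Return instruction/content pairs, skipping batches with unusable replies."""
    pairs = []
    for start in range(0, len(contents), batch_size):
        batch = contents[start : start + batch_size]
        prompt = "\n".join(
            f"Content number {start + i}\n{text}" for i, text in enumerate(batch)
        )
        try:
            generated = json.loads(send_prompt(prompt))
        except json.JSONDecodeError:
            continue  # the reply was not valid JSON
        usable = (
            isinstance(generated, list)
            and len(generated) == len(batch)
            and all(isinstance(item, dict) and "instruction" in item for item in generated)
        )
        if not usable:
            continue  # the reply does not match the batch one-to-one
        # Pair each generated instruction with the original text, not the LLM's copy.
        for item, text in zip(generated, batch):
            pairs.append({"instruction": item["instruction"], "content": text})
    return pairs
```

Skipping a bad batch loses a few samples but keeps instructions aligned with the right content; retrying the batch would be another reasonable choice.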

Fetching the content

The fetch_all_cleaned_content method retrieves all relevant content from the given collection and prepares it for processing:

    def fetch_all_cleaned_content(self, collection_name: str) -> list:
        all_cleaned_contents = []

        scroll_response = client.scroll(collection_name=collection_name, limit=10000)
        points = scroll_response[0]

        for point in points:
            cleaned_content = point.payload["cleaned_content"]
            if cleaned_content:
                all_cleaned_contents.append(cleaned_content)

        return all_cleaned_contents

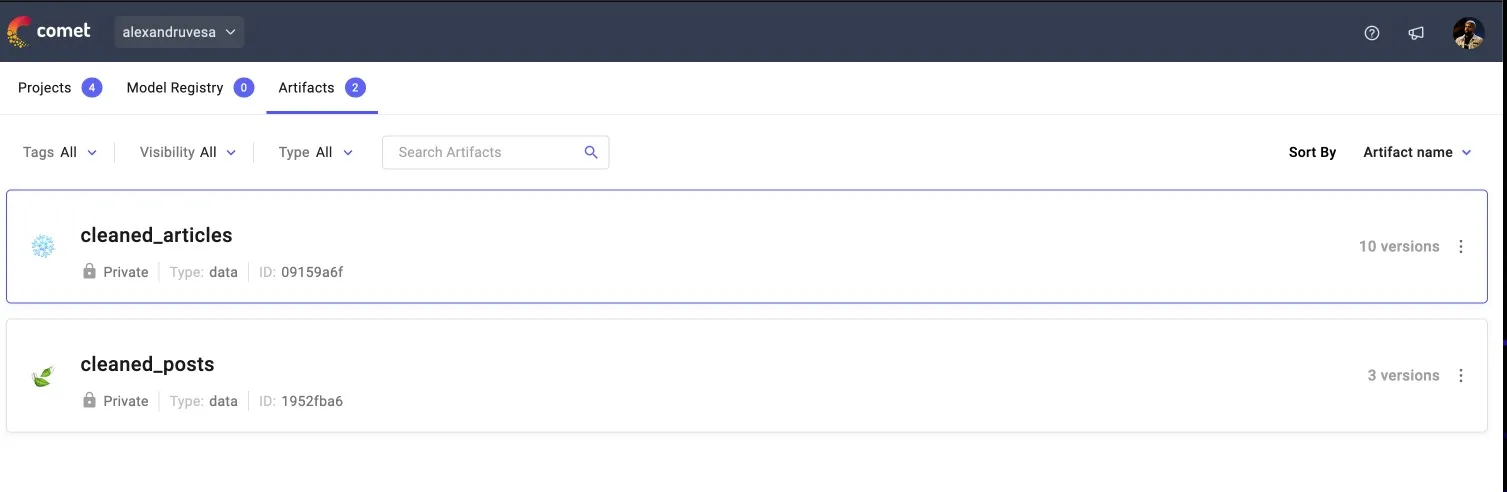

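CometML - Data versioning

We will focus on a key aspect of machine learning operations (MLOps): data versioning.

Specifically, we will look at how to achieve this with Comet ML, a platform that facilitates experiment management and reproducibility in machine learning projects.

CometML is a cloud-based platform that provides tools for tracking, comparing, explaining, and optimizing experiments and models in machine learning. It helps data scientists and teams manage and collaborate on machine learning experiments more effectively.

Why use CometML?

- Artifacts: Use artifact management to capture, version, and manage data snapshots and models, which helps maintain data integrity and trace experiment lineage.

- Experiment tracking: CometML automatically tracks your code, experiments, and results, letting you compare different runs and configurations visually.

- Model optimization: It provides tools for comparing models side by side, analyzing hyperparameters, and tracking model performance across various metrics.

- Collaboration and sharing: Share findings and models with colleagues or the ML community, strengthening team collaboration and knowledge transfer.

- Reproducibility: By logging every detail of the experiment setup, CometML helps make experiments reproducible, which makes debugging and iteration easier.

CometML variables

When integrating CometML into a project, you need to set several environment variables to manage authentication and configuration:

- COMET_API_KEY: Your unique API key, used to authenticate your interactions with the CometML API.

- COMET_PROJECT: The project name under which your experiments will be logged.

- COMET_WORKSPACE: The name of the workspace that organizes your projects and experiments.

Getting the CometML variables

To obtain and set the CometML variables your project needs, follow these steps:

1. Create an account or log in:

- Visit the CometML website and log in if you already have an account, or sign up if you are a new user.

2. Create a new project:

- After logging in, go to the dashboard. There, click "New Project" and enter the relevant details to create a new project.

3. Access the API key:

- After creating the project, you need your API key. Click your profile in the upper-right corner and go to the account settings. Select "API Keys" from the menu, where you will see an option to generate a key or copy an existing one.

4. Set the environment variables:

- Add the COMET_API_KEY you obtained to your environment variables, along with the COMET_PROJECT and COMET_WORKSPACE names you set up.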
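Before starting an experiment, it can help to fail fast if any of the three variables is missing. The following is a minimal sketch: the helper name load_comet_settings and the choice of RuntimeError are assumptions for illustration and are not part of Comet ML or the course code.

```python
import os

REQUIRED_COMET_VARS = ("COMET_API_KEY", "COMET_PROJECT", "COMET_WORKSPACE")


def load_comet_settings(env=None) -> dict:
    """Return the three Comet ML settings or raise if any is missing or empty."""
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED_COMET_VARS if not env.get(name)]
    if missing:
        raise RuntimeError("Missing Comet ML variables: " + ", ".join(missing))
    return {name: env[name] for name in REQUIRED_COMET_VARS}
```

Calling load_comet_settings() once at startup turns a confusing authentication failure later in the run into an immediate, readable error.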

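The importance of data versioning in MLOps

Data versioning means keeping a record of multiple versions of the datasets used to train machine learning models. This practice is essential for several reasons:

- Reproducibility: It ensures that experiments can be repeated with exactly the same data, which is essential for validating and comparing machine learning models.

- Model diagnostics and auditing: If a model's performance changes unexpectedly, data versioning lets the team revert to a previous state of the data to identify the problem.

- Collaboration and experimentation: Teams can experiment with different versions of the data to see how changes affect model performance without losing the original data setup.

- Compliance: In many industries, tracking data modifications and the training environment is required for regulatory compliance.

The provided push_to_comet function is a key part of this process.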

    import json
    import logging

    from comet_ml import Artifact, Experiment

    from settings import settings


    def push_to_comet(self, data: list, collection_name: str):
        try:
            logging.info(f"Starting to push data to Comet: {collection_name}")

            # Assuming the settings module has been properly configured with the required attributes
            experiment = Experiment(
                api_key=settings.COMET_API_KEY,
                project_name=settings.COMET_PROJECT,
                workspace=settings.COMET_WORKSPACE,
            )

            # Write the dataset to a local JSON file named after the collection.
            file_name = f"{collection_name}.json"
            logging.info(f"Writing data to file: {file_name}")
            with open(file_name, "w") as f:
                json.dump(data, f)
            logging.info("Data written to file successfully")

            # Wrap the file in an artifact and attach it to the experiment.
            artifact = Artifact(collection_name)
            artifact.add(file_name)
            logging.info(f"Artifact created and file added: {file_name}")

            experiment.log_artifact(artifact)
            experiment.end()
            logging.info("Data pushed to Comet successfully and experiment ended")
        except Exception as e:
            logging.error(f"Failed to push data to Comet: {e}", exc_info=True)

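Breaking down the function

- Experiment initialization: An experiment is created from the project settings. This ties all actions, such as logging artifacts, to a specific experiment run.

- Saving the data: The data is saved locally as a JSON file. This format is versatile and well suited to data exchange.

- Creating and logging the artifact: An artifact is a versioned object in Comet ML that can be associated with an experiment. Logging artifacts keeps a record of every data version used throughout the project lifecycle.

After you run the script that calls push_to_comet, Comet ML is updated with new data artifacts, each representing a different dataset version. This is a key step in making sure that all data versions are recorded and tracked in an MLOps setting.

Each version is timestamped and stored with a unique ID, so you can track changes over time or revert to a previous version if necessary.

The final version of the dataset (for example, cleaned_articles.json) will be ready for the fine-tuning task.

This JSON file contains pairs of instructions and content.

The instructions are generated by an LLM and guide the model to perform a specific task or understand a specific context, while the content is the data crawled and processed through the pipelines.

This format not only ensures that every dataset version is tracked, but also helps improve model performance through targeted fine-tuning.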
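Since the fine-tuning step depends on every record having both fields, a quick structural check before training can catch malformed files early. This is a minimal sketch with a hypothetical helper name, validate_dataset; it assumes only the layout described above, a JSON list of objects with string fields called instruction and content.

```python
import json


def validate_dataset(raw_json: str) -> list:
    """Parse the dataset and check that every record has both text fields."""
    records = json.loads(raw_json)
    if not isinstance(records, list):
        raise ValueError("expected a JSON list of records")
    for index, record in enumerate(records):
        for field in ("instruction", "content"):
            value = record.get(field) if isinstance(record, dict) else None
            # Reject missing, non-string, or whitespace-only values.
            if not isinstance(value, str) or not value.strip():
                raise ValueError(f"record {index} has no usable '{field}' field")
    return records
```

The function returns the parsed records unchanged, so it can sit directly between loading the artifact file and building the training set.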

Here is an example of what the final version of cleaned_articles.json might look like, ready for the fine-tuning task:

[

{

"instruction": "Design and build a production-ready feature pipeline using Bytewax as a stream engine, RabbitMQ queue, and Qdrant vector DB. Include steps for processing raw data, data snapshots, Pydantic models, and loading cleaned features to the vector DB. Deploy the pipeline to AWS and integrate with previous components.",

"content": "SOTA Python Streaming Pipelines for Fine-tuning LLMs and RAG \\u2014 in Real-Time!Use a Python streaming engine to populate a feature store from 4+ data sourcesStreaming Pipelines for LLMs and RAG | Decoding MLOpen in appSign upSign inWriteSign upSign inMastodonLLM TWIN COURSE: BUILDING YOUR PRODUCTION-READY AI REPLICASOTA Python Streaming Pipelines for Fine-tuning LLMs and RAG in Real-Time!Use a Python streaming engine to populate a feature store from 4+ data sourcesPaul IusztinFollowPublished inDecoding ML18 min read6 days ago698ListenShare the 4th out of 11 lessons of the LLM Twin free courseWhat is your LLM Twin? It is an AI character that writes like yourself by incorporating your style, personality and voice into an LLM.Image by DALL-EWhy is this course different?By finishing the LLM Twin: Building Your Production-Ready AI Replica free course, you will learn how to design, train, and deploy a production-ready LLM twin of yourself powered by LLMs, vector DBs, and LLMOps good practices.Why should you care? \\U0001faf5 No more isolated scripts or Notebooks! Learn production ML by building and deploying an end-to-end production-grade LLM system.What will you learn to build by the end of this course?You will learn how to architect and build a real-world LLM system from start to finish from data collection to deployment.You will also learn to leverage MLOps best practices, such as experiment trackers, model registries, prompt monitoring, and versioning.The end goal? Build and deploy your own LLM twin.The architecture of the LLM twin is split into 4 Python microservices:the data collection pipeline: crawl your digital data from various social media platforms. Clean, normalize and load the data to a NoSQL DB through a series of ETL pipelines. Send database changes to a queue using the CDC pattern. (deployed on AWS)the feature pipeline: consume messages from a queue through a Bytewax streaming pipeline. 
Every message will be cleaned, chunked, embedded (using Superlinked), and loaded into a Qdrant vector DB in real-time. (deployed on AWS)the training pipeline: create a custom dataset based on your digital data. Fine-tune an LLM using QLoRA. Use Comet MLs experiment tracker to monitor the experiments. Evaluate and save the best model to Comets model registry. (deployed on Qwak)the inference pipeline: load and quantize the fine-tuned LLM from Comets model registry. Deploy it as a REST API. Enhance the prompts using RAG. Generate content using your LLM twin. Monitor the LLM using Comets prompt monitoring dashboard. (deployed on Qwak)LLM twin system architecture [Image by the Author]Along the 4 microservices, you will learn to integrate 3 serverless tools:Comet ML as your ML Platform;Qdrant as your vector DB;Qwak as your ML infrastructure;Who is this for?Audience: MLE, DE, DS, or SWE who want to learn to engineer production-ready LLM systems using LLMOps good principles.Level: intermediatePrerequisites: basic knowledge of Python, ML, and the cloudHow will you learn?The course contains 11 hands-on written lessons and the open-source code you can access on GitHub.You can read everything at your own pace. To get the most out of this course, we encourage you to clone and run the repository while you cover the lessons.Costs?The articles and code are completely free. They will always remain free.But if you plan to run the code while reading it, you have to know that we use several cloud tools that might generate additional costs.The cloud computing platforms (AWS, Qwak) have a pay-as-you-go pricing plan. Qwak offers a few hours of free computing. 
Thus, we did our best to keep costs to a minimum.For the other serverless tools (Qdrant, Comet), we will stick to their freemium version, which is free of charge.Meet your teachers!The course is created under the Decoding ML umbrella by:Paul Iusztin | Senior ML & MLOps EngineerAlex Vesa | Senior AI EngineerAlex Razvant | Senior ML & MLOps EngineerLessonsThe course is split into 11 lessons. Every Medium article will be its own lesson.An End-to-End Framework for Production-Ready LLM Systems by Building Your LLM TwinThe Importance of Data Pipelines in the Era of Generative AIChange Data Capture: Enabling Event-Driven ArchitecturesSOTA Python Streaming Pipelines for Fine-tuning LLMs and RAG in Real-Time!Vector DB retrieval clients [Module 2] WIPTraining data preparation [Module 3] WIPFine-tuning LLM [Module 3] WIPLLM evaluation [Module 4] WIPQuantization [Module 5] WIPBuild the digital twin inference pipeline [Module 6] WIPDeploy the digital twin as a REST API [Module 6] WIP Check out the code on GitHub [1] and support us with a Lets start with Lesson 4 Lesson 4: Python Streaming Pipelines for Fine-tuning LLMs and RAG in Real-Time!In the 4th lesson, we will focus on the feature pipeline.The feature pipeline is the first pipeline presented in the 3 pipeline architecture: feature, training and inference pipelines.A feature pipeline is responsible for taking raw data as input, processing it into features, and storing it in a feature store, from which the training & inference pipelines will use it.The component is completely isolated from the training and inference code. 
All the communication is done through the feature store.To avoid repeating myself, if you are unfamiliar with the 3 pipeline architecture, check out Lesson 1 for a refresher.By the end of this article, you will learn to design and build a production-ready feature pipeline that:uses Bytewax as a stream engine to process data in real-time;ingests data from a RabbitMQ queue;uses SWE practices to process multiple data types: posts, articles, code;cleans, chunks, and embeds data for LLM fine-tuning and RAG;loads the features to a Qdrant vector DB.Note: In our use case, the feature pipeline is also a streaming pipeline, as we use a Bytewax streaming engine. Thus, we will use these words interchangeably.We will wrap up Lesson 4 by showing you how to deploy the feature pipeline to AWS and integrate it with the components from previous lessons: data collection pipeline, MongoDB, and CDC.In the 5th lesson, we will go through the vector DB retrieval client, where we will teach you how to query the vector DB and improve the accuracy of the results using advanced retrieval techniques.Excited? Lets get started!The architecture of the feature/streaming pipeline.Table of ContentsWhy are we doing this?System design of the feature pipelineThe Bytewax streaming flowPydantic data modelsLoad data to QdrantThe dispatcher layerPreprocessing steps: Clean, chunk, embedThe AWS infrastructureRun the code locallyDeploy the code to AWS & Run it from the cloudConclusion Check out the code on GitHub [1] and support us with a 1. 
Why are we doing this? A quick reminder from previous lessons: To give you some context, in Lesson 2, we crawl data from LinkedIn, Medium, and GitHub, normalize it, and load it to MongoDB. In Lesson 3, we use CDC to listen to changes to the MongoDB database and emit events to a RabbitMQ queue based on any CRUD operation done on MongoDB. And here we are in Lesson 4, where we are building the feature pipeline that listens 24/7 to the RabbitMQ queue for new events, processes them, and loads them to a Qdrant vector DB. The problem we are solving: In our LLM Twin use case, the feature pipeline constantly syncs the MongoDB warehouse with the Qdrant vector DB while processing the raw data into features. Important: In our use case, the Qdrant vector DB will be our feature store. Why we are solving it: The feature store will be the central point of access for all the features used within the training and inference pipelines. For consistency and simplicity, we will refer to different formats of our text data as features. The training pipeline will use the feature store to create fine-tuning datasets for your LLM twin. The inference pipeline will use the feature store for RAG. For reliable results (especially for RAG), the data from the vector DB must always be in sync with the data from the data warehouse. The question is, what is the best way to sync these 2? Other potential solutions: The most common solution is probably to use a batch pipeline that constantly polls from the warehouse, computes a difference between the 2 databases, and updates the target database. The issue with this technique is that computing the difference between the 2 databases is extremely slow and costly. Another solution is to use a push technique using a webhook. Thus, on any CRUD change in the warehouse, you also update the target DB. The biggest issue here is that if the webhook fails, you have to implement complex recovery logic. Lesson 3 on CDC covers more of this. 2.
System design of the feature pipeline: our solution. Our solution is based on CDC, a queue, a streaming engine, and a vector DB: CDC adds any change made to the MongoDB to the queue (read more in Lesson 3); the RabbitMQ queue stores all the events until they are processed; the Bytewax streaming engine cleans, chunks, and embeds the data (a streaming engine works naturally with a queue-based system); the data is uploaded to a Qdrant vector DB on the fly. Why is this powerful? Here are 4 core reasons: The data is processed in real-time. Out-of-the-box recovery system: if the streaming pipeline fails to process a message, it will be added back to the queue. Lightweight: no need for any diffs between databases or batching too many records. No I/O bottlenecks on the source database. It solves all our problems! (Figure: The architecture of the feature/streaming pipeline.) How is the data stored? We store 2 snapshots of our data in the feature store. Here is why: Remember that we said that the training and inference pipelines will access the features only from the feature store, which, in our case, is the Qdrant vector DB? Well, if we had stored only the chunked & embedded version of the data, that would have been useful only for RAG but not for fine-tuning. Thus, we make an additional snapshot of the cleaned data, which will be used by the training pipeline. Afterward, we pass it down the streaming flow for chunking & embedding. How do we process multiple data types? How do you process multiple types of data in a single streaming pipeline without writing spaghetti code? Yes, that is for you, data scientists!
Joking... am I? We have 3 data types: posts, articles, and code. Each data type (and its state) will be modeled using Pydantic models. To process them, we will write a dispatcher layer, which will use a creational factory pattern [9] to instantiate a handler implemented for that specific data type (post, article, code) and operation (cleaning, chunking, embedding). The handler follows the strategy behavioral pattern [10]. Intuitively, you can see the combination of the factory and strategy patterns as follows: Initially, we know we want to clean the data, but as we don't know the data type, we can't know how to do so. What we can do is write the whole code around the cleaning code and abstract away the logic under a Handler() interface (aka the strategy). When we get a data point, the factory class creates the right cleaning handler based on its type. Ultimately, the handler is injected into the rest of the system and executed. By doing so, we can easily isolate the logic for a given data type & operation while leveraging polymorphism to avoid filling up the code with 1000x if-else statements. We will dig into the implementation in future sections. Streaming over batch: You may ask why we need a streaming engine instead of implementing a batch job that polls the messages at a given frequency. That is a valid question. The thing is that, nowadays, tools such as Bytewax make implementing streaming pipelines a lot more frictionless than using their JVM alternatives. The key aspect of choosing a streaming vs. a batch design is real-time synchronization between your source and destination DBs. In our particular case, we will process social media data, which changes fast and irregularly. Also, for our digital twin, it is important to do RAG on up-to-date data. We don't want to have any delay between what happens in the real world and what your LLM twin sees. That being said, choosing a streaming architecture seemed natural in our use case. 3.
The Bytewax streaming flow. The Bytewax flow is the central point of the streaming pipeline. It defines all the required steps, following the next simplified pattern: input -> processing -> output. As I come from the AI world, I like to see it as the graph of the streaming pipeline, where you use the input(), map(), and output() Bytewax functions to define your graph, which in the Bytewax world is called a flow. As you can see in the code snippet below, we ingest posts, articles, or code messages from a RabbitMQ queue. After that, we clean, chunk, and embed them. Ultimately, we load the cleaned and embedded data to a Qdrant vector DB, which in our LLM twin use case will represent the feature store of our system. To structure and validate the data, between each Bytewax step, we map and pass a different Pydantic model based on its current state: raw, cleaned, chunked, or embedded. (Bytewax flow: GitHub Code) We have a single streaming pipeline that processes everything. As we ingest multiple data types (posts, articles, or code snapshots), we have to process them differently. To do this the right way, we implemented a dispatcher layer that knows how to apply data-specific operations based on the type of message. More on this in the next sections. Why Bytewax? Bytewax is an open-source stream processing framework that: is built in Rust for performance; has Python bindings for leveraging Python's powerful ML ecosystem. So, for all the Python fanatics out there, no more JVM headaches for you. Jokes aside, here is why Bytewax is so powerful: the Bytewax local setup is plug-and-play; it can quickly be integrated into any Python project (you can go wild and even use it in Notebooks); it can easily be integrated with other Python packages (NumPy, PyTorch, HuggingFace, OpenCV, SkLearn, you name it); it has out-of-the-box connectors for Kafka and local files, or you can quickly implement your own. We used Bytewax to build the streaming pipeline for the LLM Twin course and loved it. To learn more about Bytewax, go and check
them out. They are open source, so no strings attached: Bytewax [2]. 4. Pydantic data models. Let's take a look at what our Pydantic models look like. First, we defined a set of base abstract models for using the same parent class across all our components. (Pydantic base model structure: GitHub Code) Afterward, we defined a hierarchy of Pydantic models for: all our data types: posts, articles, or code; all our states: raw, cleaned, chunked, and embedded. This is what the set of classes for the posts looks like: (Pydantic posts model structure: GitHub Code) We repeated the same process for the articles and code model hierarchy. Check out the other data classes on our GitHub. Why is keeping our data in Pydantic models so powerful? There are 4 main reasons: every field has an enforced type: you are ensured the data types are going to be correct; the fields are automatically validated based on their type: for example, if the field is a string and you pass an int, it will throw an error; the data structure is clear and verbose: no more clandestine dicts that you never know what is in them; you make your data the first-class citizen of your program. 5. Load data to Qdrant. The first step is to implement our custom Bytewax DynamicSink class. (Qdrant DynamicSink: GitHub Code) Next, for every type of operation we need (output cleaned or embedded data), we have to subclass the StatelessSinkPartition Bytewax class (they also provide a stateful option; more in their docs). An instance of the class will run on every partition defined within the Bytewax deployment. In the course, we are using a single partition per worker. But, by adding more partitions (and workers), you can quickly scale your Bytewax pipeline horizontally. (Qdrant worker partitions: GitHub Code) Note that we used Qdrant's Batch method to upload all the available points at once. By doing so, we reduce the latency on the network I/O side: more on that here [8]. The RabbitMQ streaming input follows a similar pattern. Check it out here. 6.
The dispatcher layer. Now that we have the Bytewax flow and all our data models, how do we map a raw data model to a cleaned data model? All our domain logic is modeled by a set of Handler() classes. For example, this is what the handler used to map a PostsRawModel to a PostCleanedModel looks like: (Handler hierarchy of classes: GitHub Code) Check out the other handlers on our GitHub: ChunkingDataHandler and EmbeddingDataHandler. In the next sections, we will explore the exact cleaning, chunking, and embedding logic. Now, to build our dispatcher, we need 2 last components: a factory class: instantiates the right handler based on the type of the event; a dispatcher class: the glue code that calls the factory class and handler. Here is what the cleaning dispatcher and factory look like: (The dispatcher and factory classes: GitHub Code) Check out the other dispatchers on our GitHub. By repeating the same logic, we will end up with the following set of dispatchers: RawDispatcher (no factory class required as the data is not processed); CleaningDispatcher (with a CleaningHandlerFactory class); ChunkingDispatcher (with a ChunkingHandlerFactory class); EmbeddingDispatcher (with an EmbeddingHandlerFactory class). 7. Preprocessing steps: Clean, chunk, embed. Here we will focus on the concrete logic used to clean, chunk, and embed a data point. Note that this logic is wrapped by our handler to be integrated into our dispatcher layer using the Strategy behavioral pattern [10]. We already described that in the previous section. Thus, we will directly jump into the actual logic here, which can be found in the utils module of our GitHub repository. Note: These steps are experimental. Thus, what we present here is just the first iteration of the system.
In a real-world scenario, you would experiment with different cleaning, chunking, or model versions to improve it on your data. Cleaning: This is the main utility function used to clean the text for our posts, articles, and code. For simplicity, we used the same logic for all the data types, but after more investigation, you would probably need to adapt it to your specific needs. For example, your posts might start containing some weird characters, and you don't want to run the unbold_text() or unitalic_text() functions on your code data point, as it is completely redundant. (Cleaning logic: GitHub Code) Most of the functions above are from the unstructured [3] Python package. It is a great tool for quickly finding utilities to clean text data. More examples of unstructured here [3]. One key thing to notice is that at the cleaning step, we just want to remove all the weird, non-interpretable characters from the text. Also, we want to remove redundant data, such as extra whitespace or URLs, as they do not provide much value. These steps are critical for our tokenizer to understand and efficiently transform our string input into numbers that will be fed into the transformer models. Note that when using bigger models (transformers) + modern tokenization techniques, you don't need to standardize your dataset too much. For example, it is redundant to apply lemmatization or stemming, as the tokenizer knows how to split your input into a commonly used sequence of characters efficiently, and the transformers can pick up the nuances of the words. What is important at the cleaning step is to throw out the noise. Chunking: We are using LangChain to chunk our text. We use a 2-step strategy using LangChain's RecursiveCharacterTextSplitter [4] and SentenceTransformersTokenTextSplitter [5].
As seen below: (Chunking logic: GitHub Code) Overlapping your chunks is a common pre-indexing RAG technique, which helps to cluster chunks from the same document semantically. Again, we are using the same chunking logic for all of our data types, but to get the most out of it, we would probably need to tweak the separators, chunk_size, and chunk_overlap parameters for our different use cases. But our dispatcher + handler architecture would easily allow us to configure the chunking step in future iterations. Embedding: The data preprocessing, aka the hard part, is done. Now we just have to call an embedding model to create our vectors. (Embedding logic: GitHub Code) We used the all-MiniLM-L6-v2 [6] from the sentence-transformers library to embed our articles and posts: a lightweight embedding model that can easily run in real-time on a 2 vCPU machine. As the code data points contain more complex relationships and specific jargon to embed, we used a more powerful embedding model: hkunlp/instructor-xl [7]. This embedding model is unique as it can be customized on the fly with instructions based on your particular data. This allows the embedding model to specialize on your data without fine-tuning, which is handy for embedding pieces of code. 8. The AWS infrastructure. In Lesson 2, we covered how to deploy the data collection pipeline that is triggered by a link to Medium, Substack, LinkedIn, or GitHub, crawls the given link, and saves the crawled information to a MongoDB. In Lesson 3, we explained how to deploy the CDC components that emit events to a RabbitMQ queue based on any CRUD operation done to MongoDB. What is left is to deploy the Bytewax streaming pipeline and Qdrant vector DB. We will use Qdrant's self-hosted option, which is easy to set up and scale. To test things out, they offer a Free Tier plan for up to a 1GB cluster, which is more than enough for our course.
We explained in our GitHub repository how to configure Qdrant. (Figure: AWS infrastructure of the feature/streaming pipeline.) The last piece of the puzzle is the Bytewax streaming pipeline. As we don't require a GPU and the streaming pipeline needs to run 24/7, we will deploy it to AWS Fargate, a cost-effective serverless solution from AWS. As a serverless solution, Fargate allows us to deploy our code quickly and scale it fast in case of high traffic. How do we deploy the streaming pipeline code to Fargate? Using GitHub Actions, we wrote a CD pipeline that builds a Docker image on every new commit made on the main branch. Afterward, the Docker image is pushed to AWS ECR. Ultimately, Fargate pulls the latest version of the Docker image. This is a common CD pipeline to deploy your code to AWS services. Why not use Lambda functions, as we did for the data pipeline? An AWS Lambda function executes a function once and then closes down. This worked perfectly for the crawling logic, but it won't work for our streaming pipeline, which has to run 24/7. 9. Run the code locally. To quickly test things out, we wrote a docker-compose.yaml file to spin up the MongoDB, RabbitMQ queue, and Qdrant vector DB. You can spin up the Docker containers using our Makefile by running 'make local-start-infra'. To fully test the Bytewax streaming pipeline, you have to start the CDC component by running 'make local-start-cdc'. Ultimately, you start the streaming pipeline with 'make local-bytewax'. To simulate the data collection pipeline, mock it with 'make local-insert-data-mongo'. The README of our GitHub repository provides more details on how to run and set up everything. 10.
Deploy the code to AWS & Run it from the cloud. This article is already too long, so I won't go into the details of how to deploy the AWS infrastructure described above and test it out here. But to give you some insights, we have used Pulumi as our infrastructure as code (IaC) tool, which will allow you to spin it up quickly with a few commands. Also, I won't leave you hanging on this one. We made a promise, and we prepared step-by-step instructions in the README of our GitHub repository on how to use Pulumi to spin up the infrastructure and test it out. Conclusion: Now you know how to write streaming pipelines like a PRO! In Lesson 4, you learned how to: design a feature pipeline using the 3-pipeline architecture; write a streaming pipeline using Bytewax as a streaming engine; use a dispatcher layer to write a modular and flexible application to process multiple types of data (posts, articles, code); load the cleaned and embedded data to Qdrant; deploy the streaming pipeline to AWS. This is only the ingestion part used for fine-tuning LLMs and RAG. In Lesson 5, you will learn how to write a retrieval client for the 3 data types using good SWE practices and improve the retrieval accuracy using advanced retrieval & post-retrieval techniques. See you there! Check out the code on GitHub [1]. Enjoyed This Article? Join the Decoding ML Newsletter for battle-tested content on designing, coding, and deploying production-grade ML & MLOps systems. Every week. For FREE.
References. Literature: [1] Your LLM Twin Course GitHub Repository (2024), Decoding ML GitHub Organization; [2] Bytewax, Bytewax Landing Page; [3] Unstructured Cleaning Examples, Unstructured Documentation; [4] Recursively split by character, LangChain's Documentation; [5] Split by tokens, LangChain's Documentation; [6] sentence-transformers/all-MiniLM-L6-v2, HuggingFace; [7] hkunlp/instructor-xl, HuggingFace; [8] Qdrant, Qdrant Documentation; [9] Abstract Factory Pattern, Refactoring Guru; [10] Strategy Pattern, Refactoring Guru. Images: If not otherwise stated, all images are created by the author. Written by Paul Iusztin, Editor for Decoding ML, Senior ML Engineer.
"

},

{

"instruction": "Focus on creating battle-tested content on building production-grade ML systems using good SWE and MLOps practices.",

"content": "About Decoding MLThe hub for continuous learning on ML system design, ML engineering, MLOps, LLMs and computer vision.About Decoding ML. The hub for continuous learning on ML | by Paul Iusztin | Decoding ML | MediumOpen in appSign upSign inWriteSign upSign inMastodonAbout Decoding MLThe hub for continuous learning on ML system design, ML engineering, MLOps, LLMs and computer vision.Paul IusztinFollowPublished inDecoding ML2 min readFeb 19, 202491ListenShareDecoding ML is a publication that creates battle-tested content on building production-grade ML systems leveraging good SWE and MLOps practices.Our motto is More engineering, less F1 scores.Following Decoding ML, you will learn about the entire lifecycle of an ML system, from system design to deploying and monitoring.Decoding ML is the hub for continuous learning on:ML system designML engineeringMLOpsLarge language modelsComputer visionWe are all about end-to-end ML use cases that can directly be applied in the real world no stories just hands-on content.The minds behind Decoding ML Alex Vesa (left), Paul Iusztin (middle) and Alexandru Razvant (right)The minds behind Decoding ML are Vesa Alexandru, Paul Iusztin and Razvant Alexandru.Our passion for constantly learning and engineering production-ready ML systems inevitably turned into Decoding ML.With our 10+ years of hands-on experience in the industry in:Data ScienceDeep LearningComputer VisionGenerative AIML InfrastructureMLOpsSoftware Engineeringwe decided it is about time to share it with the world!Why follow?Join Decoding ML for battle-tested content on designing, coding, and deploying production-grade ML & MLOps systems. Every week. For FREE.No more bedtime stories in Jupyter Notebooks. DML is all about hands-on advice from our 10+ years of experience in AI.We are also on: Newsletter GitHubSign up to discover human stories that deepen your understanding of the world.FreeDistraction-free reading. 
"

},

{

"instruction": "Design and build a production-ready feature pipeline using Bytewax as a stream engine to process data in real-time, ingest data from a RabbitMQ queue, implement SWE practices to process multiple data types, clean, chunk, and embed data for LLM fine-tuning and RAG, and load features to a Qdrant vector DB.",