Forecasting Time Series Data with Machine Learning, Generative AI, and Deep Learning

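Time series forecasting matters across industries, from finance and healthcare to marketing and logistics. The ability to predict future values from historical data can significantly improve decision-making and operational efficiency. Advances in machine learning, generative AI, and deep learning have made more sophisticated approaches to the forecasting problem available. This article surveys methods and models that can be used to forecast time series data.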

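Understanding Time Series Data

Time series data is a sequence of data points collected or recorded at specific time intervals. Examples include stock prices, weather data, sales figures, and sensor readings. The goal of time series forecasting is to use past observations to predict future values, which can be challenging because of the complexity of the data and the patterns it contains, such as trend, seasonality, and noise.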
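
Before reaching for any of the models below, it often helps to have a simple baseline to compare against. The following is a minimal sketch in plain Python, assuming a made-up series with a period of 4; the function names are illustrative and not taken from any library. It splits the series chronologically and scores a naive and a seasonal naive forecast.

```python
def naive_forecast(history, steps):
    """Repeat the last observed value for every future step."""
    return [history[-1]] * steps


def seasonal_naive_forecast(history, steps, season_length):
    """Repeat the value observed one season earlier."""
    start = len(history) - season_length
    return [history[start + (i % season_length)] for i in range(steps)]


def mean_absolute_error(actual, predicted):
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


# Illustrative series with a period of 4 (made-up numbers)
series = [10, 20, 30, 20, 11, 21, 31, 21, 12, 22, 32, 22]

# Chronological split: the test set always comes after the training set
train, test = series[:8], series[8:]

naive = naive_forecast(train, len(test))
seasonal = seasonal_naive_forecast(train, len(test), season_length=4)

print("naive MAE:", mean_absolute_error(test, naive))
print("seasonal naive MAE:", mean_absolute_error(test, seasonal))
```

A model that cannot beat the seasonal naive baseline on held-out data is probably not worth its added complexity.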

1. Machine Learning Approaches

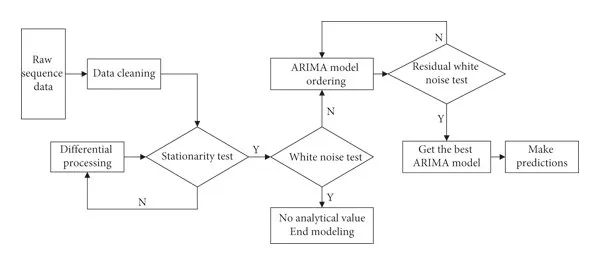

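1.1 ARIMA (AutoRegressive Integrated Moving Average)

- ARIMA is a classical statistical method for time series forecasting. It combines an autoregressive (AR) model, differencing (to make the series stationary), and a moving average (MA) model.

Example usage: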

import pandas as pd

from statsmodels.tsa.arima.model import ARIMA

# Load your time series data

time_series_data = pd.read_csv('time_series_data.csv')

time_series_data['Date'] = pd.to_datetime(time_series_data['Date'])

time_series_data.set_index('Date', inplace=True)

# Fit ARIMA model

model = ARIMA(time_series_data['Value'], order=(5, 1, 0)) # (p,d,q)

model_fit = model.fit()

# Make predictions

predictions = model_fit.forecast(steps=10)

print(predictions)
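
The "integrated" part of ARIMA (the `d` in `order=(p, d, q)`) refers to differencing. As a rough illustration, the sketch below differences a made-up series in plain Python and then rebuilds level forecasts from hypothetical forecasts of the differences. The helper names are assumptions for this example; statsmodels performs these steps internally.

```python
def difference(series, lag=1):
    """Return series[t] - series[t - lag] for every valid t."""
    return [series[i] - series[i - lag] for i in range(lag, len(series))]


def undifference(last_values, diffs, lag=1):
    """Rebuild levels from differences, given the last `lag` observed levels."""
    history = list(last_values)
    levels = []
    for d in diffs:
        value = history[-lag] + d
        levels.append(value)
        history.append(value)
    return levels


series = [3, 5, 8, 12, 17]          # made-up data with an accelerating trend
first_diff = difference(series)      # removes the level
second_diff = difference(first_diff)  # removes the linear growth in the differences

# Suppose a model forecasts the next two first differences as 6 and 7 (hypothetical)
forecast_levels = undifference(series[-1:], [6, 7])

print(first_diff, second_diff, forecast_levels)
```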

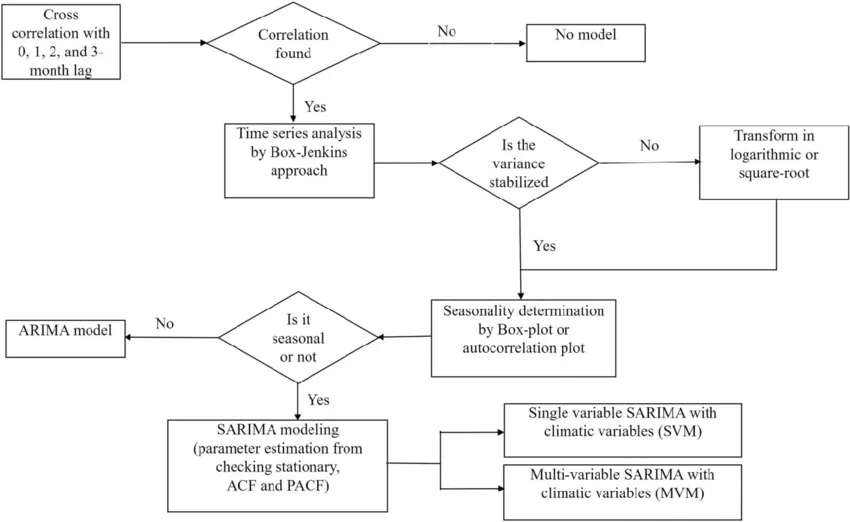

1.2 SARIMA (Seasonal ARIMA)

- SARIMA extends ARIMA by accounting for seasonal effects. It suits data with seasonal patterns, such as monthly sales figures.

Example usage:

import pandas as pd

import numpy as np

from statsmodels.tsa.statespace.sarimax import SARIMAX

# Load your time series data

time_series_data = pd.read_csv('time_series_data.csv')

time_series_data['Date'] = pd.to_datetime(time_series_data['Date'])

time_series_data.set_index('Date', inplace=True)

# Fit SARIMA model

model = SARIMAX(time_series_data['Value'], order=(1, 1, 1), seasonal_order=(1, 1, 1, 12)) # (p,d,q) (P,D,Q,s)

model_fit = model.fit(disp=False)

# Make predictions

predictions = model_fit.forecast(steps=10)

print(predictions)
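
Whether a seasonal model is appropriate, and which period `s` to use in `seasonal_order`, can be checked informally with the sample autocorrelation. The sketch below is a plain-Python illustration on made-up data with a period of 4; a strong positive autocorrelation at lag `s` hints at seasonality with that period.

```python
def autocorrelation(series, lag):
    """Sample autocorrelation of the series at the given lag."""
    n = len(series)
    mean = sum(series) / n
    deviations = [x - mean for x in series]
    denominator = sum(d * d for d in deviations)
    numerator = sum(deviations[t] * deviations[t + lag] for t in range(n - lag))
    return numerator / denominator


# Made-up series that repeats every 4 observations
series = [1, 2, 3, 2] * 6

for lag in range(1, 6):
    print(lag, round(autocorrelation(series, lag), 3))
```

For real data, statsmodels also provides autocorrelation plots that serve the same purpose.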

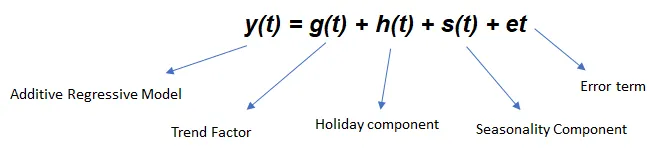

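1.3 Prophet

- Prophet, developed by Facebook, is a forecasting tool for time series data. It handles missing data and outliers and provides uncertainty intervals for its forecasts.

Example usage: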

# The package was renamed from fbprophet to prophet as of version 1.0
from prophet import Prophet

import pandas as pd

# Load your time series data

time_series_data = pd.read_csv('time_series_data.csv')

time_series_data['Date'] = pd.to_datetime(time_series_data['Date'])

time_series_data.rename(columns={'Date': 'ds', 'Value': 'y'}, inplace=True)

# Fit Prophet model

model = Prophet()

model.fit(time_series_data)

# Make future dataframe and predictions

future = model.make_future_dataframe(periods=10)

forecast = model.predict(future)

print(forecast[['ds', 'yhat', 'yhat_lower', 'yhat_upper']])
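
Prophet models a series as a sum of components, with seasonality represented by Fourier terms. The sketch below shows that additive idea in plain Python using a linear trend plus one weekly sine term. The coefficients are hypothetical values chosen for illustration, not something Prophet would necessarily fit, and the function names are not part of the Prophet API.

```python
import math


def fourier_features(t, period, order):
    """Return [sin(2*pi*k*t/period), cos(2*pi*k*t/period)] for k = 1..order."""
    features = []
    for k in range(1, order + 1):
        angle = 2 * math.pi * k * t / period
        features.extend([math.sin(angle), math.cos(angle)])
    return features


def additive_forecast(t, slope, intercept, seasonal_weights, period):
    """Trend plus seasonality, the core of an additive forecasting model."""
    trend = intercept + slope * t
    order = len(seasonal_weights) // 2
    features = fourier_features(t, period, order)
    seasonality = sum(w * f for w, f in zip(seasonal_weights, features))
    return trend + seasonality


# Hypothetical coefficients: weekly seasonality using only the first sine term
weights = [2.0, 0.0]
values = [
    additive_forecast(t, slope=0.5, intercept=10.0, seasonal_weights=weights, period=7)
    for t in range(14)
]
print([round(v, 2) for v in values])
```

Because the seasonal component repeats every 7 steps, values one week apart differ only by the trend.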

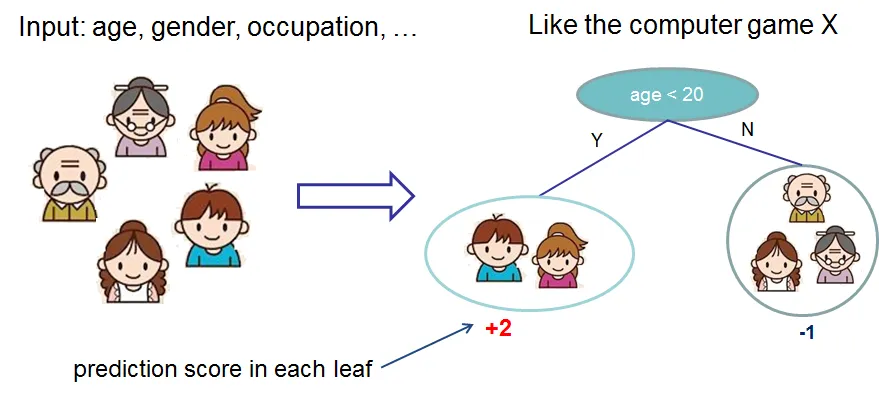

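1.4 XGBoost

- XGBoost is a gradient boosting framework. It can be used for time series forecasting by recasting the problem as a supervised learning task in which previous time steps serve as features.

Example usage: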

import pandas as pd

import numpy as np

from xgboost import XGBRegressor

from sklearn.model_selection import train_test_split

from sklearn.metrics import mean_squared_error

# Load your time series data

time_series_data = pd.read_csv('time_series_data.csv')

time_series_data['Date'] = pd.to_datetime(time_series_data['Date'])

time_series_data.set_index('Date', inplace=True)

# Prepare data for supervised learning

def create_lag_features(data, lag=1):
    df = data.copy()
    for i in range(1, lag + 1):
        df[f'lag_{i}'] = df['Value'].shift(i)
    return df.dropna()

lag = 5

data_with_lags = create_lag_features(time_series_data, lag=lag)

X = data_with_lags.drop('Value', axis=1)

y = data_with_lags['Value']

# Split the data into training and testing sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, shuffle=False)

# Fit XGBoost model

model = XGBRegressor(objective='reg:squarederror', n_estimators=1000)

model.fit(X_train, y_train)

# Make predictions

y_pred = model.predict(X_test)

mse = mean_squared_error(y_test, y_pred)

print(f'Mean Squared Error: {mse}')
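
A single chronological split gives one error estimate. A common refinement for lag-feature models is walk-forward evaluation, where the training window grows and each test fold lies strictly after its training data. The following is a minimal plain-Python sketch; the function name and parameters are assumptions for this example (scikit-learn's `TimeSeriesSplit` offers a comparable utility).

```python
def expanding_window_splits(n_samples, n_splits, test_size):
    """Return (train_indices, test_indices) pairs in which training data precedes test data."""
    splits = []
    for k in range(n_splits):
        test_end = n_samples - (n_splits - 1 - k) * test_size
        test_start = test_end - test_size
        if test_start <= 0:
            raise ValueError("not enough samples for the requested splits")
        train_indices = list(range(0, test_start))
        test_indices = list(range(test_start, test_end))
        splits.append((train_indices, test_indices))
    return splits


splits = expanding_window_splits(n_samples=10, n_splits=3, test_size=2)
for train_idx, test_idx in splits:
    print("train up to index", train_idx[-1], "-> test", test_idx)
```

Each fold would then be used to fit the model on `train_idx` rows and score it on `test_idx` rows, averaging the errors across folds.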

2. Generative AI Approaches

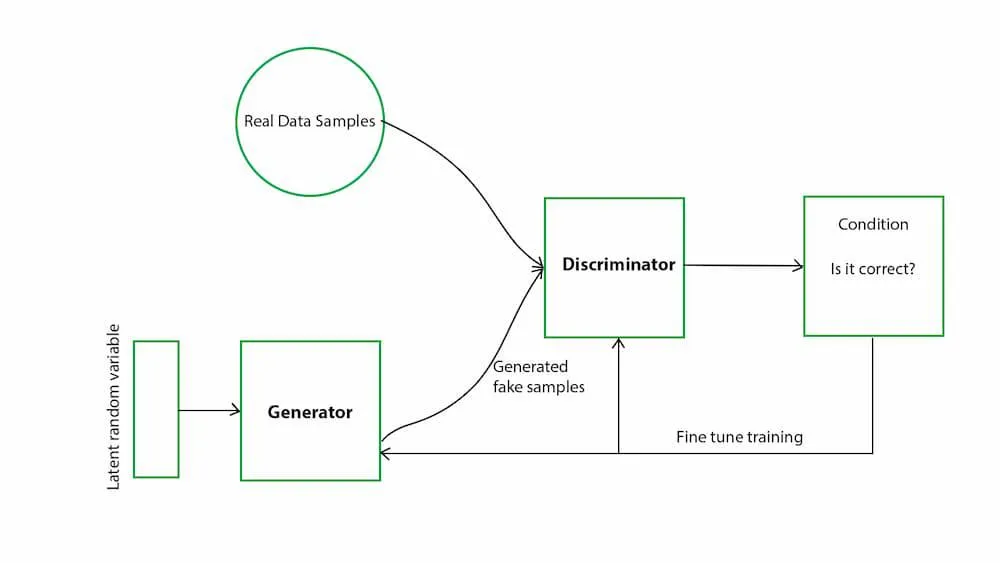

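2.1 GANs (Generative Adversarial Networks)

- A GAN consists of a generator and a discriminator. For time series forecasting, GANs can generate plausible future sequences by learning the underlying data distribution.

Example usage: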

import numpy as np
import pandas as pd
from sklearn.preprocessing import MinMaxScaler
from tensorflow.keras.models import Sequential, Model
from tensorflow.keras.layers import Dense, LSTM, LeakyReLU, Reshape, Input
from tensorflow.keras.optimizers import Adam

# Load your time series data

time_series_data = pd.read_csv('time_series_data.csv')

time_series_data['Date'] = pd.to_datetime(time_series_data['Date'])

time_series_data.set_index('Date', inplace=True)

# Prepare data for GAN

def create_dataset(dataset, time_step=1):
    # Slide a window of length time_step over the series; the value that follows is the target
    X, Y = [], []
    for i in range(len(dataset) - time_step):
        X.append(dataset[i:(i + time_step), 0])
        Y.append(dataset[i + time_step, 0])
    return np.array(X), np.array(Y)

time_step = 10

scaler = MinMaxScaler(feature_range=(0, 1))

scaled_data = scaler.fit_transform(time_series_data['Value'].values.reshape(-1, 1))

X_train, y_train = create_dataset(scaled_data, time_step)

X_train = X_train.reshape(X_train.shape[0], X_train.shape[1], 1)

# GAN components

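def build_generator():
    # Maps a noise vector to a synthetic window of length time_step
    model = Sequential()
    model.add(Dense(100, input_dim=time_step))
    model.add(LeakyReLU(alpha=0.2))
    # sigmoid keeps outputs in [0, 1], matching the MinMaxScaler range of the real data
    model.add(Dense(time_step, activation='sigmoid'))
    model.add(Reshape((time_step, 1)))
    return model

def build_discriminator():
    # Scores a window as real (1) or generated (0)
    model = Sequential()
    model.add(LSTM(50, input_shape=(time_step, 1)))
    model.add(Dense(1, activation='sigmoid'))
    return model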

# Build and compile the discriminator

discriminator = build_discriminator()

discriminator.compile(loss='binary_crossentropy', optimizer=Adam(0.0002, 0.5), metrics=['accuracy'])

# Build the generator

generator = build_generator()

# The generator takes noise as input and generates data

z = Input(shape=(time_step,))

generated_data = generator(z)

# For the combined model, we will only train the generator

discriminator.trainable = False

# The discriminator takes generated data as input and determines validity

validity = discriminator(generated_data)

# The combined model (stacked generator and discriminator)

combined = Model(z, validity)

combined.compile(loss='binary_crossentropy', optimizer=Adam(0.0002, 0.5))

# Training the GAN

epochs = 10000

batch_size = 32

valid = np.ones((batch_size, 1))

fake = np.zeros((batch_size, 1))

for epoch in range(epochs):
    # ---------------------
    #  Train Discriminator
    # ---------------------
    # Select a random batch of real data
    idx = np.random.randint(0, X_train.shape[0], batch_size)
    real_data = X_train[idx]

    # Generate a batch of fake data
    noise = np.random.normal(0, 1, (batch_size, time_step))
    gen_data = generator.predict(noise)

    # Train the discriminator
    d_loss_real = discriminator.train_on_batch(real_data, valid)
    d_loss_fake = discriminator.train_on_batch(gen_data, fake)
    d_loss = 0.5 * np.add(d_loss_real, d_loss_fake)

    # ---------------------
    #  Train Generator
    # ---------------------
    noise = np.random.normal(0, 1, (batch_size, time_step))

    # Train the generator (to have the discriminator label samples as valid)
    g_loss = combined.train_on_batch(noise, valid)

    # Print the progress
    if epoch % 1000 == 0:
        print(f"{epoch} [D loss: {d_loss[0]} | D accuracy: {100*d_loss[1]}] [G loss: {g_loss}]")

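# Make predictions
# Note: the generator samples a synthetic window from noise alone;
# it is not conditioned on the most recent observations.
noise = np.random.normal(0, 1, (1, time_step))

generated_prediction = generator.predict(noise)

# inverse_transform expects a 2D array, so flatten (1, time_step, 1) to (time_step, 1)
generated_prediction = scaler.inverse_transform(generated_prediction.reshape(-1, 1))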

print(generated_prediction)
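
The adversarial objective behind the training loop can be illustrated without any deep learning framework. The sketch below computes binary cross-entropy for a discriminator and a generator in plain Python. The discriminator scores are made-up numbers for a hypothetical batch of two real and two generated windows.

```python
import math


def binary_cross_entropy(labels, probabilities, eps=1e-7):
    """Mean binary cross-entropy between 0/1 labels and predicted probabilities."""
    total = 0.0
    for y, p in zip(labels, probabilities):
        p = min(max(p, eps), 1 - eps)  # avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(labels)


# Hypothetical discriminator outputs (probability that a window is real)
scores_on_real = [0.9, 0.8]
scores_on_fake = [0.2, 0.1]

# The discriminator wants real -> 1 and fake -> 0
d_loss = 0.5 * (
    binary_cross_entropy([1, 1], scores_on_real)
    + binary_cross_entropy([0, 0], scores_on_fake)
)

# The generator wants the discriminator to label its fakes as real (1)
g_loss = binary_cross_entropy([1, 1], scores_on_fake)

print("discriminator loss:", round(d_loss, 4))
print("generator loss:", round(g_loss, 4))
```

Here the discriminator is winning, so its loss is low and the generator's loss is high; training alternates updates to push these in opposite directions.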

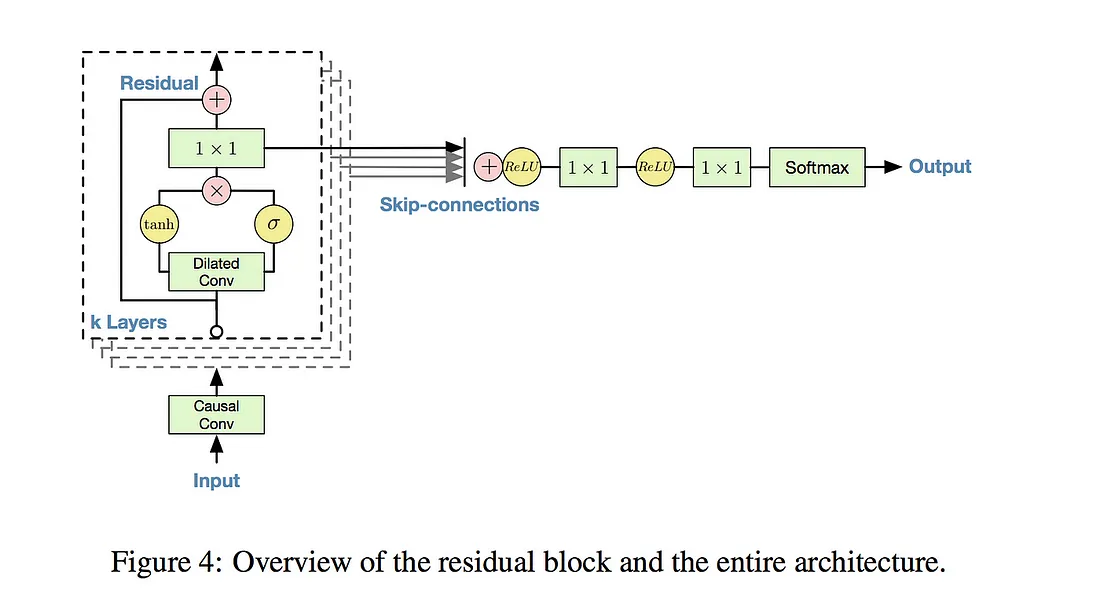

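2.2 WaveNet

- WaveNet, developed by DeepMind, is a deep generative model originally designed for audio generation. It has since been adapted to time series forecasting, particularly in the audio and speech domains.

Example usage: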

import numpy as np

import pandas as pd

import tensorflow as tf

from sklearn.preprocessing import MinMaxScaler

from tensorflow.keras.models import Model

from tensorflow.keras.layers import Input, Conv1D, Add, Activation, Multiply, Lambda, Dense, Flatten

from tensorflow.keras.optimizers import Adam

# Load your time series data

time_series_data = pd.read_csv('time_series_data.csv')

time_series_data['Date'] = pd.to_datetime(time_series_data['Date'])

time_series_data.set_index('Date', inplace=True)

# Prepare data for WaveNet

scaler = MinMaxScaler(feature_range=(0, 1))

scaled_data = scaler.fit_transform(time_series_data['Value'].values.reshape(-1, 1))

def create_dataset(dataset, time_step=1):
    # Slide a window of length time_step over the series; the value that follows is the target
    X, Y = [], []
    for i in range(len(dataset) - time_step):
        X.append(dataset[i:(i + time_step), 0])
        Y.append(dataset[i + time_step, 0])
    return np.array(X), np.array(Y)

time_step = 10

X, y = create_dataset(scaled_data, time_step)

X = X.reshape(X.shape[0], X.shape[1], 1)

# Define WaveNet model

def residual_block(x, dilation_rate):
    # Gated activation unit: a tanh "filter" multiplied by a sigmoid "gate"
    tanh_out = Conv1D(32, kernel_size=2, dilation_rate=dilation_rate, padding='causal', activation='tanh')(x)
    sigm_out = Conv1D(32, kernel_size=2, dilation_rate=dilation_rate, padding='causal', activation='sigmoid')(x)
    out = Multiply()([tanh_out, sigm_out])
    out = Conv1D(32, kernel_size=1, padding='same')(out)
    # Residual connection back to the block input
    out = Add()([out, x])
    return out

input_layer = Input(shape=(time_step, 1))

out = Conv1D(32, kernel_size=2, padding='causal', activation='tanh')(input_layer)

skip_connections = []

for i in range(10):
    # The dilation rate doubles at every block: 1, 2, 4, ..., 512
    out = residual_block(out, 2**i)
    skip_connections.append(out)

out = Add()(skip_connections)

out = Activation('relu')(out)

out = Conv1D(1, kernel_size=1, activation='relu')(out)

out = Flatten()(out)

out = Dense(1)(out)

model = Model(input_layer, out)

model.compile(optimizer=Adam(learning_rate=0.001), loss='mean_squared_error')

# Train the model

model.fit(X, y, epochs=10, batch_size=16)

# Make predictions

predictions = model.predict(X)

predictions = scaler.inverse_transform(predictions)

print(predictions)
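
How far back a stack of dilated causal convolutions can "see" follows from a simple formula: each layer adds `(kernel_size - 1) * dilation` past steps. The sketch below applies it in plain Python, ignoring the initial undilated convolution; the function name is an assumption for this example.

```python
def receptive_field(kernel_size, dilations):
    """Number of time steps visible to one output of a stack of dilated causal convolutions."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)


ten_blocks = [2 ** i for i in range(10)]   # dilations 1, 2, 4, ..., 512
four_blocks = [1, 2, 4, 8]

print(receptive_field(2, ten_blocks))    # 1024
print(receptive_field(2, four_blocks))   # 16
```

With an input window of only 10 steps, four blocks already cover the whole window, so ten blocks mostly make sense for much longer inputs such as raw audio.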

3. Deep Learning Approaches

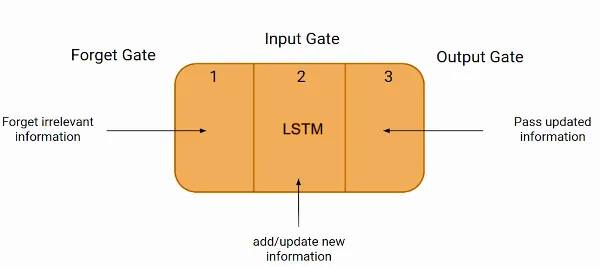

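3.1 LSTM (Long Short-Term Memory)

- LSTM networks are a type of recurrent neural network (RNN) capable of learning long-term dependencies. Because they capture temporal patterns, they are widely used for time series forecasting.

Example usage: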

import numpy as np

import pandas as pd

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import LSTM, Dense

from sklearn.preprocessing import MinMaxScaler

# Load your time series data

time_series_data = pd.read_csv('time_series_data.csv')

time_series_data['Date'] = pd.to_datetime(time_series_data['Date'])

time_series_data.set_index('Date', inplace=True)

# Prepare data for LSTM

values = time_series_data['Value'].values.reshape(-1, 1)
train_size = int(len(values) * 0.8)

scaler = MinMaxScaler(feature_range=(0, 1))
# Fit the scaler on the training portion only so test statistics do not leak into training
train_data = scaler.fit_transform(values[:train_size])
test_data = scaler.transform(values[train_size:])

def create_dataset(dataset, time_step=1):
    # Slide a window of length time_step over the series; the value that follows is the target
    X, Y = [], []
    for i in range(len(dataset) - time_step):
        X.append(dataset[i:(i + time_step), 0])
        Y.append(dataset[i + time_step, 0])
    return np.array(X), np.array(Y)

time_step = 10

X_train, y_train = create_dataset(train_data, time_step)

X_test, y_test = create_dataset(test_data, time_step)

X_train = X_train.reshape(X_train.shape[0], X_train.shape[1], 1)

X_test = X_test.reshape(X_test.shape[0], X_test.shape[1], 1)

# Build LSTM model

model = Sequential()

model.add(LSTM(50, return_sequences=True, input_shape=(time_step, 1)))

model.add(LSTM(50, return_sequences=False))

model.add(Dense(25))

model.add(Dense(1))

model.compile(optimizer='adam', loss='mean_squared_error')

model.fit(X_train, y_train, batch_size=1, epochs=1)

# Make predictions

train_predict = model.predict(X_train)

test_predict = model.predict(X_test)

train_predict = scaler.inverse_transform(train_predict)

test_predict = scaler.inverse_transform(test_predict)

print(test_predict)
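
What lets an LSTM retain information over many steps is its gated cell state. As a rough illustration, the sketch below runs a single-unit LSTM cell with scalar input in plain Python. The weights are hypothetical values chosen for the example; a real layer learns them and works with vectors and matrices.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_cell_step(x, h_prev, c_prev, params):
    """One step of a single-unit LSTM cell.

    params maps each gate name to (input weight, recurrent weight, bias).
    """
    pre = {}
    for name in ("i", "f", "o", "g"):
        w, u, b = params[name]
        pre[name] = w * x + u * h_prev + b

    i = sigmoid(pre["i"])      # input gate: how much new information to write
    f = sigmoid(pre["f"])      # forget gate: how much old cell state to keep
    o = sigmoid(pre["o"])      # output gate: how much of the cell state to expose
    g = math.tanh(pre["g"])    # candidate value

    c = f * c_prev + i * g     # updated cell state (long-term memory)
    h = o * math.tanh(c)       # updated hidden state (output)
    return h, c


# Hypothetical weights for illustration
params = {
    "i": (0.5, 0.1, 0.0),
    "f": (0.5, 0.1, 1.0),
    "o": (0.5, 0.1, 0.0),
    "g": (1.0, 0.1, 0.0),
}

h, c = 0.0, 0.0
for x in [0.1, 0.2, 0.3]:
    h, c = lstm_cell_step(x, h, c, params)
    print(round(h, 4), round(c, 4))
```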

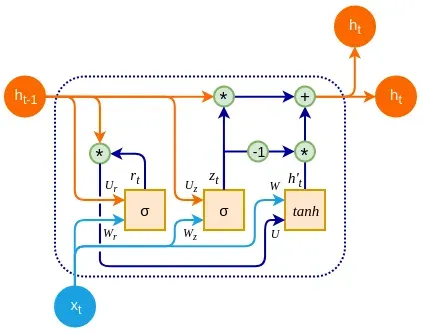

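3.2 GRU (Gated Recurrent Unit)

GRUs are a variant of LSTMs with a simpler structure that often perform comparably on time series tasks. They are used for sequence modeling and for capturing temporal dependencies.

Example usage: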

import numpy as np

import pandas as pd

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import GRU, Dense

from sklearn.preprocessing import MinMaxScaler

# Load your time series data

time_series_data = pd.read_csv('time_series_data.csv')

time_series_data['Date'] = pd.to_datetime(time_series_data['Date'])

time_series_data.set_index('Date', inplace=True)

# Prepare data for GRU

values = time_series_data['Value'].values.reshape(-1, 1)
train_size = int(len(values) * 0.8)

scaler = MinMaxScaler(feature_range=(0, 1))
# Fit the scaler on the training portion only so test statistics do not leak into training
train_data = scaler.fit_transform(values[:train_size])
test_data = scaler.transform(values[train_size:])

def create_dataset(dataset, time_step=1):
    # Slide a window of length time_step over the series; the value that follows is the target
    X, Y = [], []
    for i in range(len(dataset) - time_step):
        X.append(dataset[i:(i + time_step), 0])
        Y.append(dataset[i + time_step, 0])
    return np.array(X), np.array(Y)

time_step = 10

X_train, y_train = create_dataset(train_data, time_step)

X_test, y_test = create_dataset(test_data, time_step)

X_train = X_train.reshape(X_train.shape[0], X_train.shape[1], 1)

X_test = X_test.reshape(X_test.shape[0], X_test.shape[1], 1)

# Build GRU model

model = Sequential()

model.add(GRU(50, return_sequences=True, input_shape=(time_step, 1)))

model.add(GRU(50, return_sequences=False))

model.add(Dense(25))

model.add(Dense(1))

model.compile(optimizer='adam', loss='mean_squared_error')

model.fit(X_train, y_train, batch_size=1, epochs=1)

# Make predictions

train_predict = model.predict(X_train)

test_predict = model.predict(X_test)

train_predict = scaler.inverse_transform(train_predict)

test_predict = scaler.inverse_transform(test_predict)

print(test_predict)
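
One concrete way to see that a GRU is simpler than an LSTM is to count trainable parameters. The sketch below uses the standard per-gate formulas in plain Python. It assumes the TensorFlow 2 Keras default of `reset_after=True` for GRU layers, which adds a second bias vector per gate; the function names are illustrative.

```python
def lstm_param_count(units, input_dim):
    # 4 gates, each with input weights, recurrent weights and one bias vector
    return 4 * (units * (input_dim + units) + units)


def gru_param_count(units, input_dim, reset_after=True):
    # 3 gates; reset_after=True keeps separate input and recurrent biases
    biases = 2 * units if reset_after else units
    return 3 * (units * (input_dim + units) + biases)


print(lstm_param_count(50, 1))   # 10400
print(gru_param_count(50, 1))    # 7950
```

For the first recurrent layer in the examples (50 units, one input feature), the GRU has roughly a quarter fewer parameters than the LSTM.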

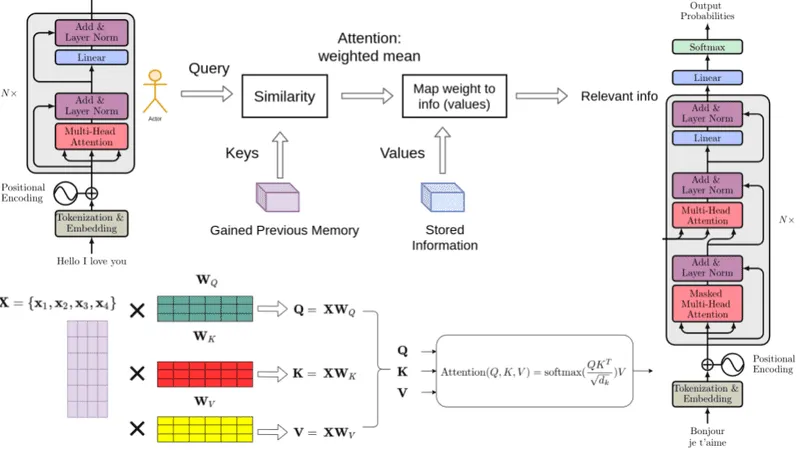

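3.3 Transformer Models

Transformers, known for their success in NLP tasks, have been adapted to time series forecasting. Models such as the Temporal Fusion Transformer (TFT) use attention mechanisms to handle temporal data effectively.

Example usage: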

import numpy as np
import pandas as pd
from sklearn.preprocessing import MinMaxScaler
from tensorflow.keras.models import Model
from tensorflow.keras.layers import (Input, Dense, Add, Dropout, LayerNormalization,
                                     MultiHeadAttention, GlobalAveragePooling1D)

# Load your time series data
time_series_data = pd.read_csv('time_series_data.csv')
time_series_data['Date'] = pd.to_datetime(time_series_data['Date'])
time_series_data.set_index('Date', inplace=True)

# Prepare data
values = time_series_data['Value'].values.reshape(-1, 1)
train_size = int(len(values) * 0.8)

scaler = MinMaxScaler(feature_range=(0, 1))
# Fit the scaler on the training portion only so test statistics do not leak into training
train_data = scaler.fit_transform(values[:train_size])
test_data = scaler.transform(values[train_size:])

def create_dataset(dataset, time_step=1):
    # Slide a window of length time_step over the series; the value that follows is the target
    X, Y = [], []
    for i in range(len(dataset) - time_step):
        X.append(dataset[i:(i + time_step), 0])
        Y.append(dataset[i + time_step, 0])
    return np.array(X), np.array(Y)

time_step = 10
X_train, y_train = create_dataset(train_data, time_step)
X_test, y_test = create_dataset(test_data, time_step)
X_train = X_train.reshape(X_train.shape[0], X_train.shape[1], 1)
X_test = X_test.reshape(X_test.shape[0], X_test.shape[1], 1)

# Build a small Transformer-style encoder.
# MultiHeadAttention takes separate query and value inputs, so it needs the
# functional API rather than Sequential. Positional encoding is omitted for brevity.
inputs = Input(shape=(time_step, 1))
x = Dense(16)(inputs)  # project each time step to the model dimension
attention = MultiHeadAttention(num_heads=4, key_dim=2)(x, x)  # self-attention
x = LayerNormalization()(Add()([x, attention]))  # residual connection and normalization
x = Dense(50, activation='relu')(x)
x = Dropout(0.1)(x)
x = GlobalAveragePooling1D()(x)  # collapse the time axis
outputs = Dense(1)(x)

model = Model(inputs, outputs)
model.compile(optimizer='adam', loss='mean_squared_error')
model.fit(X_train, y_train, batch_size=1, epochs=1)

# Make predictions
train_predict = model.predict(X_train)
test_predict = model.predict(X_test)
train_predict = scaler.inverse_transform(train_predict)
test_predict = scaler.inverse_transform(test_predict)

print(test_predict)
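
The attention mechanism itself is compact enough to write out by hand. The sketch below implements single-head scaled dot-product self-attention in plain Python on three made-up time steps with two-dimensional embeddings. It omits the learned query, key, and value projections that a real attention layer applies first.

```python
import math


def softmax(scores):
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Return (outputs, attention weights) for lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query with every key, scaled by sqrt(d_k)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors
        output = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights


# Three time steps with 2-dimensional embeddings (made-up numbers)
steps = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = scaled_dot_product_attention(steps, steps, steps)

for row in weights:
    print([round(w, 3) for w in row])
```

Each row of weights shows how much one time step attends to every other step, which is what lets the model relate distant points in the window directly.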

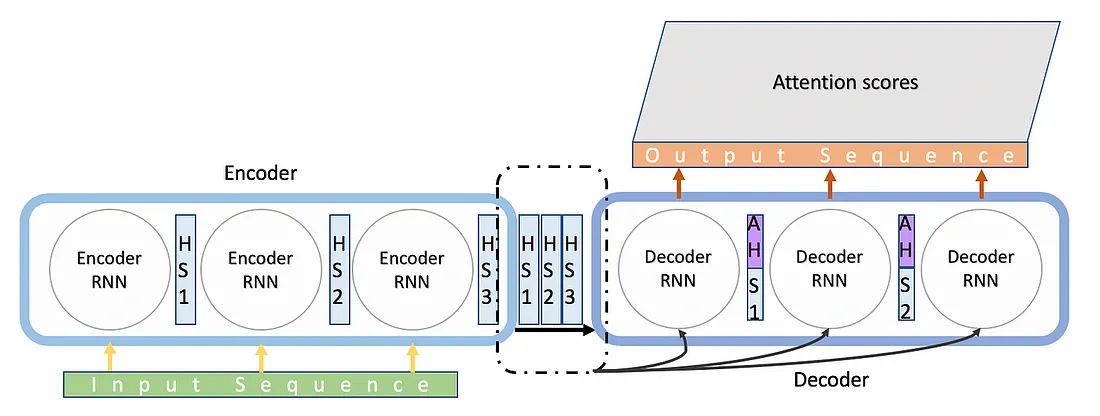

3.4 Seq2Seq (Sequence-to-Sequence)

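Seq2Seq models predict sequences of data. Originally developed for language translation, they work well for time series forecasting because they learn a mapping from an input sequence to an output sequence.

Example usage: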

import numpy as np
import pandas as pd
from sklearn.preprocessing import MinMaxScaler
from tensorflow.keras.models import Model
from tensorflow.keras.layers import Input, LSTM, Dense

# Load your time series data
time_series_data = pd.read_csv('time_series_data.csv')
time_series_data['Date'] = pd.to_datetime(time_series_data['Date'])
time_series_data.set_index('Date', inplace=True)

# Prepare data for Seq2Seq: each input window maps to a window of future values
def create_dataset(dataset, time_step=1, horizon=1):
    X, Y = [], []
    for i in range(len(dataset) - time_step - horizon + 1):
        X.append(dataset[i:(i + time_step), 0])
        Y.append(dataset[(i + time_step):(i + time_step + horizon), 0])
    return np.array(X), np.array(Y)

time_step = 10
horizon = 5  # number of future steps to predict (illustrative choice)

scaler = MinMaxScaler(feature_range=(0, 1))
scaled_data = scaler.fit_transform(time_series_data['Value'].values.reshape(-1, 1))

X, y = create_dataset(scaled_data, time_step, horizon)
X = X.reshape(X.shape[0], time_step, 1)
y = y.reshape(y.shape[0], horizon, 1)

# Teacher forcing: the decoder sees the target shifted right by one step,
# starting from the last observed input value
decoder_input = np.concatenate([X[:, -1:, :], y[:, :-1, :]], axis=1)

# Define Seq2Seq model
encoder_inputs = Input(shape=(time_step, 1))
encoder = LSTM(50, return_state=True)
encoder_outputs, state_h, state_c = encoder(encoder_inputs)

decoder_inputs = Input(shape=(horizon, 1))
decoder_lstm = LSTM(50, return_sequences=True, return_state=True)
decoder_outputs, _, _ = decoder_lstm(decoder_inputs, initial_state=[state_h, state_c])
decoder_dense = Dense(1)
decoder_outputs = decoder_dense(decoder_outputs)

model = Model([encoder_inputs, decoder_inputs], decoder_outputs)
model.compile(optimizer='adam', loss='mean_squared_error')

# Train the model
model.fit([X, decoder_input], y, epochs=10, batch_size=16)

# Make predictions
# Note: this reuses the teacher-forced decoder input, so it only reproduces in-sample fits.
# Forecasting unseen data requires feeding each predicted step back into the decoder.
predictions = model.predict([X, decoder_input])

# inverse_transform expects 2D input, so flatten and then restore (samples, horizon)
predictions = scaler.inverse_transform(predictions.reshape(-1, 1)).reshape(-1, horizon)

print(predictions)
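
At inference time, an encoder-decoder model typically produces its output sequence one step at a time, feeding each prediction back in as the next decoder input. The sketch below shows that loop in plain Python. `toy_decoder_step` is a hypothetical stand-in for a trained decoder, and the `state` dictionary stands in for the encoder's summary of the input window.

```python
def decode_autoregressively(decoder_step, state, first_input, horizon):
    """Generate `horizon` values, feeding each prediction back as the next input."""
    outputs = []
    current = first_input
    for _ in range(horizon):
        current, state = decoder_step(current, state)
        outputs.append(current)
    return outputs


def toy_decoder_step(previous_value, state):
    # Hypothetical decoder: continue the series using the slope stored in the state
    next_value = previous_value + state["slope"]
    return next_value, state


history = [1.0, 2.0, 3.0, 4.0]

# Stand-in for the encoder: summarize the input window as its most recent slope
state = {"slope": history[-1] - history[-2]}

forecast = decode_autoregressively(toy_decoder_step, state, history[-1], horizon=3)
print(forecast)
```

With a real model, `decoder_step` would run the decoder LSTM for one step and return its output together with the updated hidden and cell states.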

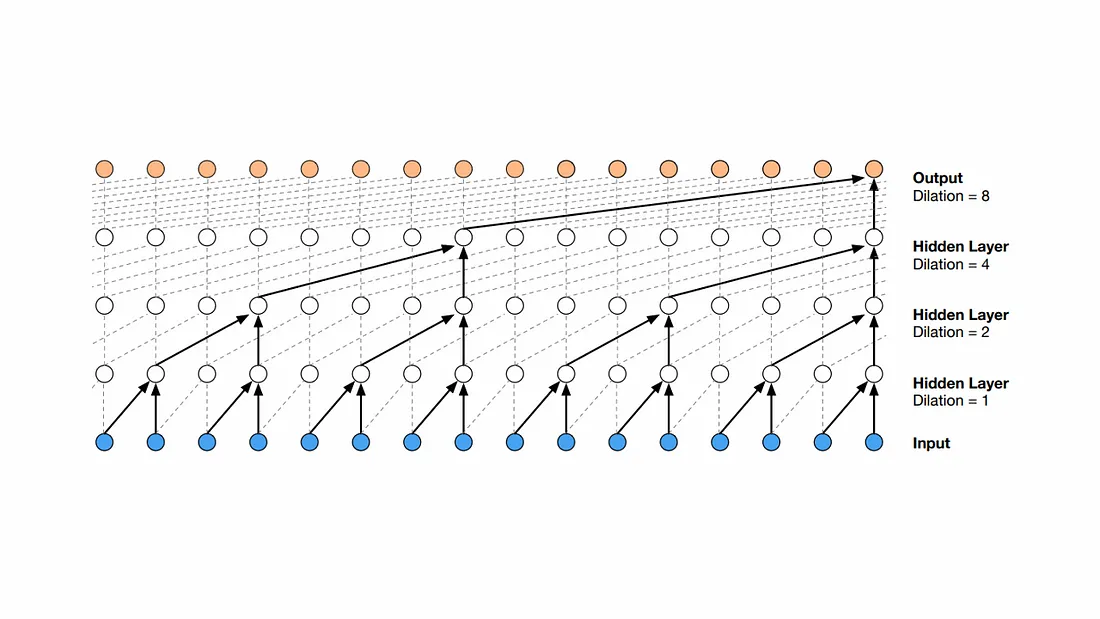

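3.5 TCN (Temporal Convolutional Network)

TCNs use dilated convolutions to capture long-range dependencies in time series data. They offer a robust alternative to RNNs for modeling sequential data.

Example usage: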

import numpy as np

import pandas as pd

from sklearn.preprocessing import MinMaxScaler

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Conv1D, Dense, Flatten

# Load your time series data

time_series_data = pd.read_csv('time_series_data.csv')

time_series_data['Date'] = pd.to_datetime(time_series_data['Date'])

time_series_data.set_index('Date', inplace=True)

# Prepare data for TCN

def create_dataset(dataset, time_step=1):
    # Slide a window of length time_step over the series; the value that follows is the target
    X, Y = [], []
    for i in range(len(dataset) - time_step):
        X.append(dataset[i:(i + time_step), 0])
        Y.append(dataset[i + time_step, 0])
    return np.array(X), np.array(Y)

time_step = 10

scaler = MinMaxScaler(feature_range=(0, 1))

scaled_data = scaler.fit_transform(time_series_data['Value'].values.reshape(-1, 1))

X, y = create_dataset(scaled_data, time_step)

X = X.reshape(X.shape[0], X.shape[1], 1)

# Define TCN model

model = Sequential()

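# padding='causal' ensures each output depends only on the current and earlier time steps
model.add(Conv1D(filters=64, kernel_size=2, dilation_rate=1, padding='causal', activation='relu', input_shape=(time_step, 1)))

model.add(Conv1D(filters=64, kernel_size=2, dilation_rate=2, padding='causal', activation='relu'))

model.add(Conv1D(filters=64, kernel_size=2, dilation_rate=4, padding='causal', activation='relu'))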

model.add(Flatten())

model.add(Dense(1))

model.compile(optimizer='adam', loss='mean_squared_error')

# Train the model

model.fit(X, y, epochs=10, batch_size=16)

# Make predictions

predictions = model.predict(X)

predictions = scaler.inverse_transform(predictions)

print(predictions)
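
The building block of a TCN, a causal dilated convolution, can be written in a few lines of plain Python. The sketch below applies a two-tap averaging kernel with dilation 2 to a made-up signal and then checks that changing the last input leaves all earlier outputs untouched. The function name is an assumption for this example.

```python
def causal_dilated_conv1d(signal, kernel, dilation=1):
    """out[t] = sum over k of kernel[k] * signal[t - k * dilation], with zeros before the start."""
    out = []
    for t in range(len(signal)):
        total = 0.0
        for k, weight in enumerate(kernel):
            index = t - k * dilation
            if index >= 0:  # positions before the start count as zero padding
                total += weight * signal[index]
        out.append(total)
    return out


signal = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
out = causal_dilated_conv1d(signal, kernel=[0.5, 0.5], dilation=2)
print(out)

# Causality check: altering the final input must not affect earlier outputs
altered = signal[:-1] + [100.0]
out_altered = causal_dilated_conv1d(altered, kernel=[0.5, 0.5], dilation=2)
print(out[:-1] == out_altered[:-1])
```

Stacking such layers with increasing dilation lets the network cover long histories with few layers while never looking at future values.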

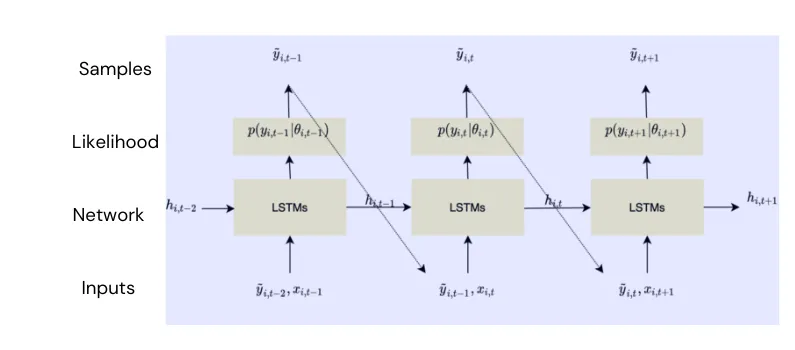

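3.6 DeepAR

DeepAR, developed by Amazon, is an autoregressive recurrent network designed for time series forecasting. It can handle multiple time series and capture complex patterns.

Example usage: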

import numpy as np

import pandas as pd

from sklearn.preprocessing import MinMaxScaler

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import LSTM, Dense, Flatten

# Load your time series data

time_series_data = pd.read_csv('time_series_data.csv')

time_series_data['Date'] = pd.to_datetime(time_series_data['Date'])

time_series_data.set_index('Date', inplace=True)

# Prepare data for DeepAR-like model

def create_dataset(dataset, time_step=1):
    # Slide a window of length time_step over the series; the value that follows is the target
    X, Y = [], []
    for i in range(len(dataset) - time_step):
        X.append(dataset[i:(i + time_step), 0])
        Y.append(dataset[i + time_step, 0])
    return np.array(X), np.array(Y)

time_step = 10

scaler = MinMaxScaler(feature_range=(0, 1))

scaled_data = scaler.fit_transform(time_series_data['Value'].values.reshape(-1, 1))

X, y = create_dataset(scaled_data, time_step)

X = X.reshape(X.shape[0], X.shape[1], 1)

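# Define DeepAR-like model
# Simplification: this stacked LSTM produces point forecasts trained with MSE.
# DeepAR itself predicts the parameters of a probability distribution and is
# trained by maximizing likelihood.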

model = Sequential()

model.add(LSTM(50, return_sequences=True, input_shape=(time_step, 1)))

model.add(LSTM(50))

model.add(Dense(1))

model.compile(optimizer='adam', loss='mean_squared_error')

# Train the model

model.fit(X, y, epochs=10, batch_size=16)

# Make predictions

predictions = model.predict(X)

predictions = scaler.inverse_transform(predictions)

print(predictions)
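
What distinguishes a probabilistic forecaster such as DeepAR from a point forecaster is that the network outputs distribution parameters and is trained with a likelihood-based loss. The sketch below illustrates this in plain Python for a Gaussian output. The mean and standard deviation are made-up stand-ins for what a network would predict, and the function names are not part of any library.

```python
import math
import random


def gaussian_nll(y, mu, sigma):
    """Negative log-likelihood of observing y under Normal(mu, sigma)."""
    return 0.5 * math.log(2 * math.pi * sigma ** 2) + (y - mu) ** 2 / (2 * sigma ** 2)


def sample_quantiles(mu, sigma, n_samples=2000, quantiles=(0.1, 0.5, 0.9), seed=0):
    """Estimate forecast quantiles by sampling from the predicted distribution."""
    rng = random.Random(seed)
    draws = sorted(rng.gauss(mu, sigma) for _ in range(n_samples))
    return [draws[int(q * (n_samples - 1))] for q in quantiles]


# Hypothetical network output for one future time step
predicted_mu, predicted_sigma = 10.0, 2.0

# Training loss for two possible observations: closer to the mean gives lower loss
print(gaussian_nll(10.0, predicted_mu, predicted_sigma))
print(gaussian_nll(15.0, predicted_mu, predicted_sigma))

# Forecast interval derived from samples
low, median, high = sample_quantiles(predicted_mu, predicted_sigma)
print(round(low, 2), round(median, 2), round(high, 2))
```

Replacing the MSE loss with a likelihood loss of this kind, and adding a second output for the scale parameter, is the main step from a point-forecast LSTM toward a DeepAR-style model.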

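Time series forecasting is a complex yet fascinating field that has benefited greatly from advances in machine learning, generative AI, and deep learning. With models such as ARIMA, Prophet, LSTMs, and Transformers, practitioners can uncover hidden patterns in their data and produce more accurate forecasts. As the technology continues to evolve, the tools and methods available for time series forecasting will keep growing more sophisticated, opening new opportunities for innovation and improvement across many fields.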