NVIDIA NIM: How It Changes the Deployment of Generative AI Applications

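Since ChatGPT launched in 2022, the number of generative AI products has grown sharply. In recent years the field has advanced rapidly, from LLMs to text-to-image models and on to the text-to-video models now arriving. From code generation to text and image generation, every industry has found broad uses for it. For enterprises, working out how to fit these complex AI systems into existing infrastructure has become a critical task.

Deploying these powerful models to production is neither easy nor quick. With 2025 only half a year away, enterprises must move beyond calling pretrained large language models through an API and think hard about how to deploy these models in production.

Enterprises need control over logging, monitoring, and security while also integrating AI into their existing infrastructure. For many, building this in-house is not a viable option, because it demands expertise, tooling, and resources they may not have. This is where NVIDIA NIM comes in.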

What Is NVIDIA NIM?

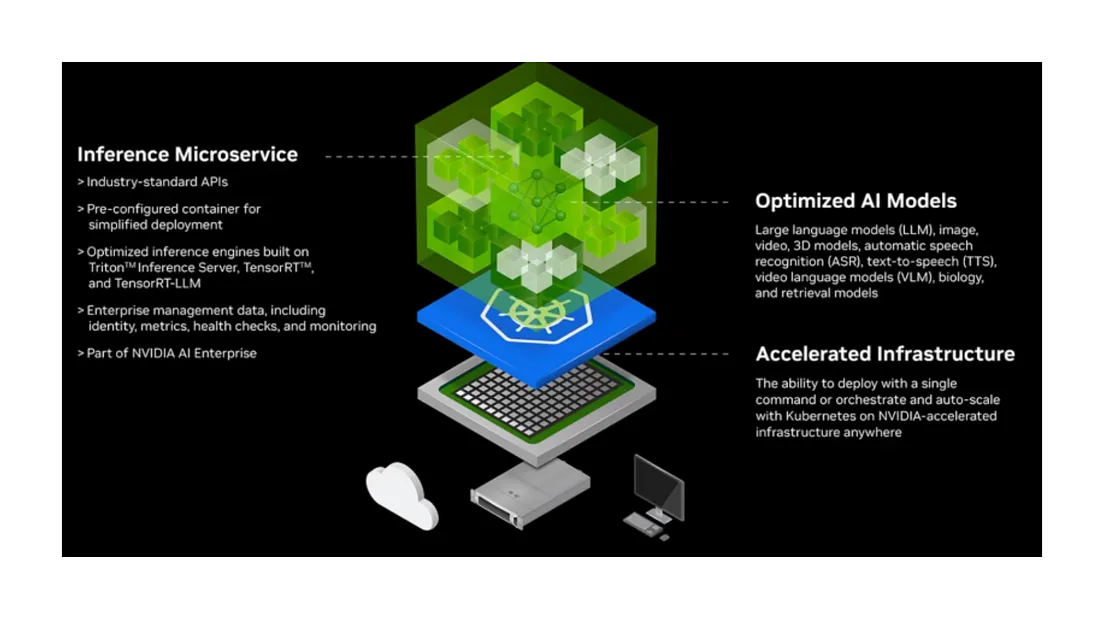

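NVIDIA Inference Microservices (NIM): put simply, NIM is a collection of cloud-native microservices that help deploy generative AI models on GPU-accelerated workstations, cloud environments, and data centers. They shorten the time to market for generative models and so simplify an enterprise's path from development to production.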

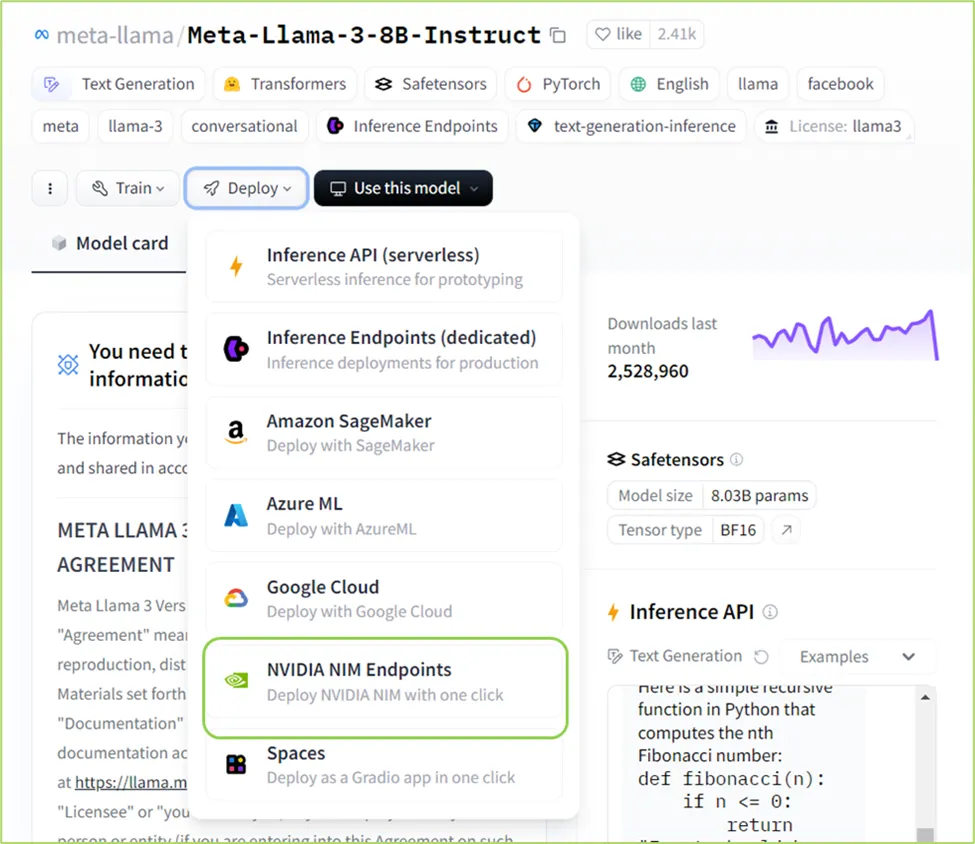

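NVIDIA NIM is a component of NVIDIA's AI infrastructure. It provides an end-to-end solution that gives users access to ready-to-use AI models, which can be packaged and deployed to NVIDIA-accelerated production environments. For example, Llama 3 models optimized to run on NIM deliver faster inference.

The new wave of generative AI applications adds complexity to LLM architectures. Multimodal LLMs, for example, span text, image, video, and audio generation and many combinations of these. NVIDIA, the market leader in compute, therefore introduced NIM to help developers not only build endpoints but also deploy these generative AI applications in a standardized and scalable way.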

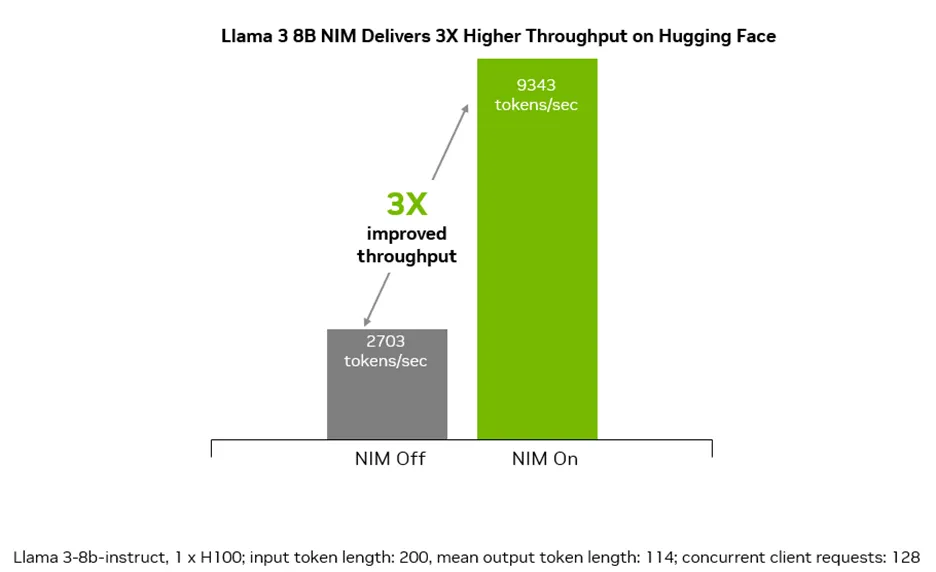

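NIM also lets enterprises get the most out of their infrastructure investment. For example, running Meta Llama 3-8B in NIM generates 3x more generative AI tokens on accelerated infrastructure than running it without NIM. Enterprises can therefore produce more responses with the same amount of compute.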

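Advantages of NVIDIA NIM

Run Anywhere

Models can be deployed on a range of infrastructure, including the cloud, on-premises data centers, and local workstations, on hardware such as NVIDIA RTX and NVIDIA DGX.

Easy Access Through APIs

Building AI applications with simple API calls is now easier, which suits fast-paced, scalable AI rollouts inside an enterprise.

Domain-Specific Models

NVIDIA NIM offers domain-specific solutions for language, image, audio, healthcare, and more, giving optimized performance for your particular use case.

Better User Experience Through Optimized Inference Engines

NIM uses inference engines tuned for each model and hardware setup. This gives lower latency and higher throughput in accelerated environments and also reduces the cost of optimizing models on proprietary data sources. Applications deployed with NIM can also scale dynamically to meet changing enterprise needs and to handle workloads that grow over time.

Ready-to-Use Open-Source AI Models

NIM supports community AI models such as Llama 3, Mistral, and Gemma, along with other open-source models.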

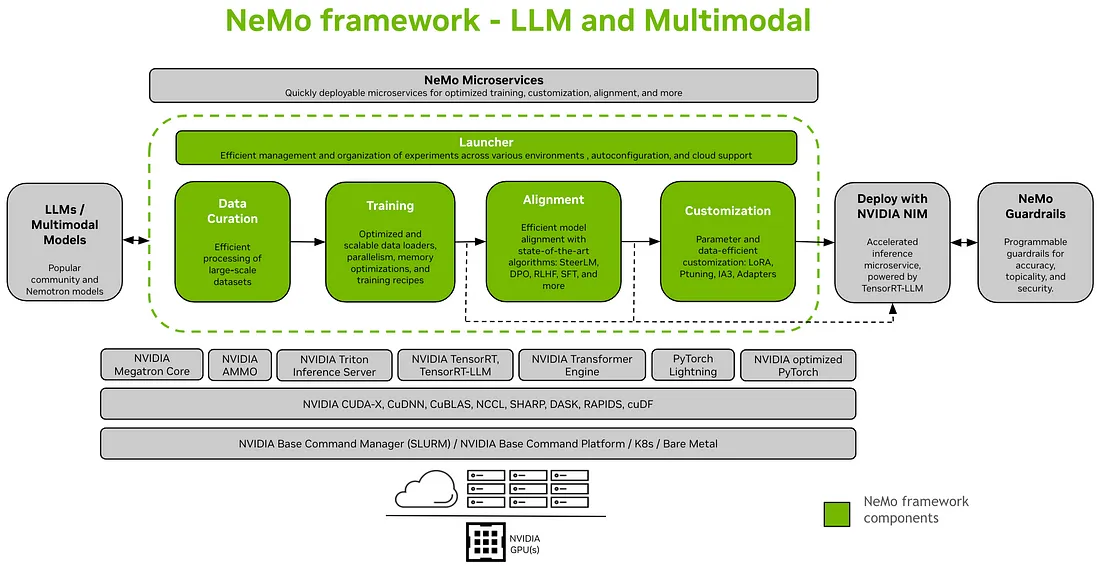

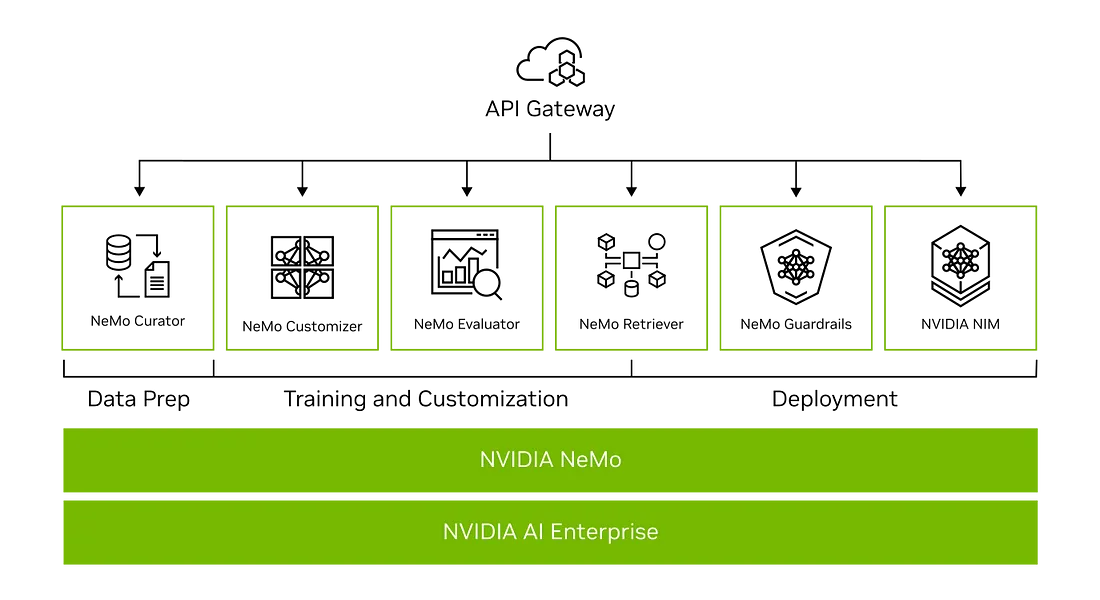

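NVIDIA NeMo and NIM

NVIDIA NeMo is an end-to-end platform for developing custom generative AI solutions. It covers training state-of-the-art AI models, including large language models (LLMs) and audio, vision, and multimodal models.

NVIDIA recently released the Nemotron-4 340B models, whose main purpose is to create synthetic training data for LLMs. This eases the burden on developers who need large volumes of custom, industry-specific training data that can be hard to obtain any other way.

NVIDIA NIM streamlines building AI applications at scale, from infrastructure optimization to application deployment. With prebuilt containers and industry-standard APIs, enterprises can run AI applications in accelerated environments to meet the demand for faster and more complex inference.

How to Integrate NIM with Your Application

Step 1: Create an account and log in

Go to https://build.nvidia.com/explore/discover?signin=true and create an account to receive 1,000 free credits.

Step 2: Choose one of the available LLM models

Step 3: Click Get API Key, then save the key for later use.

Now that we have the API key, let's get started.

Install the required packages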

! pip install langchain langchain-nvidia-ai-endpoints openai langchain-community langchain-qdrant langchainhub sentence-transformers

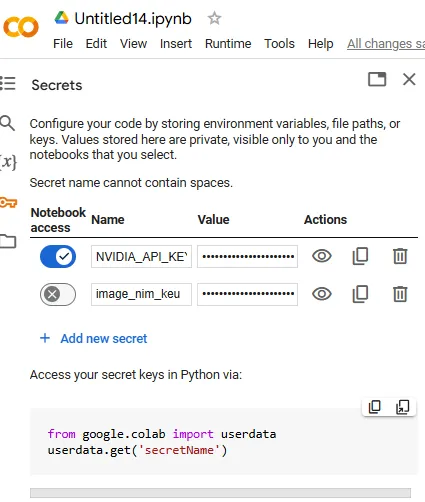

Set the API key

from google.colab import userdata
import os

os.environ['NVIDIA_API_KEY'] = userdata.get('NVIDIA_API_KEY')

Before moving on to LangChain, note that you can reach the NVIDIA AI Foundation endpoints directly through the OpenAI package, so existing OpenAI client code can use NVIDIA-hosted models with few changes.

import os
from openai import OpenAI

# "nim-address" is a placeholder; point base_url at your own NIM endpoint
client = OpenAI(
    base_url="http://nim-address:8000/v1",
    api_key=os.environ["NVIDIA_API_KEY"],  # the OpenAI client requires a key value
)

completion = client.chat.completions.create(
    model="meta/llama3-70b-instruct",
    messages=[{"role": "user", "content": ""}],  # write your prompt here
    temperature=0.5,
    top_p=1,
    max_tokens=1024,
    stream=True,
)

# Print the streamed text as it arrives
for chunk in completion:
    if chunk.choices[0].delta.content is not None:
        print(chunk.choices[0].delta.content, end="")
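The streaming loop above prints each piece of text as it arrives. If you also want the full text afterwards, the pieces can be collected instead. The following is a minimal sketch that assumes chunks shaped like the OpenAI streaming chat format (`choices[0].delta.content` plus an optional `finish_reason`). `collect_stream` and `fake_chunk` are hypothetical helper names, and the demo chunks are stand-ins so the example runs without a server.

```python
from types import SimpleNamespace


def collect_stream(chunks):
    """Join streamed delta text and return (text, finish_reason)."""
    parts = []
    finish_reason = None
    for chunk in chunks:
        if not chunk.choices:  # tolerate chunks that carry no choices
            continue
        choice = chunk.choices[0]
        if choice.delta.content is not None:
            parts.append(choice.delta.content)
        if choice.finish_reason is not None:
            finish_reason = choice.finish_reason
    return "".join(parts), finish_reason


def fake_chunk(content, finish_reason=None):
    """Hypothetical stand-in for one streamed chunk (offline demo only)."""
    delta = SimpleNamespace(content=content)
    choice = SimpleNamespace(delta=delta, finish_reason=finish_reason)
    return SimpleNamespace(choices=[choice])


demo = [fake_chunk("Hello"), fake_chunk(", NIM"), fake_chunk(None, "length")]
text, reason = collect_stream(demo)
print(text, reason)
```

In the OpenAI format, a `finish_reason` of `"length"` means the reply was cut off by `max_tokens`, which is worth checking before treating a response as complete.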

You can list the available models with ChatNVIDIA.get_available_models().

from langchain_nvidia_ai_endpoints import ChatNVIDIA

ChatNVIDIA.get_available_models()

Output:

[Model(id='mistralai/mistral-large', model_type='chat', client='ChatNVIDIA', endpoint=None, aliases=['ai-mistral-large']),

Model(id='meta/codellama-70b', model_type='chat', client='ChatNVIDIA', endpoint=None, aliases=['ai-codellama-70b', 'playground_llama2_code_70b', 'llama2_code_70b', 'playground_llama2_code_34b', 'llama2_code_34b', 'playground_llama2_code_13b', 'llama2_code_13b']),

Model(id='writer/palmyra-med-70b-32k', model_type='chat', client='ChatNVIDIA', endpoint=None, aliases=['ai-palmyra-med-70b-32k']),

Model(id='nvidia/neva-22b', model_type='vlm', client='ChatNVIDIA', endpoint='https://ai.api.nvidia.com/v1/vlm/nvidia/neva-22b', aliases=['ai-neva-22b', 'playground_neva_22b', 'neva_22b']),

Model(id='meta/llama3-70b-instruct', model_type='chat', client='ChatNVIDIA', endpoint=None, aliases=['ai-llama3-70b']),

Model(id='ibm/granite-8b-code-instruct', model_type='chat', client='ChatNVIDIA', endpoint=None, aliases=['ai-granite-8b-code-instruct']),

Model(id='mediatek/breeze-7b-instruct', model_type='chat', client='ChatNVIDIA', endpoint=None, aliases=['ai-breeze-7b-instruct']),

Model(id='google/recurrentgemma-2b', model_type='chat', client='ChatNVIDIA', endpoint=None, aliases=['ai-recurrentgemma-2b']),

Model(id='microsoft/phi-3-small-128k-instruct', model_type='chat', client='ChatNVIDIA', endpoint=None, aliases=['ai-phi-3-small-128k-instruct']),

Model(id='snowflake/arctic', model_type='chat', client='ChatNVIDIA', endpoint=None, aliases=['ai-arctic']),

Model(id='seallms/seallm-7b-v2.5', model_type='chat', client='ChatNVIDIA', endpoint=None, aliases=['ai-seallm-7b']),

Model(id='microsoft/phi-3-small-8k-instruct', model_type='chat', client='ChatNVIDIA', endpoint=None, aliases=['ai-phi-3-small-8k-instruct']),

Model(id='upstage/solar-10.7b-instruct', model_type='chat', client='ChatNVIDIA', endpoint=None, aliases=['ai-solar-10_7b-instruct']),

Model(id='mistralai/mixtral-8x7b-instruct-v0.1', model_type='chat', client='ChatNVIDIA', endpoint=None, aliases=['ai-mixtral-8x7b-instruct', 'playground_mixtral_8x7b', 'mixtral_8x7b']),

Model(id='liuhaotian/llava-v1.6-mistral-7b', model_type='vlm', client='ChatNVIDIA', endpoint='https://ai.api.nvidia.com/v1/stg/vlm/community/llava16-mistral-7b', aliases=['ai-llava16-mistral-7b', 'community/llava16-mistral-7b', 'liuhaotian/llava16-mistral-7b']),

Model(id='aisingapore/sea-lion-7b-instruct', model_type='chat', client='ChatNVIDIA', endpoint=None, aliases=['ai-sea-lion-7b-instruct']),

Model(id='liuhaotian/llava-v1.6-34b', model_type='vlm', client='ChatNVIDIA', endpoint='https://ai.api.nvidia.com/v1/stg/vlm/community/llava16-34b', aliases=['ai-llava16-34b', 'community/llava16-34b', 'liuhaotian/llava16-34b']),

Model(id='microsoft/phi-3-vision-128k-instruct', model_type='vlm', client='ChatNVIDIA', endpoint='https://ai.api.nvidia.com/v1/vlm/microsoft/phi-3-vision-128k-instruct', aliases=['ai-phi-3-vision-128k-instruct']),

Model(id='microsoft/phi-3-mini-4k-instruct', model_type='chat', client='ChatNVIDIA', endpoint=None, aliases=['ai-phi-3-mini-4k', 'playground_phi2', 'phi2']),

Model(id='google/gemma-7b', model_type='chat', client='ChatNVIDIA', endpoint=None, aliases=['ai-gemma-7b', 'playground_gemma_7b', 'gemma_7b']),

Model(id='microsoft/phi-3-mini-128k-instruct', model_type='chat', client='ChatNVIDIA', endpoint=None, aliases=['ai-phi-3-mini']),

Model(id='adept/fuyu-8b', model_type='vlm', client='ChatNVIDIA', endpoint='https://ai.api.nvidia.com/v1/vlm/adept/fuyu-8b', aliases=['ai-fuyu-8b', 'playground_fuyu_8b', 'fuyu_8b']),

Model(id='google/deplot', model_type='vlm', client='ChatNVIDIA', endpoint='https://ai.api.nvidia.com/v1/vlm/google/deplot', aliases=['ai-google-deplot', 'playground_deplot', 'deplot']),

Model(id='mistralai/mistral-7b-instruct-v0.2', model_type='chat', client='ChatNVIDIA', endpoint=None, aliases=['ai-mistral-7b-instruct-v2', 'playground_mistral_7b', 'mistral_7b']),

Model(id='google/gemma-2b', model_type='chat', client='ChatNVIDIA', endpoint=None, aliases=['ai-gemma-2b', 'playground_gemma_2b', 'gemma_2b']),

Model(id='meta/llama2-70b', model_type='chat', client='ChatNVIDIA', endpoint=None, aliases=['ai-llama2-70b', 'playground_llama2_70b', 'llama2_70b', 'playground_llama2_13b', 'llama2_13b']),

Model(id='google/codegemma-7b', model_type='chat', client='ChatNVIDIA', endpoint=None, aliases=['ai-codegemma-7b']),

Model(id='google/codegemma-1.1-7b', model_type='chat', client='ChatNVIDIA', endpoint=None, aliases=['ai-codegemma-1.1-7b']),

Model(id='ibm/granite-34b-code-instruct', model_type='chat', client='ChatNVIDIA', endpoint=None, aliases=['ai-granite-34b-code-instruct']),

Model(id='microsoft/kosmos-2', model_type='vlm', client='ChatNVIDIA', endpoint='https://ai.api.nvidia.com/v1/vlm/microsoft/kosmos-2', aliases=['ai-microsoft-kosmos-2', 'playground_kosmos_2', 'kosmos_2']),

Model(id='meta/llama3-8b-instruct', model_type='chat', client='ChatNVIDIA', endpoint=None, aliases=['ai-llama3-8b']),

Model(id='google/paligemma', model_type='vlm', client='ChatNVIDIA', endpoint='https://ai.api.nvidia.com/v1/vlm/google/paligemma', aliases=['ai-google-paligemma']),

Model(id='microsoft/phi-3-medium-4k-instruct', model_type='chat', client='ChatNVIDIA', endpoint=None, aliases=['ai-phi-3-medium-4k-instruct']),

Model(id='writer/palmyra-med-70b', model_type='chat', client='ChatNVIDIA', endpoint=None, aliases=['ai-palmyra-med-70b']),

Model(id='mistralai/mixtral-8x22b-instruct-v0.1', model_type='chat', client='ChatNVIDIA', endpoint=None, aliases=['ai-mixtral-8x22b-instruct']),

Model(id='databricks/dbrx-instruct', model_type='chat', client='ChatNVIDIA', endpoint=None, aliases=['ai-dbrx-instruct'])]
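The listing above mixes chat models and vision-language models (`model_type='vlm'`), so it helps to filter it by type. The following is a small sketch under stated assumptions: `ModelInfo` is a hypothetical stand-in that mirrors only the `id` and `model_type` fields visible in the output, and `ids_of_type` is an illustrative helper, not part of the library.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ModelInfo:
    """Hypothetical stand-in with the `id` and `model_type` fields shown above."""
    id: str
    model_type: Optional[str] = None


def ids_of_type(models: List[ModelInfo], model_type: str) -> List[str]:
    """Return the sorted ids of all models whose model_type matches."""
    return sorted(m.id for m in models if m.model_type == model_type)


sample = [
    ModelInfo("meta/llama3-8b-instruct", "chat"),
    ModelInfo("nvidia/neva-22b", "vlm"),
    ModelInfo("google/deplot", "vlm"),
]
print(ids_of_type(sample, "vlm"))
```

Because the helper only reads the `id` and `model_type` attributes, passing it the result of `ChatNVIDIA.get_available_models()` should work the same way, assuming the returned objects keep those attribute names.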

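Text Generation

Here we evaluate the model's ability to generate text from an input prompt, a task at which applications such as ChatGPT and Claude do well. We will test the NVIDIA-hosted text generation models in the same way.

Let's see how the NVIDIA AI Foundation endpoints handle a standard text generation task through LangChain.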

# Import the ChatNVIDIA class from the langchain_nvidia_ai_endpoints package
from langchain_nvidia_ai_endpoints import ChatNVIDIA

# Create a ChatNVIDIA instance configured with the model and generation parameters
llm = ChatNVIDIA(
    model="meta/llama3-8b-instruct",  # model used to generate the text
    temperature=0.3,  # relatively low, so the output is more deterministic
    max_tokens=1024,  # cap the response at 1024 tokens
)

# Invoke the model with a prompt asking for the formulas behind relativity
result = llm.invoke("Write the mathematical formula for theory of relativity?")

# Print the content of the result
print(result.content)

All we have to do is set the model parameters when constructing ChatNVIDIA and then invoke it with a prompt.
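To make the `temperature` parameter less abstract, here is a sketch of the standard temperature-scaled softmax used when sampling tokens. The logits are made-up numbers, `softmax_with_temperature` is an illustrative name, and whether the served model applies exactly this formula internally is an assumption.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Turn logits into probabilities after dividing them by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.0]  # made-up scores for three candidate tokens
for t in (0.3, 1.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At the lower temperature almost all of the probability lands on the highest-scoring token, which is why a setting such as 0.3 gives more repeatable answers.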

The model generated the following response:

Output:

A bold question!

The theory of relativity, developed by Albert Einstein, is a complex and multifaceted concept that encompasses two main parts: special relativity and general relativity. While there isn't a single, concise formula that summarizes the entire theory, I can provide you with some key equations that represent the core ideas.

**Special Relativity (1905)**

1. **Time dilation**: The Lorentz transformation for time dilation:

t' = γ(t - vx/c^2)

where t' is the time measured by an observer in motion, t is the time measured by an observer at rest, v is the relative velocity, x is the position, c is the speed of light, and γ is the Lorentz factor: γ = 1 / sqrt(1 - v^2/c^2).

2. **Length contraction**: The Lorentz transformation for length contraction:

L' = L / γ

where L' is the length measured by an observer in motion, L is the length measured by an observer at rest, and γ is the Lorentz factor.

3. **Relativistic mass-energy equivalence**: The famous equation:

E^2 = (pc)^2 + (mc^2)^2

where E is the total energy, p is the momentum, m is the rest mass, and c is the speed of light.

**General Relativity (1915)**

1. **Einstein's field equations**: The fundamental equations that describe the curvature of spacetime:

Rμν - 1/2Rgμν = (8πG/c^4)Tμν

where Rμν is the Ricci tensor, R is the Ricci scalar, gμν is the metric tensor, G is the gravitational constant, c is the speed of light, and Tμν is the stress-energy tensor.

2. **Geodesic equation**: The equation that describes the motion of objects in curved spacetime:

d^2x^μ/dλ^2 + Γμνρ dx^ν/dλ dx^ρ/dλ = 0

where x^μ is the position, λ is the affine parameter, and Γμνρ is the Christoffel symbol.

These equations form the foundation of the theory of relativity, but keep in m

# Asking it to generate a new song to test its creativity

result = llm.invoke("Write a song about theory of relativity?")

# Print the content of the result

print(result.content)

The model generated the following song:

Output:

What a fascinating topic! Here's a song about the theory of relativity:

**Title:** "Time and Space"

**Verse 1:**

In the realm of physics, a revolution's born

Einstein's insight, a new dawn is sworn

Time and space, no longer the same

Relative truths, in a world of flame

**Chorus:**

Time and space, a dance we play

Dependent on speed, in a relativistic way

The faster we move, the slower it goes

In the theory of relativity, our minds explode

**Verse 2:**

The speed of light, a constant guide

The fabric of spacetime, where gravity resides

Mass and energy, intertwined they stand

The equivalence principle, a fundamental hand

**Chorus:**

Time and space, a dance we play

Dependent on speed, in a relativistic way

The faster we move, the slower it goes

In the theory of relativity, our minds explode

**Bridge:**

Imagine a clock, ticking away

On a train, moving at incredible sway

From a stationary view, it seems to slow

But for the traveler, time flows, don't you know

**Chorus:**

Time and space, a dance we play

Dependent on speed, in a relativistic way

The faster we move, the slower it goes

In the theory of relativity, our minds explode

**Outro:**

In the realm of relativity, we find our place

A world of wonder, where time and space entwine

The theory of relativity, a masterpiece of mind

A dance of time and space, forever aligned.

Please note that this is a creative interpretation of the theory of relativity, and not a scientifically accurate representation. The song aims to capture the essence of the theory in a lyrical and melodic form, rather than providing a detailed explanation of the mathematical concepts involved.

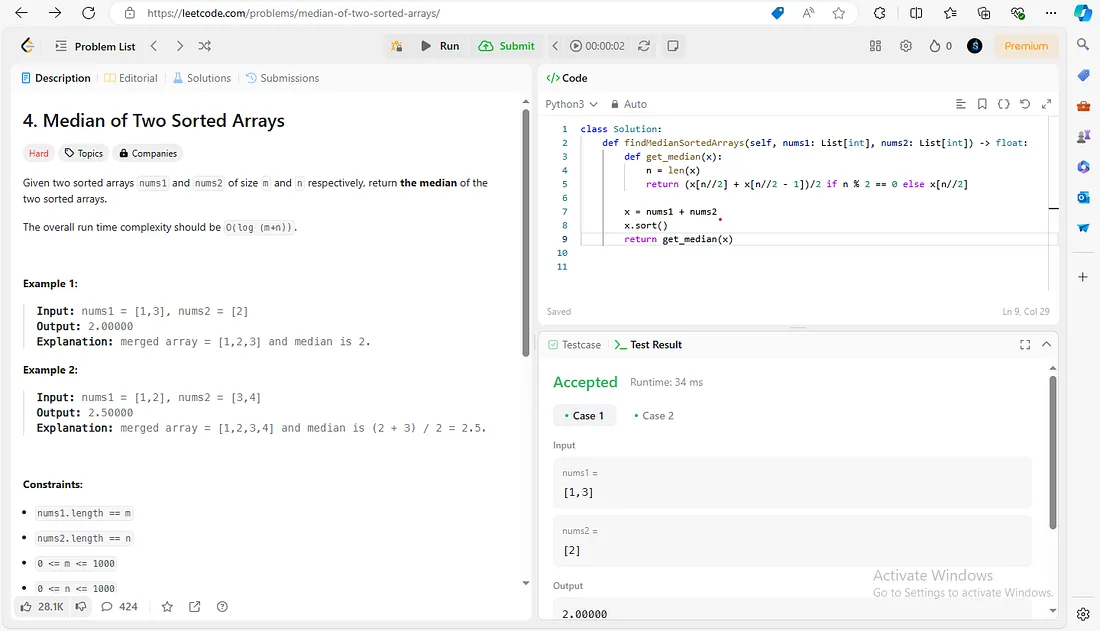

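Code Generation

Now let's evaluate the model's ability to generate code from an input prompt. Foundation models such as Code Llama are specialized for code generation tasks, so we will test an NVIDIA-hosted code generation model.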

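from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import ChatPromptTemplate
from langchain_nvidia_ai_endpoints import ChatNVIDIA

# System message sets the coding rules; the user message carries the task
prompt = ChatPromptTemplate.from_messages(
    [
        (
            "system",
            "You are an expert coding assistant. Strictly follow Python syntax; do not use external libraries; provide only the correct answer and refrain from explanations.",
        ),
        ("user", "{input}"),
    ]
)

# Pipe the prompt into the model and parse the reply as a plain string
chain = prompt | ChatNVIDIA(model="meta/codellama-70b") | StrOutputParser()

leetcode_problem = """
Solve this problem: https://leetcode.com/problems/median-of-two-sorted-arrays/description/
"""

# Stream the generated code as it arrives
for txt in chain.stream({"input": leetcode_problem}):
    print(txt, end="")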

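As you can see, I passed the codellama-70b model to ChatNVIDIA and gave it only a link to the LeetCode problem. The model does not fetch the page; it presumably recognizes this well-known problem from the URL, and it produces the output below.

Output: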

class Solution:
    def findMedianSortedArrays(self, nums1: List[int], nums2: List[int]) -> float:
        def get_median(x):
            n = len(x)
            return (x[n//2] + x[n//2 - 1])/2 if n % 2 == 0 else x[n//2]

        x = nums1 + nums2
        x.sort()
        return get_median(x)
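The generated class relies on `List` being imported already, as it is on LeetCode. To check the logic locally, here is a sketch that restates the same merge-and-sort approach as a plain function. `find_median_sorted_arrays` is an illustrative name, and the empty-input check is an addition not present in the generated code.

```python
from typing import List


def find_median_sorted_arrays(nums1: List[int], nums2: List[int]) -> float:
    """Merge both arrays, sort them, and return the median of the result."""
    merged = sorted(nums1 + nums2)
    n = len(merged)
    if n == 0:
        raise ValueError("at least one array must be non-empty")
    if n % 2 == 0:
        # Even length: average the two middle values
        return (merged[n // 2 - 1] + merged[n // 2]) / 2
    # Odd length: the middle value is the median
    return float(merged[n // 2])


print(find_median_sorted_arrays([1, 3], [2]))     # 2.0
print(find_median_sorted_arrays([1, 2], [3, 4]))  # 2.5
```

The answers are correct, but sorting the merged list costs O((m+n) log(m+n)), while the LeetCode problem asks for O(log(m+n)). The model produced a working solution rather than the optimal one.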

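Multimodal Capabilities

Multimodal AI refers to generative AI (Gen AI) models that can understand, process, and generate several types of content, including images, video, text, and audio. By drawing on multiple modalities, AI can form a fuller picture of how the world works.

Here is how I see it: we humans have five faculties: hearing, speaking, seeing, smelling, and touching. AI has already achieved the first three, and the last two are still to come on the way to AGI.

Back to NVIDIA NIM, here are the models you can run inference on:

Image to Text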

import requests
from IPython.display import Image

# Download the image and preview it in the notebook
image_url = "https://th.bing.com/th/id/OIP.AvJzGpdSlqXmr1hNmoGiGgHaEo?rs=1&pid=ImgDetMain"
image_content = requests.get(image_url).content
Image(image_content)

from langchain_core.messages import HumanMessage
from langchain_nvidia_ai_endpoints import ChatNVIDIA

# Ask a vision-language model a question about the image
mllm = ChatNVIDIA(model="nvidia/neva-22b")
mllm.invoke(
    [
        HumanMessage(
            content=[
                {"type": "text", "text": "Can you name a movie based on this image"},
                {"type": "image_url", "image_url": {"url": image_url}},
            ]
        )
    ]
)

Output:

Yes, the image of the orange and white clownfish swimming near a coral reef could remind you of the movie "Finding Nemo". The movie features a clownfish named Nemo who gets captured by a diver and separated from his father, Marlin. Marlin goes on a journey to find his son, encountering various marine creatures and obstacles along the way. The image of the clownfish swimming near the coral reef could evoke the sense of adventure and exploration that the movie "Finding Nemo" represents.
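The example above passes a public image URL. For a local file, one option is to embed the bytes as a base64 data URL, the same form the REST examples in this article send. The following is a sketch: `to_data_url` and `image_message_content` are hypothetical helper names, and whether a particular model accepts data URLs in `image_url` is an assumption to verify against its documentation.

```python
import base64


def to_data_url(image_bytes: bytes, mime_type: str = "image/png") -> str:
    """Encode raw image bytes as a base64 data URL."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


def image_message_content(prompt: str, image_bytes: bytes,
                          mime_type: str = "image/png") -> list:
    """Build a text + image_url content list shaped like the one used above."""
    return [
        {"type": "text", "text": prompt},
        {"type": "image_url", "image_url": {"url": to_data_url(image_bytes, mime_type)}},
    ]


# Four placeholder bytes stand in for a real image file here
content = image_message_content("Describe this image", b"\x89PNG")
print(content[1]["image_url"]["url"])
```

The returned list can be passed as the `content` of a `HumanMessage` in place of the URL-based list.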

Image-to-Video Generation

import requests
from google.colab import userdata

invoke_url = "https://ai.api.nvidia.com/v1/genai/stabilityai/stable-video-diffusion"

headers = {
    # 'image_nim_keu' is the name the API key was saved under in Colab secrets
    "Authorization": f"Bearer {userdata.get('image_nim_keu')}",
    "Accept": "application/json",
}

payload = {
    # Input image as a base64 data URL (a tiny placeholder PNG here)
    "image": "data:image/png;base64,iVBORw0KGgoAAAANSUhEUgAAAAEAAAABCAIAAACQd1PeAAAAEElEQVR4nGK6HcwNCAAA//8DTgE8HuxwEQAAAABJRU5ErkJggg==",
    "cfg_scale": 2.5,
    "seed": 0,
}

response = requests.post(invoke_url, headers=headers, json=payload)
response.raise_for_status()
response_body = response.json()
print(response_body)

import base64

# The response carries the base64-encoded video under the "video" key
video_data = base64.b64decode(response_body["video"])

# Filename for the generated video
filename = "generated_video.mp4"

# Write the decoded bytes to the video file
with open(filename, "wb") as f:
    f.write(video_data)

print(f"Video saved as {filename}")
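Both generation endpoints in this article return media as base64 text, so the decode-and-save step can be wrapped in one helper. The following is a sketch: `save_base64_file` is a hypothetical name, and the demo payload is made-up bytes rather than a real API response.

```python
import base64
import binascii
import os
import tempfile


def save_base64_file(b64_payload: str, path: str) -> int:
    """Decode a base64 string, write it to `path`, and return the byte count."""
    try:
        data = base64.b64decode(b64_payload, validate=True)
    except binascii.Error as exc:
        raise ValueError("payload is not valid base64") from exc
    with open(path, "wb") as f:
        f.write(data)
    return len(data)


# Offline demo with a made-up payload instead of a real API response
demo_payload = base64.b64encode(b"not a real video").decode("ascii")
demo_path = os.path.join(tempfile.gettempdir(), "nim_demo.bin")
print(save_base64_file(demo_payload, demo_path))
```

Passing `validate=True` makes a corrupted payload fail loudly instead of silently writing a broken file.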

Text-to-Image Generation

import requests
from google.colab import userdata

invoke_url = "https://ai.api.nvidia.com/v1/genai/stabilityai/sdxl-turbo"

headers = {
    # 'image_nim_keu' is the name the API key was saved under in Colab secrets
    "Authorization": f"Bearer {userdata.get('image_nim_keu')}",
    "Accept": "application/json",
}

payload = {
    "text_prompts": [
        {
            "text": "A motion of titanic sinking in the abyss and helicopters circling the ship",
            "weight": 1,
        }
    ],
    "seed": 0,
    "sampler": "K_EULER_ANCESTRAL",
    "steps": 2,
}

response = requests.post(invoke_url, headers=headers, json=payload)
response.raise_for_status()
response_body = response.json()
print(response_body)

import base64
from IPython.display import Image

# Decode the first returned image artifact and save it to disk
imgdata = base64.b64decode(response_body["artifacts"][0]["base64"])
filename = "some_image.jpg"  # pick a unique filename in real use
with open(filename, "wb") as f:
    f.write(imgdata)

# Display the saved image in the notebook
Image(filename)

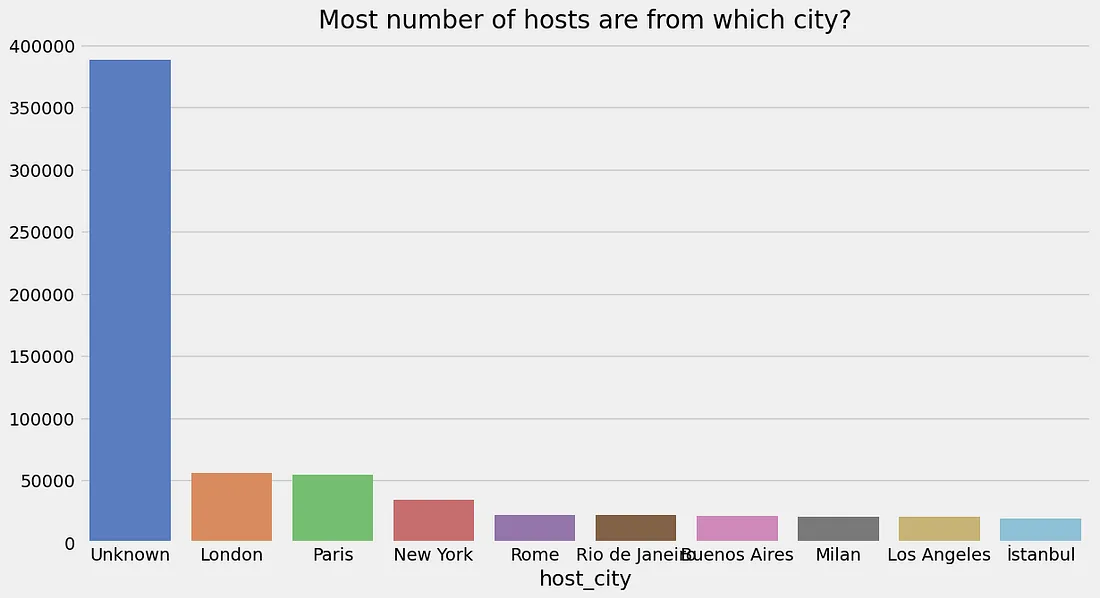

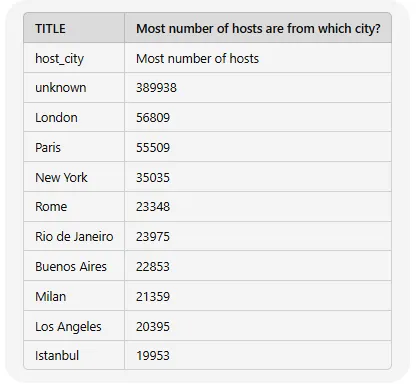

Image to Table

import base64
import requests
from google.colab import userdata

invoke_url = "https://ai.api.nvidia.com/v1/vlm/google/deplot"
stream = False

# Read the chart image and encode it as base64
with open("/content/top10hostcitiies.png", "rb") as f:
    image_b64 = base64.b64encode(f.read()).decode()

assert len(image_b64) < 180_000, \
    "To upload larger images, use the assets API (see docs)"

headers = {
    "Authorization": f"Bearer {userdata.get('image_nim_keu')}",
    "Accept": "text/event-stream" if stream else "application/json",
}

payload = {
    "messages": [
        {
            "role": "user",
            "content": f'Generate underlying data table of the figure below: <img src="data:image/png;base64,{image_b64}" />',
        }
    ],
    "max_tokens": 1024,
    "temperature": 0.20,
    "top_p": 0.20,
    "stream": stream,
}

response = requests.post(invoke_url, headers=headers, json=payload)

# Print streamed lines as they arrive, or the full JSON body otherwise
if stream:
    for line in response.iter_lines():
        if line:
            print(line.decode("utf-8"))
else:
    print(response.json())

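Output:

Building a RAG-Based Streamlit Application with ChatNVIDIA

Alternatively, you can build your own RAG application by following this tutorial:

Set the environment variable in a .env file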

NVIDIA_API_KEY="<YOUR_NVIDIA_API_KEY>"

Install the dependencies

!pip install openai python-dotenv langchain langchain_nvidia_ai_endpoints langchain_community streamlit faiss-cpu pypdf

Here is the complete Python script for the Streamlit application:

import os

import streamlit as st
from langchain_nvidia_ai_endpoints import ChatNVIDIA, NVIDIAEmbeddings  # NVIDIA chat model and embeddings
from langchain_community.document_loaders import PyPDFDirectoryLoader  # PDF document loader
from langchain.text_splitter import RecursiveCharacterTextSplitter  # text splitter for document processing
from langchain.chains.combine_documents import create_stuff_documents_chain  # builds the document chain
from langchain_core.prompts import ChatPromptTemplate  # template for chat prompts
from langchain.chains import create_retrieval_chain  # builds the retrieval chain
from langchain_community.vectorstores import FAISS  # FAISS vector store for embeddings
from dotenv import load_dotenv  # loads environment variables from a .env file

load_dotenv()  # load environment variables from .env

# Fail early with a clear message if the NVIDIA API key is missing
if not os.getenv("NVIDIA_API_KEY"):
    raise RuntimeError("NVIDIA_API_KEY is not set; add it to your .env file")

# Initialize the ChatNVIDIA language model
llm = ChatNVIDIA(model="meta/llama3-70b-instruct")

# Build the vector store once and cache it in the Streamlit session state
def vector_embeddings():
    if "vectors" not in st.session_state:  # skip if the vector store already exists
        st.session_state.embeddings = NVIDIAEmbeddings()  # NVIDIA embedding model
        st.session_state.loader = PyPDFDirectoryLoader("docs")  # reads PDFs from the "docs" directory
        st.session_state.docs = st.session_state.loader.load()  # load the documents
        st.session_state.text_splitter = RecursiveCharacterTextSplitter(chunk_size=700, chunk_overlap=50)  # 700-character chunks, 50 overlapping
        st.session_state.final_documents = st.session_state.text_splitter.split_documents(st.session_state.docs[:30])  # split the first 30 loaded documents
        st.session_state.vectors = FAISS.from_documents(st.session_state.final_documents, st.session_state.embeddings)  # index the chunks in FAISS

st.title("Nvidia NIM demo")  # page title

# Chat prompt template: answer only from the retrieved context
prompt = ChatPromptTemplate.from_template(
    """
Answer the questions based on the provided context only.
Please provide the most accurate response based on the question
<context>
{context}
</context>
Questions: {input}
"""
)

# Input field for the user's question
prompt1 = st.text_input("Enter Your Question From Documents")

# Button that triggers the document embedding step
if st.button("Documents Embedding"):
    vector_embeddings()  # build the vector store
    st.write("Vector Store DB Is Ready")  # confirm that the store is ready

import time  # used to time the response

# If a question was entered, answer it from the indexed documents
if prompt1:
    if "vectors" not in st.session_state:
        # The vector store is only built after the button is clicked
        st.warning("Click 'Documents Embedding' first to build the vector store.")
        st.stop()
    document_chain = create_stuff_documents_chain(llm, prompt)  # stuffs retrieved chunks into the prompt
    retriever = st.session_state.vectors.as_retriever()  # retriever over the FAISS store
    retrieval_chain = create_retrieval_chain(retriever, document_chain)  # retrieval plus answer chain
    start = time.perf_counter()  # wall-clock timer, includes network wait
    response = retrieval_chain.invoke({"input": prompt1})  # run retrieval and generation
    print("Response time:", time.perf_counter() - start)
    st.write(response["answer"])  # display the answer

    # Expander that lists the retrieved chunks
    with st.expander("Document Similarity Search"):
        for i, doc in enumerate(response["context"]):
            st.write(doc.page_content)  # text of each retrieved chunk
            st.write("--------------------------------")  # separator for clarity

Save it as app.py, then run:

streamlit run app.py
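To see what the app's retrieval step does without any API calls, here is a toy sketch of the same idea: split text into overlapping chunks, turn each chunk into a vector, and return the chunk closest to the question. It swaps in simpler techniques throughout: a fixed-size character splitter instead of `RecursiveCharacterTextSplitter`, word-count vectors instead of `NVIDIAEmbeddings`, and a linear scan instead of FAISS. All function names are illustrative.

```python
import math
from collections import Counter
from typing import List


def split_with_overlap(text: str, chunk_size: int, chunk_overlap: int) -> List[str]:
    """Cut text into fixed-size character chunks that overlap by chunk_overlap."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("need 0 <= chunk_overlap < chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last chunk already reaches the end of the text
        start += step
    return chunks


def bag_of_words(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)  # Counter returns 0 for missing words
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query: str, chunks: List[str], k: int = 1) -> List[str]:
    """Return the k chunks most similar to the query."""
    q = bag_of_words(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, bag_of_words(c)), reverse=True)
    return ranked[:k]


docs = [
    "NIM packages models as containers",
    "Streamlit builds simple web apps",
    "FAISS stores embedding vectors",
]
print(split_with_overlap("abcdefghij", 4, 1))
print(top_k("which tool stores vectors", docs))
```

In the real app, the retrieved chunks fill the `{context}` slot of the prompt, so the model answers from the documents rather than from memory alone.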

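Summary

With cloud-hosted APIs and the NVIDIA API catalog, developers can evaluate the latest generative AI models. Developers can also download NIM to a local environment and self-host it for their own applications, which can cut the time and cost of deploying and running models.

With Kubernetes orchestration, NIM can deploy the latest models at scale across an enterprise. NIM integrates with an enterprise's existing infrastructure without requiring specialist expertise or extensive fine-tuning and customization work.

From a business perspective, NIM supports new entrants that want to build AI infrastructure without complex containerization, major changes to company pipelines, or deep knowledge of AI models. NIM helps lower the cost of operating and scaling AI-driven infrastructure.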