GraphRAG: How Indexing Improves Knowledge Graph Performance in RAG

TLDR:

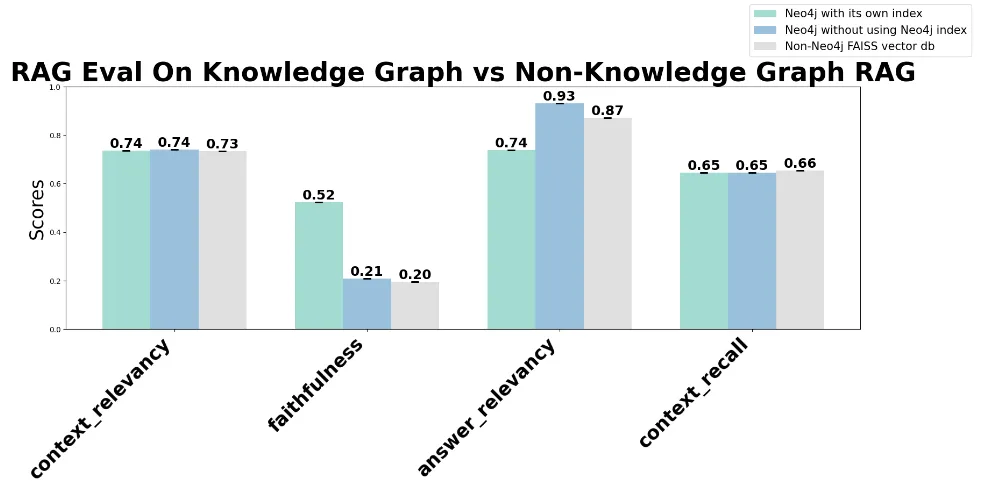

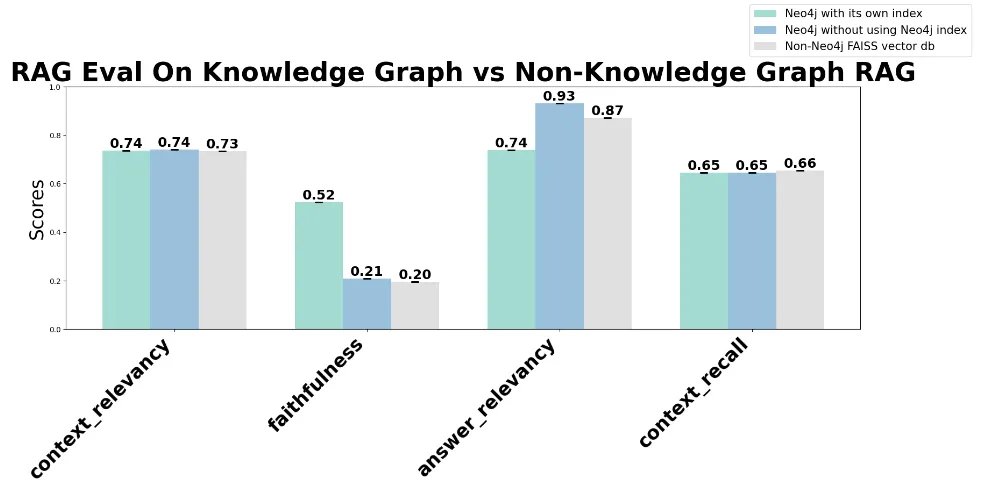

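- Knowledge graphs may not significantly affect context retrieval: every knowledge graph RAG method I examined showed a context relevancy score similar to FAISS (~0.74).

- Neo4j without its own index achieves a higher answer relevancy score (0.93), but an 8% lift over FAISS may not be worth the ROI constraints. Compared with Neo4j WITH index (0.74) and FAISS (0.87), this score suggests a potential benefit for applications that need high-precision answers, in high-value use cases that do not require fine-tuning.

- The faithfulness score improved significantly when using the Neo4j index (0.52) compared with not using the Neo4j index (0.21) or using FAISS (0.20). This reduces fabricated information, which is a benefit, but it still leaves developers with the question of whether GraphRAG is worth the ROI constraints (versus fine-tuning, which may cost slightly more but can deliver higher scores).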
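A lift can be stated as an absolute difference in score points or as a change relative to the baseline, and the two read very differently. A minimal sketch using the faithfulness scores from the bullets above; `absolute_lift` and `relative_lift` are hypothetical helper names, not part of the analysis code:

```python
def absolute_lift(score: float, baseline: float) -> float:
    """Difference between two scores, in score points."""
    return score - baseline


def relative_lift(score: float, baseline: float) -> float:
    """Change expressed as a fraction of the baseline score."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (score - baseline) / baseline


# Faithfulness scores quoted in the bullets above.
neo4j_with_index = 0.52
faiss_baseline = 0.20

print(f"absolute lift: {absolute_lift(neo4j_with_index, faiss_baseline):.2f} points")
print(f"relative lift: {relative_lift(neo4j_with_index, faiss_baseline):.0%}")
```

Stating which of the two is meant avoids ambiguity when a percentage is quoted for a score that is itself on a 0 to 1 scale.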

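The original question that led to my analysis:

If the "GraphRAG" approach is as profound as the hype suggests, when and why would I use a knowledge graph in my RAG application?

I have been trying to understand the practical applications of this technology beyond the currently hyped discussions, so I examined the original Microsoft research paper to get a deeper understanding of their methodology and findings.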

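The MSFT paper claims that GraphRAG lifts two metrics:

Metric 1 - "Comprehensiveness":

"How much detail does the answer provide to cover all aspects and details of the question?"

The level of detail in a response can be influenced by many factors beyond the knowledge graph implementation. The paper's inclusion of a "Directness" metric offers an interesting way to control for response length, but I was surprised that this was one of only two metrics cited for lift, and I was curious about other metrics.

Metric 2 - "Diversity":

"How varied and rich is the answer in providing different perspectives and insights on the question?"

Response diversity is a complex metric that can be influenced by various factors, including audience expectations and prompt design. It is an interesting evaluation approach, but it may need further refinement before it can directly measure the effect of knowledge graphs in RAG.

Even more curious is why the magnitude of the lift is vague:

The paper's official statement on the lift in the two metrics above:

"substantial improvements over a naïve RAG baseline"

In other words, the paper reports that GraphRAG, the newly open-sourced RAG pipeline, shows "substantial improvements" over a "baseline" without stating how large they are.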

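Overview of the analysis method:

- I split one PDF document into identical chunks used for every variant in this analysis (the June 2024 US presidential debate transcript, a suitable RAG opportunity for models created before the debate).

- Loaded the document into Neo4j, using its graph representation of the semantic values found, and created a Neo4j index.

- Created 3 retrievers to use as test variants:

  - One using the Neo4j knowledge graph AND the Neo4j index

  - Another using the Neo4j knowledge graph WITHOUT the Neo4j index

  - A FAISS retriever baseline that loads the same document without any reference to Neo4j

- Developed ground truth Q&A datasets to investigate potential effects of scale on the performance metrics.

- Used RAGAS to evaluate the results (precision and recall) for both retrieval quality and answer quality, which offers a complementary perspective to the metrics used in the Microsoft study.

- Plotted the results below, with caveats about bias.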
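Every variant shares one set of chunks, and the analysis code sets `chunk_size=1000` and `chunk_overlap=200`. As a rough illustration of what those two numbers mean, here is a simplified character-window splitter. It is a stand-in for intuition only: `RecursiveCharacterTextSplitter` also tries to break on separators such as paragraphs and sentences, which this sketch does not do, and `chunk_text` is a hypothetical name.

```python
def chunk_text(text: str, chunk_size: int = 1000, chunk_overlap: int = 200) -> list:
    """Split text into fixed-size character windows that overlap their neighbours."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + chunk_size])
        # Stop once this window has reached the end of the text.
        if start + chunk_size >= len(text):
            break
        start += step
    return chunks


# Synthetic 2,500-character text so the overlap is easy to inspect.
sample_text = "".join(str(i % 10) for i in range(2500))
sample_chunks = chunk_text(sample_text)
print([len(chunk) for chunk in sample_chunks])
```

The overlap means the last 200 characters of one chunk reappear at the start of the next, so a sentence cut at a boundary is still intact in at least one chunk.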

Analysis:

A quick run through the code below. I used langchain, OpenAI for embeddings (as well as for evaluation and retrieval), Neo4j, and RAGAS.

# Ignore warnings
import warnings
warnings.filterwarnings('ignore')

# Import packages
import os
import asyncio
import random
from collections import OrderedDict
from typing import List, Dict, Union

import nest_asyncio
nest_asyncio.apply()  # allow nested event loops (e.g. inside a notebook)

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import openai
from dotenv import load_dotenv
from scipy import stats
from datasets import Dataset
from neo4j import GraphDatabase

from langchain_openai import ChatOpenAI, OpenAI, OpenAIEmbeddings
from langchain_community.document_loaders import PyPDFLoader
from langchain_community.vectorstores import Neo4jVector, FAISS
from langchain_text_splitters import RecursiveCharacterTextSplitter, TokenTextSplitter
from langchain_core.documents import Document
from langchain_core.retrievers import BaseRetriever
from langchain_core.runnables import RunnablePassthrough
from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import PromptTemplate, ChatPromptTemplate

from ragas import evaluate
from ragas.metrics import (
    faithfulness,
    answer_relevancy,
    context_relevancy,
    context_recall,
)

Added the OpenAI API key from OAI and the neo4j authentication from Neo4j.

# Set up API keys
load_dotenv()
openai_api_key = os.getenv("OPENAI_API_KEY")
openai.api_key = openai_api_key
neo4j_url = os.getenv("NEO4J_URL")
neo4j_user = os.getenv("NEO4J_USER")
neo4j_password = os.getenv("NEO4J_PASSWORD")

# Load and process the PDF
pdf_path = "debate_transcript.pdf"
loader = PyPDFLoader(pdf_path)
documents = loader.load()
# Same chunking for every variant, comparable to Neo4j
text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)
texts = text_splitter.split_documents(documents)

# Set up Neo4j connection
driver = GraphDatabase.driver(neo4j_url, auth=(neo4j_user, neo4j_password))

Loaded my own graph representation of the document into Neo4j using Cypher and created a Neo4j index.

# Create the vector index in Neo4j (run in its own transaction, alongside the graph creation below)
def create_vector_index(tx):
    query = """
    CREATE VECTOR INDEX pdf_content_index IF NOT EXISTS
    FOR (c:Content)
    ON (c.embedding)
    OPTIONS {indexConfig: {
        `vector.dimensions`: 1536,
        `vector.similarity_function`: 'cosine'
    }}
    """
    tx.run(query)

# Function for Neo4j graph creation
def create_document_graph(tx, texts, pdf_name):
    # Builds Document -[:HAS_CONTENT]-> Content -[:CONTAINS]-> Word.
    # Each Content node stores text and page (no embedding property is written here).
    query = """
    MERGE (d:Document {name: $pdf_name})
    WITH d
    UNWIND $texts AS text
    CREATE (c:Content {text: text.page_content, page: text.metadata.page})
    CREATE (d)-[:HAS_CONTENT]->(c)
    WITH c, text.page_content AS content
    UNWIND split(content, ' ') AS word
    MERGE (w:Word {value: toLower(word)})
    MERGE (c)-[:CONTAINS]->(w)
    """
    tx.run(query, pdf_name=pdf_name, texts=[
        {"page_content": t.page_content, "metadata": t.metadata}
        for t in texts
    ])

# Create graph index and structure
with driver.session() as session:
    session.execute_write(create_vector_index)
    session.execute_write(create_document_graph, texts, pdf_path)

# Close driver
driver.close()
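To make the shape of that graph concrete, here is a rough in-memory mirror of what the Cypher query builds: one document, one content node per chunk (`CREATE`), and a shared set of lower-cased words (`MERGE`). This is an illustration under my own assumptions, not how Neo4j stores anything; `build_document_graph` and the sample chunks are hypothetical.

```python
from collections import defaultdict


def build_document_graph(pdf_name, chunks):
    """Mirror the Document -> Content -> Word structure with plain Python containers."""
    graph = {
        "document": pdf_name,
        "contents": [],                # one entry per chunk, like CREATE (c:Content)
        "words": set(),                # unique lower-cased words, like MERGE (w:Word)
        "contains": defaultdict(set),  # content position -> words, like (c)-[:CONTAINS]->(w)
    }
    for position, chunk in enumerate(chunks):
        graph["contents"].append({
            "text": chunk["page_content"],
            "page": chunk["metadata"].get("page"),
        })
        # Split on single spaces, as split(content, ' ') does in the query.
        for word in chunk["page_content"].split(" "):
            value = word.lower()
            graph["words"].add(value)
            graph["contains"][position].add(value)
    return graph


# Hypothetical chunks in the same dict shape that is passed to the query.
sample_chunks = [
    {"page_content": "The economy The debate", "metadata": {"page": 0}},
    {"page_content": "the border", "metadata": {"page": 1}},
]
sample_graph = build_document_graph("debate_transcript.pdf", sample_chunks)
print(sorted(sample_graph["words"]))
```

Because words are merged rather than created, two chunks that share a word end up connected through the same word node, which is the only cross-chunk link this graph contains.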

Set up OpenAI for retrieval and embeddings.

# Define model for retrieval
llm = ChatOpenAI(model_name="gpt-3.5-turbo", openai_api_key=openai_api_key)

# Set up embeddings model with the default OpenAI embeddings
embeddings = OpenAIEmbeddings(openai_api_key=openai_api_key)

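Set up 3 retrievers to test:

- Neo4j with reference to its index

- Neo4j without reference to its index, so it creates embeddings from Neo4j as the data is stored

- FAISS, which builds a non-Neo4j vector database on the same chunked document as a baseline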

# Neo4j retriever setup using Neo4j, OAI embeddings model using Neo4j index
neo4j_vector_store = Neo4jVector.from_existing_index(
    embeddings,
    url=neo4j_url,
    username=neo4j_user,
    password=neo4j_password,
    index_name="pdf_content_index",
    node_label="Content",
    text_node_property="text",
    embedding_node_property="embedding"
)
neo4j_retriever = neo4j_vector_store.as_retriever(search_kwargs={"k": 2})

# OpenAI retriever setup using Neo4j, OAI embeddings model NOT using Neo4j index
openai_vector_store = Neo4jVector.from_documents(
    texts,
    embeddings,
    url=neo4j_url,
    username=neo4j_user,
    password=neo4j_password
)
openai_retriever = openai_vector_store.as_retriever(search_kwargs={"k": 2})

# FAISS retriever setup - OAI embeddings model baseline for non Neo4j vector store touchpoint
faiss_vector_store = FAISS.from_documents(texts, embeddings)
faiss_retriever = faiss_vector_store.as_retriever(search_kwargs={"k": 2})
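Each retriever returns the top `k = 2` chunks by vector similarity, and the Neo4j index above is configured with cosine similarity. A brute-force version of that ranking, as a sketch with tiny made-up vectors (real embeddings here have 1536 dimensions, and `top_k` is a hypothetical helper, not a library call):

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec, doc_vecs, k=2):
    """Return the ids of the k stored vectors most similar to the query."""
    scored = [(cosine_similarity(query_vec, vec), doc_id) for doc_id, vec in doc_vecs.items()]
    scored.sort(reverse=True)
    return [doc_id for _, doc_id in scored[:k]]


# Made-up 3-dimensional "embeddings" for three chunks.
doc_vecs = {
    "chunk_a": [1.0, 0.0, 0.0],
    "chunk_b": [0.9, 0.1, 0.0],
    "chunk_c": [0.0, 1.0, 0.0],
}
nearest = top_k([1.0, 0.0, 0.0], doc_vecs, k=2)
print(nearest)
```

A vector index exists to avoid this full scan, but the ranking it approximates is the same idea.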

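Created ground truth from the PDF for the RAGAS evaluation (N = 100).

I used an OpenAI model for the ground truth, but OpenAI models are also the default for retrieval in all variants, so creating the ground truth introduces no real bias (outside of OpenAI training data!).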

# Move to N = 100 for more Q&A ground truth
def create_ground_truth2(texts: List[Union[str, Document]], num_questions: int = 100) -> List[Dict]:
    llm_ground_truth = ChatOpenAI(model_name="gpt-3.5-turbo", temperature=0.7)

    # Function to extract text from str or Document
    def get_text(item):
        if isinstance(item, Document):
            return item.page_content
        return item

    # Split long texts into smaller chunks
    text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)
    all_splits = text_splitter.split_text(' '.join(get_text(doc) for doc in texts))

    ground_truth2 = []

    question_prompt = ChatPromptTemplate.from_template(
        "Given the following text, generate {num_questions} diverse and specific questions that can be answered based on the information in the text. "
        "Provide the questions as a numbered list.\n\nText: {text}\n\nQuestions:"
    )

    # Generate 3 candidate questions per chunk
    all_questions = []
    for split in all_splits:
        response = llm_ground_truth.invoke(question_prompt.format_messages(num_questions=3, text=split))
        questions = [q for q in response.content.strip().split('\n') if q.strip()]
        # Strip the leading list number ("1. ") from each line
        all_questions.extend([q.split('. ', 1)[1] if '. ' in q else q for q in questions])

    random.shuffle(all_questions)
    selected_questions = all_questions[:num_questions]

    llm = ChatOpenAI(temperature=0)

    answer_prompt = ChatPromptTemplate.from_template(
        "Given the following question, provide a concise and accurate answer based on the information available. "
        "If the answer is not directly available, respond with 'Information not available in the given context.'\n\nQuestion: {question}\n\nAnswer:"
    )
    context_prompt = ChatPromptTemplate.from_template(
        "Given the following question and answer, provide a brief, relevant context that supports this answer. "
        "If no relevant context is available, respond with 'No relevant context available.'\n\n"
        "Question: {question}\nAnswer: {answer}\n\nRelevant context:"
    )

    # Answer and context are generated from the question alone; the source text is not passed in
    for question in selected_questions:
        answer_response = llm.invoke(answer_prompt.format_messages(question=question))
        answer = answer_response.content.strip()

        context_response = llm.invoke(context_prompt.format_messages(question=question, answer=answer))
        context = context_response.content.strip()

        ground_truth2.append({
            "question": question,
            "answer": answer,
            "context": context,
        })

    return ground_truth2

ground_truth2 = create_ground_truth2(texts)
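The question-generation step relies on the model returning a numbered list and then strips the numbering from each line. A slightly more defensive parser, as a sketch: it skips blank lines and only removes a leading `1.` or `1)` marker, so a period later in the question is left alone. `parse_numbered_questions` is a hypothetical helper, not part of the analysis code.

```python
import re
from typing import List

# Matches a leading list marker such as "1. " or "12) ".
_NUMBERING = re.compile(r"^\d+\s*[.)]\s+")


def parse_numbered_questions(raw: str) -> List[str]:
    """Turn a numbered-list response into a clean list of question strings."""
    questions = []
    for line in raw.splitlines():
        line = line.strip()
        if not line:
            continue  # ignore blank lines between items
        questions.append(_NUMBERING.sub("", line, count=1))
    return questions


# Hypothetical model output with mixed numbering styles and a blank line.
raw_response = "1. What was said about inflation?\n\n2) Who spoke first?\n3. Why now. Really?"
parsed = parse_numbered_questions(raw_response)
print(parsed)
```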

Created a RAG chain for each retrieval method.

# RAG chain works for each retrieval method
def create_rag_chain(retriever):
    template = """Answer the question based on the following context:
{context}

Question: {question}
Answer:"""
    prompt = PromptTemplate.from_template(template)

    return (
        {"context": retriever, "question": RunnablePassthrough()}
        | prompt
        | llm
        | StrOutputParser()
    )

# Calling the function for each method
neo4j_rag_chain = create_rag_chain(neo4j_retriever)
faiss_rag_chain = create_rag_chain(faiss_retriever)
openai_rag_chain = create_rag_chain(openai_retriever)
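Stripped of the LangChain plumbing, the chain fills one prompt template with the retrieved chunks and the question, then sends the result to the model. A plain-string sketch of that fill step; joining the chunk texts with blank lines is my own formatting assumption (the chain above passes the retriever output to the prompt directly), and `format_rag_prompt` is a hypothetical name.

```python
TEMPLATE = """Answer the question based on the following context:
{context}

Question: {question}
Answer:"""


def format_rag_prompt(question, retrieved_chunks):
    """Fill the prompt template with retrieved chunk texts and the user question."""
    context = "\n\n".join(retrieved_chunks)
    return TEMPLATE.format(context=context, question=question)


# Hypothetical chunk texts standing in for the k = 2 retrieved chunks.
example_prompt = format_rag_prompt(
    "Who spoke first?",
    ["First retrieved chunk.", "Second retrieved chunk."],
)
print(example_prompt)
```

Since the template and the model are the same for all three chains, any difference in answers comes from what lands in `{context}`.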

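Then evaluated each RAG chain with all 4 RAGAS metrics (context relevancy and context recall evaluate RAG retrieval, while answer relevancy and faithfulness evaluate the full prompt response against the ground truth).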

# Eval function for RAGAS at N = 100
async def evaluate_rag_async2(rag_chain, ground_truth2, name):
    # Keep only the first 500-token piece of each string
    splitter = TokenTextSplitter(chunk_size=500, chunk_overlap=50)
    generated_answers = []

    for item in ground_truth2:
        question = splitter.split_text(item["question"])[0]
        try:
            answer = await rag_chain.ainvoke(question)
        except AttributeError:
            answer = rag_chain.invoke(question)

        truncated_answer = splitter.split_text(str(answer))[0]
        truncated_context = splitter.split_text(item["context"])[0]
        truncated_ground_truth = splitter.split_text(item["answer"])[0]

        generated_answers.append({
            "question": question,
            "answer": truncated_answer,
            "contexts": [truncated_context],  # context taken from the ground-truth item
            "ground_truth": truncated_ground_truth
        })

    dataset = Dataset.from_pandas(pd.DataFrame(generated_answers))

    result = evaluate(
        dataset,
        metrics=[
            context_relevancy,
            faithfulness,
            answer_relevancy,
            context_recall,
        ]
    )
    return {name: result}

async def run_evaluations(rag_chains, ground_truth2):
    results = {}
    for name, chain in rag_chains.items():
        # Fixed: call the function defined above (evaluate_rag_async2)
        result = await evaluate_rag_async2(chain, ground_truth2, name)
        results.update(result)
    return results

def main(ground_truth2, rag_chains):
    # Get event loop
    loop = asyncio.get_event_loop()
    # Run evaluations
    results = loop.run_until_complete(run_evaluations(rag_chains, ground_truth2))
    return results

# Run main function for N = 100
if __name__ == "__main__":
    rag_chains = {
        "Neo4j": neo4j_rag_chain,
        "FAISS": faiss_rag_chain,
        "OpenAI": openai_rag_chain
    }

    results = main(ground_truth2, rag_chains)

    for name, result in results.items():
        print(f"Results for {name}:")
        print(result)
        print()
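For intuition on the faithfulness number: as I understand the RAGAS documentation, the answer is broken into individual claims, each claim is checked against the supplied context, and the score is the share of claims that the context supports. In RAGAS an LLM does both the claim extraction and the checking; the sketch below assumes those verdicts already exist and only shows the final ratio. `faithfulness_ratio` is a hypothetical helper, not a RAGAS function.

```python
def faithfulness_ratio(claim_supported):
    """Share of answer claims that were judged to be supported by the context."""
    if not claim_supported:
        raise ValueError("at least one claim verdict is required")
    supported = sum(1 for verdict in claim_supported if verdict)
    return supported / len(claim_supported)


# Hypothetical verdicts for an answer containing four claims.
example_verdicts = [True, False, True, False]
example_score = faithfulness_ratio(example_verdicts)
print(f"faithfulness: {example_score:.2f}")
```

Read this way, a faithfulness of about 0.5 means roughly half of what the answer asserts could be traced back to the context it was given.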

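Developed a function to calculate 95% confidence intervals, which gives a measure of uncertainty for the similarity between the LLM retrievals and the ground truth. However, since each result was already a single value, I did not use the function; instead I confirmed the directional differences when I saw the same delta magnitudes and pattern after rerunning multiple times.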

# Bootstrap CI - low sample size due to Q&A constraint at 100
def bootstrap_ci(data, num_bootstraps=1000, ci=0.95):
    bootstrapped_means = [
        np.mean(np.random.choice(data, size=len(data), replace=True))
        for _ in range(num_bootstraps)
    ]
    return np.percentile(bootstrapped_means, [(1 - ci) / 2 * 100, (1 + ci) / 2 * 100])
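A bootstrap interval needs a sample to resample from, which is why a single aggregate score per metric is not enough. As a sketch of how it could be applied, assuming per-question scores were available: the values below are made up for illustration, and `bootstrap_ci_mean` is a standard-library variant written for this example rather than the function above.

```python
import random
import statistics


def bootstrap_ci_mean(scores, num_bootstraps=1000, ci=0.95, seed=0):
    """Percentile bootstrap interval for the mean of a list of scores."""
    rng = random.Random(seed)  # fixed seed so reruns give the same interval
    n = len(scores)
    means = sorted(
        statistics.fmean(rng.choices(scores, k=n))  # resample with replacement
        for _ in range(num_bootstraps)
    )
    lower_idx = int((1 - ci) / 2 * num_bootstraps)
    upper_idx = int((1 + ci) / 2 * num_bootstraps) - 1
    return means[lower_idx], means[upper_idx]


# Hypothetical per-question faithfulness scores (not results from this analysis).
per_question_scores = [0.4, 0.6, 0.5, 0.7, 0.3, 0.55, 0.45, 0.65, 0.5, 0.6]
ci_low, ci_high = bootstrap_ci_mean(per_question_scores)
print(f"mean={statistics.fmean(per_question_scores):.3f}, 95% CI=({ci_low:.3f}, {ci_high:.3f})")
```

If the intervals of two retrievers overlap heavily, the difference between their aggregate scores should be read with caution.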

Created a function to plot bar charts, initially with estimated error.

# Function to plot
def plot_results(results):
    # Map result keys to display labels, in plotting order
    name_mapping = OrderedDict([
        ('Neo4j', 'Neo4j with its own index'),
        ('OpenAI', 'Neo4j without using Neo4j index'),
        ('FAISS', 'Non-Neo4j FAISS vector db'),
    ])
    ordered_results = OrderedDict(
        (label, results[key]) for key, label in name_mapping.items()
    )

    metrics = list(next(iter(ordered_results.values())).keys())
    chains = list(ordered_results.keys())

    fig, ax = plt.subplots(figsize=(18, 10))
    bar_width = 0.25
    opacity = 0.8
    index = np.arange(len(metrics))

    for i, chain in enumerate(chains):
        means = [ordered_results[chain][metric] for metric in metrics]
        # Rough error estimate: half the range of this chain's scores across metrics
        all_values = list(ordered_results[chain].values())
        error = (max(all_values) - min(all_values)) / 2
        yerr = [error] * len(means)

        bars = ax.bar(index + i * bar_width, means, bar_width,
                      alpha=opacity,
                      color=plt.cm.Set3(i / len(chains)),
                      label=chain,
                      yerr=yerr,
                      capsize=5)

        # Label each bar with its score, 2 decimal places
        for bar in bars:
            height = bar.get_height()
            ax.text(bar.get_x() + bar.get_width() / 2., height,
                    f'{height:.2f}',
                    ha='center', va='bottom', rotation=0, fontsize=18, fontweight='bold')

    ax.set_xlabel('RAGAS Metrics', fontsize=16)
    ax.set_ylabel('Scores', fontsize=16)
    ax.set_title('RAGAS Evaluation Results with Error Estimates', fontsize=26, fontweight='bold')
    ax.set_xticks(index + bar_width * (len(chains) - 1) / 2)
    ax.set_xticklabels(metrics, rotation=45, ha='right', fontsize=14, fontweight='bold')
    ax.legend(loc='upper right', fontsize=14, bbox_to_anchor=(1, 1), ncol=1)
    plt.ylim(0, 1)
    plt.tight_layout()
    plt.show()

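Finally, plotted these metrics.

To keep the comparison focused, key parameters such as document chunking, embedding model, and retrieval model were held constant across experiments. I would normally plot CIs, but I am comfortable with this pattern after seeing it hold across multiple reruns (this assumes uniformity of the data). The caveat is that the results are still pending a statistical test of the differences.

On rerunning, the pattern of relative scores consistently showed negligible variability (surprisingly). After running the analysis a few extra times by accident because of resource time-outs, the pattern stayed consistent, so I am generally fine with this result.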

# Plot

plot_results(results)
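The rerun check described above can be made explicit by recording each metric per run and summarizing the spread. A minimal sketch, assuming the score from each rerun was saved; the run values are hypothetical and `summarize_runs` is my own helper name.

```python
import statistics


def summarize_runs(scores):
    """Mean, sample standard deviation, and range of one metric across reruns."""
    if len(scores) < 2:
        raise ValueError("need at least two runs to measure variability")
    return {
        "mean": statistics.fmean(scores),
        "stdev": statistics.stdev(scores),
        "range": max(scores) - min(scores),
    }


# Hypothetical faithfulness scores from three reruns of one retriever.
faithfulness_runs = [0.52, 0.51, 0.53]
run_summary = summarize_runs(faithfulness_runs)
print({name: round(value, 3) for name, value in run_summary.items()})
```

If the range across reruns is small compared with the gap between two retrievers, the ordering of those retrievers is unlikely to be run-to-run noise, although this is still not a substitute for a confidence interval.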

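Summary:

All methods showed similar context relevancy, which suggests that knowledge graphs in RAG do not benefit context retrieval, but Neo4j with its own index significantly improved faithfulness. Note that this is still pending CIs and balancing for bias.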