Automating License Plate Detection and OCR with YOLOv8 and Streamlit

Published on July 22, 2024 by alex

In this project, we use YOLOv8 to automate license plate detection and then apply optical character recognition (OCR) to read the detected plates.

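Step 1: Download and extract the dataset

First, we need to download the dataset from the given URL. We use the gdown library to download the ZIP file to the working directory and then extract its contents, including any ZIP files nested inside it. The following script handles this: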

import os
import zipfile

import gdown

# URL of the dataset archive (a Roboflow export link in this example)
url = 'https://universe.roboflow.com/ds/2uTKfYPXAl?key=6dageyjgDS'

# Destination path for the downloaded archive
file_path = 'dataset.zip'

# Download the file
gdown.download(url, file_path, quiet=False)


def extract_zip(file_path, extract_to):
    """Extract a ZIP archive into the given directory."""
    with zipfile.ZipFile(file_path, 'r') as zip_ref:
        zip_ref.extractall(extract_to)


# Check that the downloaded file is a ZIP archive
if zipfile.is_zipfile(file_path):
    # Extract the contents of the main ZIP file
    main_extract_path = 'dataset'
    extract_zip(file_path, main_extract_path)

    # Remove the main ZIP file after extraction
    os.remove(file_path)

    # Walk the top level of the extraction folder
    for item in os.listdir(main_extract_path):
        item_path = os.path.join(main_extract_path, item)

        # If the item is itself a ZIP file, extract it into the same folder
        if zipfile.is_zipfile(item_path):
            extract_zip(item_path, main_extract_path)
            os.remove(item_path)
else:
    print("The downloaded file is not a zip file.")
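Before moving on, it can help to confirm what the archive actually contained. The following is a minimal sketch, assuming the export unpacks into `dataset/<split>/images` and `dataset/<split>/labels` (the layout Step 3 expects). `summarize_dataset` is a hypothetical helper and is not part of the original project.

```python
import os


def summarize_dataset(root, splits=("train", "valid", "test")):
    """Count files in <root>/<split>/images and <root>/<split>/labels.

    Assumes the folder layout that Step 3 expects; a missing folder counts as 0.
    """
    summary = {}
    for split in splits:
        counts = {}
        for sub in ("images", "labels"):
            folder = os.path.join(root, split, sub)
            counts[sub] = len(os.listdir(folder)) if os.path.isdir(folder) else 0
        summary[split] = counts
    return summary


# Print one line per split for the folder created by the download script
for split, counts in summarize_dataset("dataset").items():
    print(split, counts)
```

If a split reports zero images, the archive probably uses a different folder layout, and the paths in the later steps would need adjusting.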

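Step 2: Prepare the data configuration

Next, we create a YAML configuration file that defines the dataset paths and classes. The absolute paths assume the notebook runs from /content, as in Google Colab: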

import yaml

# Define the data configuration
data_config = {
    'train': '/content/data/images/train',
    'val': '/content/data/images/valid',
    'nc': 1,  # number of classes
    'names': ['license_plate']
}

# Write the configuration to a YAML file
with open('data.yaml', 'w') as file:
    yaml.dump(data_config, file)
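A mismatch between `nc` and `names`, or a path that does not exist, typically only surfaces once training starts. The sketch below checks the same dictionary up front; `check_data_config` is a hypothetical helper, and the directory check is only meaningful after Step 3 has created the `data` folders.

```python
import os


def check_data_config(config):
    """Return a list of problems found in a YOLO data config dict."""
    problems = []
    names = config.get("names", [])
    # The class count must match the number of class names
    if config.get("nc") != len(names):
        problems.append(f"nc={config.get('nc')} but {len(names)} class name(s) given")
    # Both the training and validation image folders must exist
    for key in ("train", "val"):
        path = config.get(key)
        if not path:
            problems.append(f"missing '{key}' entry")
        elif not os.path.isdir(path):
            problems.append(f"'{key}' directory not found: {path}")
    return problems
```

Calling `check_data_config(data_config)` returns an empty list when nothing looks wrong, so it can be used as a simple guard before launching training.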

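Step 3: Organize the dataset

We copy the dataset into training, validation, and test directories, using a thread pool so that the three splits are processed in parallel: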

import os
import shutil
from concurrent.futures import ThreadPoolExecutor


def copy_files(src_dir, dst_dir):
    """Copy files from src_dir to dst_dir."""
    os.makedirs(dst_dir, exist_ok=True)
    for file in os.listdir(src_dir):
        shutil.copy(os.path.join(src_dir, file), os.path.join(dst_dir, file))


def process_folder(inputPar, outPar, folder):
    """Process 'images' and 'labels' subfolders within the given folder."""
    inputChild = os.path.join(inputPar, folder)
    for subfldr in ["images", "labels"]:
        inputSubChild = os.path.join(inputChild, subfldr)
        # dataset/<split>/<images|labels> becomes data/<images|labels>/<split>
        outChild = os.path.join(outPar, subfldr, folder)
        if os.path.exists(inputSubChild):
            copy_files(inputSubChild, outChild)


def main():
    cPath = os.getcwd()
    inputPar = os.path.join(cPath, "dataset")
    outPar = os.path.join(cPath, "data")
    folders = ["train", "valid", "test"]

    with ThreadPoolExecutor() as executor:
        # list() consumes the results so that any exception raised in a
        # worker thread is re-raised here instead of being silently dropped
        list(executor.map(lambda folder: process_folder(inputPar, outPar, folder), folders))


if __name__ == "__main__":
    main()
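The copy produces `data/images/<split>` and `data/labels/<split>`. Ultralytics looks for each label file by swapping `images` for `labels` in the image path and using a `.txt` extension, so a quick pairing check can catch files that went missing during the copy. The following is a sketch under that assumption; `find_unlabeled` and the extension list are hypothetical.

```python
import os

# Image extensions considered by this check (an assumption, extend as needed)
IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".bmp"}


def find_unlabeled(data_root, split):
    """List images in data_root/images/<split> with no matching .txt label."""
    image_dir = os.path.join(data_root, "images", split)
    label_dir = os.path.join(data_root, "labels", split)
    if not os.path.isdir(image_dir):
        return []
    missing = []
    for name in sorted(os.listdir(image_dir)):
        stem, ext = os.path.splitext(name)
        if ext.lower() not in IMAGE_EXTS:
            continue  # skip anything that is not an image
        if not os.path.isfile(os.path.join(label_dir, stem + ".txt")):
            missing.append(name)
    return missing
```

For example, `find_unlabeled("data", "train")` lists the training images that have no label file. An image without labels is usually treated as a background sample, so a non-empty result is not necessarily an error, but a long list suggests the copy or the export went wrong.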

Step 4: Train the YOLOv8 model

With the dataset organized, we can train the YOLOv8 model. First, install the ultralytics package:

!pip install ultralytics

Then train the model with the following command:

!yolo task=detect mode=train model=yolov8s.pt data=/content/data.yaml epochs=25 plots=True
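The same training run can also be started from Python instead of the CLI. The sketch below mirrors the arguments of the command above using the Ultralytics `YOLO` class; the wrapper function `train_plate_detector` and the `TRAIN_ARGS` dictionary are hypothetical names introduced here for illustration.

```python
# Settings mirrored from the CLI command above
TRAIN_ARGS = {
    "data": "/content/data.yaml",
    "epochs": 25,
    "plots": True,
}


def train_plate_detector(weights="yolov8s.pt", **overrides):
    """Train a detector through the Ultralytics Python API and return the results."""
    # Imported lazily so this module can be loaded without ultralytics installed
    from ultralytics import YOLO

    args = {**TRAIN_ARGS, **overrides}
    model = YOLO(weights)  # loads the pretrained weights, downloading them if needed
    return model.train(**args)
```

Calling `train_plate_detector()` reproduces the CLI settings, while `train_plate_detector(epochs=50)` overrides a single value. Keeping the settings in one dictionary makes it easier to compare runs.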

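Step 5: Run inference

Finally, we run inference on a test image and crop the detected license plates so that OCR can be applied to them. In the snippet below the label text is a placeholder; the OCR call itself is added in Step 6: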

import cv2
import matplotlib.pyplot as plt
from ultralytics import YOLO


def imshow(title="Image", image=None, size=16):
    """Display a BGR image with matplotlib, preserving its aspect ratio."""
    h, w = image.shape[:2]
    aspect_ratio = w / h
    plt.figure(figsize=(size * aspect_ratio, size))
    plt.imshow(cv2.cvtColor(image, cv2.COLOR_BGR2RGB))
    plt.title(title)
    plt.show()


# Load the weights produced by the training run
model = YOLO('/content/runs/detect/train/weights/best.pt')

# Path to the image
image_path = '/content/data/images/test/0002a5b67e5f0909_jpg.rf.07ca41e79eb878b14032f650f34d0967.jpg'

# Perform inference
results = model(image_path)

# Extract bounding boxes and crop license plates
for result in results:
    boxes = result.boxes.xyxy.cpu().numpy()  # Get bounding boxes
    img = cv2.imread(image_path)

    for box in boxes:
        print(box)
        x1, y1, x2, y2 = map(int, box)
        plate = img[y1:y2, x1:x2]  # cropped plate region, the input for OCR

        # Placeholder label; Step 6 replaces it with the OCR result
        label = 'License Plate Text'

        # Draw the bounding box and a filled background behind the label
        cv2.rectangle(img, (x1, y1), (x2, y2), (0, 0, 0), 1)
        text_size = cv2.getTextSize(label, cv2.FONT_HERSHEY_SIMPLEX, 0.3, 1)[0]
        cv2.rectangle(img, (x1, y1 - text_size[1]), (x1 + text_size[0], y1), (255, 255, 255), -1)
        cv2.putText(img, label, (x1, y1), cv2.FONT_HERSHEY_SIMPLEX, 0.3, (0, 0, 0), 1)

    imshow('License Plate', img)
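Detector boxes tend to fit the plate tightly, and a small margin around the crop may help the OCR stage see complete characters. The following is a minimal sketch of that idea; `pad_box` and its default margin are assumptions and do not appear in the original code.

```python
def pad_box(box, img_w, img_h, pad=0.05):
    """Grow an (x1, y1, x2, y2) box by `pad` of its size, clamped to the image."""
    x1, y1, x2, y2 = box
    dx = (x2 - x1) * pad  # horizontal margin in pixels
    dy = (y2 - y1) * pad  # vertical margin in pixels
    return (
        max(0, int(x1 - dx)),
        max(0, int(y1 - dy)),
        min(img_w, int(x2 + dx)),
        min(img_h, int(y2 + dy)),
    )
```

In the loop above it could be used as `x1, y1, x2, y2 = pad_box(box, img.shape[1], img.shape[0])` before cropping. Whether padding improves recognition depends on the images, so it is worth comparing results with and without it.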

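Step 6: Build the Streamlit web application

To make the license plate recognition system interactive and easy to use, we build a web application with Streamlit. The following code shows how to integrate YOLOv8 detection and PaddleOCR in a Streamlit app: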

import streamlit as st
import cv2
import os
import numpy as np
from PIL import Image, ImageDraw, ImageFont
from ultralytics import YOLO
from paddleocr import PaddleOCR

# Set the environment variable to avoid an OpenMP runtime conflict
os.environ["KMP_DUPLICATE_LIB_OK"] = "TRUE"

# Initialize the English PaddleOCR recognizer
ocr = PaddleOCR(lang='en', rec_algorithm='CRNN')

# Load the YOLOv8 model
model = YOLO('best.pt')  # Replace with your specific YOLOv8 model path if needed


def enhance_contrast(image):
    """Apply CLAHE to the lightness channel of a BGR image."""
    lab = cv2.cvtColor(image, cv2.COLOR_BGR2LAB)
    l, a, b = cv2.split(lab)
    clahe = cv2.createCLAHE(clipLimit=3.0, tileGridSize=(8, 8))
    cl = clahe.apply(l)
    limg = cv2.merge((cl, a, b))
    enhanced_image = cv2.cvtColor(limg, cv2.COLOR_LAB2BGR)
    return enhanced_image


def adaptive_threshold(image):
    """Binarize a BGR image with Gaussian adaptive thresholding."""
    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    binary_image = cv2.adaptiveThreshold(gray, 255, cv2.ADAPTIVE_THRESH_GAUSSIAN_C,
                                         cv2.THRESH_BINARY, 11, 2)
    return binary_image


def load_image(image_file):
    """Open the upload as an RGB PIL image (drops any alpha channel)."""
    img = Image.open(image_file).convert('RGB')
    return img


def predict_license_plate(image):
    # Convert the PIL image (RGB) to a BGR NumPy array, the channel order
    # Ultralytics expects for array inputs
    image_np = cv2.cvtColor(np.array(image), cv2.COLOR_RGB2BGR)

    # Perform YOLOv8 detection
    results = model.predict(image_np)

    annotations = []
    for result in results:
        for detection in result.boxes:
            bbox = detection.xyxy[0].tolist()  # Bounding box coordinates
            confidence = detection.conf[0].item()  # Confidence score
            class_id = detection.cls[0].item()  # Class ID

            # For this example, we assume class_id = 0 corresponds to license plates
            if class_id == 0:
                annotations.append({
                    'bbox': bbox,
                    'confidence': confidence
                })
    return annotations


def draw_annotations(image, annotations):
    draw = ImageDraw.Draw(image)
    for ann in annotations:
        bbox = ann['bbox']
        confidence = ann['confidence']

        # Draw bounding box
        draw.rectangle([(bbox[0], bbox[1]), (bbox[2], bbox[3])], outline='blue', width=3)

        # Crop the license plate from the image
        cropped_plate = image.crop((bbox[0], bbox[1], bbox[2], bbox[3]))

        # Convert the cropped PIL image (RGB) to a BGR NumPy array for OpenCV
        cropped_plate_arr = cv2.cvtColor(np.array(cropped_plate), cv2.COLOR_RGB2BGR)

        # Preprocess the image
        enhanced_image = enhance_contrast(cropped_plate_arr)
        binary_image = adaptive_threshold(enhanced_image)

        # Perform OCR (recognition only) on the preprocessed image
        results = ocr.ocr(binary_image, cls=False, det=False)

        # Use the recognized text only if its score exceeds 0.6
        if results and results[0][0][1] > 0.6:
            label = results[0][0][0]
        else:
            label = f"License_plate {confidence:.2f}"
        ann['ocr_text'] = label

        # Draw OCR result on the image
        font = ImageFont.load_default()
        # font.getsize() was removed in Pillow 10; getbbox() is its replacement
        _, _, w, h = font.getbbox(label)
        draw.rectangle((bbox[0], bbox[1] - h, bbox[0] + w, bbox[1]), fill='blue')
        draw.text((bbox[0], bbox[1] - h), label, fill='white', font=font)
    return image

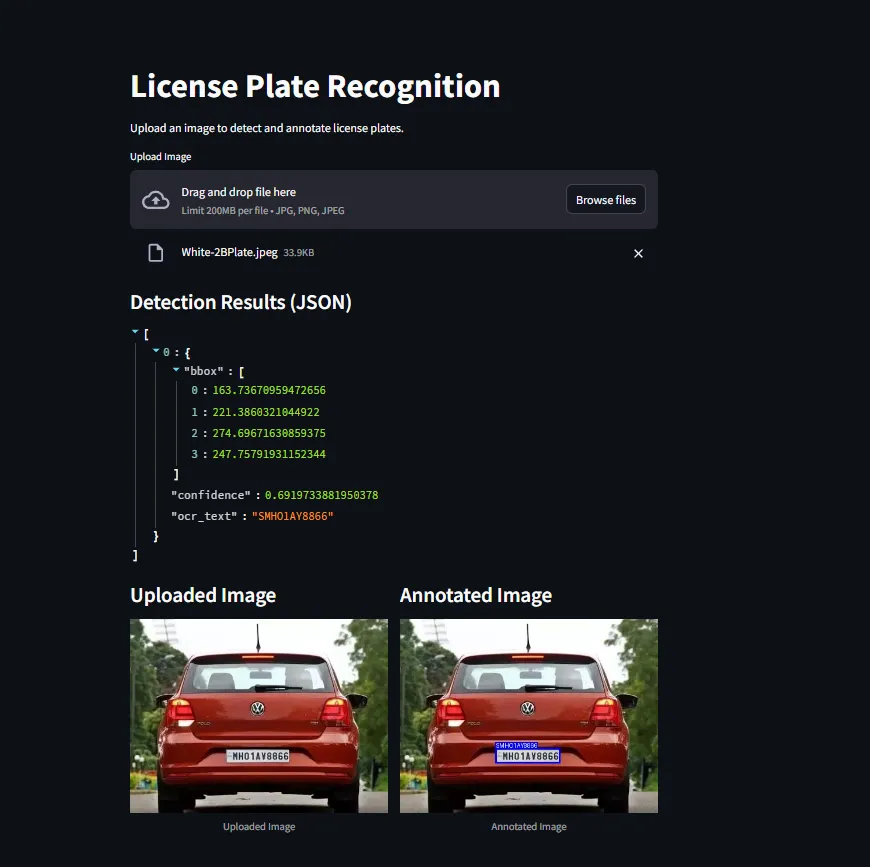

st.title("License Plate Recognition")
st.write("Upload an image to detect and annotate license plates.")

# Image upload
image_file = st.file_uploader("Upload Image", type=["jpg", "png", "jpeg"])

if image_file is not None:
    # Load the uploaded image
    image = load_image(image_file)

    # Predict license plates
    annotations = predict_license_plate(image)

    # Annotate a copy so the original stays unchanged
    annotated_image = draw_annotations(image.copy(), annotations)

    # Display JSON output
    st.subheader("Detection Results (JSON)")
    st.json(annotations)

    # Create two columns
    col1, col2 = st.columns(2)

    with col1:
        st.subheader("Uploaded Image")
        st.image(image, caption='Uploaded Image', use_column_width=True)

    with col2:
        st.subheader("Annotated Image")
        st.image(annotated_image, caption='Annotated Image', use_column_width=True)
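The label selection inside `draw_annotations` indexes directly into the OCR result, which can fail if the recognizer returns nothing. The sketch below factors that decision into a separate function and normalizes the text. It assumes the recognition-only result shape used above, `[[(text, score)]]`; `choose_label` is a hypothetical helper, and stripping everything except letters and digits is an assumption about the plate format.

```python
import re


def choose_label(ocr_results, det_confidence, min_score=0.6):
    """Pick the OCR text if it is confident enough, else a fallback label.

    Assumes the recognition-only result shape [[(text, score)]].
    Empty or None results fall back to the detection confidence label.
    """
    fallback = f"License_plate {det_confidence:.2f}"
    try:
        text, score = ocr_results[0][0]
    except (TypeError, IndexError, ValueError):
        return fallback
    if score <= min_score:
        return fallback
    # Keep only letters and digits, upper-cased (an assumed plate format)
    cleaned = re.sub(r"[^A-Za-z0-9]", "", text).upper()
    return cleaned or fallback
```

Inside `draw_annotations`, the `if`/`else` that sets `label` could then be replaced with `label = choose_label(results, confidence)`. Keeping this logic separate from the drawing code also makes it testable without loading any model.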

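In this article, we built a license plate detection and OCR pipeline with YOLOv8. We downloaded and extracted the dataset, prepared the data configuration, organized the dataset, trained the model, and finally ran inference and OCR inside a Streamlit app. The same workflow can be adapted to other object detection tasks.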

Source: https://medium.com/@vipinra79/automating-license-plate-recognition-alpr-and-ocr-with-yolov8-and-streamlit-7086acbe7da9
