Demystifying the Impact of Document Chunk Overlap in RAG Pipelines

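Retrieval-augmented generation (RAG) has been around for a while and has recently taken many forms. Today it incorporates advanced search strategies, multi-agent approaches, and semantic caching. Building a RAG prototype is relatively easy; running one in production is more challenging. When developing a RAG system for production, it is essential to focus on the specific business use case. Continuous evaluation should be built into the pipeline to make sure it meets performance targets such as latency, scalability, fault tolerance, and response quality.

Many frameworks and metrics have emerged for evaluating RAG systems through qualitative and quantitative experiments on the retriever and generator components. These include BLEU, ROUGE, ARES, and RAGAs.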

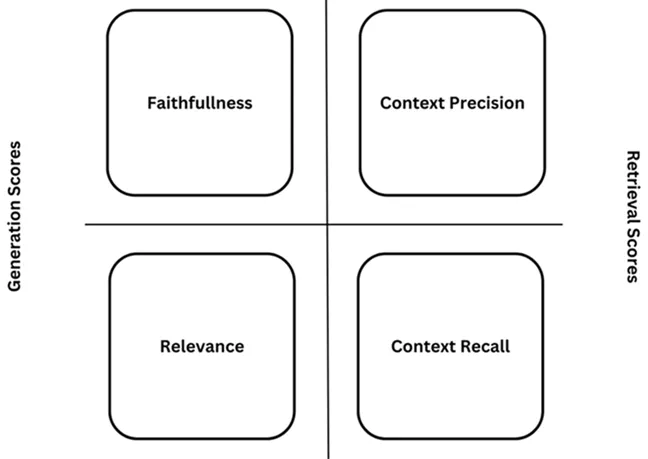

RAGAs

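The RAGAs framework is a tool built specifically for evaluating retrieval-augmented generation pipelines. Many tools and frameworks exist for building RAG pipelines, but evaluating them and measuring their performance can be quite difficult. This is where RAGAs comes in. It provides tooling for evaluating LLM-generated text, giving you insight into how your RAG pipeline behaves. It can be integrated into your CI/CD pipeline for continuous performance checks, and it can score outputs on parameters such as accuracy, tone, hallucination, and fluency.

It integrates with leading frameworks such as LangChain and LlamaIndex, so evaluation can be carried out inside the existing workflow.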

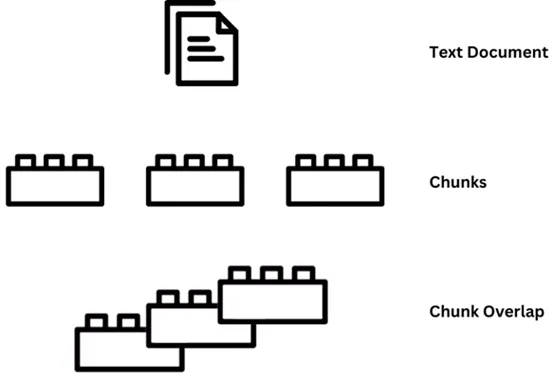

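The Concept of Chunk Size and Chunk Overlap

The chunk size and chunk overlap used when storing embeddings are two important parameters that strongly affect system performance and output quality in production. Chunk size determines the granularity of the information fed into the system, while chunk overlap ensures that key information is not lost at chunk boundaries. A well-calibrated overlap prevents loss of context between chunks and keeps the flow of information continuous. This matters most when the answer to a query spans multiple chunks.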
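To make the two parameters concrete, here is a minimal sketch of fixed-size character chunking with overlap. The helper `chunk_text` and the sample sentence are illustrative assumptions, not part of LangChain; LangChain's splitters also split on separators, so their chunk boundaries will differ.

```python
def chunk_text(text: str, chunk_size: int, chunk_overlap: int) -> list[str]:
    """Split text into character windows; neighbours share `chunk_overlap` characters."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - chunk_overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):  # this window already reached the end
            break
    return chunks


text = "We will rebuild our military and our infrastructure."
no_overlap = chunk_text(text, chunk_size=20, chunk_overlap=0)
with_overlap = chunk_text(text, chunk_size=20, chunk_overlap=8)

for name, chunks in (("overlap=0", no_overlap), ("overlap=8", with_overlap)):
    print(name, chunks)
```

Without overlap, the phrase "our military" is cut in two at a chunk boundary and appears whole in no chunk. With an overlap of 8 characters, one chunk contains it in full, which is the kind of boundary context the overlap is meant to preserve.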

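In this article, I provide a detailed tutorial on building a RAG system with Qdrant [1] and LangChain, evaluating it with RAGAs, and analyzing how changing the chunk overlap in the vector database affects the RAG system's results.

The RAG Pipeline

Prerequisites

This tutorial was tested with the following Python libraries. Please verify the versions in your own environment.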

langchain: 0.2.7

sentence_transformers: 3.0.1

langchain_qdrant: 0.1.2

langchain-community: 0.2.7

langchain_experimental: 0.0.62

qdrant-client: 1.10.1

torch: 2.3.0

ragas: 0.1.10

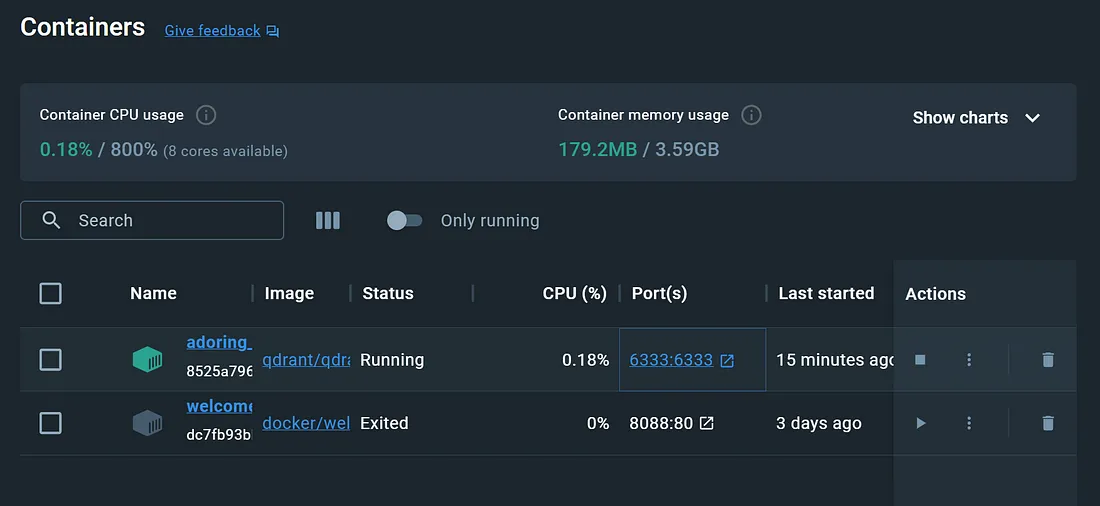

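Setting Up Qdrant

If you are working in a local environment, make sure Docker is installed and the Docker engine is running. Qdrant can be installed by pulling its Docker image.

docker pull qdrant/qdrant

Run the Qdrant Docker container with this command: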

docker run -p 6333:6333 \
    -v $(pwd)/qdrant_storage:/qdrant/storage \
    qdrant/qdrant

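Alternatively, you can start the container from the Docker Desktop console.

You can then start the Qdrant client.

Embeddings and the Database

Because we are evaluating RAG as a function of chunk overlap, it is best to use continuous text data. Here I use some of Donald Trump's campaign speeches. Set up a local directory to store the text documents.

Use LangChain's directory loader to load the documents and split them into chunks.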

from langchain_community.document_loaders import DirectoryLoader
from langchain_qdrant import Qdrant
from langchain_text_splitters import CharacterTextSplitter

# Some async tasks need to be done
import nest_asyncio
nest_asyncio.apply()

# Load Data
loader = DirectoryLoader('./documents', glob="**/*.txt", show_progress=True)
documents = loader.load()

# Split into chunks; the baseline uses no overlap
text_splitter = CharacterTextSplitter(chunk_size=1000, chunk_overlap=0)
docs = text_splitter.split_documents(documents)

Here I use the free LLM endpoint provided by Groq. Log in and obtain an API key from the console before proceeding. Then define the LLMs and the embedding model.

from langchain_community.embeddings.huggingface import HuggingFaceEmbeddings
from langchain_groq import ChatGroq

# Replace "API_KEY" with your own Groq API key
llm = ChatGroq(temperature=0, model="llama3-70b-8192", api_key="API_KEY")
critic_llm = ChatGroq(temperature=0, model="gemma-7b-it", api_key="API_KEY")

embeddings = HuggingFaceEmbeddings(
    model_name="sentence-transformers/all-mpnet-base-v2"
)

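Create the vector store. This step processes the input documents, converts them into vector embeddings, and stores the embeddings together with their metadata in the specified Qdrant vector store.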

# Collect the text and metadata of every chunk
metadatas = []
texts = []
for doc in docs:
    metadatas.append(doc.metadata)
    texts.append(doc.page_content)

# Embed the chunks and store them in an in-memory Qdrant collection
vector_store = Qdrant.from_texts(texts,
                                 metadatas=metadatas,
                                 embedding=embeddings,
                                 location=":memory:",
                                 prefer_grpc=True,
                                 collection_name="SpeechData")

Configuring the Chain

Define a suitable prompt template and build the chain.

from langchain.prompts import PromptTemplate
from langchain.chains import RetrievalQA

prompt_template = """Use the following pieces of context to answer the question enclosed within 3 backticks at the end. If you don't know the answer, just say that you don't know, don't try to make up an answer.
Please provide an answer which is factually correct and based on the information retrieved from the vector store.
Please also mention any quotes supporting the answer if any present in the context supplied within two double quotes "" .

{context}

QUESTION:```{question}```
ANSWER:
"""

PROMPT = PromptTemplate(
    template=prompt_template, input_variables=["context", "question"]
)

chain_type_kwargs = {"prompt": PROMPT}

# Retrieve the 5 most similar chunks for each query
retriever = vector_store.as_retriever(search_kwargs={"k": 5})

# "stuff" places all retrieved chunks into a single prompt
chain = RetrievalQA.from_chain_type(llm=llm,
                                    chain_type="stuff",
                                    chain_type_kwargs=chain_type_kwargs,
                                    retriever=retriever,
                                    return_source_documents=True)

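With that, a basic RAG system is in place. Now let's move on to evaluation.

Evaluating RAG with RAGAs

A RAG system can be evaluated against different criteria depending on its application. For example, if it is used for text generation, the criteria might include the coherence, fluency, and relevance of the generated text. If it is used for information retrieval, the criteria include precision, recall, and ranking quality. In question-answering applications, they might include accuracy, completeness of the answer, and the ability to handle complex queries.

We will use RAGAs' faithfulness, answer relevancy, context precision, and context recall metrics for the evaluation.

- Faithfulness: Measures how accurately the model's output reflects the information in the source material. A faithful response contains information consistent with the original content and introduces nothing new or contradictory. High faithfulness means the model is not hallucinating.

- Answer relevancy: Assesses how well the model's output addresses the given query. A relevant response answers the query directly and contains information that matches the user's need. High relevancy indicates that the model understood the query and provided on-topic information.

- Context recall: Combines candidate-sentence extraction with natural language inference (NLI) to estimate how many data points were correctly captured (true positives, TP) and how many were missed (false negatives, FN). The score is TP / (TP + FN).

- Context precision: Assesses how well the sentences that are relevant and essential to answering the question accurately can be pinpointed and extracted from the supplied context. The final score is the ratio of extracted sentences to the total number of sentences in the given context, and it measures the completeness and accuracy of the extraction.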
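The two context metrics reduce to simple ratios once the counts are known. The sketch below only shows that arithmetic; the function names and the example counts are assumptions for illustration. In RAGAs the counts come from LLM judgments, and the library's actual implementation may differ in detail (for example, by taking the ranking of retrieved chunks into account).

```python
def context_recall(true_positives: int, false_negatives: int) -> float:
    """TP / (TP + FN): share of ground-truth statements found in the retrieved context."""
    total = true_positives + false_negatives
    return true_positives / total if total else 0.0


def context_precision(relevant_sentences: int, total_sentences: int) -> float:
    """Relevant sentences extracted from the context, divided by all sentences in it."""
    return relevant_sentences / total_sentences if total_sentences else 0.0


# Hypothetical counts: 4 of 5 ground-truth statements are supported by the context,
# and 3 of the 4 context sentences are needed to answer the question.
print(context_recall(true_positives=4, false_negatives=1))
print(context_precision(relevant_sentences=3, total_sentences=4))
```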

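Start by setting up the experiment. Use the TestsetGenerator class to generate the evaluation dataset. By default it uses OpenAI models. Choosing that option may be faster, but it can also incur significant cost.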

from ragas.testset.generator import TestsetGenerator
from ragas.testset.evolutions import simple, reasoning, multi_context

# Use the Groq-hosted models instead of the OpenAI defaults
generator = TestsetGenerator.from_langchain(
    generator_llm=llm,
    critic_llm=critic_llm,
    embeddings=embeddings,
)

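As you can see, we use two LLMs here.

- Critic LLM: In the RAGAs framework, the critic LLM acts as a quality-control inspector. It filters the generated question-answer pairs and provides feedback to ensure their relevance and quality.

- Generator LLM: The generator LLM creates the initial, varied question-answer pairs from the given context or documents. It analyzes the input, poses relevant questions, and generates the corresponding answers, which form the basis of the test dataset.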

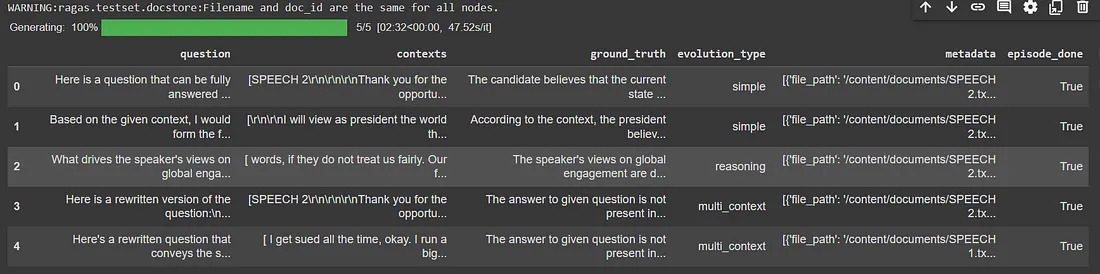

Generate the test set

testset = generator.generate_with_langchain_docs(
    documents,
    test_size=5,
    distributions={simple: 0.5, reasoning: 0.25, multi_context: 0.25},
)

df = testset.to_pandas()
df.head()

Transform the dataset appropriately before use. RAGAs expects the evaluation dataset in a specific format.

{
    "question": [q1, q2, …],
    "answer": [a1, a2, …],
    "contexts": [c1, c2, …],
    "ground_truth": [g1, g2, …]
}

from datasets import Dataset

def create_eval_dataset(dataset, eval_size, retrieval_window_size):
    questions = []
    answers = []
    contexts = []
    ground_truths = []

    for i in range(eval_size):
        entry = dataset[i]
        question = entry['question']
        ground_truth = entry['ground_truth']
        questions.append(question)
        ground_truths.append(ground_truth)

        # Run the RAG chain; RetrievalQA expects the question under the "query" key
        response = chain.invoke({"query": question})
        answer = response['result']
        # Keep only the first `retrieval_window_size` retrieved chunks as context
        context = [doc.page_content for doc in response['source_documents'][:retrieval_window_size]]
        contexts.append(context)
        answers.append(answer)

    rag_response_data = {
        "question": questions,
        "answer": answers,
        "contexts": contexts,
        "ground_truth": ground_truths
    }
    return rag_response_data

EVAL_SIZE = 4
RETRIEVAL_SIZE = 2

# Assumption: `ds` is the generated test set as a list of row dicts,
# taken from the DataFrame created above.
ds = df.to_dict("records")
ds_dict = create_eval_dataset(ds, EVAL_SIZE, RETRIEVAL_SIZE)
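Before handing the dictionary to RAGAs, it can help to check that it has the expected shape. The helper below is a hypothetical sanity check, not part of RAGAs: it assumes the four column names shown in the format above, that all columns have the same length, and that each entry in contexts is a list of strings.

```python
REQUIRED_COLUMNS = ("question", "answer", "contexts", "ground_truth")


def validate_eval_dict(data: dict) -> None:
    """Raise ValueError if `data` does not match the expected evaluation format."""
    missing = [col for col in REQUIRED_COLUMNS if col not in data]
    if missing:
        raise ValueError(f"missing columns: {missing}")

    lengths = {col: len(data[col]) for col in REQUIRED_COLUMNS}
    if len(set(lengths.values())) != 1:
        raise ValueError(f"columns differ in length: {lengths}")

    for i, ctx in enumerate(data["contexts"]):
        # Each row carries a list of retrieved chunk texts, not a single string
        if not isinstance(ctx, list) or not all(isinstance(c, str) for c in ctx):
            raise ValueError(f"contexts[{i}] must be a list of strings")


sample = {
    "question": ["How did ISIS get the oil, according to the speaker?"],
    "answer": ["ISIS took the oil."],
    "contexts": [["So now ISIS has the oil."]],
    "ground_truth": ["ISIS took the oil."],
}
validate_eval_dict(sample)  # passes silently
```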

The processed evaluation dataset is expected to look like this.

{

'question': ['Here is a question that can be fully answered from the given context:\n\nWhat approach will a Trump administration take to strengthen relationships with Russia and China?\n\nAnswer: A Trump administration will seek common ground based on shared interests, regard Russia and China with open eyes, and desire to live peacefully and in friendship with them, while maintaining a position of strength.',

'Here is a question that can be fully answered from the given context:\n\nQuestion: How did ISIS get the oil, according to the speaker?\n\nAnswer: ISIS took the oil.',

'Here is a rewritten question that conveys the same meaning in a less direct and shorter manner:\n\n"For richer US, what must be done to Social Security and Medicare?"\n\nThis question still requires the reader to make connections between the speaker\'s stance and the importance of addressing waste and fraud, but it\'s more concise and indirect, encouraging the reader to think critically about the context.',

'Here is a rewritten question that conveys the same meaning in a less direct and shorter manner:\n\n"What comes before military & infrastructure rebuild?"\n\nThis question still requires the reader to make connections between the speaker\'s statements, but in a more concise and indirect way.'],

'answer': ['The answer is: A Trump administration will seek common ground based on shared interests, regard Russia and China with open eyes, and desire to live peacefully and in friendship with them, while maintaining a position of strength.\n\nSupporting quote: "We desire to live peacefully and in friendship with Russia and China. We have serious differences with these two nations, and must regard them with open eyes, but we are not bound to be adversaries. We should seek common ground based on shared interests."',

'The answer is: ISIS took the oil.\n\nThis is supported by the quote: "And for those of you that know and love Donald Trump--there are some of you--have I been saying for four years, keep the oil. And now, to top it off, we have ISIS."',

'Based on the provided context, the answer to the rewritten question is:\n\n"To make the country rich again, get rid of the waste and fraud in Social Security and Medicare, so that they can be afforded."\n\nThis answer is supported by the quote: "Get rid of the waste, get rid of the fraud, but you deserve your Social Security; you\'ve been paying your Security."',

'Based on the provided context, the answer to the question "What comes before military & infrastructure rebuild?" is "America First".\n\nThis is supported by the quote: "My foreign policy will always put the interests of the American people and American security above all else. It has to be first. Has to be." and "America First will be the major and overriding theme of my administration."'],

'contexts': [['Russia, for instance, has also seen the horror of Islamic terrorism. I believe an easing of tensions, and improved relations with Russia from a position of strength only is possible, absolutely possible. Common sense says this cycle, this horrible cycle of hostility must end and ideally will end soon. Good for both countries.\n\nSome say the Russians won’t be reasonable. I intend to find out. If we can’t make a deal under my administration, a deal that’s great — not good, great — for America, but also good for Russia, then we will quickly walk from the table. It’s as simple as that. We’re going to find out.\n\nFixing our relations with China is another important step — and really toward creating an even more prosperous period of time. China respects strength and by letting them take advantage of us economically, which they are doing like never before, we have lost all of their respect.',

'We have a massive trade deficit with China, a deficit that we have to find a way quickly, and I mean quickly, to balance. A strong and smart America is an America that will find a better friend in China, better than we have right now. Look at what China is doing in the South China Sea. They’re not supposed to be doing it. No respect for this country or this president. We can both benefit or we can both go our separate ways. If need be, that’s what’s going to have to happen.\n\nAfter I’m elected president, I will also call for a summit with our NATO allies and a separate summit with our Asian allies. In these summits, we will not only discuss a rebalancing of financial commitments, but take a fresh look at how we can adopt new strategies for tackling our common challenges. For instance, we will discuss how we can upgrade NATO’s outdated mission and structure, grown out of the Cold War to confront our shared challenges, including migration and Islamic terrorism.'],

["So now ISIS has the oil. And the stuff that ISIS doesn't have, Iran is going to take. So we get nothing. We have $2 trillion and we have thousands of lives lost, thousands, and we have, what do we have. I mean I walk down the streets of New York and I see so many wounded warriors, incredible people. And we have to help those people, we have to help our vets, we have to help our military, we have to build our military. But, and we have to do it fast; we have to do it fast. We have incompetent people. They put people in charge that have no clue what they're doing. It needs money. We have to make our country rich again so we do that, so we can save Social Security. 'Cause I'm not a cutter; I'll probably be the only Republican that does not want to cut Social Security. I'm not a cutter of Social Security; I want to make the country rich so that Social Security can be afforded, and Medicare and Medicaid. Get rid of the waste, get rid of the fraud, but you deserve your Social Security; you've been paying your Security. And like, I like Congressman Ryan, I like a lot of the people that are talking about you know cutting Social Security, and by the way the Democrats are eating your lunch on this issue. It's an issue that you're not going to win; you've got to make the country rich again and strong again so that you can afford it, and so you can afford military, and all of the other things. Now, we have a game changer now, and the game changer is nuclear weapons.",

'They just kept coming and coming. We went from mistakes in Iraq to Egypt to Libya, to President Obama’s line in the sand in Syria. Each of these actions have helped to throw the region into chaos and gave ISIS the space it needs to grow and prosper. Very bad. It all began with a dangerous idea that we could make western democracies out of countries that had no experience or interests in becoming a western democracy.\n\nWe tore up what institutions they had and then were surprised at what we unleashed. Civil war, religious fanaticism, thousands of Americans and just killed be lives, lives, lives wasted. Horribly wasted. Many trillions of dollars were lost as a result. The vacuum was created that ISIS would fill. Iran, too, would rush in and fill that void much to their really unjust enrichment.'],

['And what are we doing about this? President Obama has proposed a 2017 defense budget that in real dollars, cuts nearly 25 percent from what we were spending in 2011. Our military is depleted and we’re asking our generals and military leaders to worry about global warming.\n\nWe will spend what we need to rebuild our military. It is the cheapest, single investment we can make. We will develop, build and purchase the best equipment known to mankind. Our military dominance must be unquestioned, and I mean unquestioned, by anybody and everybody.\n\nBut we will look for savings and spend our money wisely. In this time of mounting debt, right now we have so much debt that nobody even knows how to address the problem. But I do. No one dollar can be wasted. Not one single dollar can we waste. We’re also going to have to change our trade, immigration and economic policies to make our economy strong again. And to put Americans first again.',

'Finally, we must develop a foreign policy based on American interests. Businesses do not succeed when they lose sight of their core interests and neither do countries. Look at what happened in the 1990s. Our embassies in Kenya and Tanzania — and this was a horrible time for us — were attacked. and 17 brave sailors were killed on the U.S.S. Cole.\n\nAnd what did we do? It seemed we put more effort into adding China into the World Trade organization, which has been a total disaster for the United States. Frankly, we spent more time on that than we did in stopping Al Qaeda. We even had an opportunity to take out Osama bin Laden and we didn’t do it\n\nAnd then we got hit at the World Trade Center and the Pentagon. Again, the worst attack on our country in its history. Our foreign policy goals must be based on America’s core national security interests. And the following will be my priorities.'],

['So we have to rebuild quickly our infrastructure of this country. If we don\'t-- The other day in Ohio a bridge collapsed. Bridges are collapsing all over the country. The reports on bridges and the like are unbelievable, what\'s happening with our infrastructure. I go to Saudi Arabia, I go to Dubai; I am doing big jobs in Dubai. I go to various different places. I go to China. They are building a bridge on every corner. They have bridges that make the George Washington Bridge like small time stuff. They\'re building the most incredible things you have ever seen. They are building airports in Qatar--which they like to say "cutter" but I\'ve always said "qatar" so I\'ll keep it "qatar" what the hell. But they\'re building, they\'re building an airport and have just completed an airport the likes of which you have never seen, in Dubai an airport the likes of which you have never seen. And then I come back to LaGuardia where the runways have potholes. The place is falling apart. You go into the main terminal and they have a terraza floor that\'s so old it\'s falling apart. And they have a hole in it, and they replace it with asphalt. So you have a white terraza floor and they put asphalt all over the place. This is inside, not outside. And I just left Dubai where they have the most incredible thing you\'ve ever seen. In fact my pilot said oh Mr. Trump this is such an honor. I said it\'s not an honor; they\'re just smart.',

'SPEECH 2\n\nThank you for the opportunity to speak to you, and thank you to the Center for National Interest for honoring me with this invitation. It truly is a great honor. I’d like to talk today about how to develop a new foreign policy direction for our country, one that replaces randomness with purpose, ideology with strategy, and chaos with peace.\n\nIt’s time to shake the rust off America’s foreign policy. It’s time to invite new voices and new visions into the fold, something we have to do. The direction I will outline today will also return us to a timeless principle. My foreign policy will always put the interests of the American people and American security above all else. It has to be first. Has to be.\n\nThat will be the foundation of every single decision that I will make.\n\nAmerica First will be the major and overriding theme of my administration. But to chart our path forward, we must first briefly take a look back. We have a lot to be proud of.']],

'ground_truth': ['A Trump administration will seek common ground based on shared interests, regard Russia and China with open eyes, and desire to live peacefully and in friendship with them, while maintaining a position of strength.',

'ISIS took the oil.',

'To afford Social Security and Medicare, the country needs to be made rich again by getting rid of waste and fraud, not by cutting these programs.',

'Making the country rich again']

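}

The function below evaluates the RAG system against these criteria and returns the latency and the scores. You may need to re-create the vector store for each iteration.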

from ragas import evaluate
from ragas.metrics import (
    faithfulness,
    answer_relevancy,
    context_recall,
    context_precision,
)
import time

chunk_size = 50

def evaluate_response_time_and_accuracy(chunk_overlap, ds_dict, llm, embed_model):
    # Re-split the documents and rebuild the vector store for this overlap value
    text_splitter = CharacterTextSplitter(chunk_size=chunk_size, chunk_overlap=chunk_overlap)
    docs = text_splitter.split_documents(documents)

    metadatas = []
    texts = []
    for doc in docs:
        metadatas.append(doc.metadata)
        texts.append(doc.page_content)

    vector_store = Qdrant.from_texts(texts,
                                     metadatas=metadatas,
                                     embedding=embed_model,
                                     location=":memory:",
                                     prefer_grpc=True,
                                     collection_name="SpeechData")

    # Prepare the dataset
    # Note: evaluate() scores the answers and contexts already stored in ds_dict.
    # For a new overlap to affect the scores, ds_dict has to be regenerated
    # from a chain that retrieves from this vector_store.
    dataset = Dataset.from_dict(ds_dict)

    # Define metrics
    metrics = [
        faithfulness,
        answer_relevancy,
        context_precision,
        context_recall,
    ]

    # Evaluate using Ragas
    start_time = time.time()
    result = evaluate(
        metrics=metrics,
        dataset=dataset,
        llm=llm,
        embeddings=embed_model,
        raise_exceptions=False,
    )
    # Wall-clock time of the whole evaluate() call, reported as the response time
    average_response_time = time.time() - start_time

    average_faithfulness = result['faithfulness']
    average_answer_relevancy = result['answer_relevancy']
    average_context_precision = result['context_precision']
    average_context_recall = result['context_recall']

    return (average_response_time, average_faithfulness, average_answer_relevancy,
            average_context_precision, average_context_recall)

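Our goal is to analyze how performance changes as the chunk overlap varies. Here we decide on a value by iterating over a range of overlaps.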

# Try overlaps 0, 6, 12, ... up to (but not including) chunk_size
for chunk_overlap in range(0, chunk_size, 6):
    (avg_time, avg_faithfulness, avg_answer_relevancy,
     avg_context_precision, avg_context_recall) = evaluate_response_time_and_accuracy(
        chunk_overlap, ds_dict, llm, embeddings)

    print(f"Chunk size {chunk_size}, Overlap {chunk_overlap} - "
          f"Average Response time: {avg_time:.2f}s, "
          f"Average Faithfulness: {avg_faithfulness:.2f}, "
          f"Average Answer Relevancy: {avg_answer_relevancy:.2f}, "
          f"Average Context Precision: {avg_context_precision:.2f}, "
          f"Average Context Recall: {avg_context_recall:.2f}")

    # Short pause between runs
    time.sleep(2)

You can see the scores obtained for each of the chunk_overlap values we used.

Chunk size 50, Overlap 0 - Average Response time: 3.87s, Average Faithfulness: 0.67, Average Answer Relevancy: 0.83, Average Context Precision: 0.75, Average Context Recall: 0.80

Chunk size 50, Overlap 6 - Average Response time: 4.30s, Average Faithfulness: 0.67, Average Answer Relevancy: 0.67, Average Context Precision: 0.70, Average Context Recall: 0.72

Chunk size 50, Overlap 12 - Average Response time: 3.99s, Average Faithfulness: 0.70, Average Answer Relevancy: 0.70, Average Context Precision: 0.68, Average Context Recall: 0.70

Chunk size 50, Overlap 18 - Average Response time: 3.98s, Average Faithfulness: 0.72, Average Answer Relevancy: 0.72, Average Context Precision: 0.70, Average Context Recall: 0.72

Chunk size 50, Overlap 24 - Average Response time: 4.11s, Average Faithfulness: 0.75, Average Answer Relevancy: 0.75, Average Context Precision: 0.72, Average Context Recall: 0.74

Chunk size 50, Overlap 30 - Average Response time: 4.08s, Average Faithfulness: 0.83, Average Answer Relevancy: 0.83, Average Context Precision: 0.82, Average Context Recall: 0.85

Chunk size 50, Overlap 36 - Average Response time: 4.62s, Average Faithfulness: 0.85, Average Answer Relevancy: 0.85, Average Context Precision: 0.84, Average Context Recall: 0.87

Chunk size 50, Overlap 42 - Average Response time: 4.89s, Average Faithfulness: 0.87, Average Answer Relevancy: 0.87, Average Context Precision: 0.86, Average Context Recall: 0.89

Chunk size 50, Overlap 48 - Average Response time: 5.63s, Average Faithfulness: 0.88, Average Answer Relevancy: 0.88, Average Context Precision: 0.87, Average Context Recall: 0.90

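Based on the results above, a chunk_overlap of 30 appears to perform best. Beyond 30, the response time starts to increase noticeably while the quality metrics improve only slightly. The best overlap value will likely depend on the specific use case and on the nature of the text being processed. There is no rule of thumb, but a few points are worth keeping in mind when choosing a value.

- No overlap at all can cause context to be lost between chunks.

- Excessive overlap creates repetition and redundancy.

- Increasing the chunk overlap can improve the faithfulness and relevancy scores.

- If response time is critical for your use case, you may prefer a lower overlap, which gives faster responses. Conversely, if response quality matters more, you may prefer a higher overlap.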
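One way to turn these considerations into a repeatable choice is to fix a latency budget and take the overlap with the best average score inside it. The sketch below applies that rule to the scores printed above; the rule itself, the helper names `mean_score` and `pick_overlap`, and the budget values are assumptions for illustration, not something RAGAs provides.

```python
# (chunk_overlap, latency_s, faithfulness, answer_relevancy, context_precision, context_recall)
RESULTS = [
    (0, 3.87, 0.67, 0.83, 0.75, 0.80),
    (6, 4.30, 0.67, 0.67, 0.70, 0.72),
    (12, 3.99, 0.70, 0.70, 0.68, 0.70),
    (18, 3.98, 0.72, 0.72, 0.70, 0.72),
    (24, 4.11, 0.75, 0.75, 0.72, 0.74),
    (30, 4.08, 0.83, 0.83, 0.82, 0.85),
    (36, 4.62, 0.85, 0.85, 0.84, 0.87),
    (42, 4.89, 0.87, 0.87, 0.86, 0.89),
    (48, 5.63, 0.88, 0.88, 0.87, 0.90),
]


def mean_score(row):
    """Unweighted average of the four quality metrics in one result row."""
    scores = row[2:]
    return sum(scores) / len(scores)


def pick_overlap(results, max_latency_s):
    """Return the overlap with the best mean score among runs within the latency budget."""
    eligible = [row for row in results if row[1] <= max_latency_s]
    if not eligible:
        return None
    return max(eligible, key=mean_score)[0]


print(pick_overlap(RESULTS, max_latency_s=4.2))  # latency-sensitive budget
print(pick_overlap(RESULTS, max_latency_s=6.0))  # quality-first budget
```

With a budget of 4.2 seconds the rule selects an overlap of 30, matching the reading of the results above; with a looser budget it moves to the largest overlap tested.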

It is strongly recommended to keep the chunk_overlap value below chunk_size.
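The reason becomes clear from the arithmetic of a sliding window: each new chunk advances by chunk_size minus chunk_overlap characters, so the step must stay positive, and the number of chunks grows quickly as the overlap approaches the chunk size. The sketch below assumes plain fixed-size character windows (real splitters that honor separators will produce different counts), and `chunk_count` is a hypothetical helper.

```python
def chunk_count(text_len: int, chunk_size: int, chunk_overlap: int) -> int:
    """Number of fixed-size windows needed to cover `text_len` characters."""
    step = chunk_size - chunk_overlap
    if step <= 0:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    if text_len <= 0:
        return 0
    if text_len <= chunk_size:
        return 1
    # Integer ceiling of (text_len - chunk_overlap) / step
    return -(-(text_len - chunk_overlap) // step)


# A 1000-character document with the chunk size used in the experiment above
for overlap in (0, 30, 48):
    print(overlap, chunk_count(1000, chunk_size=50, chunk_overlap=overlap))
```

Under these assumptions, the same document needs 20 chunks with no overlap, 49 with an overlap of 30, and 476 with an overlap of 48, which means more embeddings to compute and store and far more repeated text.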

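Semantic Chunking

Traditional chunking methods divide a document by fixed length or by logical sections. Semantic chunking takes a different approach: it cuts the document into meaningful, semantically complete chunks. It computes an embedding for each sentence and groups semantically similar sentences together. Because it takes the context of the content into account, semantic chunking can improve retrieval quality and, in the run shown further down, response time as well.

Apart from the text splitter, the RAG implementation is the same as above. Here we use SemanticChunker, which splits a document into nodes, each of which is a group of semantically related sentences. See the official documentation for details.

The two important parameters of this splitter are:

- breakpoint_threshold_type: The chunker compares the embeddings of sentences and splits where the difference exceeds a threshold. The available ways of determining that threshold are percentile, standard deviation, interquartile range, and gradient.

- embeddings: The embedding model to use.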
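The percentile strategy can be illustrated with a toy example. This is a simplified sketch of the idea, not SemanticChunker's implementation: the 2-D vectors stand in for real sentence embeddings, and `semantic_chunks`, `percentile`, and the default of 90 are assumptions chosen for illustration. It also assumes no embedding is a zero vector.

```python
import math


def cosine_distance(a, b):
    """1 - cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


def percentile(values, pct):
    """Linearly interpolated percentile of `values`, with pct in [0, 100]."""
    ordered = sorted(values)
    rank = (len(ordered) - 1) * pct / 100
    lo, hi = math.floor(rank), math.ceil(rank)
    return ordered[lo] + (ordered[hi] - ordered[lo]) * (rank - lo)


def semantic_chunks(sentences, vectors, pct=90):
    """Start a new chunk wherever the distance to the previous sentence exceeds the percentile."""
    if len(sentences) < 2:
        return [" ".join(sentences)] if sentences else []
    distances = [cosine_distance(vectors[i], vectors[i + 1]) for i in range(len(vectors) - 1)]
    threshold = percentile(distances, pct)
    chunks, current = [], [sentences[0]]
    for sentence, dist in zip(sentences[1:], distances):
        if dist > threshold:  # large semantic jump: close the current chunk
            chunks.append(" ".join(current))
            current = []
        current.append(sentence)
    chunks.append(" ".join(current))
    return chunks


sentences = [
    "We will rebuild our military.",
    "Our military dominance must be unquestioned.",
    "Bridges are collapsing all over the country.",
    "We have to rebuild our infrastructure.",
]
# Hand-made stand-in embeddings: the first two point one way, the last two another
vectors = [[1.0, 0.0], [0.9, 0.1], [0.1, 0.9], [0.0, 1.0]]

for chunk in semantic_chunks(sentences, vectors):
    print(chunk)
```

Only the jump between the second and third sentence exceeds the threshold, so the two military sentences end up in one chunk and the two infrastructure sentences in another.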

from langchain_experimental.text_splitter import SemanticChunker

# Split on semantic breakpoints instead of fixed character counts
semantic_chunker = SemanticChunker(embeddings, breakpoint_threshold_type="percentile")
docs = semantic_chunker.create_documents([d.page_content for d in documents])

metadatas = []
texts = []
for doc in docs:
    metadatas.append(doc.metadata)
    texts.append(doc.page_content)

print(len(metadatas), len(texts))

vector_store = Qdrant.from_texts(texts,
                                 metadatas=metadatas,
                                 embedding=embeddings,
                                 location=":memory:",
                                 prefer_grpc=True,
                                 collection_name="SpeechDataSemantic")

# Note: this helper re-splits the documents with CharacterTextSplitter internally.
# To score the semantic store, regenerate ds_dict from a chain built on this
# vector_store before calling it.
(avg_time, avg_faithfulness, avg_answer_relevancy,
 avg_context_precision, avg_context_recall) = evaluate_response_time_and_accuracy(
    30, ds_dict, llm, embeddings)

print(f"Average Response time: {avg_time:.2f}s, "
      f"Average Faithfulness: {avg_faithfulness:.2f}, "
      f"Average Answer Relevancy: {avg_answer_relevancy:.2f}, "
      f"Average Context Precision: {avg_context_precision:.2f}, "
      f"Average Context Recall: {avg_context_recall:.2f}")

Evaluating the results of this RAG pipeline, we find that semantic chunking gives slightly better results.

Average Response time: 1.43s, Average Faithfulness: 0.83, Average Relevancy: 0.83, Average Context Precision: 0.9, Average Context Recall: 1.00

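Conclusion

Chunk size and chunk overlap are key parameters to consider when developing a production-ready RAG system. There are no universally optimal values; the right choice depends mainly on the embedding model, the documents being processed, the task at hand, and other factors.

In summary, we have learned how to build and evaluate a RAG system with LangChain and RAGAs. We discussed in detail why the choice of chunk size and chunk overlap matters in a RAG system and what effect it has. A larger chunk size provides more context but may increase latency and cost, while a smaller chunk size produces more chunks and risks losing context. No overlap can cause context loss and excessive overlap causes redundancy, but a well-chosen overlap can improve the faithfulness and relevancy of responses. Keep the overlap smaller than the chunk size. The key is to balance these parameters while also weighing response time against the faithfulness and relevancy of the responses.

We also discussed an advanced chunking strategy, semantic chunking, and showed how grouping semantically similar sentences together preserves the semantic integrity of the text and can improve some of the RAG metrics.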