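[Guide] Fine-Tuning a Sentiment Analysis Model

Hugging Face Guide

In this textbook walkthrough of fine-tuning a sentiment analysis model, I follow the demo provided by Hugging Face, going through each step and explaining it.

I first use a Google Colab notebook, then repeat the same steps on a MacBook Air with an M1 chip and 16 GB of memory.

Open a new Colab notebook and change the runtime type to GPU.

Install the required libraries.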

!pip install torch datasets transformers huggingface_hub

import torch

torch.cuda.is_available()

# this should return "True" to use cuda.

We install git-lfs so that Git Large File Storage (LFS) can manage the large files in Hugging Face model repositories.

!apt-get install git-lfs

We need a Hugging Face token. Get one from the settings page of your Hugging Face profile and give it access to your repositories. Then store it as a secret in Colab.

from google.colab import userdata

my_secret_key = userdata.get('HF_TOKEN')

Next, let's download the IMDB dataset, which we will use for fine-tuning.

# Load data

from datasets import load_dataset

imdb = load_dataset("imdb")

type(imdb) # datasets.dataset_dict.DatasetDict

print(imdb)

"""

DatasetDict({

train: Dataset({

features: ['text', 'label'],

num_rows: 25000

})

test: Dataset({

features: ['text', 'label'],

num_rows: 25000

})

unsupervised: Dataset({

features: ['text', 'label'],

num_rows: 50000

})

})

"""

print(imdb['train'][0:2])

"""

{'text': ['I rented I AM CURIOUS-YELLOW from my video store because of all

the controversy that surrounded it when it was first released in 1967.

I also heard that at first it was seized by U.S. customs if it ever

tried to enter this country, therefore being a fan of films considered

"controversial" I really had to see this for myself.<br /><br />The plot

is centered around a young Swedish drama student named Lena who wants to

learn everything she can about life. In particular she wants to focus her

attentions to making some sort of documentary on what the average Swede

thought about certain political issues such as the Vietnam War and race

issues in the United States. In between asking politicians and ordinary

denizens of Stockholm about their opinions on politics, she has sex with

her drama teacher, classmates, and married men.<br /><br />What kills me

about I AM CURIOUS-YELLOW is that 40 years ago, this was considered

pornographic. Really, the sex and nudity scenes are few and far between,

even then it\'s not shot like some cheaply made porno.

While my countrymen mind find it shocking, in reality sex and

nudity are a major staple in Swedish cinema. Even Ingmar Bergman,

arguably their answer to good old boy John Ford, had sex scenes in his films.

<br /><br />I do commend the filmmakers for the fact that any sex shown

in the film is shown for artistic purposes rather than just to shock

people and make money to be shown in pornographic theaters in America.

I AM CURIOUS-YELLOW is a good film for anyone wanting to study the meat

and potatoes (no pun intended) of Swedish cinema. But really, this film

doesn\'t have much of a plot.',

'"I Am Curious: Yellow" is a risible and

pretentious steaming pile. It doesn\'t matter what one\'s political views

are because this film can hardly be taken seriously on any level. As for

the claim that frontal male nudity is an automatic NC-17, that isn\'t true.

I\'ve seen R-rated films with male nudity. Granted, they only offer some

fleeting views, but where are the R-rated films with gaping vulvas and

flapping labia? Nowhere, because they don\'t exist. The same goes for those

crappy cable shows: schlongs swinging in the breeze but not a clitoris in

sight. And those pretentious indie movies like The Brown Bunny, in which

we\'re treated to the site of Vincent Gallo\'s throbbing johnson, but not

a trace of pink visible on Chloe Sevigny. Before crying (or implying)

"double-standard" in matters of nudity, the mentally obtuse should take

into account one unavoidably obvious anatomical difference between men and

women: there are no genitals on display when actresses appears nude, and

the same cannot be said for a man. In fact, you generally won\'t see

female genitals in an American film in anything short of porn or explicit

erotica. This alleged double-standard is less a double standard than an

admittedly depressing ability to come to terms culturally with the insides

of women\'s bodies.'],

'label': [0, 0]}

"""

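We have three splits: train, test and unsupervised. Each split has two features: text and label. In the labeled splits, 0 is the negative label and 1 is the positive label. In the unsupervised split, every label is set to -1 because the examples have not been labeled.

For learning purposes, we use only a small part of the data to speed up training.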

small_train_dataset = imdb["train"].shuffle(seed=42).select([i for i in list(range(3000))])

small_test_dataset = imdb["test"].shuffle(seed=42).select([i for i in list(range(300))])

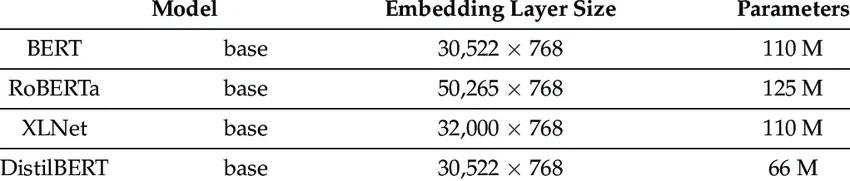

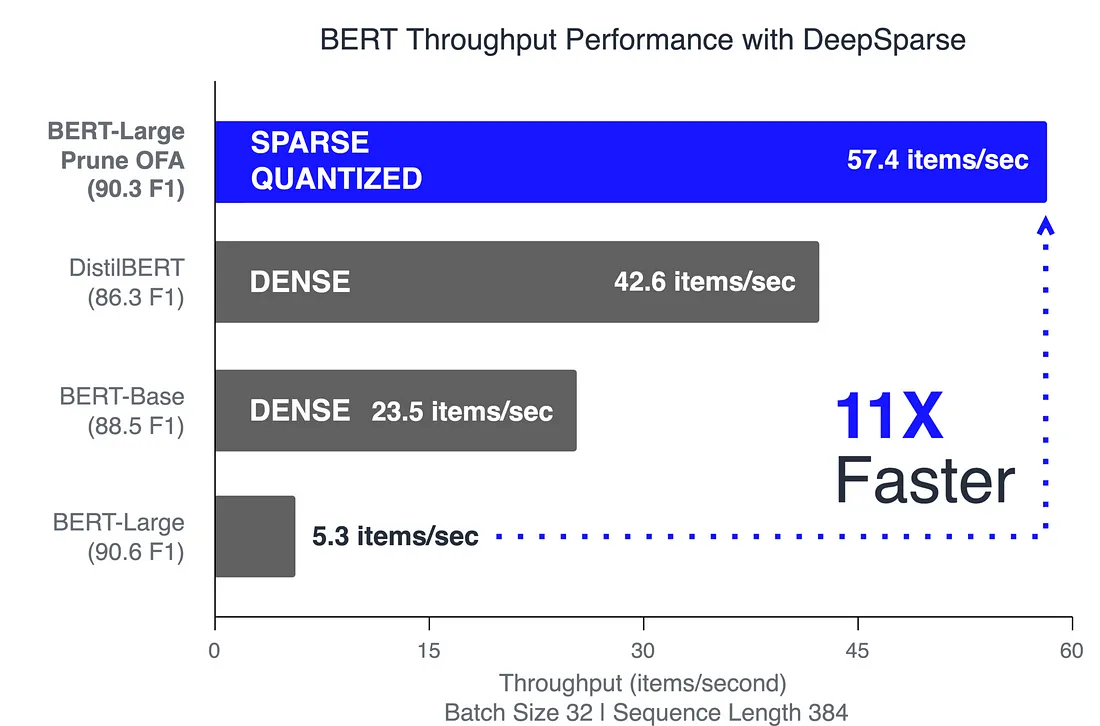

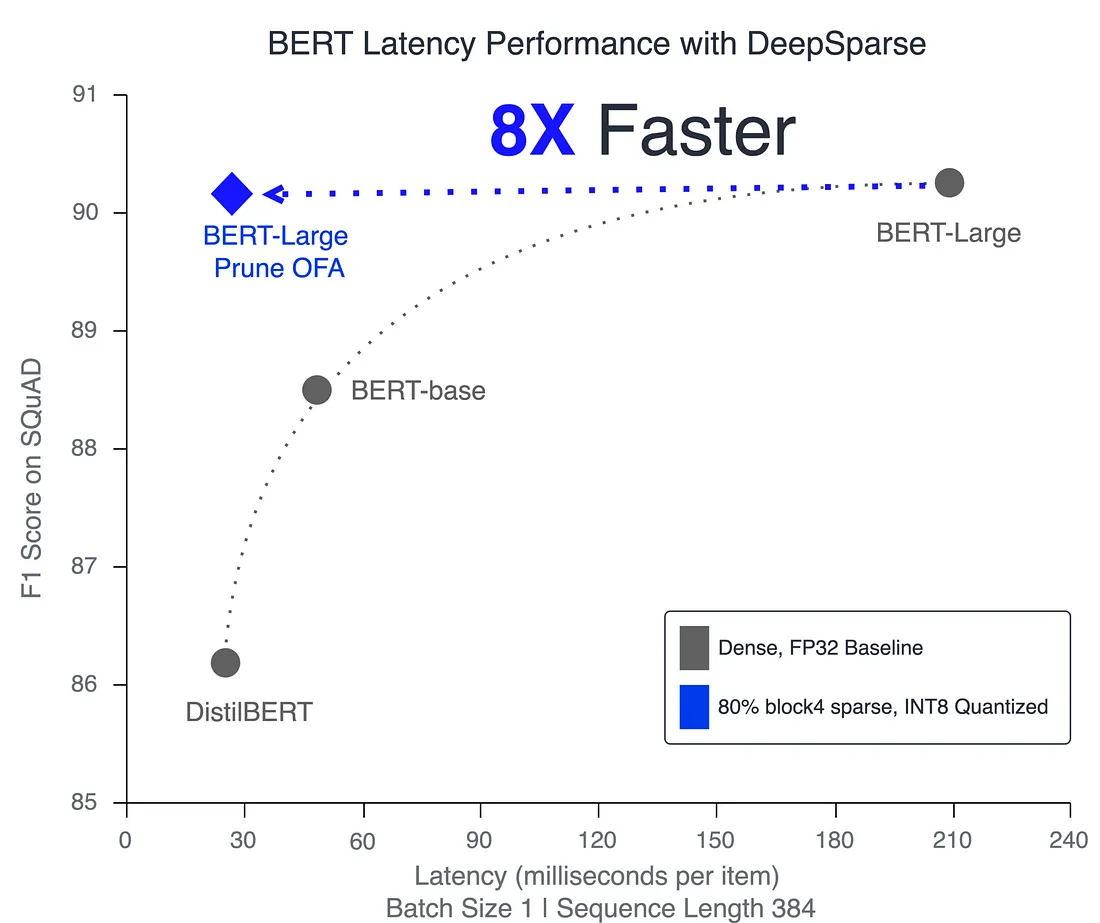

As the base model we use DistilBERT.

DistilBERT is a smaller, faster, cheaper and lighter version of BERT.

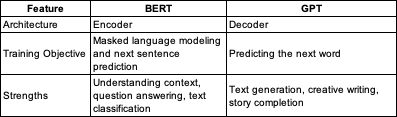

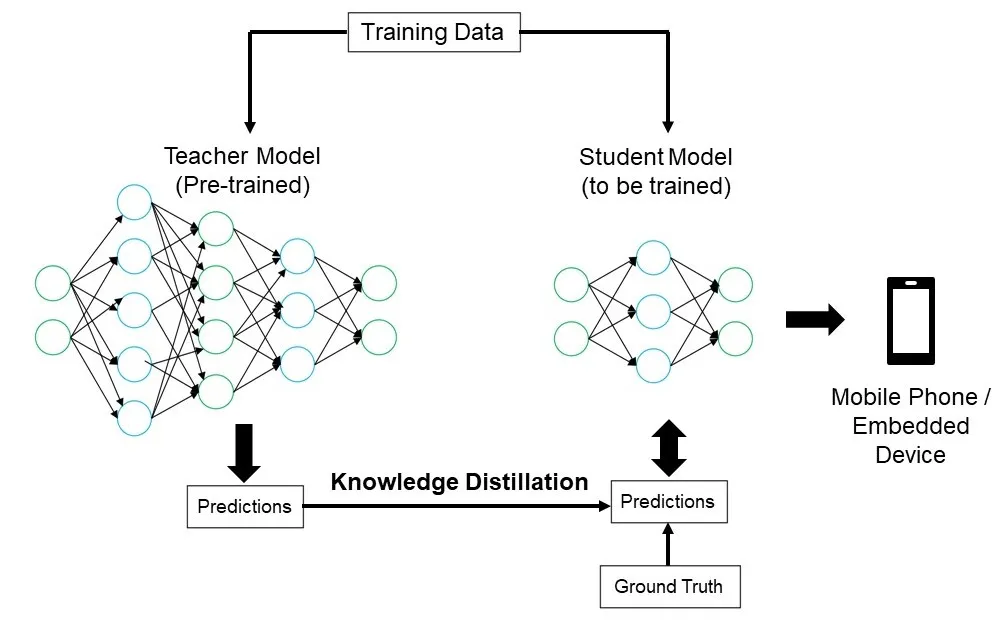

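What is BERT?

Bidirectional Encoder Representations from Transformers (BERT) is a well-known model based on the Transformer architecture, which uses self-attention to process input text.

BERT is deeply bidirectional: in every layer it is trained on both the left and the right context. Traditional language models are trained to predict the next word in a sentence (unidirectional), which limits the context the model can learn from.

BERT uses the encoder part of the Transformer architecture, which is designed to process input sequences.

BERT learns to represent the meaning of a word based on the context of the sentence.

OK, let's get back to DistilBERT.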

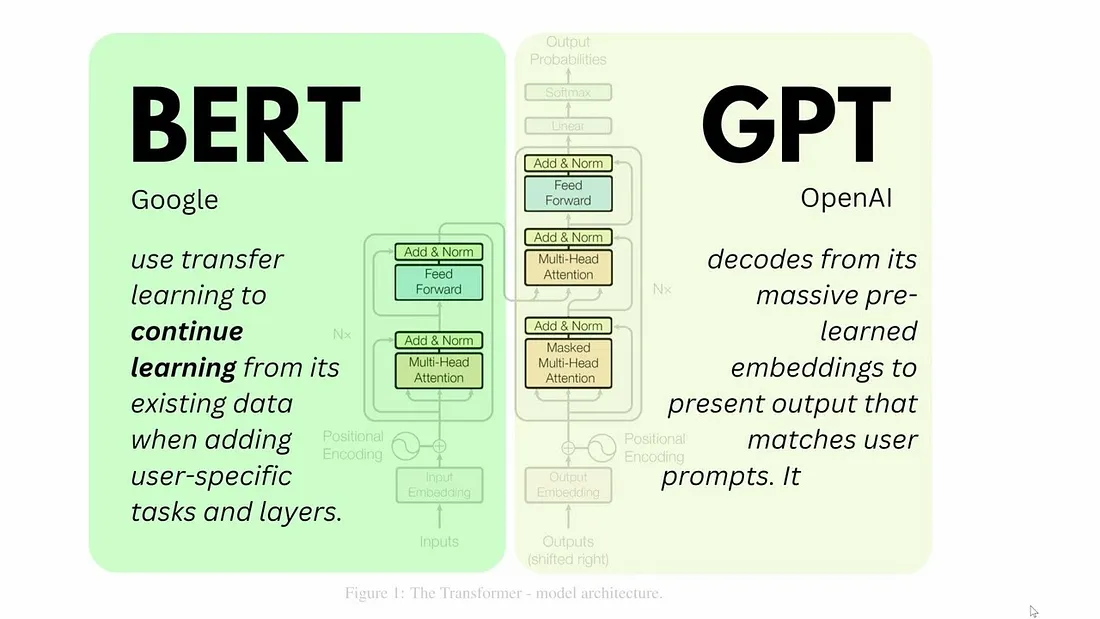

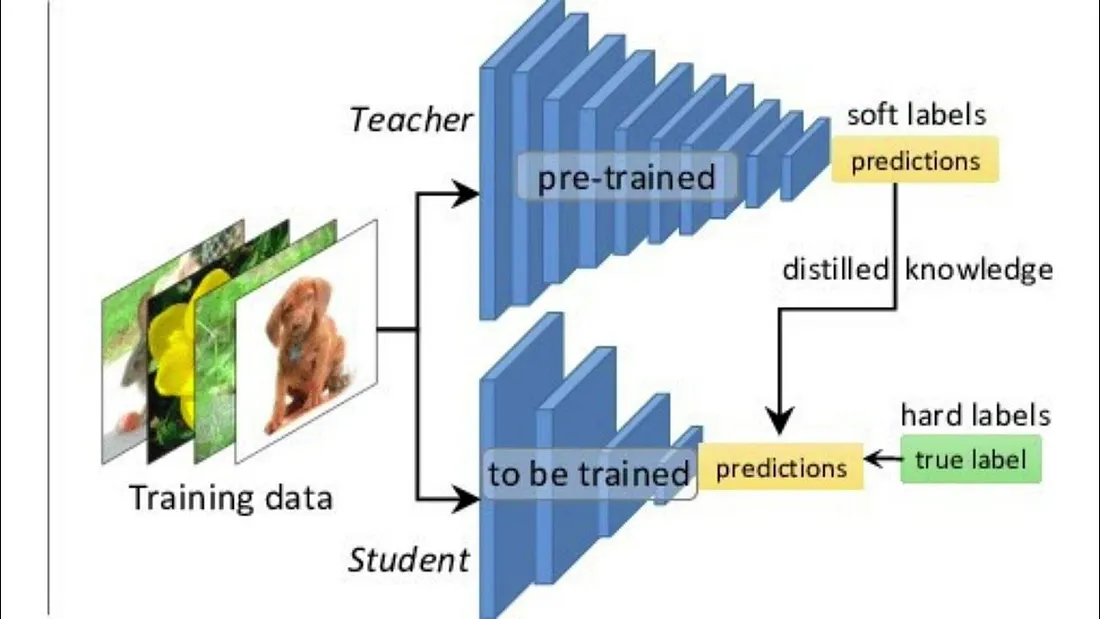

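DistilBERT is trained with a technique called "knowledge distillation".

Knowledge distillation trains a smaller model (the student) to mimic the behavior of a larger, more complex model (the teacher). For DistilBERT the teacher is BERT. The student learns to produce outputs similar to the teacher's, but with fewer parameters.

The student is trained not only on hard targets (the actual labels in the dataset) but also on soft targets, i.e. the output distribution (probabilities) produced by the teacher. Compared with hard labels, these soft targets carry richer information about the relationships between classes.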
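To make the hard-target and soft-target idea concrete, here is a minimal pure-Python sketch of a generic distillation loss for a single example. It is a simplified illustration, not DistilBERT's actual training objective; the `temperature` and `alpha` parameters and the example logits are assumptions chosen for the demonstration.

```python
import math

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature flattens the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, hard_label,
                      temperature=2.0, alpha=0.5):
    """Weighted sum of a soft-target term and a hard-target term (hypothetical weights)."""
    teacher_probs = softmax(teacher_logits, temperature)
    student_probs = softmax(student_logits, temperature)
    # Soft target: cross-entropy between the teacher's and the student's distributions.
    soft_loss = -sum(t * math.log(s) for t, s in zip(teacher_probs, student_probs))
    # Hard target: ordinary cross-entropy against the true label.
    hard_loss = -math.log(softmax(student_logits)[hard_label])
    return alpha * soft_loss + (1 - alpha) * hard_loss

teacher = [4.0, 1.0, 0.5]          # made-up teacher logits for three classes
close_student = [3.5, 1.2, 0.4]    # student that agrees with the teacher
far_student = [0.5, 1.0, 4.0]      # student that disagrees with the teacher

print(distillation_loss(close_student, teacher, hard_label=0))
print(distillation_loss(far_student, teacher, hard_label=0))
```

The student whose logits resemble the teacher's gets the lower loss, which is the signal that pushes the student toward the teacher's behavior.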

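The student model is smaller and faster and uses fewer computing resources, which makes it suitable for deployment where compute or memory is limited.

Since we are learning how to fine-tune a model, using a smaller one makes sense. Let's continue.

To process the training data we use a tokenizer.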

from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("distilbert-base-uncased")

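AutoTokenizer is a class that automatically detects and loads the appropriate tokenizer for the pretrained model you specify. A tokenizer converts text into a format the model understands, typically by splitting the text into tokens (words or subwords) and then mapping those tokens to numeric IDs.

uncased means the model does not distinguish between uppercase and lowercase letters: all text is lowercased.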

def preprocess_function(examples):
    return tokenizer(examples["text"], truncation=True)

tokenized_train = small_train_dataset.map(preprocess_function, batched=True)

tokenized_test = small_test_dataset.map(preprocess_function, batched=True)

print(tokenized_train[0])

"""

{'text': 'There is no relation at all between Fortier and Profiler but

the fact that both are police series about violent crimes. Profiler

looks crispy, Fortier looks classic. Profiler plots are quite simple. ...',

'label': 1,

'input_ids': [101, 2045, 2003, 2053, 7189, 2012, 2035, 2090, 3481, 3771,

1998, 6337, 2099, 2021, 1996, 2755, 2008, 2119, 2024, 2610, 2186, 2055,

...

6355, 6997, 1012, 6337, 2099, 3504, 15594, 2100, 1010, 3481, 3771, 3504,

2012, 2035, 1012, 1012, 1012, 102],

'attention_mask': [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

...

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

]}

"""

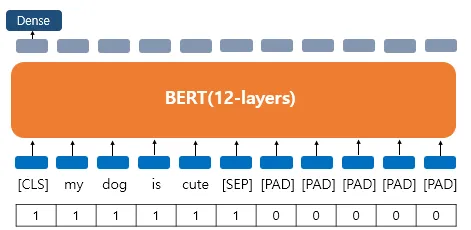

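The truncation=True argument ensures that if the tokenized text exceeds the maximum length the model can handle, it is truncated to fit.

- text: the original text of the example.

- label: the label associated with the example (used for supervised learning).

- input_ids: the numeric representation (token IDs) of the tokens obtained from the text. Each ID corresponds to a token in the tokenizer's vocabulary.

- attention_mask: a list of 0s and 1s with the same length as input_ids. The mask tells the model which parts of the sequence to attend to: 1 marks a real token that should be considered, while 0 marks padding. No padding has been added at this stage, so every value here is 1.

When working with Transformer-based models such as BERT, input sequences usually have different lengths. To process them efficiently in batches, all sequences in a batch must have the same length. This is achieved by padding.

The data collator pads the shorter sequences to match the length of the longest sequence in the batch. This ensures that all inputs in the batch have the same shape, which the model needs to process them efficiently.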
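As a rough picture of what padding to the longest sequence means, here is a small pure-Python sketch. The `pad_batch` helper is hypothetical and only mimics the idea; it is not how the library's collator is implemented. The pad ID of 0 and right-side padding match the DistilBERT tokenizer settings used in this guide.

```python
def pad_batch(sequences, pad_id=0):
    """Pad every list of token IDs to the length of the longest one in the batch."""
    longest = max(len(seq) for seq in sequences)
    input_ids, attention_mask = [], []
    for seq in sequences:
        n_pad = longest - len(seq)
        input_ids.append(seq + [pad_id] * n_pad)              # pad on the right
        attention_mask.append([1] * len(seq) + [0] * n_pad)   # 1 = real token, 0 = padding
    return {"input_ids": input_ids, "attention_mask": attention_mask}

batch = pad_batch([[101, 2045, 2003, 102], [101, 2053, 102]])
print(batch["input_ids"])       # [[101, 2045, 2003, 102], [101, 2053, 102, 0]]
print(batch["attention_mask"])  # [[1, 1, 1, 1], [1, 1, 1, 0]]
```

Because the target length is chosen per batch, short batches stay short, which wastes less computation than padding everything to the model maximum of 512.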

from transformers import DataCollatorWithPadding

data_collator = DataCollatorWithPadding(tokenizer=tokenizer)

print(data_collator)

"""

DataCollatorWithPadding(tokenizer=DistilBertTokenizerFast(name_or_path='distilbert-base-uncased', vocab_size=30522, model_max_length=512, is_fast=True, padding_side='right', truncation_side='right', special_tokens={'unk_token': '[UNK]', 'sep_token': '[SEP]', 'pad_token': '[PAD]', 'cls_token': '[CLS]', 'mask_token': '[MASK]'}, clean_up_tokenization_spaces=True), added_tokens_decoder={

0: AddedToken("[PAD]", rstrip=False, lstrip=False, single_word=False, normalized=False, special=True),

100: AddedToken("[UNK]", rstrip=False, lstrip=False, single_word=False, normalized=False, special=True),

101: AddedToken("[CLS]", rstrip=False, lstrip=False, single_word=False, normalized=False, special=True),

102: AddedToken("[SEP]", rstrip=False, lstrip=False, single_word=False, normalized=False, special=True),

103: AddedToken("[MASK]", rstrip=False, lstrip=False, single_word=False, normalized=False, special=True),

}, padding=True, max_length=None, pad_to_multiple_of=None, return_tensors='pt')

"""

Preprocessing is done. Let's move on to training.

from transformers import AutoModelForSequenceClassification

model = AutoModelForSequenceClassification.from_pretrained("distilbert-base-uncased", num_labels=2)

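The AutoModelForSequenceClassification class is designed for sequence classification tasks. Setting num_labels=2 configures the model for binary classification (two possible output classes).

We need to define our evaluation metrics in a way that is compatible with the Transformers library.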

import numpy as np
from datasets import load_metric

def compute_metrics(eval_pred):
    # Load the accuracy and F1 metrics
    load_accuracy = load_metric("accuracy")
    load_f1 = load_metric("f1")

    # eval_pred is a (logits, labels) tuple; take the highest-scoring class per example
    logits, labels = eval_pred
    predictions = np.argmax(logits, axis=-1)
    accuracy = load_accuracy.compute(predictions=predictions, references=labels)["accuracy"]
    f1 = load_f1.compute(predictions=predictions, references=labels)["f1"]
    return {"accuracy": accuracy, "f1": f1}

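The load_metric function loads a predefined metric. Note that load_metric has been removed from recent releases of the datasets library; there, the separate evaluate library provides the equivalent evaluate.load("accuracy") and evaluate.load("f1").

The compute_metrics function takes a single argument, eval_pred, a tuple with two elements:

- logits: the raw, unnormalized scores the model produces for each class (the output of the final linear layer).

- labels: the true labels of the data, compared against the predicted labels to compute accuracy and F1.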
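To show what happens between the logits and the final numbers, here is a dependency-free sketch that computes accuracy and binary F1 by hand. The `accuracy_and_f1` helper and the example logits are made up for illustration; the metric libraries perform the same arithmetic with more options.

```python
def argmax(row):
    """Index of the largest value in a list."""
    return max(range(len(row)), key=lambda i: row[i])

def accuracy_and_f1(logits, labels, positive=1):
    """Accuracy and F1 for the positive class, computed from raw logits."""
    predictions = [argmax(row) for row in logits]
    correct = sum(p == y for p, y in zip(predictions, labels))
    tp = sum(p == positive and y == positive for p, y in zip(predictions, labels))
    fp = sum(p == positive and y != positive for p, y in zip(predictions, labels))
    fn = sum(p != positive and y == positive for p, y in zip(predictions, labels))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": correct / len(labels), "f1": f1}

# Four made-up examples: predictions are [0, 1, 0, 1], true labels are [0, 1, 1, 1].
logits = [[2.0, -1.0], [0.1, 0.9], [1.5, 0.3], [-0.2, 0.4]]
labels = [0, 1, 1, 1]
print(accuracy_and_f1(logits, labels))  # accuracy 0.75, F1 approximately 0.8
```

Three of four predictions are correct, and the one missed positive lowers recall to 2/3 while precision stays at 1, which gives an F1 of about 0.8.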

from transformers import TrainingArguments, Trainer

repo_name = "finetuning-sentiment-model-3000-samples"

training_args = TrainingArguments(
    output_dir=repo_name,
    learning_rate=2e-5,
    per_device_train_batch_size=16,
    per_device_eval_batch_size=16,
    num_train_epochs=2,
    weight_decay=0.01,
    save_strategy="epoch",
    push_to_hub=True,
)

trainer = Trainer(
    model=model,
    args=training_args,
    train_dataset=tokenized_train,
    eval_dataset=tokenized_test,
    tokenizer=tokenizer,
    data_collator=data_collator,
    compute_metrics=compute_metrics,
)

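repo_name defines the name of the model repository on Hugging Face. The trained model will be pushed to this repo.

TrainingArguments defines the parameters that control the training process.

- output_dir: the directory where model checkpoints and training logs are saved.

- learning_rate: the learning rate of the optimizer.

- per_device_train_batch_size: the batch size per device for training.

- per_device_eval_batch_size: the batch size per device for evaluation.

- num_train_epochs: the number of training epochs.

- weight_decay: the weight decay applied to the model weights, a regularization closely related to L2 regularization.

- save_strategy: when model checkpoints are saved (here, after every epoch).

- push_to_hub: whether to push the model to the Hugging Face Hub.

Trainer handles training, evaluation and pushing the model to the Hugging Face Hub.

- model: the model to train (defined earlier).

- args: the training arguments.

- train_dataset and eval_dataset: the preprocessed datasets.

- tokenizer: the tokenizer used to preprocess the data.

- data_collator: the data collator defined earlier.

- compute_metrics: the function that computes the evaluation metrics.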

print(training_args)

"""

TrainingArguments(

_n_gpu=1,

accelerator_config={'split_batches': False, 'dispatch_batches': None, 'even_batches': True, 'use_seedable_sampler': True, 'non_blocking': False, 'gradient_accumulation_kwargs': None, 'use_configured_state': False},

adafactor=False,

adam_beta1=0.9,

adam_beta2=0.999,

adam_epsilon=1e-08,

auto_find_batch_size=False,

batch_eval_metrics=False,

bf16=False,

bf16_full_eval=False,

data_seed=None,

dataloader_drop_last=False,

dataloader_num_workers=0,

dataloader_persistent_workers=False,

dataloader_pin_memory=True,

dataloader_prefetch_factor=None,

ddp_backend=None,

ddp_broadcast_buffers=None,

ddp_bucket_cap_mb=None,

ddp_find_unused_parameters=None,

ddp_timeout=1800,

debug=[],

deepspeed=None,

disable_tqdm=False,

dispatch_batches=None,

do_eval=False,

do_predict=False,

do_train=False,

eval_accumulation_steps=None,

eval_delay=0,

eval_do_concat_batches=True,

eval_on_start=False,

eval_steps=None,

eval_strategy=no,

evaluation_strategy=None,

fp16=False,

fp16_backend=auto,

fp16_full_eval=False,

fp16_opt_level=O1,

fsdp=[],

fsdp_config={'min_num_params': 0, 'xla': False, 'xla_fsdp_v2': False, 'xla_fsdp_grad_ckpt': False},

fsdp_min_num_params=0,

fsdp_transformer_layer_cls_to_wrap=None,

full_determinism=False,

gradient_accumulation_steps=1,

gradient_checkpointing=False,

gradient_checkpointing_kwargs=None,

greater_is_better=None,

group_by_length=False,

half_precision_backend=auto,

hub_always_push=False,

hub_model_id=None,

hub_private_repo=False,

hub_strategy=every_save,

hub_token=<HUB_TOKEN>,

ignore_data_skip=False,

include_inputs_for_metrics=False,

include_num_input_tokens_seen=False,

include_tokens_per_second=False,

jit_mode_eval=False,

label_names=None,

label_smoothing_factor=0.0,

learning_rate=2e-05,

length_column_name=length,

load_best_model_at_end=False,

local_rank=0,

log_level=passive,

log_level_replica=warning,

log_on_each_node=True,

logging_dir=finetuning-sentiment-model-3000-samples/runs/Aug10_12-22-35_1a90537ce774,

logging_first_step=False,

logging_nan_inf_filter=True,

logging_steps=500,

logging_strategy=steps,

lr_scheduler_kwargs={},

lr_scheduler_type=linear,

max_grad_norm=1.0,

max_steps=-1,

metric_for_best_model=None,

mp_parameters=,

neftune_noise_alpha=None,

no_cuda=False,

num_train_epochs=2,

optim=adamw_torch,

optim_args=None,

optim_target_modules=None,

output_dir=finetuning-sentiment-model-3000-samples,

overwrite_output_dir=False,

past_index=-1,

per_device_eval_batch_size=16,

per_device_train_batch_size=16,

prediction_loss_only=False,

push_to_hub=True,

push_to_hub_model_id=None,

push_to_hub_organization=None,

push_to_hub_token=<PUSH_TO_HUB_TOKEN>,

ray_scope=last,

remove_unused_columns=True,

report_to=['tensorboard'],

restore_callback_states_from_checkpoint=False,

resume_from_checkpoint=None,

run_name=finetuning-sentiment-model-3000-samples,

save_on_each_node=False,

save_only_model=False,

save_safetensors=True,

save_steps=500,

save_strategy=epoch,

save_total_limit=None,

seed=42,

skip_memory_metrics=True,

split_batches=None,

tf32=None,

torch_compile=False,

torch_compile_backend=None,

torch_compile_mode=None,

torchdynamo=None,

tpu_metrics_debug=False,

tpu_num_cores=None,

use_cpu=False,

use_ipex=False,

use_legacy_prediction_loop=False,

use_mps_device=False,

warmup_ratio=0.0,

warmup_steps=0,

weight_decay=0.01,

)

"""

print(trainer)

# <transformers.trainer.Trainer object at 0x7f5398514130>

Let's start training.

trainer.train()

"""

[376/376 04:51, Epoch 2/2]

Step Training Loss

TrainOutput(global_step=376, training_loss=0.3059146353539, metrics={'train_runtime': 294.2756, 'train_samples_per_second': 20.389, 'train_steps_per_second': 1.278, 'total_flos': 782725021021056.0, 'train_loss': 0.3059146353539, 'epoch': 2.0})

"""

Training took 4 minutes 51 seconds on Colab.
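The 376 steps in the progress output above can be checked by hand from the training settings. The sketch below assumes a single device and no gradient accumulation, which is what the printed TrainingArguments show (_n_gpu=1, gradient_accumulation_steps=1); the helper name is hypothetical.

```python
import math

def total_training_steps(num_samples, batch_size, epochs):
    """Number of optimizer steps when the last, smaller batch of each epoch is kept."""
    steps_per_epoch = math.ceil(num_samples / batch_size)
    return steps_per_epoch * epochs

# 3,000 training samples, batch size 16, 2 epochs, as configured in this guide.
print(total_training_steps(num_samples=3000, batch_size=16, epochs=2))  # 376
```

3,000 samples do not divide evenly by 16, so each epoch has 187 full batches plus one half-sized batch, giving 188 steps per epoch.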

trainer.evaluate()

"""

{'eval_loss': 0.3140079379081726,

'eval_accuracy': 0.88,

'eval_f1': 0.8838709677419355,

'eval_runtime': 50.3961,

'eval_samples_per_second': 5.953,

'eval_steps_per_second': 0.377,

'epoch': 2.0}

"""

Upload the trained model to the Hugging Face repository:

trainer.push_to_hub()

your_username/finetuning-sentiment-model-3000-samples

Now we can use the model.

from transformers import pipeline

sentiment_model = pipeline(model="yeniguno/finetuning-sentiment-model-3000-samples", task='text-classification')

sentiment_model(["I love this move", "This movie sucks!"])

"""

[{'label': 'LABEL_1', 'score': 0.9655360579490662},

{'label': 'LABEL_0', 'score': 0.9486633539199829}]

"""

I can use the same code to train on my laptop; only this part needs to change.

training_args = TrainingArguments(
    output_dir=repo_name,
    learning_rate=2e-5,
    per_device_train_batch_size=16,
    per_device_eval_batch_size=16,
    num_train_epochs=2,
    weight_decay=0.01,
    save_strategy="epoch",
    push_to_hub=True,
    no_cuda=True,  # Ensure this is True to force the use of the CPU
    use_mps_device=False,  # Disable MPS as it might not be available
)

Training took 67 minutes on my laptop.

PyTorch and External Data

In this demo we use data that does not come from the Hugging Face libraries and train the base model with PyTorch.

import pandas as pd

import torch

import torch.nn as nn

from torch.utils.data import Dataset, DataLoader, random_split

from transformers import DistilBertModel, DistilBertTokenizerFast

from torch.optim import AdamW  # the AdamW exported by transformers is deprecated

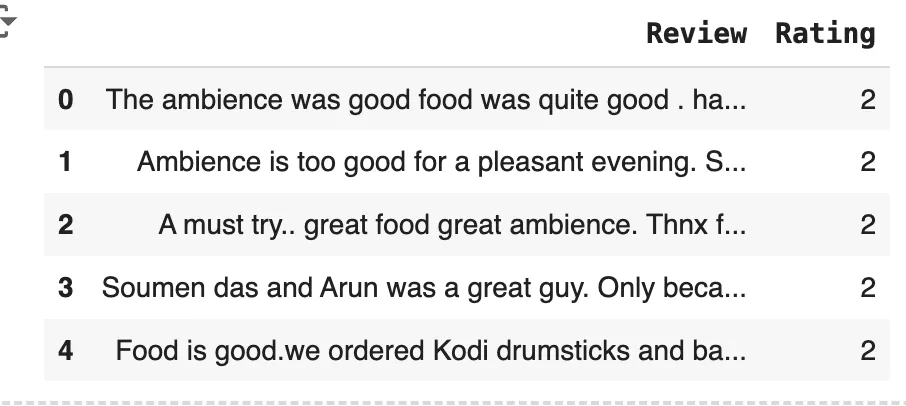

We will use a dataset from Kaggle.

!wget https://raw.githubusercontent.com/kyuz0/llm-chronicles/main/datasets/restaurant_reviews.csv

# Load the dataset

df = pd.read_csv('restaurant_reviews.csv')

# Map sentiments to numerical labels

sentiment_mapping = {'negative': 0, 'neutral': 1, 'positive': 2}

df['Rating'] = df['Rating'].map(sentiment_mapping)

df.head()

PyTorch's Dataset class is used to load and preprocess the data needed for model training.

class ReviewDataset(Dataset):
    def __init__(self, csv_file, tokenizer, max_length):
        self.dataset = pd.read_csv(csv_file)
        self.tokenizer = tokenizer
        self.max_length = max_length
        # Map sentiments to numerical labels
        self.label_dict = {'negative': 0, 'neutral': 1, 'positive': 2}

    def __len__(self):
        return len(self.dataset)

    def __getitem__(self, idx):
        review_text = self.dataset.iloc[idx, 0]
        sentiment = self.dataset.iloc[idx, 1]
        labels = self.label_dict[sentiment]

        # Tokenize the review text
        encoding = self.tokenizer.encode_plus(
            review_text,
            add_special_tokens=True,
            max_length=self.max_length,
            return_token_type_ids=False,
            padding='max_length',
            return_attention_mask=True,
            return_tensors='pt',
            truncation=True
        )

        return {
            'review_text': review_text,
            'input_ids': encoding['input_ids'].flatten(),
            'attention_mask': encoding['attention_mask'].flatten(),
            'labels': torch.tensor(labels, dtype=torch.long)
        }

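- csv_file: path to the CSV file containing the dataset.

- tokenizer: the tokenizer object, here the DistilBERT tokenizer, used to convert text into tokens the model can process.

- max_length: the maximum length of a tokenized sequence. Longer sequences are truncated and shorter ones are padded.

- self.label_dict: maps the textual sentiment labels ('negative', 'neutral', 'positive') to numeric labels (0, 1, 2).

__len__(self)

Purpose: returns the total number of samples in the dataset.

Operation: simply returns the length of the dataset (len(self.dataset)), i.e. the number of rows in the CSV file.

__getitem__(self, idx)

Purpose: reads a single sample (data point) from the dataset at the given index idx.

Parameters:

idx: the index of the sample to retrieve.

Key operations:

- Retrieve data: extracts the review text and the sentiment label, and converts the label to a number using self.label_dict.

- Tokenize: tokenizes the review text with the supplied tokenizer.

- add_special_tokens=True: adds special tokens such as [CLS] and [SEP] to the sequence.

- max_length=self.max_length: truncates or pads the sequence to the maximum length.

- return_token_type_ids=False: token type IDs are not needed in this case.

- padding='max_length': pads the sequence to the maximum length if it is shorter.

- return_attention_mask=True: returns the attention mask.

- return_tensors='pt': returns the output as PyTorch tensors.

- truncation=True: truncates the sequence if it exceeds the maximum length.

Returns: a dictionary containing:

- review_text: the original review text.

- input_ids: the flattened tensor of token IDs.

- attention_mask: the flattened attention mask tensor.

- labels: the numeric sentiment label, as a PyTorch tensor.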
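The combined effect of add_special_tokens, truncation and padding='max_length' can be pictured with a short pure-Python sketch. The `pad_or_truncate` helper is hypothetical and only approximates what the tokenizer does; the special token IDs (101 for [CLS], 102 for [SEP], 0 for [PAD]) are those of the DistilBERT tokenizer used in this guide, and max_length is assumed to be at least 2.

```python
def pad_or_truncate(token_ids, max_length, cls_id=101, sep_id=102, pad_id=0):
    """Wrap token IDs in [CLS]/[SEP], then truncate or pad to exactly max_length."""
    body = token_ids[: max_length - 2]        # leave room for the two special tokens
    input_ids = [cls_id] + body + [sep_id]
    attention_mask = [1] * len(input_ids)
    n_pad = max_length - len(input_ids)
    return input_ids + [pad_id] * n_pad, attention_mask + [0] * n_pad

# Short input: padded up to max_length.
ids, mask = pad_or_truncate([1996, 2833, 2001, 2204], max_length=8)
print(ids)   # [101, 1996, 2833, 2001, 2204, 102, 0, 0]
print(mask)  # [1, 1, 1, 1, 1, 1, 0, 0]

# Long input: truncated so that [CLS] and [SEP] still fit.
short_ids, short_mask = pad_or_truncate([1996, 2833, 2001, 2204], max_length=5)
print(short_ids)   # [101, 1996, 2833, 2001, 102]
```

Every sample comes out with the same length regardless of the review's size, which is why the dataset can be batched by a plain DataLoader without a custom collator.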

tokenizer = DistilBertTokenizerFast.from_pretrained('distilbert-base-uncased')

review_dataset = ReviewDataset('restaurant_reviews.csv', tokenizer, 512)

print(review_dataset)

"""

<__main__.ReviewDataset object at 0x792342a7baf0>

"""

review_dataset[0]

"""

{'review_text': 'The ambience was good food was quite good . had Saturday lunch which was cost effective . Good place for a sate brunch. One can also chill with friends and or parents. Waiter Soumen Das was really courteous and helpful.',

'input_ids': tensor([ 101, 1996, 2572, 11283, 5897, 2001, 2204, 2833, 2001, 3243,

2204, 1012, 2018, 5095, 6265, 2029, 2001, 3465, 4621, 1012,

2204, 2173, 2005, 1037, 2938, 2063, 7987, 4609, 2818, 1012,

2028, 2064, 2036, 10720, 2007, 2814, 1998, 2030, 3008, 1012,

15610, 2061, 27417, 8695, 2001, 2428, 2457, 14769, 1998, 14044,

1012, 102, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0]),

'attention_mask': tensor([1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0]),

'labels': tensor(2)}

"""

We can decode the encoding.

print(tokenizer.decode(review_dataset[0]['input_ids']))

"""

[CLS] the ambience was good food was quite good. had saturday lunch which was cost effective. good place for a sate brunch. one can also chill with friends and or parents. waiter soumen das was really courteous and helpful. [SEP] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] 
[PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD]

"""

Let's split the data into training and test subsets.

from torch.utils.data import DataLoader, random_split

# Split dataset into training and validation

train_size = int(0.8 * len(df))

val_size = len(df) - train_size

train_dataset, test_dataset = random_split(review_dataset, [train_size, val_size])

train_loader = DataLoader(train_dataset, batch_size=16, shuffle=True)

test_loader = DataLoader(test_dataset, batch_size=16)

len(train_loader), len(test_loader)

"""

(498, 125)

"""

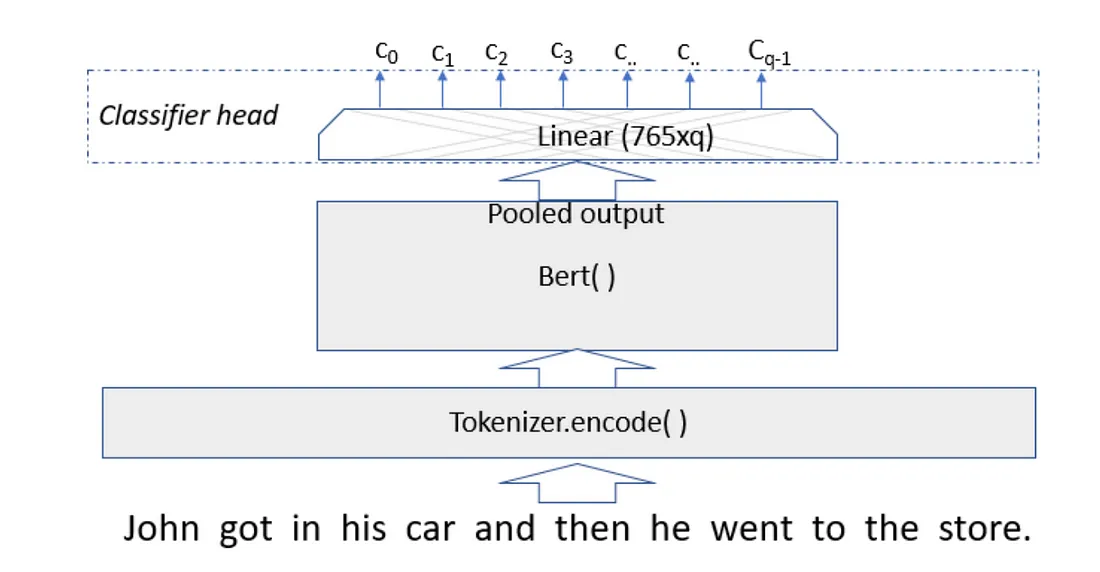

We create a custom neural network module that inherits from PyTorch's nn.Module.

class CustomDistilBertForSequenceClassification(nn.Module):
    def __init__(self, num_labels=3):
        super(CustomDistilBertForSequenceClassification, self).__init__()
        self.distilbert = DistilBertModel.from_pretrained('distilbert-base-uncased')
        self.pre_classifier = nn.Linear(768, 768)
        self.dropout = nn.Dropout(0.3)
        self.classifier = nn.Linear(768, num_labels)

    def forward(self, input_ids, attention_mask):
        distilbert_output = self.distilbert(input_ids=input_ids, attention_mask=attention_mask)
        hidden_state = distilbert_output[0]  # (batch_size, sequence_length, hidden_size)
        pooled_output = hidden_state[:, 0]  # we take the representation of the [CLS] token (first token)
        pooled_output = self.pre_classifier(pooled_output)
        pooled_output = nn.ReLU()(pooled_output)
        pooled_output = self.dropout(pooled_output)  # regularization
        logits = self.classifier(pooled_output)
        return logits

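num_labels: the number of classes. The default of 3 sets the model up for a three-class problem (negative, neutral and positive sentiment).

self.distilbert: loads the pretrained DistilBERT model from the Hugging Face model hub. This is the backbone of the model; it produces hidden states from the input text.

self.pre_classifier: a linear layer that transforms the hidden state of the [CLS] token. It takes an input of size 768 (DistilBERT's hidden size) and produces an output of the same size.

self.dropout: a dropout layer with probability 0.3 that randomly zeroes part of its input during training to prevent overfitting.

self.classifier: the final linear layer, which maps the pooled output to the number of labels (3 here).

forward(self, input_ids, attention_mask): defines the forward pass of the model, i.e. how the network transforms the input data into the output.

In Transformer models such as BERT, every input token (word or subword) is converted into a high-dimensional vector called a hidden state. This hidden state represents the meaning of the token in the context of the sequence.

The pooled output (pooled_output) is an aggregate representation that summarizes the whole input sequence in a single vector. This is essential for tasks such as classification, where we need a fixed-size representation of a variable-length sequence.

In BERT-like models, the pooled output usually comes from the hidden state of the first token in the sequence, the [CLS] token.

The hidden state of the [CLS] token is special because it can capture information about the entire sequence, which makes it useful for classification.

The pooled output is usually obtained by applying a dense layer followed by a non-linear activation function to the hidden state of the [CLS] token.

- distilbert_output holds the output of the DistilBERT model.

- hidden_state is the output tensor of shape (batch_size, sequence_length, hidden_size).

- pooled_output extracts the hidden state of the [CLS] token.

- The pooled output passes through the pre-classifier, a ReLU activation and dropout.

- Finally, the classifier produces the logits for the classification task.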
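The data flow of the classification head can be traced on tiny numbers without PyTorch. The sketch below uses a hidden size of 2 instead of 768 and made-up weights, and it skips dropout, as the model does in evaluation mode; all names and values here are illustrative assumptions.

```python
def linear(vector, weights, bias):
    """Dense layer: one output value per weight row."""
    return [sum(w * x for w, x in zip(row, vector)) + b for row, b in zip(weights, bias)]

def relu(vector):
    """Replace negative values with zero."""
    return [max(0.0, x) for x in vector]

def classify_from_cls(hidden_state, pre_w, pre_b, cls_w, cls_b):
    """hidden_state is nested lists shaped (batch_size, sequence_length, hidden_size)."""
    logits = []
    for sequence in hidden_state:                     # loop over the batch
        pooled = sequence[0]                          # hidden state of the first token, [CLS]
        pooled = relu(linear(pooled, pre_w, pre_b))   # pre-classifier + ReLU
        logits.append(linear(pooled, cls_w, cls_b))   # classifier, one score per class
    return logits

hidden_state = [
    [[1.0, -2.0], [0.5, 0.5], [9.0, 9.0]],   # sequence 1: [CLS], then two other tokens
    [[-1.0, 3.0], [0.0, 0.0], [7.0, 7.0]],   # sequence 2
]
pre_w, pre_b = [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]                        # identity layer
cls_w, cls_b = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], [0.0, 0.0, 0.0]       # 3 classes

print(classify_from_cls(hidden_state, pre_w, pre_b, cls_w, cls_b))
# [[1.0, 0.0, 1.0], [0.0, 3.0, 3.0]]
```

Only the first vector of each sequence reaches the classifier; the other token vectors influence the result solely through the attention inside the backbone.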

model = CustomDistilBertForSequenceClassification()

Now, the training part...

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

model.to(device)

optimizer = AdamW(model.parameters(), lr=5e-5)

model.train()

for epoch in range(10):
    for i, batch in enumerate(train_loader):
        # Move the batch to the selected device
        input_ids = batch['input_ids'].to(device)
        attention_mask = batch['attention_mask'].to(device)
        labels = batch['labels'].to(device)

        optimizer.zero_grad()  # reset gradients from the previous step
        logits = model(input_ids=input_ids, attention_mask=attention_mask)
        loss = nn.CrossEntropyLoss()(logits, labels)
        loss.backward()  # backpropagation
        optimizer.step()  # weight update

        if (i + 1) % 100 == 0:
            print(f"Epoch {epoch + 1}, Batch {i + 1}, Loss: {loss.item():.4f}")

"""

Epoch 1, Batch 100, Loss: 0.2725

Epoch 1, Batch 200, Loss: 0.4001

Epoch 1, Batch 300, Loss: 0.1839

Epoch 1, Batch 400, Loss: 0.3460

Epoch 2, Batch 100, Loss: 0.2771

Epoch 2, Batch 200, Loss: 0.4541

Epoch 2, Batch 300, Loss: 0.4895

Epoch 2, Batch 400, Loss: 0.2796

Epoch 3, Batch 100, Loss: 0.1542

Epoch 3, Batch 200, Loss: 0.0881

Epoch 3, Batch 300, Loss: 0.1238

Epoch 3, Batch 400, Loss: 0.2519

Epoch 4, Batch 100, Loss: 0.0036

Epoch 4, Batch 200, Loss: 0.5554

Epoch 4, Batch 300, Loss: 0.1186

Epoch 4, Batch 400, Loss: 0.0182

Epoch 5, Batch 100, Loss: 0.0705

Epoch 5, Batch 200, Loss: 0.1194

Epoch 5, Batch 300, Loss: 0.0462

Epoch 5, Batch 400, Loss: 0.0863

Epoch 6, Batch 100, Loss: 0.1655

Epoch 6, Batch 200, Loss: 0.0095

Epoch 6, Batch 300, Loss: 0.1298

Epoch 6, Batch 400, Loss: 0.0023

Epoch 7, Batch 100, Loss: 0.0457

Epoch 7, Batch 200, Loss: 0.0195

Epoch 7, Batch 300, Loss: 0.0237

Epoch 7, Batch 400, Loss: 0.0316

Epoch 8, Batch 100, Loss: 0.3937

Epoch 8, Batch 200, Loss: 0.0027

Epoch 8, Batch 300, Loss: 0.0047

Epoch 8, Batch 400, Loss: 0.0429

Epoch 9, Batch 100, Loss: 0.0456

Epoch 9, Batch 200, Loss: 0.0138

Epoch 9, Batch 300, Loss: 0.0434

Epoch 9, Batch 400, Loss: 0.0187

Epoch 10, Batch 100, Loss: 0.0330

Epoch 10, Batch 200, Loss: 0.0485

Epoch 10, Batch 300, Loss: 0.0406

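Epoch 10, Batch 400, Loss: 0.0097

"""

We create an AdamW optimizer to update the model weights during training. It takes the model parameters and a learning rate (lr=5e-5). After backpropagation has computed the gradients of the loss, the optimizer uses them to adjust the model weights.

model.train() puts the model in training mode (important for certain layers such as dropout).

The outer loop iterates over a fixed number of epochs (10 here). The inner loop iterates over the batches in train_loader.

Every element of a training batch (input_ids, attention_mask, labels) is moved to the selected device (GPU or CPU). This ensures the computation runs on the chosen hardware, which speeds up training when a GPU is available.

optimizer.zero_grad() resets the gradients accumulated in the previous iteration. nn.CrossEntropyLoss is used as the loss function; it suits multi-class classification tasks such as this sentiment analysis.

loss.backward() performs backpropagation, computing the gradients of the loss with respect to the model parameters.

optimizer.step() uses the computed gradients to update the model weights in the direction that reduces the loss. This is the core of the training process.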
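To see what the loss value measures, here is a pure-Python sketch of cross-entropy computed directly from logits, averaged over a batch as nn.CrossEntropyLoss does by default. The helper names and the example logits are made up for illustration.

```python
import math

def cross_entropy(logits, label):
    """Negative log of the softmax probability assigned to the true class."""
    peak = max(logits)  # subtract the max for numerical stability
    log_sum_exp = peak + math.log(sum(math.exp(x - peak) for x in logits))
    return log_sum_exp - logits[label]

def batch_loss(batch_logits, labels):
    """Mean cross-entropy over a batch."""
    return sum(cross_entropy(l, y) for l, y in zip(batch_logits, labels)) / len(labels)

uniform = cross_entropy([0.0, 0.0, 0.0], 2)     # no preference among 3 classes: log(3)
confident = cross_entropy([0.1, 0.2, 5.0], 2)   # high score on the true class: small loss
wrong = cross_entropy([5.0, 0.2, 0.1], 2)       # high score on a wrong class: large loss
print(uniform, confident, wrong)
print(batch_loss([[0.1, 0.2, 5.0], [5.0, 0.2, 0.1]], [2, 2]))
```

A model that spreads its scores evenly over three classes starts at a loss of log(3), about 1.10, so the values well below 0.1 printed late in training indicate confident, mostly correct predictions on those batches.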

We need to evaluate the trained model.

model.eval()

total_correct = 0

total = 0

for batch in test_loader:
    input_ids = batch['input_ids'].to(device)
    attention_mask = batch['attention_mask'].to(device)
    labels = batch['labels'].to(device)

    with torch.inference_mode():
        logits = model(input_ids=input_ids, attention_mask=attention_mask)

    predictions = torch.argmax(logits, dim=1)
    total_correct += (predictions == labels).sum().item()
    total += predictions.size(0)

print(f'Test Accuracy: {total_correct / total:.4f}')

# Test Accuracy: 0.8559

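model.eval() puts the model in evaluation mode. This changes the behavior of training-specific layers: dropout is disabled, and batch normalization layers, where a model has them, use their running statistics instead of batch statistics.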
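The difference between training mode and evaluation mode is easiest to see on dropout itself. Below is a minimal pure-Python sketch of inverted dropout, the scheme nn.Dropout uses (survivors are scaled by 1 / (1 - p) during training); the function name and the `rng` parameter are assumptions made for this illustration.

```python
import random

def dropout(values, p=0.3, training=True, rng=random):
    """Zero each value with probability p and rescale the survivors; identity in eval mode."""
    if not training or p == 0.0:
        return list(values)
    keep = 1.0 - p
    return [v / keep if rng.random() < keep else 0.0 for v in values]

activations = [1.0] * 10
train_out = dropout(activations, training=True, rng=random.Random(0))
eval_out = dropout(activations, training=False)
print(train_out)  # a mix of 0.0 and 1 / 0.7, depending on the random draws
print(eval_out)   # unchanged: ten values of 1.0
```

Forgetting to call model.eval() leaves this randomness switched on, so the same input can produce different predictions from one call to the next.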

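with torch.inference_mode():
    logits = model(input_ids=input_ids, attention_mask=attention_mask)

- Disables gradient computation during inference for efficiency.

- Passes the input batch through the model to obtain the logits.

predictions = torch.argmax(logits, dim=1)

total_correct += (predictions == labels).sum().item()

- torch.argmax converts the logits into predicted class labels.

- The predictions are compared with the true labels, and total_correct is increased by the number of correct ones.

Finally, we define a function that predicts the sentiment of a given text.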

def predict_sentiment(review_text, model, tokenizer, max_length=512):
    """
    Predicts the sentiment of a given review text.

    Args:
    - review_text (str): The review text to analyze.
    - model (torch.nn.Module): The fine-tuned sentiment analysis model.
    - tokenizer (PreTrainedTokenizer): The tokenizer for encoding the text.
    - max_length (int): The maximum sequence length for the model.

    Returns:
    - sentiment (str): The predicted sentiment label ('negative', 'neutral', 'positive').
    """
    # Ensure the model is in evaluation mode
    model.eval()

    # Tokenize the input text
    encoding = tokenizer.encode_plus(
        review_text,
        add_special_tokens=True,
        max_length=max_length,
        return_token_type_ids=False,
        padding='max_length',
        return_attention_mask=True,
        return_tensors='pt',
        truncation=True
    )

    input_ids = encoding['input_ids']
    attention_mask = encoding['attention_mask']

    # Move tensors to the same device as the model
    input_ids = input_ids.to(device)
    attention_mask = attention_mask.to(device)

    with torch.inference_mode():
        # Forward pass, get logits
        logits = model(input_ids=input_ids, attention_mask=attention_mask)

    # Extract the highest scoring output
    prediction = torch.argmax(logits, dim=1).item()

    # Map prediction to label
    label_dict = {0: 'negative', 1: 'neutral', 2: 'positive'}
    sentiment = label_dict[prediction]

    return sentiment

# Test

review_1 = "We ordered from Papa Johns a so-called pizza... what to say? I'd rather eat a piece of dry cardboard, calling this pizza is an insult to Italians! "

review_2 = "I guess PizzaHut is decent but far from the Italian pizza. This is not going to blow you away, but still quite ok in the end."

review_3 = "Gino's pizza is what authentical Neapolian pizza tastes like, highly recommended."

print(predict_sentiment(review_1, model, tokenizer))

print(predict_sentiment(review_2, model, tokenizer))

print(predict_sentiment(review_3, model, tokenizer))

"""

negative

neutral

positive

"""