[Guide] Multimodal Agent Applications

Introduction

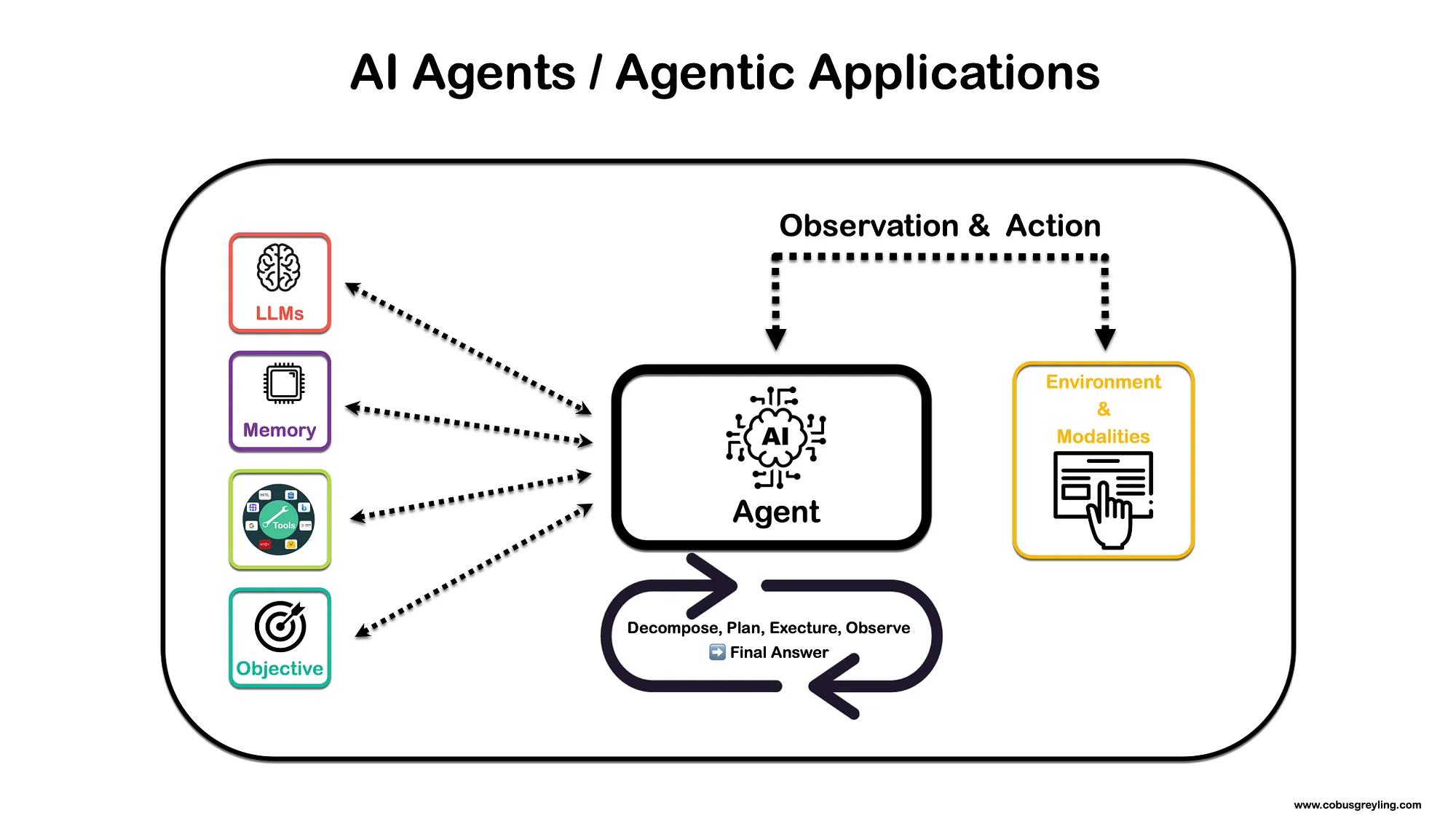

An AI agent is a software program designed to perform tasks or make decisions autonomously, using the tools available to it.

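As the figure illustrates, an agent relies on one or more large language models (LLMs) or foundation models to break a complex task down into manageable sub-tasks.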

These sub-tasks are organised into a sequence of actions that the agent can execute.

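The agent also has access to a set of defined tools. Each tool has a description, which helps the agent decide when and how to use the tools, in sequence, to work through the challenge and reach a final conclusion.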
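The following minimal sketch makes the "sub-tasks plus described tools" idea concrete. It assumes the plan has already been split into sub-tasks. The `Tool` class, `pick_tool` and `run_plan` are hypothetical names. The word-overlap scoring only stands in for the LLM: in a real agent, the model reads the tool descriptions and makes the choice.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Tool:
    name: str
    description: str  # the text a model would read to decide when to use the tool
    func: Callable[[str], str]


def pick_tool(subtask: str, tools: list[Tool]) -> Tool:
    """Stand-in for the model's choice: pick the tool whose description shares
    the most words with the sub-task. A real agent asks the LLM instead."""
    words = set(subtask.lower().split())
    return max(tools, key=lambda t: len(words & set(t.description.lower().split())))


def run_plan(subtasks: list[str], tools: list[Tool]) -> list[str]:
    """Execute the sub-tasks in order, choosing one tool per step."""
    results = []
    for subtask in subtasks:
        tool = pick_tool(subtask, tools)
        results.append(f"{tool.name}: {tool.func(subtask)}")
    return results


# Hypothetical tools with placeholder outputs.
tools = [
    Tool("search", "look up facts on the web", lambda q: "<search result>"),
    Tool("calculator", "compute arithmetic such as a square root", lambda q: "<number>"),
]
print(run_plan(["look up the facts", "compute the square root"], tools))
```

The descriptions do the routing work here. That is why well-written tool descriptions matter once an LLM, rather than a keyword match, makes the choice.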

Tools

Tools available to the agent include APIs to research portals such as Arxiv, HuggingFace, GALE, Bing Search and SerpApi.

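One notable tool is the Human-In-The-Loop capability. It lets the agent contact a real person when it is uncertain about the next step or about its conclusion. The person can answer the specific question, helping the agent work through the challenge more effectively.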
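Treating a person as "just another tool" can be sketched as follows. The agent calls the human tool exactly as it would call a search API. `make_human_tool` and `scripted_person` are hypothetical names. By default the tool uses `input()`, so it blocks until someone types an answer at the console. In practice the `ask` callable might forward the question to a chat or ticketing system instead; that is an assumption, not something the source specifies.

```python
from typing import Callable


def make_human_tool(ask: Callable[[str], str] = input) -> dict:
    """Wrap a person as an ordinary tool. By default input() asks whoever is at
    the console; `ask` can be swapped for any callable that returns an answer."""
    return {
        "name": "human",
        "description": "Ask a human when the next step or the conclusion is uncertain.",
        "func": lambda question: ask(f"The agent needs help: {question}\n> "),
    }


# Scripted stand-in for a person, so the example runs without typing.
asked = []


def scripted_person(prompt: str) -> str:
    asked.append(prompt)  # record what the agent asked
    return "Use the figures from the most recent annual report."


human = make_human_tool(ask=scripted_person)
print(human["func"]("Which data source should I trust?"))
```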

AI Agents & Autonomy

There is a concern about AI agents and how much autonomy they have. Agents are subject to three constraints, which also introduce a degree of safety:

- The number of loops or iterations the agent is allowed to perform.

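- The tools available to the agent. The number and nature of these tools determine how autonomous the agent is. The agent breaks a larger challenge into smaller, sequential steps or questions, and for each step it can select one or more tools.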

- If the agent's confidence falls below a certain level, it can ask a human for help; the human can also act as a tool.
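The three constraints can be sketched as guardrails around an agent loop:

1. A hard cap on iterations.
2. An allow-list of tools.
3. Escalation to a human when confidence is low.

`run_agent`, the `Step` tuple and the scripted `plan_next` planner are hypothetical. The planner stands in for the LLM, and the 0.7 confidence threshold is an arbitrary assumption.

```python
from typing import Callable, Optional

# One planned step: (tool name, tool input, the agent's confidence in this step).
Step = tuple[str, str, float]


def run_agent(
    plan_next: Callable[[list[str]], Optional[Step]],
    tools: dict[str, Callable[[str], str]],
    ask_human: Callable[[str], str],
    max_iterations: int = 5,
    min_confidence: float = 0.7,
) -> list[str]:
    """Run planned steps under the three constraints listed above."""
    observations: list[str] = []
    for _ in range(max_iterations):  # constraint 1: a hard cap on loops
        step = plan_next(observations)
        if step is None:  # the planner considers the task finished
            break
        tool_name, tool_input, confidence = step
        if confidence < min_confidence:  # constraint 3: hand over to a human
            observations.append(ask_human(tool_input))
        elif tool_name in tools:  # constraint 2: only allow-listed tools run
            observations.append(tools[tool_name](tool_input))
        else:
            observations.append(f"tool '{tool_name}' is not available")
    return observations


# A scripted planner standing in for the LLM.
script = [("search", "q1", 0.9), ("delete_files", "/tmp", 0.95), ("search", "q2", 0.4)]


def plan_next(observations: list[str]) -> Optional[Step]:
    return script[len(observations)] if len(observations) < len(script) else None


print(run_agent(plan_next, {"search": lambda q: f"found {q}"}, ask_human=lambda q: f"human answered {q}"))
```

The confidence check runs before the allow-list check. A low-confidence step therefore always goes to a human, even if it names a tool the agent is not allowed to use.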

Decomposition & Planning

A complete working example has a LangChain agent answer an extremely ambiguous and complex question:

What is the square root of the year of birth of the person who is regarded as the father of the iPhone?

To answer this question, the agent prototype has access to:

- LLM Math;

- SerpApi, which extracts actionable data from search engine results;

- GPT-4 (gpt-4-0314).
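The decomposition the agent has to perform can be sketched as three sequential sub-tasks:

1. Identify the person.
2. Look up their year of birth.
3. Compute the square root.

In this sketch, `search` is a caller-supplied stand-in for SerpApi, and `calculator` is a tiny stand-in for LLM Math that only understands `sqrt(<number>)`. Both are hypothetical. The fake search results are placeholders, not the real answer to the question.

```python
import math
import re
from typing import Callable


def calculator(expression: str) -> float:
    """Tiny stand-in for LLM Math: only understands 'sqrt(<number>)'."""
    match = re.fullmatch(r"sqrt\((\d+(?:\.\d+)?)\)", expression.strip())
    if match is None:
        raise ValueError(f"unsupported expression: {expression}")
    return math.sqrt(float(match.group(1)))


def answer(search: Callable[[str], str]) -> float:
    # Sub-task 1: identify the person the question is about.
    person = search("Who is regarded as the father of the iPhone?")
    # Sub-task 2: use that answer to build the next search query.
    year = int(search(f"In what year was {person} born?"))
    # Sub-task 3: hand the arithmetic to the math tool.
    return calculator(f"sqrt({year})")


# Placeholder search results (not real data).
fake_results = {
    "Who is regarded as the father of the iPhone?": "Person X",
    "In what year was Person X born?": "1600",
}
print(answer(fake_results.__getitem__))  # 40.0
```

Each step depends on the output of the previous one. This dependency is what forces an agent to plan a sequence rather than answer in a single call.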

Multimodal Agents

There are two angles to multimodal agents:

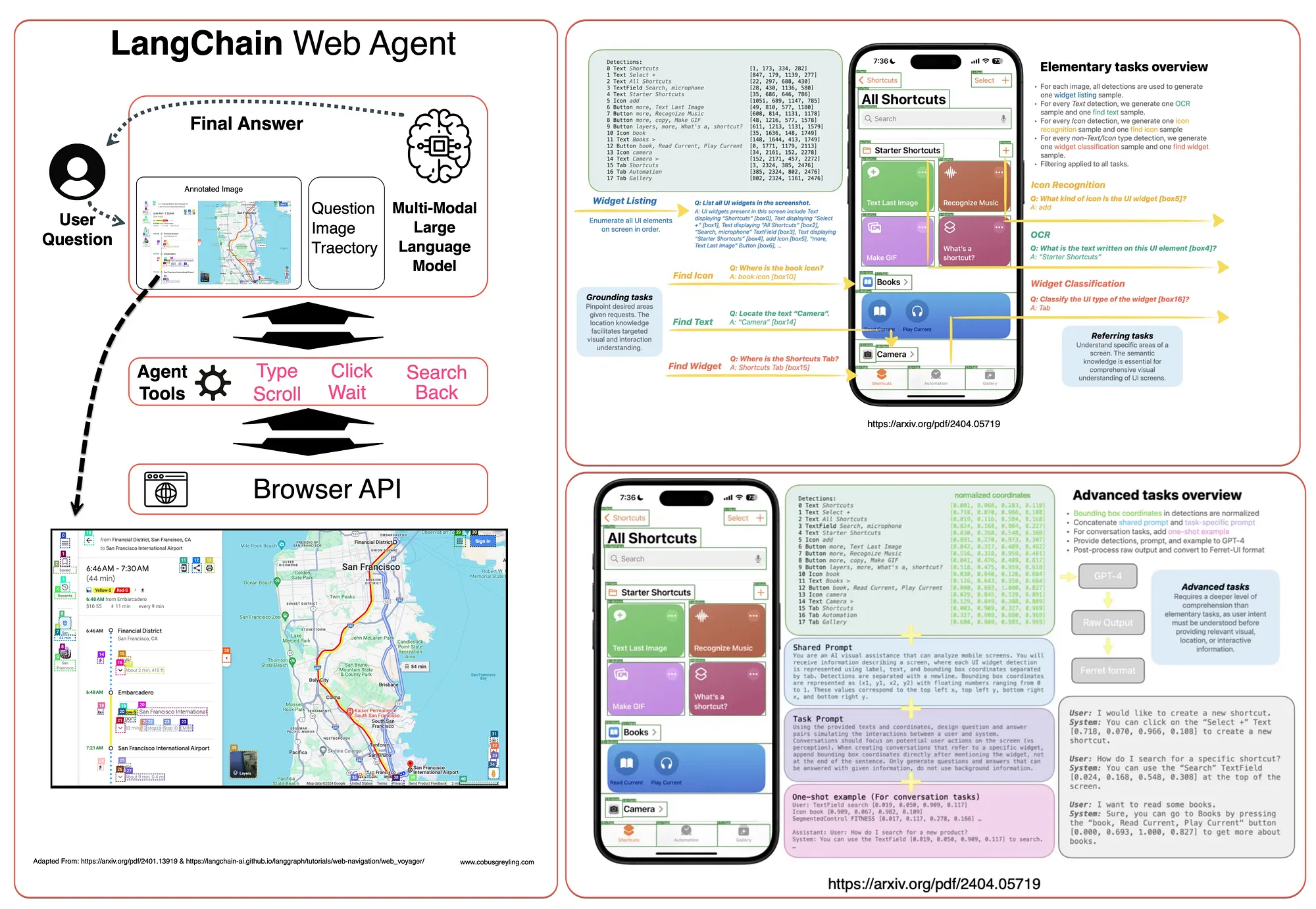

First, language models with vision capabilities enhance AI agents by adding an extra modality.

I often think about the best use cases for multimodal models, and agent applications that require vision are a good example.

Second, there are developments such as Apple's Ferret-UI and the WebVoyager / LangChain implementation, in which GUI elements are mapped and defined by named bounding boxes.
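Here is a rough sketch of the named-bounding-box idea, under these assumptions:

1. Each interactive element on a screenshot gets a numeric label and a pixel box.
2. The model is shown a text legend of those labels.
3. The model answers with a label, which the harness turns back into click coordinates.

The `Element` class, `describe` and `click_target` are hypothetical. This is not the code of WebVoyager, LangChain or Ferret-UI.

```python
from dataclasses import dataclass


@dataclass
class Element:
    label: int  # the number drawn on the screenshot next to the element
    kind: str  # e.g. "button", "textbox", "link"
    text: str
    box: tuple[int, int, int, int]  # (x1, y1, x2, y2) in screenshot pixels

    def center(self) -> tuple[int, int]:
        x1, y1, x2, y2 = self.box
        return ((x1 + x2) // 2, (y1 + y2) // 2)


def describe(elements: list[Element]) -> str:
    """Text legend that could accompany the annotated screenshot in the prompt."""
    return "\n".join(f"[{e.label}] {e.kind}: {e.text!r}" for e in elements)


def click_target(elements: list[Element], label: int) -> tuple[int, int]:
    """Turn the model's chosen label (e.g. 'click [2]') into pixel coordinates."""
    for e in elements:
        if e.label == label:
            return e.center()
    raise KeyError(f"no element labelled {label}")


page = [
    Element(1, "textbox", "Search", (100, 40, 500, 80)),
    Element(2, "button", "Go", (510, 40, 580, 80)),
]
print(describe(page))
print(click_target(page, 2))  # (545, 60)
```

Referring to elements by label means the model never has to output raw coordinates, which it tends to do unreliably.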

As shown below:

LangChain Multimodal Generative Application

Below is complete working code that you can copy, paste into a notebook and run. All you need is an OpenAI API key.

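In the code below, LangChain demonstrates how to pass multimodal inputs directly to the model. Currently, all inputs are expected to follow OpenAI's format. For other model providers that support multimodal input, LangChain implements logic in the corresponding model class that converts the data into the format that provider requires.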
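The following sketch illustrates the kind of conversion described above. It turns an OpenAI-style base64 `image_url` part into the image block shape used by Anthropic's Messages API. This is not LangChain's actual implementation. The function names are hypothetical, and only base64 data URLs are handled. Text parts are passed through unchanged because both formats use `{"type": "text", "text": ...}`.

```python
import re


def openai_image_to_anthropic(part: dict) -> dict:
    """Convert {"type": "image_url", "image_url": {"url": "data:<mime>;base64,<data>"}}
    into {"type": "image", "source": {"type": "base64", ...}}."""
    url = part["image_url"]["url"]
    match = re.fullmatch(r"data:(image/[\w.+-]+);base64,(.+)", url, flags=re.DOTALL)
    if match is None:
        raise ValueError("this sketch only handles base64 data URLs")
    media_type, data = match.groups()
    return {"type": "image", "source": {"type": "base64", "media_type": media_type, "data": data}}


def to_anthropic_content(content: list[dict]) -> list[dict]:
    """Convert a whole OpenAI-style content list, part by part."""
    return [openai_image_to_anthropic(p) if p["type"] == "image_url" else p for p in content]


part = {"type": "image_url", "image_url": {"url": "data:image/png;base64,iVBORw0KGgo="}}
print(to_anthropic_content([{"type": "text", "text": "describe this"}, part]))
```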

```shell
# Package installation
pip install -q langchain_core langchain_openai httpx
```

```python
# Image URL setup
image_url = "https://upload.wikimedia.org/wikipedia/commons/thumb/d/dd/Gfp-wisconsin-madison-the-nature-boardwalk.jpg/2560px-Gfp-wisconsin-madison-the-nature-boardwalk.jpg"

# Environment setup
import os

os.environ["OPENAI_API_KEY"] = "<Your OpenAI API Key goes here>"

# Model initialization
from langchain_core.messages import HumanMessage
from langchain_openai import ChatOpenAI

model = ChatOpenAI(model="gpt-4o-mini")

# The httpx library fetches the image from the URL, and the base64 library
# encodes the image data in base64 format. This conversion is necessary to
# send the image data as part of the input to the model.
import base64

import httpx

image_response = httpx.get(image_url, follow_redirects=True)
image_response.raise_for_status()  # fail early instead of encoding an error page
image_data = base64.b64encode(image_response.content).decode("utf-8")

# Construct the message: a HumanMessage object that contains two types of
# input, a text prompt asking to describe the weather in the image, and the
# image itself as a base64 data URL.
message = HumanMessage(
    content=[
        {"type": "text", "text": "describe the weather in this image"},
        {
            "type": "image_url",
            "image_url": {"url": f"data:image/jpeg;base64,{image_data}"},
        },
    ],
)

# model.invoke sends the message to the model, which processes it and
# returns a response.
response = model.invoke([message])

# Print the result, which should be a description of the weather in the image.
print(response.content)
```

Result:

```text
The weather in the image appears to be clear and pleasant, with a bright blue sky scattered with clouds. The lush green grass and vibrant foliage suggest it is likely a warm season, possibly spring or summer. The overall scene conveys a calm and inviting atmosphere, ideal for outdoor activities.
```
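The code above hard-codes `image/jpeg` in the data URL, which is only right for JPEG images. A small alternative is sketched below. The hypothetical helper `to_data_url` guesses the MIME type from the URL's file extension using the standard-library `mimetypes` module. It falls back to `image/jpeg` when the extension is unknown, and that fallback is an assumption.

```python
import base64
import mimetypes


def to_data_url(image_bytes: bytes, source_url: str) -> str:
    """Build a base64 data URL, guessing the MIME type from the file extension
    instead of always assuming JPEG."""
    mime_type, _ = mimetypes.guess_type(source_url)
    if mime_type is None or not mime_type.startswith("image/"):
        mime_type = "image/jpeg"  # assumed default when the extension is unknown
    encoded = base64.b64encode(image_bytes).decode("utf-8")
    return f"data:{mime_type};base64,{encoded}"


print(to_data_url(b"abc", "https://example.com/photo.png"))  # data:image/png;base64,YWJj
```

In the notebook, this helper would replace the f-string, for example `{"url": to_data_url(image_response.content, image_url)}`.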

Describing the image by passing its URL directly:

```python
# HumanMessage encapsulates the input data meant for the AI model. It holds a
# list of content items, each specifying a different type of input. Here the
# image URL is passed directly instead of as base64 data.
message = HumanMessage(
    content=[
        {"type": "text", "text": "describe the weather in this image"},
        {"type": "image_url", "image_url": {"url": image_url}},
    ],
)

# Send the message to the model and store the response.
response = model.invoke([message])

# Print the response.
print(response.content)
```

Result:

```text
The weather in the image appears to be pleasant and clear. The sky is mostly blue with some fluffy clouds scattered across it, suggesting a sunny day. The vibrant green grass and foliage indicate that it might be spring or summer, with warm temperatures and good visibility. The overall atmosphere looks calm and inviting, perfect for a walk along the wooden path.
```

Comparing images:

```python
# The HumanMessage object encapsulates a text prompt and two images, and the
# model is asked to compare them. Both entries use the same image_url, so the
# two images are identical.
message = HumanMessage(
    content=[
        {"type": "text", "text": "are these two images the same?"},
        {"type": "image_url", "image_url": {"url": image_url}},
        {"type": "image_url", "image_url": {"url": image_url}},
    ],
)

response = model.invoke([message])

print(response.content)
```

Result:

```text
Yes, the two images are the same.
```
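Because the comparison above passes the same URL twice, "Yes" is the expected answer. To compare genuinely different images, the content list simply takes more `image_url` parts. The hypothetical helper below builds that list for any number of images, in the same OpenAI-style format the examples use.

```python
def build_content(prompt: str, image_urls: list[str]) -> list[dict]:
    """Return an OpenAI-style content list: one text part followed by one
    image_url part per image, in the order given."""
    return [{"type": "text", "text": prompt}] + [
        {"type": "image_url", "image_url": {"url": url}} for url in image_urls
    ]


print(build_content("are these two images the same?", ["https://example.com/a.jpg", "https://example.com/b.jpg"]))
```

In the notebook, the result would be passed as `HumanMessage(content=build_content(...))`.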

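The working code below shows how to create a custom tool and bind it to the model, so that the model can call it while processing a multimodal input: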

```python
# Literal restricts a function's input to specific literal values, in this
# case specific weather conditions ("sunny", "cloudy" or "rainy").
from typing import Literal

# The tool decorator defines a custom tool that the AI model can use during
# its execution.
from langchain_core.tools import tool


# @tool registers the function as a tool the model can use. Tools are custom
# functions that the AI model can request in order to perform specific tasks.
# The body currently does nothing (pass simply means "do nothing"), but a full
# implementation might process the weather condition, for example to generate
# a description.
@tool
def weather_tool(weather: Literal["sunny", "cloudy", "rainy"]) -> None:
    """Describe the weather"""
    pass


# bind_tools creates a new model instance (model_with_tools) that knows about
# weather_tool. Whenever this instance processes input, it can decide to
# request a call to weather_tool.
model_with_tools = model.bind_tools([weather_tool])

# Construct a HumanMessage containing a text prompt that asks the model to
# describe the weather in the image, plus the URL of the image to analyse.
message = HumanMessage(
    content=[
        {"type": "text", "text": "describe the weather in this image"},
        {"type": "image_url", "image_url": {"url": image_url}},
    ],
)

# Send the message to the model instance that has weather_tool bound to it.
# The model does not run the tool itself; if it decides the tool is needed,
# it returns a structured request to call it.
response = model_with_tools.invoke([message])

# response.tool_calls lists any tool calls the model requested while
# processing the message. Printing it shows whether and how the model chose
# to use weather_tool.
print(response.tool_calls)
```

Result:

```text
[{'name': 'weather_tool', 'args': {'weather': 'sunny'}, 'id': 'call_l5qYYYSpUQf2UbBE450bNjqo', 'type': 'tool_call'}]
```
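In the code above, `weather_tool` returns `None`, and `invoke` only returns the model's request, so nothing actually runs the tool. The sketch below fills in that missing step. It dispatches each entry of `response.tool_calls` to a local Python function, using the dict shape shown in the result above. `weather_report` is a hypothetical implementation of the weather tool, and `dispatch` is a hypothetical helper name.

```python
from typing import Callable


def weather_report(weather: str) -> str:
    # Hypothetical implementation; the original tool body is just `pass`.
    return {"sunny": "Clear skies.", "cloudy": "Overcast.", "rainy": "Bring an umbrella."}[weather]


def dispatch(tool_calls: list[dict], registry: dict[str, Callable[..., str]]) -> list[dict]:
    """Run each requested tool call and pair its result with the call id."""
    results = []
    for call in tool_calls:
        func = registry[call["name"]]  # KeyError if the model asks for an unknown tool
        results.append({"tool_call_id": call["id"], "content": func(**call["args"])})
    return results


# The tool_calls value printed in the result above.
calls = [{"name": "weather_tool", "args": {"weather": "sunny"}, "id": "call_l5qYYYSpUQf2UbBE450bNjqo", "type": "tool_call"}]
print(dispatch(calls, {"weather_tool": weather_report}))
```

In LangChain, each result would typically be wrapped in a `ToolMessage(content=..., tool_call_id=...)` from `langchain_core.messages`. It would then be sent back to the model together with the earlier messages, so the model can write its final answer.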