Knowledge Graph-Based RAG: Turning the ai-arxiv-chunks Dataset into a Knowledge Graph

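Introduction

Every machine learning enthusiast has used, or at least heard of, arXiv: a free, open repository that hosts a huge number of academic research papers across many fields. arXiv holds a wealth of knowledge and is a go-to resource for researchers, academics, and developers. The challenge, however, is not only accessing this information but also organizing and using it effectively, especially when working with large datasets.

In this article, we focus on turning the ai-arxiv-chunks dataset, carefully compiled by James Briggs, into a structured knowledge graph. This transformation allows the information in the dataset to be retrieved and used more effectively, paving the way for advanced applications such as retrieval-augmented generation (RAG) pipelines.

RAG is an approach that augments a language model with external knowledge sources so that it can produce better-informed, more context-relevant responses. When that external knowledge is structured as a graph, the system can also exploit the rich connections between concepts, authors, and papers, which can yield more precise and insightful results.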

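RAG vs. GraphRAG

Retrieval-augmented generation (RAG) can be defined as the process of improving the output of a large language model by giving it access to external knowledge sources.

How does RAG work?

In a RAG pipeline, you typically start by splitting your data into small chunks (see this article for more on chunking) and storing them in a vector store. Once the vector store is populated, you can retrieve the documents most similar to the user's input query and pass them to your LLM so that it answers based on that context.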
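The steps above can be sketched in a few lines of plain Python. This is only an illustration under simplifying assumptions: `split_into_chunks`, `embed`, `cosine`, and `retrieve` are hypothetical helpers, sentences stand in for chunks, and a bag-of-words count vector stands in for a real embedding model and vector store.

```python
import math
import re
from collections import Counter

def split_into_chunks(text):
    """Split text into sentence-sized chunks (a stand-in for a real chunker)."""
    return re.split(r"(?<=[.!?])\s+", text.strip())

def embed(text):
    """Toy 'embedding': a bag-of-words count vector, not a real model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, chunks, top_k=1):
    """Return the top_k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:top_k]

document = (
    "DistilBERT is a distilled version of BERT. "
    "It is smaller and faster than BERT. "
    "Knowledge graphs store entities and the relationships between them."
)
question = "what do knowledge graphs store"
chunks = split_into_chunks(document)
context = retrieve(question, chunks, top_k=1)

# The retrieved context is placed in the prompt that would be sent to the LLM.
prompt = f"Answer using this context:\n{context[0]}\n\nQuestion: {question}"
print(prompt)
```

In a real pipeline the toy `embed` function would be replaced by an embedding model and the sorted list by a vector index, but the flow (chunk, embed, rank by similarity, build the prompt) stays the same.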

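The difference between RAG and GraphRAG

GraphRAG, on the other hand, can be defined as an advanced form of RAG that uses a knowledge graph to enhance generation. A knowledge graph stores entities and the relationships between them, which gives you more insight and context when retrieving information.

The ai-arxiv-chunks dataset

Let's start by loading the dataset: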

from datasets import load_dataset

data = load_dataset("jamescalam/ai-arxiv-chunked", split="train")

data

Dataset({
    features: ['doi', 'chunk-id', 'chunk', 'id', 'title', 'summary', 'source', 'authors', 'categories', 'comment', 'journal_ref', 'primary_category', 'published', 'updated', 'references'],
    num_rows: 41584
})

Since we don't need all the columns of the original dataset, let's apply a simple map transformation that keeps only the relevant information:

data = data.map(lambda x: {
    "id": f'{x["id"]}-{x["chunk-id"]}',
    "text": x["chunk"],
    "metadata": {
        "title": x["title"],
        "url": x["source"],
        "primary_category": x["primary_category"],
        "published": x["published"],
        "updated": x["updated"],
        "authors": x["authors"]
    }
})

# drop unneeded columns
data = data.remove_columns([
    "title", "summary", "source",
    "authors", "categories", "comment",
    "journal_ref", "primary_category",
    "published", "updated", "references",
    "doi", "chunk-id",
    "chunk"
])

A row of the dataset now looks like this:

{'id': '1910.01108-0',
 'text': 'DistilBERT, a distilled version of BERT: smaller,\nfaster, cheaper and lighter, ... model is cheaper to pre-train and we demonstrate its',
 'metadata': {'authors': ['Victor Sanh',
                          'Lysandre Debut',
                          'Julien Chaumond',
                          'Thomas Wolf'],
              'primary_category': 'cs.CL',
              'published': '20191002',
              'title': 'DistilBERT, a distilled version of BERT: smaller, faster, cheaper and lighter',
              'updated': '20200301',
              'url': 'http://arxiv.org/pdf/1910.01108'}}

The knowledge graph

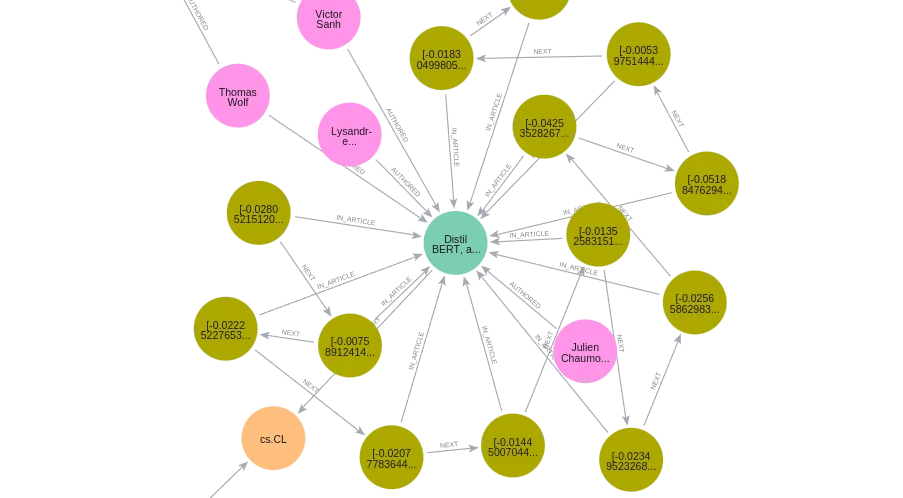

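The next step is to decide which key entities and relationships to represent in the graph. To keep things simple, this tutorial includes the following entities:

- Author: represents the name of an author.
- Article: holds the paper's title and some metadata.
- Chunk: represents a chunk of the article's text.
- Category: represents the paper's primary category.

For the relationships between these entities, we can define the following connections:

- Author -[AUTHORED]-> Article
- Article -[IN_CATEGORY]-> Category
- Chunk -[IN_ARTICLE]-> Article
- Chunk -[NEXT]-> Chunk

We will take a closer look at the NEXT relationship later and see how it improves generation.

Our final graph structure is as follows: four node types (AUTHOR, ARTICLE, CHUNK, CATEGORY) connected by four relationship types (AUTHORED, IN_CATEGORY, IN_ARTICLE, NEXT).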
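To make this structure concrete, here is a minimal sketch that derives the nodes and relationships implied by a single mapped dataset row. The helper `row_to_graph` and the tuple-based representation are illustrative assumptions and have nothing to do with the neo4j API; the NEXT relationship is left out because it needs more than one chunk.

```python
def row_to_graph(row):
    """Turn one mapped row into (nodes, relationships).

    Nodes are (label, key) pairs; relationships are
    (source_node, relationship_type, target_node) triples.
    """
    article_id = row["id"].split("-")[0]  # "1910.01108-0" -> "1910.01108"
    meta = row["metadata"]

    article = ("ARTICLE", article_id)
    chunk = ("CHUNK", row["id"])
    category = ("CATEGORY", meta["primary_category"])

    nodes = {article, chunk, category}
    rels = {
        (chunk, "IN_ARTICLE", article),
        (article, "IN_CATEGORY", category),
    }
    for name in meta["authors"]:
        author = ("AUTHOR", name)
        nodes.add(author)
        rels.add((author, "AUTHORED", article))
    return nodes, rels

row = {
    "id": "1910.01108-0",
    "text": "DistilBERT, a distilled version of BERT ...",
    "metadata": {
        "authors": ["Victor Sanh", "Lysandre Debut", "Julien Chaumond", "Thomas Wolf"],
        "primary_category": "cs.CL",
        "title": "DistilBERT, a distilled version of BERT: smaller, faster, cheaper and lighter",
    },
}
nodes, rels = row_to_graph(row)
print(len(nodes), len(rels))  # 7 6
```

The single row yields seven nodes (one article, one chunk, one category, four authors) and six relationships (one IN_ARTICLE, one IN_CATEGORY, four AUTHORED).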

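Creating and populating the graph

In this tutorial we use neo4j as the graph database management system, so you only need to create a neo4j account and an instance to connect to.

Connecting to the graph

Fortunately, langchain already integrates with neo4j, so we can connect to our graph with just a few lines of code:

from langchain_community.graphs import Neo4jGraph

graph = Neo4jGraph(
    url=NEO4J_URI,
    username=NEO4J_USERNAME,
    password=NEO4J_PASSWORD,
)

You get the URL, username, and password once you create the free instance offered by neo4j.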

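The Cypher query language

Cypher is neo4j's graph query language; it lets you read data from the graph and write data to it. It can be thought of as SQL for graphs, so it should be easy to pick up if you know SQL. If you don't, there is no need to worry: the queries we use are simple and are explained along the way.

Creating nodes

To create new nodes in the graph we can use either the CREATE or the MERGE clause. The difference is that MERGE matches an existing node or creates a new one if it does not exist yet, whereas CREATE creates a node even if an identical one already exists. In our case we use MERGE, because it ensures we avoid duplicates.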
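The difference between the two clauses can be illustrated with a tiny in-memory stand-in. `TinyGraph` below is a hypothetical class written only for this comparison; it mimics the semantics described above and is not how neo4j is implemented or called.

```python
class TinyGraph:
    """In-memory stand-in for a graph database (NOT the neo4j API)."""

    def __init__(self):
        self.nodes = []  # list of (label, properties) pairs

    def create(self, label, **props):
        """Like CREATE: always adds a new node."""
        self.nodes.append((label, props))

    def merge(self, label, **props):
        """Like MERGE: adds the node only if no identical one exists."""
        if (label, props) not in self.nodes:
            self.nodes.append((label, props))

g_create, g_merge = TinyGraph(), TinyGraph()
for name in ["Thomas Wolf", "Victor Sanh", "Thomas Wolf"]:
    g_create.create("AUTHOR", name=name)
    g_merge.merge("AUTHOR", name=name)

print(len(g_create.nodes))  # 3: the repeated author is stored twice
print(len(g_merge.nodes))   # 2: the repeated author is matched, not re-created
```

Because the same author appears on many papers and the same article appears once per chunk in the dataset, the MERGE behaviour is the one we want here.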

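For example, to create AUTHOR and CATEGORY nodes, we can use the following queries:

query = """
MERGE (n:AUTHOR {name: $name})
"""
graph.query(query, params={'name' : author_name})

query = """
MERGE (c:CATEGORY {category: $category})
"""
graph.query(query, params={'category' : category})

Similar queries are used to create the ARTICLE and CHUNK nodes; the only difference is that we pass more parameters.

We can use the following function to create and populate the graph nodes: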

from tqdm import tqdm

# create and populate the graph nodes
def create_nodes(data, start=0, end=None):
    """
    Creates and populates nodes in a graph database for authors, categories, articles, and chunks.

    Parameters:
    - data: Dataset
        The mapped dataset containing data about authors, categories, articles, and text chunks.
    - start: int, optional
        The starting index in the data from which we start populating nodes.
    - end: int, optional
        The ending index in the data (exclusive). Defaults to len(data).
    """
    if end is None:
        end = len(data)

    eyedee = data['id'][0].split('-')[0]
    print("creating authors nodes")
    for i in tqdm(range(start, end)):
        # ensure that we don't go over the same author more than once
        # (the first row of the range is always processed)
        if eyedee == data['id'][i].split('-')[0] and i != start:
            pass
        else:
            eyedee = data['id'][i].split('-')[0]
            query = """
            UNWIND $names AS name
            MERGE (n:AUTHOR {name: name})
            """
            graph.query(query, params={"names": data['metadata'][i]['authors']})
    print("="*50)

    eyedee = data['id'][0].split('-')[0]
    print("creating categories nodes")
    for i in tqdm(range(start, end)):
        # ensure that we don't go over the same category more than once
        if eyedee == data['id'][i].split('-')[0] and i != start:
            pass
        else:
            eyedee = data['id'][i].split('-')[0]
            query = """
            UNWIND $categories AS category
            MERGE (c:CATEGORY {category: category})
            """
            graph.query(query, params={"categories": data['metadata'][i]['primary_category']})
    print("="*50)

    eyedee = data['id'][0].split('-')[0]
    print("creating articles nodes")
    for i in tqdm(range(start, end)):
        # ensure that we don't go over the same article more than once
        if eyedee == data['id'][i].split('-')[0] and i != start:
            pass
        else:
            eyedee = data['id'][i].split('-')[0]
            query = """
            UNWIND $titles AS title
            UNWIND $ids AS id
            UNWIND $sources AS source
            UNWIND $published AS published
            UNWIND $updated AS updated
            MERGE (t:ARTICLE {title: title, id: id, source: source, published: published, updated: updated})
            """
            graph.query(query, params={
                "titles": data['metadata'][i]['title'],
                "ids": data['id'][i].split('-')[0],
                "sources": data['metadata'][i]['url'],
                "published": data['metadata'][i]['published'],
                "updated": data['metadata'][i]['updated'],
            })
    print("="*50)

    print("creating chunks nodes")
    for i in tqdm(range(start, end)):
        # every row is a distinct chunk, so no deduplication is needed here
        query = """
        UNWIND $texts AS text
        UNWIND $ids AS id
        MERGE (c:CHUNK {text: text, id: id})
        """
        graph.query(query, params={"texts": data['text'][i], "ids": data['id'][i]})
    print("="*50)
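The function above sends one query per row. A common way to reduce the number of round trips is to send a list of rows and let a single UNWIND iterate over them. The sketch below shows only the batching logic; `batched`, `load_chunks`, and `BATCH_QUERY` are hypothetical names, the query merges on `id` alone and sets `text` separately (which differs slightly from the MERGE used above), and `run_query` stands for any callable that accepts a query string and a parameter dict.

```python
from itertools import islice

def batched(rows, batch_size):
    """Yield consecutive lists of at most batch_size rows."""
    it = iter(rows)
    while True:
        batch = list(islice(it, batch_size))
        if not batch:
            return
        yield batch

# Assumed query shape: one UNWIND over a list of {id, text} maps.
BATCH_QUERY = """
UNWIND $rows AS row
MERGE (c:CHUNK {id: row.id})
SET c.text = row.text
"""

def load_chunks(rows, run_query, batch_size=1000):
    """Send chunk rows in batches and return the number of queries issued."""
    sent = 0
    for batch in batched(rows, batch_size):
        run_query(BATCH_QUERY, {"rows": batch})
        sent += 1
    return sent

# Usage with a stub that records batch sizes instead of talking to a database.
calls = []
rows = [{"id": f"1910.01108-{i}", "text": f"chunk {i}"} for i in range(5)]
n = load_chunks(rows, lambda query, params: calls.append(len(params["rows"])), batch_size=2)
print(n, calls)  # 3 [2, 2, 1]
```

With a live connection, the stub could be replaced by something like `lambda q, p: graph.query(q, params=p)`.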

Creating relationships between nodes

We start with the AUTHORED relationship, which can be created with the following query:

query = """

UNWIND $authors as author

MATCH (a:AUTHOR{name: author}), (t:ARTICLE{title: $title})

MERGE (a)-[r:AUTHORED]->(t)

"""

graph.query(query, params={"authors": authors, "title": article})

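Let's break this query down:

- UNWIND $authors as author: takes the list of author names supplied in the $authors parameter and processes the names one at a time, binding each one to the variable author.
- MATCH (a:AUTHOR{name: author}), (t:ARTICLE{title: $title}): the MATCH clause finds nodes that already exist in the graph. Here, (a:AUTHOR{name: author}) finds the AUTHOR node whose name property equals the current author value from the UNWIND, and (t:ARTICLE{title: $title}) finds the ARTICLE node with the given title.
- MERGE (a)-[r:AUTHORED]->(t): the MERGE clause creates the relationship between the AUTHOR node and the ARTICLE node if it does not already exist.

Similar queries are used to create the IN_ARTICLE and IN_CATEGORY relationships.

For the NEXT relationship, we use a query that goes through all CHUNK nodes, groups them by the article they belong to, orders them by their position within the article, and then creates a NEXT relationship between each chunk and the one that follows it. This links the chunks of each article in their order of appearance, so they can be traversed sequentially.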

query = """

MATCH (c:CHUNK)

WITH c, SPLIT(c.id, "-")[0] AS articleId, TOINTEGER(SPLIT(c.id, "-")[1]) AS chunkOrder

ORDER BY articleId, chunkOrder

WITH articleId, collect(c) AS chunks

UNWIND RANGE(0, size(chunks)-2) AS i

WITH chunks[i] AS currentChunk, chunks[i+1] AS nextChunk

MERGE (currentChunk)-[:NEXT]->(nextChunk)

"""

graph.query(query)
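For readers less familiar with Cypher, the same grouping and pairing logic can be written in plain Python. This is an illustrative equivalent, assuming chunk ids of the form `<articleId>-<chunkNumber>` as produced by the mapping step; `next_pairs` is a hypothetical helper and does not touch the database.

```python
from collections import defaultdict

def next_pairs(chunk_ids):
    """Return (current, next) id pairs for consecutive chunks of each article."""
    by_article = defaultdict(list)
    for cid in chunk_ids:
        article_id, order = cid.split("-")
        # Convert the position to int, as TOINTEGER does in the Cypher query.
        by_article[article_id].append((int(order), cid))

    pairs = []
    for article_id in sorted(by_article):
        ordered = [cid for _, cid in sorted(by_article[article_id])]
        # zip pairs each chunk with its successor; a single chunk yields nothing.
        pairs.extend(zip(ordered, ordered[1:]))
    return pairs

ids = ["1910.01108-2", "1910.01108-0", "2005.14165-0", "1910.01108-1", "1910.01108-10"]
pairs = next_pairs(ids)
for current, following in pairs:
    print(current, "-[NEXT]->", following)
```

The integer conversion matters: sorting the positions as strings would place chunk 10 before chunk 2 and link the chunks in the wrong order.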

We can use this function to create all the relationships between the nodes in the graph:

def create_relations(data, start=0, end=None):
    """
    Creates and populates relationships between nodes in a graph database, including:
    - AUTHORED: Between authors and articles.
    - IN_CATEGORY: Between articles and categories.
    - IN_ARTICLE: Between text chunks and their respective articles.
    - NEXT: Between sequential text chunks within the same article.

    Parameters:
    - data: Dataset
        The mapped dataset containing data about authors, categories, articles, and text chunks.
    - start: int, optional
        The starting index in the data from which we start creating the relations.
    - end: int, optional
        The ending index in the data (exclusive). Defaults to len(data).
    """
    if end is None:
        end = len(data)

    eyedee = data['id'][0].split('-')[0]
    print("creating AUTHORED relation")
    for i in tqdm(range(start, end)):
        # process each article only once
        if eyedee == data['id'][i].split('-')[0] and i != start:
            pass
        else:
            eyedee = data['id'][i].split('-')[0]
            article = data['metadata'][i]['title']
            authors = data['metadata'][i]['authors']
            query = """
            UNWIND $authors as author
            MATCH (a:AUTHOR{name: author}), (t:ARTICLE{title: $title})
            MERGE (a)-[r:AUTHORED]->(t)
            """
            graph.query(query, params={"authors": authors, "title": article})
    print("="*50)

    eyedee = data['id'][0].split('-')[0]
    print("creating IN_CATEGORY relation")
    for i in tqdm(range(start, end)):
        # process each article only once
        if eyedee == data['id'][i].split('-')[0] and i != start:
            pass
        else:
            eyedee = data['id'][i].split('-')[0]
            article = data['metadata'][i]['title']
            category = data['metadata'][i]['primary_category']
            query = """
            MATCH (c:CATEGORY{category: $category}), (t:ARTICLE{title: $title})
            MERGE (t)-[r:IN_CATEGORY]->(c)
            """
            graph.query(query, params={"category": category, "title": article})
    print("="*50)

    print("creating IN_ARTICLE relation")
    # link every chunk to its article using the article part of the chunk id
    query = """
    MATCH (c:CHUNK)
    WITH c, SPLIT(c.id, "-")[0] AS articleId
    MATCH (a:ARTICLE {id: articleId})
    MERGE (c)-[:IN_ARTICLE]->(a)
    """
    graph.query(query)
    print("="*50)

    print("creating NEXT relation")
    # link consecutive chunks of the same article
    query = """
    MATCH (c:CHUNK)
    WITH c, SPLIT(c.id, "-")[0] AS articleId, TOINTEGER(SPLIT(c.id, "-")[1]) AS chunkOrder
    ORDER BY articleId, chunkOrder
    WITH articleId, collect(c) AS chunks
    UNWIND RANGE(0, size(chunks)-2) AS i
    WITH chunks[i] AS currentChunk, chunks[i+1] AS nextChunk
    MERGE (currentChunk)-[:NEXT]->(nextChunk)
    """
    graph.query(query)

The graph is finally complete! Here is an overview of it in neo4j:

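Creating the RAG pipeline

Before we can retrieve from the graph database, one more step is needed: we have to create a vector index and an embedding for each chunk so that we can run similarity searches.

Creating the vector index

We use the following query to create the chunks_vector index: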

#query to create the vector index

graph.query("""

CREATE VECTOR INDEX `chunks_vector` IF NOT EXISTS

FOR (c:CHUNK) ON (c.textEmbedding)

OPTIONS { indexConfig: {

`vector.dimensions`: 1536,

`vector.similarity_function`: 'cosine'

}}

""")

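- CREATE VECTOR INDEX `chunks_vector` IF NOT EXISTS: creates a vector index named chunks_vector, and only does so if the index does not exist yet, which prevents errors and duplicate indexes.
- FOR (c:CHUNK): specifies that the index is created for nodes labelled CHUNK, which are referred to as c.
- ON (c.textEmbedding): states that the index applies to the textEmbedding property of CHUNK nodes. textEmbedding holds the vector representation of the chunk's text.
- `vector.dimensions`: 1536: specifies that the vectors stored in textEmbedding have 1536 dimensions. This is a common size for embeddings produced by some models, such as the OpenAI embeddings used in this example.
- `vector.similarity_function`: 'cosine': specifies that the index uses cosine similarity as its similarity function.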
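As a reminder of what cosine similarity measures, here is a minimal sketch in plain Python. The function `cosine_similarity` is written for illustration only and assumes non-zero vectors of equal length; it is not the routine neo4j runs internally.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two non-zero vectors of equal length."""
    if len(u) != len(v):
        # An index with a fixed `vector.dimensions` expects vectors of that size.
        raise ValueError("vectors must have the same number of dimensions")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

print(cosine_similarity([1.0, 0.0], [2.0, 0.0]))   # 1.0  (same direction)
print(cosine_similarity([1.0, 0.0], [0.0, 3.0]))   # 0.0  (orthogonal)
print(cosine_similarity([1.0, 0.0], [-1.0, 0.0]))  # -1.0 (opposite direction)
```

Only the direction of the vectors matters, not their length, which is why the first pair scores 1.0 even though the second vector is twice as long.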

Embedding the chunk text

We use OpenAI to embed the text of each chunk:

graph.query("""
    MATCH (chunk:CHUNK)
    WHERE chunk.textEmbedding IS NULL
    WITH chunk, genai.vector.encode(
        chunk.text,
        "OpenAI",
        { token: $openAiApiKey }
    ) AS vector
    CALL db.create.setNodeVectorProperty(chunk, "textEmbedding", vector)
    """,
    params={"openAiApiKey": OPENAI_API_KEY})

Let's go over what happens here:

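- We first match all chunks in the graph whose textEmbedding property is NULL.
- We then pass the text to encode, the provider name ("OpenAI"), and the OpenAI API key to the genai.vector.encode function, which generates the text embedding.
- Finally, we assign the resulting vector to the textEmbedding property of the chunk node.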
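Because only chunks without an embedding are matched, the step can be re-run safely, for example after an interruption. The sketch below mirrors that pattern on plain dictionaries; `backfill_embeddings` and `fake_embed` are hypothetical stand-ins, and no real embedding model or database is involved.

```python
def backfill_embeddings(chunks, embed):
    """Embed only chunks that have no embedding yet; return how many were embedded."""
    updated = 0
    for chunk in chunks:
        if chunk.get("textEmbedding") is None:
            chunk["textEmbedding"] = embed(chunk["text"])
            updated += 1
    return updated

def fake_embed(text):
    """Hypothetical stand-in for a real embedding model."""
    return [float(len(text)), float(text.count(" "))]

chunks = [
    {"id": "a-0", "text": "hello world"},
    {"id": "a-1", "text": "already done", "textEmbedding": [0.1, 0.2]},
]
first_run = backfill_embeddings(chunks, fake_embed)
second_run = backfill_embeddings(chunks, fake_embed)
print(first_run, second_run)  # 1 0
```

The second run does nothing, since every chunk already has an embedding; existing embeddings are never overwritten.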

Now we can inspect the final graph schema:

graph.refresh_schema()

print(graph.schema)

It should look like this:

Node properties:

AUTHOR {name: STRING}

CATEGORY {category: STRING}

ARTICLE {source: STRING, published: STRING, id: STRING, title: STRING, updated: STRING}

CHUNK {source: STRING, id: STRING, text: STRING, textEmbedding: LIST, last: BOOLEAN, first: BOOLEAN}

Relationship properties:

The relationships:

(:AUTHOR)-[:AUTHORED]->(:ARTICLE)

(:ARTICLE)-[:IN_CATEGORY]->(:CATEGORY)

(:CHUNK)-[:IN_ARTICLE]->(:ARTICLE)

(:CHUNK)-[:NEXT]->(:CHUNK)

We can finally start chatting with the graph and retrieving from it!

Retrieving relevant documents from the knowledge graph

We can use the following Cypher query for retrieval:

question = "can you explain why we would want to do rlhf?"

# retrieving documents
graph.query("""
    WITH genai.vector.encode(
        $question,
        "OpenAI",
        {
          token: $openAiApiKey
        }) AS question_embedding
    CALL db.index.vector.queryNodes(
        'chunks_vector',
        $top_k,
        question_embedding
    ) YIELD node AS chunk, score
    RETURN chunk.source, chunk.text, score
    """,
    params={"openAiApiKey": OPENAI_API_KEY,
            "question": question,
            "top_k": 5
    })

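- First, we obtain the embedding of the question with the same function as before.
- Next, we pass this embedding, together with the name of the vector index and the number of documents we want to retrieve, to the db.index.vector.queryNodes procedure.
- The procedure returns a list of CHUNK nodes along with their similarity scores against the input query.

Setting up the QA chain

Remember the NEXT relationship we discussed earlier? It can provide richer context and thereby improve the LLM's generation considerably. Instead of feeding the retrieved documents directly to the LLM, we can use the NEXT relationship to collect neighbouring chunks, essentially creating a sliding window of context. This gives a more coherent flow of information and a better basis for the LLM to generate accurate, relevant responses.

We first define the query that retrieves the window of chunks: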

retrieval_query_window = """

MATCH window=

(:CHUNK)-[:NEXT*0..1]->(node)-[:NEXT*0..1]->(:CHUNK)

WITH node, score, window as longestWindow

ORDER BY length(window) DESC LIMIT 1

WITH nodes(longestWindow) as chunkList, node, score

UNWIND chunkList as chunkRows

WITH collect(chunkRows.text) as textList, node, score

RETURN apoc.text.join(textList, " \n ") as text,

score,

node {.source} AS metadata

"""

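This query finds the longest sequence of CHUNK nodes around a given node (at most one chunk before and one after, because of the *0..1 bounds), collects the text of those chunks, and returns the combined text together with the node's score and metadata.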
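The effect of the window can be pictured with a plain list standing in for the NEXT chain of one article. The helper `window_text` is a hypothetical illustration of the idea, assuming the chunks are already in article order; it is not what the Cypher engine executes.

```python
def window_text(ordered_chunks, hit_index, separator=" \n "):
    """Join the matched chunk with its previous and next neighbours, if any."""
    start = max(hit_index - 1, 0)
    end = min(hit_index + 1, len(ordered_chunks) - 1)
    return separator.join(ordered_chunks[start:end + 1])

article_chunks = ["chunk A", "chunk B", "chunk C", "chunk D"]

middle = window_text(article_chunks, 2)  # neighbours on both sides
first = window_text(article_chunks, 0)   # no previous chunk exists
print(repr(middle))
print(repr(first))
```

A hit in the middle of an article returns three chunks, while a hit on the first or last chunk returns two, which matches the 0..1 bounds on each side of the matched node.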

We can now extend the vector store definition with this query by passing it as a Cypher retrieval query. The Cypher query takes the results of the vector similarity search and then modifies them in some way; here, we define a window retrieval query that fetches the neighbouring chunks:

from langchain_community.vectorstores import Neo4jVector
from langchain.chains import RetrievalQAWithSourcesChain
from langchain_openai import ChatOpenAI, OpenAIEmbeddings
import textwrap

VECTOR_INDEX_NAME = 'chunks_vector'
VECTOR_NODE_LABEL = 'CHUNK'
VECTOR_SOURCE_PROPERTY = 'text'
VECTOR_EMBEDDING_PROPERTY = 'textEmbedding'

llm = ChatOpenAI(model="gpt-4o", temperature=0)

# Set up a QA chain that will use the window retrieval query
vector_store_window = Neo4jVector.from_existing_graph(
    embedding=OpenAIEmbeddings(),
    url=NEO4J_URI,
    username=NEO4J_USERNAME,
    password=NEO4J_PASSWORD,
    index_name=VECTOR_INDEX_NAME,
    node_label=VECTOR_NODE_LABEL,
    text_node_properties=[VECTOR_SOURCE_PROPERTY],
    embedding_node_property=VECTOR_EMBEDDING_PROPERTY,
    retrieval_query=retrieval_query_window,
)

# Create a retriever from the vector store
retriever_window = vector_store_window.as_retriever()

# Create a chatbot Question & Answer chain from the retriever
chain_window = RetrievalQAWithSourcesChain.from_chain_type(
    llm,
    chain_type="stuff",
    retriever=retriever_window
)

Finally, let's ask a question:

question = "can you explain why we would want to do rlhf?"

answer = chain_window.invoke(question)

print(textwrap.fill(answer["answer"]))

RLHF (Reinforcement Learning from Human Feedback) is pursued to fine-

tune Large Language Models (LLMs) and significantly enhance their

performance. This method allows models to be iteratively aligned with

human expectations and preferences by incorporating feedback from

human users. It has been shown to address issues related to

factuality, toxicity, and helpfulness that cannot be resolved merely

by scaling up LLMs. The approach has been successfully applied across

various tasks, demonstrating its effectiveness in improving model

outputs and ensuring they are more useful and engaging.

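Chatting with the graph

There is another way to make use of this knowledge graph: we can instruct the LLM to translate the user's input query into a Cypher query and then retrieve data from the graph with that query.

Here is how we implement it in code.

First, we define the system prompt: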

from langchain.prompts.prompt import PromptTemplate

CYPHER_GENERATION_TEMPLATE = """Task: Generate Cypher statement to query a graph database.

Instructions:

Use only the provided relationship types and properties in the schema.

Do not use any other relationship types or properties that are not provided.

Schema:

{schema}

Note: Do not include any explanations or apologies in your responses.

Do not respond to any questions that might ask anything else than for you to construct a Cypher statement.

Do not include any text except the generated Cypher statement.

Examples: Here are a few examples of generated Cypher statements for particular questions:

# Who authored the article titled "Graph Databases 101"?

MATCH (author:AUTHOR)-[:AUTHORED]->(article:ARTICLE)

WHERE article.title = 'Graph Databases 101'

RETURN author.name

# What are the categories for the article with ID '12345'?

MATCH (article:ARTICLE)-[:IN_CATEGORY]->(category:CATEGORY)

WHERE article.id = '12345'

RETURN category.category

# What are the next chunks following the chunk with ID 'chunk-001'?

MATCH (chunk:CHUNK)-[:NEXT]->(nextChunk:CHUNK)

WHERE chunk.id = 'chunk-001'

RETURN nextChunk.text

The question is:

{question}"""

CYPHER_GENERATION_PROMPT = PromptTemplate(

input_variables=["schema", "question"],

template=CYPHER_GENERATION_TEMPLATE

)
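To see what the LLM actually receives, the placeholders can be filled in by hand. The sketch below uses a shortened copy of the template and Python's built-in `str.format`, on the assumption that the template uses plain `{name}` placeholders as above; the `schema` and `question` values are made up for illustration.

```python
# Shortened, illustrative copy of the template with the same two placeholders.
TEMPLATE = """Task: Generate Cypher statement to query a graph database.
Schema:
{schema}
The question is:
{question}"""

schema = "(:AUTHOR)-[:AUTHORED]->(:ARTICLE)"
question = "Who authored the article titled X?"

prompt = TEMPLATE.format(schema=schema, question=question)
print(prompt)

# Literal braces, such as a Cypher property map, must be doubled in this style
# of template; otherwise they are read as placeholders.
escaped = "MATCH (a:AUTHOR {{name: 'x'}}) RETURN a".format()
print(escaped)  # MATCH (a:AUTHOR {name: 'x'}) RETURN a
```

The few-shot examples in the template above filter with WHERE clauses rather than property maps such as `{name: ...}`, so they contain no literal braces that would need escaping.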

Now we just need to import langchain's GraphCypherQAChain:

from langchain.chains import GraphCypherQAChain

cypherChain = GraphCypherQAChain.from_llm(
    llm,
    graph=graph,
    verbose=True,
    cypher_prompt=CYPHER_GENERATION_PROMPT,
)

def prettyCypherChain(question: str) -> None:
    # run the chain and print the answer wrapped at 60 characters
    response = cypherChain.run(question)
    print(textwrap.fill(response, 60))

For example, we can ask about the authors of a particular article:

prettyCypherChain("Who authored the article titled Text and Patterns: For Effective Chain of Thought, It Takes Two to Tango?")

> Entering new GraphCypherQAChain chain...

Generated Cypher:

cypher

MATCH (author:AUTHOR)-[:AUTHORED]->(article:ARTICLE)

WHERE article.title = 'Text and Patterns: For Effective Chain of Thought, It Takes Two to Tango'

RETURN author.name

Full Context:

[{'author.name': 'Aman Madaan'}, {'author.name': 'Amir Yazdanbakhsh'}]

> Finished chain.

Aman Madaan, Amir Yazdanbakhsh authored the article titled

Text and Patterns: For Effective Chain of Thought, It Takes

Two to Tango.

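As you can see, our chain translated the user's query into a Cypher query, ran it against the graph, and returned a response based on the result.

Here is another example:

prettyCypherChain("give me three articles names that belong to the stat.ML category ")

> Entering new GraphCypherQAChain chain...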

Generated Cypher:

MATCH (article:ARTICLE)-[:IN_CATEGORY]->(category:CATEGORY)

WHERE category.category = 'stat.ML'

RETURN article.title

LIMIT 3

Full Context:

[{'article.title': 'Mitigating Statistical Bias within Differentially Private Synthetic Data'}, {'article.title': 'Partitioned Variational Inference: A unified framework encompassing federated and continual learning'}, {'article.title': 'UMAP: Uniform Manifold Approximation and Projection for Dimension Reduction'}]

> Finished chain.

Mitigating Statistical Bias within Differentially Private

Synthetic Data, Partitioned Variational Inference: A unified

framework encompassing federated and continual learning,

UMAP: Uniform Manifold Approximation and Projection for

Dimension Reduction

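Conclusion

In summary, converting the ai-arxiv-chunks dataset into a structured knowledge graph provides a powerful way to use and explore the large amount of information contained in academic research papers. By building on GraphRAG, this approach not only strengthens the traditional RAG workflow but also offers deeper insight through the rich connections captured in the graph.

With entities such as authors, articles, and categories mapped out, and relationships such as AUTHORED and NEXT defined, the knowledge graph becomes a flexible tool for advanced retrieval and generation tasks.

Combining a knowledge graph with techniques such as vector embeddings and similarity search makes it easier to access, analyze, and generate knowledge from large-scale datasets.

This shift highlights the growing importance of knowledge graphs in AI-driven research and development and lays the groundwork for further applications.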