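Microsoft GraphRAG: Applying and Understanding RDF Knowledge Graphs (Part 1)

Like many people, I read Microsoft's paper on GraphRAG and wanted to try it out. However, it makes a large number of calls to an OpenAI-compatible model, and the cost adds up quickly. I had heard that these models can be run locally at no cost, so I decided to try that myself.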

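Local server requirements

When choosing a server to run locally, I came up with some requirements that fit the way Microsoft GraphRAG works:

- Able to use models in GGUF format

- Endpoints for both chat and embeddings

- Open source

- Able to run on a local machine

- No data passed to external websites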

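GGUF format

Why did I require models in GGUF format? Large language models live up to their name: they are very large! When you save a trained model together with its weights, the resulting file runs to many gigabytes. To reduce the file size, the model can be converted to GGUF format, usually with quantized weights. This not only reduces the file size but also speeds up model loading.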
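To make the format a little more concrete, here is a minimal sketch that reads the fixed-size header at the start of a GGUF file. It assumes the layout used by GGUF version 2 and later (a 4-byte magic, a 32-bit version, then two 64-bit counts, all little-endian); the function name `read_gguf_header` is my own and not part of any library.

```python
import struct

GGUF_MAGIC = b"GGUF"  # the first four bytes of every GGUF file
HEADER_SIZE = 24      # 4 (magic) + 4 (version) + 8 (tensor count) + 8 (metadata count)


def read_gguf_header(data: bytes) -> dict:
    """Parse the fixed-size GGUF header from the first 24 bytes of a file.

    Assumes the GGUF v2+ layout with little-endian fields.
    """
    if len(data) < HEADER_SIZE:
        raise ValueError(f"need at least {HEADER_SIZE} bytes, got {len(data)}")
    if data[:4] != GGUF_MAGIC:
        raise ValueError("not a GGUF file: magic bytes do not match")
    # "<IQQ" = little-endian uint32 version, uint64 tensor count,
    # uint64 metadata key/value count, read starting at offset 4.
    version, tensor_count, kv_count = struct.unpack_from("<IQQ", data, 4)
    return {
        "version": version,
        "tensor_count": tensor_count,
        "metadata_kv_count": kv_count,
    }
```

To try it on a downloaded model, open the file in binary mode, read the first 24 bytes, and pass them to `read_gguf_header`. The metadata and tensor data that follow the header are not parsed here.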

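llama.cpp

Most servers that can run locally appear to be built on an open-source project called llama.cpp. If you visit the project's site, you will find a list of user interfaces that use it. This list is very useful because you know that all of them can use models in GGUF format.

I started with Ollama because it is quite popular at the moment. With the latest version, though, you have to import the GGUF file into its system, where it is converted into Ollama's own proprietary format. The same happens if you download a model through it. I did not like being unable to see the GGUF file, so I picked another user interface from the list: LM Studio.

LM Studio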

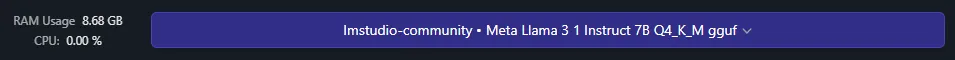

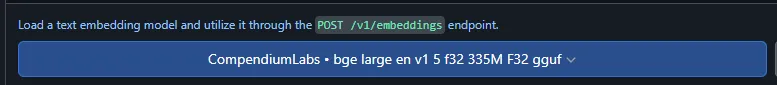

I downloaded the latest version of LM Studio from the LM Studio website, then downloaded one model for chat (I used Meta-Llama-3.1-8B-Instruct-Q4_K_M.gguf) and one for embeddings (I used bge-large-en-v1.5-f32.gguf).

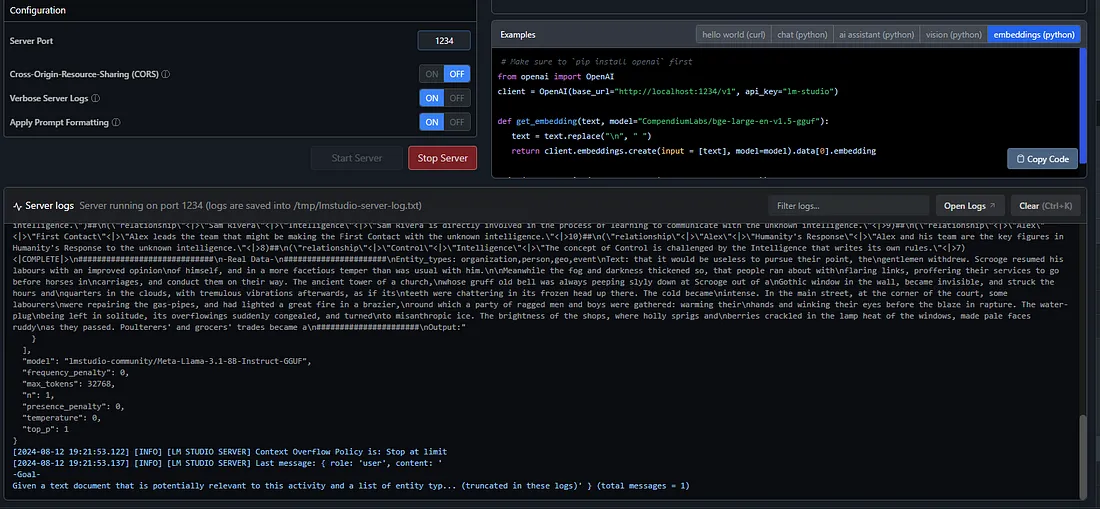

Configuration

A few things need to be changed for the models to work well with Microsoft GraphRAG. After downloading the models we want to use, select them to load:

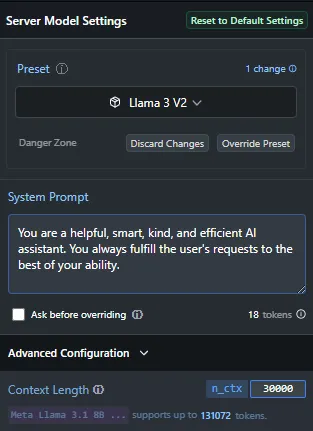

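On the right you will see the server settings. When a model is loaded, LM Studio fills in these values and also picks some defaults. One default we need to change is the context length. As models improve and grow larger, they also allow longer contexts. When you load a model, LM Studio knows the maximum context length the model supports, but the default is only 400, which is rather small. I set the context length to 30,000 to handle the large blocks of text we may need to process (GraphRAG's default is 12,000).

The next step is to load the embedding model.

Now we can set up Microsoft GraphRAG and run it against the local server.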
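Before pointing GraphRAG at the server, it can help to check both endpoints by hand. The sketch below builds OpenAI-style requests for the chat and embeddings endpoints using only the standard library. It assumes the server listens on http://localhost:1234/v1 (the address used in the settings file later in this article) and that it follows the usual OpenAI request shape; the helper names are mine, and the model names you pass in must match what your LM Studio instance reports.

```python
import json
import urllib.request

# Assumption: the local server address; adjust it to what LM Studio shows.
API_BASE = "http://localhost:1234/v1"
API_KEY = "lm-studio"  # placeholder key; a local server does not need a real one


def _post(url: str, body: dict) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request."""
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",
        },
        method="POST",
    )


def build_chat_request(model: str, prompt: str) -> urllib.request.Request:
    """Request for the chat endpoint with a single user message."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0,
    }
    return _post(f"{API_BASE}/chat/completions", body)


def build_embedding_request(model: str, text: str) -> urllib.request.Request:
    """Request for the embeddings endpoint with a single input string."""
    return _post(f"{API_BASE}/embeddings", {"model": model, "input": text})


def send(request: urllib.request.Request) -> dict:
    """Send a request to the running server and decode the JSON reply."""
    with urllib.request.urlopen(request, timeout=120) as response:
        return json.load(response)
```

With the server running, `send(build_chat_request(chat_model, "Hello"))` should return a reply whose text sits under `choices[0].message.content` in the OpenAI response format, and `send(build_embedding_request(embedding_model, "Hello"))` should return a vector under `data[0].embedding`. If either call fails, GraphRAG will fail in the same way, so this is a cheap check to run first.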

Running Microsoft GraphRAG

If you followed the "Get Started" page to create the initial environment, you need to adjust 2 files generated in the "ragtest" folder.

.env file

This file normally stores the API key for your OpenAI account. For our setup, simply change the key value to "lm-studio":

    GRAPHRAG_API_KEY=lm-studio
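The settings file refers to this value as `${GRAPHRAG_API_KEY}`. As a rough illustration of how such a placeholder gets resolved, here is a small sketch that parses simple KEY=VALUE lines and substitutes them into a setting. This is not GraphRAG's own loader, which may behave differently; `parse_env` and `resolve` are hypothetical helpers written for this example.

```python
from string import Template


def parse_env(text: str) -> dict:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def resolve(setting: str, env: dict) -> str:
    """Replace ${NAME} placeholders in a setting with values from env."""
    # Template.substitute raises KeyError when a placeholder has no value,
    # which surfaces a missing key early instead of passing "${...}" along.
    return Template(setting).substitute(env)


env = parse_env("GRAPHRAG_API_KEY=lm-studio\n")
print(resolve("${GRAPHRAG_API_KEY}", env))
```

The point is only that the key in .env and the placeholder in settings.yaml must use the same name; the value itself is arbitrary for a local server.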

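settings.yaml

The important things to change in this file are:

- We are using the OpenAI API types (type under llm, and type under embeddings.llm)

- The name of the LLM model (model under llm)

- The maximum number of tokens (max_tokens under llm)

- The API base, which LM Studio displays (api_base under llm)

- The number of threads. I set this to 1 because I am also running LM Studio on the same machine (num_threads under parallelization)

- The embedding model (model under embeddings.llm)

- The chunk size. The new default is 1200. I set it back to the old value of 300, which works better (size under chunks)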

    encoding_model: cl100k_base
    skip_workflows: []
    llm:
      api_key: ${GRAPHRAG_API_KEY}
      type: openai_chat # or azure_openai_chat
      model: lmstudio-community/Meta-Llama-3.1-8B-Instruct-GGUF
      model_supports_json: true # recommended if this is available for your model.
      max_tokens: 32768
      # request_timeout: 180.0
      api_base: http://localhost:1234/v1
      # api_version: 2024-02-15-preview
      # organization: <organization_id>
      # deployment_name: <azure_model_deployment_name>
      # tokens_per_minute: 150_000 # set a leaky bucket throttle
      # requests_per_minute: 10_000 # set a leaky bucket throttle
      # max_retries: 10
      # max_retry_wait: 10.0
      # sleep_on_rate_limit_recommendation: true # whether to sleep when azure suggests wait-times
      # concurrent_requests: 25 # the number of parallel inflight requests that may be made
      # temperature: 0 # temperature for sampling
      # top_p: 1 # top-p sampling
      # n: 1 # Number of completions to generate

    parallelization:
      stagger: 0.3
      num_threads: 1 # the number of threads to use for parallel processing

    async_mode: threaded # or asyncio

    embeddings:
      ## parallelization: override the global parallelization settings for embeddings
      async_mode: threaded # or asyncio
      llm:
        api_key: ${GRAPHRAG_API_KEY}
        type: openai_embedding # or azure_openai_embedding
        model: "CompendiumLabs/bge-large-en-v1.5-gguf"
        api_base: http://localhost:1234/v1
        # api_version: 2024-02-15-preview
        # organization: <organization_id>
        # deployment_name: <azure_model_deployment_name>
        # tokens_per_minute: 150_000 # set a leaky bucket throttle
        # requests_per_minute: 10_000 # set a leaky bucket throttle
        # max_retries: 10
        # max_retry_wait: 10.0
        # sleep_on_rate_limit_recommendation: true # whether to sleep when azure suggests wait-times
        concurrent_requests: 1 # the number of parallel inflight requests that may be made
        # batch_size: 16 # the number of documents to send in a single request
        # batch_max_tokens: 8191 # the maximum number of tokens to send in a single request
        # target: required # or optional

    chunks:
      size: 300
      overlap: 100
      group_by_columns: [id] # by default, we don't allow chunks to cross documents

    input:
      type: file # or blob
      file_type: text # or csv
      base_dir: "input"
      file_encoding: utf-8
      file_pattern: ".*\\.txt$"

    cache:
      type: file # or blob
      base_dir: "cache"
      # connection_string: <azure_blob_storage_connection_string>
      # container_name: <azure_blob_storage_container_name>

    storage:
      type: file # or blob
      base_dir: "output/${timestamp}/artifacts"
      # connection_string: <azure_blob_storage_connection_string>
      # container_name: <azure_blob_storage_container_name>

    reporting:
      type: file # or console, blob
      base_dir: "output/${timestamp}/reports"
      # connection_string: <azure_blob_storage_connection_string>
      # container_name: <azure_blob_storage_container_name>

    entity_extraction:
      ## llm: override the global llm settings for this task
      ## parallelization: override the global parallelization settings for this task
      ## async_mode: override the global async_mode settings for this task
      prompt: "prompts/entity_extraction.txt"
      entity_types: [organization,person,geo,event]
      max_gleanings: 1

    summarize_descriptions:
      ## llm: override the global llm settings for this task
      ## parallelization: override the global parallelization settings for this task
      ## async_mode: override the global async_mode settings for this task
      prompt: "prompts/summarize_descriptions.txt"
      max_length: 500

    claim_extraction:
      ## llm: override the global llm settings for this task
      ## parallelization: override the global parallelization settings for this task
      ## async_mode: override the global async_mode settings for this task
      enabled: false # warning: setting this to true added 16 hours to the run!
      prompt: "prompts/claim_extraction.txt"
      description: "Any claims or facts that could be relevant to information discovery."
      max_gleanings: 1

    community_reports:
      ## llm: override the global llm settings for this task
      ## parallelization: override the global parallelization settings for this task
      ## async_mode: override the global async_mode settings for this task
      prompt: "prompts/community_report.txt"
      max_length: 2000
      max_input_length: 8000

    cluster_graph:
      max_cluster_size: 10

    embed_graph:
      enabled: false # if true, will generate node2vec embeddings for nodes
      # num_walks: 10
      # walk_length: 40
      # window_size: 2
      # iterations: 3
      # random_seed: 597832

    umap:
      enabled: false # if true, will generate UMAP embeddings for nodes

    snapshots:
      graphml: false
      raw_entities: false
      top_level_nodes: false

    local_search:
      # text_unit_prop: 0.5
      # community_prop: 0.1
      # conversation_history_max_turns: 5
      # top_k_mapped_entities: 10
      # top_k_relationships: 10
      # llm_temperature: 0 # temperature for sampling
      # llm_top_p: 1 # top-p sampling
      # llm_n: 1 # Number of completions to generate
      # max_tokens: 12000

    global_search:
      # llm_temperature: 0 # temperature for sampling
      # llm_top_p: 1 # top-p sampling
      # llm_n: 1 # Number of completions to generate
      # max_tokens: 12000
      # data_max_tokens: 12000
      # map_max_tokens: 1000
      # reduce_max_tokens: 2000
      # concurrency: 32
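To show what the chunk size of 300 and overlap of 100 mean in practice, here is a minimal sliding-window sketch. It is not GraphRAG's own chunker: GraphRAG counts tokens using the encoding named in encoding_model, whereas this example treats any list of items as stand-in tokens, and `chunk_tokens` is a name I made up for the illustration.

```python
def chunk_tokens(tokens: list, size: int = 300, overlap: int = 100) -> list:
    """Split a token list into windows of `size` that share `overlap` tokens.

    Each new window starts (size - overlap) tokens after the previous one,
    so consecutive chunks repeat the last `overlap` tokens of their neighbor.
    """
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("size must be positive and overlap must be in [0, size)")
    step = size - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + size])
        # Stop once a window has reached the end of the input, so no
        # trailing chunk is emitted that only repeats already-covered tokens.
        if start + size >= len(tokens):
            break
    return chunks


# Stand-in tokens: the integers 0..999 instead of real tokenizer output.
chunks = chunk_tokens(list(range(1000)))
print(len(chunks), [len(c) for c in chunks])
```

With these settings each window advances by 200 tokens, so a smaller chunk size means more chunks and therefore more requests to the local model, each of which is shorter.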

All that remains is to start GraphRAG with the following command:

    python -m graphrag.index --root ./ragtest

Viewing the prompts

If you want to see more of the chat prompts that Microsoft GraphRAG generates, just turn on the "verbose" switch in LM Studio.