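Microsoft GraphRAG: Applying and Exploring RDF Knowledge Graphs (Part 3)

Introduction to the Knowledge Graph

If you have been following this series, by now you should have a knowledge graph populated in an RDF store (I have been using GraphDB). The two previous steps were:

- Part 1: Running Microsoft's GraphRAG with a local LLM and encoder

- Part 2: Uploading the output of Microsoft GraphRAG into an RDF store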

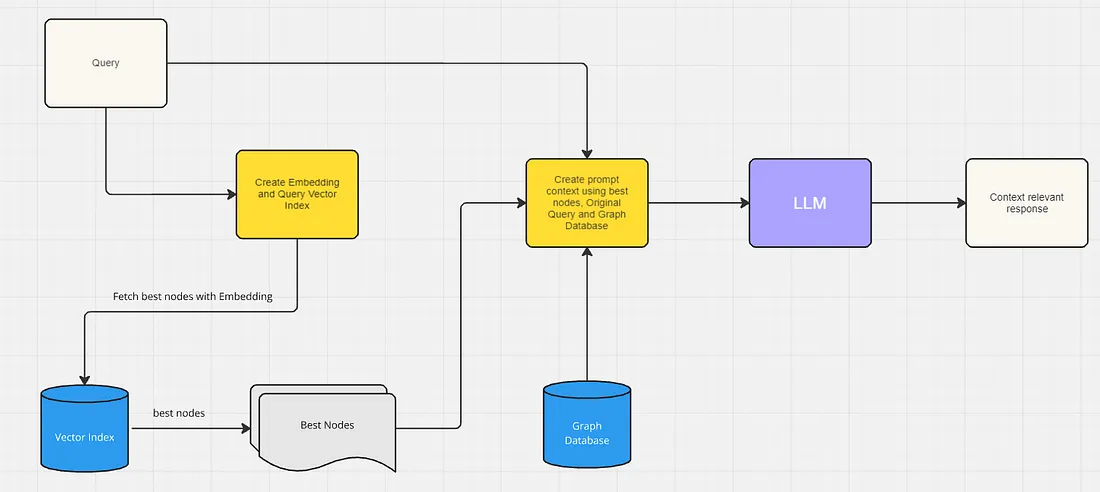

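In this final part, we will do the following:

- Encode our question as an embedding vector, then search for the 10 entity records nearest to that vector.

- Find the Chunk records linked to those entity records and return the top 3.

- Find the Community records linked to those entity records and return the top 3.

- Find the inside and outside relationships of those entity records.

- Get the description of each entity.

- Feed all of this information into our LLM as context, together with our question.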

Encoding our question

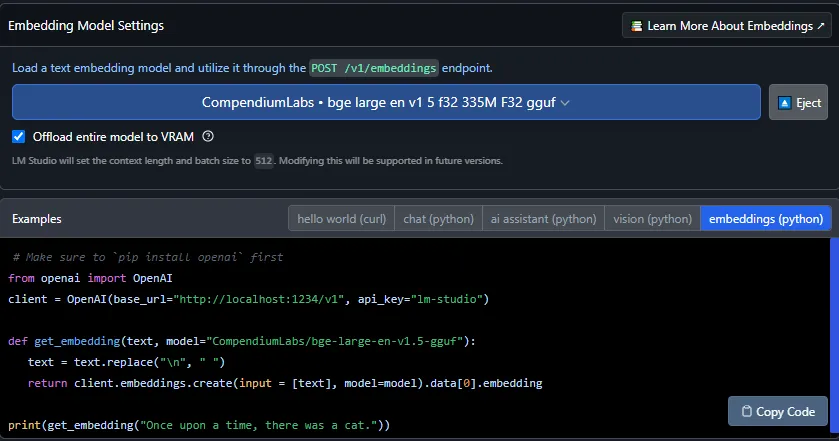

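To encode the question, we will use the embedding endpoint provided by the LM Studio local server. It is an OpenAI-compatible endpoint, and LM Studio provides very useful sample code showing how to connect to its server: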

    from typing import Any

    from openai import OpenAI

    question_text = "What is the relationship between Bob Cratchit and Belinda Cratchit?"

    def get_embedding(text: str, client: Any, model: str = "CompendiumLabs/bge-large-en-v1.5-gguf"):
        """Convert the text into an embedding vector using the model provided

        :param text: text to be converted to an embedding vector
        :param client: OpenAI client
        :param model: name of the model to use for encoding
        """
        # Replace newlines with spaces before encoding
        text = text.replace("\n", " ")
        return client.embeddings.create(input=[text], model=model).data[0].embedding

    # base_url points at the local server; api_key is a placeholder value
    client = OpenAI(base_url="http://localhost:1234/v1", api_key="lm-studio")
    embedding_vector = get_embedding(question_text, client=client)

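embedding_vector is now a vector of floating-point values whose size is determined by the encoding model. The encoding model I chose is bge-large-en-v1.5-gguf, which produces vectors of size 1024. It is critical to use the same encoding model here as in Part 1; otherwise the search in vector space will not work.

To search for the 10 closest matching entity records, we will use the Elasticsearch index created and used in Part 2. Assuming Elasticsearch is installed and running on your machine, you can proceed as follows: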
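To make the point about matching encoders concrete, here is a minimal sketch in plain Python. The helper names `check_dimension` and `cosine_similarity` are hypothetical, and the 1024 figure is simply the vector size quoted above. Whether the index from Part 2 actually scores by cosine similarity is an assumption. A length check catches an obviously wrong model, but note that two models with the same vector size still produce vectors in different spaces, so matching lengths alone do not make the scores meaningful.

```python
import math
from typing import Sequence

EXPECTED_DIM = 1024  # vector size quoted in the text for bge-large-en-v1.5-gguf


def check_dimension(vector: Sequence[float], expected: int = EXPECTED_DIM) -> None:
    """Raise ValueError if the vector length does not match the expected size."""
    if len(vector) != expected:
        raise ValueError(f"expected {expected} dimensions, got {len(vector)}")


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length, non-zero vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)
```

Calling `check_dimension(embedding_vector)` right after encoding the question would fail fast if a different encoder had been loaded by mistake.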

    from elasticsearch import Elasticsearch

    es_username = 'elastic'
    es_password = ''  # change this to your Elasticsearch password
    top_entities = 10

    # verify_certs=False skips TLS certificate verification
    es = Elasticsearch("https://localhost:9200",
                       basic_auth=(es_username, es_password),
                       verify_certs=False)
    es.info().body

    # kNN search over the stored description embeddings
    query = {
        "field": "description_embedding",
        "query_vector": embedding_vector,  # our question converted to an embedding vector
        "k": top_entities,
        "num_candidates": 100,
    }

    # index_name is the name of the Elasticsearch index created in Part 2
    res = es.search(index=index_name, knn=query, source=["id"])
    search_results = res["hits"]["hits"]

    # Convert our results into a list of entity ids.
    # The list is ordered by match score descending (i.e. the more likely matches come first)
    entity_list = [x['_id'] for x in search_results]

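entity_list now contains the ids of the top 10 entity records.

Getting the top 3 Chunk records

Using entity_list, we query the knowledge graph for the Chunk records linked to these entity records and keep the top 3. For each Chunk found, we count how many of the entity records in the list it links to, then sort by that count in descending order: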
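Conceptually, the kNN search returns the k stored vectors that are closest to the query vector. Elasticsearch does this approximately, which is why the query carries a `num_candidates` setting, but the exact version is easy to sketch. The function name `top_k_exact`, the toy ids and the two-dimensional vectors below are all made up for illustration, and similarity is measured with a plain dot product, which agrees with cosine similarity only when the vectors are normalized.

```python
from typing import Dict, List, Sequence


def top_k_exact(query: Sequence[float],
                vectors: Dict[str, Sequence[float]],
                k: int) -> List[str]:
    """Return the ids of the k vectors with the highest dot product against query."""
    def score(item):
        _, vec = item
        return sum(q * v for q, v in zip(query, vec))

    # Highest score first, mirroring the descending order of the search hits
    ranked = sorted(vectors.items(), key=score, reverse=True)
    return [doc_id for doc_id, _ in ranked[:k]]


# Toy index: ids mapped to tiny illustrative vectors
toy_index = {
    "id-bob": [0.9, 0.1],
    "id-scrooge": [0.1, 0.9],
    "id-belinda": [0.8, 0.2],
}
nearest = top_k_exact([1.0, 0.0], toy_index, k=2)
```

With 1024-dimensional vectors and many records, this brute-force scan is what the vector index is there to avoid.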

    from typing import List

    top_chunks = 3

    def get_text_mapping(nodes: List[str], limit_chunks: int = 3) -> str:
        """Get a SPARQL query that fetches the top Chunks that are connected to Entity records

        :param nodes: list of Entity ids
        :param limit_chunks: how many chunks to return
        :returns: a SPARQL query string
        """
        query = """
        PREFIX gr: <http://ormynet.com/ns/msft-graphrag#>
        SELECT
            ?chunkText
            (COUNT(?entity_uri) AS ?freq)
        WHERE {
            ?chunk_uri gr:has_entity ?entity_uri;
                gr:text ?chunk_text .
        """
        # One UNION branch per entity id restricts ?entity_uri to the selected entities
        first = True
        for node in nodes:
            if not first:
                query += " UNION "
            query += f"""
            {{
                ?entity_uri a gr:Entity;
                    gr:id "{node}" .
            }}
            """
            first = False
        query += """
            BIND(REPLACE(?chunk_text, "\\r\\n", " ") as ?chunkText)
        }
        GROUP BY ?chunk_uri ?chunkText
        ORDER BY DESC(?freq)
        """
        query += f" LIMIT {limit_chunks} "
        return query

    # sparql_query (not shown here) runs the query against the RDF store
    text_mapping_df = sparql_query(get_text_mapping(entity_list, limit_chunks=top_chunks))
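The UNION branches built in the loop above can also be expressed with a SPARQL 1.1 `VALUES` clause, which keeps the query short as the entity list grows. The following is a sketch rather than the code used in this series: `values_clause` is a hypothetical helper, and its escaping covers only backslashes and double quotes.

```python
from typing import List


def values_clause(variable: str, ids: List[str]) -> str:
    """Build a SPARQL VALUES clause that binds ?variable to each id as a plain literal."""
    if not ids:
        raise ValueError("ids must not be empty")
    # Escape backslashes first, then double quotes, so the literals stay well-formed
    escaped = [i.replace("\\", "\\\\").replace('"', '\\"') for i in ids]
    literals = " ".join(f'"{e}"' for e in escaped)
    return f"VALUES ?{variable} {{ {literals} }}"


clause = values_clause("entity_id", ["a1", "b2"])
```

Inside the WHERE block, the clause would sit next to a pattern such as `?entity_uri a gr:Entity; gr:id ?entity_id .`, replacing the whole chain of UNION branches.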

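Getting the top 3 Community records

Similarly, we use entity_list to query the knowledge graph for the Community records linked to these entity records. We sort the Community records by rank and weight and take the top 3: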

    def get_report_mapping(nodes: List[str], limit_communities: int = 3) -> str:
        """Get the Communities that are most likely to contain the Entities

        :param nodes: list of Entity ids
        :param limit_communities: how many communities
        :returns: a SPARQL query string
        """
        query = """
        PREFIX gr: <http://ormynet.com/ns/msft-graphrag#>
        SELECT ?community_uri ?rank ?weight ?summary
        WHERE
        {
            ?community_uri a gr:Community;
                gr:rank ?rank;
                gr:weight ?weight;
                gr:summary ?community_summary .
            BIND(REPLACE(?community_summary, "\\r\\n", " ", "i") AS ?summary)
            ?entity_uri gr:in_community ?community_uri .
        """
        # One UNION branch per entity id restricts ?entity_uri to the selected entities
        first = True
        for node in nodes:
            if not first:
                query += " UNION "
            query += f"""
            {{
                ?entity_uri a gr:Entity;
                    gr:id "{node}" .
            }}
            """
            first = False
        # GROUP BY without aggregates collapses duplicate community rows
        query += """
        }
        GROUP BY ?rank ?weight ?community_uri ?summary
        ORDER BY DESC(?rank) DESC(?weight)
        """
        query += f" LIMIT {limit_communities} "
        return query

    top_communities = 3
    report_mapping_df = sparql_query(get_report_mapping(entity_list, limit_communities=top_communities))
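The `sparql_query` helper called above is not shown in this part. Whatever it looks like, an endpoint that returns the standard SPARQL 1.1 JSON results format can be flattened into rows along these lines. This is a sketch under that assumption: `bindings_to_rows` is a hypothetical name and the sample payload is invented for illustration.

```python
from typing import Any, Dict, List, Optional


def bindings_to_rows(results: Dict[str, Any]) -> List[Dict[str, Optional[str]]]:
    """Flatten a SPARQL 1.1 JSON result set into one dict per result row."""
    variables = results["head"]["vars"]
    rows = []
    for binding in results["results"]["bindings"]:
        # A variable can be unbound in a given row, in which case it is absent
        rows.append({v: binding.get(v, {}).get("value") for v in variables})
    return rows


# Invented payload in the shape defined by the SPARQL JSON results format
sample = {
    "head": {"vars": ["community_uri", "rank"]},
    "results": {"bindings": [
        {"community_uri": {"type": "uri", "value": "http://example.org/c1"},
         "rank": {"type": "literal", "value": "8.5"}},
        {"community_uri": {"type": "uri", "value": "http://example.org/c2"}},
    ]},
}
rows = bindings_to_rows(sample)
```

Passing `rows` to `pd.DataFrame` would then give a DataFrame with one column per SELECT variable. All values arrive as strings, so numeric columns such as the rank need converting before they are used in arithmetic.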

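Getting the inside and outside relationships

We use entity_list and the related_to relationship to query the knowledge graph for inside and outside relationships, starting with the outside relationships: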

    def get_outside_relationships(nodes: List[str], limit_outside_relationships: int = 10) -> str:
        """Get a SPARQL query to fetch the outside relationships

        :param nodes: list of Entity ids
        :param limit_outside_relationships: how many relationships to return
        :returns: a SPARQL query string
        """
        query = """
        PREFIX gr: <http://ormynet.com/ns/msft-graphrag#>
        SELECT
            ?description
            ?entity_from_id ?entity_to_id
            ?rank ?weight
        WHERE {
            ?related_to_uri a gr:related_to;
                gr:id ?id;
                gr:rank ?rank;
                gr:description ?desc;
                gr:weight ?weight .
            BIND(REPLACE(?desc, "\\r\\n", "") as ?description)
            ?entity_from_uri ?related_to_uri ?entity_to_uri .
            ?entity_from_uri gr:id ?entity_from_id .
            ?entity_to_uri gr:id ?entity_to_id .
        """
        # Keep only relationships whose target is none of the selected entities
        first = True
        for node in nodes:
            if first:
                query += " FILTER( "
            else:
                query += " && "
            query += f"""
            ?entity_to_id != "{node}" """
            first = False
        query += """
            )
        }
        ORDER BY DESC(?rank) DESC(?weight)
        """
        query += f" LIMIT {limit_outside_relationships} "
        return query

    top_outside_relationships = 10
    outside_relationships_df = sparql_query(get_outside_relationships(entity_list, limit_outside_relationships=top_outside_relationships))

And the inside relationships:

    def get_inside_relationships(nodes: List[str], limit_inside_relationships: int = 10) -> str:
        """Get a SPARQL query to fetch the inside relationships

        :param nodes: list of Entity ids
        :param limit_inside_relationships: how many relationships to return
        :returns: a SPARQL query string
        """
        query = """
        PREFIX gr: <http://ormynet.com/ns/msft-graphrag#>
        SELECT
            ?description
            ?entity_from_id ?entity_to_id
            ?rank ?weight
        WHERE {
            ?related_to_uri a gr:related_to;
                gr:id ?id;
                gr:rank ?rank;
                gr:description ?desc;
                gr:weight ?weight .
            BIND(REPLACE(?desc, "\\r\\n", "") as ?description)
            ?entity_from_uri ?related_to_uri ?entity_to_uri .
            ?entity_from_uri gr:id ?entity_from_id .
            ?entity_to_uri gr:id ?entity_to_id .
        """
        # Keep only relationships whose target is one of the selected entities
        first = True
        for node in nodes:
            if first:
                query += " FILTER( "
            else:
                query += " || "
            query += f"""
            ?entity_to_id = "{node}" """
            first = False
        query += """
            )
        }
        ORDER BY DESC(?rank) DESC(?weight)
        """
        query += f" LIMIT {limit_inside_relationships} "
        return query

    top_inside_relationships = 10
    inside_relationships_df = sparql_query(get_inside_relationships(entity_list, limit_inside_relationships=top_inside_relationships))
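To make the inside/outside distinction concrete, the sketch below splits a toy edge list in plain Python using the same rule as the two FILTER expressions: a relationship counts as inside when its target id is in the entity list, and as outside otherwise. The function name `split_relationships` and the sample edges are invented for illustration.

```python
from typing import List, Set, Tuple

Edge = Tuple[str, str]  # (entity_from_id, entity_to_id)


def split_relationships(edges: List[Edge], selected: Set[str]) -> Tuple[List[Edge], List[Edge]]:
    """Split edges into (inside, outside) by whether the target id is selected."""
    inside = [e for e in edges if e[1] in selected]
    outside = [e for e in edges if e[1] not in selected]
    return inside, outside


edges = [("e1", "e2"), ("e1", "e9"), ("e7", "e2")]
inside, outside = split_relationships(edges, {"e1", "e2"})
```

Because only the target id is tested, the edge `("e7", "e2")` lands in the inside group even though `e7` is not a selected entity. A stricter reading of "inside" would also require `e[0]` to be in the selected set.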

Getting the entity descriptions

We can also fetch the entity descriptions:

    def get_entities(nodes: List[str]) -> str:
        """Get a SPARQL query that will fetch details of the Entities that are in the list

        :param nodes: list of Entity ids
        :returns: a SPARQL query string
        """
        query = """
        PREFIX gr: <http://ormynet.com/ns/msft-graphrag#>
        SELECT ?id ?description
        WHERE
        {
            ?entity_uri a gr:Entity;
                gr:id ?id;
                gr:description ?entity_desc .
            BIND(REPLACE(?entity_desc, "\\r\\n", " ", "i") AS ?description)
        """
        # Keep only the entities whose id is in the list
        first = True
        for node in nodes:
            if first:
                query += " FILTER( "
            else:
                query += " || "
            query += f' ?id = "{node}" '
            first = False
        query += """ )
        }
        """
        return query

    entities_df = sparql_query(get_entities(entity_list))

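Feeding the context to the LLM

With all of these DataFrames in hand, we convert each one into a text format that can be fed to the LLM. Here is the conversion for the entities_df we obtained earlier: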

    import pandas as pd

    def convert_df_to_text(df: pd.DataFrame, key_name: str, colname: str) -> str:
        """Convert a DataFrame to text suitable for LLM context

        :param df: input DataFrame
        :param key_name: name of the key to use
        :param colname: name of the column in the DataFrame to use
        :returns: string suitable for LLM context
        """
        # Quote each value and put one value per line
        items = ",\n".join('"' + str(value) + '"' for value in df[colname])
        # The closing quote of the key goes before the colon: {"key": [ ... ]}
        return '{"' + key_name + '": [\n' + items + "]}"

    entity_text = convert_df_to_text(entities_df, 'Entities', 'description')
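Descriptions that contain double quotes or newlines are not escaped by the hand-built string above. If strict JSON is wanted, the standard library's `json.dumps` takes care of the escaping. A minimal sketch, with `context_block` as a hypothetical helper that takes a plain list of strings instead of a DataFrame:

```python
import json
from typing import List


def context_block(key_name: str, values: List[str]) -> str:
    """Serialize a list of strings as a one-key JSON object for the LLM context."""
    # ensure_ascii=False keeps non-ASCII characters readable in the prompt
    return json.dumps({key_name: values}, ensure_ascii=False, indent=1)


block = context_block("Entities", ['Bob is a "clerk"', "Belinda is a daughter"])
```

The other context strings could be produced the same way, for example `context_block("Chunks", text_mapping_df["chunkText"].tolist())`. The key name "Chunks" is only an example, and this assumes the DataFrame columns are named after the SELECT variables of the corresponding query.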

Creating the LangChain response

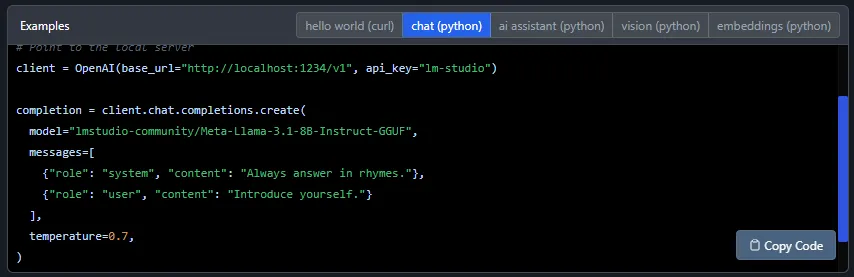

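With everything in place, we can set up an LLM chat against the local LM Studio server instance. LM Studio again provides some helpful sample code for connecting to the server.

In practice, we will use LangChain to chain the chat model and the response together: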

    from langchain_openai import ChatOpenAI
    from langchain_core.prompts import ChatPromptTemplate
    from langchain_core.output_parsers import StrOutputParser

    # Point the OpenAI-compatible chat client at the local server
    llm = ChatOpenAI(
        model="lmstudio-community/Meta-Llama-3.1-8B-Instruct-GGUF",
        temperature=0.7,
        max_tokens=None,
        timeout=None,
        max_retries=2,
        api_key="lm-server",
        base_url="http://localhost:1234/v1"
    )

First, let's prompt without any context from the knowledge graph:

    simple_prompt = ChatPromptTemplate.from_messages(
        [
            (
                "system",
                "You are a helpful assistant that answers questions about a book.",
            ),
            ("human", "{input}"),
        ]
    )

    # prompt -> chat model -> plain string output
    simple_chain = simple_prompt | llm | StrOutputParser()

    simple_chain.invoke(
        {
            "input": question_text,
        }
    )

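This is the reply my model gave me:

I think there may be some confusion here!

In Charles Dickens's classic novel A Christmas Carol, Bob Cratchit's wife is actually named Emily, not Belinda.

Bob Cratchit is a hardworking, kind-hearted clerk who works for Ebenezer Scrooge. He is the father of six children: Peter, Belle (not Belinda), Tiny Tim, and three other unnamed children.

I deliberately chose a question about a full name that I know does not appear in the text but can be worked out by looking at the relationships. The model clearly failed here.

Now let's ask the same question again, this time with the information from the knowledge graph as context: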

    prompt = ChatPromptTemplate.from_messages(
        [
            (
                "system",
                "You are a helpful assistant that answers questions about a book.",
            ),
            ("human", "{context} {input}"),
        ]
    )

    chain = prompt | llm | StrOutputParser()

    # chunk_text, relationships_text and reports_text are assumed to be built from
    # the other DataFrames in the same way as entity_text
    chain.invoke(
        {
            "context": entity_text + "," + chunk_text + "," + relationships_text + "," + reports_text,
            "input": question_text,
        }
    )

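This is the reply I got this time:

Based on the data provided, Belinda Cratchit is Bob Cratchit's daughter. They have a parent-child relationship. In addition, the book mentions that Mrs. Cratchit (Bob's wife) assists Belinda with household tasks, such as managing the cloth, which suggests a close family relationship.

Not bad! Clearly it knows the book, and it was able to work out the relationship from the information we supplied from the knowledge graph.

Summary

I ran GraphRAG on my local PC, together with the LLM, the vector embedding model, the vector index and the RDF store. I think the responses I got from the chat show that this approach works much better than simple RAG.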