How I Streamline My Research and Presentations with LlamaIndex Workflows

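LlamaIndex recently introduced a new feature: Workflows. It is very useful for anyone who wants to build AI solutions that are both reliable and flexible. Why? Because it lets you define custom steps with control flow, including loops, feedback, and error handling. It works like an AI-enabled pipeline. But unlike typical pipelines, which are usually implemented as directed acyclic graphs (DAGs), Workflows also support cyclic execution, which makes them a good candidate for implementing agents and other more complex processes.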
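To make the contrast with a DAG concrete, here is a toy, framework-free sketch in plain Python: each step consumes one event type and returns the next event, and because a rejected draft is routed back to the drafting step, the flow contains a cycle. The event classes, the STEPS routing table and run() are hypothetical illustrations, not the LlamaIndex API.

```python
from dataclasses import dataclass


@dataclass
class StartEvent:
    topic: str


@dataclass
class DraftEvent:
    text: str
    revision: int


@dataclass
class FeedbackEvent:
    text: str
    revision: int


@dataclass
class StopEvent:
    result: str


def write_draft(ev):
    # Handles both the initial request and reviewer feedback,
    # so control can flow back to this step (the cycle).
    if isinstance(ev, StartEvent):
        return DraftEvent(text=f"Notes on {ev.topic}", revision=0)
    return DraftEvent(text=ev.text + " (revised)", revision=ev.revision + 1)


def review_draft(ev, max_revisions=2):
    # Toy reviewer: accept the draft once it has been revised enough times.
    if ev.revision >= max_revisions:
        return StopEvent(result=ev.text)
    return FeedbackEvent(text=ev.text, revision=ev.revision)


# Routing table: which step consumes which event type.
STEPS = {
    StartEvent: write_draft,
    FeedbackEvent: write_draft,
    DraftEvent: review_draft,
}


def run(start, max_steps=20):
    """Dispatch events to steps until a StopEvent appears."""
    ev, trace = start, []
    for _ in range(max_steps):  # guard against a cycle that never terminates
        if isinstance(ev, StopEvent):
            return ev.result, trace
        trace.append(type(ev).__name__)
        ev = STEPS[type(ev)](ev)
    raise RuntimeError("workflow did not reach a StopEvent")
```

The max_steps guard is a simple way to keep a cyclic flow from running forever; a pure DAG would not need it.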

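In this article, I will show how I use LlamaIndex Workflows to streamline the process of researching the latest advances on a topic and then turning that research into a PowerPoint presentation.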

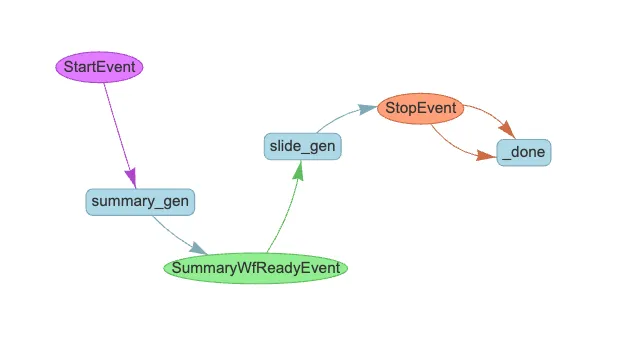

Main workflow

The main workflow consists of two nested sub-workflows:

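- summary_gen: This sub-workflow finds research papers on the given topic and generates summaries. It searches for papers through web queries and uses an LLM, following the given instructions, to extract insights and summaries.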

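- slide_gen: This sub-workflow generates PowerPoint slides from the summaries produced in the previous step. It formats the slides with a provided PowerPoint template and produces them by generating and executing Python code that uses the python-pptx library.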

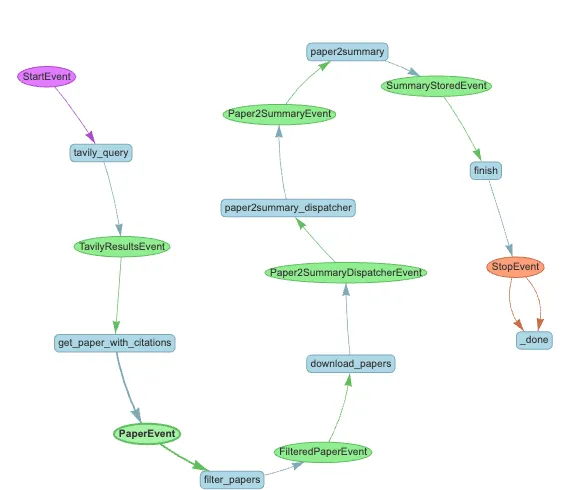

Summary generation sub-workflow

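Let's take a closer look at these sub-workflows. First, the summary_gen workflow is quite straightforward: it follows a simple linear process. It essentially acts as a "data processing" workflow in which some of the steps send requests to an LLM.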

The workflow starts by taking the user input (a research topic) and then runs the following steps:

- tavily_query: Queries the Tavily API to get academic papers related to the topic as a structured response.

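- get_paper_with_citations: For each paper returned by the Tavily query, this step uses the Semantic Scholar API to retrieve the paper's metadata along with the metadata of the papers it cites.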

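- filter_papers: Since not all retrieved citations are directly relevant to the original topic, this step refines the results. The title and abstract of each paper are sent to the LLM to assess its relevance. The step is defined as: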

```python
from llama_index.core.workflow import step


# Up to 4 instances of this step may run concurrently
@step(num_workers=4)
async def filter_papers(self, ev: PaperEvent) -> FilteredPaperEvent:
    llm = new_gpt4o_mini(temperature=0.0)
    response = await process_citation(ev.paper, llm)
    return FilteredPaperEvent(paper=ev.paper, is_relevant=response)
```
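num_workers=4 allows up to four filter_papers calls to be in flight at the same time. Outside of LlamaIndex, the same bounded-concurrency idea can be sketched with asyncio.Semaphore. This is an analogy, not how the framework implements num_workers, and score_paper() is a hypothetical placeholder for the LLM relevance call.

```python
import asyncio


async def score_paper(title, sem, stats):
    # Hypothetical stand-in for an LLM relevance call.
    async with sem:  # at most `num_workers` coroutines get past this line at once
        stats["running"] += 1
        stats["peak"] = max(stats["peak"], stats["running"])
        await asyncio.sleep(0.01)  # simulate network latency
        stats["running"] -= 1
        return title, len(title) % 3  # fake score in {0, 1, 2}


async def filter_all(titles, num_workers=4):
    sem = asyncio.Semaphore(num_workers)
    stats = {"running": 0, "peak": 0}
    results = await asyncio.gather(*(score_paper(t, sem, stats) for t in titles))
    return dict(results), stats["peak"]
```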

In the process_citation() function, we use LlamaIndex's FunctionCallingProgram to get a structured response:

```python
from llama_index.core.program import FunctionCallingProgram
from pydantic import BaseModel

IS_CITATION_RELEVANT_PMT = """
You help a researcher decide whether a paper is relevant to their current research topic: {topic}
You are given the title and abstract of a paper.
title: {title}
abstract: {abstract}

Give a score indicating the relevancy to the research topic, where:
Score 0: Not relevant
Score 1: Somewhat relevant
Score 2: Very relevant

Answer with integer score 0, 1 or 2 and your reason.
"""


class IsCitationRelevant(BaseModel):
    score: int
    reason: str


async def process_citation(citation, llm):
    # The LLM is forced to answer with an IsCitationRelevant object
    program = FunctionCallingProgram.from_defaults(
        llm=llm,
        output_cls=IsCitationRelevant,
        prompt_template_str=IS_CITATION_RELEVANT_PMT,
        verbose=True,
    )
    response = await program.acall(
        title=citation.title,
        abstract=citation.summary,
        topic=citation.topic,
        description="Data model for whether the paper is relevant to the research topic.",
    )
    return response
```
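IsCitationRelevant accepts any integer, while the prompt only allows 0, 1 or 2. One way to make that contract explicit is to validate the range when the object is built; with Pydantic this would typically be score: int = Field(ge=0, le=2). To keep the sketch dependency-free, here is a stdlib dataclass equivalent. The class name and the "score >= 1 counts as relevant" threshold are hypothetical choices, not taken from the article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CitationRelevance:
    score: int
    reason: str

    def __post_init__(self):
        # Reject anything outside the 0/1/2 scale defined in the prompt
        if self.score not in (0, 1, 2):
            raise ValueError(f"score must be 0, 1 or 2, got {self.score!r}")

    @property
    def is_relevant(self) -> bool:
        # Hypothetical threshold: keep "somewhat" and "very" relevant papers
        return self.score >= 1
```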

- download_papers: This step collects all the filtered papers, prioritizes them by relevance score and availability on ArXiv, and downloads the most relevant ones.
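The prioritization in download_papers can be sketched as a filter plus a sort. The ScoredPaper fields, min_score and max_downloads below are hypothetical; the article does not show the exact selection rule or cut-off.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ScoredPaper:
    title: str
    score: int                # LLM relevance score: 0, 1 or 2
    arxiv_url: Optional[str]  # None when no ArXiv copy was found


def select_papers(
    papers: List[ScoredPaper], max_downloads: int = 5, min_score: int = 1
) -> List[ScoredPaper]:
    # Only papers that are relevant enough *and* downloadable from ArXiv qualify
    candidates = [p for p in papers if p.arxiv_url and p.score >= min_score]
    # Highest score first; sorted() is stable, so ties keep their original order
    candidates = sorted(candidates, key=lambda p: p.score, reverse=True)
    return candidates[:max_downloads]
```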

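- paper2summary_dispatcher: Prepares for summary generation by setting up the storage paths for images and summaries. This step uses self.send_event() to run the paper2summary step for each paper in parallel. It also records the number of papers in the workflow context through the variable ctx.data["n_pdfs"], so that later steps know how many papers there are to process in total.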

```python
from pathlib import Path

from llama_index.core.workflow import Context, step


@step(pass_context=True)
async def paper2summary_dispatcher(
    self, ctx: Context, ev: Paper2SummaryDispatcherEvent
) -> Paper2SummaryEvent:
    ctx.data["n_pdfs"] = 0
    for pdf_name in Path(ev.papers_path).glob("*.pdf"):
        img_output_dir = self.papers_images_path / pdf_name.stem
        img_output_dir.mkdir(exist_ok=True, parents=True)
        summary_fpath = self.paper_summary_path / f"{pdf_name.stem}.md"
        # Track how many papers were dispatched so later steps can wait for all of them
        ctx.data["n_pdfs"] += 1
        # Emit one event per paper; each triggers its own paper2summary run
        self.send_event(
            Paper2SummaryEvent(
                pdf_path=pdf_name,
                image_output_dir=img_output_dir,
                summary_path=summary_fpath,
            )
        )
```
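Counting the dispatched events matters because a later step has to know when every parallel summary has arrived. The underlying fan-out/fan-in pattern can be sketched with plain asyncio; this illustrates the idea rather than LlamaIndex's event machinery, and summarize() is a hypothetical placeholder for the paper2summary step.

```python
import asyncio
from pathlib import Path


async def summarize(pdf_path: Path) -> str:
    # Hypothetical stand-in for paper2summary: returns the summary file name
    await asyncio.sleep(0)
    return f"{pdf_path.stem}.md"


async def worker(pdf_path: Path, queue: asyncio.Queue) -> None:
    await queue.put(await summarize(pdf_path))


async def dispatch_and_collect(pdf_paths):
    queue: asyncio.Queue = asyncio.Queue()
    # Fan-out: one task per PDF (references are kept so tasks are not garbage-collected)
    tasks = [asyncio.create_task(worker(path, queue)) for path in pdf_paths]
    n_pdfs = len(tasks)  # plays the role of ctx.data["n_pdfs"]
    # Fan-in: wait until exactly n_pdfs results have arrived
    summaries = [await queue.get() for _ in range(n_pdfs)]
    await asyncio.gather(*tasks)
    return sorted(summaries)
```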

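- paper2summary: For each paper, this step converts the PDF into images and sends them to the LLM for summarization. Once generated, the summary is saved to a markdown file for future reference. Note that the summaries generated here are quite detailed, like a short article, so they are not yet suitable for placing directly in a presentation. We keep them anyway so that the user can review these intermediate results; later steps make the information more presentable. The prompt given to the LLM includes some key instructions to ensure the summary is accurate and concise: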

```python
SUMMARIZE_PAPER_PMT = """
You are an AI specialized in summarizing scientific papers.
Your goal is to create concise and informative summaries, with each section preferably around 100 words and
limited to a maximum of 200 words, focusing on the core approach, methodology, datasets,
evaluation details, and conclusions presented in the paper. After you summarize the paper,
save the summary as a markdown file.

Instructions:
- Key Approach: Summarize the main approach or model proposed by the authors.
Focus on the core idea behind their method, including any novel techniques, algorithms, or frameworks introduced.
- Key Components/Steps: Identify and describe the key components or steps in the model or approach.
Break down the architecture, modules, or stages involved, and explain how each contributes to the overall method.
- Model Training/Finetuning: Explain how the authors trained or finetuned their model.
Include details on the training process, loss functions, optimization techniques,
and any specific strategies used to improve the model’s performance.
- Dataset Details: Provide an overview of the datasets used in the study.
Include information on the size, type and source. Mention whether the dataset is publicly available
and if there are any benchmarks associated with it.
- Evaluation Methods and Metrics: Detail the evaluation process used to assess the model's performance.
Include the methods, benchmarks, and metrics employed.
- Conclusion: Summarize the conclusions drawn by the authors. Include the significance of the findings,
any potential applications, limitations acknowledged by the authors, and suggested future work.

Ensure that the summary is clear and concise, avoiding unnecessary jargon or overly technical language.
Aim to be understandable to someone with a general background in the field.
Ensure that all details are accurate and faithfully represent the content of the original paper.
Avoid introducing any bias or interpretation beyond what is presented by the authors. Do not add any
information that is not explicitly stated in the paper. Stick to the content presented by the authors.
"""
```

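- finish: The workflow collects all the generated summaries, verifies that they have been stored correctly, logs the completion of the process, and returns a StopEvent as the final result.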

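If this workflow were run on its own, execution would end here. However, since it is only a sub-workflow of the main flow, its completion triggers the next sub-workflow, slide_gen.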

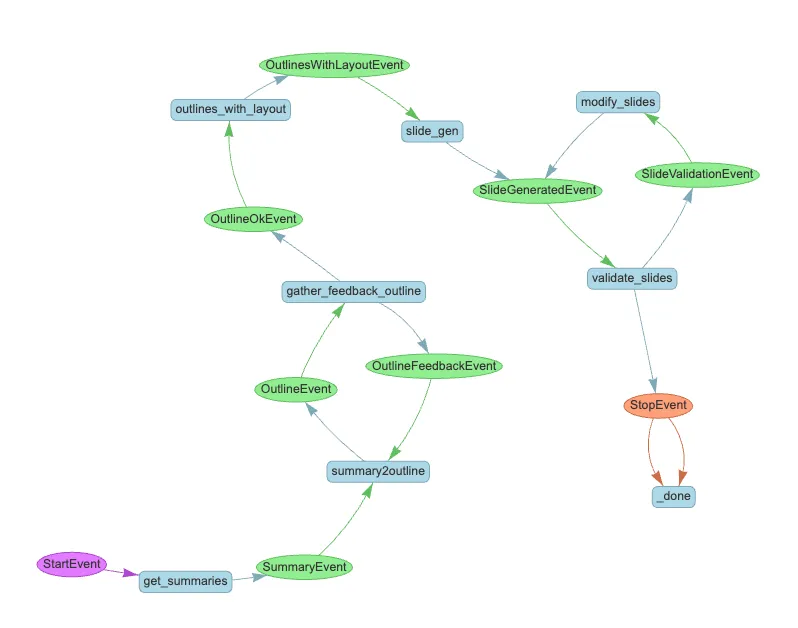

Slide generation sub-workflow

This workflow generates slides from the summaries created in the previous step. Here is an overview of the slide generation workflow:

The workflow starts when the previous sub-workflow finishes and the summary markdown files are ready:

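- get_summaries: This step reads the contents of the summary files and again uses self.send_event() to trigger one event per file, so that the files are processed concurrently for faster execution.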

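- summary2outline: This step uses an LLM to turn each summary into slide outline text, condensing the summary into short sentences or bullet points that fit into a presentation.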

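- gather_feedback_outline: This step shows the user the proposed slide outline together with the paper summary for review. The user provides feedback, which triggers an OutlineFeedbackEvent if revisions are needed. This feedback loop with the summary2outline step continues until the user approves the final outline, at which point an OutlineOkEvent is triggered.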

```python
from llama_index.core.workflow import Context, step


@step(pass_context=True)
async def gather_feedback_outline(
    self, ctx: Context, ev: OutlineEvent
) -> OutlineFeedbackEvent | OutlineOkEvent:
    """Present user the original paper summary and the outlines generated, gather feedback from user"""
    print(f"the original summary is: {ev.summary}")
    print(f"the outline is: {ev.outline}")
    print("Do you want to proceed with this outline? (yes/no):")
    feedback = input()
    if feedback.lower().strip() in ["yes", "y"]:
        # Approved: move on to adding layout details
        return OutlineOkEvent(summary=ev.summary, outline=ev.outline)
    else:
        # Rejected: send the feedback back to summary2outline for another attempt
        print("Please provide feedback on the outline:")
        feedback = input()
        return OutlineFeedbackEvent(
            summary=ev.summary, outline=ev.outline, feedback=feedback
        )
```
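Because the step blocks on input(), it is awkward to test on its own. A hedged sketch of the same outline-feedback cycle passes the outline generator and the user prompt in as plain functions (make_outline and ask are hypothetical names) and adds a round limit so the loop cannot run forever; the limit is a suggestion, not part of the original step.

```python
from typing import Callable, Optional


def refine_outline(
    summary: str,
    make_outline: Callable[[str, Optional[str]], str],
    ask: Callable[[str], str],
    max_rounds: int = 5,
) -> str:
    """Repeat outline generation until the user approves it."""
    feedback: Optional[str] = None
    for _ in range(max_rounds):
        # summary2outline: the first pass has no feedback, later passes use it
        outline = make_outline(summary, feedback)
        answer = ask(f"Outline:\n{outline}\nProceed with this outline? (yes/no): ")
        if answer.lower().strip() in ("yes", "y"):
            return outline  # corresponds to OutlineOkEvent
        # Corresponds to OutlineFeedbackEvent: loop back with the user's comments
        feedback = ask("Please provide feedback on the outline: ")
    raise RuntimeError(f"outline not approved after {max_rounds} rounds")
```

In tests, ask can replay scripted answers; in an interactive session, ask=input reproduces the original behavior.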

- outlines_with_layout: Uses an LLM to add page-layout details from the given PowerPoint template to each slide's outline. This stage saves the content and design of all slide pages in a JSON file.
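The JSON file produced here is essentially a list of per-slide records that pair outline content with a layout name. Below is a minimal sketch under assumed field names (title, bullets, layout_name are hypothetical). Where the article delegates the layout choice to an LLM, this sketch swaps in a fixed rule: a title layout for the first slide and a content layout for the rest. "Title Slide" and "Title and Content" are the names used in PowerPoint's default template and are only examples.

```python
import json
from pathlib import Path
from typing import Dict, List


def attach_layouts(outlines: List[dict], layouts: Dict[str, str]) -> List[dict]:
    """Add a layout name to each slide outline (rule-based stand-in for the LLM)."""
    slides = []
    for i, outline in enumerate(outlines):
        # First slide gets the title layout, every other slide a content layout
        layout_name = layouts["title"] if i == 0 else layouts["content"]
        slides.append({**outline, "layout_name": layout_name})  # copy, don't mutate
    return slides


def slides_to_json(slides: List[dict]) -> str:
    return json.dumps(slides, indent=2, ensure_ascii=False)


def save_slides(slides: List[dict], path: Path) -> None:
    path.write_text(slides_to_json(slides), encoding="utf-8")
```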

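- slide_gen: Uses a ReAct agent to create the slides from the given outlines and layout details. The agent has a code interpreter tool for running and correcting code in an isolated environment, and a layout-inspection tool for looking up information about the given PowerPoint template. The agent is prompted to build the slides with python-pptx and can observe and fix its own errors.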

```python
import logging

from llama_index.core import PromptTemplate
from llama_index.core.agent import ReActAgent
from llama_index.core.workflow import Context, step


@step(pass_context=True)
async def slide_gen(
    self, ctx: Context, ev: OutlinesWithLayoutEvent
) -> SlideGeneratedEvent:
    # Agent tools: the Azure code interpreter plus the template-layout lookup
    agent = ReActAgent.from_tools(
        tools=self.azure_code_interpreter.to_tool_list() + [self.all_layout_tool],
        llm=new_gpt4o(0.1),
        verbose=True,
        max_iterations=50,
    )
    prompt = (
        SLIDE_GEN_PMT.format(
            json_file_path=ev.outlines_fpath.as_posix(),
            template_fpath=self.slide_template_path,
            final_slide_fname=self.final_slide_fname,
        )
        + REACT_PROMPT_SUFFIX
    )
    agent.update_prompts({"agent_worker:system_prompt": PromptTemplate(prompt)})
    # Make the PowerPoint template available inside the code-interpreter session
    res = self.azure_code_interpreter.upload_file(
        local_file_path=self.slide_template_path
    )
    logging.info(f"Uploaded file to Azure: {res}")
    response = agent.chat(
        f"An example of outline item in json is {ev.outline_example.json()},"
        f" generate a slide deck"
    )
    # Copy the files the agent produced in the session back to local storage
    local_files = self.download_all_files_from_session()
    return SlideGeneratedEvent(
        pptx_fpath=f"{self.workflow_artifacts_path}/{self.final_slide_fname}"
    )
```
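What makes the agent useful here is the observe-and-correct cycle: run the generated python-pptx code, read the error, revise, and run again. Stripped of the ReAct machinery, that cycle looks roughly like the sketch below, where fix() is a hypothetical stand-in for the LLM that rewrites the code. The sketch calls exec() locally only for illustration; the workflow itself runs code in the isolated Azure code-interpreter session, which is the safer place for untrusted generated code.

```python
import traceback
from typing import Callable, Dict, Tuple


def run_with_repair(
    code: str, fix: Callable[[str, str], str], max_attempts: int = 3
) -> Tuple[Dict, int]:
    """Execute generated code; on failure, pass the traceback to `fix` and retry."""
    for attempt in range(1, max_attempts + 1):
        namespace: Dict = {}
        try:
            exec(code, namespace)  # illustration only: never exec untrusted code unsandboxed
            return namespace, attempt
        except Exception:
            error = traceback.format_exc()  # the "observation" fed back to the fixer
            code = fix(code, error)
    raise RuntimeError(f"code still failing after {max_attempts} attempts")
```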

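- validate_slides: Checks the slides to make sure they meet the given criteria. This involves converting the slides into images and having the LLM visually inspect them against guidelines for correct content and consistent style. Depending on what the LLM finds, the step sends a SlideValidationEvent if there are problems, or a StopEvent if everything looks good.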

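```python
from pathlib import Path

from llama_index.core import SimpleDirectoryReader
from llama_index.core.output_parsers import PydanticOutputParser
from llama_index.core.program import MultiModalLLMCompletionProgram
from llama_index.core.workflow import Context, StopEvent, step


@step(pass_context=True)
async def validate_slides(
    self, ctx: Context, ev: SlideGeneratedEvent
) -> StopEvent | SlideValidationEvent:
    """Validate the generated slide deck"""
    # Count validation rounds; initialize the counter on the first pass
    ctx.data["n_retry"] = ctx.data.get("n_retry", 0) + 1
    ctx.data["latest_pptx_file"] = Path(ev.pptx_fpath).name
    # Render the slides as images so the multimodal LLM can inspect them
    img_dir = pptx2images(Path(ev.pptx_fpath))
    image_documents = SimpleDirectoryReader(img_dir).load_data()
    llm = mm_gpt4o
    program = MultiModalLLMCompletionProgram.from_defaults(
        output_parser=PydanticOutputParser(SlideValidationResult),
        image_documents=image_documents,
        prompt_template_str=SLIDE_VALIDATION_PMT,
        multi_modal_llm=llm,
        verbose=True,
    )
    response = program()
    if response.is_valid:
        return StopEvent(
            self.workflow_artifacts_path.joinpath(self.final_slide_fname)
        )
    else:
        if ctx.data["n_retry"] < self.max_validation_retries:
            # Hand the validator's findings to modify_slides
            return SlideValidationEvent(result=response)
        else:
            return StopEvent(
                f"The slides are not fixed after {self.max_validation_retries} retries!"
            )
```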

The criteria used for validation are:

```python
SLIDE_VALIDATION_PMT = """
You are an AI that validates the slide deck generated according to following rules:
- The slide need to have a front page
- The slide need to have a final page (e.g. a 'thank you' or 'questions' page)
- The slide texts are clearly readable, not cut off, not overflowing the textbox
and not overlapping with other elements

If any of the above rules are violated, you need to provide the index of the slide that violates the rule,
as well as suggestion on how to fix it.
"""
```

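- modify_slides: If the slides fail the validation check, the previous step sends a SlideValidationEvent. Another ReAct agent then updates the slides based on the validator's feedback, and the updated slides are saved and sent back for validation again. This validation loop can repeat several times, up to the limit set by the max_validation_retries attribute of the SlideGenerationWorkflow class.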
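The control flow of the validate/modify loop, stripped of the LLM calls, can be sketched as follows. ValidationResult is a hypothetical stand-in (the article does not show SlideValidationResult), and validate and modify are placeholders for the validation step and the modify_slides agent. The counter mirrors ctx.data["n_retry"] in validate_slides: the loop stops on success or after max_validation_retries validation rounds.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, List, Tuple


@dataclass
class ValidationResult:
    # Hypothetical shape; the article's SlideValidationResult is not shown
    is_valid: bool
    problems: List[str] = field(default_factory=list)


def validate_and_fix(
    deck: Any,
    validate: Callable[[Any], ValidationResult],
    modify: Callable[[Any, ValidationResult], Any],
    max_validation_retries: int = 3,
) -> Tuple[Any, bool]:
    n_retry = 0
    while True:
        n_retry += 1  # same role as ctx.data["n_retry"]
        result = validate(deck)
        if result.is_valid:
            return deck, True  # like the StopEvent carrying the final deck
        if n_retry >= max_validation_retries:
            return deck, False  # like the "not fixed after N retries" StopEvent
        deck = modify(deck, result)  # modify_slides, then validate again
```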

To run the whole workflow end to end, we start the process as follows:

```python
import asyncio

import click
from llama_index.core.workflow import Context, StartEvent, StopEvent, Workflow, step
from llama_index.utils.workflow import draw_all_possible_flows

# SummaryGenerationWorkflow, SlideGenerationWorkflow and the custom events
# (e.g. SummaryWfReadyEvent) are defined elsewhere (not shown).


class SummaryAndSlideGenerationWorkflow(Workflow):
    @step
    async def summary_gen(
        self, ctx: Context, ev: StartEvent, summary_gen_wf: SummaryGenerationWorkflow
    ) -> SummaryWfReadyEvent:
        print("Need to run reflection")
        res = await summary_gen_wf.run(user_query=ev.user_query)
        return SummaryWfReadyEvent(summary_dir=res)

    @step
    async def slide_gen(
        self, ctx: Context, ev: SummaryWfReadyEvent, slide_gen_wf: SlideGenerationWorkflow
    ) -> StopEvent:
        res = await slide_gen_wf.run(file_dir=ev.summary_dir)
        return StopEvent()


async def run_workflow(user_query: str):
    wf = SummaryAndSlideGenerationWorkflow(timeout=2000, verbose=True)
    # Register the nested sub-workflows; they are injected into the steps by parameter name
    wf.add_workflows(
        summary_gen_wf=SummaryGenerationWorkflow(timeout=800, verbose=True)
    )
    wf.add_workflows(slide_gen_wf=SlideGenerationWorkflow(timeout=1200, verbose=True))
    result = await wf.run(
        user_query=user_query,
    )
    print(result)


@click.command()
@click.option(
    "--user-query",
    "-q",
    required=False,
    help="The user query",
    default="powerpoint slides automation",
)
def main(user_query: str):
    asyncio.run(run_workflow(user_query))


if __name__ == "__main__":
    # Save an HTML visualization of all possible paths through the workflow
    draw_all_possible_flows(
        SummaryAndSlideGenerationWorkflow, filename="summary_slide_gen_flows.html"
    )
    main()
```

Results

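Now let's look at an example of the intermediate summary generated for the paper "LayoutGPT: Compositional Visual Planning and Generation with Large Language Models":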

# Summary of "LayoutGPT: Compositional Visual Planning and Generation with Large Language Models"

## Key Approach

The paper introduces LayoutGPT, a framework leveraging large language models (LLMs) for compositional visual planning and generation. The core idea is to utilize LLMs to generate 2D and 3D scene layouts from textual descriptions, integrating numerical and spatial reasoning. LayoutGPT employs a novel prompt construction method and in-context learning to enhance the model's ability to understand and generate complex visual scenes.

## Key Components/Steps

1. **Prompt Construction**: LayoutGPT uses detailed task instructions and CSS-like structures to guide the LLMs in generating layouts.

2. **In-Context Learning**: Demonstrative exemplars are provided to the LLMs to improve their understanding and generation capabilities.

3. **Numerical and Spatial Reasoning**: The model incorporates reasoning capabilities to handle numerical and spatial relationships in scene generation.

4. **Scene Synthesis**: LayoutGPT generates 2D keypoint layouts and 3D scene layouts, ensuring spatial coherence and object placement accuracy.

## Model Training/Finetuning

LayoutGPT is built on GPT-3.5 and GPT-4 models, utilizing in-context learning rather than traditional finetuning. The training process involves providing the model with structured prompts and examples to guide its generation process. Loss functions and optimization techniques are not explicitly detailed, as the focus is on leveraging pre-trained LLMs with minimal additional training.

## Dataset Details

The study uses several datasets:

- **NSR-1K**: A new benchmark for numerical and spatial reasoning, created from MSCOCO annotations.

- **3D-FRONT**: Used for 3D scene synthesis, containing diverse indoor scenes.

- **HRS-Bench**: For evaluating color binding accuracy in generated scenes.

These datasets are publicly available and serve as benchmarks for evaluating the model's performance.

## Evaluation Methods and Metrics

The evaluation involves:

- **Quantitative Metrics**: Precision, recall, and F1 scores for layout accuracy, numerical reasoning, and spatial reasoning.

- **Qualitative Analysis**: Visual inspection of generated scenes to assess spatial coherence and object placement.

- **Comparative Analysis**: Benchmarking against existing methods like GLIGEN and ATISS to demonstrate improvements in layout generation.

## Conclusion

The authors conclude that LayoutGPT effectively integrates LLMs for visual planning and scene generation, achieving state-of-the-art performance in 2D and 3D layout tasks. The framework's ability to handle numerical and spatial reasoning is highlighted as a significant advancement. Limitations include the focus on specific scene types and the need for further exploration of additional visual reasoning tasks. Future work suggests expanding the model's capabilities to more diverse and complex visual scenarios.

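Unsurprisingly, summarization is not a particularly challenging task for an LLM. Given only images of the paper, the LLM effectively captures all the key aspects listed in the prompt and follows the style instructions well.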

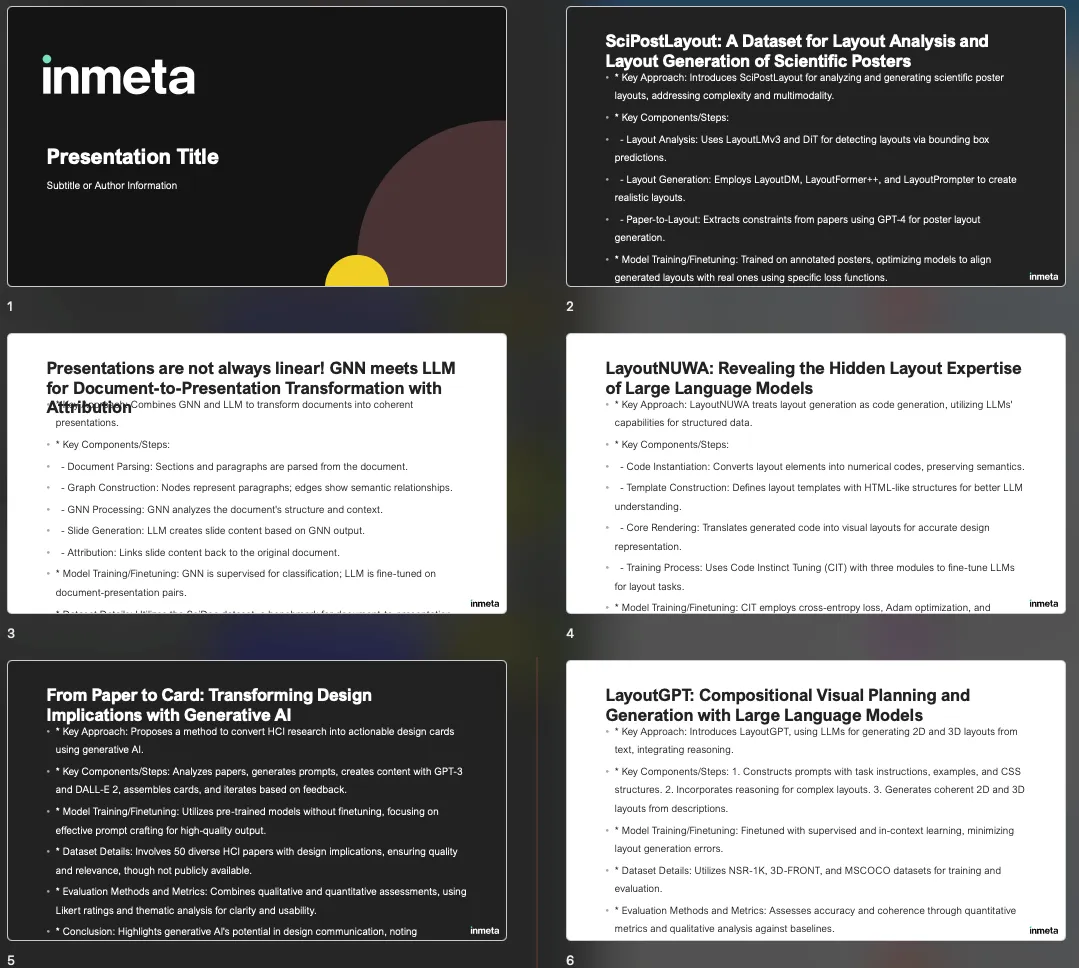

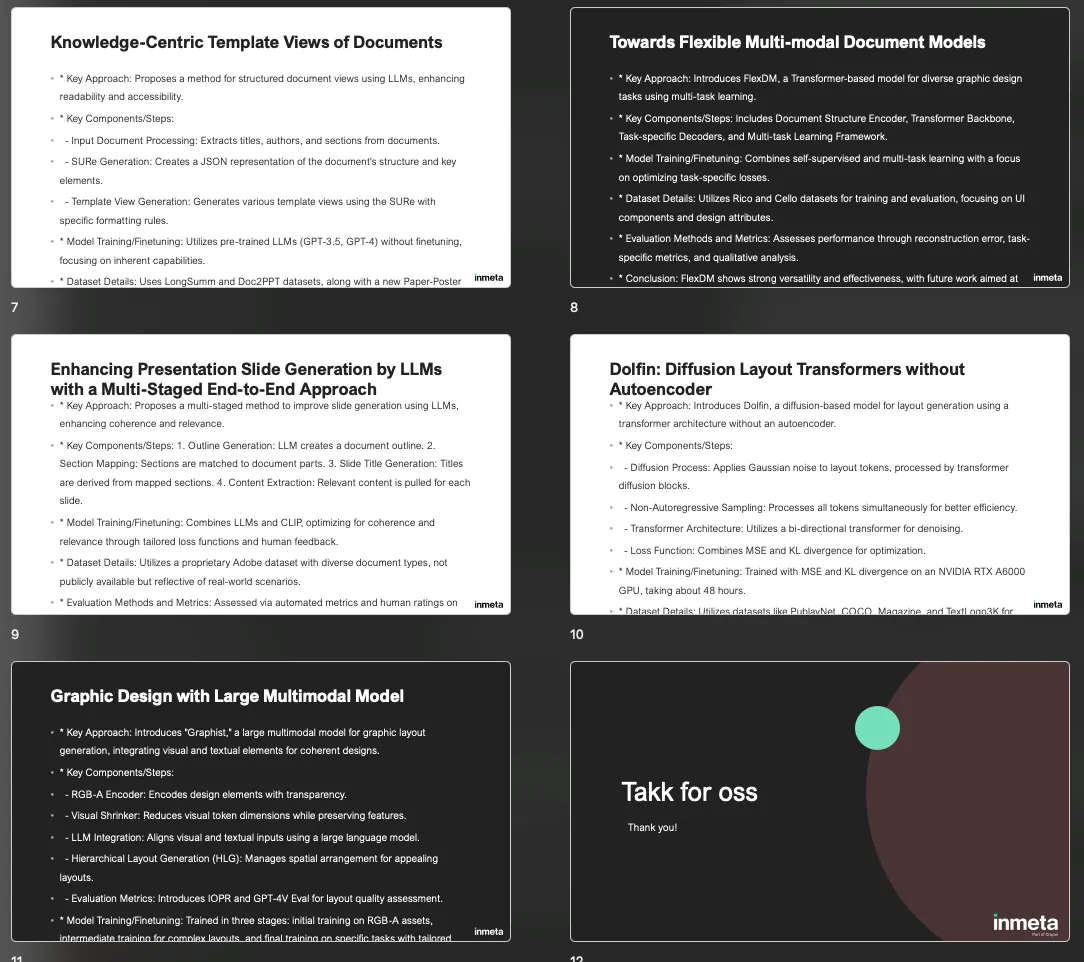

至于最终结果,下面是生成的演示幻灯片的几个示例:

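The workflow does a good job of filling in the summary content according to the template's layout: the text keeps the template's style, the summary points are listed as bullets, and all the relevant papers are included in the slides. One issue is that the text in the main content placeholder is sometimes not resized to fit its text box, so the text overflows the slide boundary. This kind of error could perhaps be addressed with a more targeted slide-validation prompt.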
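Besides a sharper validation prompt, a cheap complementary check is to estimate whether a block of text fits its box before the deck is rendered, and shrink the font if it does not. The sketch below is a rough heuristic under a loudly stated assumption: that an average character is about half the font size wide. The function names and default sizes are hypothetical, and real glyph widths vary by font, so the result is only an estimate.

```python
import math


def estimate_lines(text: str, box_width_pt: float, font_size_pt: float,
                   avg_char_width: float = 0.5) -> int:
    # Assumption: an average character is ~avg_char_width * font size wide
    chars_per_line = max(1, int(box_width_pt / (font_size_pt * avg_char_width)))
    # Each paragraph wraps independently and occupies at least one line
    return sum(max(1, math.ceil(len(p) / chars_per_line)) for p in text.split("\n"))


def fit_font_size(text: str, box_width_pt: float, box_height_pt: float,
                  start: int = 24, minimum: int = 10, line_spacing: float = 1.2) -> int:
    """Largest font size (in points) whose estimated text height fits the box."""
    size = start
    while size > minimum:
        height = estimate_lines(text, box_width_pt, size) * size * line_spacing
        if height <= box_height_pt:
            return size
        size -= 1
    return minimum  # text may still overflow at this size; flag it for review
```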

Conclusion

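In this article, I showed how I use LlamaIndex Workflows to streamline my research and presentation process, from querying academic papers to generating the final PowerPoint slides. Below are some thoughts and observations from implementing this workflow, along with a few areas I think could be improved.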