Efficient RAG on Graphs: A Three-Layer Fixed Entity Architecture

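Knowledge graphs are becoming a powerful tool for structuring and retrieving information across many domains. They are increasingly combined with machine learning and natural language processing techniques to enhance information retrieval and reasoning. In this article, I present a three-layer architecture for building a knowledge graph that combines fixed ontology entities, document chunks, and extracted named entities. By using embeddings and cosine similarity, the approach improves retrieval efficiency and enables more precise graph traversal at query time. The methodology describes how to build a knowledge base around fixed entities, offering a scalable and cost-effective alternative to relying on large language models (LLMs) to construct it, while staying in line with current trends in retrieval-augmented generation (RAG) systems.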

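In a previous article, I introduced a new approach, which I named the Fixed Entity Architecture, for building knowledge graphs with embeddings and using them as the vector database in the retrieval step of a retrieval-augmented generation (RAG) solution. The idea is to build the graph from a predefined ontology. That ontology is based on a simple example sentence: "Albert Einstein developed the theory of relativity, which revolutionized theoretical physics and astronomy."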

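In short, the approach involves creating two layers of entities. The first layer of nodes, which we can call the Fixed Entity Layer (FEL1), represents the "skeleton" of the ontology. Domain experts can build it from their knowledge and experience or from a few foundational documents for the domain. The second layer consists of the documents you want to use as the actual knowledge base. These documents are split into chunks and stored as document nodes in a Neo4j-based knowledge graph.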

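The key to this approach is the connection between the two layers. It is established by computing the cosine similarity between nodes of layer 1 (FEL1) and layer 2, which we can call the Document Layer (DL2). (I append numbers to the abbreviations to avoid confusion with other well-known abbreviations in machine learning and data science.) An FEL1 node and a DL2 node are linked whenever their similarity exceeds a threshold. This makes it possible to perform RAG efficiently on the graph.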
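A minimal pure-Python sketch of this threshold-based linking follows. It assumes every node already carries an embedding; the node names, the toy three-dimensional vectors, the 0.7 threshold, and the helper name `link_layers` are illustrative assumptions, not values or code from the real database (in this article the linking itself runs as a Cypher query inside Neo4j).

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def link_layers(fixed_entities, chunks, threshold):
    """Return (entity, chunk_id, score) for every FEL1/DL2 pair whose similarity exceeds `threshold`."""
    links = []
    for entity_name, entity_vec in fixed_entities.items():
        for chunk_id, chunk_vec in chunks.items():
            score = cosine_similarity(entity_vec, chunk_vec)
            if score > threshold:
                links.append((entity_name, chunk_id, score))
    return links


# Toy embeddings, invented for illustration only
fel1 = {"Theory of relativity": [1.0, 0.2, 0.0], "Astronomy": [0.0, 0.3, 1.0]}
dl2 = {"chunk-1": [0.9, 0.3, 0.1], "chunk-2": [0.1, 0.2, 0.9], "chunk-3": [0.5, 0.1, 0.5]}

for entity, chunk_id, score in link_layers(fel1, dl2, threshold=0.7):
    print(f"{entity} -> {chunk_id}: {score:.3f}")
```

Because every pair is scored independently, `chunk-3` ends up linked to both fixed entities: a chunk can attach to several entities, and an entity to several chunks.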

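In this article, I introduce a third layer, which I call the spaCy Entity Layer (SEL3). It contains named entities extracted from the document layer; these can further enhance and refine graph traversal at query time and may improve retrieval accuracy. Let's dive into the three-layer Fixed Entity Architecture.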

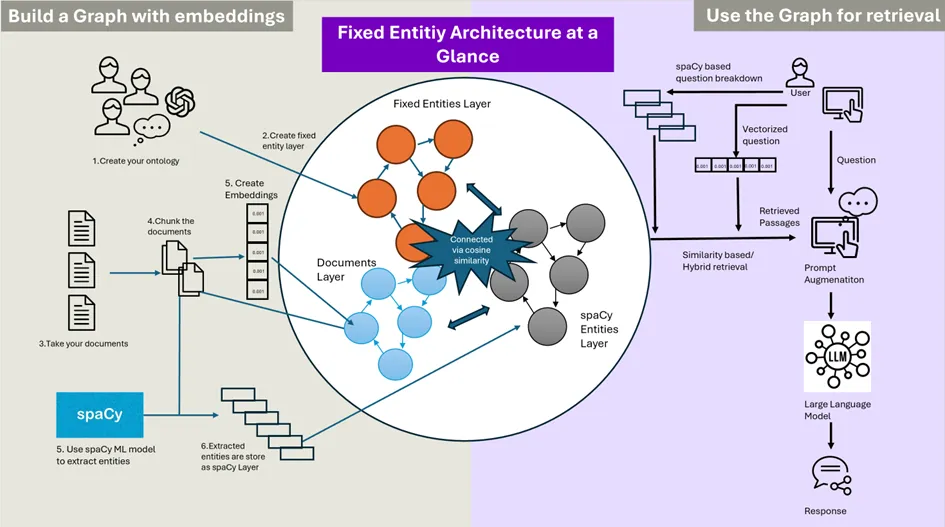

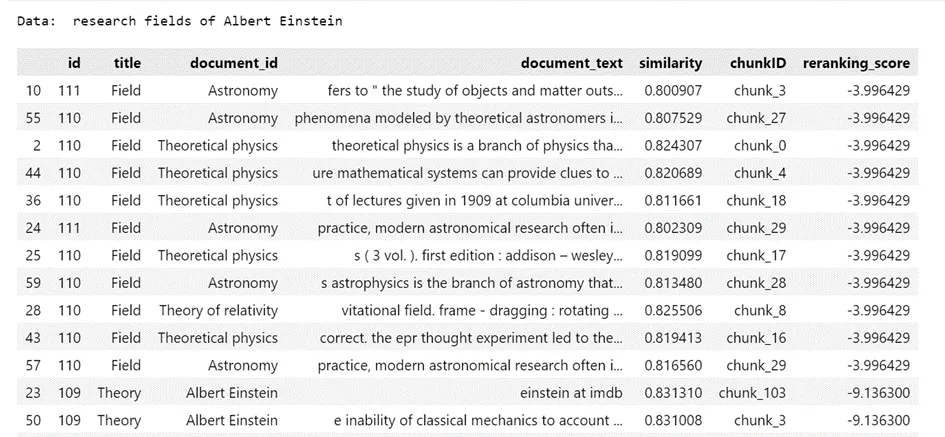

Figure 1

The three-layer Fixed Entity Architecture

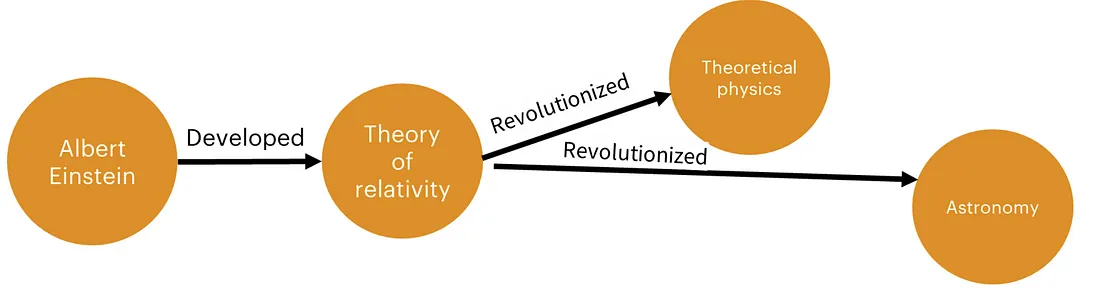

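Figure 1 gives an overview of the three-layer Fixed Entity Architecture. The knowledge graph consists of three layers. Orange entities represent the fixed ontology of the specific domain or use case for which the knowledge graph is built. Blue entities correspond to the chunked documents, which are linked to the orange entities based on cosine similarity. Gray entities are the named entities extracted from each blue chunk and linked back to the chunk they came from. You could also connect all the layers to each other with the same similarity technique, but for now let's focus on how the Einstein sentence translates into this three-layer architecture. Figure 2 shows Fixed Entity Layer 1 (FEL1).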

Figure 2

Building the database

Adding the first layer: the Fixed Entity Layer

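Once again, let's use the handy Wikipedia API to populate the first-layer nodes with descriptions. A short description for each fixed entity is essential, because the subsequent layers are attached to it by similarity. Note that I use only the article summaries returned by the Wikipedia API to build the fixed entity layer; for the document layer, I will parse the full article text later.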

```python
# Assumes helpers that are not shown in this article: `embeddings` (an embedding
# wrapper with embed_query and split_text methods), get_wikipedia_article_summary,
# run_query, and a Neo4j `driver`.

# Define the entities and their labels
entities = [
    {"label": "Person", "name": "Albert Einstein"},
    {"label": "Theory", "name": "Theory of relativity"},
    {"label": "Field", "name": "Theoretical physics"},
    {"label": "Field", "name": "Astronomy"},
]

# Generate the embeddings and descriptions
for entity in entities:
    entity["embeddings"] = embeddings.embed_query(f"{entity['label']}: {entity['name']}").tolist()
    # Escape single quotes so the summary can be inlined as a Cypher string literal
    entity["description"] = get_wikipedia_article_summary(
        entity["name"], f"https://en.wikipedia.org/wiki/{entity['name']}"
    ).replace("'", "\\'")

# Create the query: MERGE the four FEL1 nodes, then the ontology edges between them
query = f"""
MERGE (a:Entity {{label: '{entities[0]['label']}', name: '{entities[0]['name']}'}})
SET a.embeddings = {entities[0]['embeddings']}, a.description = '{entities[0]['description']}'
MERGE (b:Entity {{label: '{entities[1]['label']}', name: '{entities[1]['name']}'}})
SET b.embeddings = {entities[1]['embeddings']}, b.description = '{entities[1]['description']}'
MERGE (c:Entity {{label: '{entities[2]['label']}', name: '{entities[2]['name']}'}})
SET c.embeddings = {entities[2]['embeddings']}, c.description = '{entities[2]['description']}'
MERGE (d:Entity {{label: '{entities[3]['label']}', name: '{entities[3]['name']}'}})
SET d.embeddings = {entities[3]['embeddings']}, d.description = '{entities[3]['description']}'
// Create edges
MERGE (a)-[:DEVELOPED]->(b)
MERGE (b)-[:REVOLUTIONIZED]->(c)
MERGE (b)-[:REVOLUTIONIZED]->(d)
"""

# Run the query
run_query(driver, query)
```
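Escaping single quotes by hand works for these four summaries, but it can break on text that contains backslashes, and it mixes data into the query text. A common alternative is to pass values as query parameters. The sketch below only builds the query string and the parameter dictionary; the function name `build_fel1_query` and the sample values are hypothetical. With the official Neo4j Python driver, you could then pass both to `session.run(query, params)`.

```python
def build_fel1_query(entities, edges):
    """Build one parameterized Cypher statement that MERGEs FEL1 entities and their ontology edges.

    `entities`: list of dicts with label, name, embeddings and description.
    `edges`: list of (source_index, relationship_type, target_index) tuples.
    """
    lines = []
    params = {}
    for i, entity in enumerate(entities):
        lines.append(f"MERGE (n{i}:Entity {{label: $label{i}, name: $name{i}}})")
        lines.append(f"SET n{i}.embeddings = $emb{i}, n{i}.description = $desc{i}")
        params[f"label{i}"] = entity["label"]
        params[f"name{i}"] = entity["name"]
        params[f"emb{i}"] = entity["embeddings"]
        params[f"desc{i}"] = entity["description"]
    for src, rel_type, dst in edges:
        # Relationship types are inlined rather than passed as parameters,
        # so only use trusted, hard-coded type names here.
        lines.append(f"MERGE (n{src})-[:{rel_type}]->(n{dst})")
    return "\n".join(lines), params


# Hypothetical sample data; the apostrophes need no manual escaping
sample_entities = [
    {"label": "Person", "name": "Albert Einstein", "embeddings": [0.1, 0.2], "description": "Physicist's summary"},
    {"label": "Theory", "name": "Theory of relativity", "embeddings": [0.3, 0.4], "description": "Einstein's theory"},
]
query, params = build_fel1_query(sample_entities, [(0, "DEVELOPED", 1)])
print(query)
```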

Adding the second layer: the Document Layer

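Congratulations, FEL1 is ready! Now let's add the second layer of the knowledge base (DL2). For this, we'll use Wikipedia again, this time extracting the article text to serve as the knowledge base. For the three-layer architecture, I populate the database with DL2 and SEL3 in a single run, as follows: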

```python
# Assumes helpers not shown here: get_wikipedia_article_text, add_chunks_to_db,
# add_spacies, and the `embeddings` wrapper used above.
node_list = ['Albert Einstein', 'Theory of relativity', 'Theoretical physics', 'Astronomy']

for node_name in node_list:
    text = get_wikipedia_article_text(node_name, f"https://en.wikipedia.org/wiki/{node_name}")
    # Fall back to the entity name itself if no article text was found
    if len(text) == 0 or text == "The article does not exist.":
        text = node_name
    if len(text) == 0:
        print(f"Could not retrieve text for {node_name}")
        continue

    # DL2: split the article into overlapping chunks and store them as Document nodes
    chunks = embeddings.split_text(text, file_type="text", chunk_size=1000, chunk_overlap=200)
    print("Number of chunks:", len(chunks))
    print("Adding chunks to the database")
    add_chunks_to_db(chunks, node_name)

    # SEL3: extract spaCy entities from the same chunks
    print("Adding spaCies")
    add_spacies(chunks, node_name)
```

Note that this time I use chunks of 1,000 tokens with a 200-token overlap.
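To illustrate what a chunk size with an overlap means, here is a minimal sliding-window sketch. It splits on whitespace as a stand-in for real tokens (an assumption: the `split_text` helper above may count tokens or characters differently), and `chunk_tokens` is a hypothetical name.

```python
def chunk_tokens(tokens, chunk_size, chunk_overlap):
    """Split a token list into windows of `chunk_size`, each sharing `chunk_overlap` tokens with the previous one."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        if start + chunk_size >= len(tokens):
            break  # this window already reaches the end of the text
    return chunks


text = "Albert Einstein developed the theory of relativity which revolutionized theoretical physics and astronomy"
words = text.split()
for chunk in chunk_tokens(words, chunk_size=6, chunk_overlap=2):
    print(" ".join(chunk))
```

With a chunk size of 1,000 and an overlap of 200, the step is 800, so each new chunk starts 800 tokens after the previous one and repeats its last 200 tokens.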

Let's first look at how the two connected layers, FEL1 and DL2, appear in the Einstein database (see Figure 3).

Figure 3

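As discussed in the previous article, chunks are connected to fixed entities through cosine similarity. This means a chunk can be connected to several fixed entities, and vice versa. With this setup, you can search for an entity and retrieve only the chunks connected to the relevant part of the domain, which refines the retrieval process. You can also run a smarter search by comparing your query with the retrieved chunks by similarity. All of this can be combined seamlessly in a single Cypher query. Essentially, your retrieval imagination is the only limit!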
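The entity-then-similarity pattern can be sketched in memory as follows. This is not the article's Cypher query, only a pure-Python illustration under assumed data: a hypothetical mapping from fixed entities to their linked chunks and toy two-dimensional chunk embeddings. `retrieve_for_entity` is a hypothetical helper.

```python
import math


def cosine(a, b):
    """Cosine similarity; 0.0 when either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve_for_entity(entity, entity_to_chunks, chunk_embeddings, query_embedding, top_k=2):
    """Restrict the search to chunks linked to `entity`, then rank them by similarity to the query."""
    candidates = entity_to_chunks.get(entity, set())
    scored = [(chunk_id, cosine(chunk_embeddings[chunk_id], query_embedding)) for chunk_id in candidates]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]


# Hypothetical FEL1 -> DL2 links and toy chunk embeddings
entity_to_chunks = {
    "Theory of relativity": {"c1", "c2", "c3"},
    "Astronomy": {"c3", "c4"},
}
chunk_embeddings = {"c1": [1.0, 0.0], "c2": [0.6, 0.8], "c3": [0.0, 1.0], "c4": [0.1, 1.0]}
query_embedding = [0.0, 1.0]

print(retrieve_for_entity("Theory of relativity", entity_to_chunks, chunk_embeddings, query_embedding))
```

Chunk `c4` is very close to the query but is not linked to "Theory of relativity", so it is never considered: the entity acts as a filter before similarity ranking.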

Adding the third layer: the spaCy Entity Layer

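Now let's consider the third layer. Connecting chunked documents to fixed entities works well, but it still has limitations. For example, if an FEL1 entity's description is too short, of poor quality, or missing altogether, the similarity-based connections between the layers can suffer. You should also keep in mind that similarity search is asymmetric: the lengths of the compared texts matter.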

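One potential improvement this layer offers is to mimic tag search, that is, to improve search by associating information with specific tags and searching by those tags.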
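One way to picture tag search over the third layer is an inverted index from extracted entity names (the tags) to the chunks they were extracted from. The sketch below is a minimal pure-Python illustration, not the graph implementation; the chunk IDs and entity names are made up, and ranking chunks by the number of matched tags is an assumed scoring rule.

```python
from collections import defaultdict


def build_tag_index(chunk_entities):
    """Invert {chunk_id: [entity names]} into {lowercased entity name: {chunk_ids}}."""
    index = defaultdict(set)
    for chunk_id, names in chunk_entities.items():
        for name in names:
            index[name.lower()].add(chunk_id)
    return index


def tag_search(index, query_tags):
    """Rank chunks by how many query tags they carry (ties broken by chunk ID)."""
    counts = defaultdict(int)
    for tag in query_tags:
        for chunk_id in index.get(tag.lower(), set()):
            counts[chunk_id] += 1
    return sorted(counts.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical SEL3 extraction results per DL2 chunk
chunk_entities = {
    "chunk-1": ["Albert Einstein", "special relativity"],
    "chunk-2": ["general relativity", "gravitation"],
    "chunk-3": ["Albert Einstein", "general relativity", "black hole"],
}
index = build_tag_index(chunk_entities)
print(tag_search(index, ["Albert Einstein", "General Relativity"]))
```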

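Extracting named entities from text is also a challenge. To keep database creation largely LLM-free, I found spaCy's Entity Linker particularly useful. spaCy provides pretrained models and tools for tasks such as tokenization, part-of-speech tagging, and named entity recognition.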

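The Entity Linker component performs named entity linking and disambiguation. It connects textual mentions recognized as named entities to unique identifiers in a knowledge base, grounding them in the "real world". For example, it can link a mention of "Apple" in a text to Apple Inc. rather than to the fruit. (The `entityLinker` pipe used below is provided by the open-source spacy-entity-linker package, which links mentions to Wikidata.)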

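Here I take an approach similar to the one I used for the first layer of fixed entities. Besides creating nodes for the extracted spaCy entities, I use the Wikipedia links that spaCy provides for them to fetch summaries from Wikipedia and add those summaries as descriptions to the spaCy nodes.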

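Let's take the Wikipedia summary of the theory of relativity as an example; it was added as the description property of the FEL1 entity "Theory of relativity". It reads as follows: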

text = """The theory of relativity usually encompasses two interrelated physics theories by Albert Einstein: special relativity and general relativity, proposed and published in 1905 and 1915, respectively. Special relativity applies to all physical phenomena in the absence of gravity. General relativity explains the law of gravitation and its relation to the forces of nature. It applies to the cosmological and astrophysical realm, including astronomy.The theory transformed theoretical physics and astronomy during the 20th century, superseding a 200-year-old theory of mechanics created primarily by Isaac Newton. It introduced concepts including 4-dimensional spacetime as a unified entity of space and time, relativity of simultaneity, kinematic and gravitational time dilation, and length contraction. In the field of physics, relativity improved the science of elementary particles and their fundamental interactions, along with ushering in the nuclear age. With relativity, cosmology and astrophysics predicted extraordinary astronomical phenomena such as neutron stars, black holes, and gravitational waves."""

Now we can extract the spaCy entities (which I call "spaCies") as follows:

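```python
# Requires the spacy-entity-linker package, its knowledge base, and the spaCy model:
#   pip install spacy-entity-linker
#   python -m spacy_entity_linker "download_knowledge_base"
#   python -m spacy download en_core_web_md
import spacy

# initialize language model
nlp = spacy.load("en_core_web_md")

# add pipeline (declared through entry_points in setup.py)
nlp.add_pipe("entityLinker", last=True)

# `text` is the "Theory of relativity" summary defined above
doc = nlp(text)

# returns all entities in the whole document
all_linked_entities = doc._.linkedEntities

# iterates over sentences and prints linked entities
for sent in doc.sents:
    sent._.linkedEntities.pretty_print()
```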

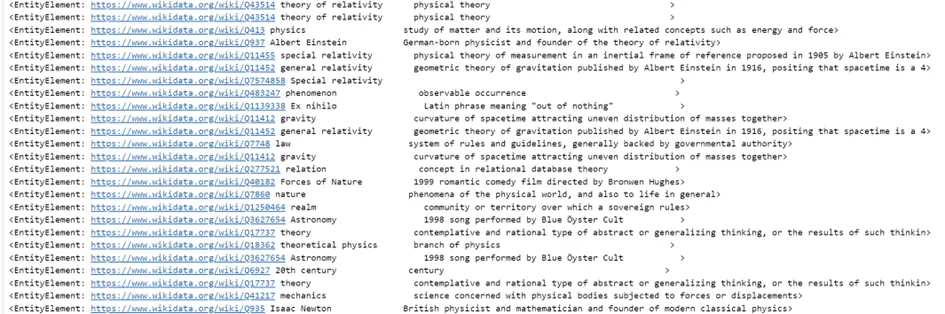

这个查询的输出结果有点长,所以我只分享前几行的快照:

Connecting the dots

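After running the code, my graph database contains all three layers. Once everything has been added, I run the similarity algorithm from the previous article. It covers the document chunks and all entities: it computes a similarity score for each pair and connects the two nodes if the score exceeds a threshold I defined (the threshold can vary depending on the use case).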

With the three-layer architecture, you could also consider something like this:

```python
# Link pairs of document chunks (DL2 <-> DL2) whose embeddings are very similar.
# `e.docID < d.docID` scores each unordered pair once; both directions are then merged.
query = '''
MATCH (e:Document), (d:Document)
WHERE e.docID < d.docID
WITH e, d,
     reduce(numerator = 0.0, i in range(0, size(e.embeddings)-1) | numerator + toFloat(e.embeddings[i])*toFloat(d.embeddings[i])) as dotProduct,
     sqrt(reduce(eSum = 0.0, i in range(0, size(e.embeddings)-1) | eSum + toFloat(e.embeddings[i])^2)) as eNorm,
     sqrt(reduce(dSum = 0.0, i in range(0, size(d.embeddings)-1) | dSum + toFloat(d.embeddings[i])^2)) as dNorm
WITH e, d, dotProduct / (eNorm * dNorm) as cosineSimilarity
WHERE cosineSimilarity > 0.9
MERGE (d)-[r:RELATES_TO]->(e)
MERGE (e)-[r1:RELATES_TO]->(d)
SET r.cosineSimilarity = cosineSimilarity, r1.cosineSimilarity = cosineSimilarity
RETURN ID(e), e.docID, ID(d), d.docID, d.full_text, r.cosineSimilarity
ORDER BY cosineSimilarity DESC
'''
```

Or this:

```python
# Link spaCy entities (SEL3) to document chunks (DL2) above a 0.85 cosine similarity
query = '''
MATCH (e:SpacyEntities), (d:Document)
WITH e, d,
     reduce(numerator = 0.0, i in range(0, size(e.embeddings)-1) | numerator + toFloat(e.embeddings[i])*toFloat(d.embeddings[i])) as dotProduct,
     sqrt(reduce(eSum = 0.0, i in range(0, size(e.embeddings)-1) | eSum + toFloat(e.embeddings[i])^2)) as eNorm,
     sqrt(reduce(dSum = 0.0, i in range(0, size(d.embeddings)-1) | dSum + toFloat(d.embeddings[i])^2)) as dNorm
WITH e, d, dotProduct / (eNorm * dNorm) as cosineSimilarity
WHERE cosineSimilarity > 0.85
MERGE (e)-[r:RELATES_TO]->(d)
SET r.cosineSimilarity = cosineSimilarity
RETURN ID(e), e.name, ID(d), d.full_text, r.cosineSimilarity
ORDER BY cosineSimilarity DESC
'''
```

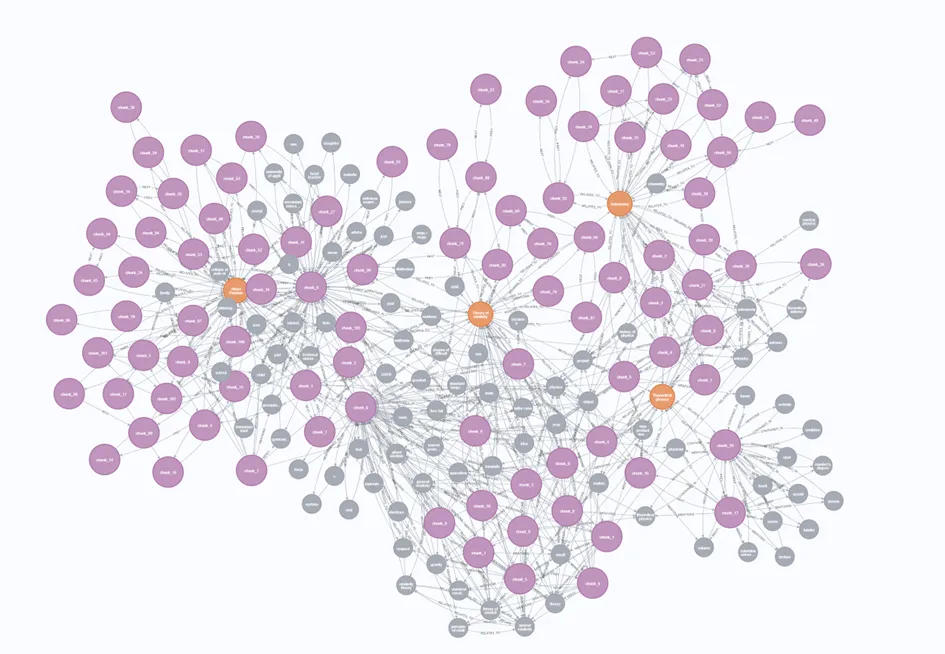

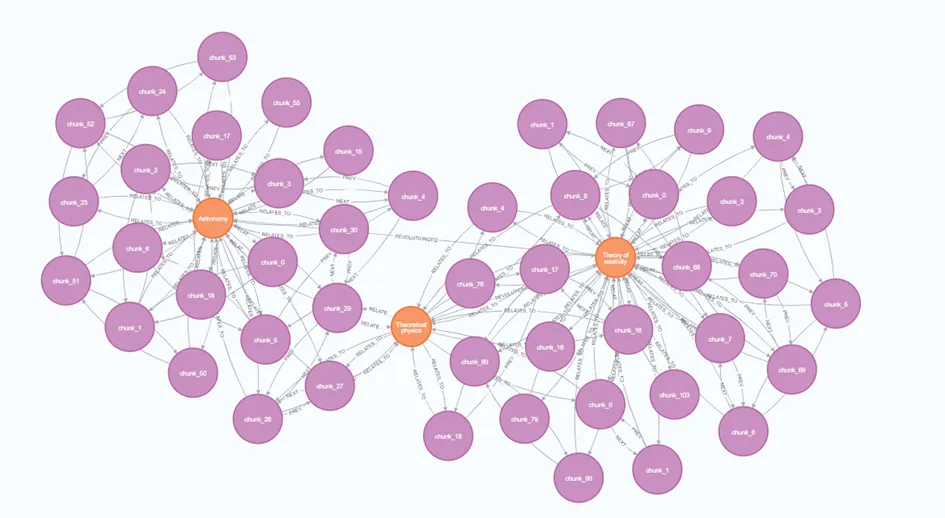

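And so on: connect everything to everything in whatever way suits your preferences or makes the most sense for your use case. Once your database is fully interconnected, it should look something like this: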

Figure 4

Retrieval

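I have built several indexes: three vector indexes, one for the nodes of each layer, plus text (keyword) indexes across all layers. These six indexes open up many possibilities for designing retrieval Cypher queries, and I will demonstrate a few of them. Essentially, you can combine everything with the UNION keyword and then decide, based on retrieval metrics or human judgment, which approach works best in your case.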
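Cypher's UNION removes only rows that are identical in every column, so a node returned by two indexes with different scores survives twice. If you post-process the combined results in Python instead, a minimal sketch might keep the best score per node and sort. The row shape (`id`, `score`, `source`) and the helper name `merge_results` are assumptions; also note that vector and full-text scores are on different scales, so comparing them directly is itself a simplification.

```python
def merge_results(*result_lists, top_k=5):
    """Combine ranked result lists, keeping each node once with its highest score."""
    best = {}
    for results in result_lists:
        for row in results:
            current = best.get(row["id"])
            if current is None or row["score"] > current["score"]:
                best[row["id"]] = row
    merged = sorted(best.values(), key=lambda row: row["score"], reverse=True)
    return merged[:top_k]


# Hypothetical rows from a vector index and a full-text index
vector_hits = [
    {"id": 11, "score": 0.91, "source": "vector"},
    {"id": 42, "score": 0.87, "source": "vector"},
]
keyword_hits = [
    {"id": 42, "score": 0.95, "source": "fulltext"},
    {"id": 7, "score": 0.60, "source": "fulltext"},
]
for row in merge_results(vector_hits, keyword_hits, top_k=3):
    print(row)
```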

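The results are roughly what I wanted: they highlight the three fields mentioned in the main sentence, namely the theory of relativity, theoretical physics, and astronomy. Let's see what ChatGPT returns when given the top three retrieved documents.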

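```python
from IPython.display import Markdown

question = "research fields of Albert Einstein"

# `df` holds the retrieval results; `completions_4` is a chat-completion wrapper (not shown).
# The text of the top three retrieved documents is sent as context.
res = completions_4.generate(
    str(list(df.iloc[:3]['document_text'])),
    f"Take the context sent to you and answer on {question}",
)

Markdown(res)
```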
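Passing `str(list(...))` hands the model a Python list literal, brackets and quotes included. A slightly cleaner option is to join the top documents into a numbered plain-text context block. The sketch below assumes the retrieved texts are already in a plain Python list (for example `list(df.iloc[:3]['document_text'])`); `build_context` is a hypothetical helper, and the prompt wording is only an example.

```python
def build_context(documents, max_docs=3):
    """Join the top retrieved documents into a numbered, plain-text context block."""
    parts = [f"[{i}] {doc.strip()}" for i, doc in enumerate(documents[:max_docs], start=1)]
    return "\n\n".join(parts)


# Hypothetical retrieved texts, already ordered by relevance
docs = [
    "Einstein developed special relativity in 1905.",
    "General relativity was published in 1915.",
    "Relativity transformed theoretical physics and astronomy.",
    "This fourth document is ignored.",
]
question = "research fields of Albert Einstein"
context = build_context(docs)
prompt = f"Use only the context below to answer: {question}\n\n{context}"
print(prompt)
```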

Here is what we get:

Albert Einstein made significant contributions to several research fields within physics, particularly in theoretical physics. Here are some of the key areas he worked on:

1. Special Relativity: Einstein developed the theory of special relativity in 1905, which revolutionized the understanding of space and time. This theory introduced the famous equation (E=mc^2), which describes the equivalence of mass and energy.

2. General Relativity: In 1915, Einstein published the theory of general relativity, which extended the principles of special relativity to include gravity. This theory describes gravity not as a force, but as a curvature of spacetime caused by mass and energy.

3. Photoelectric Effect: Einstein explained the photoelectric effect in 1905, demonstrating that light can be thought of as quanta of energy (photons). This work provided crucial support for the quantum theory and earned him the Nobel Prize in Physics in 1921.

4. Brownian Motion: Einstein's work on Brownian motion provided empirical evidence for the existence of atoms and molecules, supporting the atomic theory of matter.

5. Quantum Theory: Although Einstein had reservations about some aspects of quantum mechanics, he made significant contributions to its development, including the concept of wave-particle duality and the Einstein-Podolsky-Rosen paradox, which questioned the completeness of quantum mechanics.

6. Cosmology: Einstein's equations of general relativity have been fundamental in the field of cosmology. He initially introduced the cosmological constant to allow for a static universe, but later discarded it after the discovery of the expanding universe.

7. Unified Field Theory: In his later years, Einstein worked on developing a unified field theory, an attempt to unify the fundamental forces of physics into a single theoretical framework. Although he did not succeed, his efforts paved the way for future research in theoretical physics.

Einstein's work has had a profound and lasting impact on many areas of physics, influencing both theoretical and experimental research.

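Of course, Albert Einstein worked on many things, and ChatGPT would know about him even without my retrieved data. But this answer is a bit too general, and I don't think the retrieval is well optimized in this case. Note that this time the database was built slightly differently from last time: its chunks are shorter.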

Now let's try retrieval with a combination of all six indexes in the database: three vector indexes and three text-based indexes:

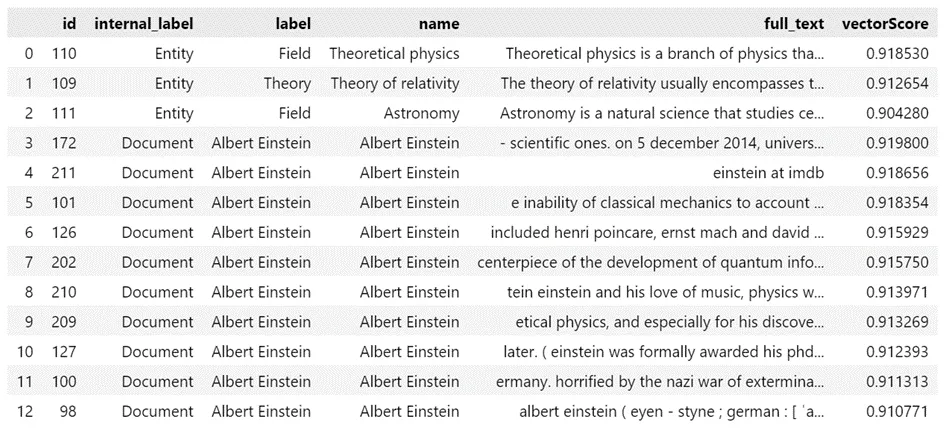

At first glance, this result looks better. Passing the top three items to ChatGPT again, we get:

Albert Einstein was a German-born theoretical physicist who made groundbreaking contributions to several fields of physics. His most notable research fields include:

1. Theory of Relativity:
   - Special Relativity: Introduced in 1905, this theory revolutionized the understanding of space and time. It includes the famous mass-energy equivalence formula ( E = mc^2 ), which has been called "the world's most famous equation."
   - General Relativity: Published in 1915, this theory extended the principles of special relativity to include gravity, providing a new description of gravitation as the curvature of spacetime caused by mass and energy.

2. Quantum Mechanics:
   - Einstein made significant contributions to the early development of quantum theory. His work on the photoelectric effect, for which he received the 1921 Nobel Prize in Physics, was pivotal in establishing the quantum nature of light.

3. Photoelectric Effect:
   - Einstein's explanation of the photoelectric effect demonstrated that light could be understood as quanta of energy (photons), which was a crucial step in the development of quantum mechanics.

Einstein's work laid the foundation for much of modern physics, influencing a wide range of subsequent research and technological advancements.

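This result is more specific, but it still falls short of my goal: it doesn't even mention cosmology or astronomy. Let's go a step further and take full advantage of the graph as a vector database by using its underlying relationships and data connections.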

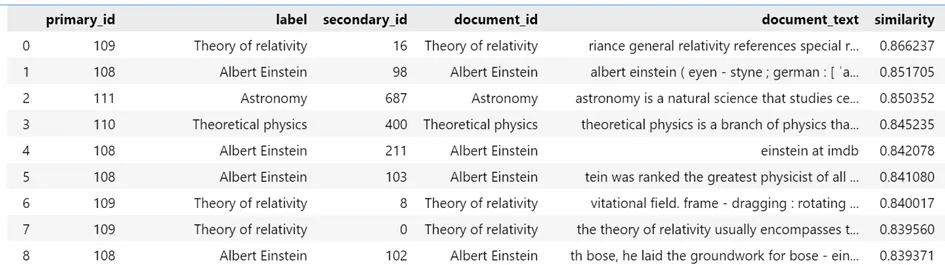

Putting the third layer to work

Let's use the "anchors" in the third layer to capture the main topics of the query and retrieve the most relevant information.

spaCy's Entity Linker offers a useful feature that groups the extracted entities into collections of super-entities. Here's how it works:

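Interestingly, spaCy identified "Astronomy" as a song rather than as the actual branch of science. Then again, when you build a production system with large amounts of data and extensive automation, completely error-free performance is hard to achieve. The goal is to keep errors to a minimum.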

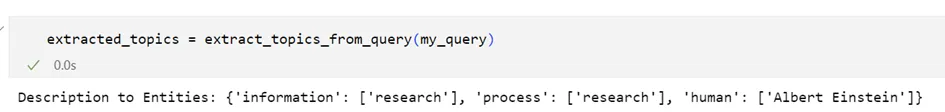

Let's see what our query returns:

The key piece of information is "research", and I agree that the focus should be on research topics rather than on the person.
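The Cypher query that follows starts from a dictionary mapping super-entity labels to the entity names found in the user query. Here is a minimal sketch of how such a mapping could be assembled from (entity name, super-entity label) pairs. The pairs are written by hand to mirror that dictionary rather than produced by spaCy, and `group_by_super_entity` is a hypothetical helper; in practice the pairs would come from the entity linker's output for the query, but treat that wiring as an assumption.

```python
from collections import defaultdict


def group_by_super_entity(pairs):
    """Turn (entity name, super-entity label) pairs into {super-entity label: [unique entity names]}."""
    grouped = defaultdict(list)
    for entity_name, super_label in pairs:
        if entity_name not in grouped[super_label]:
            grouped[super_label].append(entity_name)
    return dict(grouped)


# Hand-written pairs mirroring the example query "research fields of Albert Einstein"
pairs = [
    ("research", "information"),
    ("research", "process"),
    ("Albert Einstein", "human"),
    ("research", "information"),  # repeated mentions are collapsed
]
description_to_entities = group_by_super_entity(pairs)
print(description_to_entities)
```

Instead of hard-coding it, the resulting dict could be passed to the Cypher query as a map parameter.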

现在,让我们使用 spaCy 识别出的标签来提取一个子图:

Cypher 查询的结果如下:

// Define the dictionary

WITH {

information: ["research"],

process: ["research"],

human: ["Albert Einstein"]

} AS description_to_entities

// Unwind the dictionary to get all entity names

UNWIND keys(description_to_entities) AS description

UNWIND description_to_entities[description] AS entity_name

// Match nodes with the given names

MATCH (n)

WHERE (n:Entity OR n:SpacyEntities) AND n.name IN description_to_entities[description]

// Collect all matched nodes

WITH collect(n) AS matchedNodes

// Find the shortest path subgraph between all nodes

CALL apoc.path.subgraphAll(matchedNodes, {

relationshipFilter: ">",

minLevel: 1,

maxLevel: 5

})

YIELD nodes AS subgraphNodes, relationships AS subgraphRelationships

// Filter to include only Entity and Document nodes

WITH [node IN subgraphNodes WHERE node:Entity OR node:Document] AS filteredNodes, subgraphRelationships

// Return the filtered nodes and relationships in the subgraph

UNWIND filteredNodes AS node

RETURN node

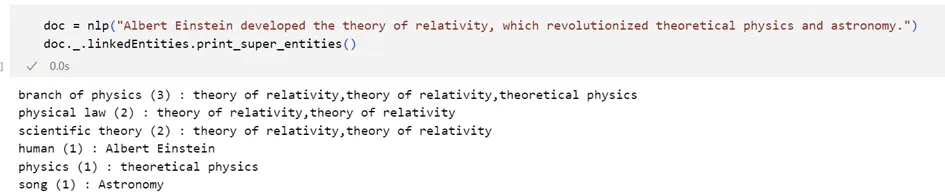

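Take a closer look at what I did. First, I matched the first- and third-layer entities whose names appear in spaCy's super-entity dictionary. Then I used `apoc.path.subgraphAll` to collect the subgraph reachable from these entities (up to five hops along outgoing relationships). Finally, I told the query: "take only this subgraph and output only first- and second-layer nodes, so that from the last layer only the document chunks connected to first-layer entities are included."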
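To make the expansion-and-filter step concrete, here is a rough in-memory analogue: a breadth-first traversal along outgoing edges from the matched seed nodes, limited to five hops, followed by a filter that keeps only `Entity` and `Document` nodes. It approximates what `apoc.path.subgraphAll` returns rather than reproducing APOC exactly (for instance, it keeps the seed nodes and does not model `minLevel`), and the node IDs, labels, and edges are invented for illustration.

```python
from collections import deque


def reachable_subgraph(seeds, out_edges, max_level=5):
    """Collect nodes reachable from `seeds` along outgoing edges within `max_level` hops (seeds included)."""
    depth = {seed: 0 for seed in seeds}
    queue = deque(seeds)
    while queue:
        node = queue.popleft()
        if depth[node] == max_level:
            continue  # do not expand beyond the hop limit
        for neighbor in out_edges.get(node, []):
            if neighbor not in depth:
                depth[neighbor] = depth[node] + 1
                queue.append(neighbor)
    return set(depth)


# Hypothetical graph: node id -> label, and outgoing edges
labels = {
    "einstein": "Entity", "relativity": "Entity", "astronomy": "Entity",
    "research": "SpacyEntities", "doc-1": "Document", "doc-2": "Document", "doc-3": "Document",
}
out_edges = {
    "einstein": ["relativity"],
    "relativity": ["astronomy", "doc-1"],
    "research": ["doc-2"],
    "astronomy": ["doc-3"],
}
nodes = reachable_subgraph(["einstein", "research"], out_edges)
filtered = sorted(n for n in nodes if labels[n] in {"Entity", "Document"})
print(filtered)
```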

Figure 5

Now let's run the vector search on this part of the Einstein graph only!

Using a simple combination of the layer 1 and layer 2 vector indexes:

```cypher
// $filtered_nodes: IDs of the nodes in the filtered subgraph
// $user_query_emb: embedding of the user question

// Part 1: FEL1 entities. Fetch the 100 nearest nodes, then keep only those inside the subgraph
WITH $filtered_nodes AS filtered_nodes, $user_query_emb AS user_query_emb
CALL db.index.vector.queryNodes('test_index_entity', 100, user_query_emb)
YIELD node AS vectorNode, score as vectorScore
WITH vectorNode, vectorScore, filtered_nodes
WHERE ID(vectorNode) IN filtered_nodes
RETURN ID(vectorNode) AS id, labels(vectorNode)[0] AS internal_label, vectorNode.label AS label, vectorNode.name AS name, vectorNode.description AS full_text, vectorScore
ORDER BY vectorScore DESC
LIMIT 10

UNION

// Part 2: DL2 chunks, returned with the same column names so UNION can combine them
WITH $filtered_nodes AS filtered_nodes, $user_query_emb AS user_query_emb
CALL db.index.vector.queryNodes('test_index_document', 100, user_query_emb)
YIELD node AS vectorNode, score as vectorScore
WITH vectorNode, vectorScore, filtered_nodes
WHERE ID(vectorNode) IN filtered_nodes
RETURN ID(vectorNode) AS id, labels(vectorNode)[0] AS internal_label, vectorNode.docID AS label, vectorNode.docID AS name, vectorNode.full_text AS full_text, vectorScore
ORDER BY vectorScore DESC
LIMIT 10
```

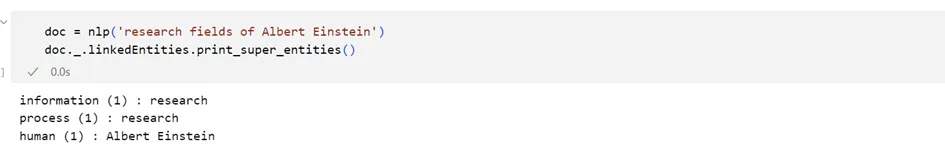

I get:

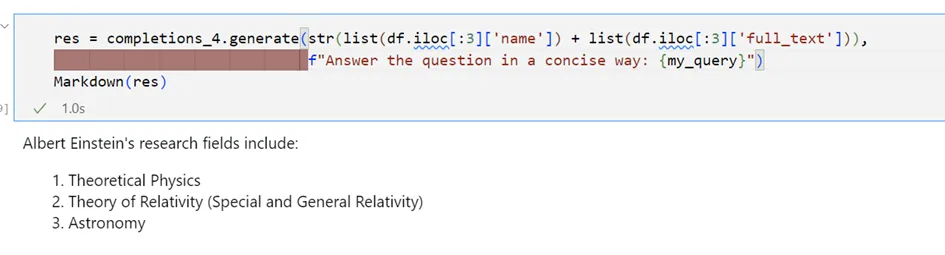

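Sending this to ChatGPT, I received:

I didn't do any reranking here, and the top three passages were actually just first-layer entity nodes, so this answer is not surprising.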

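Of course, this technique is not suitable for every use case. How you chunk the documents, extract entities, and write the retrieval queries all have a significant impact on the final result. Still, the main purpose of this article is to outline how to build powerful retrieval on a graph using only Python and Cypher.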

Summary

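In this article, I presented a three-layer architecture for building a knowledge graph that can serve as a vector database and is designed to enhance retrieval in retrieval-augmented generation (RAG) systems. The first layer, the Fixed Entity Layer (FEL1), is an ontology built by domain experts or derived from ground-truth documents. The second layer, the Document Layer (DL2), consists of document chunks connected to the fixed entities through cosine similarity. The third layer, the spaCy Entity Layer (SEL3), contains named entities extracted from the document chunks; it further enriches the graph structure and can improve retrieval accuracy.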