Exploring Multi-LLM Agents: More Efficient Parallel Processing and Automatic Summarization

Published by alex on September 29, 2024

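At work, we often need to compare how several large language models (LLMs) respond to the same request. Sending each request separately is time-consuming, so we explored an approach based on LangGraph that collects responses from multiple models with a single query and then uses an LLM to summarize the results automatically.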

Implementation

We implemented the parallel processing by following the official LangGraph documentation, using Google Colab as the environment.

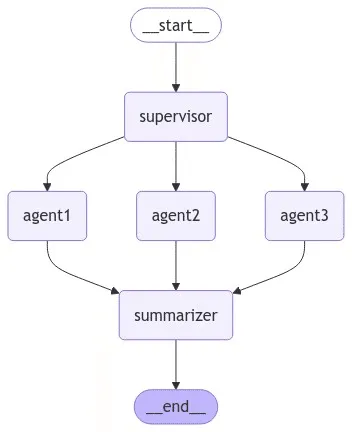

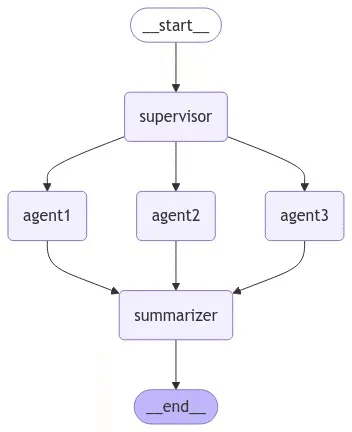

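The graph we build has the following structure. We start with the simplest configuration:

- The supervisor oversees the whole flow and hands the task to each agent.

- Each agent carries out its assigned task and sends the result to the summarizer.

- The summarizer combines the results from all agents and produces the final output.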
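The flow described above is a fan-out/fan-in pattern. As a rough illustration that does not depend on LangGraph, the sketch below reproduces it with Python's standard `concurrent.futures`. The agent functions are hypothetical stand-ins for real LLM calls, and `supervisor`, `summarizer`, and `run_pipeline` are names chosen only for this example.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def supervisor(task: str) -> str:
    """Prepare the task that every agent will receive."""
    return f"Iteration 1: {task}"


def summarizer(responses: List[str]) -> str:
    """Combine the agents' answers (a real version would call an LLM here)."""
    return "Summary:\n" + "\n".join(responses)


def run_pipeline(task: str, agents: Dict[str, Callable[[str], str]]) -> str:
    prepared = supervisor(task)
    # Fan out: each agent handles the same task in its own thread.
    with ThreadPoolExecutor(max_workers=max(1, len(agents))) as pool:
        futures = {name: pool.submit(fn, prepared) for name, fn in agents.items()}
        # Fan in: collect results in a fixed order, regardless of completion order.
        responses = [f"{name}'s response: {futures[name].result()}" for name in agents]
    return summarizer(responses)


# Hypothetical stand-in agents; replace them with real model calls.
demo_agents = {
    "Agent1": lambda t: t.upper(),
    "Agent2": lambda t: t.lower(),
    "Agent3": lambda t: t[::-1],
}

if __name__ == "__main__":
    print(run_pipeline("Say hello", demo_agents))
```

LangGraph takes care of the same scheduling once the edges are declared, which is why the rest of this post only has to describe the graph.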

Installing the required packages

```python
%pip install -qU langchain-google-genai
%pip install -qU langchain-anthropic
%pip install -qU langchain-core
%pip install -qU langchain-openai
%pip install -qU tavily-python
%pip install -qU langchain_community
%pip install -qU langgraph
%pip install -qU duckduckgo-search
```

Setting the API keys

We set the API keys for each provider (OpenAI, Anthropic, Google, and LangSmith) as environment variables. They are already stored in Colab's "Secrets".

```python
import os

from google.colab import userdata

# Copy each key from Colab Secrets into the environment for the LangChain clients.
os.environ["ANTHROPIC_API_KEY"] = userdata.get("ANTHROPIC_API_KEY")
os.environ["GOOGLE_API_KEY"] = userdata.get("GOOGLE_API_KEY")
os.environ["OPENAI_API_KEY"] = userdata.get("OPENAI_API_KEY")

# LangSmith tracing settings.
os.environ["LANGCHAIN_TRACING_V2"] = userdata.get("LANGCHAIN_TRACING_V2")
os.environ["LANGCHAIN_API_KEY"] = userdata.get("LANGCHAIN_API_KEY")
```
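`google.colab.userdata` only exists inside Colab. When running elsewhere, one option is to read the same variables from the process environment and fail early if any are missing. The helper below is a sketch under that assumption; `find_missing_keys` and `REQUIRED_KEYS` are hypothetical names, not part of any library.

```python
import os
from typing import Iterable, List, Mapping, Optional

# The three provider keys used in this post.
REQUIRED_KEYS = ("ANTHROPIC_API_KEY", "GOOGLE_API_KEY", "OPENAI_API_KEY")


def find_missing_keys(
    required: Iterable[str] = REQUIRED_KEYS,
    env: Optional[Mapping[str, str]] = None,
) -> List[str]:
    """Return the names of required variables that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in required if not env.get(name)]


if __name__ == "__main__":
    missing = find_missing_keys()
    if missing:
        print("Missing API keys:", ", ".join(missing))
```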

Defining the required classes and types

```python
import operator
from typing import Annotated, Any, List

from typing_extensions import TypedDict
from langgraph.graph import StateGraph, START, END
from langchain_core.messages import HumanMessage, AIMessage
from langchain_anthropic import ChatAnthropic
from langchain_google_genai import ChatGoogleGenerativeAI
from langchain_openai import ChatOpenAI


class State(TypedDict):
    # operator.add makes updates to this key append rather than overwrite,
    # so parallel nodes can each add their own message.
    messages: Annotated[List[str], operator.add]
    iteration: int


class Node:
    """Base class for graph nodes; subclasses implement process()."""

    def __init__(self, name: str):
        self.name = name

    def process(self, state: State) -> dict:
        raise NotImplementedError

    def __call__(self, state: State) -> dict:
        result = self.process(state)
        print(f"{self.name}: Processing complete")
        return result
```
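The `Annotated[List[str], operator.add]` annotation on `messages` is what lets parallel branches write to the same key: instead of overwriting it, LangGraph combines each node's update with the existing value using the attached reducer. The following is a simplified, framework-free imitation of that merge step, not LangGraph's actual implementation; `apply_update` is a hypothetical helper and the sample replies are made up.

```python
import operator
from typing import Annotated, Any, Dict, List, TypedDict, get_type_hints


class State(TypedDict):
    messages: Annotated[List[str], operator.add]
    iteration: int


def apply_update(state: Dict[str, Any], update: Dict[str, Any]) -> Dict[str, Any]:
    """Merge a node's partial update into the state, honoring reducers."""
    hints = get_type_hints(State, include_extras=True)
    merged = dict(state)
    for key, value in update.items():
        metadata = getattr(hints.get(key), "__metadata__", ())
        if metadata and key in merged:
            # A reducer is attached: combine old and new values (list concatenation here).
            merged[key] = metadata[0](merged[key], value)
        else:
            # No reducer: the new value simply replaces the old one.
            merged[key] = value
    return merged


state = {"messages": ["task"], "iteration": 0}
# Three agents finishing in the same step each contribute one message.
for reply in (["claude says A"], ["gemini says B"], ["gpt says A"]):
    state = apply_update(state, {"messages": reply})
state = apply_update(state, {"iteration": 1})
print(state["messages"])
```

Without a reducer on `messages`, three agents writing to the same key in one step would conflict, which is why the annotation matters for this design.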

Implementing each node

Supervisor node

Manages the task and handles initialization.

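```python
class SupervisorNode(Node):
    def __init__(self):
        super().__init__("Supervisor")

    def process(self, state: State) -> dict:
        # Compute the new counter without mutating the incoming state in place.
        iteration = state.get("iteration", 0) + 1
        task = state["messages"][0] if state["messages"] else "No task set."
        # Return the counter as well so the update is written back to the graph state.
        return {"messages": [f"Iteration {iteration}: {task}"], "iteration": iteration}
```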

Agent node

Runs the task on one LLM.

```python
class AgentNode(Node):
    def __init__(self, agent_name: str, llm):
        super().__init__(agent_name)
        self.llm = llm

    def process(self, state: State) -> dict:
        # The most recent message is the task prepared by the supervisor.
        task = state["messages"][-1]
        response = self.llm.invoke(
            [HumanMessage(content=f"You are {self.name}. Please execute the following task: {task}")]
        )
        return {"messages": [f"{self.name}'s response: {response.content}"]}
```

Summarizer node

Summarizes the responses from all agents.

```python
class SummarizerNode(Node):
    def __init__(self, llm):
        super().__init__("Summarizer")
        self.llm = llm

    def process(self, state: State) -> dict:
        responses = state["messages"][1:]  # Skip the initial task
        summary_request = (
            "Please summarize the responses from each agent so far.\n"
            + "\n".join(responses)
        )
        response = self.llm.invoke([HumanMessage(content=summary_request)])
        return {"messages": [f"Summary: {response.content}"]}
```
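Because each node only needs an object with an `invoke` method that returns something with a `content` attribute, the node logic can be exercised without API keys. The sketch below uses a hypothetical `FakeLLM` and a standalone `summarize` function that mirrors the prompt construction in `SummarizerNode`; as a simplifying assumption it passes a plain string instead of a `HumanMessage` so that it has no dependencies.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class FakeResponse:
    content: str


@dataclass
class FakeLLM:
    """Hypothetical stand-in for a chat model; records prompts instead of calling an API."""

    prompts: List[Any] = field(default_factory=list)

    def invoke(self, messages: List[Any]) -> FakeResponse:
        self.prompts.append(messages)
        return FakeResponse(content=f"received {len(messages)} message(s)")


def summarize(state: Dict[str, Any], llm: Any) -> Dict[str, List[str]]:
    """Mirror of SummarizerNode.process, using a plain-string prompt."""
    responses = state["messages"][1:]  # skip the initial task
    request = (
        "Please summarize the responses from each agent so far.\n"
        + "\n".join(responses)
    )
    response = llm.invoke([request])
    return {"messages": [f"Summary: {response.content}"]}


llm = FakeLLM()
state = {"messages": ["task", "Agent1's response: A", "Agent2's response: B"]}
update = summarize(state, llm)
print(update["messages"][0])
print(llm.prompts[0][0])
```

Inspecting `llm.prompts` shows exactly what the summarizer would send, which makes it easy to confirm that the initial task is excluded from the prompt.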

Initializing the LLMs

```python
def create_llm(model_class, model_name, temperature=0.7):
    # `model` is the keyword accepted by all three chat model classes used here.
    return model_class(model=model_name, temperature=temperature)


# High-performance models
claude = create_llm(ChatAnthropic, "claude-3-5-sonnet-20240620")
gemini = create_llm(ChatGoogleGenerativeAI, "gemini-1.5-pro-002")
openai = create_llm(ChatOpenAI, "gpt-4o")

# Lightweight models
# claude = create_llm(ChatAnthropic, "claude-3-haiku-20240307")
# gemini = create_llm(ChatGoogleGenerativeAI, "gemini-1.5-flash-latest")
# openai = create_llm(ChatOpenAI, "gpt-4o-mini")
```

Building the graph

Defining and running the task

```python
builder = StateGraph(State)

nodes = {
    "supervisor": SupervisorNode(),
    "agent1": AgentNode("Agent1 (claude)", claude),
    "agent2": AgentNode("Agent2 (gemini)", gemini),
    "agent3": AgentNode("Agent3 (gpt)", openai),
    "summarizer": SummarizerNode(openai),
}

for name, node in nodes.items():
    builder.add_node(name, node)

builder.add_edge(START, "supervisor")

# Fan out from the supervisor to every agent, then fan in to the summarizer.
for agent in ["agent1", "agent2", "agent3"]:
    builder.add_edge("supervisor", agent)
    builder.add_edge(agent, "summarizer")

builder.add_edge("summarizer", END)

graph = builder.compile()
```
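The post does not show the call that actually runs the graph. With a compiled LangGraph graph this is typically done through its `invoke` method, passing an initial state whose `messages` list holds the task. The wrapper below is a sketch under that assumption; `run_task` and the `EchoGraph` stand-in (used only so the snippet runs without LangGraph installed) are hypothetical names.

```python
from typing import Any, Dict, List


def run_task(graph: Any, task: str) -> str:
    """Invoke a compiled graph with one task and return the final message."""
    initial_state: Dict[str, Any] = {"messages": [task], "iteration": 0}
    final_state = graph.invoke(initial_state)
    return final_state["messages"][-1]


class EchoGraph:
    """Hypothetical stand-in exposing the same invoke(state) shape as a compiled graph."""

    def invoke(self, state: Dict[str, Any]) -> Dict[str, Any]:
        messages: List[str] = list(state["messages"])
        messages.append(f"Summary: echo of {messages[0]!r}")
        return {**state, "messages": messages}


print(run_task(EchoGraph(), "example task"))
```

In the notebook, passing the compiled `graph` instead of `EchoGraph()` should return the summarizer's message, since the summarizer is the last node to write to `messages`.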

Results and explanation

Running the graph produces output similar to the following:

```text
Supervisor: Processing complete
Agent2 (gemini): Processing complete
Agent3 (gpt): Processing complete
Agent1 (claude): Processing complete
Summarizer: Processing complete
Summary: Here's a summary of each agent's response:

- **Agent1 (claude)**: The marble is inside the refrigerator. They explain that even when the cup was turned upside down, the marble remained inside, and when the cup was moved to the refrigerator, the marble went with it.
- **Agent2 (gemini)**: The marble is on the table. They explain that when the cup was turned upside down, the marble fell onto the table, and it remained there even after the cup was moved to the refrigerator.
- **Agent3 (gpt)**: The marble is inside the refrigerator. Their reasoning is similar to Agent1's, stating that the marble stayed in the cup when it was turned upside down and was then moved to the refrigerator with the cup.

In summary, Agent1 and Agent3 conclude that "the marble is inside the refrigerator," while Agent2 states that "the marble is on the table."
```

Inspecting the graph definition

Visualize the graph structure:

```python
from IPython.display import Image, display

display(Image(graph.get_graph().draw_mermaid_png()))
```

This renders a diagram of the graph structure.

Source: https://medium.com/@astropomeai/multi-ai-agent-parallel-processing-and-automatic-summarization-using-multiple-llms-ad80f410ae21
