Chunking and RAG: Beyond the Basics with Qdrant and Reranking

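In retrieval-augmented generation (RAG) workflows, chunking plays a critical role in optimizing data ingestion by splitting large documents into manageable pieces. Combined with Qdrant's hybrid vector search and advanced reranking methods, it helps retrieval return more relevant results for each query. This article explains why chunking matters and how strategic post-processing, including hybrid search and reranking, improves the efficiency of a RAG pipeline. Using an architecture built on LlamaIndex, Qdrant, Ollama, and OpenAI, we show how different ingestion techniques can be evaluated and their results visualized to find better configurations.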

Retrieval-augmented generation is not just a method or a process; it is a field of extensive, ongoing research and development.

What is chunking?

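In RAG, chunking is a key preprocessing step that breaks large documents or texts into smaller, more manageable pieces of information. It is essential to the efficiency and effectiveness of both the retrieval and the generation tasks in a RAG system.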

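The main purpose of chunking is to create smaller units of text that are easier to index, search, and retrieve. By splitting long documents into smaller chunks, a RAG system can locate relevant information more precisely when it answers a query or generates content. This fine-grained approach makes it possible to match a user query with the most relevant parts of the source material.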

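Chunking can be done in several ways, depending on the nature of the content and the requirements of the RAG system. Common approaches split text by a fixed number of tokens, by sentences, or by paragraphs. More advanced techniques take semantic boundaries into account so that each chunk keeps a coherent meaning and context.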

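Chunk size is an important consideration in a RAG system. Chunks that are too large may contain irrelevant information, which dilutes the relevance of the retrieved content. Conversely, chunks that are too small may lack enough context, making it hard for the system to understand and use the information. Finding the right balance is key to good performance.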
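A minimal sketch of the simplest approach, fixed-size chunking with overlap, makes the size trade-off concrete. It treats whitespace-separated words as tokens, which is an assumption for illustration only (tokenizer-based splitters such as LlamaIndex's SentenceSplitter count model tokens and try to respect sentence boundaries); `chunk_tokens` is a hypothetical helper.

```python
from typing import List


def chunk_tokens(text: str, chunk_size: int, chunk_overlap: int) -> List[str]:
    """Split text into windows of `chunk_size` words; neighbouring windows share `chunk_overlap` words."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    tokens = text.split()  # whitespace "tokens": a stand-in for a real tokenizer
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(" ".join(tokens[start:start + chunk_size]))
        if start + chunk_size >= len(tokens):
            break  # this window already reaches the end of the text
    return chunks


text = " ".join(f"w{i}" for i in range(10))
print(chunk_tokens(text, chunk_size=4, chunk_overlap=1))
# ['w0 w1 w2 w3', 'w3 w4 w5 w6', 'w6 w7 w8 w9']
```

A larger `chunk_size` gives fewer, broader chunks; the overlap repeats a few tokens at each boundary so that context spanning a boundary is partly preserved in both neighbouring chunks.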

Which chunking strategies are used?

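- Semantic chunking: This strategy divides text according to meaning rather than arbitrary length. The goal is to create chunks that each contain a coherent idea or topic. Semantic chunking typically uses natural language processing (NLP) techniques to identify conceptual boundaries in the text.

- Topic node parser: This approach builds a hierarchical structure of the topics in a document. It identifies the main topics and subtopics and then chunks the text accordingly. It is particularly useful for preserving the overall structure and context of complex documents.

- Semantic double-merging chunking: This is a two-step process. First, the text is split into small units (sentences) that are grouped into initial chunks. Then, adjacent chunks are merged based on their semantic similarity. The aim is to produce larger, semantically coherent chunks while keeping chunk sizes reasonably uniform. This implementation uses spaCy to compute semantic similarity.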
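The strategies above differ mainly in how they decide where a chunk ends. As a rough illustration of the semantic variants, the sketch below splits a list of sentences wherever the distance between adjacent sentence embeddings exceeds a percentile threshold (the idea behind the `breakpoint_percentile_threshold` parameter of SemanticSplitterNodeParser), then runs a simplified second pass that merges adjacent chunks whose similarity exceeds a threshold, in the spirit of double merging. The bag-of-words `embed` function is a hypothetical stand-in for a real embedding model or spaCy vectors, and this is not LlamaIndex's actual implementation.

```python
import math
from collections import Counter
from typing import List


def embed(sentence: str) -> Counter:
    """Hypothetical stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(word.strip(".,;:!?").lower() for word in sentence.split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[word] for word, count in a.items())  # missing words count as 0
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def percentile(values: List[float], pct: float) -> float:
    """Percentile with linear interpolation (numpy's default method)."""
    ordered = sorted(values)
    pos = (len(ordered) - 1) * pct / 100
    lower, upper = math.floor(pos), math.ceil(pos)
    return ordered[lower] + (ordered[upper] - ordered[lower]) * (pos - lower)


def semantic_split(sentences: List[str], breakpoint_percentile: float = 95) -> List[List[str]]:
    """Start a new chunk where the distance between adjacent sentences exceeds the percentile threshold."""
    if len(sentences) < 2:
        return [list(sentences)] if sentences else []
    vectors = [embed(s) for s in sentences]
    distances = [1 - cosine(vectors[i], vectors[i + 1]) for i in range(len(vectors) - 1)]
    threshold = percentile(distances, breakpoint_percentile)
    chunks, current = [], [sentences[0]]
    for sentence, distance in zip(sentences[1:], distances):
        if distance > threshold:  # semantic breakpoint: close the current chunk
            chunks.append(current)
            current = []
        current.append(sentence)
    chunks.append(current)
    return chunks


def merge_similar(chunks: List[List[str]], merging_threshold: float = 0.5) -> List[List[str]]:
    """Second pass: merge a chunk into its predecessor when their texts are similar enough."""
    if not chunks:
        return []
    merged = [list(chunks[0])]
    for chunk in chunks[1:]:
        if cosine(embed(" ".join(merged[-1])), embed(" ".join(chunk))) > merging_threshold:
            merged[-1].extend(chunk)
        else:
            merged.append(list(chunk))
    return merged


sentences = [
    "Qdrant stores dense vectors.",
    "Qdrant also stores sparse vectors.",
    "Pasta needs boiling water.",
    "Boiling water cooks pasta quickly.",
]
print(merge_similar(semantic_split(sentences)))  # two chunks: the Qdrant sentences, then the pasta sentences
```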

Architecture:

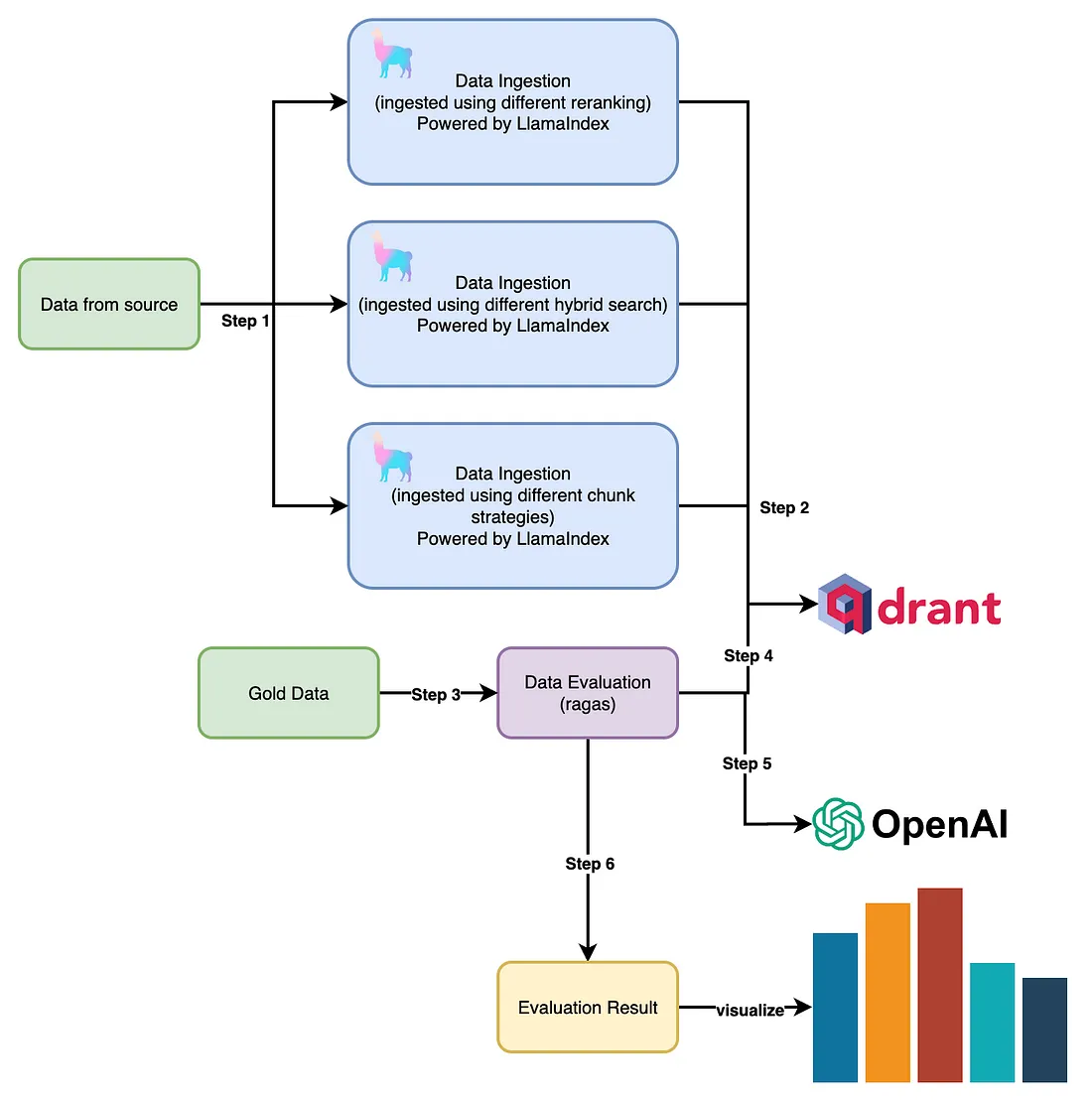

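The architecture gives an overview of the experiment, which explores several chunking strategies and how effective they are when combined with advanced techniques such as hybrid search and reranking. The overall goal is to evaluate the retrieval quality and relevance of the RAG pipeline under different chunking conditions.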

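In steps 1 and 2, data is ingested using different chunking strategies: semantic similarity (semantic chunking), topic node parsing, and semantic double merging. Each strategy is evaluated on its own and in combination with Qdrant's hybrid search (which uses both sparse and dense vectors) and with rerankers and reorderers (LLMRerank, SentenceTransformerRerank, and LongContextReorder). This produces multiple iterations, or experiments, and gives a framework for comparing how each chunking strategy, alone or paired with these mechanisms, affects retrieval.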
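The comparison framework amounts to a grid of experiments: every chunking strategy crossed with every retrieval variant. The sketch below enumerates that grid; the experiment names and the dictionary layout are hypothetical (the results file later in this article uses slightly different names), while the post-processing identifiers match the ones used in the indexer code.

```python
from itertools import product
from typing import Dict, List, Optional, Tuple

CHUNKING_METHODS = ["semantic_chunking", "topic_node_parser", "semantic_double_merge_chunking"]
# (use hybrid search?, node post-processor); None means plain retrieval without post-processing
RETRIEVAL_VARIANTS: List[Tuple[bool, Optional[str]]] = [
    (False, None),
    (True, None),
    (False, "llm_reranker"),
    (False, "sentence_transformer_rerank"),
    (False, "long_context_reorder"),
]


def build_experiment_grid() -> List[Dict[str, object]]:
    """Cross every chunking strategy with every retrieval variant (hypothetical naming scheme)."""
    grid = []
    for method, (hybrid, post) in product(CHUNKING_METHODS, RETRIEVAL_VARIANTS):
        suffix = "_hybrid" if hybrid else (f"_{post}" if post else "")
        grid.append({"name": method + suffix, "chunking": method, "hybrid": hybrid, "postprocessing": post})
    return grid


for experiment in build_experiment_grid():
    print(experiment["name"])
```

Each grid entry corresponds to one ingestion-plus-evaluation run; keeping the grid in code makes it easy to skip combinations (the results in this article, for instance, cover only LLM reranking among the post-processors).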

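In steps 3 and 4, a gold dataset is prepared with the core fields "question", "ground truth", and "actual context". This dataset is the foundation of the experiment. Each query produces additional data points: the response from the LLM (OpenAI or Ollama) and the context retrieved from the Qdrant vector store. Both the model-generated answer and the context supplied by the vector store are therefore captured for later evaluation.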

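In step 5, each question in the gold dataset is sent to the LLM to obtain a response. An evaluation framework (RAGAS in this experiment) then computes metrics from the retrieval results. The focus is on three main metrics: faithfulness, answer relevancy, and answer correctness. Together they are used to assess the overall performance and reliability of the RAG pipeline under different conditions.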

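Finally, in step 6, the evaluation results are sent to a visualization framework, where the performance metrics are analyzed. This step shows how the different metrics behave across experimental setups and reveals which combinations of chunking, retrieval, and reranking perform best in the RAG pipeline.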

Metrics (from RAGAS):

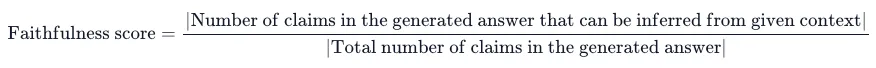

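Faithfulness: This metric measures the factual consistency of the generated answer against the given context. It is computed from the answer and the retrieved context and is scaled to the range (0, 1); higher is better. A generated answer is considered faithful if every claim it makes can be inferred from the given context. To compute it, the set of claims in the generated answer is first identified; each claim is then checked against the given context to determine whether it can be inferred from it. The faithfulness score is:

$$\text{Faithfulness} = \frac{\text{number of claims in the answer that can be inferred from the given context}}{\text{total number of claims in the answer}}$$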

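Answer relevancy: This metric assesses how pertinent the generated answer is to the given prompt. Answers that are incomplete or contain redundant information receive lower scores; higher scores indicate better relevancy. It is computed from the question, the context, and the answer. Answer relevancy is defined as the mean cosine similarity between the original question and a number of artificial questions generated (reverse-engineered) from the answer:

$$\text{Answer relevancy} = \frac{1}{N}\sum_{i=1}^{N} \cos\left(E_{g_i}, E_o\right)$$

where $E_{g_i}$ is the embedding of the $i$-th generated question, $E_o$ is the embedding of the original question, and $N$ is the number of generated questions.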
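To illustrate the answer relevancy formula, the sketch below averages the cosine similarities between the original question's embedding and the embeddings of questions generated from the answer. The vectors are hard-coded toy values (an assumption for illustration); in RAGAS, an LLM generates the questions and an embedding model encodes them.

```python
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity of two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))


def answer_relevancy(original_question: Sequence[float], generated_questions: List[Sequence[float]]) -> float:
    """Mean cosine similarity between E_o (original question) and each E_g_i (generated question)."""
    return sum(cosine(g, original_question) for g in generated_questions) / len(generated_questions)


# Toy 2-D "embeddings": one generated question matches the original exactly, one is orthogonal to it
print(answer_relevancy([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]]))  # 0.5
```

An answer that drifts off topic yields generated questions that point away from the original one, which pulls the average down.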

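Answer correctness: This metric measures the accuracy of the generated answer by comparing it with the ground truth. It relies on the ground truth and the answer and ranges from 0 to 1; a higher score means the generated answer is closer to the ground truth, i.e. more correct. It covers two key aspects: the semantic similarity and the factual similarity between the generated answer and the ground truth. The two are combined with a weighted scheme to produce the answer correctness score. Optionally, a threshold can be used to round the score to a binary value.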
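A worked sketch of that weighted combination follows. It assumes the factual part is an F1 score over claims (true positives: claims present in both the answer and the ground truth; false positives: only in the answer; false negatives: only in the ground truth) and weights of 0.75 for factuality and 0.25 for semantic similarity, which we believe match RAGAS's defaults; treat both as assumptions and check the RAGAS version you use. The semantic similarity is passed in as a number rather than computed from embeddings.

```python
from typing import Optional, Tuple


def factual_f1(tp: int, fp: int, fn: int) -> float:
    """F1 over claims: tp / (tp + 0.5 * (fp + fn))."""
    denom = tp + 0.5 * (fp + fn)
    return tp / denom if denom else 0.0


def answer_correctness(tp: int, fp: int, fn: int, semantic_similarity: float,
                       weights: Tuple[float, float] = (0.75, 0.25),
                       threshold: Optional[float] = None) -> float:
    """Weighted average of factual F1 and semantic similarity (assumed default weights)."""
    w_fact, w_sim = weights
    score = (w_fact * factual_f1(tp, fp, fn) + w_sim * semantic_similarity) / (w_fact + w_sim)
    if threshold is not None:
        return 1.0 if score >= threshold else 0.0  # optional binary rounding
    return score


# 3 shared claims, 1 unsupported claim in the answer, 1 missed ground-truth claim; similarity 0.9
print(answer_correctness(tp=3, fp=1, fn=1, semantic_similarity=0.9))  # approximately 0.7875
```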

Implementation:

We prepared a gold dataset of 20 questions with their ground_truth answers. A sample of the dataset, including the context, is shown below.

```json
[
  {
    "question": "What is the goal of MLOps?",
    "ground_truth": "The goal of MLOps is to facilitate the creation of machine learning products by bridging the gap between development (Dev) and operations (Ops).",
    "context": "MLOps (Machine Learning Operations) is a paradigm, including aspects like best practices, sets of concepts, as well as a development culture when it comes to the end-to-end conceptualization, implementation, monitoring, deployment, and scalability of machine learning products."
  },
  {
    "question": "What are the key principles of MLOps?",
    "ground_truth": "The key principles of MLOps are CI/CD automation, workflow orchestration, reproducibility, versioning of data/model/code, collaboration, continuous ML training and evaluation, ML metadata tracking and logging, continuous monitoring, and feedback loops.",
    "context": "MLOps aims to facilitate the creation of machine learning products by leveraging these principles: CI/CD automation, workflow orchestration, reproducibility; versioning of data, model, and code; collaboration; continuous ML training and evaluation; ML metadata tracking and logging; continuous monitoring; and feedback loops."
  },
  {
    "question": "What disciplines does MLOps combine?",
    "ground_truth": "MLOps combines machine learning, software engineering (especially DevOps), and data engineering.",
    "context": "Most of all, it is an engineering practice that leverages three contributing disciplines: machine learning, software engineering (especially DevOps), and data engineering."
  }
]
```
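Before spending LLM calls on an evaluation run, it can help to sanity-check the gold dataset. The following is a minimal sketch, assuming the JSON layout shown above (a list of objects with `question`, `ground_truth`, and `context`); `validate_gold_dataset` is a hypothetical helper, not part of RAGAS or LlamaIndex.

```python
import json
from typing import Dict, List

REQUIRED_KEYS = ("question", "ground_truth", "context")


def validate_gold_dataset(records: List[Dict[str, str]]) -> List[str]:
    """Return human-readable problems; an empty list means every record is usable."""
    problems = []
    seen_questions = set()
    for i, record in enumerate(records):
        for key in REQUIRED_KEYS:
            value = record.get(key)
            if not isinstance(value, str) or not value.strip():
                problems.append(f"record {i}: missing or empty '{key}'")
        question = record.get("question")
        if isinstance(question, str):
            if question in seen_questions:
                problems.append(f"record {i}: duplicate question")
            seen_questions.add(question)
    return problems


sample = json.loads('[{"question": "What is the goal of MLOps?", '
                    '"ground_truth": "Bridging Dev and Ops.", "context": "MLOps is a paradigm."}]')
print(validate_gold_dataset(sample))  # []

# For the real file (assumed location: the evaluator's input directory):
# with open("data/gold_data.json", encoding="utf-8") as f:
#     print(validate_gold_dataset(json.load(f)))
```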

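This project has three data indexer files: a vanilla indexer covering all three chunking strategies; an indexer that adds Qdrant's hybrid search capability (sparse + dense vectors) to the chunking strategies; and an indexer that combines the chunking strategies with post-processing mechanisms such as "LLM Reranker", "Sentence Transformer Reranking", and "Long Context Reorder".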

Data indexer with all three chunking strategies:

```python
import os

import qdrant_client
from dotenv import load_dotenv, find_dotenv
from llama_index.core import SimpleDirectoryReader, VectorStoreIndex, StorageContext, Settings
from llama_index.core.indices.base import IndexType
from llama_index.core.node_parser import (
    SentenceSplitter,
    SemanticSplitterNodeParser,
    SemanticDoubleMergingSplitterNodeParser,
    LanguageConfig,
)
from llama_index.embeddings.ollama import OllamaEmbedding
from llama_index.llms.ollama import Ollama
from llama_index.node_parser.topic import TopicNodeParser
from llama_index.vector_stores.qdrant import QdrantVectorStore


class LlamaIndexDataHandler:
    def __init__(self, chunk_size: int, chunk_overlap: int, top_k: int):
        # Connection details and model names come from a .env file
        load_dotenv(find_dotenv())
        input_dir = os.environ.get('input_dir')
        collection_name = os.environ.get('collection_name')
        qdrant_url = os.environ.get('qdrant_url')
        qdrant_api_key = os.environ.get('qdrant_api_key')
        llm_url = os.environ.get('llm_url')
        llm_model = os.environ.get('llm_model')
        embed_model_name = os.environ.get('embed_model_name')

        self.top_k = top_k
        self.collection_name = collection_name

        # Global LLM, embedding model and default chunking settings
        Settings.llm = Ollama(base_url=llm_url, model=llm_model, request_timeout=300)
        Settings.embed_model = OllamaEmbedding(base_url=llm_url, model_name=embed_model_name)
        Settings.chunk_size = chunk_size
        Settings.chunk_overlap = chunk_overlap

        # Load the PDF documents from the input directory
        self.documents = self.load_documents(input_dir)

        # Qdrant client and a dense-only vector store
        self.qdrant_client = qdrant_client.QdrantClient(url=qdrant_url, api_key=qdrant_api_key)
        self.qdrant_vector_store = QdrantVectorStore(
            collection_name=self.collection_name,
            client=self.qdrant_client,
        )
        self.storage_ctx = StorageContext.from_defaults(vector_store=self.qdrant_vector_store)
        self.vector_store_index: IndexType = None
        self.sentence_splitter = SentenceSplitter.from_defaults(
            chunk_size=Settings.chunk_size, chunk_overlap=Settings.chunk_overlap
        )

    def load_documents(self, input_dir):
        return SimpleDirectoryReader(input_dir=input_dir, required_exts=['.pdf']).load_data(show_progress=True)

    def _build_or_load_index(self, parser):
        # Chunk and embed only if the collection does not exist yet; otherwise reuse it
        if not self.qdrant_client.collection_exists(collection_name=self.collection_name):
            self.vector_store_index = VectorStoreIndex(
                nodes=parser.get_nodes_from_documents(documents=self.documents),
                storage_context=self.storage_ctx,
                show_progress=True,
                transformations=[self.sentence_splitter],
            )
        else:
            self.vector_store_index = VectorStoreIndex.from_vector_store(vector_store=self.qdrant_vector_store)

    def index_data_based_on_method(self, method: str):
        if method == 'semantic_chunking':
            # Break where the embedding distance between neighbouring sentence groups is in the top 5%
            parser = SemanticSplitterNodeParser(
                buffer_size=1, breakpoint_percentile_threshold=95, embed_model=Settings.embed_model
            )
        elif method == 'semantic_double_merge_chunking':
            # The spaCy model must be installed: python -m spacy download en_core_web_md
            config = LanguageConfig(language="english", spacy_model="en_core_web_md")
            parser = SemanticDoubleMergingSplitterNodeParser(
                language_config=config,
                initial_threshold=0.4,
                appending_threshold=0.5,
                merging_threshold=0.5,
                max_chunk_size=5000,
            )
        elif method == 'topic_node_parser':
            parser = TopicNodeParser.from_defaults(
                llm=Settings.llm,
                max_chunk_size=Settings.chunk_size,
                similarity_method="llm",
                similarity_threshold=0.8,
                window_size=3,  # the paper suggests 5
            )
        else:
            raise ValueError(f"Unknown chunking method: {method}")
        self._build_or_load_index(parser)

    def create_query_engine(self):
        # similarity_top_k (not top_k) sets how many chunks the retriever returns
        return self.vector_store_index.as_query_engine(similarity_top_k=self.top_k)

    def query(self, query_text):
        return self.create_query_engine().query(query_text)


# Usage example
def main():
    llama_index_handler = LlamaIndexDataHandler(chunk_size=128, chunk_overlap=20, top_k=3)
    llama_index_handler.index_data_based_on_method(method='semantic_double_merge_chunking')
    # response = llama_index_handler.query("operational challenges of mlops")
    # print(response)


if __name__ == "__main__":
    main()
```

Data indexer with chunking strategies + Qdrant hybrid search (sparse vectors + dense vectors):

```python
import os

import qdrant_client
from dotenv import load_dotenv, find_dotenv
from llama_index.core import SimpleDirectoryReader, VectorStoreIndex, StorageContext, Settings
from llama_index.core.indices.base import IndexType
from llama_index.core.node_parser import (
    SentenceSplitter,
    SemanticSplitterNodeParser,
    SemanticDoubleMergingSplitterNodeParser,
    LanguageConfig,
)
from llama_index.embeddings.ollama import OllamaEmbedding
from llama_index.llms.ollama import Ollama
from llama_index.node_parser.topic import TopicNodeParser
from llama_index.vector_stores.qdrant import QdrantVectorStore


class LlamaIndexDataHandler:
    def __init__(self, chunk_size: int, chunk_overlap: int, top_k: int):
        # Connection details and model names come from a .env file
        load_dotenv(find_dotenv())
        input_dir = os.environ.get('input_dir')
        collection_name = os.environ.get('collection_name')
        qdrant_url = os.environ.get('qdrant_url')
        qdrant_api_key = os.environ.get('qdrant_api_key')
        llm_url = os.environ.get('llm_url')
        llm_model = os.environ.get('llm_model')
        embed_model_name = os.environ.get('embed_model_name')

        self.top_k = top_k
        self.collection_name = collection_name

        # Global LLM, embedding model and default chunking settings
        Settings.llm = Ollama(base_url=llm_url, model=llm_model, request_timeout=300)
        Settings.embed_model = OllamaEmbedding(base_url=llm_url, model_name=embed_model_name)
        Settings.chunk_size = chunk_size
        Settings.chunk_overlap = chunk_overlap

        # Load the PDF documents from the input directory
        self.documents = self.load_documents(input_dir)

        # Hybrid-enabled store: dense vectors from the embedding model, sparse vectors
        # from a FastEmbed model (requires the `fastembed` package)
        self.qdrant_client = qdrant_client.QdrantClient(url=qdrant_url, api_key=qdrant_api_key)
        self.qdrant_vector_store = QdrantVectorStore(
            collection_name=self.collection_name,
            client=self.qdrant_client,
            fastembed_sparse_model=os.environ.get("sparse_model"),
            enable_hybrid=True,
        )
        self.storage_ctx = StorageContext.from_defaults(vector_store=self.qdrant_vector_store)
        self.vector_store_index: IndexType = None
        self.sentence_splitter = SentenceSplitter.from_defaults(
            chunk_size=Settings.chunk_size, chunk_overlap=Settings.chunk_overlap
        )

    def load_documents(self, input_dir):
        return SimpleDirectoryReader(input_dir=input_dir, required_exts=['.pdf']).load_data(show_progress=True)

    def _build_or_load_index(self, parser):
        # Reuse the configured Ollama embedding model (including its base_url) for dense vectors
        if not self.qdrant_client.collection_exists(collection_name=self.collection_name):
            self.vector_store_index = VectorStoreIndex(
                nodes=parser.get_nodes_from_documents(documents=self.documents),
                storage_context=self.storage_ctx,
                show_progress=True,
                transformations=[self.sentence_splitter],
                embed_model=Settings.embed_model,
            )
        else:
            self.vector_store_index = VectorStoreIndex.from_vector_store(
                vector_store=self.qdrant_vector_store, embed_model=Settings.embed_model
            )

    def index_data_based_on_method(self, method: str):
        if method == 'semantic_chunking':
            parser = SemanticSplitterNodeParser(
                buffer_size=1, breakpoint_percentile_threshold=95, embed_model=Settings.embed_model
            )
        elif method == 'semantic_double_merge_chunking':
            config = LanguageConfig(language="english", spacy_model="en_core_web_md")
            parser = SemanticDoubleMergingSplitterNodeParser(
                language_config=config,
                initial_threshold=0.4,
                appending_threshold=0.5,
                merging_threshold=0.5,
                max_chunk_size=5000,
            )
        elif method == 'topic_node_parser':
            parser = TopicNodeParser.from_defaults(
                llm=Settings.llm,
                max_chunk_size=Settings.chunk_size,
                similarity_method="llm",
                similarity_threshold=0.8,
                window_size=3,  # the paper suggests 5
            )
        else:
            raise ValueError(f"Unknown chunking method: {method}")
        self._build_or_load_index(parser)

    def create_query_engine(self):
        # Without vector_store_query_mode="hybrid" the collection is queried with dense vectors only
        return self.vector_store_index.as_query_engine(
            similarity_top_k=self.top_k, vector_store_query_mode="hybrid"
        )

    def query(self, query_text):
        return self.create_query_engine().query(query_text)


# Usage example
def main():
    llama_index_handler = LlamaIndexDataHandler(chunk_size=128, chunk_overlap=20, top_k=3)
    llama_index_handler.index_data_based_on_method(method='semantic_double_merge_chunking')
    # response = llama_index_handler.query("operational challenges of mlops")
    # print(response)


if __name__ == "__main__":
    main()
```
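Hybrid search returns two ranked lists, one from the sparse vectors and one from the dense vectors, which must be fused into a single ranking. LlamaIndex's Qdrant integration applies its own fusion function for this; purely as an illustration, the sketch below uses Reciprocal Rank Fusion (RRF), a common and simple alternative in which each document scores the sum of 1/(k + rank) over the lists it appears in. The constant `k = 60` is the value conventionally used with RRF and is an assumption here; the document IDs are toy values.

```python
from collections import defaultdict
from typing import Dict, List


def reciprocal_rank_fusion(result_lists: List[List[str]], k: int = 60, top_n: int = 5) -> List[str]:
    """Fuse ranked lists of document IDs: score(d) = sum over lists of 1 / (k + rank of d)."""
    scores: Dict[str, float] = defaultdict(float)
    for results in result_lists:
        for rank, doc_id in enumerate(results, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # sorted() is stable, so ties keep the order in which documents were first seen
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


dense_hits = ["a", "b", "c"]   # ranking from dense (semantic) vectors
sparse_hits = ["c", "a", "d"]  # ranking from sparse (keyword-like) vectors
print(reciprocal_rank_fusion([dense_hits, sparse_hits]))  # ['a', 'c', 'b', 'd']
```

Documents found by both retrievers ("a" and "c") rise to the top, which is the main benefit of combining keyword-style and semantic matching.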

Data indexer with chunking strategies and post-processing mechanisms such as "LLM Reranker", "Sentence Transformer Reranking", and "Long Context Reorder":

```python
import os
from typing import Optional

import qdrant_client
from dotenv import load_dotenv, find_dotenv
from llama_index.core import SimpleDirectoryReader, VectorStoreIndex, StorageContext, Settings
from llama_index.core.indices.base import IndexType
from llama_index.core.node_parser import (
    SentenceSplitter,
    SemanticSplitterNodeParser,
    SemanticDoubleMergingSplitterNodeParser,
    LanguageConfig,
)
from llama_index.core.postprocessor import LLMRerank, SentenceTransformerRerank, LongContextReorder
from llama_index.embeddings.ollama import OllamaEmbedding
from llama_index.llms.ollama import Ollama
from llama_index.node_parser.topic import TopicNodeParser
from llama_index.vector_stores.qdrant import QdrantVectorStore


class LlamaIndexDataHandler:
    def __init__(self, chunk_size: int, chunk_overlap: int, top_k: int):
        # Connection details and model names come from a .env file
        load_dotenv(find_dotenv())
        input_dir = os.environ.get('input_dir')
        collection_name = os.environ.get('collection_name')
        qdrant_url = os.environ.get('qdrant_url')
        qdrant_api_key = os.environ.get('qdrant_api_key')
        llm_url = os.environ.get('llm_url')
        llm_model = os.environ.get('llm_model')
        embed_model_name = os.environ.get('embed_model_name')

        self.top_k = top_k
        self.collection_name = collection_name

        # Global LLM, embedding model and default chunking settings
        Settings.llm = Ollama(base_url=llm_url, model=llm_model, request_timeout=300)
        Settings.embed_model = OllamaEmbedding(base_url=llm_url, model_name=embed_model_name)
        Settings.chunk_size = chunk_size
        Settings.chunk_overlap = chunk_overlap

        # Load the PDF documents from the input directory
        self.documents = self.load_documents(input_dir)

        # Qdrant client and a dense-only vector store
        self.qdrant_client = qdrant_client.QdrantClient(url=qdrant_url, api_key=qdrant_api_key)
        self.qdrant_vector_store = QdrantVectorStore(
            collection_name=self.collection_name,
            client=self.qdrant_client,
        )
        self.storage_ctx = StorageContext.from_defaults(vector_store=self.qdrant_vector_store)
        self.vector_store_index: IndexType = None
        self.sentence_splitter = SentenceSplitter.from_defaults(
            chunk_size=Settings.chunk_size, chunk_overlap=Settings.chunk_overlap
        )

    def load_documents(self, input_dir):
        return SimpleDirectoryReader(input_dir=input_dir, required_exts=['.pdf']).load_data(show_progress=True)

    def _build_or_load_index(self, parser):
        # Chunk and embed only if the collection does not exist yet; otherwise reuse it
        if not self.qdrant_client.collection_exists(collection_name=self.collection_name):
            self.vector_store_index = VectorStoreIndex(
                nodes=parser.get_nodes_from_documents(documents=self.documents),
                storage_context=self.storage_ctx,
                show_progress=True,
                transformations=[self.sentence_splitter],
            )
        else:
            self.vector_store_index = VectorStoreIndex.from_vector_store(vector_store=self.qdrant_vector_store)

    def index_data_based_on_method(self, method: str):
        if method == 'semantic_chunking':
            parser = SemanticSplitterNodeParser(
                buffer_size=1, breakpoint_percentile_threshold=95, embed_model=Settings.embed_model
            )
        elif method == 'semantic_double_merge_chunking':
            config = LanguageConfig(language="english", spacy_model="en_core_web_md")
            parser = SemanticDoubleMergingSplitterNodeParser(
                language_config=config,
                initial_threshold=0.4,
                appending_threshold=0.5,
                merging_threshold=0.5,
                max_chunk_size=5000,
            )
        elif method == 'topic_node_parser':
            parser = TopicNodeParser.from_defaults(
                llm=Settings.llm,
                max_chunk_size=Settings.chunk_size,
                similarity_method="llm",
                similarity_threshold=0.8,
                window_size=3,  # the paper suggests 5
            )
        else:
            raise ValueError(f"Unknown chunking method: {method}")
        self._build_or_load_index(parser)

    def create_query_engine(self, postprocessing_method: Optional[str] = None):
        # Choose the node post-processor applied to the retrieved chunks
        if postprocessing_method == 'llm_reranker':
            postprocessors = [LLMRerank(llm=Settings.llm, choice_batch_size=self.top_k, top_n=self.top_k)]
        elif postprocessing_method == 'sentence_transformer_rerank':
            postprocessors = [SentenceTransformerRerank(model="cross-encoder/ms-marco-MiniLM-L-2-v2",
                                                        top_n=self.top_k)]
        elif postprocessing_method == 'long_context_reorder':
            postprocessors = [LongContextReorder()]
        else:
            postprocessors = []  # plain retrieval
        return self.vector_store_index.as_query_engine(similarity_top_k=self.top_k,
                                                       node_postprocessors=postprocessors)

    def query(self, query_text, postprocessing_method: Optional[str] = None):
        return self.create_query_engine(postprocessing_method=postprocessing_method).query(query_text)


# Usage example
def main():
    llama_index_handler = LlamaIndexDataHandler(chunk_size=128, chunk_overlap=20, top_k=3)
    llama_index_handler.index_data_based_on_method(method='semantic_double_merge_chunking')
    # response = llama_index_handler.query("operational challenges of mlops", postprocessing_method='llm_reranker')
    # print(response)


if __name__ == "__main__":
    main()
```
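LongContextReorder does not change which chunks are retrieved, only their order. It is motivated by the observation that LLMs tend to use information at the start and end of a long context better than information in the middle. The sketch below is a plain-Python approximation of that idea, assuming each chunk carries a relevance score: it walks the chunks from weakest to strongest and alternately places them at the front and the back, so the strongest chunks end up at the edges and the weakest in the middle. It is an illustration, not LlamaIndex's code.

```python
from typing import List, Tuple


def long_context_reorder(scored: List[Tuple[str, float]]) -> List[Tuple[str, float]]:
    """Put the highest-scoring chunks at the start and end of the context, the weakest in the middle."""
    ordered = sorted(scored, key=lambda item: item[1])  # ascending by score
    reordered: List[Tuple[str, float]] = []
    for i, item in enumerate(ordered):
        if i % 2 == 0:
            reordered.insert(0, item)  # even steps go to the front
        else:
            reordered.append(item)     # odd steps go to the back
    return reordered


chunks = [("a", 0.9), ("b", 0.8), ("c", 0.7), ("d", 0.6), ("e", 0.5)]
print([doc for doc, _ in long_context_reorder(chunks)])  # ['a', 'c', 'e', 'd', 'b']
```

The best chunk ("a") opens the context and the second best ("b") closes it, while the weakest ("e") sits in the middle.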

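With the indexers in place, we can focus on evaluation. I created a file named evaluation_playground.py that connects to the vector store for an existing collection (created during the ingestion phase) and then asks the LLM to answer the questions in our gold dataset.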

```python
import logging
import os
from typing import Optional

import pandas as pd
import qdrant_client
from datasets import Dataset
from dotenv import load_dotenv, find_dotenv
from llama_index.core import VectorStoreIndex, Settings
from llama_index.core.postprocessor import LLMRerank, SentenceTransformerRerank, LongContextReorder
from llama_index.embeddings.ollama import OllamaEmbedding
from llama_index.llms.ollama import Ollama
from llama_index.vector_stores.qdrant import QdrantVectorStore
from ragas import evaluate
from ragas.metrics import (
    faithfulness,
    answer_relevancy,
    answer_correctness,
)

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)


class LlamaIndexEvaluator:
    def __init__(self, input_dir="data", model_name="llama3.2:latest", base_url="http://localhost:11434",
                 postprocessing_method: Optional[str] = 'llm_reranker', use_hybrid: bool = False):
        logger.info(
            f"Initializing LlamaIndexEvaluator with input_dir={input_dir}, model_name={model_name}, base_url={base_url}")
        load_dotenv(find_dotenv())
        self.input_dir = input_dir
        self.model_name = model_name
        self.base_url = base_url
        self.top_k = 5
        # Must match how the collection was ingested (hybrid vs. dense-only experiment)
        self.use_hybrid = use_hybrid
        Settings.llm = Ollama(model=self.model_name, base_url=self.base_url)
        logger.info("Loading dataset from gold_data.json")
        self.dataset = pd.read_json(os.path.join(input_dir, "gold_data.json"))
        self.query_engine = self.build_query_engine(postprocessing_method=postprocessing_method)

    def build_query_engine(self, postprocessing_method: Optional[str]):
        logger.info("Initializing Qdrant client")
        qdrant_cli = qdrant_client.QdrantClient(url=os.environ.get("qdrant_url"),
                                                api_key=os.environ.get("qdrant_api_key"))

        logger.info("Setting up Qdrant vector store")
        hybrid_kwargs = {}
        if self.use_hybrid:
            # The sparse model is needed to encode queries for the sparse half of hybrid search
            hybrid_kwargs = {"fastembed_sparse_model": os.environ.get("sparse_model"), "enable_hybrid": True}
        qdrant_vector_store = QdrantVectorStore(collection_name=os.environ.get("collection_name"),
                                                client=qdrant_cli, **hybrid_kwargs)

        logger.info("Building VectorStoreIndex from existing collection")
        vector_index = VectorStoreIndex.from_vector_store(
            vector_store=qdrant_vector_store,
            embed_model=OllamaEmbedding(model_name=os.environ.get("embed_model_name"), base_url=self.base_url),
        )

        # Pick the node post-processor for this experiment; None means plain retrieval
        if postprocessing_method == 'llm_reranker':
            postprocessors = [LLMRerank(llm=Settings.llm, choice_batch_size=self.top_k, top_n=self.top_k)]
        elif postprocessing_method == 'sentence_transformer_rerank':
            postprocessors = [SentenceTransformerRerank(model="cross-encoder/ms-marco-MiniLM-L-2-v2",
                                                        top_n=self.top_k)]
        elif postprocessing_method == 'long_context_reorder':
            postprocessors = [LongContextReorder()]
        else:
            postprocessors = []

        # Build the engine once, so the chosen post-processor is not overwritten
        query_kwargs = {"vector_store_query_mode": "hybrid"} if self.use_hybrid else {}
        query_engine = vector_index.as_query_engine(
            similarity_top_k=self.top_k,
            llm=Settings.llm,
            node_postprocessors=postprocessors,
            **query_kwargs,
        )
        logger.info(f"Query engine built successfully: {query_engine}")
        return query_engine

    def generate_responses(self, test_questions, test_answers=None):
        logger.info("Generating responses for test questions")
        responses = [self.query_engine.query(q) for q in test_questions]
        answers = []
        contexts = []
        for r in responses:
            answers.append(r.response)
            contexts.append([c.node.get_content() for c in r.source_nodes])
        dataset_dict = {
            "question": test_questions,
            "answer": answers,
            "contexts": contexts,
        }
        if test_answers is not None:
            dataset_dict["ground_truth"] = test_answers
        logger.info("Responses generated successfully")
        return Dataset.from_dict(dataset_dict)

    def evaluate(self):
        logger.info("Starting evaluation")
        evaluation_ds = self.generate_responses(
            test_questions=self.dataset["question"].tolist(),
            test_answers=self.dataset["ground_truth"].tolist(),
        )
        metrics = [
            faithfulness,
            answer_relevancy,
            answer_correctness,
        ]
        logger.info("Evaluating dataset")
        # RAGAS uses OpenAI models as the judge by default, so OPENAI_API_KEY must be set
        evaluation_result = evaluate(
            dataset=evaluation_ds,
            metrics=metrics,
        )
        logger.info("Evaluation completed")
        return evaluation_result


if __name__ == "__main__":
    evaluator = LlamaIndexEvaluator()
    _evaluation_result = evaluator.evaluate()
    logger.info("Evaluation result:")
    logger.info(_evaluation_result)
```

After each experiment, we extract the evaluation results from the logs and record them in a file named evaluation_result.json. This step could also be automated.
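Copying scores out of logs by hand is error-prone. Below is a minimal sketch of automating it, assuming the RAGAS result can be turned into a plain mapping of metric name to score (for example with `dict(result)` on RAGAS 0.1.x, as far as we know; check your version). `append_result` is a hypothetical helper that appends a record in the same `method`/`result` layout as evaluation_result.json, rounding scores to four decimals.

```python
import json
import os
from typing import Mapping


def append_result(path: str, method: str, scores: Mapping[str, float], ndigits: int = 4) -> None:
    """Append one {"method": ..., "result": {...}} record to a JSON array file, creating it if needed."""
    records = []
    if os.path.exists(path):
        with open(path, encoding="utf-8") as f:
            records = json.load(f)
    rounded = {metric: round(float(score), ndigits) for metric, score in scores.items()}
    records.append({"method": method, "result": rounded})
    with open(path, "w", encoding="utf-8") as f:
        json.dump(records, f, indent=2)


# Hypothetical call at the end of evaluation_playground.py:
# append_result("evaluation_result.json", "semantic_chunking", dict(_evaluation_result))
```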

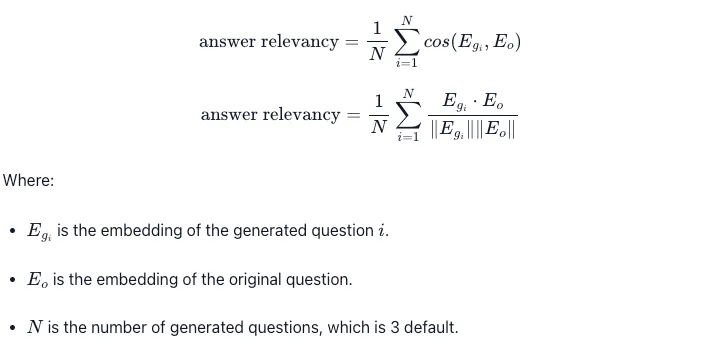

```json
[
  {
    "method": "semantic_chunking",
    "result": {"faithfulness": 0.7084, "answer_relevancy": 0.9566, "answer_correctness": 0.6119}
  },
  {
    "method": "semantic_chunking_with_hybrid_search",
    "result": {"faithfulness": 0.8684, "answer_relevancy": 0.9182, "answer_correctness": 0.6077}
  },
  {
    "method": "semantic_chunking_with_llm_reranking",
    "result": {"faithfulness": 0.8575, "answer_relevancy": 0.8180, "answer_correctness": 0.6219}
  },
  {
    "method": "topic_node_parser",
    "result": {"faithfulness": 0.6298, "answer_relevancy": 0.9043, "answer_correctness": 0.6211}
  },
  {
    "method": "topic_node_parser_hybrid",
    "result": {"faithfulness": 0.4632, "answer_relevancy": 0.8973, "answer_correctness": 0.5576}
  },
  {
    "method": "topic_node_parser_with_llm_reranking",
    "result": {"faithfulness": 0.6804, "answer_relevancy": 0.9028, "answer_correctness": 0.5935}
  },
  {
    "method": "semantic_double_merge_chunking",
    "result": {"faithfulness": 0.7210, "answer_relevancy": 0.8049, "answer_correctness": 0.5340}
  },
  {
    "method": "semantic_double_merge_chunking_hybrid",
    "result": {"faithfulness": 0.7634, "answer_relevancy": 0.9346, "answer_correctness": 0.5266}
  },
  {
    "method": "semantic_double_merge_chunking_with_llm_reranking",
    "result": {"faithfulness": 0.7450, "answer_relevancy": 0.9424, "answer_correctness": 0.5527}
  }
]
```

Next, we visualize the collected evaluation results so that we can decide which parameters the RAG pipeline should use to give clients the best accuracy.

```python
import pandas as pd
import seaborn as sns
import matplotlib.pyplot as plt

# Evaluation results collected in evaluation_result.json
data = [
    {"method": "semantic_chunking", "result": {"faithfulness": 0.7084, "answer_relevancy": 0.9566, "answer_correctness": 0.6119}},
    {"method": "semantic_chunking_with_hybrid_search", "result": {"faithfulness": 0.8684, "answer_relevancy": 0.9182, "answer_correctness": 0.6077}},
    {"method": "semantic_chunking_with_llm_reranking", "result": {"faithfulness": 0.8575, "answer_relevancy": 0.8180, "answer_correctness": 0.6219}},
    {"method": "topic_node_parser", "result": {"faithfulness": 0.6298, "answer_relevancy": 0.9043, "answer_correctness": 0.6211}},
    {"method": "topic_node_parser_hybrid", "result": {"faithfulness": 0.4632, "answer_relevancy": 0.8973, "answer_correctness": 0.5576}},
    {"method": "topic_node_parser_with_llm_reranking", "result": {"faithfulness": 0.6804, "answer_relevancy": 0.9028, "answer_correctness": 0.5935}},
    {"method": "semantic_double_merge_chunking", "result": {"faithfulness": 0.7210, "answer_relevancy": 0.8049, "answer_correctness": 0.5340}},
    {"method": "semantic_double_merge_chunking_hybrid", "result": {"faithfulness": 0.7634, "answer_relevancy": 0.9346, "answer_correctness": 0.5266}},
    {"method": "semantic_double_merge_chunking_with_llm_reranking", "result": {"faithfulness": 0.7450, "answer_relevancy": 0.9424, "answer_correctness": 0.5527}},
]

# Flatten {"method", "result": {...}} records into one row per method
flattened_data = []
for d in data:
    row = {"method": d["method"]}
    row.update(d["result"])
    flattened_data.append(row)
df = pd.DataFrame(flattened_data)

# One subset, and one plot, per chunking strategy
df1 = df.iloc[:3]
df2 = df.iloc[3:6]
df3 = df.iloc[6:]

sns.set(style="whitegrid")

# Grouped bar plots: one bar per metric for each method
fig, axes = plt.subplots(3, 1, figsize=(10, 18))
titles = [
    "Results for Semantic Chunking Methods",
    "Results for Topic Node Parser Methods",
    "Results for Semantic Double Merge Chunking Methods",
]
for df_subset, ax, title in zip([df1, df2, df3], axes, titles):
    df_melted = df_subset.melt(id_vars='method', var_name='metric', value_name='score')
    sns.barplot(x='method', y='score', hue='metric', data=df_melted, ax=ax, palette='Blues')
    ax.set_title(title)
    ax.set_ylabel("Scores")
    ax.tick_params(axis='x', rotation=45)
    ax.legend(title='Metric')

# Adjust layout for better spacing
plt.tight_layout()
plt.show()
```
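Beyond reading the charts, the same data can be summarized numerically. The sketch below reports the best method per metric and ranks methods by the unweighted mean of the three metrics; equal weighting is a simplifying assumption, since a given application may care more about, say, faithfulness than relevancy. It uses the three semantic chunking rows from the results above; the `df` built in the plotting script has the same columns, so the functions apply to all nine experiments.

```python
from typing import Sequence

import pandas as pd

METRICS = ("faithfulness", "answer_relevancy", "answer_correctness")


def best_per_metric(df: pd.DataFrame, metrics: Sequence[str] = METRICS) -> pd.DataFrame:
    """For each metric, the method with the highest score and that score."""
    rows = []
    for metric in metrics:
        best_idx = df[metric].idxmax()
        rows.append({"metric": metric, "best_method": df.loc[best_idx, "method"], "score": df.loc[best_idx, metric]})
    return pd.DataFrame(rows)


def rank_by_mean(df: pd.DataFrame, metrics: Sequence[str] = METRICS) -> pd.Series:
    """Rank methods by the unweighted mean of the chosen metrics, highest first."""
    return df.set_index("method")[list(metrics)].mean(axis=1).sort_values(ascending=False)


df = pd.DataFrame([
    {"method": "semantic_chunking", "faithfulness": 0.7084, "answer_relevancy": 0.9566, "answer_correctness": 0.6119},
    {"method": "semantic_chunking_with_hybrid_search", "faithfulness": 0.8684, "answer_relevancy": 0.9182, "answer_correctness": 0.6077},
    {"method": "semantic_chunking_with_llm_reranking", "faithfulness": 0.8575, "answer_relevancy": 0.8180, "answer_correctness": 0.6219},
])
print(best_per_metric(df))
print(rank_by_mean(df))
```

With these rows, each metric has a different winner, which is why a single aggregate (or an explicit weighting) is needed before choosing one configuration.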

Results and Assumptions:

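In this experiment, we kept many parameters fixed: chunk_size was "128", chunk_overlap was "20", top_k was 5, the dense embedding model was "nomic-embed-text:late", and the sparse embedding model was "Qdrant/bm42-all-minilm-l6-v2-attentions". These parameters could also be varied and combined with the process described above. Such a combination is more complex to run, but it is likely to produce better results than a naive RAG pipeline.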

Conclusion

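Across the chunking strategies and their combinations, semantic chunking with hybrid search achieved the highest faithfulness (0.8684) while keeping answer relevancy high (0.9182), although plain semantic chunking scored the highest answer relevancy overall (0.9566). Semantic chunking with LLM reranking had a slight edge in answer correctness (0.6219). The topic node parser showed stable answer relevancy but weaker faithfulness, which dropped further with hybrid search (0.4632). Semantic double-merge chunking gained faithfulness and answer relevancy from both hybrid search and LLM reranking, but had the lowest answer correctness of the three strategies. These results give actionable guidance for refining RAG pipelines: hybrid search is a useful lever for faithfulness, but its effect depends on the chunking strategy, and in these runs it did not improve answer correctness.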