Enhanced RAG: A Detailed Look at Self-RAG

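In the fast-moving field of artificial intelligence, retrieval-augmented generation (RAG) was a major step forward, particularly for reducing factual inaccuracies in the output of large language models (LLMs). RAG lets a model retrieve relevant external knowledge, which improves the accuracy and contextual relevance of its responses. Even so, RAG has limitations. It often retrieves documents indiscriminately, without evaluating whether the retrieved information actually helps answer the query. The result can be cluttered or even misleading output.

To address these limitations, researchers introduced Self-Reflective Retrieval-Augmented Generation (Self-RAG), a strategy that strengthens RAG by adding self-reflection and self-grading to the retrieval and generation steps. In this article I implement my own take on the Self-RAG approach, focusing on two key improvements: self-correction, by grading the relevance of retrieved documents, and self-reflection, by checking generated responses for hallucinations and grading their relevance.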

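What is Self-RAG?

Self-Reflective Retrieval-Augmented Generation (Self-RAG) is a framework that extends traditional RAG with self-evaluation mechanisms, allowing the system to critically assess both the retrieved documents and the generated response. In traditional RAG, retrieval is often indiscriminate: documents are retrieved without checking whether they are truly relevant to the query, and nothing verifies that the generated response is consistent with the retrieved information.

My Self-RAG approach focuses on two main capabilities:

1. Self-correction by grading the relevance of retrieved chunks:

At the retrieval step, the system grades the relevance of every retrieved chunk. By actively checking whether each chunk helps answer the question, it avoids clutter and ensures that only high-quality, relevant data is passed to the generation step.

2. Self-reflection by checking for hallucinations and relevance:

After generating a response, the system checks it for hallucinations, verifying that the retrieved documents support all key claims. This reduces the likelihood of producing unsupported or factually incorrect content. The response is also graded for relevance to the query, so that the output is both factual and on topic. If the generated response is not sufficiently supported by the retrieved documents, or is flagged as irrelevant, the system starts an additional web search to gather more information. The web search results are then fed back into generation, allowing the model to refine its response with higher-quality data.

This simplified approach aims to minimize latency and compute cost by avoiding unnecessary document retrieval and adding only lightweight hallucination and relevance checks. The result is an efficient yet robust Self-RAG framework that keeps the core benefits of reflection and correction without the overhead of more complex implementations.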

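Implementing Self-RAG with LangGraph

To implement the Self-RAG approach we use LangGraph, an extension of the LangChain library. LangGraph lets us build stateful, cyclic workflows that manage multi-step tasks and adapt dynamically to changing conditions. That is essential for a system like Self-RAG, where the model has to keep refining its output based on ongoing evaluation of the retrieved documents and the generated response.

Loading the vector store

An effective Self-RAG system needs a vector store: a database that stores documents or data as vectors so they can be retrieved quickly and efficiently. When a question comes in, the vector store is the core component for retrieving relevant chunks. Each document is embedded as a vector that captures the meaning and context of its text, so relevant information can be found quickly by semantic similarity.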
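To make "search by semantic similarity" concrete, here is a minimal sketch in plain Python. It is not how Pinecone works internally; it only illustrates the idea of ranking chunks by cosine similarity to a query vector. The three-dimensional vectors, the chunk ids, and the helper names `cosine_similarity` and `top_k` are all made up for illustration.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec: List[float], index: Dict[str, List[float]], k: int = 2) -> List[Tuple[str, float]]:
    """Return the k chunk ids whose vectors are most similar to the query vector."""
    scored = [(chunk_id, cosine_similarity(query_vec, vec)) for chunk_id, vec in index.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Hypothetical toy embeddings; real embedding models produce far larger vectors
toy_index = {
    "mrna_vaccine": [0.9, 0.1, 0.0],
    "tumor_antigen": [0.7, 0.3, 0.1],
    "rocket_engine": [0.0, 0.2, 0.9],
}
query = [1.0, 0.0, 0.0]
results = top_k(query, toy_index, k=2)
print([chunk_id for chunk_id, _ in results])
```

A production vector store replaces the linear scan with an approximate nearest-neighbor index, but the ranking principle is the same.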

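In this section we load a vector store containing preprocessed data, together with the embedding model used to vectorize the documents. In this example we work with a collection of scientific papers stored as vector embeddings, ready for retrieval. The embedding model is the Amazon Titan embedding model, accessed through AWS Bedrock.

Once the vector store is up and running, it becomes the foundation for the retrieval tasks of our Self-RAG system.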

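import boto3
from langchain_aws import BedrockEmbeddings
from langchain_pinecone import PineconeVectorStore

# Initialize the embedding model first, because the vector store needs it
bedrock_client = boto3.client("bedrock-runtime", region_name="us-east-1")
bedrock_embeddings = BedrockEmbeddings(model_id="amazon.titan-embed-text-v1", client=bedrock_client)

# Load the vector store
vectorstore = PineconeVectorStore(index_name="tutorial-202408", embedding=bedrock_embeddings)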

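Large language model (LLM)

For the agent's interactions we use a powerful large language model: Anthropic's Claude 3.5 Sonnet. The model performs well at natural language understanding and generation, which makes it a reliable choice for handling complex queries and producing accurate, context-aware responses. We access Claude 3.5 Sonnet through AWS Bedrock, which simplifies integration and lets us tune sampling parameters such as temperature, which controls how varied the responses are.

Here is the initialization code for Claude 3.5 Sonnet:

from langchain_aws import ChatBedrock

# Initialize the Claude 3.5 Sonnet LLM
model = ChatBedrock(
    model_id="anthropic.claude-3-5-sonnet-20240620-v1:0",
    client=bedrock_client,
    model_kwargs={"temperature": 0.6},
    region_name="us-east-1",
)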

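Retriever

The retriever plays a central role in our Self-RAG system: it fetches relevant text chunks from the vector store. These chunks contain the information used to generate the response to the user's question. The retriever searches the vector store for the document embeddings most similar to the query and returns the corresponding documents for further processing.

We first initialize the retriever from the vector store:

retriever = vectorstore.as_retriever()

This line exposes the vector store (which holds the preprocessed, vectorized documents) through a retriever interface that can serve retrieval queries.

Next we define the retrieve function, which takes the current state (including the user's question) and returns the most relevant documents:

from typing import Any, Dict

def retrieve(state: Dict[str, Any]) -> Dict[str, Any]:
    print("---RETRIEVE---")
    question = state["question"]
    documents = retriever.invoke(question)
    # Keep only the text of each retrieved document
    return {"documents": [doc.page_content for doc in documents], "question": question}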

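Web search

If the documents initially retrieved from the vector store do not provide enough information or relevant content, we can fall back on a web search as an extra step in the Self-RAG flow. Web search gives the system access to more current and more comprehensive information, which is especially useful for time-sensitive or specialized queries that the vector store does not fully cover.

For this we use TavilySearch, a tool that fetches information from the web based on the user's query. The search results come back as text snippets and are handled the same way as documents from the vector store.

Here is the implementation of the web_search function: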

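from langchain_community.tools.tavily_search import TavilySearchResults

def web_search(state: Dict[str, Any]) -> Dict[str, Any]:
    print("---WEB SEARCH---")
    question = state["question"]
    documents = state["documents"]
    # max_results caps the number of results the tool returns
    docs = TavilySearchResults(max_results=3).invoke({"query": question})
    # Merge the result snippets into one newline-separated string
    web_results = "\n".join([d["content"] for d in docs])
    documents.append(web_results)
    return {"documents": documents, "question": question, "web_search": "Yes"}

Key steps of the web search function:

- Input: The function takes the current state, which includes the user's question and the documents retrieved from the vector store (if any).

- Run the web search: Using the TavilySearchResults tool, we run a web search based on the user's query. It returns up to three results (max_results=3); adjust the value to control how much information is retrieved.

- Merge and append results: The search results are merged into a single string (with "\n" separating the snippets) and appended to the list of previously retrieved documents, so that the web results feed into response generation.

- Output: The function returns the updated document list, the original question, and a flag ("web_search": "Yes") indicating that a web search was performed. The flag is used later in the decision logic.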
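The merge-and-append steps above can be tried offline with a stubbed search function. The sketch below is only an illustration under stated assumptions: `fake_search` is a hypothetical stand-in for the real search tool, `web_search_step` is a hypothetical helper name, and the example copies the document list instead of mutating it in place, which is a design choice of this sketch rather than something the article's function does.

```python
from typing import Any, Callable, Dict, List


def fake_search(query: str) -> List[Dict[str, str]]:
    """Hypothetical stand-in for a search tool; returns canned snippets."""
    return [
        {"url": "https://example.com/a", "content": f"First snippet about {query}"},
        {"url": "https://example.com/b", "content": f"Second snippet about {query}"},
    ]


def web_search_step(
    state: Dict[str, Any],
    search: Callable[[str], List[Dict[str, str]]],
) -> Dict[str, Any]:
    """Merge search snippets into one string and append it to a copy of the documents."""
    results = search(state["question"])
    merged = "\n".join(item["content"] for item in results)
    # Copy the list so the caller's state is not mutated in place
    documents = list(state.get("documents", [])) + [merged]
    return {"documents": documents, "question": state["question"], "web_search": "Yes"}


before = {"question": "Self-RAG", "documents": ["chunk from the vector store"]}
after = web_search_step(before, fake_search)
print(len(after["documents"]), after["web_search"])
```

Passing the search function as an argument keeps the node easy to test without network access or an API key.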

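Grading documents

An important part of Self-RAG's self-correction mechanism is grading the relevance of the retrieved chunks. Not every document retrieved from the vector store is useful or relevant to the user's query. To make sure only meaningful data reaches the generation step, we add a grading step that assesses the relevance of each document.

Grading filters out irrelevant information and improves the accuracy and focus of the final response. The core of this step is an LLM-powered retrieval grader that assesses whether each document contains keywords or content related to the user's question.

First we define a prompt template that instructs the LLM to act as a grader and give a simple binary score (yes or no) depending on whether the document is relevant to the question. The test does not need to be strict; the goal is to quickly filter out erroneous or irrelevant retrievals.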

from langchain_core.prompts import PromptTemplate

retrieval_grader_prompt = PromptTemplate(
    template="""You are a grader assessing relevance of a retrieved document to a user question. If the document contains keywords related to the user question, grade it as relevant. It does not need to be a stringent test. The goal is to filter out erroneous retrievals.
Give a binary score 'yes' or 'no' to indicate whether the document is relevant to the question.
Provide the binary score as a JSON with a single key 'score' and no preamble or explanation.

Here is the retrieved document:
{document}

Here is the user question:
{question}""",
    input_variables=["question", "document"],
)

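Next we combine the retrieval grader prompt (retrieval_grader_prompt) with the LLM and a JSON output parser to create the retrieval grader chain:

from langchain_core.output_parsers import JsonOutputParser

retrieval_grader = retrieval_grader_prompt | model | JsonOutputParser()

The retrieval_grader chain is used to grade every document the system retrieves.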
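In the chain, JsonOutputParser turns the model's text into a dictionary. Models occasionally wrap the JSON in extra words or return an unexpected value, so it can help to think about what defensive parsing of a binary score looks like. The following is a minimal standalone sketch using only the standard library; `parse_binary_score` is a hypothetical helper, not part of LangChain.

```python
import json
import re
from typing import Optional


def parse_binary_score(raw: str) -> Optional[bool]:
    """Extract {"score": "yes"|"no"} from model text; None if it cannot be parsed."""
    # Grab the outermost {...} span so surrounding chatter is ignored
    match = re.search(r"\{.*\}", raw, flags=re.DOTALL)
    if match is None:
        return None
    try:
        payload = json.loads(match.group(0))
    except json.JSONDecodeError:
        return None
    if not isinstance(payload, dict):
        return None
    score = str(payload.get("score", "")).strip().lower()
    if score == "yes":
        return True
    if score == "no":
        return False
    return None


print(parse_binary_score('{"score": "yes"}'))
print(parse_binary_score('Sure! {"score": "No"}'))
print(parse_binary_score("no json here"))
```

Returning None for unparseable output lets the caller decide on a policy, for example treating the document as irrelevant or retrying the grader.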

Next, the grade_documents function processes every document retrieved from the vector store and filters out those judged irrelevant. Here is the code:

def grade_documents(state: Dict[str, Any]) -> Dict[str, Any]:
    print("---CHECK DOCUMENT RELEVANCE TO QUESTION---")
    question = state["question"]
    documents = state["documents"]
    filtered_docs = []
    # Iterate through each document and assess its relevance
    for doc in documents:
        score = retrieval_grader.invoke({"question": question, "document": doc})
        # Only keep documents marked as relevant
        if score["score"].lower() == "yes":
            print("---GRADE: DOCUMENT RELEVANT---")
            filtered_docs.append(doc)
        else:
            print("---GRADE: DOCUMENT NOT RELEVANT---")
    return {"documents": filtered_docs, "question": question}

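Key steps of the function:

- Input (state): The function takes the current state, which includes the user's question and the documents retrieved in the previous step.

- Grading: The retrieval grader is called for each document. The model assesses whether the document is relevant to the question and returns the score as JSON ("yes" for relevant, "no" for irrelevant).

- Filtering: Documents graded as relevant ("yes") are added to the filtered list. Irrelevant documents are discarded.

- Output: The function returns the filtered document list, which is passed along with the original question to the next step of the Self-RAG flow.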
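The filtering loop can be exercised without an LLM by swapping in a trivial grader. In the sketch below, `keyword_grader` is a hypothetical keyword-overlap stand-in for the LLM-based grader and `filter_documents` is a hypothetical helper; both exist only to show the control flow, and a keyword match is of course much cruder than what the model does.

```python
from typing import Callable, Dict, List


def keyword_grader(question: str, document: str) -> Dict[str, str]:
    """Hypothetical stand-in for the LLM grader: 'yes' if a longer question word occurs."""
    words = {w.strip("?.,!").lower() for w in question.split()}
    keywords = {w for w in words if len(w) > 4}  # skip short filler words
    hit = any(word in document.lower() for word in keywords)
    return {"score": "yes" if hit else "no"}


def filter_documents(
    question: str,
    documents: List[str],
    grader: Callable[[str, str], Dict[str, str]],
) -> List[str]:
    """Keep only the documents the grader scores as 'yes'."""
    kept = []
    for doc in documents:
        verdict = grader(question, doc)
        if verdict.get("score", "").lower() == "yes":
            kept.append(doc)
    return kept


docs = [
    "Cancer vaccines train the immune system to recognize tumor antigens.",
    "The rocket reached orbit after a nine-minute burn.",
]
kept = filter_documents("What is a cancer vaccine?", docs, keyword_grader)
print(kept)
```

Using `verdict.get("score", "")` instead of indexing means a malformed grader response drops the document rather than raising a KeyError.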

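Generation

Once the relevant chunks have been retrieved and graded, the next step in the Self-RAG flow is generating a response for the user. Here the system takes the user's question and the retrieved documents and produces an accurate, informative, well-structured response.

To do this we define a prompt template that sets clear rules for how to generate the response. The goal is to emulate a professional, knowledgeable assistant that answers accurately from the retrieved content without introducing anything fabricated.

Here is how we define the answer prompt: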

answer_prompt = PromptTemplate(
    template="""You are a scientific research assistant and engage in a professional and informative conversation with a person.

**RULES**
- Use a neutral, objective, and respectful tone.
- Never fabricate information. Use only the information provided in the context.
- Answer from the perspective of a neutral and independent researcher.
- Avoid phrases like "according to the website" or "based on the documentation" or "in the given context."
- Do not end an answer with "for further information contact..."
- Be precise, but feel free to be creative with your explanations while remaining professional.
- Use markdown formatting for your output, but do not use headings or titles.
- Do not ask follow-up questions.

Question: {question}

Context: {context}

Answer:""",
    input_variables=["question", "context"],
)

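With the prompt defined, we combine it with the LLM (Claude 3.5 Sonnet in this case) and a string output parser to create the retrieval-augmented generation (RAG) chain:

from langchain_core.output_parsers import StrOutputParser

rag_chain = answer_prompt | model | StrOutputParser()

Now we define the function that calls the RAG chain to generate a response: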

def generate(state: Dict[str, Any]) -> Dict[str, Any]:
    print("---GENERATE---")
    question = state["question"]
    documents = state["documents"]
    # Generate an answer using the retrieved documents as context
    answer = rag_chain.invoke(
        {"context": "\n\n".join(documents), "question": question}
    )
    return {"documents": documents, "question": question, "answer": answer}

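How the function works:

- Input: The function takes the current state, which contains the user's question and the documents from the previous steps (from the vector store or the web search).

- Build the context: The retrieved documents are joined into a single string, separated by blank lines ("\n\n"). This is the context for generating the response.

- Generate the response: rag_chain.invoke() is called with the question and the context, and the model produces an answer grounded in the content of the documents.

- Output: The function returns the generated answer together with the documents and the question.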
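The context-building step can be sketched on its own with plain string formatting. The example below joins chunks with blank lines, as the function above does, and additionally stops before a character budget is exceeded. The budget (`max_chars`) is an assumption added for illustration and is not part of the article's implementation; `build_context` and the shortened `PROMPT` string are likewise hypothetical.

```python
from typing import List

# Shortened stand-in for the answer prompt, for illustration only
PROMPT = "Question: {question}\n\nContext: {context}\n\nAnswer:"


def build_context(documents: List[str], max_chars: int = 2000) -> str:
    """Join chunks with blank lines, stopping before the character budget is exceeded."""
    parts: List[str] = []
    used = 0
    for doc in documents:
        extra = len(doc) + (2 if parts else 0)  # 2 accounts for the "\n\n" separator
        if used + extra > max_chars:
            break
        parts.append(doc)
        used += extra
    return "\n\n".join(parts)


chunks = ["Chunk one.", "Chunk two.", "Chunk three."]
context = build_context(chunks, max_chars=22)
prompt = PROMPT.format(question="What is Self-RAG?", context=context)
print(prompt)
```

With a budget of 22 characters only the first two chunks fit, so the third is left out of the prompt.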

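Hallucination grader

In language models, a hallucination is a confidently generated answer that is not supported by the facts in the retrieved data. This can lead to misleading answers, especially when the generated answer is not sufficiently backed by the provided documents. To address this, the hallucination grader evaluates the answer to make sure it is grounded in the facts and directly addresses the user's query. If the grader detects that the answer is irrelevant or contains hallucinations, a fallback is triggered: the query is sent to web search to retrieve more accurate information.

The hallucination grader prompt (hallucination_grader_prompt) is designed to assess whether the generated answer is fully supported by the retrieved documents. The model gives a binary score (yes or no) depending on whether the answer is grounded in the facts.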

hallucination_grader_prompt = PromptTemplate(
    template="""You are a grader assessing whether an answer is grounded in / supported by a set of facts. Give a binary score 'yes' or 'no' to indicate whether the answer to the question is grounded in / supported by a set of facts. Also, make sure that the answer actually addresses the question. Provide the binary score as a JSON with a single key 'score' and no preamble or explanation.

Here is the question:
{question}

Here are the facts:
{documents}

Here is the answer:
{answer}""",
    input_variables=["answer", "documents", "question"],
)

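With the hallucination grader prompt defined, we combine it with the model and a JSON output parser to create the hallucination grader chain. This chain assesses whether the generated answer is grounded in the facts.

hallucination_grader = hallucination_grader_prompt | model | JsonOutputParser()

Next, the grade_answer_v_documents_and_question function takes the generated response, checks whether the retrieved documents fully support it, and decides whether a further step such as a web search is needed.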

def grade_answer_v_documents_and_question(state: Dict[str, Any]) -> str:
    print("---CHECK HALLUCINATIONS---")
    # If a web search has already been performed, skip the hallucination check
    if state["web_search"] == "Yes":
        return "useful"
    # Otherwise grade the generated answer for hallucinations
    score = hallucination_grader.invoke(
        {"documents": state["documents"], "answer": state["answer"], "question": state["question"]}
    )
    print("hallu score", score)
    # If the answer is supported by the facts, mark it as useful
    if score["score"].lower() == "yes":
        return "useful"
    # If the answer is not supported (detected as a hallucination), route to web search
    print("---ANSWER NOT SUPPORTED, INITIATING WEB SEARCH---")
    return "websearch"

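Key steps of the function:

- Input (state): The function takes the state, which includes the user's question, the retrieved documents, and the generated response.

- Avoid redundant checks: If a web search has already been performed, the function skips the hallucination check to avoid a loop and returns "useful".

- Grade the response: If no web search has been performed, the hallucination grader assesses whether the generated answer is supported by the facts in the retrieved documents.

- Decision: If the response is grounded in the facts, the function returns "useful". If the answer is not supported by the documents (that is, it contains hallucinations or is irrelevant), the function returns "websearch", which routes the workflow to a web search to gather more information and improve the answer.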
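The decision logic above has three paths, and it can be checked in isolation by injecting stub graders. This is a minimal sketch: `route_after_generate`, `always_yes`, and `always_no` are hypothetical names, and the stubs replace the LLM-based hallucination grader.

```python
from typing import Any, Callable, Dict


def route_after_generate(
    state: Dict[str, Any],
    grader: Callable[[Dict[str, Any]], Dict[str, str]],
) -> str:
    """Return the label of the next step: 'useful' or 'websearch'."""
    # A web search already happened: accept the answer to avoid looping forever
    if state.get("web_search") == "Yes":
        return "useful"
    verdict = grader(state)
    return "useful" if verdict.get("score", "").lower() == "yes" else "websearch"


def always_yes(_state: Dict[str, Any]) -> Dict[str, str]:
    """Stub grader that always reports a grounded answer."""
    return {"score": "yes"}


def always_no(_state: Dict[str, Any]) -> Dict[str, str]:
    """Stub grader that always reports an unsupported answer."""
    return {"score": "no"}


base = {"question": "q", "answer": "a", "documents": [], "web_search": ""}
print(route_after_generate(base, always_yes))                           # useful
print(route_after_generate(base, always_no))                            # websearch
print(route_after_generate({**base, "web_search": "Yes"}, always_no))   # useful
```

The third call shows the loop guard: once the flag is set, the answer is accepted even though the stub grader would reject it.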

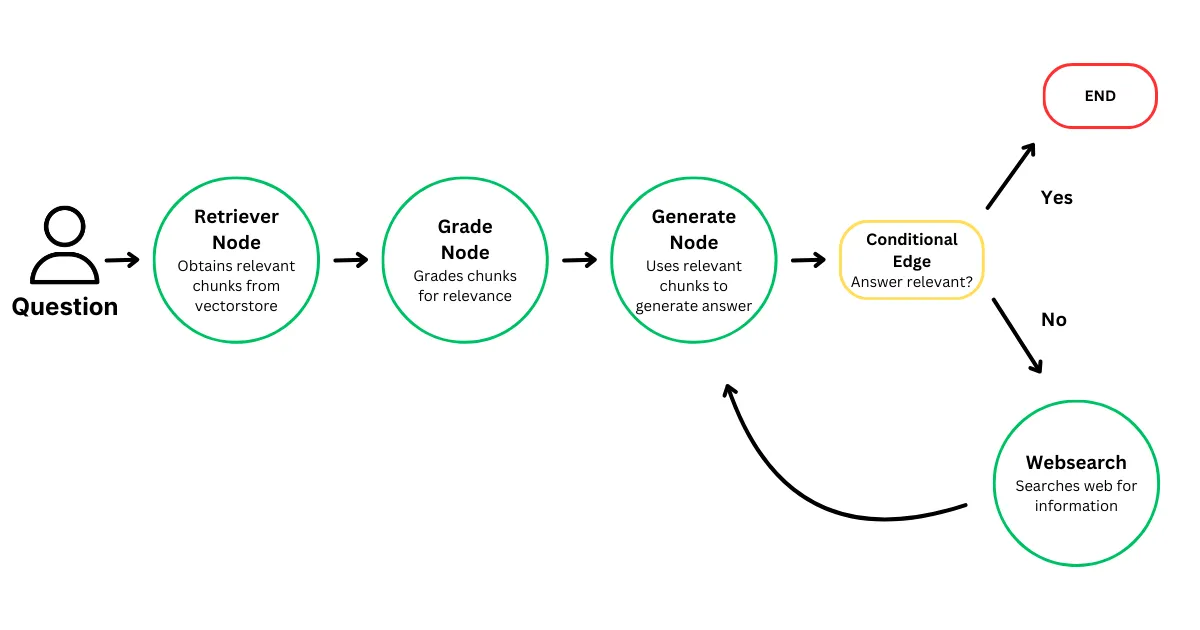

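Defining the AI agent graph

Now that we have defined the core components (retrieval, grading, generation, and response grading), it is time to assemble these building blocks into one coherent system. To manage the task flow we build the AI agent as a stateful graph. This allows dynamic decisions at each step, so the agent can adapt its actions to the input as it processes information. The graph is built from nodes (which represent tasks), edges (which define the logical flow between tasks), and conditional edges (which let the system redirect based on specific conditions).

State definition

The state stores the key parameters that persist throughout the agent's workflow. As the agent processes the user's question, retrieves relevant information, generates an answer, and grades it, these parameters are passed from node to node. The state contains the following parameters:

- question: The original question asked by the user.

- answer: The final generated answer to the user's question.

- web_search: A flag indicating whether a web search has been performed. It helps avoid unnecessary loops in the workflow.

- documents: The list of relevant text chunks obtained from the vector store or through web search.

Here is how we define the state: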

from typing import TypedDict

# Define the State
class GraphState(TypedDict):
    question: str
    answer: str
    web_search: str
    documents: list[str]

The state is maintained and updated while the agent processes a query, so the agent can remember earlier decisions and adapt dynamically.
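Each node returns a dictionary with only the keys it wants to change, and those keys are merged into the shared state. The sketch below mimics that merge in plain Python, assuming simple overwrite semantics for every key. `SketchState` and `apply_update` are hypothetical names, and this is an illustration of the idea rather than LangGraph's actual implementation.

```python
from typing import Any, Dict, List, TypedDict


class SketchState(TypedDict):
    question: str
    answer: str
    web_search: str
    documents: List[str]


def apply_update(state: SketchState, update: Dict[str, Any]) -> SketchState:
    """Return a new state where keys in `update` overwrite the old values."""
    merged = dict(state)
    merged.update(update)
    return SketchState(**merged)


state: SketchState = {
    "question": "What is a cancer vaccine?",
    "answer": "",
    "web_search": "",
    "documents": [],
}
# A retrieval-like node fills in documents; a generation-like node fills in the answer
state = apply_update(state, {"documents": ["chunk A", "chunk B"]})
state = apply_update(state, {"answer": "A draft answer."})
print(state["documents"], state["answer"], repr(state["web_search"]))
```

Keys that a node does not return, such as web_search here, keep their previous value.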

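Workflow definition

The workflow represents the full sequence of tasks (nodes) the agent performs and the transitions between them (edges). Each node represents a specific task (such as retrieving documents or generating an answer), and the edges define the logical connections between those tasks.

Nodes are the tasks or actions the agent performs. Here we define four key nodes:

- websearch: Runs a web search if the initial retrieval falls short or the generated answer is not supported.

- retrieve: Retrieves relevant documents from the vector store based on the user's query.

- grade_documents: Filters and grades the retrieved documents so that only relevant content is used.

- generate: Generates a response from the retrieved and graded documents.

Here is how we define the nodes and add them to the workflow: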

from langgraph.graph import END, START, StateGraph

# Define the LangGraph workflow
workflow = StateGraph(GraphState)

# Add Nodes
workflow.add_node("websearch", web_search)
workflow.add_node("retrieve", retrieve)
workflow.add_node("grade_documents", grade_documents)
workflow.add_node("generate", generate)

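Edges define how the nodes are connected and the order in which tasks run. In this workflow the edges describe the sequence of actions, starting with document retrieval, followed by grading and response generation, with a web search when needed.

Here is how we define and add the edges: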

# Add Edges
workflow.add_edge(START, "retrieve")
workflow.add_edge("retrieve", "grade_documents")
workflow.add_edge("grade_documents", "generate")
workflow.add_edge("websearch", "generate")

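This setup ensures the agent first retrieves documents, grades them, and generates a response, and runs a web search to supplement the information when necessary.

Conditional edges introduce decision points into the workflow, letting the system choose the next node dynamically based on the output of the previous one. In this example we add a conditional edge after the generation step. If the generated answer is judged irrelevant or unsupported (detected as a hallucination), the agent starts a web search. If the answer is useful and grounded in the facts, the workflow ends.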

# Add a conditional edge: after generation, decide whether the answer is supported; if not, run a web search
workflow.add_conditional_edges(
    "generate",
    grade_answer_v_documents_and_question,
    {
        # Keys must match the strings returned by the grading function
        "websearch": "websearch",
        "useful": END,
    },
)

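By adding an extra web search step when needed, this conditional edge helps keep the agent's responses accurate and reliable.

With all nodes, edges, and conditional logic defined, we compile the workflow. This step wires the whole flow together and makes it ready to run: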

# Compile the workflow

compiled_workflow = workflow.compile()

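Once compiled, the agent moves through the retrieval, grading, and generation stages, and adapts dynamically by triggering a web search when it encounters an unsupported answer.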
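To see how nodes, edges, and a conditional edge interact, the control flow can be simulated in a few lines of plain Python with stub nodes. This is a rough sketch of the idea and not LangGraph itself: the `stream` generator, the `END` sentinel, and all stub node bodies are made up for illustration, and the stub router simply sends the first answer to web search.

```python
from typing import Any, Callable, Dict, Iterator, Tuple

State = Dict[str, Any]
END = "__end__"  # local sentinel for this sketch, not imported from LangGraph


def retrieve(state: State) -> State:
    return {"documents": ["irrelevant chunk"]}


def grade_documents(state: State) -> State:
    return {"documents": []}  # pretend every chunk was filtered out


def generate(state: State) -> State:
    source = "web" if state["web_search"] == "Yes" else "memory"
    return {"answer": f"answer from {source}"}


def websearch(state: State) -> State:
    return {"documents": ["web snippet"], "web_search": "Yes"}


def route(state: State) -> str:
    # Stub router: accept the answer only after a web search has run
    return "useful" if state["web_search"] == "Yes" else "websearch"


NODES: Dict[str, Callable[[State], State]] = {
    "retrieve": retrieve,
    "grade_documents": grade_documents,
    "generate": generate,
    "websearch": websearch,
}
EDGES = {"retrieve": "grade_documents", "grade_documents": "generate", "websearch": "generate"}
CONDITIONAL: Dict[str, Tuple[Callable[[State], str], Dict[str, str]]] = {
    "generate": (route, {"websearch": "websearch", "useful": END}),
}


def stream(state: State, start: str = "retrieve", max_steps: int = 10) -> Iterator[Dict[str, State]]:
    """Run nodes in order, yielding {node_name: update} after each step."""
    current = start
    for _ in range(max_steps):
        if current == END:
            return
        update = NODES[current](state)
        state.update(update)  # merge the node's partial update into the state
        yield {current: update}
        if current in CONDITIONAL:
            router, mapping = CONDITIONAL[current]
            current = mapping[router(state)]  # the label must be a key of the mapping
        else:
            current = EDGES[current]


initial = {"question": "Who is Elon Musk?", "documents": [], "answer": "", "web_search": ""}
visited = [name for step in stream(initial) for name in step]
print(visited)
```

The lookup `mapping[router(state)]` fails with a KeyError if the router returns a label that is not a key of the mapping, which is why the returned labels and the mapping keys have to agree.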

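Running the AI agent

To evaluate the agent, we test it with a relevant question and an unrelated one. This shows how the system handles queries for which information exists and queries for which the vector store may contain no relevant data.

Test scenario 1: relevant question

Question: "What is a cancer vaccine?"

We start with a question for which we know the vector store contains relevant information. This verifies the retrieval, grading, and generation steps.

Here is how we initialize the state: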

# User question
question = 'What is a cancer vaccine?'

# Define the initial state
state = {
    "question": question,
    "documents": [],
    "answer": "",
    "web_search": "",
}

outputs = []
for output in compiled_workflow.stream(state):
    print(output)
    for key, value in output.items():
        outputs.append({key: value})
print('outputs', outputs)

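The agent runs the compiled workflow step by step: it retrieves relevant documents from the vector store, grades their relevance, and generates an answer from the most relevant information. The output of each step is printed: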

---RETRIEVE---

{'retrieve': {'question': 'what is a cancer vaccine?', 'documents': ['Tllelized miniaturized production lines and significantly enhanced the overall efficiency and accessibility of cancer vaccines for clinical applications.']}}

---CHECK DOCUMENT RELEVANCE TO QUESTION---

---GRADE: DOCUMENT RELEVANT---

---GRADE: DOCUMENT RELEVANT---

---GRADE: DOCUMENT RELEVANT---

---GRADE: DOCUMENT RELEVANT---

{'grade_documents': {'question': 'what is a cancer vaccine?', 'documents': ['n of scaled parallelized miniaturized production lines and significantly enhanced the overall efficiency and accessibility of cancer vaccines for clinical applications.']}}

---GENERATE---

---CHECK HALLUCINATIONS---

hallu score {'score': 'yes'}

{'generate': {'question': 'what is a cancer vaccine?', 'answer': "Detailed answer", 'documents': [Detailed documents]}

outputs [{'retrieve': {'question': 'what is a cancer vaccine?', 'documents': []}

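1. Retrieval:

The agent successfully retrieved several documents from the vector store containing detailed information about cancer vaccines.

2. Relevance grading:

The system graded the retrieved documents and found all of them relevant to the cancer vaccine query, so nothing was filtered out and every chunk was passed on to generation.

3. Generation:

The agent used the graded documents to generate a detailed answer explaining cancer vaccines.

4. Hallucination check:

The hallucination grader confirmed (score "yes") that the generated answer is sufficiently supported by the retrieved documents, so the workflow ended without a web search.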

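Test scenario 2: unrelated question

Question: "Who is Elon Musk?"

In this test we ask the agent an unrelated question to see how it handles a case where the vector store may contain no relevant data. This exercises the system's fallback mechanism, including starting a web search when necessary.

Here is how we initialize the state for this scenario: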

# User question
question = 'Who is Elon Musk?'

# Define the initial state
state = {
    "question": question,
    "documents": [],
    "answer": "",
    "web_search": "",
}

outputs = []
for output in compiled_workflow.stream(state):
    print(output)
    for key, value in output.items():
        outputs.append({key: value})
print('outputs', outputs)

Output:

---RETRIEVE---

{'retrieve': {'question': 'who is elon musk?', 'documents': ['']}

---GRADE: DOCUMENT NOT RELEVANT---

---GRADE: DOCUMENT NOT RELEVANT---

---GRADE: DOCUMENT NOT RELEVANT---

---GRADE: DOCUMENT NOT RELEVANT---

{'grade_documents': {'question': 'who is elon musk?', 'documents': []}}

---GENERATE---

---CHECK HALLUCINATIONS---

hallu score {'score': 'no'}

{'generate': {'question': 'who is elon musk?', 'answer': "Elon Musk is a prominent entrepreneur, business magnate, and investor known for his involvement in several high-profile technology companies. He is the founder, CEO, and chief engineer of SpaceX, a private space exploration and transportation company. Musk also serves as the CEO and product architect of Tesla, Inc., a leading electric vehicle and clean energy company.\n\nBorn in South Africa in 1971, Musk demonstrated an early aptitude for technology and entrepreneurship. He co-founded PayPal, an online payment company, which was later acquired by eBay. His subsequent ventures have focused on addressing global challenges in areas such as sustainable energy, space exploration, and artificial intelligence.\n\nSome of Musk's other notable projects include:\n\n- The Boring Company, which aims to revolutionize transportation through underground tunnel systems\n- Neuralink, a neurotechnology company developing brain-computer interfaces\n- OpenAI, an artificial intelligence research laboratory (though he has since stepped down from the board)\n\nMusk is known for his ambitious goals, innovative thinking, and sometimes controversial public statements. His work has significantly influenced the fields of electric vehicles, renewable energy, and space technology, earning him both praise and criticism from various sectors of society and industry.", 'documents': []}}

---WEB SEARCH---

{'websearch': {'question': 'who is elon musk?', 'web_search': 'Yes', 'documents': ["Elon Musk, the South African-born entrepreneur widely known as the founder of SpaceX and Tesla, is renowned for his advances in electric vehicles and space\xa0...Zip2 · Tesla, Inc. · Electronic game · PayPal\n“Elon Musk Tried to Pitch the Head of the Yellow Pages Before the Internet Boom: ‘He Threw the Book at Me’.”\nCompaq Computer, via U.S. Securities and Exchange Commission. SpaceX\nMusk used most of the proceeds from his PayPal stake to found Space Exploration Technologies Corp., the rocket's developer commonly known as SpaceX. By his own account, Musk spent $100 million to found SpaceX in 2002.\n Musk subsequently settled a Securities and Exchange Commission (SEC) complaint alleging he knowingly misled investors with the tweet by paying a $20 million fine along with the same penalty for Tesla, and agreeing to let Tesla’s lawyers approve tweets with material corporate information before posting.\n Tesla\nMusk became involved with the electric cars venture as an early investor in 2004, ultimately contributing about $6.3 million, to begin with, and joined the team, including engineer Martin Eberhard, to help run a company then known as Tesla Motors. The company’s board adopted a poison pill provision to discourage Musk from accumulating an even larger stake, but they ultimately accepted Musk’s offer after he disclosed $46.5 billion in committed financing for the deal in a securities filing.\n3 hours ago · Tesla CEO Elon Musk reveals details and timing of Cybercab production · Comments16.Duration: 3:00Posted: 3 hours ago\nAs the co-founder and CEO of Tesla, Elon leads all product design, engineering and global manufacturing of the company's electric vehicles, battery products and\xa0...\nElon is Technoking of Tesla and has served as our Chief Executive Officer since October 2008 and as a member of the Board since April 2004."]}}

---GENERATE---

---CHECK HALLUCINATIONS---

{'generate': {'question': 'who is elon musk?', 'answer': "Elon Musk is a prominent entrepreneur and business magnate known for his involvement in several high-profile technology companies. He is most notably recognized as the founder of SpaceX, a private space exploration company, and the CEO of Tesla, an electric vehicle and clean energy company.\n\nBorn in South Africa, Musk has made significant contributions to various industries:\n\n1. Space exploration: Founded SpaceX in 2002 with the goal of reducing space transportation costs and enabling the colonization of Mars.\n\n2. Electric vehicles: Joined Tesla Motors in 2004 as an early investor and became CEO in 2008, leading the company's efforts in developing and manufacturing electric cars.\n\n3. Online payments: Co-founded X.com, which later merged with Confinity to become PayPal. Musk made a substantial profit when eBay acquired PayPal in 2002.\n\n4. Sustainable energy: Through Tesla, Musk has been involved in developing solar energy products and battery storage systems.\n\nMusk is known for his ambitious goals and innovative approaches to technology and business. However, he has also faced controversy, including a settlement with the Securities and Exchange Commission over misleading tweets about Tesla's stock.\n\nHis leadership style and business decisions have attracted both praise and criticism, making him a polarizing figure in the tech industry and beyond. Musk's companies have had a significant impact on their respective industries, pushing for advancements in electric vehicles, renewable energy, and space exploration.", 'documents': ["Elon Musk, the South African-born entrepreneur widely known as the founder of SpaceX and Tesla, is renowned for his advances in electric vehicles and space\xa0...Zip2 · Tesla, Inc. · Electronic game · PayPal\n“Elon Musk Tried to Pitch the Head of the Yellow Pages Before the Internet Boom: ‘He Threw the Book at Me’.”\nCompaq Computer, via U.S. 
Securities and Exchange Commission. SpaceX\nMusk used most of the proceeds from his PayPal stake to found Space Exploration Technologies Corp., the rocket's developer commonly known as SpaceX. By his own account, Musk spent $100 million to found SpaceX in 2002.\n Musk subsequently settled a Securities and Exchange Commission (SEC) complaint alleging he knowingly misled investors with the tweet by paying a $20 million fine along with the same penalty for Tesla, and agreeing to let Tesla’s lawyers approve tweets with material corporate information before posting.\n Tesla\nMusk became involved with the electric cars venture as an early investor in 2004, ultimately contributing about $6.3 million, to begin with, and joined the team, including engineer Martin Eberhard, to help run a company then known as Tesla Motors. The company’s board adopted a poison pill provision to discourage Musk from accumulating an even larger stake, but they ultimately accepted Musk’s offer after he disclosed $46.5 billion in committed financing for the deal in a securities filing.\n3 hours ago · Tesla CEO Elon Musk reveals details and timing of Cybercab production · Comments16.Duration: 3:00Posted: 3 hours ago\nAs the co-founder and CEO of Tesla, Elon leads all product design, engineering and global manufacturing of the company's electric vehicles, battery products and\xa0...\nElon is Technoking of Tesla and has served as our Chief Executive Officer since October 2008 and as a member of the Board since April 2004."]}}

outputs [{'retrieve': {'question': 'who is elon musk?', 'documents': ['']}

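1. Retrieval:

The documents the system retrieved are entirely unrelated to the question "Who is Elon Musk?". They focus on technical details of mRNA cancer vaccines and do not touch on the query at all.

2. Relevance grading:

All retrieved documents were correctly graded as irrelevant, which shows the system can recognize when the retrieved information does not match the user's query.

3. Generation:

Although no relevant documents remained, the agent still generated a coherent answer about Elon Musk. With an empty context, that answer can only come from the model's own prior knowledge rather than from retrieved documents.

4. Hallucination check:

The hallucination grader flagged the generated answer as not grounded in the retrieved documents (score "no"), meaning the information was not supported by the retrieval step.

5. Web search:

To resolve this, a web search retrieved relevant information about Elon Musk from external sources, and the answer was regenerated with the web results as context.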

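Conclusion

In this article we explored Self-RAG, an approach that aims to make AI systems more accurate and reliable by combining self-reflection and self-correction. AI is powerful, but it sometimes produces "hallucinations": answers that sound convincing but have no real data behind them. To counter this, Self-RAG layers several checks, from document retrieval through relevance grading to hallucination detection, so that the AI provides fact-based answers.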