Leveraging Small LLMs: A Detailed Guide to Optimizing RAG Strategies

Introduction

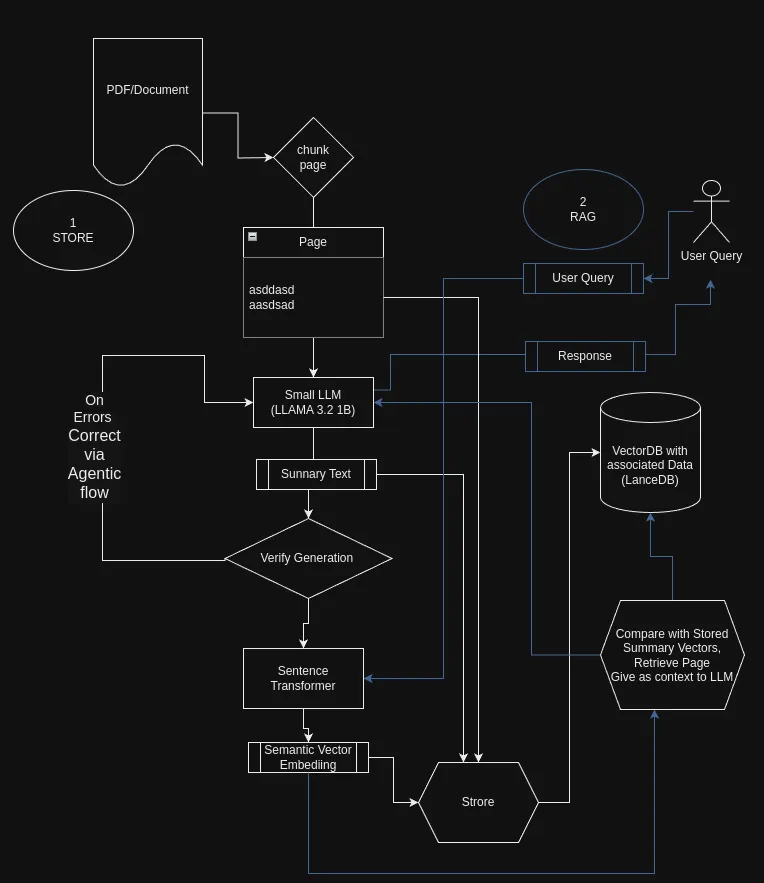

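Retrieval-Augmented Generation (RAG) is a paradigm that enhances language models by integrating them with external knowledge bases. Large LLMs such as GPT-4 have demonstrated remarkable capabilities, but they are also computationally expensive. Small LLMs offer a more resource-efficient alternative, particularly for tasks such as text summarization and keyword extraction, which are essential to indexing and retrieval in RAG systems.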

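In this article, we demonstrate how to use a small LLM to:

- Extract and summarize text from PDF documents.

- Generate embeddings for the summaries and keywords.

- Store the data efficiently in a LanceDB database.

- Use it for effective RAG.

- Use an agentic workflow to let the LLM correct its own output.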

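Using a smaller LLM can greatly reduce the cost of running such transformations over large datasets while delivering benefits similar to those of larger models on simpler tasks, and it can easily be hosted on-premises or in the cloud at minimal cost.

We will use the LLAMA 3.2 1B (1 billion parameter) model, one of the smallest state-of-the-art LLMs at the time of writing.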

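The Problem with Embedding Raw Text

Before diving into the implementation, it is worth understanding why embedding the raw text of documents is problematic in a RAG system.

Ineffective Context Capture

Embedding the raw text of a page without summarizing it often produces embeddings that suffer from:

- High-dimensional noise: raw text can contain irrelevant information, formatting artifacts, or boilerplate language that does not help capture the core content.

- Diluted key concepts: important concepts can be buried in irrelevant text, so the embedding represents the key information less well.

Inefficient Retrieval

When the embeddings do not accurately represent the key concepts in the text, the retrieval system may fail to:

- Match user queries effectively: the document embeddings may not align with the query embedding, so relevant documents are not retrieved.

- Provide the right context: even when a document is retrieved, noise in its embedding may mean it does not supply the precise information the user needs.

The Solution

Summarizing the text before generating embeddings addresses these problems:

- Distilling key information: the summary keeps the main points and keywords and removes unnecessary detail.

- Improving embedding quality: embeddings generated from summaries are more focused and more representative of the main content, which improves retrieval accuracy.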
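To make the dilution argument concrete, here is a toy sketch that uses word-count vectors as a stand-in for a real embedding model. This is an assumption purely for illustration: the article itself uses `all-MiniLM-L6-v2`, and the page text and summary below are made up. The same query scores higher against the short summary than against a page where the relevant sentence is buried among boilerplate.

```python
import math
import re
from collections import Counter


def bow_vector(text):
    """Toy 'embedding': lowercase word counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


query = "cannot manage new devices"

# Made-up raw page: one relevant sentence surrounded by headers, footers and boilerplate
raw_page = (
    "Copyright notice. All rights reserved. Page 113. Table of contents continued. "
    "See also the glossary and the index at the end of this guide. "
    "Troubleshooting: the server cannot manage new devices after discovery. "
    "Printed copies are uncontrolled."
)
# Made-up summary of the same page: only the key content survives
summary = "Troubleshooting steps when the server cannot manage newly discovered devices."

print(f"raw page vs query: {cosine(bow_vector(raw_page), bow_vector(query)):.3f}")
print(f"summary  vs query: {cosine(bow_vector(summary), bow_vector(query)):.3f}")
```

Dense sentence-transformer embeddings behave differently in detail, but the intuition carries over: unrelated content tends to pull the page vector away from the query.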

Prerequisites

Before we begin, make sure you have the following installed:

- Python 3.8 or later

- PyTorch

- Transformers

- Sentence Transformers

- PyMuPDF (for PDF processing)

- LanceDB

- A laptop with a GPU with at least 6 GB of memory, or Colab (a T4 GPU is sufficient), or similar

Step 1: Set Up the Environment

First, import all the necessary libraries and set up logging for debugging and tracking.

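```python
import logging
import pandas as pd
import fitz  # PyMuPDF
from transformers import AutoModelForCausalLM, AutoTokenizer
import torch
import lancedb
from sentence_transformers import SentenceTransformer
import json
import pyarrow as pa
import numpy as np
import re

# Logger used throughout the pipeline (same "HH:MM:SS LEVEL:message" layout as the log excerpts below)
logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s:%(message)s", datefmt="%H:%M:%S")
log = logging.getLogger(__name__)
```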

Step 2: Define Helper Functions

Creating the Prompt

We define a function that builds a prompt compatible with the LLAMA 3.2 chat format.

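```python
def create_prompt(question, system_message="You are a helpful assistant for summarizing text and result in JSON format"):
    """
    Create a prompt as per LLAMA 3.2 format.
    The optional system_message lets later steps (e.g. answer generation) use a different instruction.
    """
    # 'assistant1231231222' is used as the assistant role name so that it acts as a unique
    # marker: process_prompt() splits the decoded output on it to isolate the model's reply.
    prompt_template = f'''<|begin_of_text|><|start_header_id|>system<|end_header_id|>

{system_message}<|eot_id|><|start_header_id|>user<|end_header_id|>

{question}<|eot_id|><|start_header_id|>assistant1231231222<|end_header_id|>

'''
    return prompt_template
```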

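Processing the Prompt

This function runs the prompt through the tokenizer and the model and extracts the assistant's reply. We set the temperature to 0.1 to make the model less creative, which helps reduce hallucinations.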

```python
def process_prompt(prompt, model, tokenizer, device, max_length=500, temperature=0.1):
    """
    Processes a prompt, generates a response, and extracts the assistant's reply.
    """
    # The prompt already starts with <|begin_of_text|>, so don't let the tokenizer add a second one
    prompt_encoded = tokenizer(prompt, truncation=True, padding=False,
                               add_special_tokens=False, return_tensors="pt")
    model.eval()
    output = model.generate(
        input_ids=prompt_encoded.input_ids.to(device),
        attention_mask=prompt_encoded.attention_mask.to(device),
        max_new_tokens=max_length,
        do_sample=True,           # generate() ignores temperature unless sampling is enabled
        temperature=temperature,  # Low temperature = more deterministic output
    )
    answer = tokenizer.decode(output[0], skip_special_tokens=True)

    # Keep only the text after the unique assistant marker set in create_prompt()
    parts = answer.split("assistant1231231222", 1)
    if len(parts) > 1:
        return parts[1].strip()

    print("The assistant's response was not found.")
    return "NONE"
```
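Why does a low temperature make the output more deterministic? Temperature divides the model's next-token scores (logits) before the softmax that turns them into probabilities. A minimal sketch with three made-up logits:

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw next-token scores into probabilities at a given temperature."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up scores for three candidate next tokens
logits = [2.0, 1.5, 0.5]
for t in (1.0, 0.1):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

With these scores the top token gets roughly 55% of the probability at temperature 1.0 but over 99% at 0.1, so sampling at 0.1 almost always picks the same token.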

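Step 3: Load the Model

We use the LLAMA 3.2 1B Instruct model for summarization. To reduce memory usage we load it in bfloat16 (falling back to float16 on GPUs without bfloat16 support), and run it on an NVIDIA laptop GPU under Linux (NVIDIA GeForce RTX 3060 6 GB / driver NVIDIA-SMI 555.58.02 / Cuda compilation tools, release 12.5, V12.5.40).

For production use, a better option is to host the model with vLLM or ExLlamaV2.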

```python
model_name_long = "meta-llama/Llama-3.2-1B-Instruct"

tokenizer = AutoTokenizer.from_pretrained(model_name_long)
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
log.info(f"Loading the model {model_name_long}")

# Choose the dtype: bfloat16 on GPUs with compute capability >= 8 (Ampere or newer),
# float16 on older GPUs, float32 on CPU
if torch.cuda.is_available():
    major, _ = torch.cuda.get_device_capability()
    if major >= 8:
        log.info("Your GPU supports bfloat16, loading the model with bf16")
        torch_dtype = torch.bfloat16
    else:
        torch_dtype = torch.float16
    device_map = {"": 0}  # Load the whole model on GPU 0
else:
    torch_dtype = torch.float32
    device_map = None

# Load the model
model = AutoModelForCausalLM.from_pretrained(
    model_name_long,
    torch_dtype=torch_dtype,
    device_map=device_map,
)
if device_map is None:
    model.to(device)

log.info(f"Model loaded with torch_dtype={torch_dtype}")
```

Step 4: Read and Process the PDF Document

We extract the text from every page of the PDF document.

```python
file_path = './data/troubleshooting.pdf'
dict_pages = {}

# Open the PDF file and extract the text of each page
with fitz.open(file_path) as pdf_document:
    for page_number in range(pdf_document.page_count):
        page = pdf_document.load_page(page_number)
        page_text = page.get_text()
        dict_pages[page_number] = page_text
        print(f"Processed PDF page {page_number + 1}")
```

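Step 5: Set Up LanceDB and Sentence Transformers

We initialize the SentenceTransformer model used to generate the embeddings and set up LanceDB to store the data, using a PyArrow-based schema for the LanceDB table.

Note that the keywords are not used for retrieval yet, but they can support hybrid search (vector similarity search combined with text search) if needed.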

```python
# Initialize the SentenceTransformer model (all-MiniLM-L6-v2 produces 384-dimensional embeddings)
sentence_model = SentenceTransformer('all-MiniLM-L6-v2')

# Connect to LanceDB
db = lancedb.connect('./data/my_lancedb')

# Define the schema using PyArrow
schema = pa.schema([
    pa.field("page_number", pa.int64()),
    pa.field("original_content", pa.string()),
    pa.field("summary", pa.string()),
    pa.field("keywords", pa.string()),
    pa.field("vectorS", pa.list_(pa.float32(), 384)),  # Summary embedding (size 384)
    pa.field("vectorK", pa.list_(pa.float32(), 384)),  # Keywords embedding (size 384)
])

# Create the table, overwriting any existing table with the same name
table = db.create_table('summaries', schema=schema, mode='overwrite')
```
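The note above mentions that the stored keywords could support hybrid search. One common way to merge two result lists is reciprocal rank fusion (RRF); this is a swapped-in technique that the article itself does not implement. The sketch assumes you already have page numbers ranked by a search over `vectorS` and by a second search (over `vectorK`, or a text search over `keywords`); the function name and page numbers are illustrative.

```python
def reciprocal_rank_fusion(ranked_lists, k=60):
    """Merge several ranked lists of page numbers into a single ranking.

    Each page scores the sum of 1 / (k + rank) over every list it appears in
    (rank starts at 1), so pages that rank well in several lists rise to the top.
    k = 60 is the constant commonly used with RRF.
    """
    scores = {}
    for ranking in ranked_lists:
        for rank, page in enumerate(ranking, start=1):
            scores[page] = scores.get(page, 0.0) + 1.0 / (k + rank)
    # Highest fused score first
    return sorted(scores, key=scores.get, reverse=True)


# Illustrative page numbers: one list from the summary vectors, one from the keywords
summary_hits = [112, 113, 116]
keyword_hits = [113, 116, 120]
print(reciprocal_rank_fusion([summary_hits, keyword_hits]))
```

Here page 113 comes out on top because both searches rank it highly, even though it is only second in the summary list. RRF only needs ranks, so it avoids having to put vector distances and text-search scores on a common scale.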

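Step 6: Summarize and Store the Data

We loop through every page, generate a summary and keywords, and store them in the database together with their embeddings.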

```python
# Loop through each page in the PDF
for page_number, text in dict_pages.items():
    question = f"""For the given passage, provide a long summary about it, incorporating all the main keywords in the passage.
Format should be in JSON format like below:
{{
    "summary": <text summary>,
    "keywords": <a comma-separated list of main keywords and acronyms that appear in the passage>
}}
Make sure that JSON fields have double quotes and use the correct closing delimiters.
Passage: {text}"""

    prompt = create_prompt(question)
    response = process_prompt(prompt, model, tokenizer, device)

    # Error handling for JSON decoding: ask the LLM to fix its own output once
    try:
        summary_json = json.loads(response)
    except json.decoder.JSONDecodeError as e:
        exception_msg = str(e)
        question = f"""Correct the following JSON {response} which has {exception_msg} to proper JSON format. Output only JSON."""
        log.warning(f"{exception_msg} for {response}")
        prompt = create_prompt(question)
        response = process_prompt(prompt, model, tokenizer, device)
        log.warning(f"Corrected '{response}'")
        try:
            summary_json = json.loads(response)
        except Exception as e:
            log.error(f"Failed to parse JSON: '{e}' for '{response}'")
            continue

    # The model may return the keywords as a list or as one comma-separated string
    raw_keywords = summary_json.get('keywords', [])
    keywords = raw_keywords if isinstance(raw_keywords, str) else ', '.join(map(str, raw_keywords))

    # Generate embeddings
    vectorS = sentence_model.encode(summary_json['summary'])
    vectorK = sentence_model.encode(keywords)

    # Store the data in LanceDB
    table.add([{
        "page_number": int(page_number),
        "original_content": text,
        "summary": summary_json['summary'],
        "keywords": keywords,
        "vectorS": vectorS.tolist(),
        "vectorK": vectorK.tolist(),
    }])
    print(f"Data for page {page_number} stored successfully.")
```

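Using the LLM to Correct Its Own Output

When generating summaries and extracting keywords, the LLM may sometimes produce output that does not match the expected format, such as malformed JSON.

We can use the LLM itself to correct such output by prompting it to fix the error, as in the code above. Here is the same loop with more explicit format instructions in both prompts: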

```python
# Use the small LLAMA 3.2 1B model to create the summary
for page_number, text in dict_pages.items():
    question = f"""For the given passage, provide a long summary about it, incorporating all the main keywords in the passage.
Format should be in JSON format like below:
{{
    "summary": <text summary> example "Some Summary text",
    "keywords": <a comma separated list of main keywords and acronyms that appear in the passage> example ["keyword1","keyword2"]
}}
Make sure that JSON fields have double quotes, e.g., instead of 'summary' use "summary", and use the closing and ending delimiters.
Passage: {text}"""

    prompt = create_prompt(question)
    response = process_prompt(prompt, model, tokenizer, device)

    try:
        summary_json = json.loads(response)
    except json.decoder.JSONDecodeError as e:
        exception_msg = str(e)
        # Use the LLM to correct its own output
        question = f"""Correct the following JSON {response} which has {exception_msg} to proper JSON format. Output only the corrected JSON.
Format should be in JSON format like below:
{{
    "summary": <text summary> example "Some Summary text",
    "keywords": <a comma separated list of keywords and acronyms that appear in the passage> example ["keyword1","keyword2"]
}}"""
        log.warning(f"{exception_msg} for {response}")
        prompt = create_prompt(question)
        response = process_prompt(prompt, model, tokenizer, device)
        log.warning(f"Corrected '{response}'")

        # Try parsing the corrected JSON
        try:
            summary_json = json.loads(response)
        except json.decoder.JSONDecodeError as e:
            log.error(f"Failed to parse corrected JSON: '{e}' for '{response}'")
            continue

    # ...then embed and store summary_json as in Step 6
```

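In this code, if the LLM's initial output cannot be parsed as JSON, we prompt the LLM again to correct the JSON. This self-correction pattern makes the pipeline more robust.

Suppose the LLM generates the following malformed JSON: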

```json
{
    'summary': 'This page explains the installation steps for the product.',
    'keywords': ['installation', 'setup', 'product']
}
```

Parsing this JSON fails because it uses single quotes instead of double quotes. We catch the error and prompt the LLM to correct it:

```python
exception_msg = "Expecting property name enclosed in double quotes"
question = f"""Correct the following JSON {response} which has {exception_msg} to proper JSON format. Output only the corrected JSON."""
```

The LLM then returns the corrected JSON:

```json
{
    "summary": "This page explains the installation steps for the product.",
    "keywords": ["installation", "setup", "product"]
}
```

By using the LLM to correct its own output, we make it much more likely that the data passed to downstream processing is correctly formatted; a page whose output still cannot be parsed is logged and skipped.
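The error message used in the correction prompt comes straight from Python's `json` module, including the line and column of the problem. A small self-contained sketch reproduces it for the example above and builds the same correction prompt; no model is called here, and the "corrected" reply is simply written out by hand.

```python
import json

# The malformed reply from the example: single quotes are not valid JSON
response = """{
    'summary': 'This page explains the installation steps for the product.',
    'keywords': ['installation', 'setup', 'product']
}"""

try:
    summary_json = json.loads(response)
except json.JSONDecodeError as e:
    # str(e) also includes the position, e.g. "...: line 2 column 5 (char 6)"
    exception_msg = str(e)
    question = (
        f"Correct the following JSON {response} which has {exception_msg} "
        "to proper JSON format. Output only the corrected JSON."
    )
    print(question)

# What a successful correction looks like: the same content with double quotes
corrected = """{
    "summary": "This page explains the installation steps for the product.",
    "keywords": ["installation", "setup", "product"]
}"""
summary_json = json.loads(corrected)
print(summary_json["keywords"])
```

Passing the exact parser message (rather than a generic "fix this") gives the model a concrete hint about what went wrong and where.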

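Extending Self-Correction with LLM Agents

The pattern of using the LLM to correct its own output can be extended and automated with LLM agents. An LLM agent can:

- Handle errors automatically: detect errors and decide on its own how to correct them, without explicit instructions.

- Improve efficiency: reduce the need for manual intervention or additional error-correction code.

- Increase robustness: learn from past errors (for example, through logged corrections) to improve future outputs.

An LLM agent acts as an intermediary that manages the flow of information and handles exceptions intelligently. It can be designed to:

- Parse the output and validate its format.

- Re-prompt the LLM with refined instructions when it encounters an error.

- Log errors and corrections for future reference and model fine-tuning.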
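The first two responsibilities, parsing and validating the output and producing an error message to re-prompt with, can be sketched as a plain function. The name `parse_and_validate` is hypothetical, and the required fields follow the `summary`/`keywords` schema used in this article. Accepting keywords either as a list or as a comma-separated string is an assumption, made because the prompts above ask for both forms.

```python
import json


def parse_and_validate(response):
    """Parse an LLM reply and check it has the fields the pipeline expects.

    Returns (data, None) on success or (None, error_message) on failure, so the
    error message can be fed back into a correction prompt.
    """
    try:
        data = json.loads(response)
    except json.JSONDecodeError as e:
        return None, str(e)
    if not isinstance(data, dict):
        return None, "Top-level JSON value must be an object"
    if not isinstance(data.get("summary"), str) or not data["summary"].strip():
        return None, 'Missing or empty "summary" string'
    keywords = data.get("keywords")
    if isinstance(keywords, str):
        # Accept a comma-separated string and normalize it to a list
        keywords = [k.strip() for k in keywords.split(",") if k.strip()]
    if not isinstance(keywords, list) or not keywords:
        return None, 'Missing or empty "keywords" list'
    data["keywords"] = [str(k) for k in keywords]
    return data, None
```

Valid JSON with a missing field is still rejected, which a bare `json.loads` check would not catch.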

Approximate implementation:

Instead of manually catching exceptions and re-prompting, an LLM agent can encapsulate this logic:

```python
def generate_summary_with_agent(text):
    # LLMAgent is a hypothetical class (not part of any library) that wraps
    # generation, validation, re-prompting and correction
    agent = LLMAgent(model, tokenizer, device)
    question = f"""For the given passage, provide a summary and keywords in proper JSON format.
Passage: {text}"""
    prompt = create_prompt(question)
    response = agent.process_and_correct(prompt)
    return response
```

The LLMAgent class would handle the initial processing, error detection, re-prompting, and correction internally.
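`LLMAgent` is not a library class, and the article does not define it, so here is one possible minimal sketch. Unlike the call above, this hypothetical constructor takes a generation callable instead of `(model, tokenizer, device)`, which keeps the class independent of the model and easy to test. `make_prompt` wraps each correction request in the chat template, and every failed attempt is recorded for later review or fine-tuning.

```python
import json
import logging

logger = logging.getLogger(__name__)


class LLMAgent:
    """Hypothetical sketch: generate, check the reply is valid JSON, re-prompt on failure."""

    def __init__(self, generate, make_prompt=lambda q: q, max_retries=2):
        self.generate = generate        # callable: prompt string -> model reply string
        self.make_prompt = make_prompt  # wraps a correction request in the chat template
        self.max_retries = max_retries  # how many correction rounds to try
        self.corrections = []           # (bad_output, error, new_output) for review/fine-tuning

    def process_and_correct(self, prompt):
        response = self.generate(prompt)
        for attempt in range(self.max_retries + 1):
            try:
                return json.loads(response)
            except json.JSONDecodeError as e:
                if attempt == self.max_retries:
                    break
                error = str(e)
                logger.warning("Invalid JSON (%s), asking the model to correct it", error)
                question = (
                    f"Correct the following JSON {response} which has {error} "
                    "to proper JSON format. Output only the corrected JSON."
                )
                new_response = self.generate(self.make_prompt(question))
                self.corrections.append((response, error, new_response))
                response = new_response
        logger.error("Giving up after %d correction attempts", self.max_retries)
        return None
```

With the pipeline from this article, the agent could be built as `LLMAgent(lambda p: process_prompt(p, model, tokenizer, device), make_prompt=create_prompt)`. The method returns the parsed dict, or `None` after `max_retries` unsuccessful corrections.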

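Now let's look at how to put the embeddings to work in an effective RAG pattern, once again using the LLM, this time to help with ranking.

Retrieval and Generation: Handling User Queries

This is the usual flow: we take the user's question and search for the most relevant summaries.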

```python
# Example usage
user_question = "Not able to manage new devices"
results = search_summary(user_question, sentence_model)
```
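`search_summary` is not shown in the article. Here is a minimal sketch, assuming the LanceDB `table` from Step 5 is passed in explicitly (so the call becomes `search_summary(user_question, sentence_model, table)`) and that we search the summary embeddings stored in `vectorS`. Because the table has two vector columns, the column has to be named in the search call.

```python
def search_summary(user_question, sentence_model, table, top_k=3, vector_column="vectorS"):
    """Sketch: return the top_k rows whose stored embedding is closest to the question.

    `table` is the LanceDB table created in Step 5. Use vector_column="vectorK"
    to search the keyword embeddings instead of the summary embeddings.
    """
    # Embed the question with the same model that was used at indexing time
    query_vector = sentence_model.encode(user_question)
    # Nearest-neighbour search on the chosen vector column; to_list() returns one dict
    # per row with the table's columns plus a _distance score
    return (
        table.search(query_vector, vector_column_name=vector_column)
        .limit(top_k)
        .to_list()
    )
```

LanceDB's default distance is L2. For unit-length vectors, which `all-MiniLM-L6-v2` produces, L2 distance and cosine distance give the same ranking, so this matches the cosine search mentioned in the workflow below.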

Preparing the Retrieved Summaries

We compile the retrieved summaries into a list, prefixing each with its page number for reference.

```python
summary_list = []
for idx, result in enumerate(results):
    summary_list.append(f"{result['page_number']}# {result['summary']}")
```

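Ranking the Summaries

We prompt the language model to rank the retrieved summaries by relevance to the user's question and select the most relevant one. Rather than relying only on k-nearest neighbours, cosine distance, or another ranking algorithm for matching contextual embeddings (vectors), we use the LLM once more, this time to rank the summaries.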

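```python
question = f"""From the given list of summaries {summary_list}, rank which summary would possibly have \
the answer to the question '{user_question}'. Return only that summary from the list."""
log.info(question)
selected_summary = process_prompt(create_prompt(question), model, tokenizer, device)

# Summaries were sent as "<page_number># <summary>", so parse the page number back out
# (falling back to the top vector-search hit if the reply contains none)
match = re.search(r"(\d+)#", selected_summary)
page_number = int(match.group(1)) if match else results[0]['page_number']
```

Extracting the Selected Summary and Generating the Final Answer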

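We fetch the original content associated with the selected summary and prompt the language model to generate a detailed answer to the user's question using this context.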
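Before the lookup, the page number has to be recovered from the LLM's free-text reply, and a small model may name a page that was never among the retrieved results. A defensive sketch (the helper `select_page_number` is hypothetical) accepts only retrieved page numbers and otherwise falls back to the top vector-search hit:

```python
import re


def select_page_number(selected_summary, results):
    """Map the LLM's chosen summary back to one of the retrieved pages.

    Summaries were sent as "<page_number># <summary>", so look for that prefix,
    but only accept page numbers that were actually retrieved. Assumes `results`
    is non-empty and ordered by vector-search rank.
    """
    retrieved = [int(r["page_number"]) for r in results]
    for match in re.finditer(r"(\d+)#", selected_summary):
        page = int(match.group(1))
        if page in retrieved:
            return page
    # Nothing usable in the reply: fall back to the best vector-search hit
    return retrieved[0]
```

For the reply shown in the workflow below (`'113# The passage discusses ...'`) and retrieved pages 112, 113 and 116, this returns 113; a reply naming page 999 would fall back to 112.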

```python
for idx, result in enumerate(results):
    if int(page_number) == result['page_number']:
        page = result['original_content']
        question = f"""Can you answer the query: '{user_question}' \
using the context below?
Context: '{page}'
"""
        log.info(question)
        prompt = create_prompt(
            question,
            "You are a helpful assistant that will go through the given query and context, "
            "think in steps, and then try to answer the query with the information in the context."
        )
        response = process_prompt(prompt, model, tokenizer, device, temperature=0.01)  # Less freedom to hallucinate
        log.info(response)
        print("Final Answer:")
        print(response)
        break
```

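Workflow Explanation

1. User query vectorization: the user's question is converted into an embedding with the same sentence-transformer model used during indexing.

2. Similarity search: the query embedding is used to search the vector database (LanceDB) for the most similar summaries, and the top three are returned.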

>> From the VectorDB cosine search and top-3 nearest-neighbour search results, prepended by the linked page numbers

07:04:00 INFO:From the given list of summary [[

'112# Cannot place newly discovered device in managed state',

'113# The passage discusses the troubleshooting steps for managing newly discovered devices on the NSF platform, specifically addressing issues with device placement, configuration, and deployment.',

'116# Troubleshooting Device Configuration Backup Issue']] rank which summary would possibly have the possible answer to the question Not able to manage new devices. Return only that summary from the list

3. Summary ranking: the retrieved summaries are passed to the language model, which ranks them by relevance to the user's question.

>> Asking LLM to Select from the Top N based on context

07:04:01 INFO:Selected Summary ''113# The passage discusses the troubleshooting steps for managing newly discovered devices on the NSF (Network Systems and Functional Requirements) platform, specifically addressing issues with device placement, configuration, and deployment.''

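4. Context retrieval: the original content associated with the most relevant summary is retrieved by parsing its page number and fetching the corresponding page from LanceDB.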

07:04:01 INFO:Page number: 113

07:04:01 INFO:Can you answer the question or query or provide more deatils query:'Not able to manage new devices' Using the context below

context:'3

Check that the server and client platforms are appropriately sized. ...

Failed SNMP communication between the server and managed device.

SNMP traps from managed devices are arriving at one server,

or no SNMP traps are ....

'

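5. Answer generation: the language model uses the retrieved context to generate a detailed answer to the user's question.

Here is an example of the output for the sample PDF I used: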

07:04:08 INFO:I'll go through the steps and provide more details to answer the query.

The query is: "Not able to manage new devices"

Here's my step-by-step analysis:

**Step 1: Check that the server and client platforms are appropriately sized**

The context mentions that the NSP Planning Guide is available, which implies that the NSP (Network Service Provider) has a planning process to ensure that the server and client platforms are sized correctly. This suggests that the NSP has a process in place to evaluate the performance and capacity of the server and client platforms to determine if they are suitable for managing new devices.

**Step 2: Check for resynchronization problems between the managed network and the NFM-P**

The context also mentions that resynchronization problems between the managed network and the NFM-P can cause issues with managing new devices. This implies that there may be a problem with the communication between the server and client platforms, which can prevent new devices from being successfully managed.

**Step 3: Check for failed SNMP communication between the server and managed device**

The context specifically mentions that failed SNMP communication between the server and managed device can cause issues with managing new devices. This suggests that there may be a problem with the communication between the server and the managed device, which can prevent new devices from being successfully managed.

**Step 4: Check for failed deployment of the configuration request**

The context also mentions that failed deployment of the configuration request can cause issues with managing new devices. This implies that there may be a problem with the deployment process, which can prevent new devices from being successfully managed.

**Step 5: Perform the following steps**

The context instructs the user to perform the following steps:

1. Choose Administration→NE Maintenance→Deployment from the XXX main menu.

2. The Deployment form opens, listing incomplete deployments, deployer, tag, state, and other information.

Based on the context, it appears that the user needs to review the deployment history to identify any issues that may be preventing the deployment of new devices.

**Answer**

Based on the analysis, the user needs to:

1. Check that the server and client platforms are appropriately sized.

2. Check for resynchronization problems between the managed network and the NFM-P.

3. Check for failed SNMP communication between the server and managed device.

4. Check for failed deployment of the configuration request.

By following these steps, the user should be able to identify and resolve the issues preventing the management of

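Conclusion

Small LLMs such as LLAMA 3.2 1B Instruct can effectively summarize large documents and extract their keywords. These summaries and keywords can be embedded and stored in a database such as LanceDB, enabling a RAG system to use LLMs for efficient retrieval throughout the workflow, not just during generation.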