Efficient Document Chunking Using LLMs: Unlocking Knowledge One Block at a Time

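This article explains how to use a large language model (LLM) to chunk a document based on the concept of an "idea".

The example uses OpenAI's gpt-4o model, but the same approach applies to any other LLM, such as those offered by Hugging Face, Mistral, and others.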

Considerations on document chunking

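In cognitive psychology, a chunk represents a "unit of information".

The same concept can be applied to computing: using an LLM, we can analyze a document and produce a set of chunks, usually of variable length, each of which expresses one complete "idea".

In other words, the system divides the document into "pieces of text", each expressing a single unified concept, without mixing different ideas in the same chunk.

The goal is a knowledge base made of independent elements that can be related to one another, with no chunk overlapping several concepts.

Of course, if an idea is repeated in different parts of the document, or expressed in different ways, the analysis and splitting may yield several chunks that express the same idea.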

Getting started

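The first step is to identify the document that will become part of our knowledge base.

This is typically a PDF or Word document, which can be read page by page or paragraph by paragraph and converted to text.

For simplicity, let's assume we already have the following list of text paragraphs, extracted from Around the World in Eighty Days: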

documents = [
    """On October 2, 1872, Phileas Fogg, an English gentleman, left London for an extraordinary journey.
He had wagered that he could circumnavigate the globe in just eighty days.
Fogg was a man of strict habits and a very methodical life; everything was planned down to the smallest detail, and nothing was left to chance.
He departed London on a train to Dover, then crossed the Channel by ship. His journey took him through many countries,
including France, India, Japan, and America. At each stop, he encountered various people and faced countless adventures, but his determination never wavered.""",
    """However, time was his enemy, and any delay risked losing the bet. With the help of his faithful servant Passepartout, Fogg had to face
unexpected obstacles and dangerous situations.""",
    """Yet, each time, his cunning and indomitable spirit guided him to victory, while the world watched in disbelief.""",
    """With one final effort, Fogg and Passepartout reached London just in time to prove that they had completed their journey in less than eighty days.
This extraordinary adventurer not only won the bet but also discovered that the true treasure was the friendship and experiences he had accumulated along the way.""",
]

We also assume that the LLM in use accepts a limited number of input and output tokens, which we will call input_token_nr and output_token_nr respectively.

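In this example we set input_token_nr = 300 and output_token_nr = 250.

This means that, for the split to succeed, the prompt and the document to analyze must together total fewer than 300 tokens, while the result produced by the LLM must not exceed 250 tokens.

Using the tokenizer provided by OpenAI, we see that our knowledge base document consists of 254 tokens.

The whole document therefore cannot be analyzed in one pass: the input can be handled in a single call, but the output cannot, because the chunks reproduce the text and 254 tokens exceed the 250-token output limit.

As a preparatory step, we therefore need to split the original document into blocks of no more than 250 tokens.

These blocks are then passed to the LLM, which splits them further into smaller chunks.

To be on the safe side, we set the maximum block size to 200 tokens.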
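The budget reasoning above can be written down as a small check. This is only a sketch: the helper name `fits_in_one_call` is hypothetical, the prompt size of 40 tokens is an illustrative figure that does not come from the article, and the output is assumed to be about as long as the document because the chunks repeat its text.

```python
def fits_in_one_call(doc_tokens, prompt_tokens, input_token_nr=300, output_token_nr=250):
    """Return (input_ok, output_ok) for a single LLM call.

    Assumption: the chunked output repeats the document text, so it needs
    roughly as many tokens as the document itself.
    """
    # Prompt and document together must stay below the input limit.
    input_ok = prompt_tokens + doc_tokens < input_token_nr
    # The result must not exceed the output limit.
    output_ok = doc_tokens <= output_token_nr
    return input_ok, output_ok


# 254 is the document size quoted in the article; 40 is an illustrative prompt size.
print(fits_in_one_call(doc_tokens=254, prompt_tokens=40))  # (True, False)
```

With these numbers the input fits but the output does not, which is why the document has to be split into blocks first.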

Generating blocks

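Blocks are generated as follows:

- Take the first paragraph of the knowledge base (KB) and determine how many tokens it requires; if that is fewer than 200, it becomes the first element of the block.

- Measure the next paragraph; if its size combined with the current block is fewer than 200 tokens, add it to the block and continue with the remaining paragraphs.

- A block reaches its maximum size when adding another paragraph would push it past the limit.

- Repeat from the first step until all paragraphs have been processed.

For simplicity, the block generation process assumes that every paragraph is smaller than the maximum allowed size (otherwise the paragraph itself would have to be split into smaller elements).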

To perform this task, we use the llm_chunkizer.split_document_into_blocks function from the LLMChunkizerLib/chunkizer.py library.
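The packing steps described above can be sketched as follows. This is an independent illustration, not the source code of split_document_into_blocks: the function name `split_into_blocks` and its parameters are hypothetical, and the default token counter simply counts whitespace-separated words as a stand-in for a real tokenizer.

```python
def split_into_blocks(paragraphs, max_tokens=200, count_tokens=None):
    """Greedily pack consecutive paragraphs into blocks of at most max_tokens."""
    if count_tokens is None:
        # Stand-in counter (assumption): one token per whitespace-separated word.
        count_tokens = lambda text: len(text.split())

    blocks, current, current_size = [], [], 0
    for paragraph in paragraphs:
        size = count_tokens(paragraph)
        if size > max_tokens:
            # The article assumes this never happens; fail loudly if it does.
            raise ValueError("paragraph exceeds max_tokens; split it first")
        # Close the current block when adding this paragraph would overflow it.
        if current and current_size + size > max_tokens:
            blocks.append("\n".join(current))
            current, current_size = [], 0
        current.append(paragraph)
        current_size += size
    if current:
        blocks.append("\n".join(current))
    return blocks


paragraphs = ["one two three", "four five", "six seven eight nine"]
print(split_into_blocks(paragraphs, max_tokens=5))
# ['one two three\nfour five', 'six seven eight nine']
```

Passing a real tokenizer through `count_tokens` would make the block sizes match what the model actually sees; the word count here only keeps the sketch self-contained.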

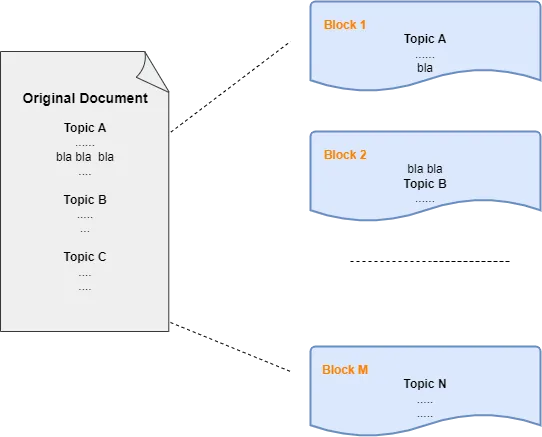

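Visually, the result looks like Figure 1.

Figure 1

When generating blocks, the only rule to follow is not to exceed the maximum allowed size.

No analysis of, or assumption about, the meaning of the text is made.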

Generating chunks

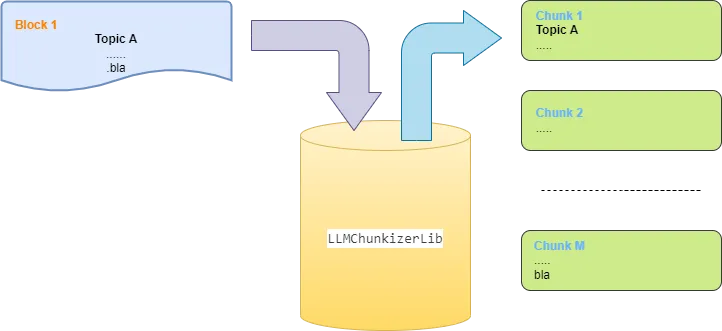

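The next step is to split each block into chunks, each of which expresses a single idea.

For this task we use the llm_chunkizer.chunk_text_with_llm function from the same LLMChunkizerLib/chunkizer.py library.

The result is shown in Figure 2.

Figure 2

The process runs linearly, leaving the LLM free to decide how to form the chunks.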
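To make the step concrete, here is one way such a request could be phrased. It is a sketch under assumptions and does not reproduce chunk_text_with_llm: the prompt wording, the `<<<CHUNK>>>` delimiter, and the names `build_messages`, `parse_chunks`, and `chunk_block` are all hypothetical. The `client` argument is expected to be an `openai.OpenAI` instance, and the only API call used is `client.chat.completions.create`.

```python
CHUNK_DELIMITER = "<<<CHUNK>>>"  # hypothetical separator, not taken from the library


def build_messages(block_text):
    """Build a chat request asking for one chunk per idea (illustrative prompt)."""
    system = (
        "Split the user's text into chunks. Each chunk must express exactly one "
        "complete idea. Copy the text verbatim and separate chunks with "
        f"{CHUNK_DELIMITER}."
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": block_text},
    ]


def parse_chunks(raw_output):
    """Split the model's answer on the delimiter and drop empty pieces."""
    return [part.strip() for part in raw_output.split(CHUNK_DELIMITER) if part.strip()]


def chunk_block(client, block_text, model="gpt-4o", output_token_nr=250):
    """Send one block to the model and return the list of chunks."""
    response = client.chat.completions.create(
        model=model,
        messages=build_messages(block_text),
        max_tokens=output_token_nr,  # cap the answer at the output budget
    )
    return parse_chunks(response.choices[0].message.content)
```

Keeping the prompt construction and the parsing separate from the network call makes both easy to check without contacting the API.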

Handling the overlap between two blocks

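As mentioned earlier, block splitting considers only the length limit; it does not check whether adjacent paragraphs expressing the same idea end up in different blocks.

This is evident in Figure 1, where the concept "bla bla bla" (representing a single unified idea) is split across two adjacent blocks.

As Figure 2 shows, the chunkizer processes only one block at a time, so the LLM cannot relate this information to the next block (it does not even know that a next block exists) and therefore places it in the last chunk it produces.

This problem occurs often during ingestion, particularly when importing long documents whose text cannot fit into a single LLM prompt.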

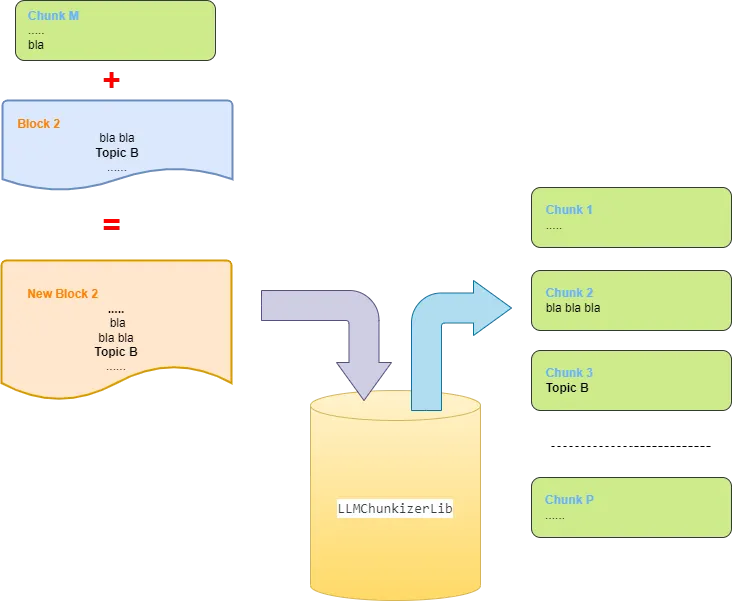

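To address this, llm_chunkizer.chunk_text_with_llm works as shown in Figure 3:

- The last chunk (or the last N chunks) produced from the previous block is removed from the list of "valid" chunks, and its content is added to the next block to be split.

- The new Block2 is then passed to the chunking function again.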

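Figure 3

As Figure 3 shows, the content of chunk M is now split more effectively into two chunks, keeping the "bla bla bla" concept intact.

The idea behind this solution is that the last N chunks of the previous block represent independent ideas, not just unrelated paragraphs.

Adding them to the new block therefore lets the LLM regenerate similar chunks while also creating a new chunk that reunites the paragraphs that were previously separated without regard to their meaning.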
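The carry-over logic can be sketched as a loop around any chunking function. This is an illustration under assumptions rather than the library's code: `chunk_blocks_with_carry_over`, `split_fn`, and `carry_n` are hypothetical names, and `split_fn` stands in for the LLM call. In the toy example a "|" marks the boundary between ideas so that the behaviour can be followed by hand.

```python
def chunk_blocks_with_carry_over(blocks, split_fn, carry_n=1):
    """Chunk blocks in order, re-submitting the last carry_n chunks of each
    block together with the next block.

    split_fn(text) -> list[str] stands in for the LLM call (assumption).
    """
    valid_chunks = []
    carried = []
    for index, block in enumerate(blocks):
        # Prepend the chunks carried over from the previous block.
        text = " ".join(carried + [block])
        chunks = split_fn(text)
        if index == len(blocks) - 1:
            # Nothing follows the last block, so every chunk is final.
            valid_chunks.extend(chunks)
        else:
            # Hold back the last carry_n chunks for the next round.
            keep = max(len(chunks) - carry_n, 0)
            valid_chunks.extend(chunks[:keep])
            carried = chunks[keep:]
    return valid_chunks


# Toy splitter: "|" separates ideas. The idea "b1 b2" straddles the two blocks.
toy_split = lambda text: [part.strip() for part in text.split("|")]
blocks = ["a1 a2 | b1", "b2 | c1 c2"]
print(chunk_blocks_with_carry_over(blocks, toy_split))
# ['a1 a2', 'b1 b2', 'c1 c2']
```

Note that the re-submitted text is longer than the block alone, so the block size needs some headroom below the model's limits for the carried chunks.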

Chunking results

In the end, the system generated the following 6 chunks:

0: On October 2, 1872, Phileas Fogg, an English gentleman, left London for an extraordinary journey. He had wagered that he could circumnavigate the globe in just eighty days. Fogg was a man of strict habits and a very methodical life; everything was planned down to the smallest detail, and nothing was left to chance.

1: He departed London on a train to Dover, then crossed the Channel by ship. His journey took him through many countries, including France, India, Japan, and America. At each stop, he encountered various people and faced countless adventures, but his determination never wavered.

2: However, time was his enemy, and any delay risked losing the bet. With the help of his faithful servant Passepartout, Fogg had to face unexpected obstacles and dangerous situations.

3: Yet, each time, his cunning and indomitable spirit guided him to victory, while the world watched in disbelief.

4: With one final effort, Fogg and Passepartout reached London just in time to prove that they had completed their journey in less than eighty days.

5: This extraordinary adventurer not only won the bet but also discovered that the true treasure was the friendship and experiences he had accumulated along the way.

Considerations on block size

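Let's see what happens when the original document is split into larger blocks, with a maximum size of 1000 tokens.

With the larger block size, the system generated 4 chunks instead of 6.

This behavior is expected, because the LLM can analyze a larger portion of the content at once and can use more text to represent a single concept.

In this case the chunks are: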

0: On October 2, 1872, Phileas Fogg, an English gentleman, left London for an extraordinary journey. He had wagered that he could circumnavigate the globe in just eighty days. Fogg was a man of strict habits and a very methodical life; everything was planned down to the smallest detail, and nothing was left to chance.

1: He departed London on a train to Dover, then crossed the Channel by ship. His journey took him through many countries, including France, India, Japan, and America. At each stop, he encountered various people and faced countless adventures, but his determination never wavered.

2: However, time was his enemy, and any delay risked losing the bet. With the help of his faithful servant Passepartout, Fogg had to face unexpected obstacles and dangerous situations. Yet, each time, his cunning and indomitable spirit guided him to victory, while the world watched in disbelief.

3: With one final effort, Fogg and Passepartout reached London just in time to prove that they had completed their journey in less than eighty days. This extraordinary adventurer not only won the bet but also discovered that the true treasure was the friendship and experiences he had accumulated along the way.

Conclusions

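It is important to run the chunking several times, varying the block size passed to the chunkizer on each run.

After each attempt, the results should be reviewed to determine which setting best achieves the desired outcome.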