A Deep Dive into Embeddings: From Fundamentals to Advanced Concepts

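Embeddings have become a fundamental building block of modern machine learning, especially in fields such as natural language processing (NLP), computer vision, and recommender systems. They provide a way to represent complex data types such as text, images, and graphs in a numerical format that machines can process. This article aims to demystify embeddings, taking you from the basic concepts to advanced applications. We will look at the nuances of embeddings, compare them with tokenization, explore how they are created, and examine the role they play across different domains.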

Introduction to Embeddings

What Are Embeddings?

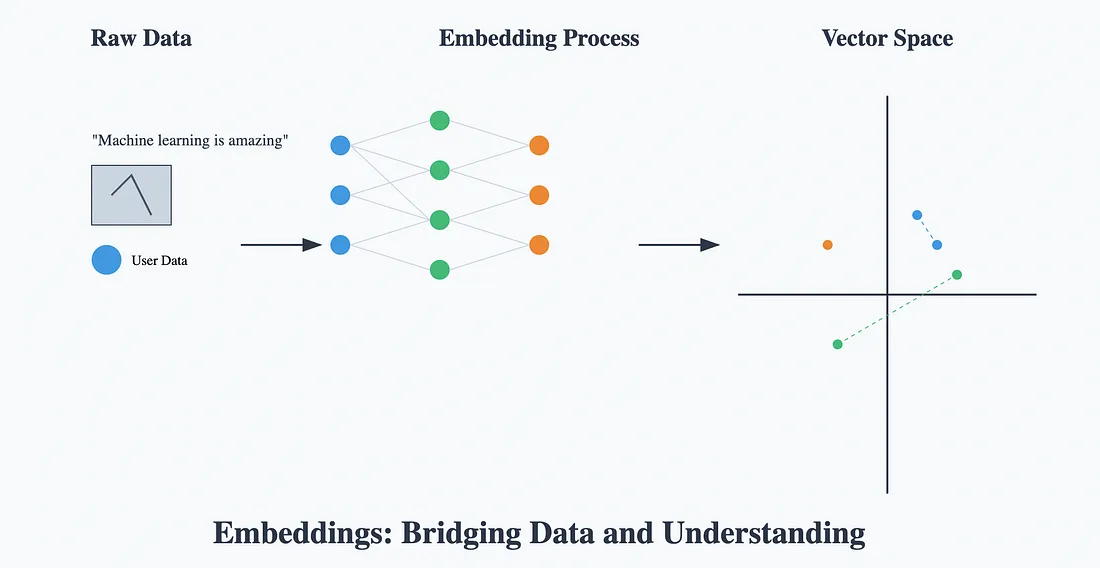

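At their core, embeddings are numerical representations of data. They transform complex, high-dimensional data into lower-dimensional vectors. For example, a word that would need a 50,000-dimensional one-hot vector in a 50,000-word vocabulary can instead be represented by a dense vector of a few hundred dimensions. This transformation lets machines process and analyze the data efficiently.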

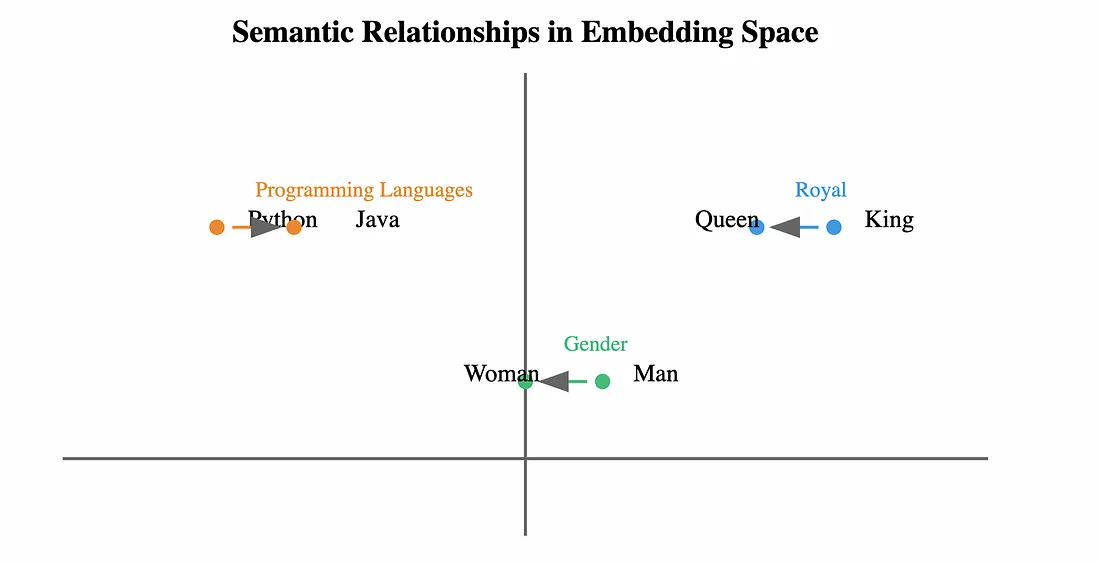

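For example, in language, words can be represented as vectors in a continuous vector space, where semantically similar words lie close to each other.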
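"Close to each other" is usually measured with cosine similarity, the cosine of the angle between two vectors. Below is a minimal sketch of that measure. The 3-dimensional vectors are hand-picked toy values chosen for illustration. They are not the output of any trained model.

```python
import math

# Hypothetical toy vectors; real embeddings have hundreds of learned dimensions
vectors = {
    "king":  [0.80, 0.65, 0.10],
    "queen": [0.75, 0.70, 0.12],
    "car":   [0.05, 0.10, 0.90],
}

def cosine_similarity(a, b):
    """Cosine of the angle between a and b: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

print(cosine_similarity(vectors["king"], vectors["queen"]))  # close to 1
print(cosine_similarity(vectors["king"], vectors["car"]))    # much smaller
```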

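Why Do Embeddings Matter?

- Semantic understanding: embeddings capture semantic relationships between data points. For example, "king" and "queen" are related in a way that "king" and "car" are not.

- Computational efficiency: by reducing the dimensionality of the data, embeddings make computation faster and more efficient.

- Generalization: by focusing on key features and filtering out noise, embeddings help models generalize better.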

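Understanding Lossy Compression in Embeddings

Embeddings are a form of lossy compression. They capture the key features of the data, but some information is inevitably lost.

Information Loss in Text Embeddings

When text is converted into embeddings:

- Nuance: subtle connotations or cultural references may be lost.

- Contextual simplification: complex relationships between words are reduced to simpler geometric relationships.

- Example: the word "cool" can refer to temperature, style, or approval. A static embedding compresses all of these meanings into a single fixed vector and loses some of the nuance.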

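Information Loss in Non-Text Embeddings

- Images: details such as texture and color depth may be simplified.

- Audio: certain frequencies or temporal patterns may be lost.

- Video: motion details and scene transitions may be compressed.

This lossiness is intentional. By focusing on key features, embeddings reduce computational complexity and help models generalize better.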

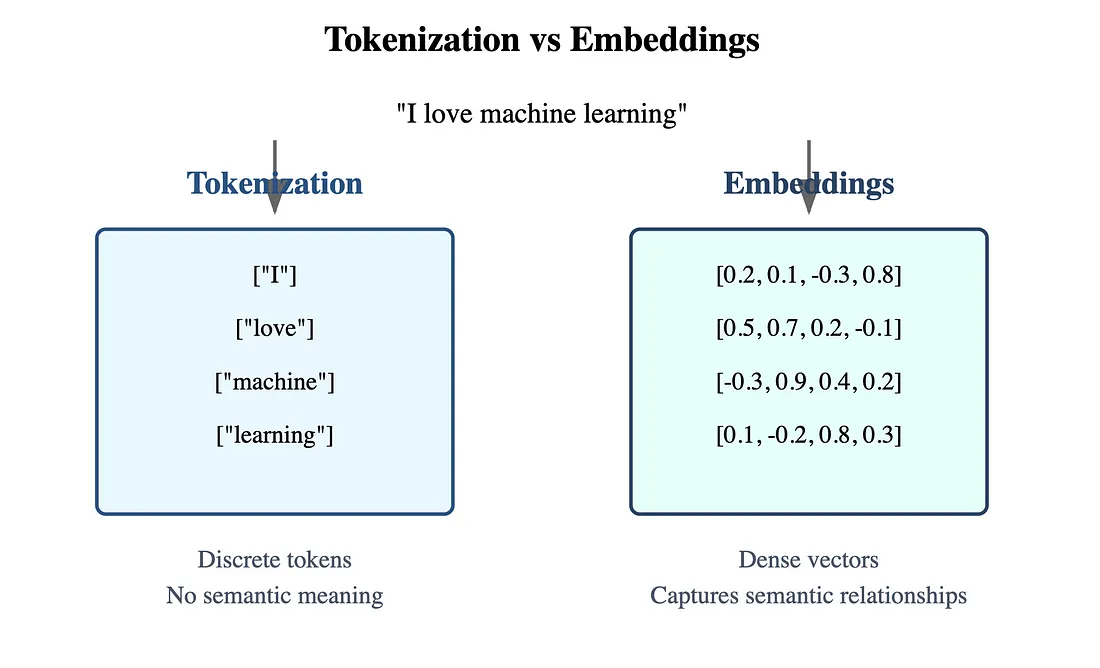

Tokenization vs. Embeddings

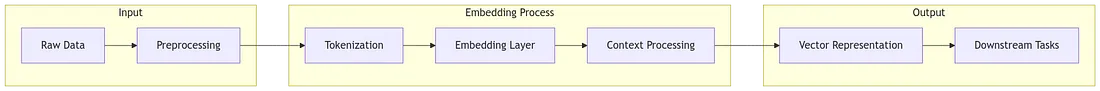

Tokenization Basics

Tokenization is the process of breaking text into smaller units called tokens, such as words, subwords, or characters.

# Example of simple tokenization
text = "I love programming in Python"
tokens = text.split()
print(tokens)
# Output: ['I', 'love', 'programming', 'in', 'Python']
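The split above works at the word level. Subword tokenizers such as WordPiece (used by BERT) break rarer words into known pieces. Below is a simplified sketch of greedy longest-match-first splitting in that spirit. The tiny vocabulary is hypothetical. Real tokenizers also normalize the text and learn their vocabulary from data.

```python
def wordpiece_tokenize(word, vocab, unk="[UNK]"):
    """Greedy longest-match-first subword split (simplified WordPiece-style)."""
    tokens = []
    start = 0
    while start < len(word):
        end = len(word)
        piece = None
        # Try the longest remaining substring first, then shrink it
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # continuation pieces are marked with ##
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return [unk]  # no known piece: the whole word becomes unknown
        tokens.append(piece)
        start = end
    return tokens

# Hypothetical toy vocabulary
vocab = {"program", "##ming", "##mer", "python", "i", "love", "in"}
text = "I love programming in Python"
print([t for w in text.lower().split() for t in wordpiece_tokenize(w, vocab)])
# ['i', 'love', 'program', '##ming', 'in', 'python']
```

Sharing pieces such as "program" between "programming" and "programmer" is what lets subword vocabularies stay small while still covering rare words.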

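Limitations of Tokenization

- Exact matching only: comparisons based on tokens rely on exact matches. Synonyms and related words (e.g., "programming" and "coding") are not recognized as similar.

- Sparse representations: token-based representations such as one-hot or bag-of-words vectors are sparse and high-dimensional (one dimension per vocabulary entry), which makes computation inefficient.

- No semantic understanding: tokens carry no information about context or meaning.

How Embeddings Address the Limitations of Tokenization

Embeddings map tokens to dense, low-dimensional vectors that capture semantic meaning.

- Semantic similarity: words such as "cat" and "kitten" have similar embeddings.

- Efficient computation: dense vectors allow similarity to be computed efficiently with vector operations such as the dot product.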
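The sketch below makes the contrast concrete. It compares sparse one-hot vectors, where every pair of distinct words is equally dissimilar, with a dense embedding table. Looking up row i of the table gives the same result as multiplying a one-hot vector by the table. Embedding layers such as PyTorch's `nn.Embedding` perform that lookup efficiently. The vocabulary and the 2-dimensional table values are made up for illustration.

```python
import math

vocab = ["cat", "kitten", "car"]

def one_hot(word):
    """Sparse representation: length equals vocabulary size, a single 1."""
    return [1.0 if w == word else 0.0 for w in vocab]

# Hypothetical dense embedding table: one 2-d row per vocabulary entry
embedding_table = [
    [0.90, 0.80],   # cat
    [0.85, 0.75],   # kitten
    [-0.70, 0.20],  # car
]

def embed(word):
    """Dense lookup: select the row at the word's index."""
    return embedding_table[vocab.index(word)]

def matmul_one_hot(vec):
    """One-hot vector times the table: yields the same row as the lookup."""
    dim = len(embedding_table[0])
    return [sum(vec[i] * embedding_table[i][d] for i in range(len(vocab))) for d in range(dim)]

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

print(cosine(one_hot("cat"), one_hot("kitten")))  # 0.0: no notion of similarity
print(cosine(embed("cat"), embed("kitten")))      # close to 1
```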

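Detailed Examples and Code

Token-Based Similarity

Let's compare two sentences using a token-based measure, Jaccard similarity. It is the number of tokens the two sentences share divided by the number of distinct tokens across both: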

# Tokenization
sentence1 = "I love programming in Python"
sentence2 = "I enjoy coding with Python"
tokens1 = set(sentence1.lower().split())
tokens2 = set(sentence2.lower().split())

# Jaccard similarity: |intersection| / |union|
intersection = tokens1.intersection(tokens2)  # {'i', 'python'}
union = tokens1.union(tokens2)                # 8 distinct tokens
similarity = len(intersection) / len(union)
print(f"Token-based similarity: {similarity}")
# Output: Token-based similarity: 0.25

Embedding-Based Similarity

Using pre-trained word embeddings to compute semantic similarity:

# Install gensim if not already installed
# !pip install gensim
import numpy as np
import gensim.downloader as api
from gensim.models import KeyedVectors

# Option 1: pre-trained Word2Vec embeddings (Google News vectors)
# Note: The model is large (~1.5GB). Ensure you have enough memory.
# model = KeyedVectors.load_word2vec_format('GoogleNews-vectors-negative300.bin.gz', binary=True)

# Option 2 (used here): a much smaller pre-trained GloVe model.
# A Word2Vec model trained on gensim's tiny `common_texts` corpus would not contain
# any of the words below, so it cannot be used for this comparison.
model = api.load('glove-wiki-gigaword-50')  # returns a KeyedVectors object

def sentence_embedding(sentence):
    # Average the vectors of the in-vocabulary words
    tokens = sentence.lower().split()
    embeddings = [model[word] for word in tokens if word in model]
    if not embeddings:
        raise ValueError(f"No in-vocabulary words in: {sentence!r}")
    return np.mean(embeddings, axis=0)

embedding1 = sentence_embedding("I love programming in Python")
embedding2 = sentence_embedding("I enjoy coding with Python")

# Cosine similarity between the two sentence vectors
cosine_similarity = np.dot(embedding1, embedding2) / (np.linalg.norm(embedding1) * np.linalg.norm(embedding2))
print(f"Embedding-based similarity: {cosine_similarity:.4f}")
# The exact value depends on the model; expect it to be well above the token-based score

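In this example, the embedding-based similarity should be clearly higher than the token-based similarity. Word pairs such as "love"/"enjoy" and "programming"/"coding" lie close together in the embedding space even though they share no tokens. The higher score therefore reflects the semantic similarity between the sentences.

Creating Embeddings

Word Embeddings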

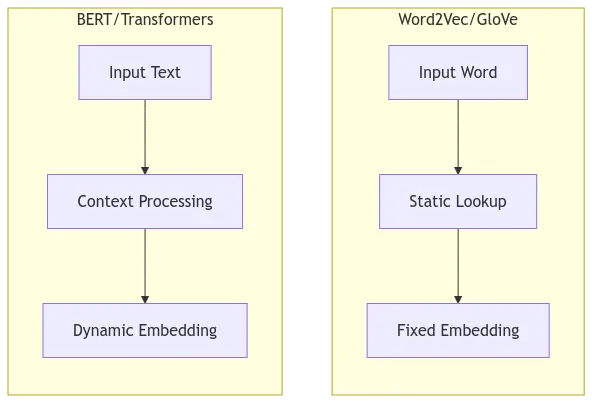

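Static Embeddings: Word2Vec and GloVe

Word2Vec and GloVe produce static word embeddings: each word is mapped to a single vector, regardless of its context.

- Word2Vec: predicts the surrounding context words from a word (Skip-gram), or predicts a word from its surrounding context (CBOW).

- GloVe: builds embeddings from global word co-occurrence statistics.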
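Both Word2Vec variants are trained on examples taken from a sliding context window. The sketch below only builds those training examples from a tokenized sentence. It does not train any vectors. Here `window` is the number of words considered on each side of the center word, and the sentence is an arbitrary example.

```python
def skipgram_pairs(tokens, window=2):
    """(center, context) pairs: Skip-gram predicts each context word from the center."""
    pairs = []
    for i, center in enumerate(tokens):
        for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs

def cbow_examples(tokens, window=2):
    """(context words, center) examples: CBOW predicts the center from its context."""
    examples = []
    for i, center in enumerate(tokens):
        context = [tokens[j] for j in range(max(0, i - window), min(len(tokens), i + window + 1)) if j != i]
        examples.append((context, center))
    return examples

tokens = "the cat sat on the mat".split()
print(skipgram_pairs(tokens, window=1)[:4])
# [('the', 'cat'), ('cat', 'the'), ('cat', 'sat'), ('sat', 'cat')]
print(cbow_examples(tokens, window=1)[1])
# (['the', 'sat'], 'cat')
```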

Example: Training Word2Vec

from gensim.models import Word2Vec
from gensim.test.utils import common_texts

# Training Word2Vec model
model = Word2Vec(common_texts, vector_size=50, window=5, min_count=1, workers=4)

# Accessing word vectors
vector = model.wv['computer']
print(f"Embedding for 'computer': {vector[:5]}")  # Display first 5 dimensions

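Limitations of Static Embeddings

- Context-blind: static embeddings cannot handle polysemy (a single word having multiple meanings).

- Example: the word "bank" has the same embedding in "river bank" and "bank account".

Contextual Embeddings: BERT

BERT (Bidirectional Encoder Representations from Transformers) produces contextual embeddings, in which the same word can have different embeddings depending on its context.

Example: Contextual Embeddings with BERT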

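from transformers import BertTokenizer, BertModel
import torch

# Load pre-trained model tokenizer and model
tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')
model = BertModel.from_pretrained('bert-base-uncased')

# Sentences with the word 'bank' in different contexts
sentences = ["I went to the bank to deposit money", "The river bank was flooded after the storm"]
bank_embeddings = []
for sentence in sentences:
    inputs = tokenizer(sentence, return_tensors='pt')
    with torch.no_grad():  # inference only, no gradients needed
        outputs = model(**inputs)
    # Get embedding for 'bank' (its final hidden state)
    tokens = tokenizer.convert_ids_to_tokens(inputs['input_ids'][0])
    bank_index = tokens.index('bank')
    bank_embedding = outputs.last_hidden_state[0, bank_index, :]
    bank_embeddings.append(bank_embedding)
    print(f"Sentence: {sentence}")
    print(f"Embedding for 'bank': {bank_embedding[:5]}\n")  # Display first 5 dimensions

# A cosine similarity below 1.0 shows the two 'bank' vectors differ
similarity = torch.nn.functional.cosine_similarity(bank_embeddings[0], bank_embeddings[1], dim=0)
print(f"Cosine similarity between the two 'bank' embeddings: {similarity.item():.4f}")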

You will find that the embeddings of "bank" differ between the two sentences, capturing its meaning in each context.
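BERT produces these context-dependent vectors with stacked self-attention layers. The sketch below is a drastically simplified stand-in: single-head scaled dot-product self-attention with no learned query, key, or value projections (Q = K = V = the input vectors), applied to hypothetical 2-dimensional static vectors. It is not BERT. It only shows how mixing in the neighboring words gives "bank" a different vector in each sentence.

```python
import math

# Hypothetical 2-d static vectors (toy values, not from any trained model)
STATIC = {
    "river": [1.0, 0.0], "water": [0.9, 0.1],
    "money": [0.0, 1.0], "deposit": [0.1, 0.9],
    "bank": [0.5, 0.5],
}

def self_attention(vectors):
    """Single-head scaled dot-product self-attention with Q = K = V = input."""
    d = len(vectors[0])
    outputs = []
    for q in vectors:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in vectors]
        m = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        weights = [w / total for w in weights]
        # Each output is an attention-weighted mix of all input vectors
        outputs.append([sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(d)])
    return outputs

def contextual_vector(sentence, word):
    tokens = sentence.split()
    out = self_attention([STATIC[t] for t in tokens])
    return out[tokens.index(word)]

print(contextual_vector("river bank water", "bank"))    # approximately [0.8, 0.2]
print(contextual_vector("deposit money bank", "bank"))  # approximately [0.2, 0.8]
```

The static vector for "bank" is identical in both inputs. Only the attention step, which depends on the surrounding words, pulls it toward the "river" or the "money" region.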

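Sentence and Document Embeddings

Averaging Word Embeddings

A simple way to create a sentence embedding is to average the embeddings of all the words in the sentence.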

def average_embedding(sentence, model):
    tokens = sentence.lower().split()
    embeddings = [model.wv[word] for word in tokens if word in model.wv]
    if embeddings:
        return sum(embeddings) / len(embeddings)
    else:
        return None

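Limitations:

- Ignores word order: syntactic structure is not taken into account.

- Loses contextual nuance: the full meaning of the sentence is not captured.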
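The word-order problem is easy to demonstrate. Averaging does not depend on order, so two sentences with opposite meanings but the same words get identical embeddings. The 2-dimensional word vectors here are hypothetical. They were picked as exact binary fractions so that comparing the floats with `==` is safe.

```python
def average_vectors(sentence, word_vectors):
    """Mean of the word vectors; the result does not depend on word order."""
    vecs = [word_vectors[w] for w in sentence.lower().split() if w in word_vectors]
    return [sum(component) / len(vecs) for component in zip(*vecs)]

# Hypothetical toy word vectors
word_vectors = {"dog": [0.75, 0.125], "bites": [0.25, 0.5], "man": [0.125, 0.75]}

a = average_vectors("Dog bites man", word_vectors)
b = average_vectors("Man bites dog", word_vectors)
print(a == b)  # True: the two sentences are indistinguishable
```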

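Transformer-Based Models

Sentence Embeddings with BERT

BERT can produce a sentence embedding from the final hidden state of the special [CLS] token, which is prepended to every input.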

from transformers import BertTokenizer, BertModel
import torch

# Load model and tokenizer
tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')
model = BertModel.from_pretrained('bert-base-uncased')

def get_sentence_embedding(sentence):
    inputs = tokenizer(sentence, return_tensors='pt', max_length=512, truncation=True)
    with torch.no_grad():  # inference only
        outputs = model(**inputs)
    # Use the embedding of the [CLS] token (always at position 0)
    cls_embedding = outputs.last_hidden_state[0, 0, :]
    return cls_embedding

embedding = get_sentence_embedding("Machine learning is fascinating.")
print(f"Sentence embedding shape: {embedding.shape}")
# Output: Sentence embedding shape: torch.Size([768])
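The raw [CLS] vector of a BERT model that was not fine-tuned for similarity is often a weak sentence representation. A common alternative, used for example by many sentence-transformers models, is mean pooling: averaging the token embeddings. The sketch below shows the pooling arithmetic in plain Python on toy numbers. Padding positions (mask 0) are excluded so they do not dilute the average. With real BERT outputs, the same logic would apply to `outputs.last_hidden_state` and `inputs['attention_mask']`.

```python
def mean_pool(token_embeddings, attention_mask):
    """Average token vectors, skipping padding positions where the mask is 0."""
    dim = len(token_embeddings[0])
    total = [0.0] * dim
    count = 0
    for vec, keep in zip(token_embeddings, attention_mask):
        if keep:
            total = [t + v for t, v in zip(total, vec)]
            count += 1
    if count == 0:
        raise ValueError("attention_mask has no non-padding tokens")
    return [t / count for t in total]

# Toy "last hidden state" for 4 positions; the last one is padding
token_embeddings = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [100.0, 100.0]]
attention_mask = [1, 1, 1, 0]
print(mean_pool(token_embeddings, attention_mask))  # [3.0, 4.0]
```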

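Handling Long Sequences

Limitations of Models Like BERT

- Token limit: standard BERT models accept at most 512 tokens per input, including special tokens such as [CLS] and [SEP].

Techniques for Handling Long Text

- Truncation: cut the text off at the maximum allowed length.

- Sliding window: process the text in overlapping chunks.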

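import torch

def get_long_text_embedding(text, tokenizer, model, max_length=512, stride=256):
    # Each chunk must leave room for the [CLS] and [SEP] special tokens
    chunk_size = max_length - 2
    tokens = tokenizer.encode(text, add_special_tokens=False)
    embeddings = []
    for i in range(0, len(tokens), stride):
        chunk = tokens[i:i + chunk_size]
        inputs = torch.tensor([tokenizer.build_inputs_with_special_tokens(chunk)])
        with torch.no_grad():
            outputs = model(inputs)
        # [CLS] embedding of this chunk
        embeddings.append(outputs.last_hidden_state[0, 0, :])
        if i + chunk_size >= len(tokens):
            break  # this chunk already reaches the end of the text
    if not embeddings:
        raise ValueError("Text produced no tokens")
    # Average embeddings of all chunks
    return torch.stack(embeddings).mean(dim=0)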

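- Long-sequence models: use models designed for longer inputs, such as Longformer or BigBird. These replace full self-attention with sparse attention patterns.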
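The helper below shows how the chunks in a sliding window overlap. It only computes the (start, end) token ranges the window visits. Each chunk holds at most `chunk_size` tokens (510 for BERT, which leaves room for [CLS] and [SEP]), and the window advances by `stride` tokens. Both defaults are illustrative choices.

```python
def window_spans(num_tokens, chunk_size=510, stride=256):
    """(start, end) token ranges covering num_tokens with overlapping windows."""
    spans = []
    for start in range(0, num_tokens, stride):
        end = min(start + chunk_size, num_tokens)
        spans.append((start, end))
        if end == num_tokens:
            break  # the last window already reaches the end of the text
    return spans

print(window_spans(1000))  # [(0, 510), (256, 766), (512, 1000)]
```

Consecutive windows share `chunk_size - stride` tokens (254 here). Every token except those at the edges is therefore seen with some context on both sides, at the cost of roughly twice as many forward passes as non-overlapping chunks.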

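Task-Specific Embeddings

The Need for Task-Specific Embeddings

Embeddings can be fine-tuned to emphasize the features that are relevant to a particular task, improving performance on that task.

- Sentiment analysis: embeddings capture emotional tone.

- Topic classification: embeddings focus on subject matter.

- Code understanding: specialized embeddings are created for programming languages.

Creating Task-Specific Embeddings

Fine-tune a pre-trained model on a task-specific dataset.

Example: Fine-Tuning BERT for Sentiment Analysis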

from transformers import (BertTokenizer, BertForSequenceClassification,
                          DataCollatorWithPadding, Trainer, TrainingArguments)
from datasets import load_dataset

# Load the IMDb movie-review dataset (binary sentiment labels)
dataset = load_dataset('imdb')
# Subsets for quick training
train_subset = dataset['train'].shuffle(seed=42).select(range(1000))
eval_subset = dataset['test'].shuffle(seed=42).select(range(1000))

# Preprocess data
tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')

def tokenize_function(examples):
    return tokenizer(examples['text'], truncation=True)

train_subset = train_subset.map(tokenize_function, batched=True)
eval_subset = eval_subset.map(tokenize_function, batched=True)

# Load model with a 2-class classification head
model = BertForSequenceClassification.from_pretrained('bert-base-uncased', num_labels=2)

# Training arguments
training_args = TrainingArguments(
    output_dir='./results',
    eval_strategy='epoch',  # named `evaluation_strategy` in older transformers versions
    num_train_epochs=1,
    per_device_train_batch_size=8,
    per_device_eval_batch_size=8,
)

# Trainer; the collator pads each batch to its longest sequence
trainer = Trainer(
    model=model,
    args=training_args,
    train_dataset=train_subset,
    eval_dataset=eval_subset,
    data_collator=DataCollatorWithPadding(tokenizer=tokenizer),
)

# Fine-tune model
trainer.train()

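After fine-tuning, the model's embeddings are specialized for sentiment analysis.

Applications in Large Language Models (LLMs)

Task-specific embeddings can enhance large language models by providing specialized understanding.

Example: Using Task-Specific Embeddings in an LLM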

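import torch
from transformers import BertTokenizer, BertForSequenceClassification

class MultiTaskLLM:
    def __init__(self):
        # 'fine-tuned-sentiment-model' and 'fine-tuned-topic-model' are placeholders for the
        # paths of your own fine-tuned checkpoints (assumed to be based on bert-base-uncased)
        self.tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')
        self.sentiment_model = BertForSequenceClassification.from_pretrained('fine-tuned-sentiment-model')
        self.topic_model = BertForSequenceClassification.from_pretrained('fine-tuned-topic-model')
        # ... Other task-specific models

    def process_input(self, text):
        # Detect the task, then route the input to the matching model
        task = self.detect_task(text)
        if task == 'sentiment':
            model = self.sentiment_model
        elif task == 'topic':
            model = self.topic_model
        # ... Handle other tasks
        else:
            raise ValueError(f"Unsupported task: {task}")
        # The models expect token IDs, not raw strings
        inputs = self.tokenizer(text, return_tensors='pt', truncation=True)
        with torch.no_grad():
            return model(**inputs).logits

    def detect_task(self, text):
        # Simple heuristic or another model to detect task
        raise NotImplementedError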
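The task detector is left unimplemented in the class. One possible placeholder is a keyword heuristic, adapted here as a standalone function that could become the class's `detect_task` method. It is purely hypothetical, the keyword lists are made-up examples, and a small trained classifier would usually be more robust.

```python
import re

# Hypothetical keyword lists; a trained intent classifier would be more robust
TASK_KEYWORDS = {
    "sentiment": ["feel", "opinion", "review", "like", "hate", "love"],
    "topic": ["topic", "about", "category", "subject"],
}

def detect_task(text, default="topic"):
    """Return the task whose keywords occur most often in the text (hypothetical heuristic)."""
    words = re.findall(r"[a-z]+", text.lower())
    scores = {task: sum(words.count(k) for k in keywords) for task, keywords in TASK_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else default

print(detect_task("What did you think of this review? I hate the ending"))  # sentiment
print(detect_task("Which category is this article about?"))  # topic
```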

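Image and Multimodal Embeddings

Creating Image Embeddings

Use a pre-trained convolutional neural network (such as ResNet) or a vision transformer (such as ViT).

Example: Extracting Image Embeddings with ResNet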

import torch
from torchvision import models, transforms
from PIL import Image

# Load pre-trained ResNet model
# (torchvision >= 0.13 uses `weights`; older versions used `pretrained=True`)
model = models.resnet50(weights=models.ResNet50_Weights.DEFAULT)

# Remove the last classification layer, keeping the 2048-d pooled features
model = torch.nn.Sequential(*list(model.children())[:-1])
model.eval()

# Image preprocessing (standard ImageNet normalization)
preprocess = transforms.Compose([
    transforms.Resize(256),
    transforms.CenterCrop(224),
    transforms.ToTensor(),
    transforms.Normalize(
        mean=[0.485, 0.456, 0.406],
        std=[0.229, 0.224, 0.225]
    )
])

def get_image_embedding(image_path):
    img = Image.open(image_path).convert('RGB')  # ensure 3 channels
    img_t = preprocess(img)
    batch_t = torch.unsqueeze(img_t, 0)
    with torch.no_grad():
        embedding = model(batch_t)  # shape: [1, 2048, 1, 1]
    return embedding.squeeze()

embedding = get_image_embedding('path_to_image.jpg')
print(f"Image embedding shape: {embedding.shape}")
# Output: Image embedding shape: torch.Size([2048])

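Multimodal Embeddings with CLIP

CLIP (Contrastive Language-Image Pre-training) aligns images and text in a shared embedding space, so that an image and a caption describing it have similar embeddings.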
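CLIP learns this shared space with a symmetric contrastive objective. Within a batch of matching image-text pairs, each image should be most similar to its own caption, and each caption to its own image. The plain-Python sketch below computes that kind of loss for toy embeddings. The vectors are hypothetical, and the temperature of 0.07 is illustrative (CLIP learns this value during training).

```python
import math

def normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]

def cross_entropy_diagonal(logits):
    """Mean cross-entropy where the correct class for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)
        log_sum_exp = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_sum_exp - row[i]
    return total / len(logits)

def clip_style_loss(image_embs, text_embs, temperature=0.07):
    imgs = [normalize(v) for v in image_embs]
    txts = [normalize(v) for v in text_embs]
    # logits[i][j]: scaled cosine similarity between image i and text j
    logits = [[sum(a * b for a, b in zip(im, tx)) / temperature for tx in txts] for im in imgs]
    transposed = [list(col) for col in zip(*logits)]
    # Average the image-to-text and text-to-image losses
    return (cross_entropy_diagonal(logits) + cross_entropy_diagonal(transposed)) / 2

images = [[1.0, 0.0], [0.0, 1.0]]
matched_texts = [[0.9, 0.1], [0.1, 0.9]]
swapped_texts = [[0.1, 0.9], [0.9, 0.1]]
print(clip_style_loss(images, matched_texts))  # small
print(clip_style_loss(images, swapped_texts))  # large
```

Minimizing this loss pulls matching image and text embeddings together and pushes mismatched pairs apart, which produces the shared space.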

Example: Using CLIP

import torch
from transformers import CLIPProcessor, CLIPModel
from PIL import Image

# Load model and processor
model = CLIPModel.from_pretrained("openai/clip-vit-base-patch32")
processor = CLIPProcessor.from_pretrained("openai/clip-vit-base-patch32")

def get_clip_embeddings(image_path, text):
    image = Image.open(image_path).convert("RGB")
    inputs = processor(text=[text], images=image, return_tensors="pt", padding=True)
    with torch.no_grad():
        outputs = model(**inputs)
    image_embeds = outputs.image_embeds  # Shape: [batch_size, embed_dim]
    text_embeds = outputs.text_embeds  # Shape: [batch_size, embed_dim]
    return image_embeds, text_embeds

image_embeds, text_embeds = get_clip_embeddings('cat.jpg', 'A photo of a cat')
similarity = torch.nn.functional.cosine_similarity(image_embeds, text_embeds)
print(f"Similarity: {similarity.item()}")

Applications:

- Image captioning

- Visual search

- Cross-modal retrieval
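Visual search and cross-modal retrieval both reduce to ranking stored embeddings by their similarity to a query embedding. An example is a CLIP text embedding used to search a collection of CLIP image embeddings. Below is a brute-force sketch with hypothetical file names and toy vectors. Large collections would normally use an approximate nearest-neighbor index (for example, FAISS) instead.

```python
import heapq
import math

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def top_k(query, index, k=2):
    """Return the k (score, key) pairs most similar to the query, best first."""
    return heapq.nlargest(k, ((cosine(query, vec), key) for key, vec in index.items()))

# Hypothetical image embeddings, e.g. precomputed with an image encoder
image_index = {
    "cat_on_sofa.jpg": [0.9, 0.1, 0.0],
    "kitten.jpg":      [0.8, 0.2, 0.1],
    "red_car.jpg":     [0.0, 0.1, 0.95],
}
query = [1.0, 0.1, 0.0]  # e.g. a text embedding for "a photo of a cat"
for score, name in top_k(query, image_index):
    print(f"{name}: {score:.3f}")
```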

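User and Item Embeddings in Recommender Systems

Building User and Item Embeddings

User and item embeddings capture preferences and characteristics, enabling personalized recommendations.

Example: Embedding-Based Collaborative Filtering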

import torch.nn as nn
import torch

class MatrixFactorization(nn.Module):
    def __init__(self, num_users, num_items, embedding_dim):
        super(MatrixFactorization, self).__init__()
        self.user_emb = nn.Embedding(num_users, embedding_dim)
        self.item_emb = nn.Embedding(num_items, embedding_dim)

    def forward(self, user_ids, item_ids):
        user_vectors = self.user_emb(user_ids)
        item_vectors = self.item_emb(item_ids)
        return (user_vectors * item_vectors).sum(1)

# Training involves optimizing the model to predict user-item interactions
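The comment above says only that the model is trained to predict user-item interactions. The sketch below shows what such training can look like, written in plain Python rather than PyTorch. It runs stochastic gradient descent on the squared error of observed ratings, with L2 regularization. The ratings, learning rate, regularization strength, and dimension are hypothetical choices.

```python
import random

def predict(U, V, u, i):
    return sum(a * b for a, b in zip(U[u], V[i]))

def mse(ratings, U, V):
    return sum((r - predict(U, V, u, i)) ** 2 for u, i, r in ratings) / len(ratings)

def train_mf(ratings, num_users, num_items, dim=2, lr=0.05, reg=0.01, epochs=200, seed=0):
    """SGD matrix factorization: rating(u, i) is approximated by dot(U[u], V[i])."""
    rng = random.Random(seed)
    U = [[rng.gauss(0, 0.1) for _ in range(dim)] for _ in range(num_users)]
    V = [[rng.gauss(0, 0.1) for _ in range(dim)] for _ in range(num_items)]
    for _ in range(epochs):
        for u, i, r in ratings:
            err = r - predict(U, V, u, i)
            for k in range(dim):
                uk, vk = U[u][k], V[i][k]
                # Gradient step on squared error plus L2 penalty
                U[u][k] += lr * (err * vk - reg * uk)
                V[i][k] += lr * (err * uk - reg * vk)
    return U, V

# Hypothetical observed (user, item, rating) triples
ratings = [(0, 0, 5.0), (0, 1, 3.0), (1, 0, 4.0), (1, 2, 1.0), (2, 1, 2.0), (2, 2, 5.0)]
U0, V0 = train_mf(ratings, 3, 3, epochs=0)  # same random initialization, untrained
U, V = train_mf(ratings, 3, 3)
print(f"MSE before: {mse(ratings, U0, V0):.3f}, after: {mse(ratings, U, V):.3f}")
print(f"Predicted rating for unseen pair (user 0, item 2): {predict(U, V, 0, 2):.2f}")
```

The last line is the point of the model. After training, the dot product of a user vector and an item vector gives a score even for pairs that were never observed, and those scores can be used to rank recommendations.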

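Combining Structured and Unstructured Data

Incorporate additional features such as user demographics and item descriptions.

Example: A Hybrid Recommender System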

import torch
import torch.nn as nn

class HybridRecommender(nn.Module):
    def __init__(self, num_users, num_items, user_feature_dim, item_feature_dim, embedding_dim):
        super(HybridRecommender, self).__init__()
        # ID embeddings plus linear projections of side features into the same space
        self.user_emb = nn.Embedding(num_users, embedding_dim)
        self.user_feat = nn.Linear(user_feature_dim, embedding_dim)
        self.item_emb = nn.Embedding(num_items, embedding_dim)
        self.item_feat = nn.Linear(item_feature_dim, embedding_dim)

    def forward(self, user_ids, item_ids, user_features, item_features):
        # Sum ID embeddings and projected features, then score with a dot product
        user_embedding = self.user_emb(user_ids) + self.user_feat(user_features)
        item_embedding = self.item_emb(item_ids) + self.item_feat(item_features)
        return (user_embedding * item_embedding).sum(1)

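Applications:

- Product recommendations

- Content personalization

- User analytics

Graph Embeddings

Understanding Graph Structure

A graph consists of nodes (entities) and edges (relationships). Graph embeddings aim to represent nodes in a continuous vector space while preserving the graph's topology.

Graph Embedding Algorithms

Node2Vec

- Random walks: simulates biased random walks to generate sequences of nodes.

- Skip-Gram model: learns embeddings by treating these node sequences like sentences and learning each node's context within them.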
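The bias in Node2Vec's walks comes from two parameters, the return parameter p and the in-out parameter q. Suppose the walk has just stepped from node t to node v. The unnormalized weight for moving next to a neighbor x of v is 1/p if x is t, 1 if x is also a neighbor of t, and 1/q otherwise. The pure-Python sketch below implements that second-order walk on an adjacency dictionary. It is an illustration, not the `node2vec` package's implementation, and the example graph is hypothetical.

```python
import random

def node2vec_walk(adj, start, walk_length, p=1.0, q=1.0, rng=random):
    """One biased second-order random walk over an undirected graph."""
    walk = [start]
    while len(walk) < walk_length:
        current = walk[-1]
        neighbors = sorted(adj[current])
        if not neighbors:
            break  # dead end
        if len(walk) == 1:
            walk.append(rng.choice(neighbors))  # first step is uniform
            continue
        prev = walk[-2]
        weights = []
        for x in neighbors:
            if x == prev:
                weights.append(1.0 / p)  # return to the previous node
            elif x in adj[prev]:
                weights.append(1.0)      # stay at distance 1 from prev (BFS-like)
            else:
                weights.append(1.0 / q)  # move further away (DFS-like)
        walk.append(rng.choices(neighbors, weights=weights, k=1)[0])
    return walk

# Hypothetical small undirected graph as an adjacency dict of sets
adj = {0: {1, 2}, 1: {0, 2, 3}, 2: {0, 1}, 3: {1, 4}, 4: {3}}
rng = random.Random(42)
walks = [node2vec_walk(adj, node, walk_length=5, p=1.0, q=0.5, rng=rng) for node in adj]
print(walks)  # these walks would then be fed to a Skip-gram model
```

A low q, as here, favors outward, depth-first-like exploration. A high q keeps walks near their starting node.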

Example: Implementing Node2Vec

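from node2vec import Node2Vec
import networkx as nx

# Create a random graph (100 nodes, each possible edge present with probability 0.5)
graph = nx.fast_gnp_random_graph(n=100, p=0.5)

# Generate walks and train Node2Vec model (fit() passes its arguments on to gensim's Word2Vec)
node2vec = Node2Vec(graph, dimensions=64, walk_length=10, num_walks=100, workers=4)
model = node2vec.fit(window=5, min_count=1)

# Get embeddings; the node2vec package stores node IDs as strings
node_embeddings = model.wv
print(node_embeddings['0'].shape)  # (64,)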

GraphSAGE

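- Neighborhood aggregation: aggregates features from each node's local neighborhood.

- Scalability: designed to handle large graphs, for example by sampling a fixed number of neighbors per node instead of using the full neighborhood.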
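One GraphSAGE layer with the mean aggregator combines each node's own features with the average of its neighbors' features, roughly h_v' = W_self · h_v + W_neigh · mean(h_u for u in N(v)). This mirrors the default mean aggregation of PyG's `SAGEConv`, which also adds a bias term that is omitted here. A nonlinearity such as ReLU usually follows, as in the GraphSAGENet example. The sketch uses hypothetical 2×2 weight matrices on a toy graph. In practice the weights are learned.

```python
def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def sage_mean_layer(features, adj, W_self, W_neigh):
    """One GraphSAGE-style layer: ReLU(W_self h_v + W_neigh * mean of neighbor h_u)."""
    out = {}
    for v, h_v in features.items():
        neigh = [features[u] for u in adj[v]]
        if neigh:
            mean = [sum(col) / len(neigh) for col in zip(*neigh)]
        else:
            mean = [0.0] * len(h_v)  # isolated node: no neighbor signal
        combined = [a + b for a, b in zip(matvec(W_self, h_v), matvec(W_neigh, mean))]
        out[v] = [max(0.0, c) for c in combined]  # ReLU
    return out

# Hypothetical toy graph, features and weights
adj = {0: [1, 2], 1: [0], 2: [0]}
features = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [0.0, 3.0]}
W_self = [[1.0, 0.0], [0.0, 1.0]]   # identity: keep own features
W_neigh = [[0.5, 0.0], [0.0, 0.5]]  # half weight on the neighbor average
print(sage_mean_layer(features, adj, W_self, W_neigh))
# {0: [1.0, 1.0], 1: [0.5, 1.0], 2: [0.5, 3.0]}
```

Because the layer only needs a node's features and its neighbors' features, the same learned weights can embed nodes that were not present during training.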

Example: Implementing GraphSAGE

import torch
from torch_geometric.nn import SAGEConv
from torch_geometric.data import Data

class GraphSAGENet(torch.nn.Module):
    def __init__(self, in_channels, hidden_channels, out_channels):
        super(GraphSAGENet, self).__init__()
        self.conv1 = SAGEConv(in_channels, hidden_channels)
        self.conv2 = SAGEConv(hidden_channels, out_channels)

    def forward(self, x, edge_index):
        x = self.conv1(x, edge_index).relu()
        x = self.conv2(x, edge_index)
        return x

# Example usage with node features x and graph connectivity edge_index
# (illustrative random data: 100 nodes with 16 features each, 500 random directed edges)
x = torch.randn(100, 16)
edge_index = torch.randint(0, 100, (2, 500))
data = Data(x=x, edge_index=edge_index)
model = GraphSAGENet(in_channels=16, hidden_channels=32, out_channels=64)
node_embeddings = model(data.x, data.edge_index)  # shape: [100, 64]

Applications:

- Node classification

- Link prediction

- Community detection

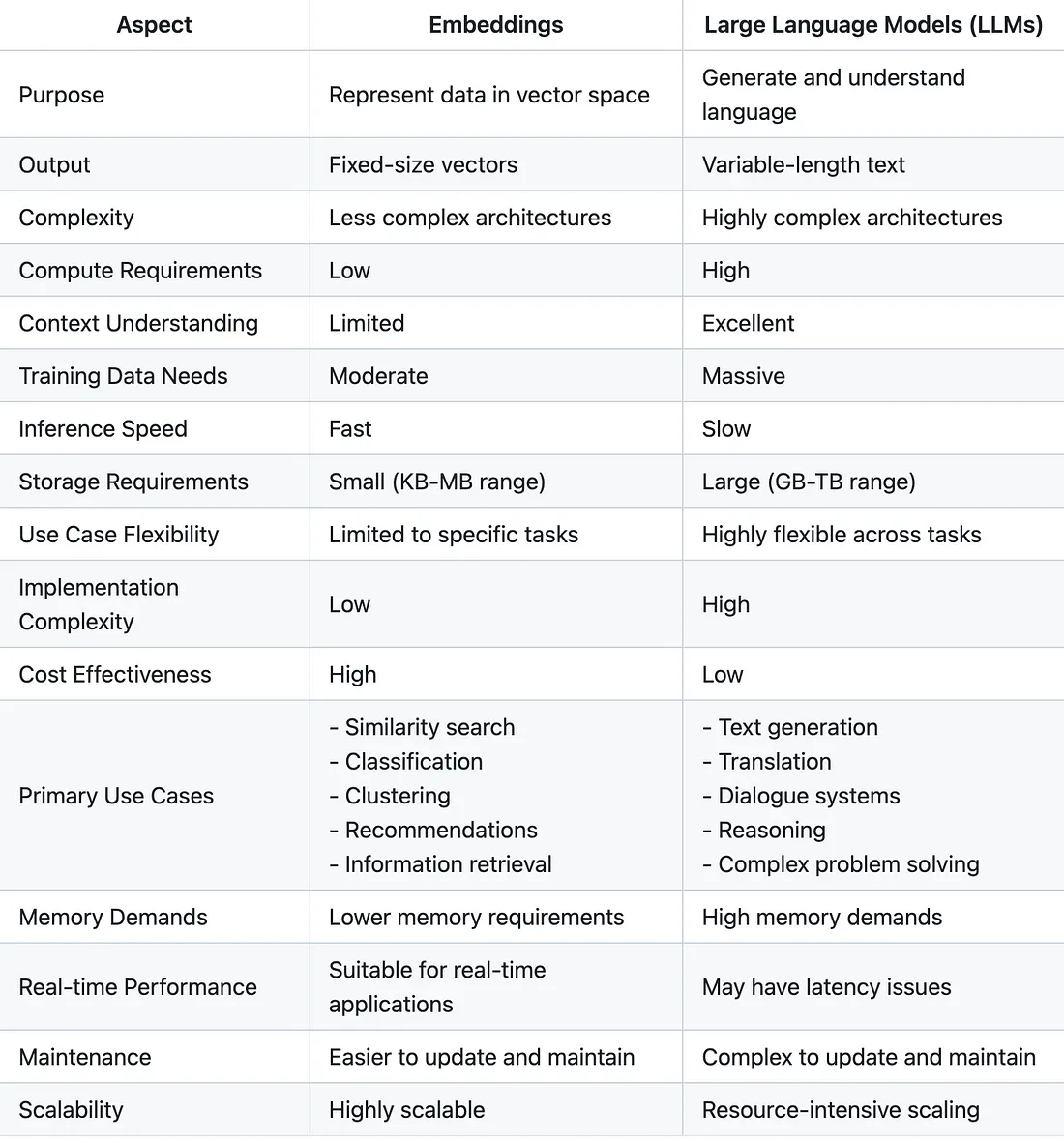

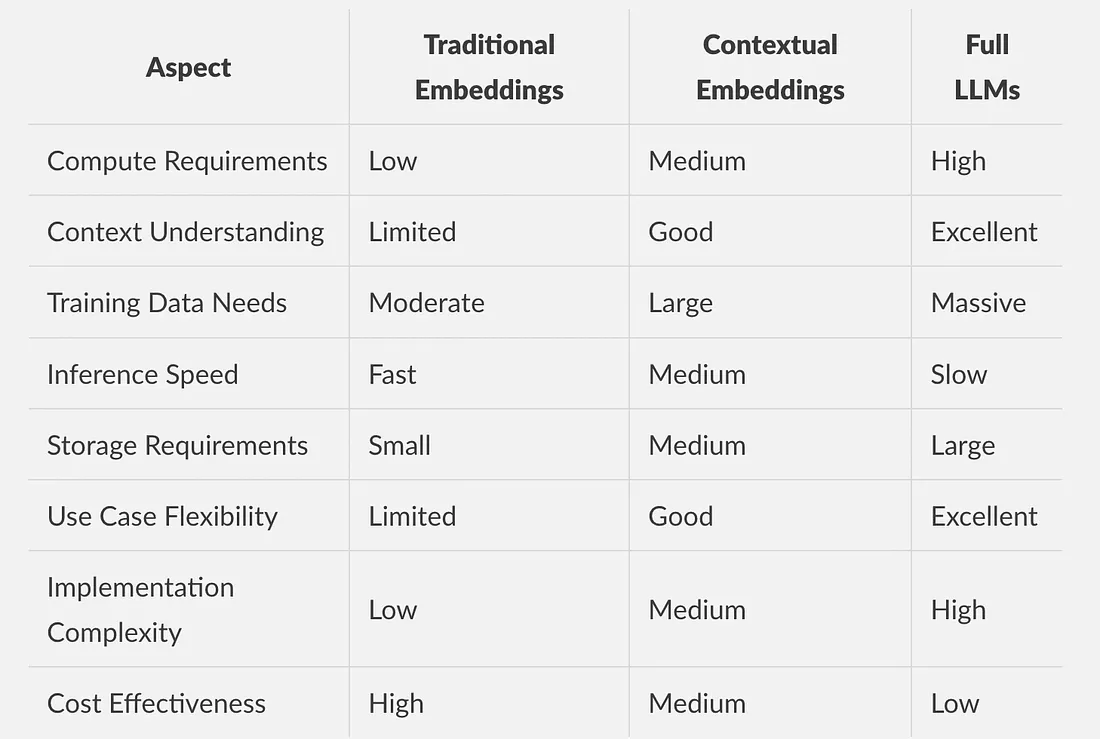

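Comparative Analysis of Embeddings and LLMs

Differences Between Embeddings and LLMs

Conclusion

Embeddings play a crucial role in modern machine learning, bridging the gap between raw data and the numerical representations that models can work with. They range from basic word embeddings to advanced multimodal and graph embeddings, and they power a wide range of applications across many domains.

Understanding the nuances of embeddings, including how they are created, their limitations, and their applications, enables you to build more efficient and effective machine learning models. Whether you are working on natural language processing, computer vision, recommender systems, or graph analytics, embeddings give you powerful tools for improving your solutions.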