[Guide] Fine-Tuning Large Language Models with QLoRA

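Fine-tuning a language model means adapting a pretrained model to a specific task or domain by training it further on a task-specific dataset. The technique builds on the broad knowledge the model acquired during pretraining, so it can perform specialized tasks more effectively. In this article we walk through fine-tuning a language model with QLoRA, a method that combines Low-Rank Adaptation (LoRA) with quantization for efficient training. We also look at how Weights & Biases (W&B) helps track and visualize the fine-tuning process. With these concepts in hand, you will be able to fine-tune large models efficiently, monitor their performance, and make informed decisions to improve their outputs.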

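Why visualize model progress?

During fine-tuning, monitoring the model's progress is essential for understanding its behavior and performance. Visualizing both final and intermediate results lets us:

- Track loss and metrics: monitor the loss and other metrics to assess the model's convergence and performance.

- Analyze hyperparameters: observe how hyperparameters affect performance and make informed decisions while tuning them.

- Debug and diagnose: spot problems or anomalies in the model's outputs and diagnose their root causes.

- Interpret and explain: better understand how the model arrives at its predictions.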

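Benefits of using W&B

Weights & Biases (W&B) brings several benefits to fine-tuning language models:

- Experiment tracking: W&B records every run, making it easy to compare runs and configurations.

- Visualization: metrics and losses are plotted in real time, which helps you understand training and diagnose problems.

- Hyperparameter optimization: W&B provides tools to search over and compare hyperparameter settings, which simplifies tuning.

- Collaboration: teams can share experiment results and visualizations and work together more effectively.

- Model versioning: W&B supports model versioning, so you can track different versions of a model and roll back to an earlier one when needed.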

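Different fine-tuning approaches

These are the most common approaches to fine-tuning large language models (LLMs):

- Approach 1: Layer-wise fine-tuning. Freeze the earlier layers of the pretrained model and fine-tune only the later ones, or fine-tune the entire model.

- Approach 2: Selective parameter fine-tuning. Identify and update only the subset of parameters relevant to the task at hand.

- Approach 3: Adapter-based fine-tuning. Add lightweight "adapter" modules alongside the pretrained model and train only those. The adapters hold the task-specific adjustments while the pretrained weights stay unchanged.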
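As a rough illustration of Approach 1, the sketch below freezes all but the last layer of a small PyTorch model by setting `requires_grad = False`. The toy `nn.Sequential` model and the helpers `freeze_all_but_last` and `count_trainable` are hypothetical stand-ins for a real pretrained LLM.

```python
import torch.nn as nn

# Hypothetical toy model standing in for a pretrained network
model = nn.Sequential(
    nn.Linear(16, 16),
    nn.ReLU(),
    nn.Linear(16, 16),
    nn.ReLU(),
    nn.Linear(16, 4),
)

def freeze_all_but_last(model: nn.Sequential, num_trainable: int) -> None:
    """Freeze every submodule except the last `num_trainable` ones."""
    children = list(model.children())
    for module in children[: len(children) - num_trainable]:
        for param in module.parameters():
            param.requires_grad = False

def count_trainable(model: nn.Module) -> int:
    """Number of parameters that will still receive gradients."""
    return sum(p.numel() for p in model.parameters() if p.requires_grad)

freeze_all_but_last(model, num_trainable=1)
print(count_trainable(model))  # 68: only the final Linear(16, 4) (16*4 weights + 4 biases)
```

In a real run you would typically pass only the parameters with `requires_grad=True` to the optimizer.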

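Setting up Weights & Biases (W&B)

Weights & Biases (W&B) is a platform for tracking, visualizing, and analyzing machine learning experiments, with features for experiment tracking, model visualization, and result analysis. To get started with W&B:

- Sign up: if you don't have an account yet, create a free one on the W&B website.

- Install the library: install the `wandb` Python package.

- Import the library: add `import wandb` to your Python script.

- Log in and initialize: authenticate with `wandb login` (or `wandb.login()` from Python), then call `wandb.init()` at the start of your script, as the training script below does.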

Install all the packages used in this guide:

```bash
pip install "transformers[torch]" datasets wandb peft scikit-learn bitsandbytes accelerate evaluate rouge_score sentencepiece scipy
```

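W&B is now set up. To fine-tune the model we will use QLoRA, so let's first look at how it works in simple terms.

Fine-tuning language models with QLoRA

LoRA (Low-Rank Adaptation) fine-tunes large models efficiently by learning a small update to the weights instead of modifying the weights themselves. Traditional fine-tuning may update every weight; LoRA freezes the pretrained weights, tracks only the change to them, and is usually applied just to selected layers such as the attention projections. It represents the large weight-update matrix ΔW as the product of two much smaller matrices, ΔW = BA, where B is d×r, A is r×k, and the rank r is much smaller than d and k. These small matrices hold the trainable parameters.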
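A minimal NumPy sketch of the idea, assuming a single linear layer with made-up sizes: the pretrained weight `W` stays frozen, and the update is the product of a d_out×r matrix `B` and an r×d_in matrix `A`, scaled by alpha / r (the scaling that PEFT's `lora_alpha` parameter controls). `B` starts at zero, as in the LoRA paper, so training begins from the pretrained behavior.

```python
import numpy as np

rng = np.random.default_rng(0)
d_out, d_in, r, alpha = 64, 128, 4, 8  # illustrative sizes, not taken from a real model

W = rng.standard_normal((d_out, d_in))     # frozen pretrained weight (never updated)
A = rng.standard_normal((r, d_in)) * 0.01  # trainable, small random init
B = np.zeros((d_out, r))                   # trainable, zero init so the update B @ A starts at 0
scaling = alpha / r

def lora_forward(x):
    # Frozen path plus the scaled low-rank update applied to x
    return W @ x + scaling * (B @ (A @ x))

x = rng.standard_normal(d_in)
print(np.allclose(lora_forward(x), W @ x))  # True: at initialization the layer matches the pretrained one
print(W.size, A.size + B.size)              # 8192 768: full matrix vs. trainable LoRA parameters
```

During training only `A` and `B` would receive gradient updates; `W` is never touched.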

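This approach has several benefits:

- Far fewer trainable parameters, which makes fine-tuning faster and more efficient.

- Runs on smaller hardware: the low parameter count makes it possible to fine-tune large models on less powerful hardware, such as mid-range GPUs.

- The original pretrained weights stay untouched, so one base model can serve several lightweight task-specific adapters.

- Compatible with other parameter-efficient techniques, such as quantization, for further optimization.

- Performance is often comparable to full fine-tuning.

- No additional inference latency, because the adapter weights can be merged into the base model.

QLoRA takes LoRA a step further by quantizing the frozen weights of the pretrained base model: they are stored in 4 bits (the NF4 data type) instead of 16 or 32 bits, while the small LoRA matrices are still trained in higher precision. This greatly reduces the memory needed for fine-tuning, making it possible to fine-tune large models on devices with limited memory and compute.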
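The sketch below illustrates the quantization step with a simplified stand-in: symmetric blockwise absmax quantization to signed 4-bit integer codes (15 levels, -7 to 7). This is not the NF4 data type QLoRA actually uses, and the function names are hypothetical; it only shows how each block of weights becomes small integers plus one scale, and how the forward pass then works with the dequantized values.

```python
import numpy as np

def quantize_absmax_4bit(w, block_size=64):
    """Simplified symmetric blockwise quantization to 4-bit codes in [-7, 7]."""
    flat = w.reshape(-1, block_size)                      # assumes w.size is a multiple of block_size
    scales = np.abs(flat).max(axis=1, keepdims=True) / 7  # one scale per block
    scales[scales == 0] = 1.0                             # avoid dividing by zero for all-zero blocks
    # Codes are kept in int8 for simplicity; real kernels pack two 4-bit codes per byte
    q = np.clip(np.round(flat / scales), -7, 7).astype(np.int8)
    return q, scales

def dequantize_4bit(q, scales, shape):
    """Recover approximate weights from the codes and per-block scales."""
    return (q.astype(np.float32) * scales).reshape(shape)

rng = np.random.default_rng(0)
w = rng.standard_normal((128, 64)).astype(np.float32)  # a frozen base-model weight
q, scales = quantize_absmax_4bit(w)
w_hat = dequantize_4bit(q, scales, w.shape)            # what the forward pass would use
max_err = np.abs(w - w_hat).max()
print(max_err <= scales.max() / 2 + 1e-6)              # rounding error is at most half a step
```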

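Example:

- Model size: a model with 1 million 32-bit floating-point weights needs 4 MB of memory.

- 16-bit precision: reduces memory use to 2 MB.

- 8-bit quantization: reduces it further to 1 MB.

- 4-bit quantization (as used by QLoRA): reduces it to 0.5 MB.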
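The arithmetic above follows one formula: bytes = parameters × bits / 8. The hypothetical helper below applies it, counting the weights only and using 1 MB = 10^6 bytes.

```python
def weight_memory_mb(num_params: int, bits: int) -> float:
    """Memory needed to store the weights alone, in MB (1 MB = 10**6 bytes)."""
    return num_params * bits / 8 / 1e6

for bits in (32, 16, 8, 4):
    print(f"{bits}-bit: {weight_memory_mb(1_000_000, bits)} MB")
# 32-bit: 4.0 MB, 16-bit: 2.0 MB, 8-bit: 1.0 MB, 4-bit: 0.5 MB

# The same formula for a hypothetical 7-billion-parameter model stored in 4 bits
print(weight_memory_mb(7_000_000_000, 4))  # 3500.0, i.e. about 3.5 GB
```

These figures cover the weights alone; activations, gradients, optimizer states, and quantization constants add to the real footprint.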

Another example:

The table below lists LoRA's trainable parameters as a percentage of the model's total parameters, for different ranks and model sizes.

| LoRA rank (r) | 7B model | 13B model | 70B model | 180B model |
|------|--------|--------|--------|---------|
| 1 | 0.002% | 0.002% | 0.001% | 0.000% |
| 2 | 0.005% | 0.004% | 0.002% | 0.001% |
| 4 | 0.010% | 0.007% | 0.003% | 0.002% |
| 8 | 0.019% | 0.014% | 0.006% | 0.004% |
| 16 | 0.038% | 0.028% | 0.012% | 0.008% |

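As the table shows, the share of trainable parameters grows with the rank: doubling r roughly doubles it. For a given rank, the share is smaller for larger models.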
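Why the count grows with rank: for a weight matrix of shape d_out × d_in, LoRA adds B (d_out × r) and A (r × d_in), i.e. r × (d_out + d_in) trainable parameters, which is linear in r. A sketch for a hypothetical 4096 × 4096 projection matrix:

```python
def lora_params(d_out: int, d_in: int, r: int) -> int:
    """Trainable parameters LoRA adds to one weight matrix: B is d_out x r, A is r x d_in."""
    return r * (d_out + d_in)

d = 4096       # hypothetical square projection matrix
full = d * d   # parameters in the frozen matrix itself
for r in (1, 2, 4, 8, 16):
    added = lora_params(d, d, r)
    print(f"r={r:2d}: {added:,} trainable params ({100 * added / full:.3f}% of this matrix)")
```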

Now let's walk through an efficient fine-tuning run using the Hugging Face Transformers library, PEFT, and QLoRA.

```python
import wandb
from datasets import Dataset
from peft import LoftQConfig, LoraConfig, get_peft_model
from transformers import T5ForConditionalGeneration, T5Tokenizer, TrainerCallback

# Initialize Weights & Biases once per run
# (add entity="your_username" to log under a specific user or team)
wandb.init(project="t5-finetuning-peft")


# Custom callback for logging. Because report_to="wandb" already sends the
# Trainer's logs to W&B, this callback mainly shows where custom logging could go.
class LoggingCallback(TrainerCallback):
    def on_log(self, args, state, control, logs=None, **kwargs):
        if logs is not None:
            wandb.log(logs)
```

```python
# Sample data: replace it with your own dataset
train_data = [
    {"article": "Fine-tuning language models can significantly improve their performance on specialized tasks. By training the model on task-specific data, it can learn the nuances of the task and provide better results.", "summary": "Fine-tuning improves model performance on specialized tasks."},
    {"article": "Quantized methods like QLoRA are becoming popular for efficient fine-tuning of large language models. These methods reduce the computational resources required for training while maintaining accuracy.", "summary": "QLoRA enables efficient fine-tuning of large models."},
    {"article": "Weight and Biases (W&B) is a powerful tool for tracking and visualizing machine learning experiments. It helps data scientists monitor the training process and gain insights into model performance.", "summary": "W&B aids in tracking and visualizing ML experiments."},
    {"article": "Using pre-trained models as a starting point for fine-tuning can save time and resources. Pre-trained models have already learned a vast amount of information from large datasets.", "summary": "Pre-trained models save time and resources."},
    {"article": "During fine-tuning, it is essential to monitor the model's loss and metrics to ensure it is learning correctly. Visualization tools can help in understanding the training process.", "summary": "Monitoring loss and metrics is crucial during fine-tuning."},
    {"article": "Data augmentation techniques can help improve the robustness of machine learning models. By artificially increasing the diversity of the training data, models can generalize better to new, unseen data.", "summary": "Data augmentation improves model robustness."},
    {"article": "Transfer learning involves using a pre-trained model on a new, but related task. This approach can accelerate the training process and improve model performance.", "summary": "Transfer learning accelerates training and improves performance."},
    {"article": "Hyperparameter tuning is a critical step in optimizing model performance. Techniques like grid search and random search are commonly used to find the best hyperparameter values.", "summary": "Hyperparameter tuning optimizes model performance."},
    {"article": "Regularization methods like dropout and weight decay help prevent overfitting in neural networks. These techniques ensure that the model generalizes well to new data.", "summary": "Regularization prevents overfitting in neural networks."},
    {"article": "Cross-validation is a robust method for assessing the performance of machine learning models. It provides a more accurate estimate of model performance than a single train-test split.", "summary": "Cross-validation provides accurate performance estimates."},
]

test_data = [
    {"article": "Active learning can reduce the amount of labeled data needed for training. By selectively choosing the most informative samples, models can learn more efficiently.", "summary": "Active learning reduces the need for labeled data."},
    {"article": "Ensemble methods combine the predictions of multiple models to improve accuracy. Techniques like bagging and boosting are popular ensemble methods.", "summary": "Ensemble methods improve prediction accuracy."},
    {"article": "Gradient descent is a fundamental optimization algorithm used to train machine learning models. Variants like stochastic gradient descent (SGD) offer improvements in convergence speed.", "summary": "Gradient descent and its variants optimize model training."},
]

evaluation_data = [
    {"article": "Feature engineering is the process of using domain knowledge to create features that make machine learning algorithms work better. It is a crucial step in building effective models.", "summary": "Feature engineering enhances model effectiveness."},
    {"article": "Batch normalization is a technique used to improve the training of deep neural networks. It normalizes the input of each layer, allowing for faster and more stable training.", "summary": "Batch normalization stabilizes and speeds up training."},
    {"article": "Model interpretability is important for understanding how machine learning models make decisions. Techniques like SHAP and LIME provide insights into model predictions.", "summary": "Model interpretability techniques provide insights into predictions."},
]

train_dataset = Dataset.from_list(train_data)
test_dataset = Dataset.from_list(test_data)
eval_dataset = Dataset.from_list(evaluation_data)
```

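We will use the T5 model. T5 is an encoder-decoder model pretrained on a multi-task mixture of unsupervised and supervised tasks, each of which is cast into a text-to-text format.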

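Next, load the T5 tokenizer and model. The `t5-small` checkpoint has about 60 million parameters; stored as 32-bit floats, that is roughly 240 MB (60 million × 4 bytes).

```python
# Load the T5 tokenizer and model
model_name = "t5-small"
tokenizer = T5Tokenizer.from_pretrained(model_name)
base_model = T5ForConditionalGeneration.from_pretrained(model_name)
```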

```python
# Apply PEFT with LoRA. LoftQ quantizes the frozen base weights during initialization
# and chooses the initial LoRA matrices to compensate for the quantization error.
loftq_config = LoftQConfig(loftq_bits=4)  # 4-bit quantization of the base weights

lora_config = LoraConfig(
    task_type="SEQ_2_SEQ_LM",        # T5 is an encoder-decoder (seq2seq) model
    r=6,                             # rank of the adaptation matrices
    lora_alpha=16,                   # the LoRA update is scaled by lora_alpha / r before being added to the frozen weights
    init_lora_weights="loftq",       # required: without it, loftq_config is ignored
    loftq_config=loftq_config,
    target_modules=["q", "k", "v"],  # T5 attention projections to adapt
    lora_dropout=0.1,                # dropout applied in the LoRA branch
    bias="none",                     # do not train any bias terms
)

peft_model = get_peft_model(base_model, lora_config)
peft_model.print_trainable_parameters()
```

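After applying the PEFT configuration, the number of trainable parameters drops from about 60 million to roughly 330,000. Note that `LoftQConfig` only takes effect when `init_lora_weights="loftq"` is set in `LoraConfig`; the original QLoRA recipe instead loads the frozen base model in 4-bit NF4 through bitsandbytes (`BitsAndBytesConfig(load_in_4bit=True, bnb_4bit_quant_type="nf4")`).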
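As a sanity check on that number, here is the arithmetic, assuming t5-small's published configuration (d_model = 512, 8 attention heads of size 64, 6 encoder layers with self-attention, 6 decoder layers with self-attention plus cross-attention) and the `r=6` adapters on `q`, `k`, and `v` configured above:

```python
# Assumed t5-small configuration
d_model = 512          # hidden size
attn_width = 8 * 64    # num_heads * d_kv = output width of q, k, v
encoder_layers = 6     # each has one self-attention block
decoder_layers = 6     # each has self-attention plus cross-attention
r = 6                  # rank from LoraConfig(r=6)
projections = 3        # target_modules = ["q", "k", "v"]

attention_blocks = encoder_layers + 2 * decoder_layers  # 18
per_projection = r * (d_model + attn_width)              # A: r x 512, B: 512 x r -> 6,144
trainable = attention_blocks * projections * per_projection
print(f"{trainable:,}")  # 331,776
```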

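```python
# Preprocess the dataset
def preprocess_function(examples):
    inputs = tokenizer(examples["article"], padding="max_length", truncation=True, max_length=512)
    targets = tokenizer(examples["summary"], padding="max_length", truncation=True, max_length=128)
    # Replace padding token ids in the labels with -100 so the loss ignores them
    inputs["labels"] = [
        [(token if token != tokenizer.pad_token_id else -100) for token in label]
        for label in targets["input_ids"]
    ]
    return inputs

# Drop the raw text columns; the model only needs token ids, attention masks, and labels
text_columns = ["article", "summary"]
train_dataset = train_dataset.map(preprocess_function, batched=True, remove_columns=text_columns)
test_dataset = test_dataset.map(preprocess_function, batched=True, remove_columns=text_columns)
eval_dataset = eval_dataset.map(preprocess_function, batched=True, remove_columns=text_columns)
```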

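```python
# Define the compute_metrics function
import evaluate
import numpy as np
from transformers import EvalPrediction

rouge = evaluate.load("rouge")  # load the metric once, not on every evaluation

def compute_metrics(pred: EvalPrediction):
    pred_ids = pred.predictions
    if isinstance(pred_ids, tuple):
        pred_ids = pred_ids[0]
    labels_ids = pred.label_ids
    # -100 marks ignored/padded positions; map it back to the pad token before decoding
    pred_ids = np.where(pred_ids != -100, pred_ids, tokenizer.pad_token_id)
    labels_ids = np.where(labels_ids != -100, labels_ids, tokenizer.pad_token_id)
    # Decode the predicted and reference summaries
    decoded_preds = [p.strip() for p in tokenizer.batch_decode(pred_ids, skip_special_tokens=True)]
    decoded_labels = [label.strip() for label in tokenizer.batch_decode(labels_ids, skip_special_tokens=True)]
    result = rouge.compute(predictions=decoded_preds, references=decoded_labels)
    # evaluate's ROUGE returns aggregated F-measures as plain floats
    return {"rouge1": result["rouge1"]}
```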
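To make the `rouge1` score less of a black box, here is a simplified sketch of ROUGE-1 F1: the unigram overlap between prediction and reference. The function `rouge1_f1` is hypothetical and splits on whitespace only; the `rouge_score` package behind `evaluate`'s ROUGE metric uses its own tokenizer, so its numbers can differ.

```python
from collections import Counter

def rouge1_f1(prediction: str, reference: str) -> float:
    """Simplified ROUGE-1 F1 over lowercase whitespace tokens."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    # Clipped unigram matches: each token counts at most as often as it appears in both
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)

# Precision 3/3, recall 3/7 -> F1 = 0.6
print(rouge1_f1("Fine-tuning improves performance",
                "Fine-tuning improves model performance on specialized tasks"))
```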

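```python
# Define the training arguments
from transformers import Seq2SeqTrainingArguments

training_args = Seq2SeqTrainingArguments(
    output_dir="./results",
    do_eval=True,
    eval_strategy="steps",       # evaluate every eval_steps steps instead of once per epoch
    eval_steps=10,               # evaluate every 10 steps
    logging_dir="./logs",        # directory for storing logs
    logging_steps=20,            # log training metrics every 20 steps
    learning_rate=2e-5,
    per_device_train_batch_size=20,
    per_device_eval_batch_size=20,
    num_train_epochs=30,
    weight_decay=0.01,
    predict_with_generate=True,  # generate summaries during evaluation so ROUGE is computed on text
    generation_max_length=128,   # matches the max_length used for the summaries
    report_to="wandb",           # send logs to the active W&B run
)
```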
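With only 10 training examples and a batch size of 20, each epoch is a single optimization step. A quick calculation, assuming one device and no gradient accumulation, shows when periodic evaluation and logging fire:

```python
import math

# Values from the sample data and training arguments above
num_train_examples = 10
per_device_train_batch_size = 20
num_train_epochs = 30
eval_steps, logging_steps = 10, 20

# Assumes a single device and no gradient accumulation
steps_per_epoch = math.ceil(num_train_examples / per_device_train_batch_size)  # 1
total_steps = steps_per_epoch * num_train_epochs                               # 30
eval_at = [step for step in range(1, total_steps + 1) if step % eval_steps == 0]
log_at = [step for step in range(1, total_steps + 1) if step % logging_steps == 0]
print(total_steps, eval_at, log_at)  # 30 [10, 20, 30] [20]
```

So with this toy dataset, `logging_steps=20` yields only one periodic training-loss point in W&B; a smaller value gives a more detailed loss curve.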

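```python
# Initialize the trainer
from transformers import Seq2SeqTrainer

trainer = Seq2SeqTrainer(
    model=peft_model,
    args=training_args,
    train_dataset=train_dataset,
    eval_dataset=eval_dataset,
    processing_class=tokenizer,     # this argument is named `tokenizer` in transformers < 4.46
    compute_metrics=compute_metrics,
    callbacks=[LoggingCallback()],  # add the custom logging callback
)

trainer.train()
eval_results = trainer.evaluate()
```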

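```python
# Save the trained LoRA adapter (adapter weights and config only)
trainer.save_model("./t5-finetuned-adapter")
```

Now that we have a trained model, we merge it with the base model by calling `merge_and_unload()`. This method folds the LoRA layers into the base model's weights and returns a regular Transformers model without the PEFT wrappers.

```python
# Merge the LoRA adapter into the base model
merged_model = peft_model.merge_and_unload()

# Save the merged model and tokenizer (to a different directory than the adapter)
merged_model.save_pretrained("./t5-finetuned-merged")
tokenizer.save_pretrained("./t5-finetuned-merged")

# Finish the W&B run
wandb.finish()
```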
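Why merging adds no inference latency: folding the scaled low-rank product into the base weight, W + (alpha / r)·B·A, gives a single matrix that produces the same outputs as the separate LoRA path. A NumPy sketch with random stand-in matrices; the sizes are illustrative, while r and alpha match the config above.

```python
import numpy as np

rng = np.random.default_rng(1)
d_out, d_in, r, alpha = 32, 16, 6, 16  # r and alpha as in the LoraConfig above; sizes are illustrative
W = rng.standard_normal((d_out, d_in))  # frozen base weight
A = rng.standard_normal((r, d_in))      # stand-ins for trained LoRA factors
B = rng.standard_normal((d_out, r))
scaling = alpha / r

# Merging folds the scaled low-rank update into a single dense matrix
W_merged = W + scaling * (B @ A)

x = rng.standard_normal((d_in, 5))          # five input vectors as columns
unmerged = W @ x + scaling * (B @ (A @ x))  # two paths: frozen weight + LoRA branch
merged = W_merged @ x                       # one matrix multiply, same shape as the original layer
print(np.allclose(unmerged, merged))        # True
```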

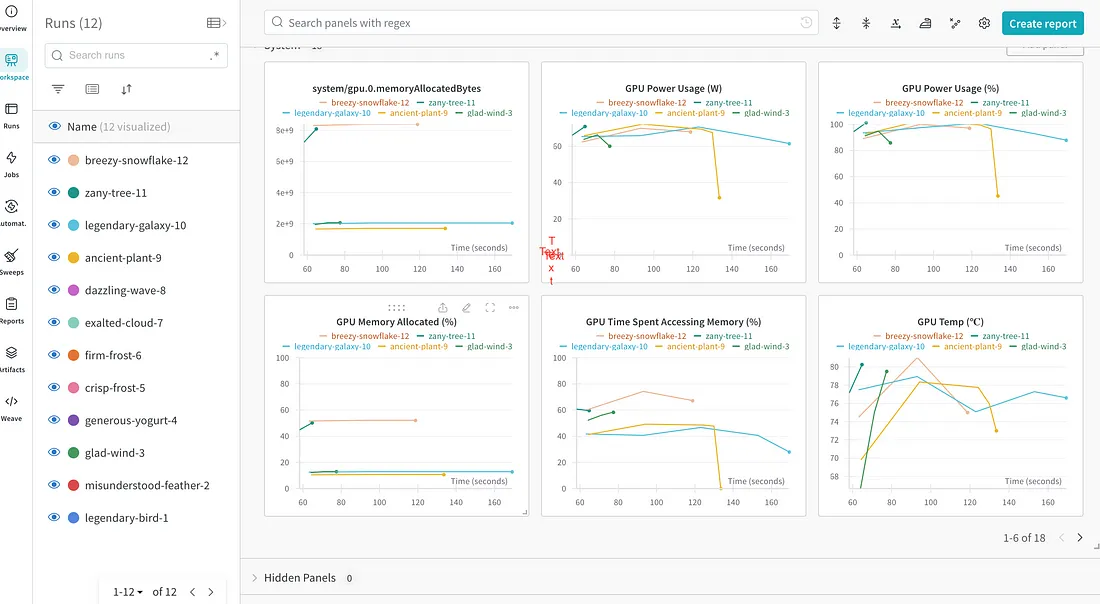

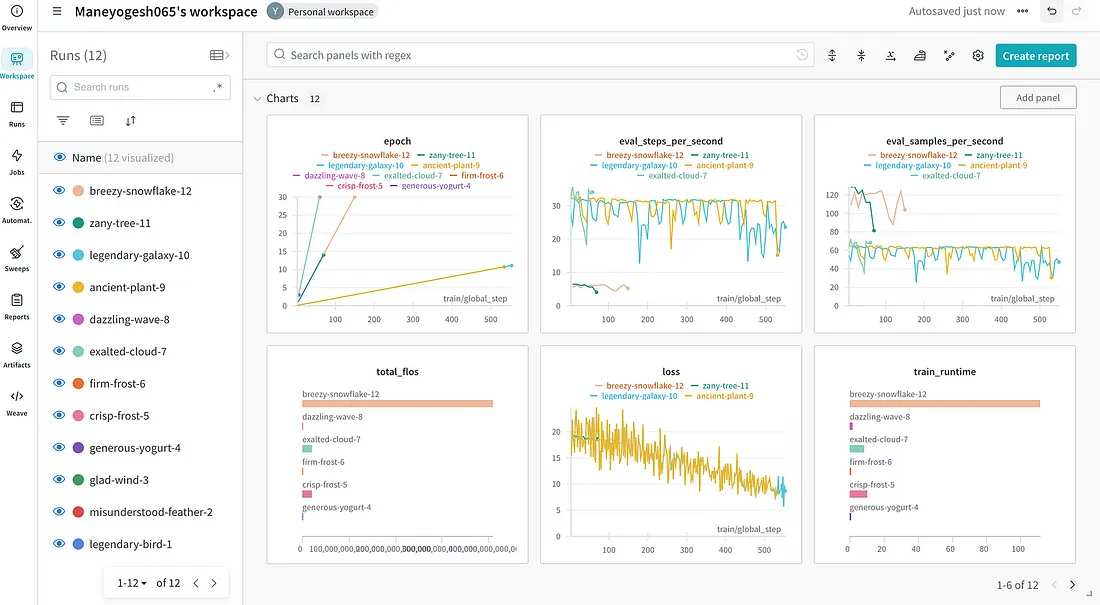

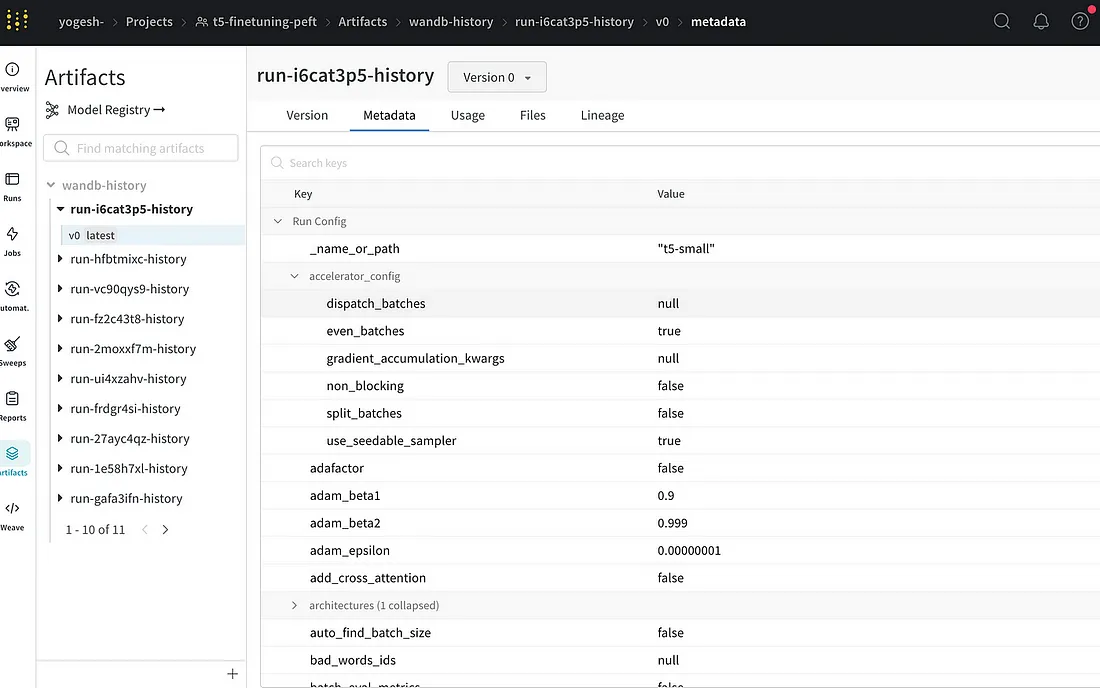

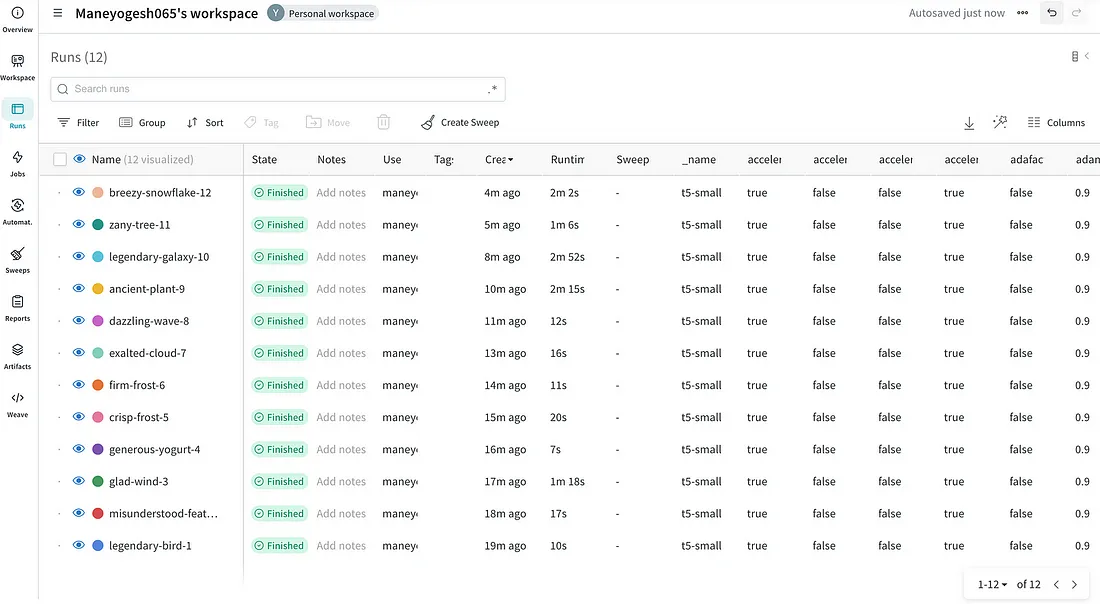

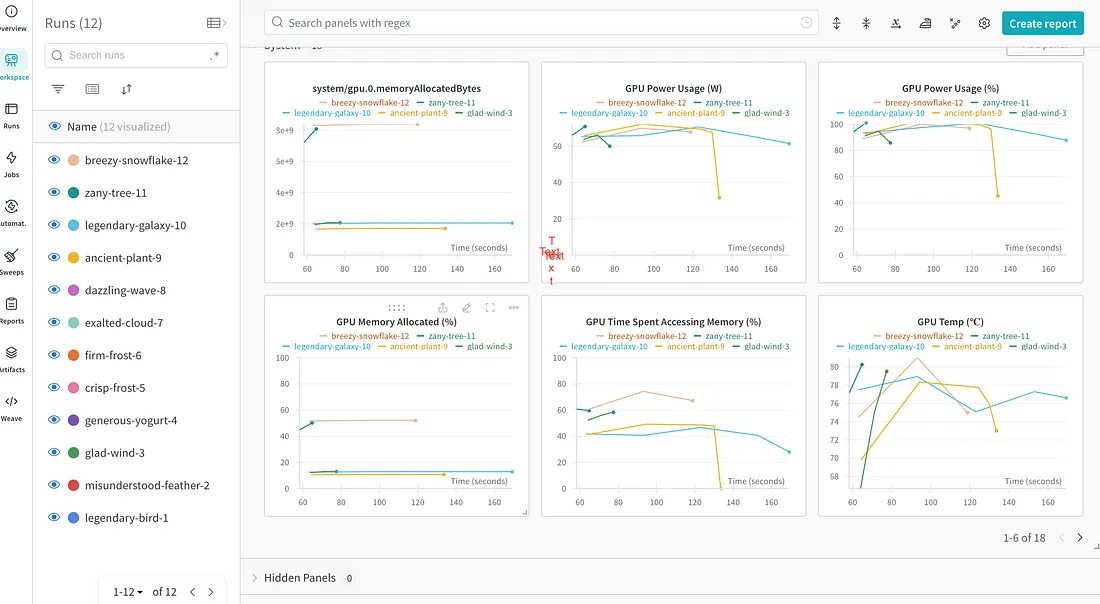

一旦完成,你将收到Weights & Biases(Wandb)提供的一些链接和日志。点击这些链接后,你将看到以下结果,其中包括图形化的可视化表示。你还可以根据自己的需求添加日志、表格和许多其他元素。

我们在Wandb中获得了如此出色的可视化效果:

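Conclusion

QLoRA reduces both the memory footprint of the model and the number of trainable parameters, making it practical to train and use large language models, often with performance comparable to full fine-tuning. W&B gives us insight into the fine-tuning process so we can make informed decisions to improve the model. Its visualization tools let us monitor training progress, track hyperparameters, and analyze the impact of different configurations. Making W&B part of the fine-tuning workflow significantly improves our understanding of, and control over, the training process.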