[Guide] Building Multi-Agent RAG with LangChain, LangGraph, and Gemma 2

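A retrieval agent is useful when we want the system to decide for itself whether to retrieve documents from an index (when they are relevant) or to fall back to a web search tool instead.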

To build a retrieval agent, we simply give the large language model (LLM) access to a retriever tool.

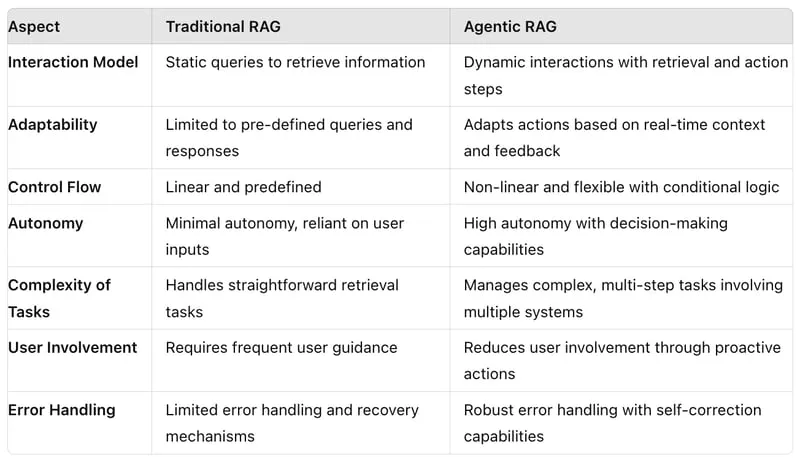

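Agentic RAG (agentic retrieval-augmented generation) is an agent-based approach to answering questions over multiple documents in a coordinated way. Standard RAG works well for simple queries over a small number of documents. Agentic RAG goes a step further by adding a layer of intelligence through AI agents. These agents act as autonomous decision-makers: they analyze initial results and strategically choose the most effective tool for further data retrieval.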


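As an evolution of traditional RAG, Agentic RAG lets agents break a complex task into several subtasks that are easier to handle. The agents also have memory (such as chat history), so they know what has already happened and which steps come next.

When a task requires it, the agents can call the APIs or tools they have been given. They can also reason about intermediate results and act on them. This is what sets Agentic RAG apart: the system breaks a complex query into manageable parts, assigns each part to a specific agent, and keeps those agents coordinated.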

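Key Advantages and Use Cases of Agentic RAG

Agentic RAG offers several advantages over traditional systems. Its autonomous agents work independently, so complex queries can be handled efficiently and in parallel. The system is also adaptive and can adjust its strategy dynamically as new information arrives or user needs change. In marketing, for example, Agentic RAG can analyze customer data to generate personalized communications and deliver real-time competitive intelligence. It can also support decision-making in campaign management and help optimize search engine strategy.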

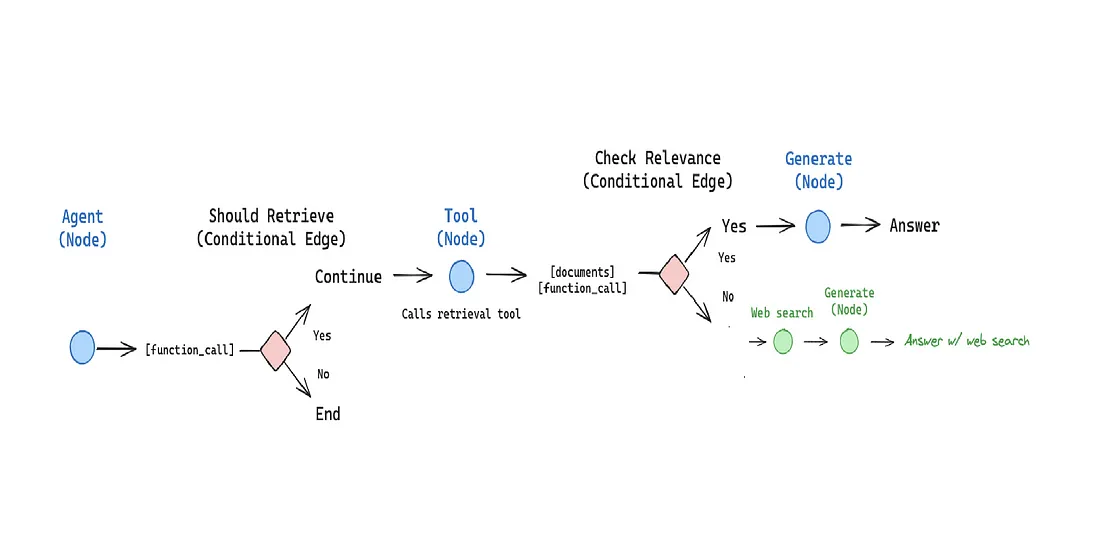

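- The process starts at the agent node.

- The agent then decides whether it needs to retrieve information.

- If the answer is "yes", the process moves to the tools node, where a specific tool or resource is selected.

- From the tools node, the process continues to the continue node.

- At the continue node, a decision is made whether to continue or to end the process.

- If the decision is "yes", the process moves to the check relevance node.

- At the check relevance node, a condition is evaluated. If it is met (yes), the process moves to the generate node, where the final answer is generated.

- If the condition at the check relevance node is not met (no), the process moves to the web search node.

- At the web search node, a web search is performed, and the final answer is generated from the information it returns.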
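The following dependency-free sketch makes the routing concrete by walking the same nodes as the list above. It is not the LangGraph implementation. `run_flow`, `is_relevant`, `BLOG_TOPICS` and the `wants_retrieval`/`keep_going` flags are hypothetical names, and a keyword check stands in for the LLM decisions.

```python
# Dependency-free sketch of the flow above. Node names mirror the list;
# every decision is a hypothetical stub (no LLM, no tools are called).

BLOG_TOPICS = {"rag", "graph", "ollama"}  # hypothetical stand-in for the indexed articles


def is_relevant(question: str) -> bool:
    """Stub for the check relevance node: keyword overlap with the blog topics."""
    words = {w.strip("?.,!").lower() for w in question.split()}
    return bool(words & BLOG_TOPICS)


def run_flow(question: str, wants_retrieval: bool = True, keep_going: bool = True) -> list:
    """Return the sequence of nodes visited for one question."""
    path = ["agent"]
    if not wants_retrieval:  # the agent decided no retrieval is needed
        return path + ["end"]
    path += ["tools", "continue"]
    if not keep_going:  # the continue node decided to stop
        return path + ["end"]
    path.append("check_relevance")
    if is_relevant(question):
        path.append("generate")
    else:
        path += ["web_search", "generate"]  # web results become the context
    return path + ["end"]


print(run_flow("how do you play cricket?"))
print(run_flow("What is Graph RAG?"))
```

The stubs are deliberately trivial. In the real graph, an LLM makes each of these decisions.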

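Problem statement:

I have written several articles on RAG, Graph RAG vs. RAG, and Ollama. Suppose someone wants to learn a concept from these blogs, for example, "What is Graph RAG?"

Solution:

Either someone who has read the articles answers the question, or you read them yourself...

Without reading the articles, neither you nor anyone else can answer questions about the blogs. How could it be otherwise?

"Actually, with our AI agent, it is possible!"

Our agent is equipped with two sub-agents (tools): a retriever over the articles and a web search agent.

If someone asks a question related to the articles, the agent calls the retriever tool to answer it.

If someone asks a question unrelated to the articles, the retrieved content is graded as irrelevant. The question is then redirected to the web search tool, and the answer is generated from the web results...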

Install the following in your Google Colab notebook:

```
!pip install -U langchain_community tiktoken langchain-openai langchainhub chromadb langchain langgraph langchain-google-genai langchain-groq
```

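```python
import os

# Optional: LangSmith tracing, useful for inspecting each step of the graph
os.environ["LANGCHAIN_TRACING_V2"] = "true"
os.environ["LANGCHAIN_ENDPOINT"] = "https://api.smith.langchain.com"
os.environ["LANGCHAIN_API_KEY"] = ""  # paste your LangSmith API key here
```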

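Embeddings are generated with Google's `models/embedding-001` model through `GoogleGenerativeAIEmbeddings`. You can swap in any embedding model you like.

I have used three of my Medium articles.

The pipeline is: text -> split -> embed -> store in the Chroma database -> retriever.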
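A toy, dependency-free version of this pipeline shows what each stage does. It is only a sketch under stated assumptions: word counts stand in for real embeddings, and an in-memory list stands in for Chroma. `split`, `embed`, `cosine` and `ToyVectorStore` are hypothetical names, not LangChain APIs.

```python
import math
from collections import Counter


def split(text: str, size: int) -> list:
    """Split text into chunks of `size` whitespace-separated words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words count vector (stand-in for a real model)."""
    return Counter(w.strip(".,?!").lower() for w in text.split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorStore:
    """Keeps (chunk, vector) pairs in memory, like a tiny Chroma collection."""

    def __init__(self):
        self.rows = []

    def add(self, chunks):
        self.rows.extend((chunk, embed(chunk)) for chunk in chunks)

    def retrieve(self, query: str, k: int = 2) -> list:
        """Return the k chunks most similar to the query."""
        q = embed(query)
        ranked = sorted(self.rows, key=lambda row: cosine(q, row[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]


text = ("Graph RAG uses a knowledge graph of entities and relationships. "
        "Ollama runs large language models locally to keep data private.")
store = ToyVectorStore()
store.add(split(text, size=10))  # text -> split -> embed -> store
print(store.retrieve("what is graph rag", k=1))  # -> retriever
```

A real embedding model captures meaning rather than exact word overlap, but the store-then-rank-by-similarity structure is the same.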

```python
from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain_community.document_loaders import WebBaseLoader
from langchain_community.vectorstores import Chroma
from langchain_google_genai import GoogleGenerativeAIEmbeddings

GOOGLE_API_KEY = ""  # your Google Generative AI API key
GROQ_API_KEY = ""    # your Groq API key (used later to call Gemma 2)

embeddings = GoogleGenerativeAIEmbeddings(model="models/embedding-001", google_api_key=GOOGLE_API_KEY)

urls = [
    "https://medium.com/@sridevi.gogusetty/rag-vs-graph-rag-llama-3-1-8f2717c554e6",
    "https://medium.com/@sridevi.gogusetty/retrieval-augmented-generation-rag-gemini-pro-pinecone-1a0a1bfc0534",
    "https://medium.com/@sridevi.gogusetty/introduction-to-ollama-run-llm-locally-data-privacy-f7e4e58b37a0",
]

# Load each article; load() returns a list of Documents per URL, so flatten them
docs = [WebBaseLoader(url).load() for url in urls]
docs_list = [item for sublist in docs for item in sublist]

# Split into chunks of at most 250 tokens (counted with tiktoken), no overlap
text_splitter = RecursiveCharacterTextSplitter.from_tiktoken_encoder(
    chunk_size=250, chunk_overlap=0
)
doc_splits = text_splitter.split_documents(docs_list)

# Add to the vector DB
vectorstore = Chroma.from_documents(
    documents=doc_splits,
    collection_name="rag-chroma",
    embedding=embeddings,
)

retriever = vectorstore.as_retriever()
```
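`chunk_size=250, chunk_overlap=0` means each chunk holds at most 250 tiktoken tokens and consecutive chunks share nothing. The sketch below illustrates only the size/overlap arithmetic, using a plain sliding window over words. The real `RecursiveCharacterTextSplitter` also tries to break on separators such as paragraphs and lines, so its boundaries will differ. `chunk_tokens` is a hypothetical helper.

```python
def chunk_tokens(tokens: list, chunk_size: int, chunk_overlap: int = 0) -> list:
    """Fixed-size sliding-window chunking; consecutive chunks share `chunk_overlap` tokens."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("need 0 <= chunk_overlap < chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        if start + chunk_size >= len(tokens):  # this window already reached the end
            break
    return chunks


words = "one two three four five six seven eight nine ten".split()
print(chunk_tokens(words, chunk_size=4))                   # no overlap, as in the tutorial
print(chunk_tokens(words, chunk_size=4, chunk_overlap=2))  # neighbours share 2 words
```

A non-zero overlap keeps a sentence that straddles a boundary visible in both chunks, at the cost of storing more chunks.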

Next, wrap the retriever in a retriever tool:

```python
from langchain.tools.retriever import create_retriever_tool

# The name and description tell the LLM when this tool is worth calling
retriever_tool = create_retriever_tool(
    retriever,
    "retrieve_blog_posts",
    "Search and return information about sridevi gogusetty blog posts on RAG, RAG VS Graph RAG, Ollama.",
)

tools = [retriever_tool]
```
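Conceptually, the retriever tool is a named, described function that the LLM can call. It runs the retriever and returns the chunks' text as one string; by default, `create_retriever_tool` joins them with a blank line. The class below is a hypothetical, dependency-free stand-in that shows this shape. `RetrieverToolSketch`, `Doc` and `fake_retriever` are not LangChain APIs.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Doc:
    page_content: str


@dataclass
class RetrieverToolSketch:
    """Hypothetical stand-in for the object create_retriever_tool builds."""
    name: str
    description: str
    retrieve: Callable[[str], List[Doc]]
    document_separator: str = "\n\n"

    def invoke(self, query: str) -> str:
        # The tool's output is plain text: the retrieved chunks joined together
        docs = self.retrieve(query)
        return self.document_separator.join(doc.page_content for doc in docs)


def fake_retriever(query: str) -> List[Doc]:
    # Hypothetical retriever that ignores the query and returns fixed chunks
    return [Doc("Graph RAG explores a knowledge graph."), Doc("Vector RAG searches by similarity.")]


tool = RetrieverToolSketch(
    name="retrieve_blog_posts",
    description="Search and return information about the blog posts.",
    retrieve=fake_retriever,
)
print(tool.invoke("graph rag"))
```

The description matters more than it looks: the LLM reads it to decide whether a question is worth sending to this tool.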

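The agent state stores and represents the state of the agent graph as it moves between nodes. In this implementation, the state is a single `messages` list. The user query, the agent's tool calls, the context documents (retrieved from the vector database and/or web search), and the LLM's response are all appended to it in order.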

```python
from typing import Annotated, Sequence, TypedDict

from langchain_core.messages import BaseMessage
from langgraph.graph.message import add_messages


class AgentState(TypedDict):
    # The add_messages function defines how an update should be processed.
    # Default is to replace. add_messages says "append".
    messages: Annotated[Sequence[BaseMessage], add_messages]
```
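The `add_messages` reducer is why each node can return only its new messages: the update is appended to the existing list instead of replacing it. Below is a simplified, hypothetical re-implementation that shows the idea, with messages as plain dicts. As I understand it, the real reducer also converts tuples such as `("user", "...")` into message objects and assigns ids to messages that lack one. Those details are omitted here.

```python
def add_messages_sketch(left: list, right: list) -> list:
    """Append `right` to `left`; a message whose id already exists replaces the old one."""
    merged = list(left)
    position = {msg["id"]: i for i, msg in enumerate(merged)}
    for msg in right:
        if msg["id"] in position:
            merged[position[msg["id"]]] = msg  # same id: replace in place
        else:
            position[msg["id"]] = len(merged)
            merged.append(msg)  # new id: append
    return merged


state = {"messages": [{"id": "1", "content": "What is Graph RAG?"}]}
# Each node returns a partial update; the reducer folds it into the state
for update in ([{"id": "2", "content": "<tool call: retrieve_blog_posts>"}],
               [{"id": "3", "content": "<retrieved chunks>"}]):
    state["messages"] = add_messages_sketch(state["messages"], update)
print([m["content"] for m in state["messages"]])
```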

To use the web search tool, you need a Tavily API key (TAVILY_API_KEY). You can get one at https://app.tavily.com/.

```python
import getpass
import os

os.environ["TAVILY_API_KEY"] = getpass.getpass()
```

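Here we use the Tavily API as our web search tool, so we set up a connection to it. The search results are used as additional context. The tool below is configured to return up to 5 results (`max_results=5`), but you can lower this to, say, the top 3, or load more.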

```python
### Search
from langchain_community.tools.tavily_search import TavilySearchResults
from langchain_community.utilities.tavily_search import TavilySearchAPIWrapper

search = TavilySearchAPIWrapper()  # reads TAVILY_API_KEY from the environment

web_search_tool = TavilySearchResults(
    api_wrapper=search,
    max_results=5,  # number of search hits to return
    include_answer=True,
    include_raw_content=True,
    include_images=True,
)
```
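The `web_search_agent` node joins the `content` field of every hit into one context string. If you request more hits than you want to feed the model, a small helper can cap the context at the top k results. `format_web_results` below is hypothetical. It assumes each hit is a dict with a `content` key, which matches how that node reads the tool's output.

```python
def format_web_results(results: list, k: int = 3) -> str:
    """Join the 'content' of the first k search hits into one context string."""
    contents = [hit.get("content", "") for hit in results[:k]]
    return "\n".join(c for c in contents if c)  # skip empty snippets


# Hypothetical hits shaped like a list of {"url", "content"} dicts
hits = [
    {"url": "https://example.com/a", "content": "first snippet"},
    {"url": "https://example.com/b", "content": ""},
    {"url": "https://example.com/c", "content": "third snippet"},
    {"url": "https://example.com/d", "content": "fourth snippet"},
]
print(format_web_results(hits))  # uses only the first 3 hits
```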

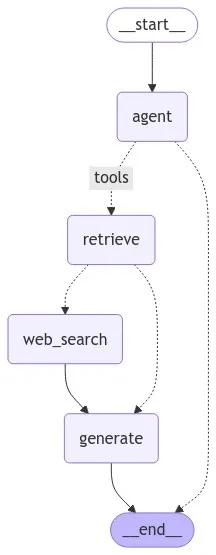

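Define the nodes => "web_search_agent", "retrieve_agent", "generate"

web_search_agent: runs the web search tool on the given query and retrieves information from the web.

retrieve_agent: calls the LLM with the retriever tool bound to it. The model decides whether to retrieve using the retriever tool or simply end.

generate: the standard RAG step that produces the LLM response from the query and the context documents.

Define the edges => "grade_documents"

grade_documents: a conditional edge that uses an LLM grader to decide whether the retrieved documents are relevant to the question. If they are, it routes to generate; otherwise, it routes to web_search.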
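The decision in `grade_documents` is a conditional edge: a function of the state whose return value names the next node. The sketch below mirrors that contract without an LLM. `keyword_grader` is a hypothetical stand-in for the Gemma 2 grader (a crude substring check), and `route` plays the role of `grade_documents`.

```python
from typing import Literal

STOP_WORDS = {"what", "is", "the", "a", "how", "do", "you", "between", "of", "vs", "difference"}


def keyword_grader(question: str, document: str) -> str:
    """Hypothetical stand-in for the LLM grader: 'yes' if any content word appears in the document."""
    keywords = {w.strip("?.,!").lower() for w in question.split()} - STOP_WORDS - {""}
    text = document.lower()
    return "yes" if any(word in text for word in keywords) else "no"


def route(question: str, document: str) -> Literal["generate", "web_search"]:
    """Mirror of grade_documents: the returned string is the name of the next node."""
    score = keyword_grader(question, document)
    return "generate" if score.strip().lower() == "yes" else "web_search"


chunk = "When processing a query, Graph RAG explores the knowledge graph."
print(route("What is difference between RAG VS GRAPH RAG", chunk))
print(route("how do you play cricket?", chunk))
```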

```python
from typing import Annotated, Literal, Sequence

from typing_extensions import TypedDict

from langchain import hub
from langchain_core.documents import Document
from langchain_core.messages import BaseMessage, HumanMessage
from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import PromptTemplate
from langchain_groq import ChatGroq
from langgraph.prebuilt import tools_condition
from pydantic import BaseModel, Field
```

```python
### Edges

def grade_documents(state) -> Literal["generate", "web_search"]:
    """
    Determines whether the retrieved documents are relevant to the question.

    Args:
        state (messages): The current state

    Returns:
        str: A decision for whether the documents are relevant or not
    """
    print("---CHECK RELEVANCE---")

    # Data model
    class grade(BaseModel):
        """Binary score for relevance check."""

        binary_score: str = Field(description="Relevance score 'yes' or 'no'")

    # LLM
    model = ChatGroq(temperature=0, model_name="gemma2-9b-it", groq_api_key=GROQ_API_KEY)

    # LLM with tool and validation
    llm_with_tool = model.with_structured_output(grade)

    # Prompt
    prompt = PromptTemplate(
        template="""You are a grader assessing relevance of a retrieved document to a user question. \n
        Here is the retrieved document: \n\n {context} \n\n
        Here is the user question: {question} \n
        If the document contains keyword(s) or semantic meaning related to the user question, grade it as relevant. \n
        Give a binary score 'yes' or 'no' to indicate whether the document is relevant to the question.""",
        input_variables=["context", "question"],
    )

    # Chain
    chain = prompt | llm_with_tool

    messages = state["messages"]
    last_message = messages[-1]      # ToolMessage holding the retrieved chunks
    question = messages[0].content   # the original user question
    docs = last_message.content

    scored_result = chain.invoke({"question": question, "context": docs})
    score = scored_result.binary_score

    # Normalize the score: the model may answer "Yes" or " yes" instead of "yes"
    if score.strip().lower() == "yes":
        print("---DECISION: DOCS RELEVANT---")
        return "generate"
    else:
        print("---DECISION: DOCS NOT RELEVANT---")
        print(score)
        return "web_search"
```

```python
### Nodes

def web_search_agent(state):
    """
    Web search based on the original user question.

    Args:
        state (dict): The current graph state

    Returns:
        dict: Updates the messages key with the appended web results
    """
    print("---WEB SEARCH---")

    messages = state["messages"]
    query = messages[0].content  # the original user question
    print(messages)

    # Web search: each hit is a dict with (at least) "url" and "content"
    docs = web_search_tool.invoke({"query": query})
    web_results = "\n".join(d["content"] for d in docs)

    # We return a list, because this will get added to the existing list
    # (add_messages coerces the plain string into a message)
    return {"messages": [web_results]}
```

```python
def retrieve_agent(state):
    """
    Invokes the agent model to generate a response based on the current state. Given
    the question, it will decide to retrieve using the retriever tool, or simply end.

    Args:
        state (messages): The current state

    Returns:
        dict: The updated state with the agent response appended to messages
    """
    print("---CALL AGENT---")
    messages = state["messages"]
    model = ChatGroq(temperature=0, model_name="gemma2-9b-it", groq_api_key=GROQ_API_KEY)
    model = model.bind_tools(tools)  # expose retrieve_blog_posts to the model
    response = model.invoke(messages)
    # We return a list, because this will get added to the existing list
    return {"messages": [response]}
```

```python
def rewrite(state):
    """
    Transform the query to produce a better question.

    Note: this helper is not wired into the graph built in this tutorial.

    Args:
        state (messages): The current state

    Returns:
        dict: The updated state with re-phrased question
    """
    print("---TRANSFORM QUERY---")
    messages = state["messages"]
    question = messages[0].content

    msg = [
        HumanMessage(
            content=f""" \n
    Look at the input and try to reason about the underlying semantic intent / meaning. \n
    Here is the initial question:
    \n ------- \n
    {question}
    \n ------- \n
    Formulate an improved question: """,
        )
    ]

    llm = ChatGroq(temperature=0, model_name="gemma2-9b-it", groq_api_key=GROQ_API_KEY)
    response = llm.invoke(msg)
    return {"messages": [response]}
```

```python
def generate(state):
    """
    Generate answer

    Args:
        state (messages): The current state

    Returns:
        dict: The updated state with the generated answer appended
    """
    print("---GENERATE---")
    messages = state["messages"]
    question = messages[0].content
    last_message = messages[-1]
    # The last message holds the context (retrieved chunks or web results),
    # already joined into a single string
    docs = last_message.content

    # Prompt
    prompt = hub.pull("rlm/rag-prompt")

    # LLM
    llm = ChatGroq(temperature=0, model_name="gemma2-9b-it", groq_api_key=GROQ_API_KEY)

    # Chain
    rag_chain = prompt | llm | StrOutputParser()

    # Run
    response = rag_chain.invoke({"context": docs, "question": question})
    return {"messages": [response]}
```

```python
print("*" * 20 + "Prompt[rlm/rag-prompt]" + "*" * 20)
hub.pull("rlm/rag-prompt").pretty_print()  # Show what the prompt looks like
```
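`generate` pipes two inputs, `context` and `question`, into the hub prompt. The sketch below shows that filling step in plain Python. `RAG_TEMPLATE` is a hypothetical template written for illustration, not the text of `rlm/rag-prompt`; print the hub prompt to see the real wording. `format_docs` and `build_prompt` are likewise illustration-only helpers.

```python
# Hypothetical template in the spirit of a RAG prompt; NOT the exact text of rlm/rag-prompt.
RAG_TEMPLATE = (
    "Use the following retrieved context to answer the question. "
    "If you don't know the answer, say that you don't know.\n"
    "Question: {question}\n"
    "Context: {context}\n"
    "Answer:"
)


def format_docs(chunks: list) -> str:
    """Join retrieved chunks with blank lines between them."""
    return "\n\n".join(chunks)


def build_prompt(question: str, chunks: list) -> str:
    """Fill the template's {question} and {context} slots."""
    return RAG_TEMPLATE.format(question=question, context=format_docs(chunks))


print(build_prompt("What is Graph RAG?", ["Graph RAG uses a knowledge graph.", "Vector RAG uses embeddings."]))
```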

Add the nodes and edges:

```python
from langgraph.graph import END, StateGraph, START
from langgraph.prebuilt import ToolNode

# Define a new graph
workflow = StateGraph(AgentState)

# Define the nodes we will cycle between
workflow.add_node("web_search", web_search_agent)  # web search
workflow.add_node("agent", retrieve_agent)  # agent
retrieve = ToolNode([retriever_tool])
workflow.add_node("retrieve", retrieve)  # runs retrieve_blog_posts
workflow.add_node(
    "generate", generate
)  # Generating a response after we know the documents are relevant

# Call agent node to decide to retrieve or not
workflow.add_edge(START, "agent")
workflow.add_edge("web_search", "generate")

# Decide whether to retrieve
workflow.add_conditional_edges(
    "agent",
    # Assess agent decision: "tools" if the model made a tool call, otherwise END
    tools_condition,
    {
        # Translate the condition outputs to nodes in our graph
        "tools": "retrieve",
        END: END,
    },
)

# Edges taken after the `retrieve` node is called:
# grade_documents returns "generate" or "web_search", which are node names
workflow.add_conditional_edges(
    "retrieve",
    grade_documents,
)
workflow.add_edge("generate", END)

# Compile
graph = workflow.compile()
```
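The first conditional edge relies on LangGraph's prebuilt `tools_condition`. It returns `"tools"` when the last AI message contains tool calls and `END` otherwise, and the dict passed to `add_conditional_edges` maps those values to node names. Here is a hypothetical, dependency-free sketch of that logic. `AIMessageSketch` and `tools_condition_sketch` are illustration-only names, and the real function also accepts the whole graph state rather than just a message list.

```python
from dataclasses import dataclass, field

END = "__end__"  # the value of LangGraph's END constant


@dataclass
class AIMessageSketch:
    """Hypothetical minimal AI message: text plus any tool calls the model requested."""
    content: str = ""
    tool_calls: list = field(default_factory=list)


def tools_condition_sketch(messages: list) -> str:
    """Route to 'tools' when the last AI message requested a tool, otherwise end."""
    last = messages[-1]
    return "tools" if getattr(last, "tool_calls", None) else END


# Same mapping as the add_conditional_edges call on the "agent" node
path_map = {"tools": "retrieve", END: END}

wants_tool = AIMessageSketch(tool_calls=[{"name": "retrieve_blog_posts", "args": {"query": "RAG VS Graph RAG"}}])
direct_answer = AIMessageSketch(content="Hello! How can I help?")
print(path_map[tools_condition_sketch([wants_tool])])     # retrieve
print(path_map[tools_condition_sketch([direct_answer])])  # __end__
```

This is why a greeting such as "hi" can end the run immediately, while any question for which the model requests `retrieve_blog_posts` goes through retrieval and grading.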

```python
import pprint

inputs = {
    "messages": [
        ("user", "how do you play cricket?"),
    ]
}

# stream() yields one {node_name: state_update} dict per executed node
for output in graph.stream(inputs):
    for key, value in output.items():
        pprint.pprint(f"Output from node '{key}':")
        pprint.pprint("---")
        pprint.pprint(value, indent=2, width=80, depth=None)
    pprint.pprint("\n---\n")
```

The output for the question "how do you play cricket?" is as follows:

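This question is unrelated to the articles. Note that the agent still calls the retriever tool first. The grader then judges the retrieved chunks irrelevant, so the graph routes to the web search node and generates the answer from the web results.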

---CALL AGENT---

"Output from node 'agent':"

'---'

{ 'messages': [ AIMessage(content='', additional_kwargs={'tool_calls': [{'id': 'call_a0e8', 'function': {'arguments': '{"query":"how to play cricket"}', 'name': 'retrieve_blog_posts'}, 'type': 'function'}]}, response_metadata={'token_usage': {'completion_tokens': 87, 'prompt_tokens': 978, 'total_tokens': 1065, 'completion_time': 0.158181818, 'prompt_time': 0.031489045, 'queue_time': 0.0030664820000000023, 'total_time': 0.189670863}, 'model_name': 'gemma2-9b-it', 'system_fingerprint': 'fp_10c08bf97d', 'finish_reason': 'tool_calls', 'logprobs': None}, id='run-93d87331-d092-43dc-875e-04095a50d423-0', tool_calls=[{'name': 'retrieve_blog_posts', 'args': {'query': 'how to play cricket'}, 'id': 'call_a0e8', 'type': 'tool_call'}], usage_metadata={'input_tokens': 978, 'output_tokens': 87, 'total_tokens': 1065})]}

'\n---\n'

---CHECK RELEVANCE---

---DECISION: DOCS NOT RELEVANT---

no

"Output from node 'retrieve':"

'---'

{ 'messages': [ ToolMessage(content='by Sreedevi Gogusetty9 FollowersFollowHelpStatusAboutCareersPressBlogPrivacyTermsText to speechTeams\n\nby Sreedevi Gogusetty9 FollowersFollowHelpStatusAboutCareersPressBlogPrivacyTermsText to speechTeams\n\ngive suggestions or comments for better teaching..RagGraphragLlmLlama3 1----FollowWritten by Sreedevi Gogusetty9 FollowersFollowHelpStatusAboutCareersPressBlogPrivacyTermsText to speechTeams\n\ngive suggestions or comments for better teaching..RagGraphragLlmLlama3 1----FollowWritten by Sreedevi Gogusetty9 FollowersFollowHelpStatusAboutCareersPressBlogPrivacyTermsText to speechTeams', name='retrieve_blog_posts', id='3b812678-1118-442f-9fd4-dbc89585c449', tool_call_id='call_a0e8')]}

'\n---\n'

---WEB SEARCH---

[HumanMessage(content='how do you play cricket?', additional_kwargs={}, response_metadata={}, id='821c3254-52a0-47de-b973-2c393845b916'), AIMessage(content='', additional_kwargs={'tool_calls': [{'id': 'call_a0e8', 'function': {'arguments': '{"query":"how to play cricket"}', 'name': 'retrieve_blog_posts'}, 'type': 'function'}]}, response_metadata={'token_usage': {'completion_tokens': 87, 'prompt_tokens': 978, 'total_tokens': 1065, 'completion_time': 0.158181818, 'prompt_time': 0.031489045, 'queue_time': 0.0030664820000000023, 'total_time': 0.189670863}, 'model_name': 'gemma2-9b-it', 'system_fingerprint': 'fp_10c08bf97d', 'finish_reason': 'tool_calls', 'logprobs': None}, id='run-93d87331-d092-43dc-875e-04095a50d423-0', tool_calls=[{'name': 'retrieve_blog_posts', 'args': {'query': 'how to play cricket'}, 'id': 'call_a0e8', 'type': 'tool_call'}], usage_metadata={'input_tokens': 978, 'output_tokens': 87, 'total_tokens': 1065}), ToolMessage(content='by Sreedevi Gogusetty9 FollowersFollowHelpStatusAboutCareersPressBlogPrivacyTermsText to speechTeams\n\nby Sreedevi Gogusetty9 FollowersFollowHelpStatusAboutCareersPressBlogPrivacyTermsText to speechTeams\n\ngive suggestions or comments for better teaching..RagGraphragLlmLlama3 1----FollowWritten by Sreedevi Gogusetty9 FollowersFollowHelpStatusAboutCareersPressBlogPrivacyTermsText to speechTeams\n\ngive suggestions or comments for better teaching..RagGraphragLlmLlama3 1----FollowWritten by Sreedevi Gogusetty9 FollowersFollowHelpStatusAboutCareersPressBlogPrivacyTermsText to speechTeams', name='retrieve_blog_posts', id='3b812678-1118-442f-9fd4-dbc89585c449', tool_call_id='call_a0e8')]

"Output from node 'web_search':"

'---'

{ 'messages': [ 'wikiHow is a “wiki,” similar to Wikipedia, which means that '

'many of our articles are co-written by multiple authors.\n'

'The bowling team has dedicated bowlers, while the rest of the '

'players spread across the ground try to prevent the batters '

'from scoring runs as well as to catch the ball in order to '

'get the batters out. Since cricket is played with pairs of '

'batsmen, when 10 players from the batting team are dismissed, '

'their innings concludes, and the sum of the runs they scored '

'sets the target score for the bowling team. Run-Out: A '

'run-out happens when the fielding team throws the ball at the '

'wicket while the batter is trying to score a run and before '

'they can reach the opposite side of the pitch.\n'

'Runs can also be scored when the bowler bowls a wide delivery '

'(a ball that is too far away from the stumps), a no ball '

'(where the bowler oversteps the front line on the wicket), a '

'bye (where no one touches the ball but the two batsmen run '

'anyway) and a leg Pitch sizes vary greatly in cricket but are '

'usually played on a circular grass field with a circumference '

'of around 200m. Around the edge of the field is what’s known '

'as the boundary edge and is basically the line between being '

'in play and out of play.\n'

' The bowler will bowl the cricket ball from one end whilst '

'the batsmen will try and hit the ball from the other end.\n'

' Scoring\n'

'A run occurs when a batsmen hits the ball with their bat and '

'the two batsmen at the wicket mange to successfully run to '

'the other end. The batting team will try and score as many '

'runs as possible in the allotted time whilst the bowling team '

'will try and contain them by fielding the ball.']}

'\n---\n'

---GENERATE---

"Output from node 'generate':"

'---'

{ 'messages': [ 'Cricket is played with two teams of 11 players each. The '

'batting team tries to score runs by hitting a ball bowled by '

'the opposing team, while the fielding team tries to prevent '

'runs and get the batsmen out. Runs are scored by running '

'between the wickets or by hitting the ball to the boundary. \n'

'\n'

'\n']}

'\n---\n'

```python
import pprint

inputs = {
    "messages": [
        ("user", "What is difference between RAG VS GRAPH RAG"),
    ]
}

for output in graph.stream(inputs):
    for key, value in output.items():
        pprint.pprint(f"Output from node '{key}':")
        pprint.pprint("---")
        pprint.pprint(value, indent=2, width=80, depth=None)
    pprint.pprint("\n---\n")
```

The output for the question "What is difference between RAG VS GRAPH RAG?" is as follows:

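This question is related to the articles. The agent calls the retriever tool, the grader judges the retrieved chunks relevant, and the graph goes straight to the generate node.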

---CALL AGENT---

"Output from node 'agent':"

'---'

{ 'messages': [ AIMessage(content='', additional_kwargs={'tool_calls': [{'id': 'call_zm54', 'function': {'arguments': '{"query":"RAG VS Graph RAG"}', 'name': 'retrieve_blog_posts'}, 'type': 'function'}]}, response_metadata={'token_usage': {'completion_tokens': 85, 'prompt_tokens': 981, 'total_tokens': 1066, 'completion_time': 0.154545455, 'prompt_time': 0.031727456, 'queue_time': 0.0029311909999999997, 'total_time': 0.186272911}, 'model_name': 'gemma2-9b-it', 'system_fingerprint': 'fp_10c08bf97d', 'finish_reason': 'tool_calls', 'logprobs': None}, id='run-b4ea1e95-9dea-47be-98c6-b658ffa1dac7-0', tool_calls=[{'name': 'retrieve_blog_posts', 'args': {'query': 'RAG VS Graph RAG'}, 'id': 'call_zm54', 'type': 'tool_call'}], usage_metadata={'input_tokens': 981, 'output_tokens': 85, 'total_tokens': 1066})]}

'\n---\n'

---CHECK RELEVANCE---

---DECISION: DOCS RELEVANT---

"Output from node 'retrieve':"

'---'

{ 'messages': [ ToolMessage(content='their relationships. When processing a query, Graph RAG explores the knowledge graph, identifying entities and connections relevant to the user’s intent. This approach provides a deeper understanding of the context and relationships within the information.Thematic relevance vs. Context and Relationships:Thematic relevance focuses on how similar topics are. Imagine two documents, one about the French Revolution and another about the American Revolution. Both discuss revolutions, making them thematically relevant to a query about “revolutions.”Context and Relationships delve deeper. Here, we consider not just the topics but also the connections between them. The knowledge graph in Graph RAG might show that the American Revolution was inspired by the French Revolution, providing a richer understanding of the context.Information vs. Entities:Information is a broader term encompassing any kind of knowledge retrieved. In Vector RAG, retrieved information could be entire documents or summaries.Entities are specific objects or concepts within the information. In Graph RAG, the retrieved information focuses on identifying entities (like the French Revolution) and the relationships between them (e.g., historical event with causes).Choosing the Right RAG ApproachThe choice between Vector RAG and Graph RAG depends on your specific\n\ntheir relationships. When processing a query, Graph RAG explores the knowledge graph, identifying entities and connections relevant to the user’s intent. This approach provides a deeper understanding of the context and relationships within the information.Thematic relevance vs. Context and Relationships:Thematic relevance focuses on how similar topics are. Imagine two documents, one about the French Revolution and another about the American Revolution. Both discuss revolutions, making them thematically relevant to a query about “revolutions.”Context and Relationships delve deeper. 
Here, we consider not just the topics but also the connections between them. The knowledge graph in Graph RAG might show that the American Revolution was inspired by the French Revolution, providing a richer understanding of the context.Information vs. Entities:Information is a broader term encompassing any kind of knowledge retrieved. In Vector RAG, retrieved information could be entire documents or summaries.Entities are specific objects or concepts within the information. In Graph RAG, the retrieved information focuses on identifying entities (like the French Revolution) and the relationships between them (e.g., historical event with causes).Choosing the Right RAG ApproachThe choice between Vector RAG and Graph RAG depends on your specific\n\ntheir relationships. When processing a query, Graph RAG explores the knowledge graph, identifying entities and connections relevant to the user’s intent. This approach provides a deeper understanding of the context and relationships within the information.Thematic relevance vs. Context and Relationships:Thematic relevance focuses on how similar topics are. Imagine two documents, one about the French Revolution and another about the American Revolution. Both discuss revolutions, making them thematically relevant to a query about “revolutions.”Context and Relationships delve deeper. Here, we consider not just the topics but also the connections between them. The knowledge graph in Graph RAG might show that the American Revolution was inspired by the French Revolution, providing a richer understanding of the context.Information vs. Entities:Information is a broader term encompassing any kind of knowledge retrieved. In Vector RAG, retrieved information could be entire documents or summaries.Entities are specific objects or concepts within the information. 
In Graph RAG, the retrieved information focuses on identifying entities (like the French Revolution) and the relationships between them (e.g., historical event with causes).Choosing the Right RAG ApproachThe choice between Vector RAG and Graph RAG depends on your specific\n\nRAG vs Vector RAG and delve deeper into each:Rag Vs Graph RagVector RAGImagine a vast library where books are organized based on their thematic connections. Vector RAG employs vector databases. These databases represent information (like documents or entities) as numerical vectors in a high-dimensional space. Documents with similar meanings or topics will have vectors closer together in this space. During retrieval, Vector RAG searches the database for documents with vectors closest to the user query, essentially finding the most thematically relevant information.Example:User Query: “What are the causes of the French Revolution?”Retrieved Information (Vector Search): Documents discussing historical events leading up to the French Revolution, documents on social and economic conditions in 18th century France.Generated Response: The LLM, using the retrieved documents, might explain the various political, social, and economic factors that contributed to the French Revolution.Graph RAGThink of a detailed map where locations and their connections are clearly defined. Graph RAG utilizes knowledge graphs. These are structured databases that represent entities (like people, places, or events) and the relationships between them. During retrieval, Graph RAG traverses the knowledge graph based on the user query, identifying entities and', name='retrieve_blog_posts', id='6490648b-73ef-41a8-ad7f-55a9ab86730d', tool_call_id='call_zm54')]}

'\n---\n'

---GENERATE---

"Output from node 'generate':"

'---'

{ 'messages': [ 'Vector RAG focuses on finding information thematically '

'relevant to a query, while Graph RAG emphasizes understanding '

'the relationships between entities in a knowledge graph. '

'Vector RAG retrieves documents based on similarity, while '

'Graph RAG identifies entities and their connections to '

'provide a deeper contextual understanding. The best approach '

'depends on whether your application needs thematic relevance '

'or a richer understanding of relationships. \n']}

'\n---\n'