[Guide] Using LSTM to Reveal Feature Importance in Time Series Data (Part 1)

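Feature importance is widely discussed in machine learning, but the discussion usually centers on methods designed for tabular data, such as SHAP, feature selection, or permutation importance. These methods may not transfer directly to time series data. In this article, I explore a simple way to identify important features in time series data using the weights of an LSTM.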

How an LSTM Assigns Weights to Features

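An LSTM uses three gates (the input gate, the forget gate, and the output gate) to control how much feature information is retained or discarded over time. They work as follows: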

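1. Input gate

Determines how much the current input (the features) contributes to the cell state. A larger weight here suggests that the feature matters for the current prediction.

2. Forget gate

Controls how much information from the previous time step is "forgotten". Features with small weights in the forget gate are likely to have little influence on future predictions.

3. Output gate

Determines which information flows into the output at each time step.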
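For reference, the three gates correspond to the standard LSTM update equations. The notation below follows the PyTorch documentation and is not taken from the original walkthrough: $x_t$ is the feature vector at time step $t$, $h_{t-1}$ the previous hidden state, $c_t$ the cell state, $\sigma$ the sigmoid function, and $\odot$ element-wise multiplication.

```latex
\begin{aligned}
i_t &= \sigma(W_{ii} x_t + b_{ii} + W_{hi} h_{t-1} + b_{hi}) && \text{(input gate)} \\
f_t &= \sigma(W_{if} x_t + b_{if} + W_{hf} h_{t-1} + b_{hf}) && \text{(forget gate)} \\
g_t &= \tanh(W_{ig} x_t + b_{ig} + W_{hg} h_{t-1} + b_{hg}) && \text{(candidate cell values)} \\
o_t &= \sigma(W_{io} x_t + b_{io} + W_{ho} h_{t-1} + b_{ho}) && \text{(output gate)} \\
c_t &= f_t \odot c_{t-1} + i_t \odot g_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
```

In PyTorch, the input-to-hidden matrices $W_{ii}$, $W_{if}$, $W_{ig}$, and $W_{io}$ are stacked row-wise, in that order, into a single parameter named `weight_ih_l0` with shape `(4 * hidden_size, input_size)`. Each column of that matrix therefore collects every weight attached to one input feature.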

Step-by-Step Implementation

Below is the complete Python code with detailed explanations:

Import Libraries and Load Data

import pandas as pd
import numpy as np
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset
from sklearn.preprocessing import LabelEncoder, MinMaxScaler
import matplotlib.pyplot as plt
from tqdm import tqdm

Preprocessing

# Load Data
df = pd.read_csv('/content/synthetic_financial_data.csv')
df['transaction_time'] = pd.to_datetime(df['transaction_time'])
df = df.drop(['transaction_id'], axis=1)

# Encode Categorical Variables
categorical_var = ['customer_id', 'merchant_id', 'card_type', 'location', 'purchase_category', 'transaction_description']
label_encoder = LabelEncoder()
for col in categorical_var:
    df[col] = label_encoder.fit_transform(df[col])

# Scale Numerical Features
scaler = MinMaxScaler()
cols_to_scale = ['customer_id', 'merchant_id', 'amount', 'card_type', 'location',
                 'purchase_category', 'customer_age', 'transaction_description']
df[cols_to_scale] = scaler.fit_transform(df[cols_to_scale])

# Sort by Time
df = df.sort_values(by='transaction_time')

# Prepare Features and Target
X = df.drop(['transaction_time', 'is_fraudulent'], axis=1).values
y = df['is_fraudulent'].values

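This step converts the categorical columns to integers and scales the numerical features so that they share a consistent range. Sorting by transaction time ensures that the rows are in chronological order.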
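To make the encoding and scaling concrete, here is a minimal sketch on a toy column. The card names are invented for illustration and do not come from the dataset. It shows that `LabelEncoder` assigns integer codes in sorted (alphabetical) order, so after min-max scaling the categories sit at evenly spaced positions that carry no real meaning. That is worth keeping in mind later when reading the weights attached to encoded categorical features.

```python
import numpy as np
from sklearn.preprocessing import LabelEncoder, MinMaxScaler

# Invented example values, not taken from the dataset
card_type = np.array(["visa", "amex", "mastercard", "visa"])

# LabelEncoder sorts the classes: amex -> 0, mastercard -> 1, visa -> 2
codes = LabelEncoder().fit_transform(card_type)
print(codes.tolist())   # [2, 0, 1, 2]

# MinMaxScaler maps the smallest code to 0.0 and the largest to 1.0
scaled = MinMaxScaler().fit_transform(codes.reshape(-1, 1).astype(float)).ravel()
print(scaled.tolist())  # [1.0, 0.0, 0.5, 1.0]
```

The scaled value 0.5 for "mastercard" is purely a by-product of alphabetical ordering.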

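Create Time Series Sequences

An LSTM expects its input as sequences. We prepare sequences of length 10 (choose the sequence length that suits your analysis).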

# Reshape Data into Sequences
sequence_length = 10
X_sequences, y_sequences = [], []
for i in range(len(X) - sequence_length + 1):
    X_sequences.append(X[i:i + sequence_length])
    y_sequences.append(y[i + sequence_length - 1])
X_sequences = np.array(X_sequences)
y_sequences = np.array(y_sequences)

# Convert to PyTorch Tensors
X_tensor = torch.tensor(X_sequences, dtype=torch.float32)
y_tensor = torch.tensor(y_sequences, dtype=torch.long)
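The windowing logic is easier to check on a small array. The sketch below applies the same slicing to toy data (12 time steps, 3 features, a window of 4). The toy values and the names `X_toy`, `y_toy`, `X_seq`, and `y_seq` are illustrative assumptions. Each window is labeled with the target of its last row, as in the code above.

```python
import numpy as np

# Toy data: 12 time steps, 3 features; row t is [t, t, t] so windows are easy to read
X_toy = np.repeat(np.arange(12).reshape(-1, 1), 3, axis=1).astype(np.float32)
y_toy = np.arange(12) % 2  # alternating 0/1 labels

sequence_length = 4
n_windows = len(X_toy) - sequence_length + 1

# Window i covers rows i .. i + sequence_length - 1 and takes the label of its last row
X_seq = np.array([X_toy[i:i + sequence_length] for i in range(n_windows)])
y_seq = np.array([y_toy[i + sequence_length - 1] for i in range(n_windows)])

print(X_seq.shape)  # (9, 4, 3) -> (windows, time steps, features)
print(y_seq.shape)  # (9,)
```

With N rows and a window of length L, this produces N - L + 1 overlapping windows, so consecutive samples share most of their rows.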

Define the LSTM Model

# Define LSTM Model
class LSTMModel(nn.Module):
    def __init__(self, input_size, hidden_size, num_classes):
        super(LSTMModel, self).__init__()
        self.hidden_size = hidden_size
        self.lstm = nn.LSTM(input_size, hidden_size, batch_first=True)
        self.fc = nn.Linear(hidden_size, num_classes)

    def forward(self, x):
        h_0 = torch.zeros(1, x.size(0), self.hidden_size).to(x.device)  # Hidden state
        c_0 = torch.zeros(1, x.size(0), self.hidden_size).to(x.device)  # Cell state
        out, _ = self.lstm(x, (h_0, c_0))
        out = self.fc(out[:, -1, :])  # Take the last output
        return out

The model consists of:

- An LSTM layer that processes the sequences.

- A fully connected layer that maps the LSTM output to class predictions.
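A quick shape check can clarify what `out[:, -1, :]` selects. The sketch below runs a standalone `nn.LSTM` on random input. The sizes are arbitrary illustrative values, not the dataset's. With `batch_first=True`, the output has shape `(batch, sequence, hidden)`, and for a single-layer, unidirectional LSTM the last time step of the output matches the final hidden state.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Illustrative sizes only
batch, seq_len, n_features, hidden = 8, 10, 8, 50
lstm = nn.LSTM(n_features, hidden, batch_first=True)
fc = nn.Linear(hidden, 2)

x = torch.randn(batch, seq_len, n_features)
out, (h_n, c_n) = lstm(x)  # initial states default to zeros when omitted

print(out.shape)  # torch.Size([8, 10, 50]) -> one hidden vector per time step
print(h_n.shape)  # torch.Size([1, 8, 50])  -> final hidden state per sequence

last_step = out[:, -1, :]                         # hidden vector at the last time step
matches_final = torch.allclose(last_step, h_n[0])  # same values as the final hidden state
logits = fc(last_step)
print(matches_final, logits.shape)
```

Because omitted initial states default to zeros, the explicit `h_0` and `c_0` in the model above produce the same result as calling `self.lstm(x)` directly.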

Model Training

# Model Parameters
input_size = X_tensor.shape[2]
hidden_size = 50
num_classes = len(np.unique(y))
num_epochs = 100
batch_size = 64
learning_rate = 0.001

# DataLoader
dataset = TensorDataset(X_tensor, y_tensor)
dataloader = DataLoader(dataset, batch_size=batch_size, shuffle=True)

# Initialize Model, Loss, Optimizer
model = LSTMModel(input_size, hidden_size, num_classes)
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate)

# Track weights over epochs
feature_weights_log = {col: [] for col in df.drop(['transaction_time', 'is_fraudulent'], axis=1).columns}

# Training Loop
for epoch in range(num_epochs):
    model.train()
    epoch_loss = 0
    for X_batch, y_batch in dataloader:
        optimizer.zero_grad()
        outputs = model(X_batch)
        loss = criterion(outputs, y_batch)
        loss.backward()
        optimizer.step()
        epoch_loss += loss.item()

    # Log weights every 5 epochs
    if (epoch + 1) % 5 == 0:
        with torch.no_grad():
            # detach() is needed: .numpy() raises an error on tensors that require grad
            # Input weights, shape (4 * hidden_size, input_size)
            lstm_weights = model.lstm.weight_ih_l0.detach().cpu().numpy()
            for i, col in enumerate(feature_weights_log.keys()):
                feature_weights_log[col].append(lstm_weights[:, i].mean())  # Average weight per feature

    print(f"Epoch {epoch + 1}/{num_epochs}, Loss: {epoch_loss / len(dataloader):.4f}")

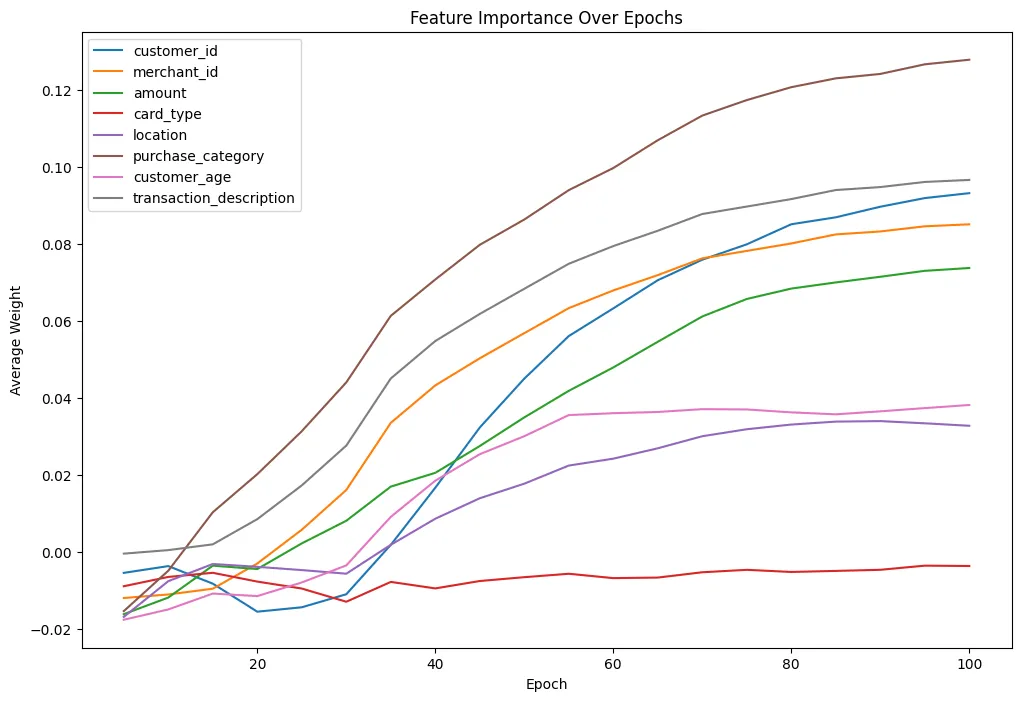
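The training loop averages the signed input weights of each feature across all four gates, so positive and negative weights can cancel out. One possible variant, shown here as an alternative and not as the method used in this article, is to split `weight_ih_l0` by gate and take the mean absolute weight per feature. The feature names and sizes below are hypothetical, and the LSTM is untrained, so the numbers only illustrate the mechanics.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

feature_names = ["f0", "f1", "f2"]  # hypothetical feature names
hidden_size = 4                      # small illustrative size
lstm = nn.LSTM(len(feature_names), hidden_size, batch_first=True)

# weight_ih_l0 has shape (4 * hidden_size, input_size)
W = lstm.weight_ih_l0.detach()

# PyTorch stacks the gate blocks row-wise in the order: input, forget, cell, output
W_i, W_f, W_g, W_o = W.chunk(4, dim=0)

# Mean absolute weight per feature (column), computed separately for each gate
per_gate = {
    "input": W_i.abs().mean(dim=0),
    "forget": W_f.abs().mean(dim=0),
    "cell": W_g.abs().mean(dim=0),
    "output": W_o.abs().mean(dim=0),
}

for gate, scores in per_gate.items():
    print(gate, dict(zip(feature_names, [round(s, 4) for s in scores.tolist()])))
```

Using absolute values prevents cancellation, and keeping the gates separate makes it possible to see whether a feature mainly drives the input gate, the forget gate, or the output gate.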

Plot Feature Importance

# Plot Feature Importance
plt.figure(figsize=(12, 8))
for feature, weights in feature_weights_log.items():
    plt.plot(range(5, num_epochs + 1, 5), weights, label=feature)
plt.xlabel('Epoch')
plt.ylabel('Average Weight')
plt.title('Feature Importance Over Epochs')
plt.legend()
plt.show()

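This plot shows how the feature weights change during training. Each curve is the mean of that feature's input-to-hidden weights across all gates and hidden units. Features whose weights stay consistently high are likely to be more important.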
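Alongside the plot, it can help to turn the log into a simple ranking. The sketch below sorts features by the absolute value of their last logged weight. The helper `rank_by_final_weight` and the numbers in `toy_log` are invented for illustration. In practice the same function could be applied to `feature_weights_log`.

```python
# Hypothetical log in the same format as feature_weights_log:
# one list of logged mean weights per feature (values invented for illustration)
toy_log = {
    "amount": [0.010, 0.030, 0.050],
    "customer_age": [0.020, -0.010, -0.040],
    "location": [0.000, 0.005, 0.002],
}

def rank_by_final_weight(weights_log):
    """Sort features by the absolute value of their last logged weight, largest first."""
    final = {name: values[-1] for name, values in weights_log.items()}
    return sorted(final.items(), key=lambda item: abs(item[1]), reverse=True)

ranking = rank_by_final_weight(toy_log)
for name, weight in ranking:
    print(f"{name:>14}: {weight:+.3f}")
```

Sorting by absolute value treats a strongly negative average weight as just as influential as a strongly positive one, which a plain sort by value would not.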

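Where This Approach Can Fail

1. The dynamic nature of LSTMs

An LSTM adjusts its weights to sequence patterns, not just to the contribution of individual features. A feature that looks unimportant on its own may be critical at specific time steps or within specific sequences.

2. The complexity of weight attribution

The method assumes that a larger weight means greater importance, but LSTM weights also depend on gradient propagation, interactions between features, and the vanishing gradient problem.

3. Overfitting or poor model performance

If the model generalizes poorly (for example, because of overfitting or poor data quality), the tracked weights may not reflect true feature importance.

4. Bias toward certain features

The scale and encoding of a feature influence the magnitude of its learned weights, so a feature may receive a larger weight even if it is not actually important.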
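The dependence of weight magnitude on feature scale can be seen even in the simplest possible model. The sketch below uses a one-feature linear fit, not an LSTM, with invented data. Rescaling the feature changes the fitted weight by the inverse factor, although the feature's relationship to the target is unchanged.

```python
def fit_weight(xs, ys):
    """Least-squares weight w for the one-feature model y = w * x (no intercept)."""
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)

xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x for x in xs]  # the target is exactly twice the feature

w_original = fit_weight(xs, ys)                    # feature in its original units
w_rescaled = fit_weight([x / 10 for x in xs], ys)  # same feature, divided by 10

print(w_original)  # 2.0
print(w_rescaled)  # approximately 20: ten times larger, same predictive value
```

Min-max scaling puts all features on a common range, which reduces this effect but does not remove it, because features can still differ in how their values are distributed within that range.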

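Key Takeaways

- LSTM weights can offer insight into feature importance in time series data.

- The approach is basic, but it provides a starting point for exploring feature importance.

- In later parts, we will look at more robust methods and validate the feature importance.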