[Guide] Revealing Feature Importance in Time-Series Data with LSTM (Part 2)

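In this part, we look at how permutation importance can be applied in practice to assess feature importance in an LSTM model. We break the process into steps, explaining the reasoning behind each one and how permuting a feature affects model performance. The guide is written for beginners and aims to explain the "why" behind every step.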

What is permutation importance?

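In short, permutation importance measures how much each feature contributes to the model's accuracy. We randomly shuffle (permute) the values of one feature and observe how much the model's accuracy drops. The larger the drop, the more the model relies on that feature.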
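
The same idea can be written as a formula. The notation is introduced here for illustration only: $f$ is the trained model, $X$ the inputs, $y$ the labels, and $X^{(j)}_{\pi}$ a copy of $X$ in which feature $j$ has been shuffled across samples.

```latex
I_j = \mathrm{Acc}\bigl(f(X),\, y\bigr) - \mathrm{Acc}\bigl(f(X^{(j)}_{\pi}),\, y\bigr)
```

A value of $I_j$ near zero means that shuffling feature $j$ barely changes accuracy; a large positive value means the model depends on it.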

The thinking behind the code

Let's walk through how the method works in plain terms.

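1. Train the LSTM model:

First, we train an LSTM model on sequence data. LSTMs are good at capturing dependencies within a sequence, which suits tasks such as fraud detection in financial transactions.

2. Evaluate baseline accuracy:

After training, we compute the model's accuracy on the dataset with no features altered. This baseline serves as the reference point.

3. Loop over the features:

For each feature in the input data:

- Permute the feature: randomly shuffle that feature's values across all data points. Shuffling breaks the relationship between the feature and the target variable, which effectively "removes" the feature's contribution.

- Recompute accuracy: evaluate the model on the permuted dataset.

- Compute importance: subtract the new accuracy (usually lower) from the baseline accuracy. A large drop indicates that the model depends heavily on that feature.

4. Rank the features by importance:

After looping over all features, sort them by their computed importance.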

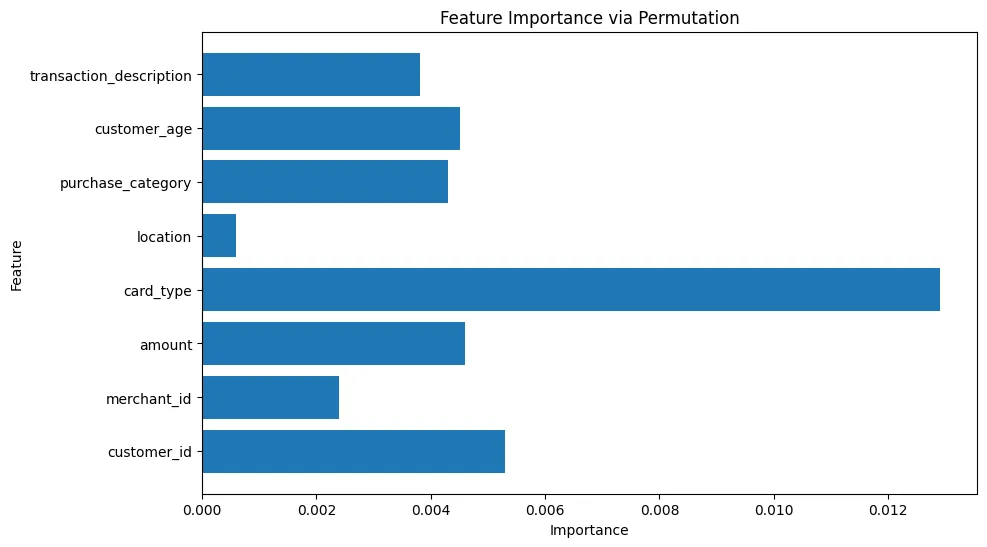

5. Visualize the results:

Finally, plot the feature importance values to see which features contribute most to the model's accuracy.
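
Before turning to the LSTM, the five steps can be sketched on a toy problem. This is a minimal illustration, not the guide's code: `toy_predict` is a hypothetical stand-in for a trained model, and the data is random, with the label depending only on feature 0.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data: 3 features, but only feature 0 determines the label
X = rng.normal(size=(500, 3))
y = (X[:, 0] > 0).astype(int)

def toy_predict(X):
    """Hypothetical stand-in for a trained model: thresholds feature 0."""
    return (X[:, 0] > 0).astype(int)

def accuracy(y_true, y_pred):
    return float(np.mean(y_true == y_pred))

# Step 2: baseline accuracy on unmodified data
baseline = accuracy(y, toy_predict(X))

# Step 3: shuffle one feature at a time and measure the drop
importances = []
for j in range(X.shape[1]):
    X_perm = X.copy()
    X_perm[:, j] = rng.permutation(X_perm[:, j])
    importances.append(baseline - accuracy(y, toy_predict(X_perm)))

# Step 4: rank feature indices from most to least important
ranking = np.argsort(importances)[::-1]
print(baseline, importances, ranking)
```

Shuffling features 1 and 2 leaves the predictions unchanged, so their importance is exactly zero, while shuffling feature 0 pushes accuracy toward chance level.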

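Code walkthrough

The key steps in the code are broken down below.

Importing libraries and loading data

We start by importing the required libraries and preparing the dataset: categorical variables are encoded, numerical variables are scaled, and the rows are sorted by transaction time.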

    import pandas as pd
    import numpy as np
    import torch
    import torch.nn as nn
    from torch.utils.data import DataLoader, TensorDataset
    from sklearn.preprocessing import LabelEncoder, MinMaxScaler
    from sklearn.metrics import accuracy_score
    import matplotlib.pyplot as plt

    # Load the dataset and parse the timestamp column
    df = pd.read_csv('/content/synthetic_financial_data.csv')
    df['transaction_time'] = pd.to_datetime(df['transaction_time'])
    df = df.drop(['transaction_id'], axis=1)

    # Categorical variables
    categorical_var = ['customer_id', 'merchant_id', 'card_type', 'location',
                       'purchase_category', 'transaction_description']

    # Encode categorical variables as integer codes
    # (fit_transform refits the encoder on each column)
    label_encoder = LabelEncoder()
    for col in categorical_var:
        df[col] = label_encoder.fit_transform(df[col])

    # Scale the encoded and numerical features to the range [0, 1]
    scaler = MinMaxScaler()
    cols_to_scale = ['customer_id', 'merchant_id', 'amount', 'card_type', 'location',
                     'purchase_category', 'customer_age', 'transaction_description']
    df[cols_to_scale] = scaler.fit_transform(df[cols_to_scale])

    # Sort by transaction time so that sequences follow chronological order
    df = df.sort_values(by='transaction_time')

    # Features and target
    X = df.drop(['transaction_time', 'is_fraudulent'], axis=1).values
    y = df['is_fraudulent'].values
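
To make the two preprocessing steps concrete, here is a small NumPy-only sketch of what label encoding and min-max scaling do to a column. The column values are made up for illustration, and `np.unique` is used as a stand-in that mirrors how `LabelEncoder` numbers classes in sorted order.

```python
import numpy as np

# Hypothetical toy column of card types
card_type = np.array(["visa", "amex", "visa", "mastercard"])

# Integer codes assigned in sorted order of the distinct values,
# which mirrors how LabelEncoder numbers its classes
classes, codes = np.unique(card_type, return_inverse=True)
# classes -> ['amex', 'mastercard', 'visa'], codes -> [2, 0, 2, 1]

# Min-max scaling: (x - min) / (max - min) maps the column to [0, 1]
amount = np.array([10.0, 250.0, 40.0, 130.0])
scaled = (amount - amount.min()) / (amount.max() - amount.min())
print(classes, codes, scaled)
```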

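Preparing the data for the LSTM

We build sequences of 10 consecutive transactions each; the model predicts whether the last transaction in a sequence is fraudulent.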

    # Reshape data into overlapping sequences (sliding window)
    sequence_length = 10
    X_sequences, y_sequences = [], []
    for i in range(len(X) - sequence_length + 1):
        X_sequences.append(X[i:i + sequence_length])
        y_sequences.append(y[i + sequence_length - 1])  # label of the last transaction
    X_sequences = np.array(X_sequences)
    y_sequences = np.array(y_sequences)

    # Convert to torch tensors
    X_tensor = torch.tensor(X_sequences, dtype=torch.float32)
    y_tensor = torch.tensor(y_sequences, dtype=torch.long)

    # Prepare dataset and dataloader
    dataset = TensorDataset(X_tensor, y_tensor)
    dataloader = DataLoader(dataset, batch_size=64, shuffle=True)
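
The sliding-window logic is easier to follow on a tiny example. The sketch below uses made-up data (6 transactions, 2 features) and a window of 3 instead of 10, so the resulting shapes and labels can be checked by hand.

```python
import numpy as np

# Hypothetical toy data: 6 transactions, 2 features each
X_toy = np.arange(12).reshape(6, 2)
y_toy = np.array([0, 0, 1, 0, 1, 0])
seq_len = 3

windows, labels = [], []
for i in range(len(X_toy) - seq_len + 1):
    windows.append(X_toy[i:i + seq_len])    # transactions i .. i+seq_len-1
    labels.append(y_toy[i + seq_len - 1])   # label of the last transaction

windows = np.array(windows)
labels = np.array(labels)
print(windows.shape)  # (4, 3, 2): samples, time steps, features
print(labels)         # [1 0 1 0]
```

With N rows and a window of length L, the loop yields N - L + 1 sequences, and consecutive sequences overlap in all but one transaction.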

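Defining and training the LSTM model

The model consists of one LSTM layer followed by a fully connected layer for classification. We train it with cross-entropy loss and the Adam optimizer.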

    # LSTM model definition
    class LSTMModel(nn.Module):
        def __init__(self, input_size, hidden_size, num_classes):
            super().__init__()
            self.hidden_size = hidden_size
            self.lstm = nn.LSTM(input_size, hidden_size, batch_first=True)
            self.fc = nn.Linear(hidden_size, num_classes)

        def forward(self, x):
            # Zero initial hidden and cell states: (num_layers, batch, hidden_size)
            h_0 = torch.zeros(1, x.size(0), self.hidden_size).to(x.device)
            c_0 = torch.zeros(1, x.size(0), self.hidden_size).to(x.device)
            out, _ = self.lstm(x, (h_0, c_0))
            out = self.fc(out[:, -1, :])  # use the output of the last time step
            return out
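
To see why the model reads `out[:, -1, :]`, it can help to trace tensor shapes through the two layers. The sizes below (batch of 4, 10 time steps, 8 features) are assumed for illustration and are not taken from the dataset.

```python
import torch
import torch.nn as nn

# Assumed toy sizes: batch of 4 sequences, 10 time steps, 8 features
lstm = nn.LSTM(input_size=8, hidden_size=50, batch_first=True)
fc = nn.Linear(50, 2)

x = torch.randn(4, 10, 8)
out, (h_n, c_n) = lstm(x)     # out holds the hidden state at every time step
last_step = out[:, -1, :]     # hidden state at the final time step
logits = fc(last_step)        # one score per class

print(out.shape, last_step.shape, logits.shape)
```

For a single-layer, unidirectional LSTM, the last time step of `out` is the same tensor as the final hidden state `h_n[0]`, so either could feed the classifier. When no initial states are passed, `nn.LSTM` starts from zeros, which matches the explicit `h_0` and `c_0` in the model.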

The training function runs a standard loop over mini-batches; the model is then initialized and trained for 20 epochs.

    # Model training function
    def train_model(model, dataloader, criterion, optimizer, num_epochs):
        model.train()
        for epoch in range(num_epochs):
            epoch_loss = 0
            for X_batch, y_batch in dataloader:
                optimizer.zero_grad()
                outputs = model(X_batch)
                loss = criterion(outputs, y_batch)
                loss.backward()
                optimizer.step()
                epoch_loss += loss.item()
            print(f"Epoch {epoch+1}/{num_epochs}, Loss: {epoch_loss / len(dataloader):.4f}")
        return model

    # Initialize and train the model
    input_size = X_tensor.shape[2]
    hidden_size = 50
    num_classes = len(np.unique(y))
    model = LSTMModel(input_size, hidden_size, num_classes)
    criterion = nn.CrossEntropyLoss()
    optimizer = torch.optim.Adam(model.parameters(), lr=0.001)
    num_epochs = 20
    model = train_model(model, dataloader, criterion, optimizer, num_epochs)

Computing baseline accuracy

After training, we compute the model's baseline accuracy. Note that the code evaluates on the same dataset the model was trained on.

    # Evaluate the baseline metric
    model.eval()
    with torch.no_grad():
        outputs = model(X_tensor)
        preds = torch.argmax(outputs, dim=1).numpy()
    baseline_accuracy = accuracy_score(y_tensor.numpy(), preds)
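
The step from model outputs to an accuracy number can be checked by hand on a few rows. The logits and labels below are invented for illustration; the class with the largest logit becomes the prediction, and accuracy is the fraction of predictions that match the labels.

```python
import numpy as np

# Hypothetical logits for 4 sequences and 2 classes (not fraud, fraud)
logits = np.array([[2.0, -1.0],
                   [0.3,  0.9],
                   [1.5,  0.2],
                   [-0.4, 0.1]])
y_true = np.array([0, 1, 1, 1])

preds = logits.argmax(axis=1)                # index of the largest logit per row
accuracy = float((preds == y_true).mean())   # 3 of 4 correct
print(preds, accuracy)                       # [0 1 0 1] 0.75
```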

Computing permutation importance

This is the core of the whole process:

    # Permutation importance function
    def permutation_importance(model, X, y, metric_func, baseline_metric):
        feature_importance = []
        X_np = X.numpy()
        model.eval()
        for i in range(X.shape[2]):  # iterate over features
            X_permuted = X_np.copy()
            # Shuffle feature i across sequences (in place, along the first axis)
            np.random.shuffle(X_permuted[:, :, i])
            X_permuted_tensor = torch.tensor(X_permuted, dtype=torch.float32)
            with torch.no_grad():  # no gradients needed; also required before .numpy()
                outputs = model(X_permuted_tensor)
            preds = torch.argmax(outputs, dim=1).numpy()
            permuted_metric = metric_func(y, preds)
            importance = baseline_metric - permuted_metric
            feature_importance.append(importance)
        return feature_importance

    # Compute permutation importance
    feature_importance = permutation_importance(model, X_tensor, y_tensor.numpy(), accuracy_score, baseline_accuracy)

    # Map importance values to feature names
    feature_names = df.drop(['transaction_time', 'is_fraudulent'], axis=1).columns
    importance_dict = dict(zip(feature_names, feature_importance))

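Key steps:

- Loop over the features: iterate over every feature in the dataset.

- Permute the feature: shuffle its values with np.random.shuffle. Because the shuffle acts along the first axis, whole sequences swap their values of that feature while the order of time steps within each sequence is kept. This breaks the relationship between the feature and the target variable.

- Recompute accuracy: run the model on the permuted dataset and compute the new accuracy.

- Measure the drop: subtract the new accuracy from the baseline accuracy. The larger the drop, the more important the feature.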
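
The behavior of `np.random.shuffle` on a slice such as `X_permuted[:, :, i]` is worth checking on a small array. The sketch below uses a made-up tensor of 5 sequences, 3 time steps and 2 features, and confirms that the shuffle modifies the copy in place, leaves the other feature alone, and moves each sequence's values as one unit.

```python
import numpy as np

np.random.seed(0)

# Toy tensor: 5 sequences, 3 time steps, 2 features
X = np.arange(30, dtype=float).reshape(5, 3, 2)
X_perm = X.copy()

# X_perm[:, :, 0] is a view of shape (5, 3); shuffle reorders its rows in place
np.random.shuffle(X_perm[:, :, 0])

# Feature 1 is untouched by the shuffle
other_unchanged = np.array_equal(X_perm[:, :, 1], X[:, :, 1])

# Every shuffled row of feature 0 is an intact row from the original
original_rows = {tuple(r) for r in X[:, :, 0]}
shuffled_rows = {tuple(r) for r in X_perm[:, :, 0]}
print(other_unchanged, original_rows == shuffled_rows)
```

One consequence is that the temporal pattern of a feature inside each window survives the shuffle; only its pairing with the other features and the label is broken.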

Visualizing feature importance

Finally, we take the importance values mapped to feature names and plot them.

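    # Sort ascending so that the most important feature appears at the top of the chart
    sorted_items = sorted(importance_dict.items(), key=lambda kv: kv[1])
    names = [name for name, _ in sorted_items]
    values = [value for _, value in sorted_items]

    # Plot feature importance
    plt.figure(figsize=(10, 6))
    plt.barh(names, values)
    plt.xlabel("Importance")
    plt.ylabel("Feature")
    plt.title("Feature Importance via Permutation")
    plt.show()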

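Why does this method work?

Permutation importance is simple but effective because:

- It directly measures each feature's effect on model performance.

- By shuffling one feature at a time, it isolates that feature's effect and gives a clear picture of its importance.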
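
Each shuffle is random, so a single pass gives a slightly different number on every run. A common refinement, which is not part of the code in this guide, is to repeat the shuffle several times per feature and report the mean and spread of the drop. The sketch below assumes a hypothetical `predict_fn` that maps an array shaped (samples, time steps, features) to class labels; the toy data and the rule inside the lambda are invented for the check.

```python
import numpy as np

def repeated_permutation_importance(predict_fn, X, y, n_repeats=5, seed=0):
    """Mean and std of the accuracy drop per feature over several shuffles.

    predict_fn is a hypothetical callable mapping an array shaped
    (samples, time steps, features) to predicted class labels.
    """
    rng = np.random.default_rng(seed)
    baseline = np.mean(predict_fn(X) == y)
    drops = np.zeros((X.shape[2], n_repeats))
    for j in range(X.shape[2]):
        for r in range(n_repeats):
            X_perm = X.copy()
            # Reorder feature j across samples, keeping each window intact
            X_perm[:, :, j] = X[rng.permutation(len(X)), :, j]
            drops[j, r] = baseline - np.mean(predict_fn(X_perm) == y)
    return drops.mean(axis=1), drops.std(axis=1)

# Toy check: labels depend only on feature 0 at the last time step
rng = np.random.default_rng(1)
X = rng.normal(size=(400, 10, 3))
y = (X[:, -1, 0] > 0).astype(int)
mean_drop, std_drop = repeated_permutation_importance(
    lambda a: (a[:, -1, 0] > 0).astype(int), X, y
)
print(mean_drop, std_drop)
```

To use this with the LSTM, `predict_fn` would wrap the tensor conversion, the forward pass under `torch.no_grad()`, and the `argmax`.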

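Conclusion

Permutation importance is a powerful, model-agnostic technique for understanding feature contributions. By shuffling each feature and observing the drop in accuracy, you can identify which features drive the model's predictions.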