Time Series Analysis: A Comparative Study of Deep Learning Architectures

Published January 22, 2025 by alex

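Deep learning has transformed time series analysis through architectures such as FNNs, LSTMs, CNNs, TCNs, and Transformers. Each has its own strengths: FNNs provide a baseline, LSTMs capture long-term dependencies, CNNs are good at recognizing local patterns, TCNs balance efficiency against how much history they can see, and Transformers excel at modeling complex relationships.

We will use a synthetic dataset that mimics the characteristics of real-world time series (trend, seasonality, and noise), as well as actual electricity load data from ERCOT.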

```python
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
from sklearn.preprocessing import MinMaxScaler
from sklearn.metrics import mean_squared_error
import tensorflow as tf
from tensorflow.keras.layers import MultiHeadAttention, LayerNormalization, Dense, Conv1D, Dropout


# Function to prepare data for time series modeling:
# each sample is a window of n_steps values, the target is the value that follows it
def prepare_data(data, n_steps):
    X, y = [], []
    for i in range(len(data) - n_steps):
        X.append(data[i:i + n_steps])
        y.append(data[i + n_steps])
    return np.array(X), np.array(y)


# Function to load and preprocess data from a CSV file
def load_and_preprocess_data(filepath, n_steps=30):
    df = pd.read_csv(filepath, parse_dates=["date"], index_col="date")
    df.sort_index(inplace=True)
    scaler = MinMaxScaler()
    scaled_data = scaler.fit_transform(df[['values']])  # load column; adjust to match your CSV
    X, y = prepare_data(scaled_data, n_steps)
    # Chronological 80/20 split (no shuffling, so the test set is the most recent data)
    train_size = int(len(X) * 0.8)
    X_train, X_test = X[:train_size], X[train_size:]
    y_train, y_test = y[:train_size], y[train_size:]
    return X_train, X_test, y_train, y_test, scaler


# Generate synthetic data: linear trend + yearly seasonality + Gaussian noise
date_rng = pd.date_range(start='2020-01-01', end='2022-12-31', freq='D')
n = len(date_rng)
trend = np.linspace(0, 100, n)
seasonality = 10 * np.sin(2 * np.pi * np.arange(n) / 365.25)
noise = np.random.normal(0, 5, n)
values = trend + seasonality + noise
df = pd.DataFrame(data={'date': date_rng, 'value': values})

# Normalize and prepare data
scaler = MinMaxScaler()
scaled_data = scaler.fit_transform(df[['value']])
X, y = prepare_data(scaled_data, n_steps=30)

# Chronological 80/20 train/test split, as in load_and_preprocess_data
train_size = int(len(X) * 0.8)
X_train, X_test = X[:train_size], X[train_size:]
y_train, y_test = y[:train_size], y[train_size:]
```
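To make the windowing concrete, the sketch below reproduces the logic of `prepare_data` on a tiny toy array (the array and `n_steps=3` are illustrative values, not from the article) and applies the same chronological 80/20 split. Each row of `X` is a window of `n_steps` consecutive values, and the matching entry of `y` is the value that immediately follows it.

```python
import numpy as np

def prepare_data(data, n_steps):
    # Same sliding-window logic as in the article
    X, y = [], []
    for i in range(len(data) - n_steps):
        X.append(data[i:i + n_steps])
        y.append(data[i + n_steps])
    return np.array(X), np.array(y)

# Toy series of 10 values shaped (n, 1), like the output of MinMaxScaler.fit_transform
toy = np.arange(10, dtype=float).reshape(-1, 1)
X_toy, y_toy = prepare_data(toy, n_steps=3)

print(X_toy.shape, y_toy.shape)    # (7, 3, 1) (7, 1)
print(X_toy[0].ravel(), y_toy[0])  # [0. 1. 2.] [3.]

# Chronological split: no shuffling, so test windows come strictly after training windows
train_size = int(len(X_toy) * 0.8)
X_tr, X_te = X_toy[:train_size], X_toy[train_size:]
print(len(X_tr), len(X_te))  # 5 2
```

Note that a series of length n yields n - n_steps samples, because the last n_steps values can only serve as inputs for the final target.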

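Now that the dataset is ready, let's start with the simplest model and work our way up to more complex deep learning architectures.

First, we need a few helper functions.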

```python
from tensorflow.keras.layers import MultiHeadAttention, LayerNormalization, Dense, Conv1D, Dropout

# Residual block for TCN: two dilated causal convolutions plus a skip connection
def residual_block(x, dilation_rate, filters):
    skip = x
    # Match the channel count with a 1x1 convolution so the residual sum is well-defined
    # (the first block receives a single-feature input)
    if skip.shape[-1] != filters:
        skip = Conv1D(filters, kernel_size=1, padding='same')(skip)
    x = Conv1D(filters, kernel_size=3, dilation_rate=dilation_rate, padding='causal', activation='relu')(x)
    x = Dropout(0.1)(x)
    x = Conv1D(filters, kernel_size=3, dilation_rate=dilation_rate, padding='causal', activation='relu')(x)
    x = Dropout(0.4)(x)
    x = LayerNormalization()(x + skip)
    return x
```
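A quick way to see what the exponentially growing `dilation_rate=2**i` buys is to compute the receptive field of the stack. A causal convolution with kernel size k and dilation d extends the receptive field by (k - 1) × d time steps. With kernel size 3, two convolutions per `residual_block`, and the dilations 1, 2, 4, 8 used in `run_models`, the last output sees 1 + 2 × 2 × (1 + 2 + 4 + 8) = 61 time steps. The helper below is a small illustrative function, not part of the article's code.

```python
def tcn_receptive_field(kernel_size, dilations, convs_per_block=2):
    """Number of time steps visible to the last output of a stack of dilated
    causal convolutions; each convolution adds (kernel_size - 1) * dilation."""
    field = 1
    for d in dilations:
        field += convs_per_block * (kernel_size - 1) * d
    return field

# Configuration used for the TCN: 4 blocks with dilation 2**i
dilations = [2**i for i in range(4)]  # [1, 2, 4, 8]
print(tcn_receptive_field(3, dilations))  # 61

# With only 2 blocks the field would not cover a 30-step window
print(tcn_receptive_field(3, dilations[:2]))  # 13
```

Since 61 exceeds `n_steps = 30`, the final time step of the TCN can in principle draw on the entire input window.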

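```python
# Transformer encoder block: a self-attention sub-layer and a feed-forward sub-layer,
# each followed by dropout, layer normalization, and a residual connection
def transformer_encoder(inputs, head_size, num_heads, ff_dim, dropout=0):
    # Multi-head self-attention (query, key, and value are all the inputs)
    x = MultiHeadAttention(key_dim=head_size, num_heads=num_heads, dropout=dropout)(inputs, inputs)
    x = Dropout(dropout)(x)
    x = LayerNormalization(epsilon=1e-6)(x)
    res = x + inputs
    # Feed-forward sub-layer, projected back to the input feature size
    x = Dense(ff_dim, activation="relu")(res)
    x = Dropout(dropout)(x)
    x = Dense(inputs.shape[-1])(x)
    x = LayerNormalization(epsilon=1e-6)(x)
    return x + res
```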
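One detail of `transformer_encoder` is worth checking. Both `LayerNormalization` calls act on tensors whose last axis has the same size as the encoder input (the attention output is projected back to the query's feature size, and the feed-forward output is `Dense(inputs.shape[-1])`), and the layer normalizes over that last axis. If the encoder receives a single-feature input such as the raw `(n_steps, 1)` window, each position is normalized over one value, so x - mean is exactly zero and the layer outputs only its learned offset (beta), whatever the input was. The NumPy sketch below mirrors that computation with gamma = 1 and beta = 0 (the initial values); the `kernel` and `bias` arrays are random stand-ins for a hypothetical `Dense(64)` input projection, a common remedy.

```python
import numpy as np

def layer_norm(x, eps=1e-6, gamma=1.0, beta=0.0):
    # Normalize over the last (feature) axis, as keras.layers.LayerNormalization does by default
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return gamma * (x - mean) / np.sqrt(var + eps) + beta

rng = np.random.default_rng(0)

# 2 windows x 30 time steps x 1 feature: every normalized value is exactly beta (0 here)
single_feature = rng.normal(size=(2, 30, 1))
print(np.abs(layer_norm(single_feature)).max())  # 0.0

# After a Dense-style projection (kernel plus bias) to 64 features,
# the normalized output depends on the input value again
kernel = rng.normal(size=(1, 64))  # stand-in for a Dense(64) kernel
bias = rng.normal(size=64)         # stand-in for a Dense(64) bias
out_a = layer_norm(np.array([[[0.5]]]) @ kernel + bias)
out_b = layer_norm(np.array([[[2.0]]]) @ kernel + bias)
print(np.allclose(out_a, out_b))  # False
```

When the normalized output is constant, no gradient flows back through the attention or feed-forward weights, so those sub-layers cannot learn anything useful.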

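- Feedforward neural network (FNN) as a baseline
- Long short-term memory network (LSTM), which can capture long-range dependencies
- Convolutional neural network (CNN) for capturing local patterns
- Temporal convolutional network (TCN), which combines strengths of CNNs and RNNs
- Transformer, for attention-based sequence modeling

The code below defines each of these models. For deployment, I would prefer to move these settings into a configuration file, which makes them easier to maintain.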
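A minimal sketch of that idea, under stated assumptions: the format (JSON) and all key names such as `hidden_units` or `num_blocks` are chosen here for illustration and are not from the article; only the values mirror the hyperparameters hard-coded in `run_models`. The settings are kept in one document and looked up when building each model.

```python
import json

# Hypothetical config; in practice this would live in a file such as models.json
CONFIG_TEXT = """
{
  "training": {"epochs": 50, "batch_size": 32, "validation_split": 0.2},
  "models": {
    "FNN": {"hidden_units": [64, 32]},
    "LSTM": {"units": 50},
    "CNN": {"filters": 64, "kernel_size": 3, "pool_size": 2, "dense_units": 50},
    "TCN": {"filters": 64, "num_blocks": 4},
    "Transformer": {"head_size": 256, "num_heads": 4, "ff_dim": 4,
                    "num_blocks": 4, "dropout": 0.1}
  }
}
"""

def load_config(text):
    config = json.loads(text)
    # Fail early if a required section is missing
    for section in ("training", "models"):
        if section not in config:
            raise KeyError(f"missing config section: {section}")
    return config

config = load_config(CONFIG_TEXT)
fit_kwargs = config["training"]  # e.g. model.fit(X_train, y_train, **fit_kwargs, verbose=0)
print(config["models"]["Transformer"]["num_heads"])  # 4
```

Keeping training settings separate from per-model settings means a change such as the number of epochs is made in one place instead of five `fit` calls.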

```python
# Function to train and evaluate models
def run_models(X_train, X_test, y_train, y_test, n_steps):
    results = {}

    # FNN: flatten the window and pass it through fully connected layers
    model_fnn = tf.keras.Sequential([
        tf.keras.layers.Input(shape=(n_steps, 1)),
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(64, activation='relu'),
        tf.keras.layers.Dense(32, activation='relu'),
        tf.keras.layers.Dense(1)
    ])
    model_fnn.compile(optimizer='adam', loss='mse')
    model_fnn.fit(X_train, y_train, epochs=50, batch_size=32, validation_split=0.2, verbose=0)
    results['FNN'] = model_fnn.predict(X_test)

    # LSTM: expects input shaped (samples, timesteps, features)
    X_train_lstm = X_train.reshape((X_train.shape[0], X_train.shape[1], 1))
    X_test_lstm = X_test.reshape((X_test.shape[0], X_test.shape[1], 1))
    model_lstm = tf.keras.Sequential([
        tf.keras.layers.Input(shape=(n_steps, 1)),
        tf.keras.layers.LSTM(50, activation='relu'),
        tf.keras.layers.Dense(1)
    ])
    model_lstm.compile(optimizer='adam', loss='mse')
    model_lstm.fit(X_train_lstm, y_train, epochs=50, batch_size=32, validation_split=0.2, verbose=0)
    results['LSTM'] = model_lstm.predict(X_test_lstm)

    # CNN: 1D convolution over the window, followed by pooling and dense layers
    model_cnn = tf.keras.Sequential([
        tf.keras.layers.Input(shape=(n_steps, 1)),
        tf.keras.layers.Conv1D(filters=64, kernel_size=3, activation='relu'),
        tf.keras.layers.MaxPooling1D(pool_size=2),
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(50, activation='relu'),
        tf.keras.layers.Dense(1)
    ])
    model_cnn.compile(optimizer='adam', loss='mse')
    model_cnn.fit(X_train_lstm, y_train, epochs=50, batch_size=32, validation_split=0.2, verbose=0)
    results['CNN'] = model_cnn.predict(X_test_lstm)

    # TCN: four residual blocks with dilation rates 1, 2, 4, 8
    inputs_tcn = tf.keras.layers.Input(shape=(n_steps, 1))
    x = inputs_tcn
    for i in range(4):
        x = residual_block(x, dilation_rate=2**i, filters=64)
    x = Dense(1)(x[:, -1, :])  # predict from the features at the last time step
    model_tcn = tf.keras.Model(inputs_tcn, x)
    model_tcn.compile(optimizer='adam', loss='mse')
    model_tcn.fit(X_train_lstm, y_train, epochs=50, batch_size=32, validation_split=0.2, verbose=0)
    results['TCN'] = model_tcn.predict(X_test_lstm)

    # Transformer: four stacked encoder blocks
    inputs_transformer = tf.keras.Input(shape=(n_steps, 1))
    # Project the single input feature to a wider embedding (64 is an illustrative width);
    # LayerNormalization over a one-element feature axis would otherwise output a constant
    x = Dense(64)(inputs_transformer)
    for _ in range(4):
        x = transformer_encoder(x, head_size=256, num_heads=4, ff_dim=4, dropout=0.1)
    x = Dense(1)(x[:, -1, :])  # predict from the last time step's representation
    model_transformer = tf.keras.Model(inputs_transformer, x)
    model_transformer.compile(optimizer='adam', loss='mse')
    model_transformer.fit(X_train_lstm, y_train, epochs=50, batch_size=32, validation_split=0.2, verbose=0)
    results['Transformer'] = model_transformer.predict(X_test_lstm)

    return results
```
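The Transformer branch in `run_models` passes the window to the encoder blocks without any positional information, and self-attention on its own does not take the order of the time steps into account. A widely used option, not part of the article's code, is the sinusoidal positional encoding from the original Transformer paper (Vaswani et al., 2017), added to the embedded input before the first encoder block. A NumPy sketch, where `d_model` is an assumed embedding width:

```python
import numpy as np

def sinusoidal_positional_encoding(n_steps, d_model):
    """PE[pos, 2i] = sin(pos / 10000**(2i / d_model)), PE[pos, 2i + 1] = cos(same angle)."""
    positions = np.arange(n_steps)[:, np.newaxis]           # (n_steps, 1)
    dims = np.arange(0, d_model, 2)[np.newaxis, :]          # even indices 2i
    angles = positions / np.power(10000.0, dims / d_model)  # (n_steps, ceil(d_model / 2))
    pe = np.zeros((n_steps, d_model))
    pe[:, 0::2] = np.sin(angles)
    pe[:, 1::2] = np.cos(angles[:, : d_model // 2])         # handles odd d_model too
    return pe

pe = sinusoidal_positional_encoding(n_steps=30, d_model=64)
print(pe.shape)   # (30, 64)
# Position 0 encodes to alternating 0 (sin) and 1 (cos)
print(pe[0, :4])  # [0. 1. 0. 1.]
```

In a Keras model, the resulting `(n_steps, d_model)` array would be added to the embedded input, broadcasting over the batch dimension; this only makes sense once the input has been projected to `d_model` features.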

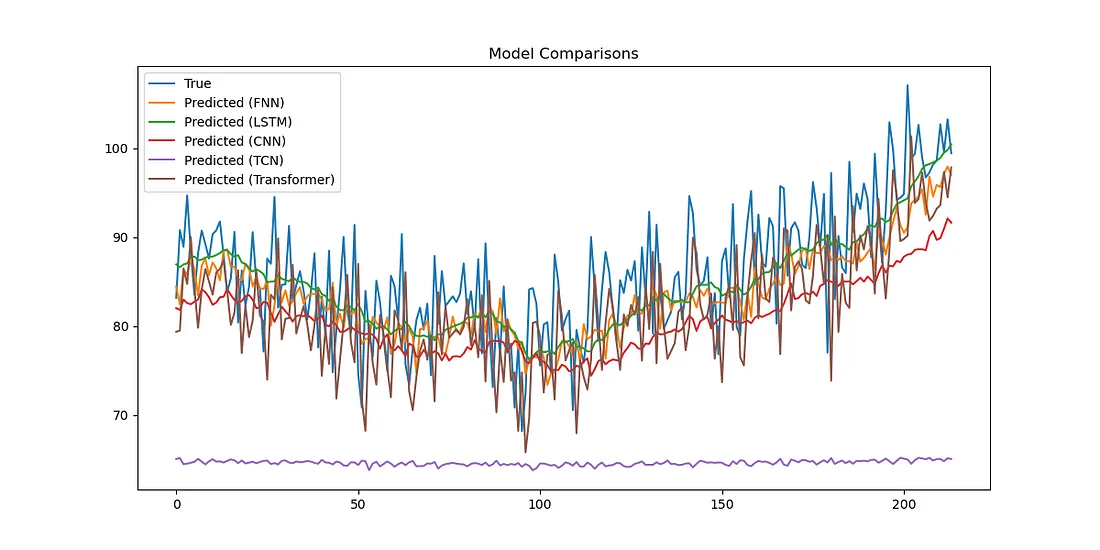

With all the architectures implemented, let's compare their performance:

```python
# Train models (FNN, LSTM, CNN, TCN, Transformer)
results = run_models(X_train, X_test, y_train, y_test, n_steps=30)


# Function to evaluate and visualize results
def evaluate_and_plot(y_test, results, scaler):
    # Undo the min-max scaling so that plots and MSE are in the original units
    y_test_inv = scaler.inverse_transform(y_test.reshape(-1, 1))
    predictions_inv = {name: scaler.inverse_transform(pred) for name, pred in results.items()}

    # Plot predictions
    plt.figure(figsize=(12, 6))
    plt.plot(y_test_inv, label='True')
    for name, pred in predictions_inv.items():
        plt.plot(pred, label=f'Predicted ({name})')
    plt.title('Model Comparisons')
    plt.legend()
    plt.savefig("Model_Comparisons.png")
    plt.show()

    # Calculate and print MSE
    mse_scores = {name: mean_squared_error(y_test_inv, pred) for name, pred in predictions_inv.items()}
    for model, mse in mse_scores.items():
        print(f"{model} MSE: {mse:.3f}")
    return mse_scores


# Evaluate and visualize results
mse_scores = evaluate_and_plot(y_test, results, scaler)
```
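Because `evaluate_and_plot` inverts the scaling before computing MSE, the scores are in squared original units. With the default `feature_range=(0, 1)`, `MinMaxScaler` maps x to (x - min) / (max - min), so inverting multiplies each error by (max - min) and the MSE by (max - min)². MSE values from series with different ranges, such as the synthetic series and the ERCOT load series, are therefore not directly comparable in absolute terms; dividing by (max - min)², which is the same as computing MSE on the scaled values, gives a range-normalized score. A NumPy check of the identity, using made-up values and a hand-written scaling function in place of `MinMaxScaler`:

```python
import numpy as np

rng = np.random.default_rng(42)
y_true = rng.uniform(100, 500, size=50)       # made-up series in original units
y_pred = y_true + rng.normal(0, 10, size=50)  # made-up predictions

def min_max_scale(v, lo, hi):
    # Same mapping as MinMaxScaler with feature_range=(0, 1), fitted on [lo, hi]
    return (v - lo) / (hi - lo)

lo, hi = y_true.min(), y_true.max()
mse_original = np.mean((y_true - y_pred) ** 2)
mse_scaled = np.mean((min_max_scale(y_true, lo, hi) - min_max_scale(y_pred, lo, hi)) ** 2)

# MSE in original units = MSE on scaled data * (max - min)^2
print(np.isclose(mse_original, mse_scaled * (hi - lo) ** 2))  # True
```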

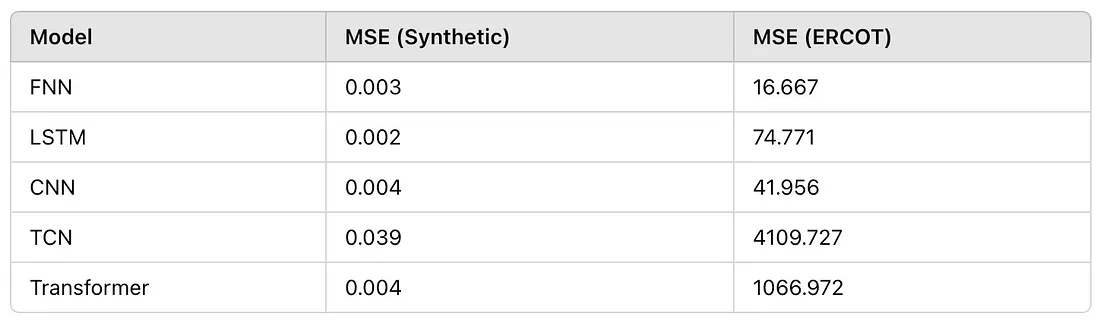

```
FNN MSE: 0.003
LSTM MSE: 0.002
CNN MSE: 0.004
TCN MSE: 0.039
Transformer MSE: 0.004
```

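Analyzing the performance of these architectures, we can draw several key observations:

- The feedforward network (FNN) provides a reasonable baseline but often struggles to capture complex temporal dependencies.
- The LSTM is good at capturing long-range dependencies, which makes it especially suitable for time series with long-term patterns.
- The CNN is surprisingly effective on time series, especially when local patterns matter, and can be more computationally efficient than recurrent architectures.
- The TCN is designed to combine a CNN's local pattern recognition with the ability to capture longer-range dependencies, although in these experiments it had the highest MSE on both datasets.
- The Transformer, with its attention mechanism, can be very powerful for time series with complex nonlinear relationships, though it may need more data and careful tuning.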

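The right architecture depends on the characteristics of your time series data:

- For data with pronounced seasonality and trend, an LSTM or TCN may be the better choice.
- If your data has strong local patterns, a CNN may be a good option.
- For complex multivariate time series, a Transformer may offer the best performance.

Performance can vary considerably with hyperparameter tuning, data preprocessing, and the specific nature of the time series. It is often worth trying ensemble methods that combine the strengths of different architectures.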
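As a minimal sketch of the simplest such ensemble, assuming predictions in the same form as the `results` dictionary returned by `run_models` (model name mapped to an array of shape `(n_samples, 1)`), the per-model predictions can be averaged, optionally with weights. The helper name `ensemble_predictions` and the example weights are illustrative, not from the article.

```python
import numpy as np

def ensemble_predictions(results, weights=None):
    """Weighted average of per-model predictions.

    results: dict mapping model name -> array of shape (n_samples, 1)
    weights: optional dict mapping model name -> non-negative weight (missing names get 0)
    """
    names = list(results)
    preds = np.stack([np.asarray(results[name]) for name in names])  # (n_models, n_samples, 1)
    if weights is None:
        w = np.ones(len(names))
    else:
        w = np.array([weights.get(name, 0.0) for name in names], dtype=float)
    if w.sum() <= 0:
        raise ValueError("weights must sum to a positive value")
    # np.average normalizes the weights along the model axis
    return np.average(preds, axis=0, weights=w)

# Toy predictions for three samples from two models
toy_results = {
    "FNN": np.array([[1.0], [2.0], [3.0]]),
    "LSTM": np.array([[3.0], [4.0], [5.0]]),
}
print(ensemble_predictions(toy_results).ravel())                         # [2. 3. 4.]
print(ensemble_predictions(toy_results, {"FNN": 3, "LSTM": 1}).ravel())  # [1.5 2.5 3.5]
```

Weights could, for example, be chosen from each model's error on a validation split; choosing them on the test set would leak information into the evaluation.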

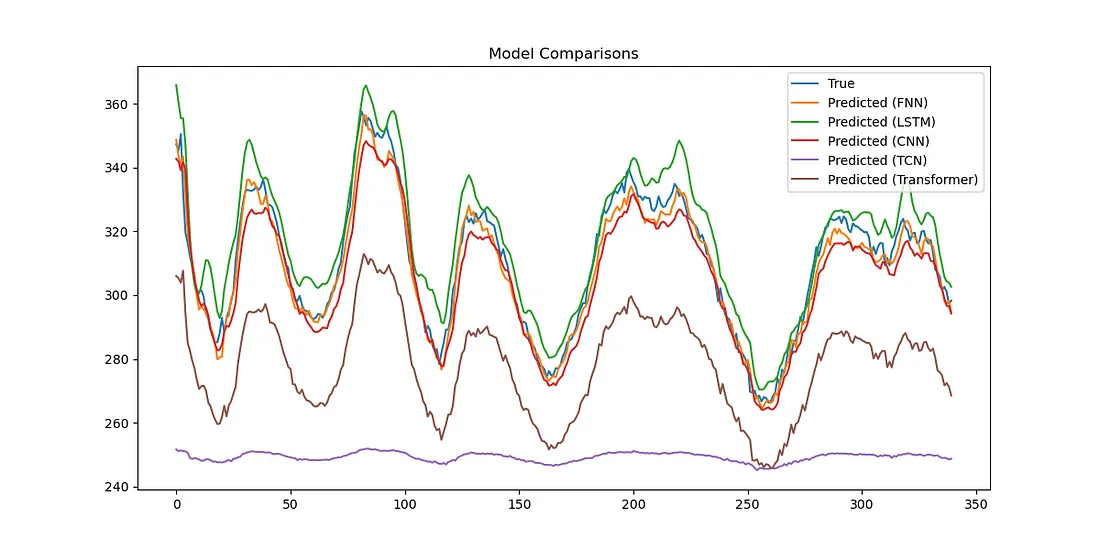

Let's try it on the ERCOT data.

Because the code is modular, we can reuse the same pipeline directly.

```python
# Main workflow
filepath = "ercot_load_data.csv"  # Replace with your dataset file path
n_steps = 30

# Load and preprocess data
X_train, X_test, y_train, y_test, scaler = load_and_preprocess_data(filepath, n_steps)

# Train models and get predictions
results = run_models(X_train, X_test, y_train, y_test, n_steps)

# Evaluate and visualize results
mse_scores = evaluate_and_plot(y_test, results, scaler)
```
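`load_and_preprocess_data` expects a CSV file with a `date` column, which becomes a sorted datetime index, and a `values` column holding the load. The article does not show the contents of the ERCOT file, so the snippet below builds a tiny stand-in with made-up numbers in that layout and reads it back the same way the loader does.

```python
import io
import pandas as pd

# Made-up stand-in with the column layout load_and_preprocess_data expects
csv_text = """date,values
2021-01-03,41000.5
2021-01-01,40250.0
2021-01-02,39875.2
"""

df = pd.read_csv(io.StringIO(csv_text), parse_dates=["date"], index_col="date")
df.sort_index(inplace=True)  # the loader sorts chronologically before windowing
print(df.index[0].date(), df["values"].iloc[0])  # 2021-01-01 40250.0
```

If your file uses a different column name for the load, the `df[['values']]` selection inside the loader has to be changed to match.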

```
FNN MSE: 16.667
LSTM MSE: 74.771
CNN MSE: 41.956
TCN MSE: 4109.727
Transformer MSE: 1066.972
```

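On the synthetic data the Transformer was competitive (MSE 0.004, close to the lowest score of 0.002 from the LSTM), but on the ERCOT data it performed poorly (MSE 1066.972, second worst after the TCN).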

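Future Directions

As deep learning for time series keeps evolving, hybrid models that combine the strengths of different architectures are an area of exciting progress. For example, CNN-LSTM models and attention-augmented RNNs have shown promise across a range of applications.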

Source: https://medium.com/@kylejones_47003/comparing-deep-learning-architectures-for-time-series-d8c3d4c8da3e
