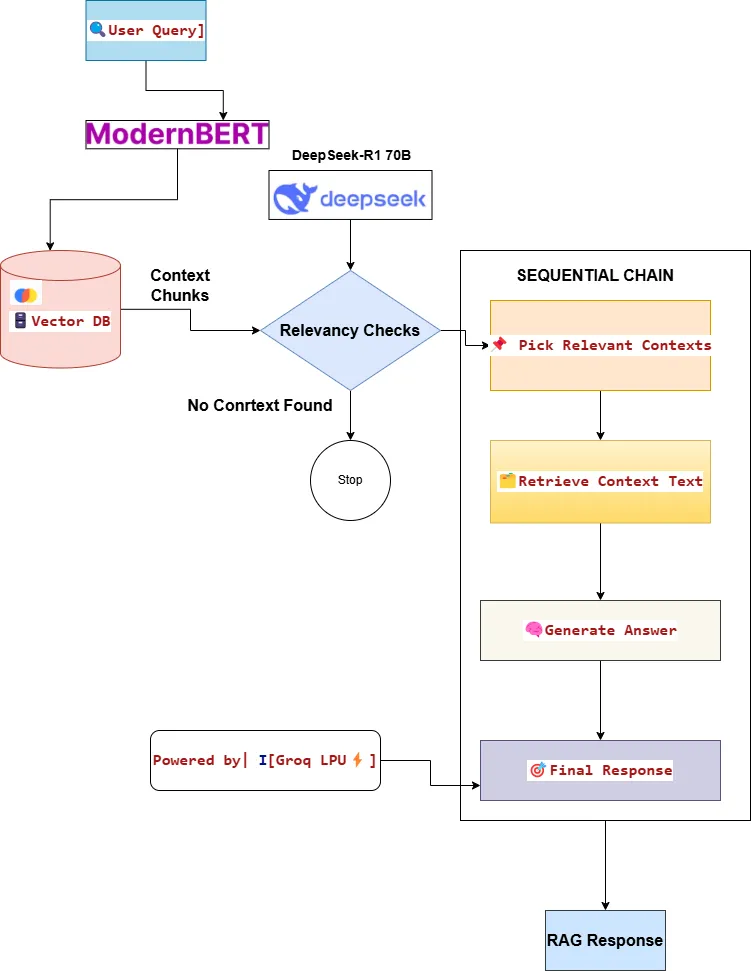

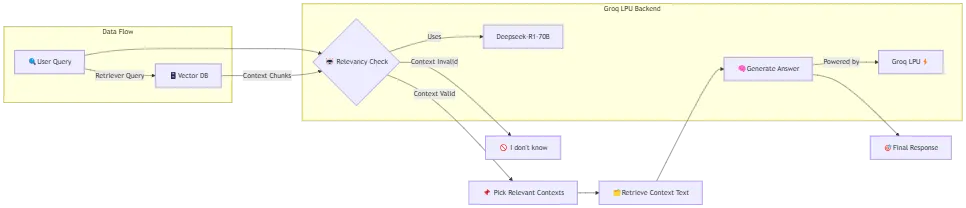

A RAG Checker Agent with DeepSeek-R1 70B on Groq and Similar Platforms

Introduction

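The RAG architecture combines the generative capabilities of large language models (LLMs) with the precision of information retrieval. This approach has the potential to redefine how we interact with and augment both structured and unstructured knowledge in generative models, improving the transparency, accuracy, and contextuality of responses.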

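Steps involved in RAG:

1. Data collection: The process starts by gathering relevant, domain-specific text data from external sources such as PDFs, structured documents, or text files. These documents are the raw data for building the custom knowledge base that the system queries during retrieval.

2. Data preprocessing: The collected data is then cleaned and preprocessed into manageable, meaningful chunks. This step includes removing noise and formatting artifacts, normalizing the text, and splitting it into smaller units (for example tokens, that is, words or groups of words) so that it can be indexed and retrieved easily later.

3. Creating vector embeddings: After preprocessing, an embedding model (for example BERT or Sentence Transformers) converts the chunks into vector representations. These embeddings capture the semantic meaning of the text and let the system perform similarity search. The vectors are stored in a vector store, an indexed database optimized for fast retrieval based on similarity metrics.

4. Retrieving relevant content: When a query enters the system, it is converted into a vector embedding in the same way as the documents in the vector store. The retriever component then searches the vector store to identify and retrieve the chunks most relevant to the query.

5. Context augmentation: The system merges two streams of knowledge: the fixed, general knowledge embedded in the LLM and the flexible, domain-specific information supplied on demand as an additional layer of context. This keeps the LLM aligned with both established and newly emerging information.

6. Response generation: A contextual prompt containing the original user query and the retrieved content is passed to an LLM such as GPT, T5, or Llama, which processes this augmented input to generate a coherent, fact-grounded response.

7. Final output: The output of a RAG system minimizes the risk of hallucinated or outdated information, improves interpretability by clearly linking answers to real-world sources, and provides rich, accurate responses.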
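The steps above can be made concrete with a toy sketch of steps 2, 5 and 6: splitting raw text into overlapping chunks and assembling the augmented prompt that the LLM receives. Fixed-size word chunking and the helper names `chunk_words` and `build_augmented_prompt` are illustrative assumptions, not part of the pipeline built later (which uses semantic chunking).

```python
def chunk_words(text: str, size: int = 8, overlap: int = 2) -> list[str]:
    """Step 2 (toy): split text into chunks of `size` words; neighbours share `overlap` words."""
    if size <= overlap:
        raise ValueError("size must be larger than overlap")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):  # last chunk reached the end of the text
            break
    return chunks


def build_augmented_prompt(query: str, retrieved: list[str]) -> str:
    """Steps 5 and 6 (toy): merge numbered retrieved chunks with the user query."""
    context = "\n".join(f"[{i}] {chunk}" for i, chunk in enumerate(retrieved, start=1))
    return f"CONTEXT:\n{context}\n\nQUERY:\n{query}\n\nANSWER:"


text = ("RAG combines retrieval with generation so that answers are "
        "grounded in documents the model never saw during training")
chunks = chunk_words(text)
print(len(chunks))
print(build_augmented_prompt("What does RAG combine?", chunks[:1]))
```

The overlap keeps a sentence that straddles a boundary partly visible in both neighbouring chunks, a common trade-off against storing some text twice.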

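The real challenge is ensuring that the context retrieved for a query through cosine-similarity search is genuinely the most meaningful and relevant, because the answer is built on it.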
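A minimal sketch of why similarity search alone is not enough, using made-up 3-dimensional vectors in place of real embeddings: top-k retrieval always returns k chunks, even when some of them have nothing to do with the query. The function names are illustrative.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction, 0.0 = orthogonal)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec: list[float], chunk_vecs: list[list[float]], k: int) -> list[tuple[int, float]]:
    """Return (chunk index, similarity) pairs for the k most similar chunks."""
    scored = [(i, cosine_similarity(query_vec, v)) for i, v in enumerate(chunk_vecs)]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


query = [1.0, 0.0, 0.0]
chunks = [[0.9, 0.1, 0.0],   # close to the query
          [0.0, 1.0, 0.0],   # unrelated
          [0.0, 0.0, 1.0]]   # unrelated
for index, score in top_k(query, chunks, k=2):
    print(index, round(score, 3))
```

The second result has similarity 0.0 yet is still returned, because k fixes the number of results, not their quality.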

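LangChain already offers ready-made, packaged solutions for filtering noise out of retrieved context, but I tried a similar approach of my own by implementing agents with LangChain and Groq.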

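Tech stack used for the implementation

- LangChain: application framework

- DeepSeek-R1 70B: LLM (the context-checking judge; the final answer is generated with Mixtral 8x7B)

- Groq: faster LLM inference

- ModernBERT: embedding model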

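What is LangChain?

LangChain is a developer framework for building applications powered by large language models (LLMs) such as GPT. It "chains" modules together to create intelligent, context-aware applications.

Core components

1. LLMs: connect to models (OpenAI, Anthropic, etc.) for text generation.

2. Prompts: templates that guide the model's output (for example, "Translate this into French: {text}").

3. Chains: combine multiple steps (for example, fetch data → analyze → generate report).

4. Agents: AI that uses tools (web search, calculators, APIs) to solve tasks.

5. Memory: stores chat history or conversation context.

6. Document loaders: ingest documents, websites, or databases.

How it works

1. Input: a user query ("Summarize this PDF").

2. Retrieval: fetch data from files or APIs.

3. Processing: the model analyzes the data.

4. Generation: output an answer, code, or an action.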
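The input → retrieval → processing → generation flow above is what "chaining" means. Here is a plain-Python sketch of the idea, where each step takes and returns a shared state dict. `FILES` and `fake_llm_step` are hypothetical stand-ins for a real document and a real model call, and none of these names are LangChain's API.

```python
from typing import Callable

Step = Callable[[dict], dict]

# Hypothetical in-memory "file store" standing in for a PDF or an API
FILES = {"report.pdf": "RAG grounds answers in documents. It was tested in a workshop."}


def retrieve_step(state: dict) -> dict:
    # Retrieval: fetch the document the user asked about
    return {**state, "document": FILES[state["file"]]}


def prompt_step(state: dict) -> dict:
    # Processing: fill a prompt template with the retrieved text
    return {**state, "prompt": f"Summarize the following text:\n{state['document']}"}


def fake_llm_step(state: dict) -> dict:
    # Generation: placeholder "model" that returns the document's first sentence
    first_sentence = state["document"].split(".")[0].strip() + "."
    return {**state, "output": first_sentence}


def run_chain(steps: list[Step], state: dict) -> dict:
    # A chain applies the steps in order; each sees the previous step's output
    for step in steps:
        state = step(state)
    return state


result = run_chain([retrieve_step, prompt_step, fake_llm_step],
                   {"query": "Summarize this PDF", "file": "report.pdf"})
print(result["output"])
```

Because every step shares one signature, steps can be swapped or reordered freely, which is the modularity the Benefits list refers to.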

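Use cases

- Chatbots with long-term memory.

- Document QA: ask questions about PDFs or websites.

- Coding assistants: generate and debug code.

- Custom workflows: automate research, email, and more.

Benefits

- Modular: mix and match tools.

- Scalable: from simple scripts to enterprise applications.

- Open source: Python and JavaScript support.

In short: LangChain = LLM + your data + logic. Build the future one chain at a time!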

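What is DeepSeek-R1 70B?

Overview

DeepSeek-R1 70B is a cutting-edge 70-billion-parameter AI model from DeepSeek, a Chinese AI research company. It is designed for advanced reasoning, coding, and complex problem solving, and is optimized for performance and efficiency. Strictly speaking, the 70B model used in this article (deepseek-r1-distill-llama-70b) is DeepSeek-R1 distilled into Llama 3.3 70B, so it follows the Llama 3 70B architecture described below.

Base architecture

- Model type: a decoder-only Transformer (like LLaMA), optimized for autoregressive text generation.

- Scale: 70 billion parameters, making it a "frontier model" for complex reasoning and coding.

- Layers: 80 stacked Transformer layers.

- Hidden dimension: 8,192 per layer, giving rich representations of text and code.

- Attention heads: 64 query heads (sharing 8 key/value heads through grouped-query attention) that attend to different language and code patterns in parallel.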
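As a sanity check on the figures above, the parameter count can be recomputed from the configuration. This sketch assumes the public Llama 3 70B configuration that the distilled model inherits: a feed-forward size of 28,672, 8 key/value heads, a vocabulary of 128,256 tokens, and separate (untied) input and output embedding matrices. These values come from that configuration, not from this article.

```python
# Assumed Llama-3-70B-style configuration (values not given in the article)
layers, d_model, n_heads, n_kv_heads = 80, 8192, 64, 8
d_ff, vocab = 28_672, 128_256

head_dim = d_model // n_heads          # 128
kv_dim = n_kv_heads * head_dim         # 1,024 (grouped-query attention)

# Q and O projections are d_model x d_model; K and V projections are d_model x kv_dim
attention = 2 * d_model * d_model + 2 * d_model * kv_dim
feed_forward = 3 * d_model * d_ff      # SwiGLU: gate, up and down projections
norms = 2 * d_model                    # two RMSNorm weight vectors per layer
per_layer = attention + feed_forward + norms

embeddings = 2 * vocab * d_model       # input embedding + untied output head
total = layers * per_layer + embeddings + d_model  # + final RMSNorm

print(f"{total:,} parameters (~{total / 1e9:.2f}B)")
```

Roughly 82% of the weights sit in the feed-forward layers, which is why "70B" refers mostly to FFN capacity rather than attention.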

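Key technical components

1. Transformer blocks:

- Self-attention: multi-head attention weighs the relationships between tokens (for example, "if" ↔ "else" in code).

- Normalization: RMSNorm applied before each sub-layer (pre-normalization) stabilizes training.

- Feed-forward network: SwiGLU, a Swish-based gated activation, provides the non-linearity.

2. Tokenization:

- Uses a tokenizer with a large vocabulary (128,256 tokens in Llama 3) that also handles programming syntax.

- Supports multilingual text and mathematical symbols (∫, ∑, etc.).

3. Context window: the Llama 3.3 70B base supports up to 128K tokens (hosted APIs may impose lower limits), enough to analyze long documents or codebases.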
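To make two of these components concrete, here is a plain-Python sketch of RMSNorm and of single-head causal scaled dot-product attention on toy vectors. Real models add learned query/key/value projections, many heads, and batched tensor kernels, all omitted here; the function names are illustrative.

```python
import math


def rms_norm(x: list[float], weight: list[float], eps: float = 1e-6) -> list[float]:
    # RMSNorm: divide by the root-mean-square of x (no mean subtraction, unlike LayerNorm)
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [w * v / rms for v, w in zip(x, weight)]


def softmax(scores: list[float]) -> list[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_attention(q, k, v):
    """Single-head scaled dot-product attention; q, k, v are lists of d-dim token vectors."""
    d = len(q[0])
    outputs = []
    for t, q_t in enumerate(q):
        # Causal mask: token t only attends to positions 0..t
        scores = [sum(a * b for a, b in zip(q_t, k[j])) / math.sqrt(d) for j in range(t + 1)]
        weights = softmax(scores)
        # Output is the attention-weighted average of the visible value vectors
        outputs.append([sum(w * v[j][i] for j, w in enumerate(weights)) for i in range(d)])
    return outputs


x = rms_norm([3.0, 4.0], weight=[1.0, 1.0])
tokens = [[1.0, 0.0], [0.0, 1.0]]
out = causal_attention(tokens, tokens, [[1.0, 2.0], [3.0, 4.0]])
print(x, out)
```

The first token can only see itself, so its output equals its own value vector; later tokens mix all earlier values, which is what makes the model autoregressive.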

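Main features

Architecture:

Built on a Transformer-based framework (like GPT/LLaMA).

Trained on massive, diverse datasets (text, code, math, and more).

Specialization:

Excels at logical reasoning (for example, mathematical proofs and code debugging).

Strong coding ability (Python, Java, C++, and more).

Efficiency:

Uses optimizations to reduce compute cost while maintaining high accuracy.

Performance

- Benchmarks: competitive with top models such as GPT-4 and Claude 3 on reasoning tasks.

- Coding: outperforms many open-source models (for example, LLaMA 2 and CodeLlama) on HumanEval.

Use cases

- AI assistants: advanced chatbots for technical support, tutoring, or coding help.

- Research: solving complex scientific and mathematical problems.

- Software development: automated code generation, debugging, and documentation.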

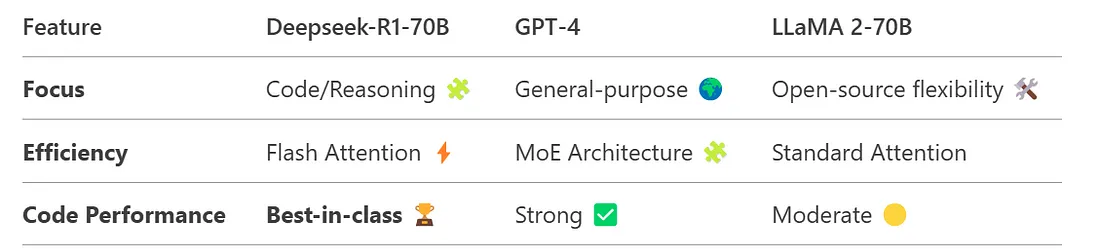

Comparison with other models

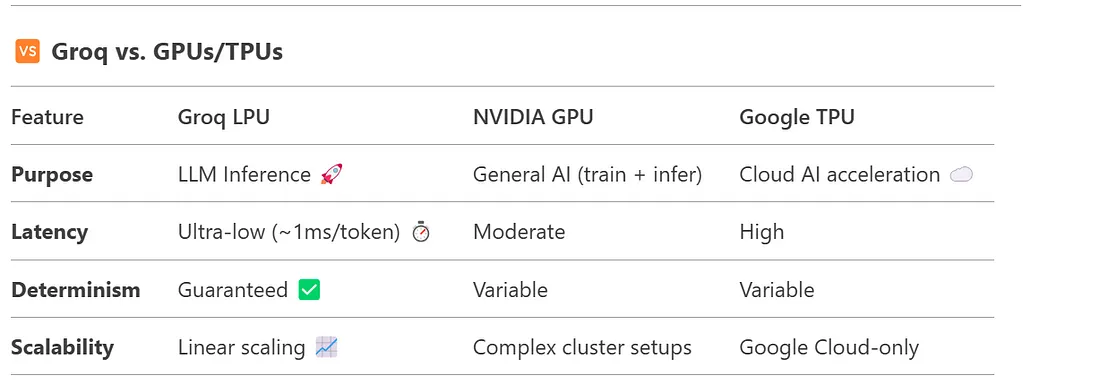

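What is Groq?

Groq is a hardware company that builds ultra-fast AI accelerator chips called LPUs (Language Processing Units). These chips are designed to run large language models (LLMs), such as open models like Llama and Mixtral, at very high speed with deterministic performance (no latency spikes).

Main features

LPU architecture:

- Specialized for LLM inference (not training).

- Focused on low latency and high throughput (think 500+ tokens per second).

- Uses a single-core design with deterministic execution.

Speed demon:

- Outperforms GPUs (for example, NVIDIA A100/H100) on real-time AI tasks such as chatbots and code generation.

- Example: runs Meta's Llama 3 70B faster than most cloud GPUs.

Energy efficiency:

- Consumes less power per token than traditional GPUs.

How it works

1. Tensor Streaming Processor (TSP):

- Processes data in a predictable order (no cache misses).

2. Software stack:

- Compiles machine learning models (PyTorch/TensorFlow) to run natively on Groq chips.

Availability

- Accessible through GroqCloud (API-based).

- Sold as enterprise on-premises hardware.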

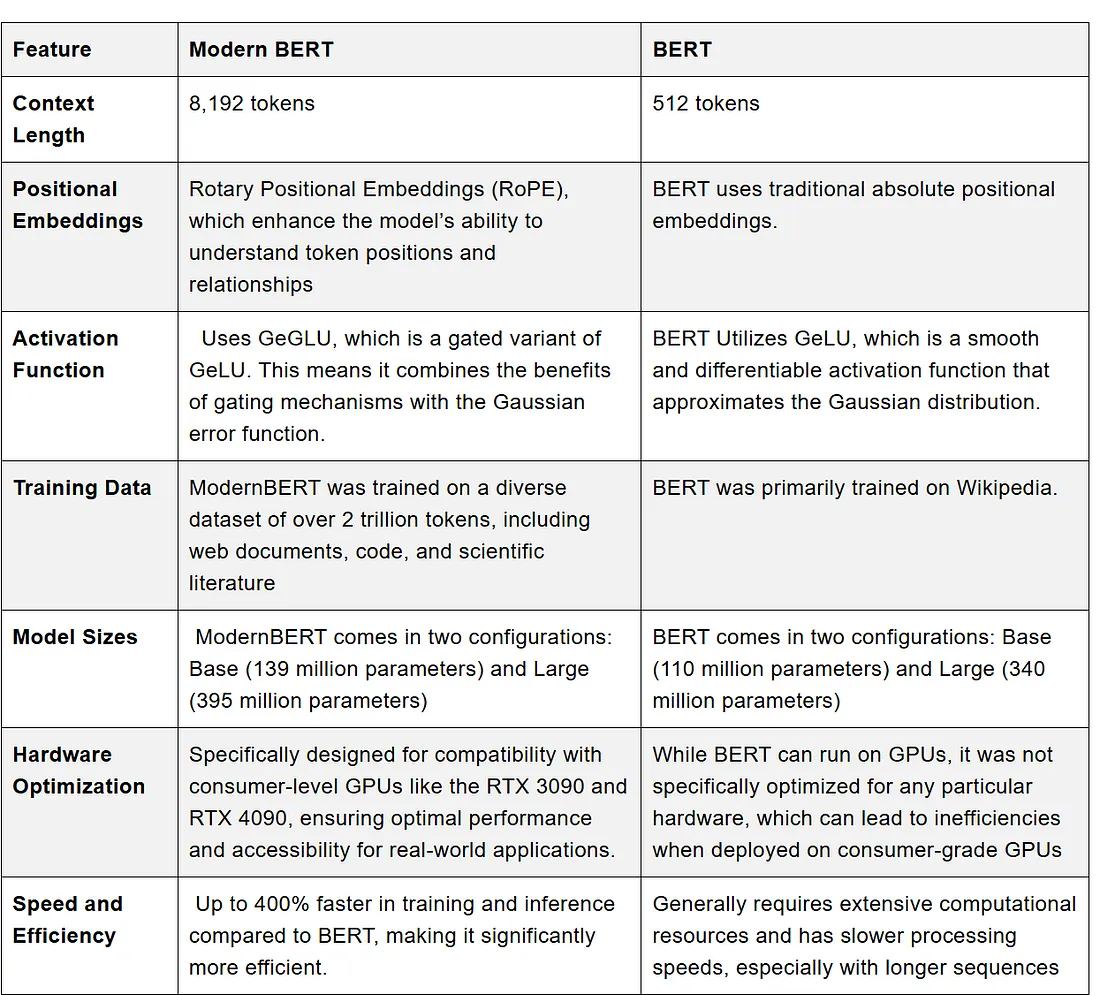

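What is ModernBERT?

ModernBERT is a modernized version of Google's original BERT, optimized for efficiency and performance on modern hardware. ModernBERT itself was released by Answer.AI and LightOn; the embedding model used here, nomic-ai/modernbert-embed-base, is Nomic AI's fine-tune of ModernBERT-base for producing high-quality embeddings while being faster and lighter than older models.

Main features

1. Architecture:

- Keeps BERT's Transformer encoder backbone, with an optimized attention mechanism (alternating global and local attention).

- Trained with a modern tokenizer (a BPE tokenizer adapted from OLMo).

2. Efficiency:

- Lower memory usage than the original BERT.

- Faster inference on CPUs and GPUs (suitable for edge devices).

3. Use cases:

- Semantic search

- Retrieval-augmented generation (RAG) pipelines

- Text classification and clustering

Why it is used in the code

In this RAG pipeline:

embedding_model = HuggingFaceEmbeddings(model_name="nomic-ai/modernbert-embed-base")

- Semantic chunking: ModernBERT generates the embeddings used to split documents into meaningful chunks.

- Accuracy: captures subtle relationships between text fragments better than older BERT variants.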
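Semantic chunking places chunk boundaries where the meaning shifts rather than at fixed lengths. A simplified sketch of that idea: split wherever the cosine distance between the embeddings of adjacent sentences exceeds a threshold. LangChain's `SemanticChunker` is more elaborate (it groups neighbouring sentences and, by default, derives the threshold from a percentile of the observed distances); the fixed threshold and 2-dimensional toy vectors here are assumptions for illustration.

```python
import math


def cosine_distance(a: list[float], b: list[float]) -> float:
    # Assumes non-zero vectors; 0.0 = same direction, 1.0 = orthogonal
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


def semantic_chunks(sentences: list[str], embeddings: list[list[float]], threshold: float) -> list[str]:
    """Start a new chunk wherever adjacent sentences are semantically far apart."""
    if not sentences:
        return []
    chunks, current = [], [sentences[0]]
    for i in range(1, len(sentences)):
        if cosine_distance(embeddings[i - 1], embeddings[i]) > threshold:
            chunks.append(" ".join(current))  # close the chunk at the topic shift
            current = []
        current.append(sentences[i])
    chunks.append(" ".join(current))
    return chunks


sentences = ["RAG retrieves documents.", "It then generates answers.", "Groq builds LPU chips."]
vectors = [[1.0, 0.1], [0.9, 0.2], [0.0, 1.0]]  # toy embeddings, not real model output
print(semantic_chunks(sentences, vectors, threshold=0.5))
```

The quality of the boundaries depends entirely on the embedding model, which is why a stronger encoder such as ModernBERT matters at this step.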

Comparison

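Code workflow highlights

1. Setup

- Install the dependencies (LangChain, Groq, Hugging Face)

- Connect to Groq's ultra-fast API

2. Document processing

- Convert the PDF into text chunks with semantic splitting

- Store the chunks in a Chroma vector database

3. AI agent team

- Relevance judge: scores each context's usefulness (0 = relevant, 1 = not relevant)

- Context picker: selects the best chunks

- Answer chef: cooks up the final answer

4. Sequential workflow: the agents run one after another in a LangChain SequentialChain

5. Key innovations

- Agentic validation: double-checks context quality before answering

- Groq speed: near-instant LLM responses on LPU chips

- ModernBERT: state-of-the-art embeddings for accurate retrieval

Code implementation

Install the required dependencies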

%pip install -qU langchain langchain_community langchain_groq langchain-huggingface

%pip install -qU pyPDF2 pdfplumber

%pip install --quiet langchain_experimental

%pip install -qU sentence-transformers

%pip install -qU transformers

%pip install -qU langchain-chroma

Set up the Groq API key and Hugging Face token

from google.colab import userdata

import os

#

os.environ["GROQ_API_KEY"] = userdata.get('GROQ_API_KEY')

os.environ["HF_TOKEN"] = userdata.get('HF_TOKEN')
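`google.colab.userdata` is only available inside Google Colab. When running the notebook elsewhere, one option (an assumption, not part of the original setup) is to read the keys from environment variables and prompt for them only if they are missing; `load_secret` is a hypothetical helper.

```python
import os
from getpass import getpass


def load_secret(name: str) -> str:
    """Return a secret from the environment, prompting (without echo) only if it is unset."""
    value = os.environ.get(name)
    if not value:
        value = getpass(f"Enter {name}: ")
        os.environ[name] = value  # make it visible to libraries that read os.environ
    return value
```

Calling `load_secret("GROQ_API_KEY")` and `load_secret("HF_TOKEN")` before creating the models has the same effect as the Colab cell, since `ChatGroq` reads `GROQ_API_KEY` and the Hugging Face libraries read `HF_TOKEN` from the environment.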

Import the required dependencies

from langchain.chains import SequentialChain, LLMChain

from langchain.prompts import PromptTemplate

from langchain_groq import ChatGroq

Set up the LLMs

llm_judge = ChatGroq(model="deepseek-r1-distill-llama-70b")

rag_llm = ChatGroq(model="mixtral-8x7b-32768")

#

llm_judge.verbose = True

rag_llm.verbose = True

Load the data to be processed

!mkdir data

!wget "https://arxiv.org/pdf/2410.15944v1" -O data/RAG.pdf

Process the PDF document

from langchain_community.document_loaders import PDFPlumberLoader

loader = PDFPlumberLoader("data/RAG.pdf")

docs = loader.load()

print(len(docs))

print(docs[0].metadata)

################Response########################

36

{'source': 'data/RAG.pdf',

'file_path': 'data/RAG.pdf',

'page': 0,

'total_pages': 36,

'Author': '',

'CreationDate': 'D:20241022015619Z',

'Creator': 'LaTeX with hyperref',

'Keywords': '',

'ModDate': 'D:20241022015619Z',

'PTEX.Fullbanner': 'This is pdfTeX, Version 3.141592653-2.6-1.40.25 (TeX Live 2023) kpathsea version 6.3.5',

'Producer': 'pdfTeX-1.40.25',

'Subject': '',

'Title': '',

'Trapped': 'False'}

Split the documents into smaller, more manageable chunks

from langchain_huggingface import HuggingFaceEmbeddings

from langchain_experimental.text_splitter import SemanticChunker

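# NOTE: embedding_model (ModernBERT via HuggingFaceEmbeddings) is created further below; run that cell before this one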

text_splitter = SemanticChunker(embedding_model)

documents = text_splitter.split_documents(docs)

print(len(documents))

print(documents[0].page_content)

############################Response###############################

73

Developing Retrieval Augmented Generation

(RAG) based LLM Systems from PDFs: An

Experience Report

Ayman Asad Khan Md Toufique Hasan

Tampere University Tampere University

ayman.khan@tuni.fi mdtoufique.hasan@tuni.fi

Kai Kristian Kemell Jussi Rasku Pekka Abrahamsson

Tampere University Tampere University Tampere University

kai-kristian.kemell@tuni.fi jussi.rasku@tuni.fi pekka.abrahamsson@tuni.fi

Abstract. This paper presents an experience report on the develop-

ment of Retrieval Augmented Generation (RAG) systems using PDF

documentsastheprimarydatasource.TheRAGarchitecturecombines

generativecapabilitiesofLargeLanguageModels(LLMs)withthepreci-

sionofinformationretrieval.Thisapproachhasthepotentialtoredefine

how we interact with and augment both structured and unstructured

knowledge in generative models to enhance transparency, accuracy and

contextuality of responses. The paper details the end-to-end pipeline,

from data collection, preprocessing, to retrieval indexing and response

generation,highlightingtechnicalchallengesandpracticalsolutions.We

aim to offer insights to researchers and practitioners developing similar

systems using two distinct approaches: OpenAI’s Assistant API with

GPTSeries andLlama’sopen-sourcemodels.Thepracticalimplications

of this research lie in enhancing the reliability of generative AI systems

in various sectors where domain specific knowledge and real time infor-

mationretrievalisimportant.ThePythoncodeusedinthisworkisalso

available at: GitHub. Keywords: Retrieval Augmented Generation (RAG), Large Language Models

(LLMs), Generative AI in Software Development, Transparent AI. 1 Introduction

Large language models (LLMs) excel at generating human like responses, but

base AI models can’t keep up with the constantly evolving information within

dynamicsectors.Theyrelyonstatictrainingdata,leadingtooutdatedorincom-

plete answers.

Set up the embedding model

model_name = "nomic-ai/modernbert-embed-base"

model_kwargs = {'device': 'cpu'}

encode_kwargs = {'normalize_embeddings': False}

embedding_model = HuggingFaceEmbeddings(

model_name=model_name,

model_kwargs=model_kwargs,

encode_kwargs=encode_kwargs

)

Set up the vector store

from langchain_chroma import Chroma

vector_store = Chroma(

collection_name="deepseek_collection",

collection_metadata={"hnsw:space": "cosine"},

embedding_function=embedding_model,

persist_directory="./chroma_langchain_db", # Where to save data locally, remove if not necessary

)

Add the embeddings to the vector store

vector_store.add_documents(documents)

len(vector_store.get()["documents"])

############Response############

73

Set up the retriever

retriver = vector_store.as_retriever(search_type="similarity", search_kwargs={"k": 5})

Context Relevancy Checker Agent

relevancy_prompt = """You are an expert judge tasked with evaluating whether EACH OF THE CONTEXTS provided in the CONTEXT LIST is self-sufficient to answer the QUERY asked.

Analyze the provided QUERY and CONTEXT to determine whether each content in the CONTEXT LIST contains relevant information to answer the QUERY.

Guidelines:

1. The content must not introduce new information beyond what's provided in the QUERY.

2. Pay close attention to the subject of statements. Ensure that attributes, actions, or dates are correctly associated with the right entities (e.g., a person vs. a TV show they star in).

3. Be vigilant for subtle misattributions or conflations of information, even if the date or other details are correct.

4. Check that the content in the CONTEXT LIST doesn't oversimplify or generalize information in a way that changes the meaning of the QUERY.

Analyze the text thoroughly and assign a relevancy score 0 or 1 where:

- 0: The content has all the necessary information to answer the QUERY

- 1: The content does not have the necessary information to answer the QUERY

```

EXAMPLE:

INPUT (for context only, not to be used for faithfulness evaluation):

What is the capital of France?

CONTEXT:

['France is a country in Western Europe. Its capital is Paris, which is known for landmarks like the Eiffel Tower.',

'Mr. Naveen Patnaik has been the chief minister of Odisha for five consecutive terms']

OUTPUT:

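Content 1 has sufficient information to answer the query; content 2 does not.

RESPONSE:

[{{"content":1,"score":0,"Reasoning":"States that the capital of France is Paris."}},{{"content":2,"score":1,"Reasoning":"Concerns Odisha and is unrelated to the query."}}]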

```

CONTEXT LIST:

{context}

QUERY:

{retriever_query}

Provide your verdict as a JSON list with one object per content, using the keys 'content', 'score' and 'Reasoning', and no preamble or explanation:

[{{"content":1,"score": <your score either 0 or 1>,"Reasoning":<why you have chosen the score as 0 or 1>}},

{{"content":2,"score": <your score either 0 or 1>,"Reasoning":<why you have chosen the score as 0 or 1>}},

...]

"""

context_relevancy_checker_prompt = PromptTemplate(input_variables=["retriever_query","context"],template=relevancy_prompt)
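DeepSeek-R1 models emit their reasoning inside `<think>...</think>` before the answer and often wrap the JSON in a Markdown code fence, as the outputs further down show. Before the judge's verdict can be used programmatically, that wrapper has to be removed. A minimal standard-library sketch; `parse_llm_json` is a hypothetical helper name.

```python
import json
import re

THINK_BLOCK = re.compile(r"<think>.*?</think>", re.DOTALL)
CODE_FENCE = re.compile(r"`{3}(?:json)?\s*(.*?)`{3}", re.DOTALL)


def parse_llm_json(raw: str):
    """Extract and decode the JSON payload from a reasoning model's reply."""
    text = THINK_BLOCK.sub("", raw).strip()  # drop the chain-of-thought block
    fenced = CODE_FENCE.search(text)
    if fenced:  # unwrap a fenced json block if present
        text = fenced.group(1).strip()
    return json.loads(text)  # raises json.JSONDecodeError on malformed output


reply = '<think>\nOnly content 2 defines RAG.\n</think>\n{"content": 2}'
print(parse_llm_json(reply))
```

Letting `json.loads` raise on malformed output is deliberate: a verdict that cannot be parsed should stop the pipeline rather than be silently treated as "no relevant context".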

Relevant Context Picker Agent

# Relevant Context Picker Agent

relevant_prompt = PromptTemplate(

input_variables=["relevancy_response"],

template="""

Your main task is to analyze the JSON structure that makes up the Relevancy Response.

Review the Relevancy Response and do the following:

(1) Look at the content of the JSON structure.

(2) Analyze the 'score' key of each entry in the JSON structure.

(3) Pick the value of the 'content' key for every entry whose 'score' is 0.

Relevancy Response:

{relevancy_response}

Provide your verdict in JSON format, one object per selected entry with the single key 'content', and no preamble or explanation:

[{{"content":<content number>}}]

"""

)
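Selecting the entries scored 0 is a plain filter, so it can also be done deterministically in Python instead of with a second LLM call. The outputs below show why this may be worth considering: the picker agent can return malformed values (such as the string "Content 1") or content numbers outside the range of retrieved contexts. This sketch is an alternative, not the method used in this article; it assumes the judge output has already been decoded into a list of dicts, and `pick_relevant` is a hypothetical name.

```python
def pick_relevant(judgements: list[dict], n_contexts: int) -> list[int]:
    """Return the 1-based content numbers the judge scored 0 (relevant), in order."""
    picked = []
    for item in judgements:
        number, score = item.get("content"), item.get("score")
        # Skip malformed entries: non-integer labels or numbers outside 1..n_contexts
        if not isinstance(number, int) or not 1 <= number <= n_contexts:
            continue
        if score == 0 and number not in picked:
            picked.append(number)
    return picked


verdicts = [{"content": 1, "score": 1}, {"content": 2, "score": 0},
            {"content": "Content 3", "score": 0}, {"content": 6, "score": 0}]
print(pick_relevant(verdicts, n_contexts=5))  # n_contexts matches k=5 in the retriever
```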

Response Synthesis Agent

# Meaningful Context for Response Synthesis Agent

context_prompt = PromptTemplate(

input_variables=["context_number", "context"],

template="""

Your main task is to analyze the JSON structure in the Context Number Response together with the list of contexts provided in the 'Content List', and to perform the following steps:

(1) Look at the output from the Relevant Context Picker Agent.

(2) Analyze the 'content' key in the JSON structure format ({{"content":<<content_number>>}}).

(3) Retrieve the value of the 'content' key and pick the context corresponding to that element from the Content List provided.

(4) Return the retrieved context for each corresponding element number referred to in the 'Context Number Response'.

Context Number Response:

{context_number}

Content List:

{context}

Provide your verdict in JSON format with two keys, 'relevant_content' and 'context_number', and no preamble or explanation:

[{{"context_number":<content1>,"relevant_content":<content corresponding to element 1 in the Content List>}},

{{"context_number":<content4>,"relevant_content":<content corresponding to element 4 in the Content List>}},

...

]

"""

)
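Likewise, mapping content numbers back to the retrieved texts is plain list indexing; the content numbers are 1-based while Python lists are 0-based. A minimal sketch, again an alternative to the LLM-based agent rather than the original approach; `select_contexts` is a hypothetical name and the context strings are made up.

```python
def select_contexts(numbers: list[int], contexts: list[str]) -> list[dict]:
    """Map 1-based content numbers to retrieved context strings, skipping invalid numbers."""
    return [
        {"context_number": n, "relevant_content": contexts[n - 1]}  # n is 1-based
        for n in numbers
        if 1 <= n <= len(contexts)
    ]


retrieved = ["Fig. 6: workshop feedback", "RAG combines retrieval and generation", "Setup steps"]
selected = select_contexts([2], retrieved)
print(selected)
# The filtered text that a final answer step could use
print("\n\n".join(item["relevant_content"] for item in selected))
```

Done this way, the selected text is copied verbatim from the retriever's output, so it cannot be paraphrased, duplicated, or invented by the model.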

Create the chains: define an LLM chain for each agent

from langchain.chains import SequentialChain, LLMChain

#

context_relevancy_evaluation_chain = LLMChain(llm=llm_judge, prompt=context_relevancy_checker_prompt, output_key="relevancy_response")

#

pick_relevant_context_chain = LLMChain(llm=llm_judge, prompt=relevant_prompt, output_key="context_number")

#

relevant_contexts_chain = LLMChain(llm=llm_judge, prompt=context_prompt, output_key="relevant_contexts")

#

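# final_prompt wraps rag_prompt, which is defined in the first query step below; define rag_prompt before running this cell.
# Note: rag_prompt fills {context} with the raw retrieved chunks, not the filtered relevant_contexts.
final_prompt = PromptTemplate(input_variables=["query", "context"], template=rag_prompt)

response_chain = LLMChain(llm=rag_llm, prompt=final_prompt, output_key="final_response")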

Orchestrate with LangChain SequentialChain

context_management_chain = SequentialChain(

chains=[context_relevancy_evaluation_chain ,pick_relevant_context_chain, relevant_contexts_chain,response_chain],

input_variables=["context","retriever_query","query"],

output_variables=["relevancy_response", "context_number","relevant_contexts","final_response"]

)
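`SequentialChain` passes data between chains by key: each chain reads its input variables from the values accumulated so far and adds its `output_key`, which later chains can consume. Below is a conceptual plain-Python model of that key passing (not LangChain's implementation), with toy lambdas standing in for the four LLM chains; `run_sequential` is a hypothetical name.

```python
def run_sequential(steps, inputs: dict) -> dict:
    """steps: (input_keys, output_key, fn) triples run in order over a shared state dict."""
    state = dict(inputs)
    for input_keys, output_key, fn in steps:
        missing = [key for key in input_keys if key not in state]
        if missing:
            raise KeyError(f"step producing {output_key!r} is missing inputs {missing}")
        state[output_key] = fn(*(state[key] for key in input_keys))
    return state


steps = [
    # Toy judge: score 0 (relevant) if the chunk mentions the topic word
    (["context", "retriever_query"], "relevancy_response",
     lambda ctx, q: [0 if "RAG" in c else 1 for c in ctx]),
    (["relevancy_response"], "context_number",
     lambda scores: [i + 1 for i, s in enumerate(scores) if s == 0]),
    (["context_number", "context"], "relevant_contexts",
     lambda nums, ctx: [ctx[n - 1] for n in nums]),
    (["query", "relevant_contexts"], "final_response",
     lambda q, rc: f"{q} -> {' | '.join(rc)}"),
]
inputs = {"context": ["RAG = retrieval + generation", "Groq builds LPUs"],
          "retriever_query": "What is RAG?", "query": "What is RAG?"}
result = run_sequential(steps, inputs)
print(result["final_response"])
```

In this toy version the last step reads `relevant_contexts`. If the answer prompt instead reads `{context}`, as `rag_prompt` does, the final answer still sees every retrieved chunk, and the filtering only shows up in the intermediate outputs.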

Query and context 1

contexts = retriver.invoke("What is RAG?")

context =[d.page_content for d in contexts]

rag_prompt = """ You are a helpful assistant, very proficient in formulating clear and meaningful answers from the context provided. Based on the CONTEXT provided, please formulate

a clear, concise and meaningful answer for the QUERY asked. Please refrain from making up your own answer in case the CONTEXT

provided is not sufficient to answer the QUERY. In such a situation, please respond with 'I do not know'.

QUERY:

{query}

CONTEXT

{context}

ANSWER:

"""

query = "What is RAG?"

Invoke the agent orchestration

final_output = context_management_chain.invoke({"context": context, "retriever_query": query, "query": query})

Response

# Print the first response (Context Relevancy Checker Agent)

print("\n-------- ? context_relevancy_evaluation_chain Statement ? --------\n")

print(final_output["relevancy_response"])

# Print the second response (Relevant Context Picker Agent)

print("\n-------- ? pick_relevant_context_chain Statement ? --------\n")

print(final_output["context_number"])

print("\n-------- ? relevant_contexts_chain Statement ? --------\n")

print(final_output["relevant_contexts"])

print("\n-------- ? Rag Response Statement ? --------\n")

print(final_output["final_response"])

-------- ? context_relevancy_evaluation_chain Statement ? --------

<think>

Okay, so I need to evaluate each content in the provided list to see if they sufficiently answer the query "What is RAG?" without introducing new information or misattributing details. Let me go through each content one by one.

Starting with content 1: It's a figure title about the most valuable aspects of a workshop on RAG systems. It doesn't explain what RAG is, just mentions implementation. So, it doesn't answer the question.

Content 2: This one talks about enhancing the model's ability to respond and mentions the architecture of RAG systems. It refers to a figure but doesn't define RAG itself. So, it's not sufficient.

Content 3: Discusses participants' familiarity with RAG and their understanding improvement. It doesn't explain what RAG is, just how participants interacted with it. Not sufficient.

Content 4: This content seems more detailed. It talks about the design incorporating feedback, real-time retrieval capabilities, and integration between retrieval and generation. It also mentions the foundation for a RAG system and its applications. While it explains some aspects, it doesn't directly define what RAG is. So, still not sufficient on its own.

Content 5: This is about setting up a development environment for RAG. It's more about the setup process and tools, not defining RAG itself. So, it doesn't answer the query.

Content 6: This part explains that RAG models provide solutions by pulling real-time data and mentions their ability to explain and trace answers. It also talks about the guide developed and tested in a workshop, integrating RAG into workflows. This content does explain what RAG is by describing its function and purpose. It mentions that RAG models help address real-world challenges with dynamic data. So, this one does provide a sufficient explanation of what RAG is.

Content 7: This is about future trends and tools like Haystack and Elasticsearch. It explains how these tools enhance RAG models but doesn't define RAG itself. So, it doesn't answer the query.

So, only content 6 provides enough information to define RAG, while the others don't. Therefore, I'll score them accordingly.

</think>

```json

[{"content":1,"score":1,"Reasoning":"Content 1 only mentions implementation of RAG systems without defining what RAG is."},

{"content":2,"score":1,"Reasoning":"Content 2 refers to the architecture but does not define RAG."},

{"content":3,"score":1,"Reasoning":"Content 3 discusses participants' understanding but doesn't explain RAG."},

{"content":4,"score":1,"Reasoning":"Content 4 details design aspects but doesn't define RAG."},

{"content":5,"score":1,"Reasoning":"Content 5 is about setup, not defining RAG."},

{"content":6,"score":0,"Reasoning":"Content 6 explains RAG's function and purpose, providing a sufficient definition."},

{"content":7,"score":1,"Reasoning":"Content 7 discusses future trends without defining RAG."}]

```

-------- ? pick_relevant_context_chain Statement ? --------

<think>

Alright, I need to figure out which content number has a score of 0 in the provided JSON structure. The JSON is an array of objects, each with "content", "score", and "Reasoning" keys.

First, I'll look through each object to find where the "score" is 0. I'll start with the first object: content 1 has a score of 1, so that's not it. Next, content 2 also has a score of 1. Moving on, content 3 and 4 both have a score of 1. Content 5 has a score of 1 as well. Then, I check content 6 and see that its score is 0. Finally, content 7 has a score of 1.

So, the only content with a score of 0 is content 6. Therefore, the answer is content number 6.

</think>

{"content":6}

-------- ? relevant_contexts_chain Statement ? --------

<think>

Okay, so I have this JSON structure and a list of contexts. I need to figure out which content number corresponds to each element in the JSON. Let me start by understanding the JSON. It looks like it's an array of objects, each with "content", "score", and "Reasoning".

First, I need to extract the "content" numbers from each object. The first object has "content":1, the second "content":2, and so on up to "content":7. So, I need to look at each of these content numbers and find the corresponding context in the Content List provided.

Looking at the Content List, it's an array with 7 elements. Each element corresponds to a content number from 1 to 7. So, content 1 is the first element in the list, content 2 is the second, etc.

Now, I need to map each "content" number from the JSON to the correct context in the Content List. For example, the first object in the JSON has "content":1, so I take the first element from the Content List. The second object has "content":2, so I take the second element, and so on.

I'll go through each object in the JSON and retrieve the corresponding context. It's important to make sure I'm matching the correct content number to the right context. I should double-check each one to avoid mistakes.

Once I've mapped all the content numbers, I'll structure the result as a JSON array with objects containing "context_number" and "relevant_content". Each object will have the content number and the corresponding context from the list.

I think that's all I need to do. Now, I'll put it all together into the required JSON format.

</think>

```json

[

{"context_number":1,"relevant_content":"Fig.6: Most Valuable Aspects of the Workshop. Implementation of RAG systems."},

{"context_number":2,"relevant_content":"Fig.1: Architecture of Retrieval Augmented Generation(RAG) system. 2."},

{"context_number":3,"relevant_content":"Fig.4: Participants’ Familiarity with RAG Systems. Priortoattendingtheworkshop,themajorityofparticipantsreportedarea-\nsonable level of familiarity with RAG systems.This indicated that the audience\nhadafoundationalunderstandingoftheconceptspresented,allowingformorein\ndepthdiscussionsduringtheworkshop.Aftertheworkshop,therewasanotable\nimprovement in participants’ understanding of RAG systems. Fig.5: Participants’ Improvement in Understanding RAG Systems. Themajorityofparticipantshighlightedthepracticalcodingexercisesasthe\nmostvaluableaspectoftheworkshop,whichhelpedthembetterunderstandthe\n31\n"},

{"context_number":4,"relevant_content":"The design also incorporates the feedback from a diverse group of partici-\npantsduringaworkshopsession,whichfocusedonthepracticalaspectsofimple-\nmenting RAG systems. Their input highlighted the effectiveness of the system’s\nreal-timeretrievalcapabilities,particularlyinknowledge-intensivedomains,and\nunderscored the importance of refining the integration between retrieval and\ngeneration to enhance the transparency and reliability of the system’s outputs. This design sets the foundation for a RAG system capable of addressing the\nneeds of domains requiring precise, up-to-date information. 4 Results: Step-by-Step Guide to RAG\n4.1 Setting Up the Environment\nThis section walks you through the steps required to set up a development\nenvironmentforRetrievalAugmentedGeneration(RAG)onyourlocalmachine. We will cover the installation of Python, setting up a virtual environment and\nconfiguring an IDE (VSCode)."},

{"context_number":5,"relevant_content":"RAG\nmodels provide practical solutions with pulling in real time data from provided\nsources. The ability to explain and trace how RAG models reach their answers\nalsobuildstrustwhereaccountabilityanddecisionmakingbasedonrealevidence\nis important. Inthispaper,wedevelopedaRAGguidethatwetestedinaworkshopsetting,\nwhere participants set up and deployed RAG systems following the approaches\nmentioned. This contribution is practical, as it helps practitioners implement\nRAG models to address real world challenges with dynamic data and improved\naccuracy.Theguideprovidesusersclear,actionablestepstointegrateRAGinto\ntheir workflows, contributing to the growing toolkit of AI driven solutions. With that, RAG also opens new research avenues that can shape the future\nofAIandNLPtechnologies.Asthesemodelsandtoolsimprove,therearemany\npotentialareasforgrowth,suchasfindingbetterwaystosearchforinformation,\nadapting to new data automatically, and handling more than just text (like\nimages or audio). Recent advancements in tools and technologies have further\naccelerated the development and deployment of RAG models. As RAG models\ncontinue to evolve, several emerging trends are shaping the future of this field. 1. Haystack: An open-source framework that integrates dense and sparse re-\ntrieval methods with large-scale language models. Haystack supports real-\ntime search applications and can be used to develop RAG models that per-\nform tasks such as document retrieval, question answering, and summariza-\ntion [4]. 2. Elasticsearch with Vector Search: Enhanced support for dense vector\nsearch capabilities, allowing RAG models to perform more sophisticated re-\ntrieval tasks. Elasticsearch’s integration with frameworks like Faiss enables\n33\n"},

{"context_number":6,"relevant_content":"The design also incorporates the feedback from a diverse group of partici-\npantsduringaworkshopsession,whichfocusedonthepracticalaspectsofimple-\nmenting RAG systems. Their input highlighted the effectiveness of the system’s\nreal-timeretrievalcapabilities,particularlyinknowledge-intensivedomains,and\nunderscored the importance of refining the integration between retrieval and\ngeneration to enhance the transparency and reliability of the system’s outputs. This design sets the foundation for a RAG system capable of addressing the\nneeds of domains requiring precise, up-to-date information. 4 Results: Step-by-Step Guide to RAG\n4.1 Setting Up the Environment\nThis section walks you through the steps required to set up a development\nenvironmentforRetrievalAugmentedGeneration(RAG)onyourlocalmachine. We will cover the installation of Python, setting up a virtual environment and\nconfiguring an IDE (VSCode)."},

{"context_number":7,"relevant_content":"RAG\nmodels provide practical solutions with pulling in real time data from provided\nsources. The ability to explain and trace how RAG models reach their answers\nalsobuildstrustwhereaccountabilityanddecisionmakingbasedonrealevidence\nis important. Inthispaper,wedevelopedaRAGguidethatwetestedinaworkshopsetting,\nwhere participants set up and deployed RAG systems following the approaches\nmentioned. This contribution is practical, as it helps practitioners implement\nRAG models to address real world challenges with dynamic data and improved\naccuracy.Theguideprovidesusersclear,actionablestepstointegrateRAGinto\ntheir workflows, contributing to the growing toolkit of AI driven solutions. With that, RAG also opens new research avenues that can shape the future\nofAIandNLPtechnologies.Asthesemodelsandtoolsimprove,therearemany\npotentialareasforgrowth,suchasfindingbetterwaystosearchforinformation,\nadapting to new data automatically, and handling more than just text (like\nimages or audio). Recent advancements in tools and technologies have further\naccelerated the development and deployment of RAG models. As RAG models\ncontinue to evolve, several emerging trends are shaping the future of this field. 1. Haystack: An open-source framework that integrates dense and sparse re-\ntrieval methods with large-scale language models. Haystack supports real-\ntime search applications and can be used to develop RAG models that per-\nform tasks such as document retrieval, question answering, and summariza-\ntion [4]. 2. Elasticsearch with Vector Search: Enhanced support for dense vector\nsearch capabilities, allowing RAG models to perform more sophisticated re-\ntrieval tasks. Elasticsearch’s integration with frameworks like Faiss enables\n33\n"}

]

-------- ? Rag Response Statement ? --------

RAG stands for Retrieval Augmented Generation. It is a system that combines real-time data retrieval with large-scale language models to provide practical solutions for addressing challenges with dynamic data and improved accuracy. RAG models are capable of explaining and tracing how they reach their answers, which builds trust in scenarios where accountability and decision-making based on real evidence is important. The design of RAG systems can be refined based on feedback to enhance their transparency, reliability, and real-time retrieval capabilities, particularly in knowledge-intensive domains. Recent advancements in tools and technologies have accelerated the development and deployment of RAG models, and emerging trends continue to shape the future of this field.

Query and context 2

contexts = retriver.invoke("What are the key steps that a RAG Process is structured into?")

context = [d.page_content for d in contexts]

query = "What are the key steps that a RAG Process is structured into?"

retriever_query = query

Invoke the agent orchestration

final_output = context_management_chain.invoke({"context": context, "retriever_query": query, "query": query})

Response

# Print the first response (Context Relevancy Checker Agent)

print("\n-------- ? context_relevancy_evaluation_chain Statement ? --------\n")

print(final_output["relevancy_response"])

# Print the second response (Relevant Context Picker Agent)

print("\n-------- ? pick_relevant_context_chain Statement ? --------\n")

print(final_output["context_number"])

print("\n-------- ? relevant_contexts_chain Statement ? --------\n")

print(final_output["relevant_contexts"])

print("\n-------- ? Rag Response Statement ? --------\n")

print(final_output["final_response"])

-------- ? context_relevancy_evaluation_chain Statement ? --------

<think>

Okay, I need to evaluate each content in the provided CONTEXT LIST to determine if it contains enough information to answer the query: "What are the key steps that a RAG Process is structured into?"

First, I'll look at each content piece one by one.

Content 1: This is about the most valuable aspects of a workshop on RAG systems. It mentions feedback from participants and the effectiveness of real-time retrieval but doesn't outline any steps of the RAG process itself. So, it's not relevant.

Content 2: Discusses the design incorporating feedback, effectiveness of real-time retrieval, and the integration between retrieval and generation. It also talks about setting up a development environment, like installing Python and VSCode, which are setup steps but not the key steps of the RAG process. So, partially relevant but not enough.

Content 3: Covers participants' familiarity and improvement in understanding RAG systems. It mentions practical coding exercises but doesn't detail the RAG process steps. Not relevant.

Content 4: Talks about enhancing the model's ability to respond and mentions real-time data pulling. It also briefly mentions the architecture of RAG but doesn't break it down into steps. Not sufficient.

Content 5: This seems more detailed. It explains that RAG models provide practical solutions by pulling real-time data and mentions integrating RAG into workflows. It also talks about tools like Haystack and Elasticsearch with vector search. However, it doesn't explicitly list the key steps of the RAG process. It's more about applications and tools rather than the structured steps.

After reviewing all, none of the contents provide a clear, step-by-step structure of the RAG process. They discuss aspects like setup, tools, and applications but don't outline the key steps. Therefore, each content doesn't have the necessary information to answer the query.

</think>

{"score":1}

-------- ? pick_relevant_context_chain Statement ? --------

<think>

Alright, I need to determine which content from the provided list has a 'score' of 0 and then extract the 'content' value from that entry.

Looking at the JSON response, I see an array of objects. Each object has a 'content' and a 'score'. I'll go through each one:

1. The first object has 'content': 'Content 1' and 'score': 0. This is the one I'm interested in.

2. The second object has 'score': 1, so I skip it.

3. There's only two objects in the array, so I stop here.

The content with a score of 0 is 'Content 1'.

</think>

{"content": "Content 1"}

-------- ? relevant_contexts_chain Statement ? --------

<think>

Okay, let's tackle this problem step by step. I need to analyze the JSON structure provided and the list of contexts to determine the relevant content for each context number specified in the 'Context Number Response'.

First, I'll look at the 'Context Number Response' which is a JSON array containing objects with 'context_number' and 'score'. My task is to find the entries where the 'score' is 0 and extract the corresponding 'content' from the 'Content List'.

Looking at the 'Context Number Response', I see two objects:

1. The first object has 'context_number': 1 and 'score': 0.

2. The second object has 'context_number': 4 and 'score': 1.

Since I'm only interested in entries with a 'score' of 0, I'll focus on the first object where 'context_number' is 1.

Next, I'll refer to the 'Content List' provided. The list has five elements, each corresponding to a context number from 1 to 5. I need to find the content for context number 1.

Looking at the 'Content List', the first element (index 0) is "Fig.6: Most Valuable Aspects of the Workshop. Implementation of RAG systems." This corresponds to context number 1.

Therefore, the relevant content for context number 1 is "Fig.6: Most Valuable Aspects of the Workshop. Implementation of RAG systems."

Now, I'll format the result as a JSON array with two keys: 'context_number' and 'relevant_content' for each relevant entry. Since only context number 1 has a score of 0, I'll include only that in the result.

So, the final JSON output should be:

[{"context_number":1,"relevant_content":"Fig.6: Most Valuable Aspects of the Workshop. Implementation of RAG systems."}]

</think>

```json
[
  {
    "context_number": 1,
    "relevant_content": "Fig.6: Most Valuable Aspects of the Workshop. Implementation of RAG systems."
  }
]
```

-------- ? Rag Response Statement ? --------

Based on the provided context, the RAG (Retrieval Augmented Generation) process seems to be structured into the following key steps:

1. Setting Up the Environment: This involves installing Python, setting up a virtual environment, and configuring an Integrated Development Environment (IDE), such as VSCode.

2. Implementing the RAG System: This involves refining the integration between retrieval and generation to enhance the transparency and reliability of the system's outputs. The system's real-time retrieval capabilities are particularly effective in knowledge-intensive domains.

3. Enhancing the Model's Ability to Respond: This can be achieved through practical coding exercises, which help users better understand the RAG system.

4. Integrating RAG into Workflows: The RAG guide provides clear and actionable steps to help users integrate RAG into their workflows, contributing to the growing toolkit of AI-driven solutions.

Additionally, there are emerging trends and tools shaping the future of RAG, such as Haystack, an open-source framework that integrates dense and sparse retrieval methods with large-scale language models, and Elasticsearch with Vector Search, which enhances support for dense vector search capabilities.
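The final RAG response is produced by sending the original query together with the selected contexts to the LLM. In this first run, the relevancy check judged none of the five contexts sufficient (`{"score":1}`), yet pick_relevant_context_chain still returned Content 1 and an answer was generated from weak context. The sketch below shows one way to assemble the final prompt and to stop early when no context survives validation. `build_rag_prompt`, the template wording and the `None` fallback are hypothetical choices for illustration, not the article's actual prompt.

```python
from typing import List, Optional

# Hypothetical template; the article's real prompt wording is not reproduced here.
PROMPT_TEMPLATE = (
    "Answer the question using only the context below.\n"
    "If the context does not contain the answer, say so.\n\n"
    "Context:\n{context}\n\n"
    "Question: {query}\n"
    "Answer:"
)


def build_rag_prompt(query: str, relevant_contexts: List[str]) -> Optional[str]:
    """Join the validated chunks into one prompt, or return None if none survived."""
    cleaned = [c.strip() for c in relevant_contexts if c and c.strip()]
    if not cleaned:
        # Nothing passed the relevancy check: declining is safer than letting
        # the model answer from its parametric memory alone.
        return None
    # Number the chunks so the answer can refer back to its sources.
    numbered = "\n".join(f"[{i}] {text}" for i, text in enumerate(cleaned, start=1))
    return PROMPT_TEMPLATE.format(context=numbered, query=query)


print(build_rag_prompt(
    "What are the drawbacks of RAG approach?",
    ["RAG requires complex infrastructure, including vector databases."],
))
print(build_rag_prompt("What are the drawbacks of RAG approach?", []))  # None
```

Returning `None` rather than an empty-context prompt forces the caller to decide explicitly what to show the user when retrieval found nothing usable.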

Query and Context - 3

```python
query = "What are the drawbacks of RAG approach?"
retriever_query = query

# Retrieve candidate chunks for the query and keep only their text
contexts = retriver.invoke(query)
context = [d.page_content for d in contexts]
```
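Conceptually, a vector-store retriever such as `retriver` embeds the query and returns the chunks whose embeddings are closest to it, typically by cosine similarity. The sketch below shows only that ranking step in plain Python with tiny hand-made vectors. The helper names, the three-dimensional toy vectors and `k=2` are illustrative assumptions; real embeddings would come from ModernBERT, and the vector store's own search is usually approximate and far more optimized.

```python
import math
from typing import List


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors: dot(a, b) / (|a| * |b|)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction; treat it as unrelated
    return dot / (norm_a * norm_b)


def top_k_chunks(query_vec, chunk_vecs, chunks, k=2):
    """Return the k chunks most similar to the query, best first (hypothetical helper)."""
    scored = [
        (cosine_similarity(query_vec, vec), chunk)
        for vec, chunk in zip(chunk_vecs, chunks)
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for _, chunk in scored[:k]]


# Toy 3-dimensional "embeddings"; real ones come from the embedding model.
chunks = ["RAG drawbacks", "PDF parsing tips", "RAG infrastructure costs"]
chunk_vecs = [[0.9, 0.1, 0.0], [0.0, 0.2, 0.9], [0.8, 0.3, 0.1]]
query_vec = [1.0, 0.2, 0.0]

print(top_k_chunks(query_vec, chunk_vecs, chunks, k=2))
# ['RAG drawbacks', 'RAG infrastructure costs']
```

Because cosine similarity only measures direction, a chunk can rank highly while being off-topic for the actual question, which is exactly why the article adds LLM-based relevancy checks after retrieval.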

Invoke the agent orchestration

```python
final_output = context_management_chain(
    {"context": context, "retriever_query": query, "query": query}
)
```

Response

```python
# Print the first response (RAG Relevant Context Picker Agent: relevancy scores)
print("\n-------- ? context_relevancy_evaluation_chain Statement ? --------\n")
print(final_output["relevancy_response"])

# Print the context numbers chosen by pick_relevant_context_chain
print("\n-------- ? pick_relevant_context_chain Statement ? --------\n")
print(final_output["context_number"])

# Print the text of the contexts that were kept
print("\n-------- ? relevant_contexts_chain Statement ? --------\n")
print(final_output["relevant_contexts"])

# Print the final RAG answer
print("\n-------- ? Rag Response Statement ? --------\n")
print(final_output["final_response"])
```

-------- ? context_relevancy_evaluation_chain Statement ? --------

<think>

Okay, I need to evaluate each context in the CONTENT LIST to see if it provides sufficient information to answer the query: "What are the drawbacks of RAG approach?"

The query is asking specifically about the drawbacks, so I'm looking for any mention of cons, limitations, or disadvantages related to RAG.

Looking at the first content: It talks about the most valuable aspects of a workshop and implementation of RAG systems. It doesn't mention any drawbacks, so this one doesn't help. I'll score it 1.

The second content discusses a decision framework comparing Fine-Tuning, RAG, and Base Models. It lists drawbacks of RAG, such as limited performance on domain-specific queries needing customization. This directly addresses the query, so it gets a 0.

The third content is about the role of PDFs in RAG, highlighting their importance. No drawbacks mentioned here, so score 1.

The fourth content describes RAG models, their benefits, and some future trends. It mentions that RAG requires complex infrastructure and is resource-intensive, which are drawbacks. So, this gets a 0.

The fifth content talks about customer support and RAG's ability to handle dynamic info. It doesn't mention any drawbacks, so score 1.

</think>

{"score":0}

{"score":0}

{"score":1}

{"score":0}

{"score":1}

Wait, but the user instructed to provide the response in JSON format with each content evaluated. Let me correct that.

Actually, the correct approach is to evaluate each content in the list and assign a score of 0 or 1 based on whether it contains the necessary information.

Here's the step-by-step evaluation:

1. Content 1: No mention of drawbacks. Score 1.

2. Content 2: Explicitly lists drawbacks. Score 0.

3. Content 3: Talks about PDFs, no drawbacks. Score 1.

4. Content 4: Discusses infrastructure needs and resource intensity. Score 0.

5. Content 5: Use case examples, no drawbacks. Score 1.

So the final JSON should reflect each content's score individually.

```json
[
  {"content": "1", "score": 1, "Reasoning": "Does not mention drawbacks of RAG."},
  {"content": "2", "score": 0, "Reasoning": "Explicitly lists drawbacks of RAG."},
  {"content": "3", "score": 1, "Reasoning": "Focuses on PDFs, no drawbacks mentioned."},
  {"content": "4", "score": 0, "Reasoning": "Discusses RAG's infrastructure and resource requirements as drawbacks."},
  {"content": "5", "score": 1, "Reasoning": "Describes use cases without mentioning drawbacks."}
]
```
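DeepSeek-R1 prefixes every answer with a `<think>…</think>` reasoning block, and, as this log shows, the JSON after it is not always in the requested shape: here the model first emitted five bare objects and then a corrected, fenced JSON array. A small post-processing step before the next chain consumes the output can absorb both shapes. The sketch below is one possible approach using only the standard library; `parse_r1_json` is a hypothetical helper, not part of LangChain or the Groq client, and "use the last fenced block" is an assumption that a self-corrected answer comes last.

```python
import json
import re

FENCE = "`" * 3  # built this way so the pattern cannot end a Markdown code block
FENCED_BLOCK = re.compile(FENCE + r"(?:json)?\s*(.*?)" + FENCE, re.DOTALL)
THINK_BLOCK = re.compile(r"<think>.*?</think>", re.DOTALL)


def parse_r1_json(raw: str) -> list:
    """Return the JSON objects in an R1 reply, ignoring its <think> block.

    Handles bare objects one after another as well as a fenced JSON array;
    if several fenced blocks exist, the last one is used.
    """
    text = THINK_BLOCK.sub("", raw)
    fenced = FENCED_BLOCK.findall(text)
    if fenced:
        text = fenced[-1]

    decoder = json.JSONDecoder()
    objects = []
    pos = 0
    while True:
        # Find the next place a JSON object or array could start.
        candidates = [i for i in (text.find("{", pos), text.find("[", pos)) if i != -1]
        if not candidates:
            break
        start = min(candidates)
        try:
            value, pos = decoder.raw_decode(text, start)
        except json.JSONDecodeError:
            pos = start + 1  # not valid JSON at this bracket; keep scanning
            continue
        if isinstance(value, dict):
            objects.append(value)
        elif isinstance(value, list):
            objects.extend(v for v in value if isinstance(v, dict))
    return objects


reply = '<think>\nScoring each content...\n</think>\n\n{"content": "2"}\n\n{"content": "4"}'
print(parse_r1_json(reply))  # [{'content': '2'}, {'content': '4'}]
```

Groq's API may also offer server-side options for separating R1's reasoning from its answer; check its current documentation before relying on client-side stripping alone.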

-------- ? pick_relevant_context_chain Statement ? --------

<think>

Alright, I need to help the user by analyzing the JSON structure they provided. Their main goal is to evaluate each content item to see if it addresses the drawbacks of the RAG approach.

First, I'll look at each content in the list:

1. Content 1 talks about valuable aspects and implementation of RAG systems. No drawbacks mentioned here, so I'll score it 1.

2. Content 2 discusses a decision framework comparing different models and explicitly lists drawbacks of RAG. This is relevant, so I'll score it 0.

3. Content 3 focuses on the role of PDFs in RAG. No drawbacks here, so score 1.

4. Content 4 mentions the need for complex infrastructure and resources as drawbacks. Score 0.

5. Content 5 is about customer support and handling dynamic info. No drawbacks mentioned, so score 1.

Now, I need to pick the 'content' key where the 'score' is 0. That means I'm looking for content numbers 2 and 4.

I should present this in the required JSON format without any extra explanation, just the content numbers with scores 0.

</think>

{"content": "2"}

{"content": "4"}

-------- ? relevant_contexts_chain Statement ? --------

<think>

Alright, I need to help the user by evaluating each content item to determine if it addresses the drawbacks of the RAG approach.

Looking at the Context Number Response, the user is asking for content numbers 1, 4, and 5.

1. **Content 1**: This talks about the valuable aspects and implementation of RAG systems. It doesn't mention any drawbacks, so it's not relevant.

2. **Content 4**: This explicitly lists the drawbacks of RAG, such as requiring complex infrastructure and being resource-intensive. This is relevant.

3. **Content 5**: This focuses on base models and when to use them, not discussing RAG's drawbacks. Not relevant.

So, only content 4 is relevant. I'll format the response as a JSON array with the relevant content number and its corresponding content from the list.

</think>

```json
[
  {
    "context_number": 4,
    "relevant_content": "Drawbacks:RAGrequirescomplexinfrastructure,includingvectordatabases\nand effective retrieval pipelines, and can be resource-intensive during inference."
  }
]
```

-------- ? Rag Response Statement ? --------

The drawbacks of the Retrieval-Augmented Generation (RAG) approach include:

1. Limited performance on domain-specific queries or tasks that need high levels of customization.

2. Requirement of complex infrastructure, such as vector databases and effective retrieval pipelines, which can be resource-intensive during inference.

These drawbacks make RAG less suitable for applications that require broad generalization, low-cost deployment, or rapid prototyping. In such cases, base models without fine-tuning or RAG are more appropriate.
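This run also shows the risk of letting an LLM perform purely mechanical steps: pick_relevant_context_chain returned contents 2 and 4, but relevant_contexts_chain then reasoned about content numbers 1, 4 and 5 and kept only 4. Selecting the chunks whose score is 0 can be done in ordinary code instead. The following is a minimal sketch, assuming the scores have already been parsed into dictionaries shaped like the relevancy output above (`content` as a 1-based number in a string, `score` 0 for relevant); `pick_relevant_contexts` is a hypothetical name, and its output mirrors the `context_number` / `relevant_content` shape used by relevant_contexts_chain.

```python
from typing import Dict, List


def pick_relevant_contexts(scores: List[Dict], chunks: List[str]) -> List[Dict]:
    """Keep the chunks scored 0 (relevant), addressed by 1-based content number."""
    picked = []
    for entry in scores:
        try:
            number = int(entry["content"])
            score = int(entry["score"])
        except (KeyError, TypeError, ValueError):
            continue  # malformed entry from the LLM; skip it instead of guessing
        if score == 0 and 1 <= number <= len(chunks):
            picked.append(
                {"context_number": number, "relevant_content": chunks[number - 1]}
            )
    return picked


scores = [
    {"content": "1", "score": 1},
    {"content": "2", "score": 0},
    {"content": "3", "score": 1},
    {"content": "4", "score": 0},
    {"content": "5", "score": 1},
]
chunks = ["ctx 1", "ctx 2", "ctx 3", "ctx 4", "ctx 5"]
print([p["context_number"] for p in pick_relevant_contexts(scores, chunks)])  # [2, 4]
```

Keeping the LLM for the judgement (is this chunk relevant?) and plain code for the bookkeeping (which chunks had score 0?) removes one source of hallucination from the pipeline.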

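Conclusion

The implemented Retrieval-Augmented Generation (RAG) pipeline integrates state-of-the-art tools into a robust framework for building context-aware AI systems:

1. Efficient embeddings: ModernBERT (nomic-ai/modernbert-embed-base) drives semantic chunking, which segments documents precisely while balancing context preservation against computational cost.

2. Speed-optimized inference: Groq's LPU, running mixtral-8x7b and deepseek-r1-distill-llama-70b, delivers low-latency response generation, which is critical for real-time applications such as chatbots or code assistants.

3. Agentic workflow: a SequentialChain orchestrates a multi-step validation process:

   - A relevancy checker filters out irrelevant contexts (reducing hallucinations).

   - A context picker dynamically selects the best text chunks.

   - A response synthesizer generates the answer from the validated data.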
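The three stages can be pictured as functions that each read keys from a shared state and add their own, which is how context_management_chain exposes `relevancy_response`, `context_number`, `relevant_contexts` and `final_response` to the print statements earlier. The sketch below mimics only that data flow in plain Python. It is not LangChain's SequentialChain; the stage bodies are hypothetical stand-ins for the Groq-backed LLM calls, and the word-overlap test in `check_relevancy` is a crude placeholder for the LLM's relevancy judgement.

```python
import re
from typing import Any, Callable, Dict, List

State = Dict[str, Any]
Stage = Callable[[State], State]


def _content_words(text: str) -> set:
    """Lower-cased words of 6+ letters; a crude stand-in for semantic matching."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if len(w) >= 6}


def check_relevancy(state: State) -> State:
    """Stage 1 (stub for the LLM relevancy check): score 0 = relevant, 1 = not."""
    query_words = _content_words(state["query"])
    scores = [
        {"content": str(i), "score": 0 if query_words & _content_words(ctx) else 1}
        for i, ctx in enumerate(state["context"], start=1)
    ]
    return {"relevancy_response": scores}


def pick_contexts(state: State) -> State:
    """Stage 2: keep the chunks whose score is 0."""
    numbers = [int(s["content"]) for s in state["relevancy_response"] if s["score"] == 0]
    picked = [
        {"context_number": n, "relevant_content": state["context"][n - 1]}
        for n in numbers
    ]
    return {"context_number": numbers, "relevant_contexts": picked}


def synthesize(state: State) -> State:
    """Stage 3 (stub for the LLM answer): uses only the validated chunks."""
    kept = [p["relevant_content"] for p in state["relevant_contexts"]]
    if not kept:
        return {"final_response": "The retrieved context does not answer this question."}
    return {"final_response": f"Answer to {state['query']!r} based on: " + " | ".join(kept)}


def run_sequential(stages: List[Stage], inputs: State) -> State:
    """Run stages in order, merging each stage's outputs into the shared state."""
    state = dict(inputs)
    for stage in stages:
        state.update(stage(state))
    return state


result = run_sequential(
    [check_relevancy, pick_contexts, synthesize],
    {
        "query": "What are the drawbacks of RAG approach?",
        "context": [
            "Workshop feedback on implementing RAG systems.",
            "Drawbacks: RAG requires complex infrastructure and vector databases.",
        ],
    },
)
print(result["context_number"])  # [2]
print(result["final_response"])
```

Because every stage only adds keys, the final state still holds each intermediate result, which is what makes the per-stage debugging printout shown above possible.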