Getting Started with Google Colab: A Quick Guide to vLLM and DeepSeek R1

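Recently I tried running DeepSeek R1 distilled onto Qwen 7B locally, without any GPU. All my CPU cores and threads were pushed to the limit, and the temperature peaked at 90 °C.

My friend asked why I wasn't using Google Colab, since it gives you a GPU for free (for 3 to 4 hours). He has been using it to parse 80+ page PDFs for a RAG application and to chain large language models (LLMs), since for now we can still "exploit" (use) Google Colab.

So I tried it and got to use a T4 GPU (the same generation as the RTX 20 series), though with some limits (I'll explain later; in short, it's because it is free). I have been using it to test vLLM, an LLM inference and serving engine, in Google Colab. I also used FastAPI and ngrok to expose the API to the public (just for testing, why not?).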

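pip (Pip Installs Packages, the Python package installer)

It lets you install and manage additional libraries and dependencies that are not part of the Python standard library. In a Jupyter notebook, prefixing a line with ! runs it as a shell command, so that is how we will install everything.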

!pip install fastapi nest-asyncio pyngrok uvicorn

!pip install vllm

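We install FastAPI, nest-asyncio, pyngrok, and Uvicorn so that a Python service can handle requests coming from outside. vLLM is the library that does the actual LLM inference and serving. Ollama is also an option, but I believe this approach is more efficient.

Now let's work with vLLM.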

# Load and run the model:
import subprocess
import time
import os

# Start the vLLM server in the background
vllm_process = subprocess.Popen([
    'vllm',
    'serve',  # Subcommand must follow vllm
    'deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B',
    '--trust-remote-code',
    '--dtype', 'half',
    '--max-model-len', '16384',  # Maximum context length: prompt tokens plus generated tokens
    # Passed with a value as in my run; some vLLM versions may expect the bare flag instead
    '--enable-chunked-prefill', 'true',
    '--tensor-parallel-size', '1'
], stdout=subprocess.PIPE, stderr=subprocess.PIPE, start_new_session=True)

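OK, here is how I load the model: I start the vLLM server in the background, because if you run vLLM directly in a Jupyter notebook cell, the cell blocks while the server runs and we cannot go on to expose it (I think there is a way, but this is what I chose). The flags are:

- trust-remote-code: allows custom model code from the model repository to be executed.

- dtype: set to half (FP16) to reduce memory use. The T4 also lacks native bfloat16 support, so FP16 is the practical choice.

- max-model-len: the maximum context length, that is, the total of input (prompt) tokens plus output (generated) tokens per request.

- enable-chunked-prefill: prefill is the phase where the prompt tokens are processed before generation starts; chunked prefill splits that phase into smaller chunks so long prompts can be scheduled alongside ongoing generation.

- tensor-parallel-size: splits the model across multiple GPUs to speed up inference. We set it to 1 because Colab gives us a single GPU.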

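With these settings we stay within what the T4 can handle, but keep in mind:

- Watch out for CUDA out-of-memory errors (we only have 15 GB of VRAM).

- Colab's GPU memory limit may force you to adjust the parameters.

- 12 GB of RAM, which should be enough... I think.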
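To get a feel for the VRAM budget, here is a rough back-of-the-envelope sketch. It only counts the model weights (no KV cache, no activations, no CUDA overhead), and the parameter counts are the nominal "1.5B" and "7B" figures, which are my assumption rather than exact checkpoint sizes. The helper name weight_memory_gib is made up for this example.

```python
def weight_memory_gib(num_params: float, bytes_per_param: int) -> float:
    """Approximate memory needed just to hold the model weights, in GiB."""
    return num_params * bytes_per_param / 1024**3

# Nominal parameter counts (assumption); real checkpoints differ slightly.
fp16_small = weight_memory_gib(1.5e9, 2)  # 1.5B model with --dtype half (2 bytes per weight)
fp32_small = weight_memory_gib(1.5e9, 4)  # same model in full precision (4 bytes per weight)
fp16_7b = weight_memory_gib(7e9, 2)       # a 7B model in half precision

print(f"1.5B @ FP16: {fp16_small:.2f} GiB")
print(f"1.5B @ FP32: {fp32_small:.2f} GiB")
print(f"7B   @ FP16: {fp16_7b:.2f} GiB")
```

By this estimate, the weights of a 7B model in FP16 alone would take most of the 15 GB before any KV cache is allocated, which is roughly why the 1.5B distill is the comfortable choice on a free T4.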

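Now run it.

Subprocess

OK, since we launched vLLM with subprocess, sent its output to pipes, and set start_new_session to True, nothing gets printed in the notebook cell. If something goes wrong, we will not see the error until we read those pipes ourselves.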

import requests

def check_vllm_status():
    """Return True if the vLLM server answers its health check."""
    try:
        response = requests.get("http://localhost:8000/health", timeout=5)
        return response.status_code == 200
    except requests.exceptions.RequestException:
        return False

try:
    # Poll until the server is ready or the process has exited
    while True:
        if check_vllm_status():
            print("The vllm server is ready to serve.")
            break
        if vllm_process.poll() is not None:
            # The process has exited: dump its output so the error is visible
            print("The vllm server has stopped.")
            stdout, stderr = vllm_process.communicate()
            print(f"STDOUT: {stdout.decode('utf-8')}")
            print(f"STDERR: {stderr.decode('utf-8')}")
            break
        print("vllm server is not running yet, waiting...")
        time.sleep(5)  # Check every 5 seconds
except KeyboardInterrupt:
    print("Stopping the check of vllm...")

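It checks every 5 seconds and, if something goes wrong, prints vLLM's standard error and standard output. Once vLLM is up and healthy, you can move on to the next block of code.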
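An alternative I did not use above, sketched here under assumptions: send the child's output to a log file instead of pipes, so you can peek at the latest lines at any time without waiting for the process to exit. The helper names start_with_log and tail_log and the log file name are made up for this example, and a tiny Python one-liner stands in for the vLLM command.

```python
import subprocess
import sys
import tempfile
from pathlib import Path

def start_with_log(cmd, log_path):
    """Start cmd in the background, sending stdout and stderr to log_path."""
    with open(log_path, "w") as log_file:
        # The child inherits its own copy of the file handle,
        # so the parent can close its copy right after launching.
        return subprocess.Popen(
            cmd,
            stdout=log_file,
            stderr=subprocess.STDOUT,
            start_new_session=True,
        )

def tail_log(log_path, n=20):
    """Return the last n lines written to the log so far."""
    lines = Path(log_path).read_text(errors="replace").splitlines()
    return lines[-n:]

# Stand-in command (assumption): replace with the real server command list.
log_path = Path(tempfile.gettempdir()) / "demo_server.log"
demo = start_with_log(
    [sys.executable, "-c", "print('starting'); print('ready')"],
    log_path,
)
demo.wait()
print(tail_log(log_path, n=1))
```

With a long-running server you would skip demo.wait() and simply call tail_log whenever you want to see what the process has printed so far.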

Creating functions to call vLLM

import json

import requests
from fastapi import FastAPI, HTTPException
from fastapi.responses import StreamingResponse
from pydantic import BaseModel

# Request schema for input
class QuestionRequest(BaseModel):
    question: str
    model: str = "deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B"  # Default model

def ask_model(question: str, model: str):
    """
    Sends a request to the model server and fetches a response.
    """
    url = "http://localhost:8000/v1/chat/completions"  # Adjust the URL if different
    headers = {"Content-Type": "application/json"}
    data = {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": question
            }
        ]
    }
    response = requests.post(url, headers=headers, json=data)
    response.raise_for_status()  # Raise exception for HTTP errors
    return response.json()

# Usage:
result = ask_model("What is the capital of France?", "deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B")
print(json.dumps(result, indent=2))

def stream_llm_response(question: str, model: str):
    """
    Streams the model's response line by line as it is generated.
    """
    url = "http://localhost:8000/v1/chat/completions"
    headers = {"Content-Type": "application/json"}
    data = {
        "model": model,
        "messages": [{"role": "user", "content": question}],
        "stream": True  # 🔥 Enable streaming
    }
    with requests.post(url, headers=headers, json=data, stream=True) as response:
        response.raise_for_status()  # Raise exception for HTTP errors
        for line in response.iter_lines():
            if line:
                # OpenAI-style streaming responses are prefixed with "data: ";
                # strip only that leading prefix so the payload text is untouched
                decoded_line = line.decode("utf-8").removeprefix("data: ")
                yield decoded_line + "\n"

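We have two functions for testing.

The ask_model function

Purpose:

Sends a request to the vLLM server and waits for the complete response.

How it works:

- Builds a POST request to http://localhost:8000/v1/chat/completions.

- Sends a JSON payload containing:

- The model name.

- The user's question (as a message).

- Waits for the response and returns it as JSON.

Key characteristics:

- Blocking call (waits until the full response has been generated).

- Raises an exception if the request fails.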
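The JSON that ask_model returns follows the OpenAI chat completion shape, with the text under choices[0].message.content, and the R1 distills wrap their reasoning in <think> tags. Here is a small sketch for separating the reasoning from the final answer. The helper name split_reasoning and the shortened sample response are made up for this example.

```python
def split_reasoning(content: str) -> tuple[str, str]:
    """Split '<think>...</think>answer' into (reasoning, answer)."""
    open_tag, close_tag = "<think>", "</think>"
    if close_tag not in content:
        # No reasoning block found: treat everything as the answer.
        return "", content.strip()
    reasoning, answer = content.split(close_tag, 1)
    reasoning = reasoning.replace(open_tag, "", 1).strip()
    return reasoning, answer.strip()

# Shortened, hand-written stand-in for a real response (assumption).
sample = {
    "choices": [
        {
            "message": {
                "role": "assistant",
                "content": "<think>\nLet me think.\n</think>\n\nParis.",
            }
        }
    ]
}

content = sample["choices"][0]["message"]["content"]
reasoning, answer = split_reasoning(content)
print("Reasoning:", reasoning)
print("Answer:", answer)
```

This keeps the raw response intact and only splits the string, so it is easy to drop if you want to show the reasoning as well.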

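The stream_llm_response function

Purpose:

Streams the response from vLLM instead of waiting for the complete output.

How it works:

- Sends a POST request with "stream": True, which enables chunked responses.

- Uses response.iter_lines() to process the response chunks as they arrive.

- Each received chunk is decoded and yielded as part of a stream.

Key characteristics:

- Incremental streaming (well suited to chatbots and interactive applications).

- Data comes back in small pieces, which reduces perceived latency.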
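Each streamed line is an OpenAI-style server-sent event: a "data: " prefix followed by a JSON chunk, with a final "data: [DONE]" marker. As a sketch of how a client could turn those lines back into text, here is a small parser. The function name extract_delta and the sample lines are hand-written for this example, not captured from a real run.

```python
import json

def extract_delta(raw_line: bytes):
    """Return the text fragment carried by one streamed line, or None."""
    line = raw_line.decode("utf-8").strip()
    if not line.startswith("data:"):
        return None
    payload = line[len("data:"):].strip()
    if payload == "[DONE]":
        # End-of-stream marker, not JSON.
        return None
    chunk = json.loads(payload)
    choices = chunk.get("choices") or []
    if not choices:
        return None
    # The first chunk usually carries only the role, so content may be missing.
    return choices[0].get("delta", {}).get("content")

# Hand-written sample lines in the OpenAI streaming format (assumption).
sample_lines = [
    b'data: {"choices": [{"index": 0, "delta": {"role": "assistant"}}]}',
    b'data: {"choices": [{"index": 0, "delta": {"content": "Par"}}]}',
    b'data: {"choices": [{"index": 0, "delta": {"content": "is"}}]}',
    b"data: [DONE]",
]

text = "".join(piece for piece in map(extract_delta, sample_lines) if piece)
print(text)
```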

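We tested it, and the output looks something like this (note that the 1.5B model's answer here is actually wrong: the capital of France is Paris):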

{
  "id": "chatcmpl-680bc07cd6de42e7a00a50dfbd99e833",
  "object": "chat.completion",
  "created": 1738129381,
  "model": "deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B",
  "choices": [
    {
      "index": 0,
      "message": {
        "role": "assistant",
"content": "<think>\nOkay, so I'm trying to find out what the capital of France is. Hmm, I remember hearing a few cities named after the myths or something. Let me think. I think Neuch portfolio is where the comma was named. Yeah, that's right, until sometimes they changed it, but I think it's still there now. Then there's Charles-de-Lorraine. I've seen that name written before in various contexts, maybe managers or something. And then I think there's Saint Mal\u25e6e as a significant city in France. Wait, I'm a bit confused about the last one. Is that the capital or somewhere else? I think the capital blew my mind once, and I still don't recall it. Let me think of the names that come to mind. Maybe Paris? But is there something else? I've heard about places likequalification, Guiness, and Agoura also named after mythological figures, but are they capitals? I don't think so. So among the prominent ones, maybe Neuch portfolio, Charles-de-Lorraine, and Saint Mal\u25e6e are the names intended for the capital, but I'm unsure which one it is. Wait, I think I might have confused some of them. Let me try to look up the actual capital. The capital of France is a city in the eastern department of\u5c55\u51fa. Oh, right, there's a special place called Place de la Confluense. Maybe that's where the capital is. So I think the capital is Place de la Confluense, not the city name. So the capital isn't the town; it's quite a vein-shaped area. But I'm a bit confused because some people might refer to just the town as the capital, but in reality, it's a larger area. So to answer the question, the capital of France is Place de la Confluense, and its formal name is la Confluense. I'm not entirely certain if there are any other significant cities or names, but from what I know, the others I listed might be historical places but not exactly capitals. Maybe the\u6bebot\u00e9 family name is still sometimes used for the capital, but I think it's not the actual name. 
So putting it all together, the capital is Place de la Confluense, and the correct name is \"la Confluense.\" The other names like Neuch portfolio are places, not capitals. So, overall, my answer would be the capital is la Confluense named at Place de la Confluense.\n</think>\n\nThe capital of France is called Place de la Confluense. Its official name is \"la Confluense.\"",

"tool_calls": []

      },
      "logprobs": null,
      "finish_reason": "stop",
      "stop_reason": null
    }
  ],
  "usage": {
    "prompt_tokens": 10,
    "total_tokens": 550,
    "completion_tokens": 540,
    "prompt_tokens_details": null
  },
  "prompt_logprobs": null
}

Setting up the API routes

from fastapi import FastAPI
from fastapi.middleware.cors import CORSMiddleware
import nest_asyncio
from pyngrok import ngrok
import uvicorn

app = FastAPI()

# Allow every origin, method, and header: fine for a quick test, too open for production
app.add_middleware(
    CORSMiddleware,
    allow_origins=['*'],
    allow_credentials=True,
    allow_methods=['*'],
    allow_headers=['*'],
)

@app.get('/')
async def root():
    return {'hello': 'world'}

@app.post("/api/v1/generate-response")
def generate_response(request: QuestionRequest):
    """
    API endpoint to generate a response from the model.
    """
    try:
        response = ask_model(request.question, request.model)
        return {"response": response}
    except Exception as e:
        raise HTTPException(status_code=500, detail=str(e))

@app.post("/api/v1/generate-response-stream")
def stream_response(request: QuestionRequest):
    """
    API endpoint to stream the model's response as it is generated.
    """
    try:
        response = stream_llm_response(request.question, request.model)
        return StreamingResponse(response, media_type="text/event-stream")
    except Exception as e:
        raise HTTPException(status_code=500, detail=str(e))

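OK, now we set up an API route for each function we created. Each route calls a different function depending on whether it streams the output or just generates the full response (which blocks). We only need something quick here, so we allow all requests. If an error occurs, we simply return an internal server error with the error message as the detail.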

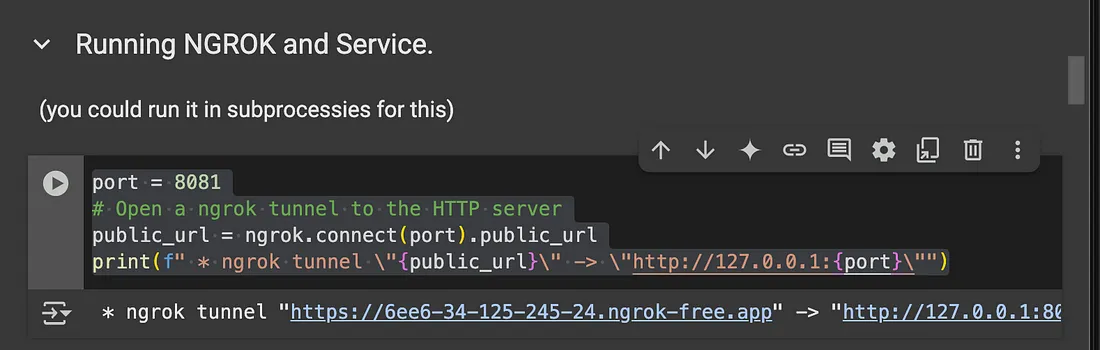
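One caveat with the streaming route, as far as I understand it: a generator function does not run any of its code until something iterates over it, so a try/except around the call that creates the generator will not catch errors that happen while streaming. The sketch below shows this with plain Python. failing_stream and safe_stream are made-up names, and failing_stream is only a stand-in for a stream whose upstream request fails.

```python
def failing_stream():
    """Stand-in for a streaming generator whose upstream request fails."""
    raise ConnectionError("upstream server unreachable")
    yield  # unreachable, but its presence makes this function a generator

def safe_stream(make_stream):
    """Wrap a generator so failures become a final error line in the stream."""
    try:
        yield from make_stream()
    except Exception as exc:
        yield f"error: {exc}\n"

caught_at_creation = False
try:
    stream = failing_stream()  # no code inside the generator has run yet
except ConnectionError:
    caught_at_creation = True

print("caught when creating the generator:", caught_at_creation)

chunks = list(safe_stream(failing_stream))
print(chunks)
```

Wrapping the generator itself, as safe_stream does, is one way to make sure the client at least receives an error line instead of a silently cut-off stream.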

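ngrok -> public testing

! ngrok config add-authtoken ${your-ngrok-token}

Now we add the auth token: just copy your token from the ngrok dashboard and paste it in place of the placeholder.

Exposing the service

After that, we expose it.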

port = 8081

# Open a ngrok tunnel to the HTTP server
public_url = ngrok.connect(port).public_url
print(f" * ngrok tunnel \"{public_url}\" -> \"http://127.0.0.1:{port}\"")

We get a public tunnel address that can be reached with curl or Postman.

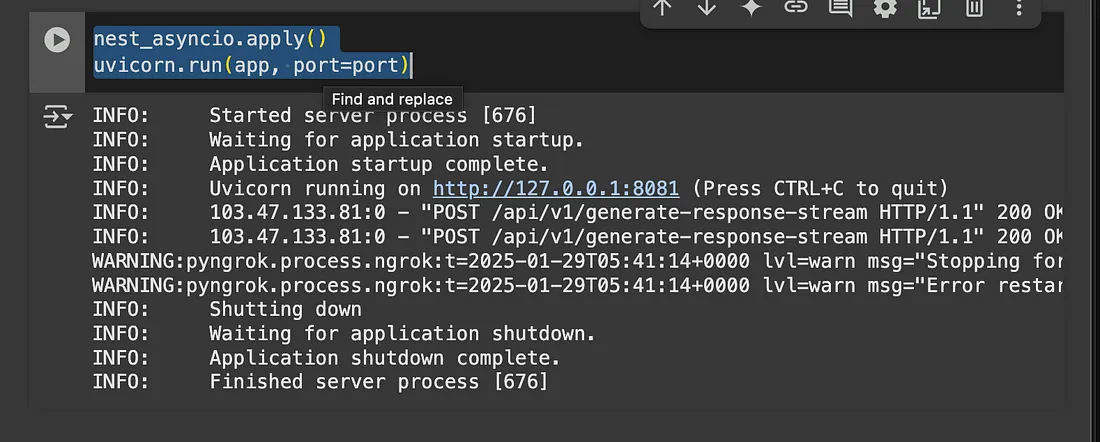

nest_asyncio.apply()

uvicorn.run(app, port=port)

We finish with these lines to start the service, and voilà, it runs perfectly... I think.

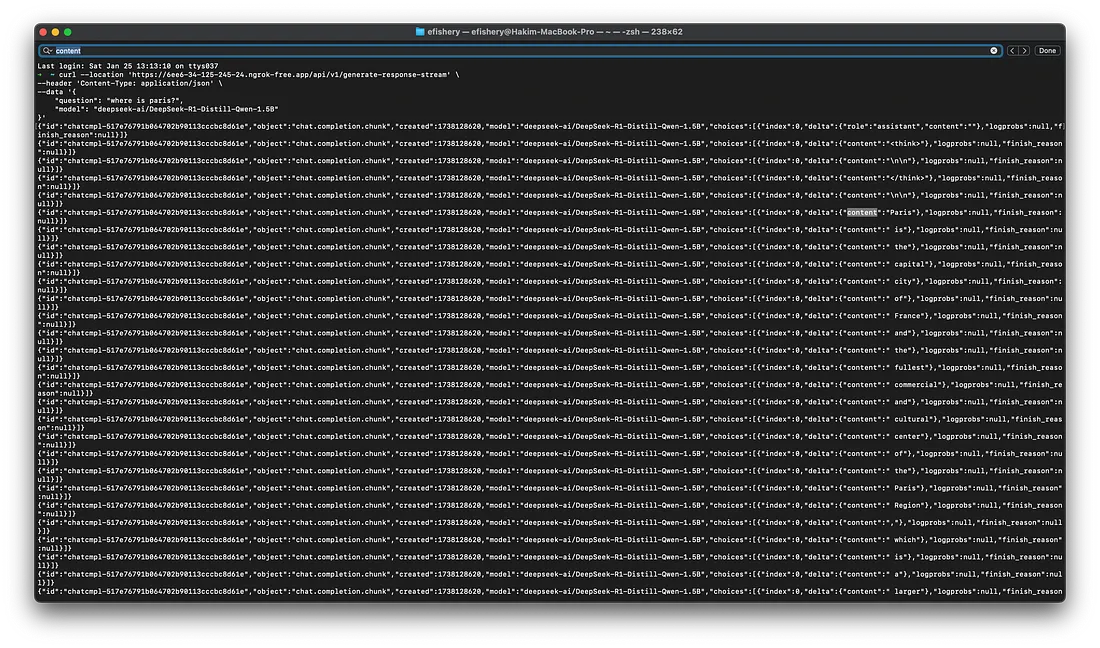

Now you can call it like this.

curl --location 'https://6ee6-34-125-245-24.ngrok-free.app/api/v1/generate-response-stream' \
--header 'Content-Type: application/json' \
--data '{
    "question": "where is paris?",
    "model": "deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B"
}'
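If you would rather call the API from Python than from curl, here is a minimal sketch using only the standard library. The base URL is a placeholder (your ngrok address will be different), and build_request is a made-up helper name. The sketch only builds the request; the part that actually sends it is left commented out because it needs the tunnel to be up.

```python
import json
import urllib.request

def build_request(base_url: str, question: str, model: str, stream: bool = False):
    """Build a POST request for one of the two routes defined in this guide."""
    path = "/api/v1/generate-response-stream" if stream else "/api/v1/generate-response"
    body = json.dumps({"question": question, "model": model}).encode("utf-8")
    return urllib.request.Request(
        base_url.rstrip("/") + path,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )

# Placeholder URL (assumption): replace with the address ngrok printed for you.
request = build_request(
    "https://example.ngrok-free.app",
    "where is paris?",
    "deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B",
    stream=True,
)
print(request.get_method(), request.full_url)

# To actually send it (needs the tunnel and the server to be running):
# with urllib.request.urlopen(request) as response:
#     for raw_line in response:
#         print(raw_line.decode("utf-8"), end="")
```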

If you choose the token-by-token streaming response, you will get something more like this.

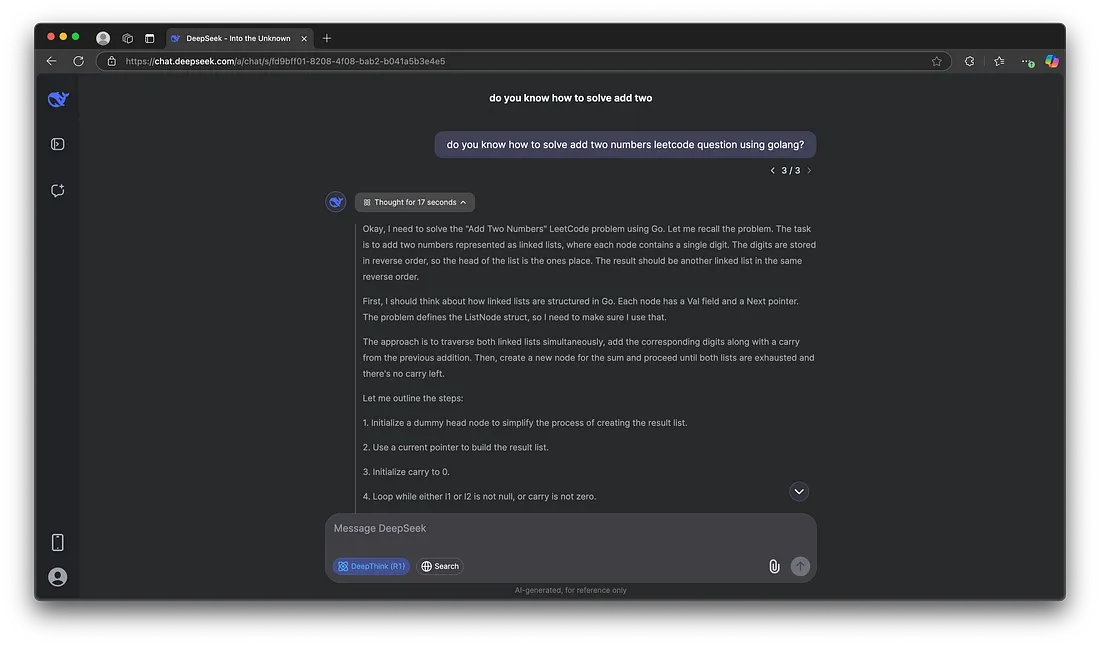

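And wow, it is pretty good, and the responses come back quite fast... I think. The answers are fairly good too (if you want more concise answers that lean toward code rather than creativity). Still, for an open-source model coming after Meta's (formerly Facebook's) Llama, this is quite decent.

Conclusion

After all this, here is some advice. If you have the money, you can buy a GPU and run it locally, but you need a fairly good GPU and you have to factor in the electricity bill; in exchange, your data stays yours. You can also use chat.deepseek.com if you want the very fast, full-size DeepSeek LLM (671B parameters), but then your data is no longer yours alone, just as with other platforms such as Claude and OpenAI.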