Meta Prompting: A Practical Guide to Automated Prompt Optimization

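In this article, I'll walk you through optimizing a basic prompt to improve the quality of a language model's output.

I'll use a news-article summarization example adapted from the OpenAI Cookbook to show how it works.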

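What is meta prompting?

Meta prompting is a technique in which one large language model (LLM) generates or refines the prompts used by another language model.

Typically, a more capable model (such as o1-preview) optimizes prompts for a less sophisticated model.

The goal is to produce prompts that are clearer and better structured, and that steer the target model more effectively toward high-quality, relevant responses.

By leveraging the advanced reasoning of models like o1-preview, we can systematically improve prompts so that they elicit the desired output more reliably.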
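In code, meta prompting is essentially function composition: one model call produces the prompt that a second model call consumes. The sketch below is a minimal offline illustration, not the article's implementation; `optimize_prompt`, `run_target`, `fake_optimizer` and `fake_target` are hypothetical names, and the two stubs stand in for o1-preview and the smaller target model.

```python
from typing import Callable

# Hypothetical alias: any function that takes a prompt string and returns model text
LLM = Callable[[str], str]

# Shortened version of the meta prompt used later in the article
META_TEMPLATE = (
    "Improve the following prompt to generate a more detailed summary.\n"
    "{simple_prompt}\n"
    "Only return the prompt."
)


def optimize_prompt(simple_prompt: str, optimizer: LLM) -> str:
    """Wrap the simple prompt in the meta prompt and let the stronger model rewrite it."""
    return optimizer(META_TEMPLATE.format(simple_prompt=simple_prompt))


def run_target(prompt_template: str, article: str, target: LLM) -> str:
    """Fill the article into the optimized prompt and send it to the cheaper target model."""
    return target(prompt_template.replace("{article}", article))


# Hypothetical stub models so the sketch runs offline; real code would call an API here
def fake_optimizer(meta_prompt: str) -> str:
    assert "Only return the prompt." in meta_prompt
    return "Summarize the article below, listing its type of news, tags and sentiment.\n{article}"


def fake_target(prompt: str) -> str:
    # Echo the last line of the prompt, which is where the article was inserted
    return "SUMMARY OF: " + prompt.splitlines()[-1]


improved = optimize_prompt("Summarize this news article: {article}", fake_optimizer)
result = run_target(improved, "Markets rose today.", fake_target)
print(result)  # SUMMARY OF: Markets rose today.
```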

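Why use meta prompting?

This technique streamlines development when working with language models and makes better results easier to achieve.

It is especially useful for summarization, question answering, and any other task that demands precision.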

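How it works

I'll start with a simple prompt designed to summarize news articles.

Then I'll use o1-preview to analyze and refine it step by step, adding clarity and detail to make it more effective.

Finally, I'll evaluate the outputs systematically to measure the impact of these changes.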

The code below installs and imports the required packages, then prompts you for your OpenAI API key.

```python
!pip install datasets openai pandas tqdm matplotlib

import getpass
from concurrent.futures import ThreadPoolExecutor, as_completed

import matplotlib.pyplot as plt
import openai
import pandas as pd
from datasets import load_dataset
from pydantic import BaseModel
from tqdm import tqdm

# Initialize the OpenAI client
api_key = getpass.getpass("Enter your OpenAI API key: ")
client = openai.OpenAI(api_key=api_key)

# Load the August 2024 BBC News snapshot and sample 100 articles reproducibly
ds = load_dataset("RealTimeData/bbc_news_alltime", "2024-08")
df = pd.DataFrame(ds['train']).sample(n=100, random_state=1)

df.head()
```

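Here are the first few rows of the loaded data.

First, we ask o1-preview to improve the simple prompt defined below.

To do that, we wrap the simple prompt in a meta prompt that gives o1-preview the guidance and context it needs to produce the result we want.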

```python
simple_prompt = "Summarize this news article: {article}"

meta_prompt = """
Improve the following prompt to generate a more detailed summary.
Adhere to prompt engineering best practices.
Make sure the structure is clear and intuitive and contains the type of news, tags and sentiment analysis.

{simple_prompt}

Only return the prompt.
"""

def get_model_response(messages, model="o1-preview"):
    """Send a chat request and return the text of the first choice."""
    response = client.chat.completions.create(
        messages=messages,
        model=model,
    )
    return response.choices[0].message.content

# Ask o1-preview to rewrite the simple prompt
complex_prompt = get_model_response(
    [{"role": "user", "content": meta_prompt.format(simple_prompt=simple_prompt)}]
)

complex_prompt
```

Below is the enhanced prompt generated by the more capable model:

Please read the following news article and provide a detailed summary that includes:

- **Type of News**: Categorize the article (e.g., Politics, Technology, Sports, Business, etc.).

- **Tags**: List relevant keywords or phrases associated with the article.

- **Sentiment Analysis**: Analyze the overall tone (e.g., Positive, Negative, Neutral) and provide a brief explanation.

Ensure the summary is well-structured and easy to understand.

**Article**:

{article}
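The generated prompt still ends with the `{article}` placeholder. That works because `str.format` does not re-parse the values it substitutes, so the placeholder inside `simple_prompt` passes through the meta prompt untouched. The small check below uses a shortened copy of the meta prompt; the `json_hint` template is a hypothetical example showing that literal braces written in a template itself must be doubled.

```python
# Shortened copy of the meta prompt from the article
meta_prompt = """Improve the following prompt.
{simple_prompt}
Only return the prompt."""

simple_prompt = "Summarize this news article: {article}"

# str.format substitutes simple_prompt but does not expand the {article} inside it
filled = meta_prompt.format(simple_prompt=simple_prompt)
print(filled)

# Hypothetical template with a literal JSON hint: literal braces must be doubled
json_hint = 'Return JSON like {{"type": "..."}}.\n{simple_prompt}'
hinted = json_hint.format(simple_prompt=simple_prompt)
print(hinted)
```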

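We now have both a simple prompt and an enhanced prompt, so we can run each of them over our dataset and compare the results.

Here we run both prompts against the smaller target model, gpt-4o-mini.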

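```python
def generate_response(prompt):
    """Run a single prompt against the smaller target model."""
    messages = [{"role": "user", "content": prompt}]
    return get_model_response(messages, model="gpt-4o-mini")

def generate_summaries(row):
    """Summarize one article with both the simple and the enhanced prompt."""
    simple_summary = generate_response(simple_prompt.format(article=row["content"]))

    # Put the article where the generated prompt's {article} placeholder is.
    # str.replace avoids errors if the generated prompt contains other braces;
    # fall back to appending the article if the placeholder is missing.
    if "{article}" in complex_prompt:
        filled_prompt = complex_prompt.replace("{article}", row["content"])
    else:
        filled_prompt = complex_prompt + "\n\n" + row["content"]
    complex_summary = generate_response(filled_prompt)

    return simple_summary, complex_summary

generate_summaries(df.iloc[0])
```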
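Because the generated prompt marks where the article belongs with `{article}`, the way the article is inserted matters. This offline sketch compares three options on a shortened, hypothetical copy of the generated prompt: appending with `+`, substituting with `str.replace`, and `str.format`, which parses every brace and therefore fails if the generated prompt happens to contain a JSON example.

```python
complex_prompt = (
    "Please read the following news article and provide a detailed summary.\n"
    "**Article**:\n"
    "{article}"
)
article = "The council approved a new cycling lane."

# Option 1: concatenation keeps the literal placeholder and appends the text after it
concatenated = complex_prompt + article

# Option 2: replacing the placeholder puts the article exactly where the prompt expects it
replaced = complex_prompt.replace("{article}", article)

# Option 3: str.format parses every brace, so a hypothetical JSON example breaks it
prompt_with_json = 'Answer in the form {"type": "..."}\n{article}'
try:
    prompt_with_json.format(article=article)
    format_ok = True
except (KeyError, ValueError, IndexError):
    format_ok = False

print("{article}" in concatenated)  # True
print("{article}" in replaced)      # False
print(format_ok)                    # False
```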

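Comparing the summaries produced by the simple and enhanced prompts for this article shows a clear improvement.

The first summary gives a broad overview, while the enhanced version goes further: it provides a detailed breakdown, classifies the type of news, lists relevant tags, and even includes a sentiment analysis.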
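Before running the full evaluation, a rough keyword check can confirm that a summary at least contains the sections the enhanced prompt asks for. This is a hypothetical heuristic (the section names and regular expressions are assumptions based on the prompt above), not a substitute for the LLM-based evaluation later in the article.

```python
import re

# Hypothetical patterns for the sections requested by the enhanced prompt
REQUIRED_SECTIONS = {
    "type": re.compile(r"type of news", re.IGNORECASE),
    "tags": re.compile(r"\btags?\b", re.IGNORECASE),
    "sentiment": re.compile(r"sentiment", re.IGNORECASE),
}


def missing_sections(summary: str) -> list:
    """Return the names of the required sections that never appear in the summary."""
    return [name for name, pattern in REQUIRED_SECTIONS.items() if not pattern.search(summary)]


# Toy summaries for illustration only
simple = "The government announced a new budget focused on healthcare."
complex_ = "**Type of News**: Politics\n**Tags**: budget, healthcare\n**Sentiment Analysis**: Neutral."

print(missing_sections(simple))    # ['type', 'tags', 'sentiment']
print(missing_sections(complex_))  # []
```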

Now we run both prompts over the entire dataset…

# Add new columns to the dataframe for storing itineraries

df['simple_summary'] = None

df['complex_summary'] = None

# Use ThreadPoolExecutor to generate itineraries concurrently

with ThreadPoolExecutor() as executor:

futures = {executor.submit(generate_summaries, row): index for index, row in df.iterrows()}

for future in tqdm(as_completed(futures), total=len(futures), desc="Generating Itineraries"):

index = futures[future]

simple_itinerary, complex_itinerary = future.result()

df.at[index, 'simple_summary'] = simple_itinerary

df.at[index, 'complex_summary'] = complex_itinerary

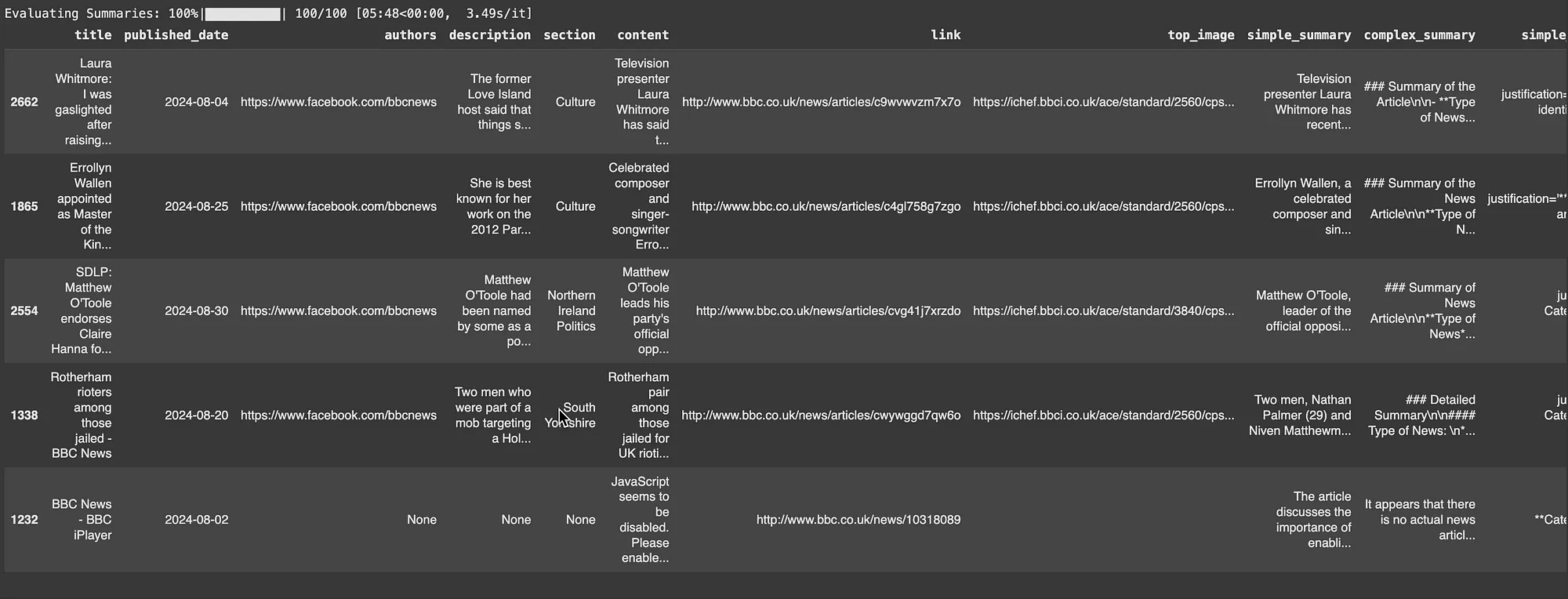

df.head()

Here are the results…
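Sending 100 articles through two prompts with the default thread pool can put many requests in flight at once and trigger API rate limits. One common mitigation, sketched here under assumptions, is to cap `max_workers` and retry failed calls with exponential backoff. `with_retries` and `flaky_summarize` are hypothetical; the stub fails once per row to simulate a rate-limit error.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed


def with_retries(fn, *args, attempts=3, base_delay=0.01):
    """Call fn(*args), retrying with exponential backoff on any exception."""
    for attempt in range(attempts):
        try:
            return fn(*args)
        except Exception:
            if attempt == attempts - 1:
                raise
            time.sleep(base_delay * 2 ** attempt)


calls = {}


def flaky_summarize(index, text):
    """Hypothetical stand-in for an API call that fails on its first attempt per row."""
    calls[index] = calls.get(index, 0) + 1
    if calls[index] == 1:
        raise RuntimeError("simulated rate limit")
    return text.upper()


rows = {0: "alpha", 1: "beta", 2: "gamma"}
results = {}

# Cap concurrency so only a couple of requests run at the same time
with ThreadPoolExecutor(max_workers=2) as executor:
    futures = {executor.submit(with_retries, flaky_summarize, i, t): i for i, t in rows.items()}
    for future in as_completed(futures):
        results[futures[future]] = future.result()

print(dict(sorted(results.items())))  # {0: 'ALPHA', 1: 'BETA', 2: 'GAMMA'}
```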

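Evaluating the results

To compare how the two prompts perform, we use a structured approach in which a language model acts as the judge.

The judge scores each summary against specific criteria (categorization and context, keywords and tags, sentiment analysis, clarity and structure, and detail and completeness), giving a consistent, automated assessment without manual review.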

```python
evaluation_prompt = """
You are an expert editor tasked with evaluating the quality of a news article summary. Below is the original article and the summary to be evaluated:

**Original Article**:
{original_article}

**Summary**:
{summary}

Please evaluate the summary based on the following criteria, using a scale of 1 to 5 (1 being the lowest and 5 being the highest). Be critical in your evaluation and only give high scores for exceptional summaries:

1. **Categorization and Context**: Does the summary clearly identify the type or category of news (e.g., Politics, Technology, Sports) and provide appropriate context?
2. **Keyword and Tag Extraction**: Does the summary include relevant keywords or tags that accurately capture the main topics and themes of the article?
3. **Sentiment Analysis**: Does the summary accurately identify the overall sentiment of the article and provide a clear, well-supported explanation for this sentiment?
4. **Clarity and Structure**: Is the summary clear, well-organized, and structured in a way that makes it easy to understand the main points?
5. **Detail and Completeness**: Does the summary provide a detailed account that includes all necessary components (type of news, tags, sentiment) comprehensively?

Provide your scores and justifications for each criterion, ensuring a rigorous and detailed evaluation.
"""

class ScoreCard(BaseModel):
    """Structured output returned by the judge model (scores on a 1-5 scale)."""
    justification: str
    categorization: int
    keyword_extraction: int
    sentiment_analysis: int
    clarity_structure: int
    detail_completeness: int
```
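The evaluation prompt asks for scores from 1 to 5, but `ScoreCard` types each score as a plain `int`, so nothing rejects an out-of-range value. Below is a minimal stdlib check, assuming the scores are available as a dict with the `ScoreCard` field names (for a parsed result, `card.model_dump()` produces one); `check_scores` is a hypothetical helper and the JSON is toy data.

```python
import json

# Field names copied from the ScoreCard class; the justification text is not scored
SCORE_FIELDS = ("categorization", "keyword_extraction", "sentiment_analysis",
                "clarity_structure", "detail_completeness")


def check_scores(card: dict) -> dict:
    """Raise ValueError if any score is missing or outside the 1-5 scale."""
    for field in SCORE_FIELDS:
        if field not in card:
            raise ValueError(f"missing score: {field}")
        score = card[field]
        # bool is a subclass of int, so exclude it explicitly
        if isinstance(score, bool) or not isinstance(score, int) or not 1 <= score <= 5:
            raise ValueError(f"{field} out of range: {score!r}")
    return card


good = json.loads(
    '{"justification": "ok", "categorization": 4, "keyword_extraction": 3, '
    '"sentiment_analysis": 5, "clarity_structure": 4, "detail_completeness": 2}'
)
check_scores(good)

bad = dict(good, sentiment_analysis=7)
error_message = None
try:
    check_scores(bad)
except ValueError as exc:
    error_message = str(exc)

print(error_message)  # sentiment_analysis out of range: 7
```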

Next, we run the evaluation and store the results in the dataframe.

```python
def evaluate_summaries(row):
    """Score both summaries of one article with the judge model (gpt-4o)."""
    simple_messages = [{"role": "user", "content": evaluation_prompt.format(original_article=row["content"], summary=row['simple_summary'])}]
    complex_messages = [{"role": "user", "content": evaluation_prompt.format(original_article=row["content"], summary=row['complex_summary'])}]

    # Structured outputs: each response is parsed directly into a ScoreCard instance
    simple_evaluation = client.beta.chat.completions.parse(
        model="gpt-4o",
        messages=simple_messages,
        response_format=ScoreCard,
    ).choices[0].message.parsed

    complex_evaluation = client.beta.chat.completions.parse(
        model="gpt-4o",
        messages=complex_messages,
        response_format=ScoreCard,
    ).choices[0].message.parsed

    return simple_evaluation, complex_evaluation

# Add new columns to the dataframe for storing evaluations
df['simple_evaluation'] = None
df['complex_evaluation'] = None

# Use ThreadPoolExecutor to evaluate summaries concurrently
with ThreadPoolExecutor() as executor:
    futures = {executor.submit(evaluate_summaries, row): index for index, row in df.iterrows()}

    for future in tqdm(as_completed(futures), total=len(futures), desc="Evaluating Summaries"):
        index = futures[future]
        simple_evaluation, complex_evaluation = future.result()
        df.at[index, 'simple_evaluation'] = simple_evaluation
        df.at[index, 'complex_evaluation'] = complex_evaluation

df.head()
```

The results are shown below.

Next, we plot the average score for each criterion…
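Averages can hide how often the improved prompt actually wins. Because both summaries of each article are scored, a paired comparison per article is also possible. This sketch assumes score dicts with the `ScoreCard` field names (for example `[e.model_dump() for e in df["simple_evaluation"]]`); `paired_comparison` is hypothetical, and the toy inputs are illustrative, not results from this article.

```python
from statistics import mean

# Field names copied from the ScoreCard class
SCORE_FIELDS = ("categorization", "keyword_extraction", "sentiment_analysis",
                "clarity_structure", "detail_completeness")


def paired_comparison(simple_cards, complex_cards):
    """Compare total scores per article; a positive diff means the improved prompt scored higher."""
    diffs = [
        sum(c[f] for f in SCORE_FIELDS) - sum(s[f] for f in SCORE_FIELDS)
        for s, c in zip(simple_cards, complex_cards)
    ]
    wins = sum(d > 0 for d in diffs)
    ties = sum(d == 0 for d in diffs)
    return {"mean_diff": mean(diffs), "wins": wins, "ties": ties,
            "losses": len(diffs) - wins - ties}


# Toy inputs for illustration only
simple_cards = [dict.fromkeys(SCORE_FIELDS, 2), dict.fromkeys(SCORE_FIELDS, 3)]
complex_cards = [dict.fromkeys(SCORE_FIELDS, 4), dict.fromkeys(SCORE_FIELDS, 3)]

summary = paired_comparison(simple_cards, complex_cards)
print(summary)
```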

```python
# Extract the five numeric scores (in ScoreCard field order), skipping the justification text
df["simple_scores"] = df["simple_evaluation"].apply(lambda x: [score for key, score in x.model_dump().items() if key != 'justification'])
df["complex_scores"] = df["complex_evaluation"].apply(lambda x: [score for key, score in x.model_dump().items() if key != 'justification'])

# Display labels, in the same order as the ScoreCard score fields
criteria = [
    'Categorisation',
    'Keywords and Tags',
    'Sentiment Analysis',
    'Clarity and Structure',
    'Detail and Completeness'
]

# Average score per criterion for each prompt
simple_avg_scores = df['simple_scores'].apply(pd.Series).mean()
complex_avg_scores = df['complex_scores'].apply(pd.Series).mean()

# Prepare data for plotting
avg_scores_df = pd.DataFrame({
    'Criteria': criteria,
    'Original Prompt': simple_avg_scores.values,
    'Improved Prompt': complex_avg_scores.values,
})

# Plot a grouped bar chart of the average scores
ax = avg_scores_df.plot(x='Criteria', kind='bar', figsize=(6, 4))
plt.ylabel('Average Score')
plt.title('Comparison of Simple vs Complex Prompt Performance by Criterion')
plt.xticks(rotation=45, ha='right')
# Place the legend before tight_layout so the layout accounts for it
plt.legend(loc='upper left', bbox_to_anchor=(1, 1))
plt.tight_layout()
plt.show()
```

The resulting chart is shown below.
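The plotting code pairs the `criteria` labels with scores by position, which relies on `ScoreCard` field order (pydantic's `model_dump()` returns fields in declaration order). Below is a sketch of an alternative that averages by field name, so reordering a field cannot silently mislabel a bar. The `LABELS` mapping and `average_by_criterion` are hypothetical, and the two score cards are toy data.

```python
from statistics import mean

# Hypothetical explicit mapping from ScoreCard field names to the chart labels
LABELS = {
    "categorization": "Categorisation",
    "keyword_extraction": "Keywords and Tags",
    "sentiment_analysis": "Sentiment Analysis",
    "clarity_structure": "Clarity and Structure",
    "detail_completeness": "Detail and Completeness",
}


def average_by_criterion(cards):
    """Average each score by field name, so labels never depend on field order."""
    return {label: mean(card[field] for card in cards) for field, label in LABELS.items()}


# Toy score cards for illustration only
cards = [
    {"justification": "...", "categorization": 4, "keyword_extraction": 3,
     "sentiment_analysis": 5, "clarity_structure": 4, "detail_completeness": 2},
    {"justification": "...", "categorization": 2, "keyword_extraction": 3,
     "sentiment_analysis": 3, "clarity_structure": 4, "detail_completeness": 4},
]

averages = average_by_criterion(cards)
print(averages)
```

The resulting dict could be wrapped in `pd.Series` and plotted the same way as the chart above.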